Is it worth paying for Apache Hadoop?

In 2010, Apache Hadoop, MapReduce, and their associated technologies gave rise to a new phenomenon in information technology known as “big data”. An understanding of what the Apache Hadoop platform is, why it is needed and what it can be used for is slowly spreading among specialists around the world. Having started as one person's idea and grown rapidly to an industrial scale, Apache Hadoop has become one of the most widely discussed platforms for distributed computing, as well as for storing unstructured or loosely structured information. In this article, I would like to look at the Apache Hadoop platform itself, at the commercial implementations offered by third-party companies, and at how they differ from the freely distributed version of Apache Hadoop.

Before considering the commercial implementations, I would like to go over the origin and development of the Apache Hadoop platform. The creators and driving force behind Apache Hadoop are Doug Cutting and Michael Cafarella, who in 2002 began developing the Nutch search engine as a part-time project. In 2004, the MapReduce distributed computing paradigm, which was being developed at that time, was added to the Nutch project, along with a distributed file system. At the same time, Yahoo was thinking about developing a distributed computing platform for indexing and searching pages on the Internet. Yahoo's competitors were not idle and were considering the same problem, which is why there are currently quite a lot of platforms for distributed computing, for example Google App Engine, Appistry CloudIQ, Heroku, Pervasive DataRush, etc. Yahoo rightly decided that developing and maintaining its own proprietary distributed computing platform would cost more, and produce a lower-quality result, than investing in an open source platform. It therefore began to look for suitable solutions among open source projects, reasoning that the harsh and unbiased scrutiny of the community would improve quality while reducing the cost of maintaining the platform, since it would be developed not only by Yahoo but by the entire free IT community. Quite quickly Yahoo came across Nutch, which at that time stood out from its competitors thanks to its already proven results, and decided to invest in the project. To do this, in 2006 it invited Doug Cutting to lead a dedicated team for a new project called e14, whose goal was to develop a distributed computing infrastructure. In the same year, Apache Hadoop was spun off as a separate open source project.

It can be said that Yahoo received quite tangible benefits from its decision to invest in an open source distributed computing platform, just as Apache Hadoop received a boost from the backing of a huge corporation. Apache Hadoop helped Yahoo attract internationally renowned scientists and create an advanced research and development center, which is now one of the leading centers for search, advertising, spam detection, personalization and many other Internet-related areas. Yahoo did not have to develop many things from scratch: it took advantage of third-party work, for example using Apache HBase and Apache Hive to solve its problems. Since Apache Hadoop is an open platform, Yahoo does not need to train specialists itself; it can find people on the labor market who already have experience with Hadoop. Had Yahoo decided to develop its own platform, it would have been forced to train specialists in-house. Apache Hadoop is to some extent an industry standard, developed by many companies and third-party developers, so Yahoo has saved a lot of money that it would otherwise have had to keep investing in platform development, and has rid itself of the problem of constant software obsolescence. All this allowed Yahoo to launch Yahoo WebSearch as early as 2008, using an Apache Hadoop cluster of 4000 machines.

However, the development of Apache Hadoop at Yahoo has not always gone smoothly. In September 2009, Doug Cutting, having failed to find common ground with Yahoo management, left for the California-based startup Cloudera, which is engaged in the commercial development and promotion of Apache Hadoop in the market for big data solutions. Honestly, I have no information about exactly where their views diverged, but the fact remains: offended by Doug Cutting's decision, Yahoo provided funding in 2011 to create a company called Hortonworks, whose main activity is also the commercialization and promotion of the Apache Hadoop platform. These companies are discussed later in this article. I will try to compare the two distributed computing solutions provided by these companies, and also try to find out

Cloudera Inc.

In October 2008, in the United States, three engineers from Google, Facebook and Yahoo and one manager from Oracle founded a new company, Cloudera. They bet on distributed computing systems based on the MPP architecture. Reasoning that the amount of data in the world that needs to be analyzed grows every day, and that the number of companies needing tools for such analysis will keep growing with it, they expected that a company with sufficient expertise and qualifications in this area would be able to earn quite a lot. Since they did not have their own product, and did not have time to develop one, they decided to take an open source project and build their business around it. Apache Hadoop was the best fit for several reasons - they all knew it, had worked with it and understood

However, a distribution that merely assembles open source libraries and programs is hard to sell to anyone, so the company decided to develop its own software for Apache Hadoop. The creators of Hadoop, Doug Cutting and Michael Cafarella, were involved in the company. It was decided to develop a tool for deploying, monitoring and managing an Apache Hadoop cluster: Cloudera Manager. This tool automates the Apache Hadoop cluster deployment process, provides real-time monitoring of current activity and the status of individual nodes, builds heatmaps, can generate alerts for certain events, controls user access, stores historical information about cluster usage, and collects logs from nodes and lets you view them.

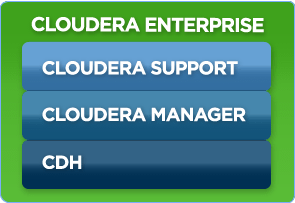

All this allowed Cloudera to launch a package of services called Cloudera Enterprise, consisting of three products: CDH, Cloudera Manager and Cloudera Support.

CDH is the Apache Hadoop distribution (HDFS, MapReduce and MapReduce2, Hadoop Common), which includes a number of related programs and libraries, such as Apache Flume, Apache Hive, Hue, Apache Mahout, Apache Oozie, Apache Pig, Apache Sqoop, Apache Whirr and Apache Zookeeper.

Cloudera Manager is a tool for deploying, monitoring and managing an Apache Hadoop cluster.

Cloudera Support is the professional support for CDH and Cloudera Manager provided by the Cloudera team.

All this is sold as a subscription and is quite expensive: for example, Cloudera Manager costs $4000 per node. Despite this, for some companies the solution is reasonable, since Apache Hadoop has a high cost of support and administration. In particular, writing MapReduce tasks requires a staff of qualified Java specialists, who are quite expensive on the labor market. However, only a limited number of companies use Cloudera's services; everyone tries to manage on their own. This is because Cloudera's only in-house development is, in fact, Cloudera Manager, and even that costs far more than it should. In my opinion, the Cloudera Enterprise package is currently not worth the money, since essentially the only useful thing Cloudera provides as part of it is Cloudera Manager. A sufficiently qualified specialist, given time, can figure out everything else on their own. Cloudera's main advantage at present is the limited number of Apache Hadoop specialists in the world, which allows it to speculate in the market for technical expertise on Apache Hadoop.
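To give a sense of what a MapReduce task involves, here is a minimal sketch of the three phases of the paradigm (map, shuffle, reduce) as a word count in plain Python. It does not use Hadoop at all: the function names `map_phase`, `shuffle_phase`, `reduce_phase` and `word_count` are illustrative assumptions, not part of any Hadoop API, and everything runs in a single process. A real job would typically be written in Java against the Hadoop API and executed across the nodes of a cluster.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse all values for one key into a single result."""
    return key, sum(values)


def word_count(lines):
    """Run the three phases in sequence on an in-memory list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["Hadoop stores data", "Hadoop processes data"]))
```

The logic itself is simple. The cost in practice comes from expressing it against the framework's API and from tuning and operating the cluster that runs it, which is where the need for qualified specialists arises.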

Be that as it may, on May 23, 2012 Apache Hadoop 2.0.0 Alpha became available for download from hadoop.apache.org, and as early as June 5, 2012 Cloudera announced with great fanfare the fourth version of CDH, the first in the world to support the Apache Hadoop 2.0.0 Alpha codebase. By most accounts, Apache Hadoop 2.0.0 Alpha is raw and unstable, and some companies prefer to wait out the stabilization period, during which most of the errors will be fixed. Despite this, Apache Hadoop 2.0.0 has several advantages over the first version, the main ones being the following:

- High Availability for NameNode

- YARN / MapReduce2

- HDFS Federation

As I wrote earlier, all of this is so far extremely unstable and is not recommended for installation in a production environment. However, the desire for a pioneer's laurels would not let Cloudera rest, which prompted it to be the first in the world to release a distribution, CDH4, based on Apache Hadoop 2.0. In this way Cloudera asserted its leadership in providing a platform for distributed computing, because no one else has a distribution based on Apache Hadoop 2.0. What does Hortonworks, Cloudera's main competitor in this area, offer?

Hortonworks

The appearance of Cloudera made many people think about the prospects of this market. Many wanted to become leaders, setting the main direction of development and, accordingly, holding the most complete expertise and qualifications in this area. So in 2011 Hortonworks was founded. Its founders were engineers, mainly from Yahoo, who thanks to old connections were able to attract financing from Yahoo and the Benchmark Capital investment fund. The company took up the same business as Cloudera: the commercialization of Apache Hadoop. Most recently, on June 12, 2012, the day before Hadoop Summit 2012, Hortonworks announced its distributed computing platform based on Apache Hadoop 1.0: Hortonworks Data Platform, abbreviated HDP. The architecture of this platform is presented in the picture below:

In brief, this platform provides everything that Cloudera CDH4 does, but on the Apache Hadoop 1.0 codebase. There is one small difference: as part of HDP, Hortonworks supplies the Hortonworks Management Center (HMC), based on Apache Ambari, which performs the same functions as Cloudera Manager but is completely free. This is an obvious advantage, since Cloudera Manager, for unknown reasons, costs a lot of money (it should be clarified here that there is a free version of Cloudera Manager with reduced functionality and a limit of 50 nodes). For some reason, Hortonworks lists among the advantages of its HDP platform the ability to download Talend's ETL and ELT solution, Talend Open Studio for Big Data. I must say that this solution can be downloaded completely freely as an ETL and ELT tool for Cloudera CDH4 as well, so it is not an advantage unique to HDP. I am familiar with Talend Open Studio and can say that, as an ELT and ETL solution, it is a good choice with rich functionality and stable, predictable behavior.

Since HDP uses Apache Hadoop 1.0 as its base, it lacks some of the advantages that CDH4 has, in particular HA for NameNode, YARN / MapReduce2 and HDFS Federation. To solve the HA-related problems for NameNode, however, Hortonworks suggests installing a VMware vSphere-based add-on that can provide virtual machine-level fault tolerance for NameNode and JobTracker. In my opinion, this is a non-trivial solution that has dubious benefits and leads to additional costs.

Hortonworks also decided to build its business on paid support for its HDP platform. Support is sold as an annual subscription and is divided into levels. It is difficult to say anything about the quality of Hortonworks support, since I have not found a single client who uses it at the moment. There are negative reviews of Cloudera support, citing very long response times, but the same can be said about the support of almost any vendor.

Apache Hadoop and its related software are now being transformed from an open source project into a complete solution developed by several companies around the world. The platform has outgrown the laboratory and is ready to prove in practice its applicability as an enterprise solution for analyzing and storing extra-large amounts of data. To date, Hortonworks is catching up, while Cloudera is clearly the leader with its CDH4 platform. Many companies have evaluated the prospects of this market and are trying to gain a foothold in it with solutions that are based on Apache Hadoop or can work with it, but all of them are far behind the two leaders. This means that today the most complete and working distributions, including all the necessary libraries and programs, are offered by two companies: Cloudera with CDH3 and CDH4, and Hortonworks with HDP. It is these solutions that are viable as an enterprise tool for analysis in companies where it is needed.

Nevertheless, at the moment, when there are very few specialists on the market, deploying Apache Hadoop and setting it up on your own is a long process with an uncertain result: a long path of trial and error with one or another of the methods that open source provides. With CDH4 and HDP, by contrast, you are working with solutions that have already proven their worth and that come with support when necessary. The question of whether to pay for Apache Hadoop therefore has a straightforward answer. If you plan to use it for experimental purposes, or the company is ready to invest time and money in training its own specialists, then of course it is not worth paying for. However, if Apache Hadoop is to be used as an enterprise solution, it is better to have support backed by an accumulated knowledge base of solutions to various problems and by a deep understanding of how the platform works.

Solutions of various companies that commercialize Hadoop in one way or another

Cloudera Inc website

Hortonworks website

A brief history of the creator of Apache Hadoop