CISCO ACE. Part 2: balancing remote servers and applications

In the first part, "CISCO ACE - application balancing", we took a brief dive into the world of application and network resource balancing. We got acquainted with the characteristics, purpose and capabilities of this family of devices, and examined the main deployment scenarios and the benefits that balancers bring.

Many readers probably thought that all this is very expensive, and did not see what an enterprise-grade balancer could offer them. In this post I will address exactly that point and try to show that most people can actually use such a service.

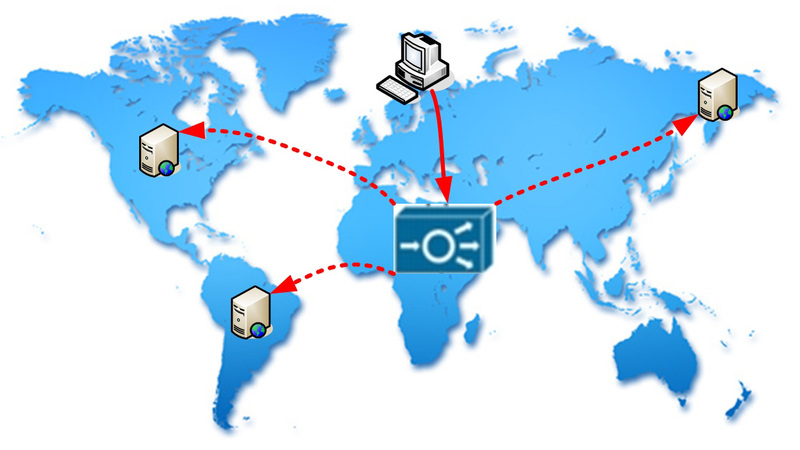

In the first part, we examined balancing applications between servers located directly in the same data center; one could even say in the same L2 domain. Suppose you are interested in the benefits described and want this service. Most public data centers do not offer such equipment, since their customers simply need a server for 50-100 dollars a month. And nobody will let your servers into a corporate data center, because of both physical placement and the existing information security policy.

The reasons for wanting this can vary. If your project is growing, a good option is to place an additional server in another data center to increase the availability of the service, and with information raiding becoming ever more widespread, it is practically a necessity. Is such a service within your reach? Yes: the same functionality can be used here too.

So, let's focus on the fact that CISCO ACE (or any other decent balancer) can distribute load not only between directly connected servers, but also between remote resources. This mode is called Routed One-Arm Mode (or On-a-Stick).

There is nothing special about the architecture of this mode: in addition to destination NAT (to the farm hosts), the balancer also performs source NAT. All the other functions remain available, of course. Let's look at an example that demonstrates the possibilities.
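To make the two translations concrete, here is a toy Python model of one-arm mode. It is a sketch under simplifying assumptions, not a description of how ACE works internally: the class `OneArmBalancer`, its sequential port allocator starting at 1024 and the client address 203.0.113.7 are hypothetical. Only the VIP 10.10.10.5, the NAT address 10.10.10.11 and the server address 1.1.1.1 come from the example configuration. Because the NAT pool is a single address with `pat`, each flow is told apart by its source port.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


VIP = "10.10.10.5"           # virtual address from the example
NAT_POOL_IP = "10.10.10.11"  # single-address PAT pool from the example


class OneArmBalancer:
    """Toy model of one-arm mode: destination NAT plus source NAT (PAT)."""

    def __init__(self, nat_ip: str, first_port: int = 1024):
        self.nat_ip = nat_ip
        self._ports = count(first_port)      # hypothetical sequential port allocator
        self._flows: dict[int, Packet] = {}  # NAT source port -> original client packet

    def forward(self, pkt: Packet, rserver_ip: str) -> Packet:
        """Client -> VIP becomes NAT address -> real server."""
        nat_port = next(self._ports)
        self._flows[nat_port] = pkt
        # DNAT: the VIP is replaced by the real server.
        # SNAT: the client address is replaced by the balancer's own address.
        return Packet(self.nat_ip, nat_port, rserver_ip, pkt.dst_port)

    def reverse(self, reply: Packet) -> Packet:
        """Real server -> NAT address becomes VIP -> original client."""
        orig = self._flows[reply.dst_port]
        return Packet(orig.dst_ip, orig.dst_port, orig.src_ip, orig.src_port)


if __name__ == "__main__":
    lb = OneArmBalancer(NAT_POOL_IP)
    request = Packet("203.0.113.7", 40000, VIP, 80)
    to_server = lb.forward(request, "1.1.1.1")
    print(to_server)  # source is 10.10.10.11, destination is 1.1.1.1
    reply = Packet("1.1.1.1", 80, to_server.src_ip, to_server.src_port)
    print(lb.reverse(reply))  # back to the client, sourced from the VIP
```

The server never sees the client's address at the IP level, which is exactly why the configuration below adds the X-Forwarded-For header.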

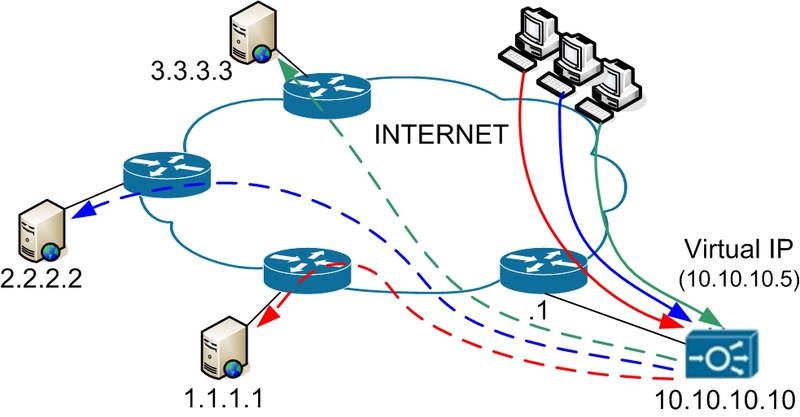

In this case, all your traffic is directed to a virtual address (VIP) assigned to the balancer. While processing requests, the balancer forwards them to the remote servers. All servers belong to one structural group, the server farm. Let's do a small setup; in many ways it repeats the structure discussed in the first article.
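The example configuration does not set a predictor for the farm; as far as we know, ACE then falls back to round-robin. The following hypothetical `ServerFarm` class is a simplified sketch of that behaviour, not ACE code: requests rotate over the farm's servers, and a server marked unhealthy (for instance by a failed probe) is skipped until it recovers.

```python
class ServerFarm:
    """Toy round-robin farm: skips servers that are out of service or failed."""

    def __init__(self, name: str, rservers: list[str]):
        self.name = name
        self.rservers = list(rservers)
        self.healthy = {s: True for s in self.rservers}
        self._next = 0  # index where the next search starts

    def mark(self, rserver: str, healthy: bool) -> None:
        """Called, e.g., by a health probe when a server fails or recovers."""
        self.healthy[rserver] = healthy

    def pick(self) -> str:
        """Return the next healthy server in rotation order."""
        n = len(self.rservers)
        for offset in range(n):
            idx = (self._next + offset) % n
            server = self.rservers[idx]
            if self.healthy[server]:
                self._next = (idx + 1) % n
                return server
        raise RuntimeError(f"no healthy rserver in serverfarm {self.name}")


if __name__ == "__main__":
    farm = ServerFarm("FARM", ["SERVER-1", "SERVER-2", "SERVER-3"])
    print([farm.pick() for _ in range(4)])
    farm.mark("SERVER-2", False)  # e.g. HTTP_PROBE stopped getting 200-210
    print([farm.pick() for _ in range(3)])
```

Because the servers are remote, nothing in this logic depends on where they are; the balancer only needs an IP route to each of them.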

1. Create the real servers

In our case there are three servers, with the addresses shown in the topology:

rserver host SERVER-1
  description SERVER-1
  ip address 1.1.1.1
  inservice

rserver host SERVER-2
  description SERVER-2
  ip address 2.2.2.2
  inservice

rserver host SERVER-3
  description SERVER-3
  ip address 3.3.3.3
  inservice

2. Describe the rules for checking service availability (let it be a web server)

probe http HTTP_PROBE
  interval 5
  passdetect interval 10
  passdetect count 2
  request method head url /index.html
  expect status 200 210
  header User-Agent header-value "LoadBalance"

probe icmp ICMP_PROBE
  interval 10
  passdetect interval 60
  passdetect count 4
  receive 1

The purpose of each parameter is described in the first article.
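As a reminder of how these timers interact, here is a simplified Python model of the probe state for one server. It is a sketch, not ACE's implementation: while a server is up it is probed every `interval` seconds; after `faildetect` consecutive failures it is marked failed (the example does not set `faildetect`, so the value 3 is our assumption of the default); while failed it is probed every `passdetect interval` seconds and is restored after `passdetect count` consecutive successes. `ProbeSettings`, `ProbeState` and `http_status_ok` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ProbeSettings:
    interval: int             # seconds between probes while the server is up
    passdetect_interval: int  # seconds between probes while the server is failed
    passdetect_count: int     # consecutive successes needed to bring it back
    faildetect: int = 3       # consecutive failures to mark it failed (assumed default)


def http_status_ok(status: int, low: int = 200, high: int = 210) -> bool:
    """Mirror of 'expect status 200 210': any code in the inclusive range passes."""
    return low <= status <= high


class ProbeState:
    """Simplified health state of one real server for one probe."""

    def __init__(self, settings: ProbeSettings):
        self.settings = settings
        self.up = True
        self._fails = 0
        self._passes = 0

    def next_delay(self) -> int:
        """How long to wait before the next probe."""
        s = self.settings
        return s.interval if self.up else s.passdetect_interval

    def record(self, success: bool) -> bool:
        """Feed one probe result; return whether the server is considered up."""
        s = self.settings
        if self.up:
            self._fails = 0 if success else self._fails + 1
            if self._fails >= s.faildetect:
                self.up, self._fails, self._passes = False, 0, 0
        else:
            self._passes = self._passes + 1 if success else 0
            if self._passes >= s.passdetect_count:
                self.up, self._passes = True, 0
        return self.up


# Values taken from HTTP_PROBE and ICMP_PROBE above
HTTP_PROBE = ProbeSettings(interval=5, passdetect_interval=10, passdetect_count=2)
ICMP_PROBE = ProbeSettings(interval=10, passdetect_interval=60, passdetect_count=4)
```

With these settings a failed web server is re-checked every 10 seconds and returns to the farm after two good HTTP answers, while the ICMP probe is deliberately more conservative.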

3. Combine the servers into a farm

serverfarm host FARM
  probe HTTP_PROBE
  probe ICMP_PROBE
  rserver SERVER-1
    inservice
  rserver SERVER-2
    inservice
  rserver SERVER-3
    inservice

4. Create a virtual address and describe the balancing policy

class-map match-all SERVER-VIP
  2 match virtual-address 10.10.10.5 any

policy-map type loadbalance first-match LB-POLICY
  class class-default
    serverfarm FARM
    insert-http X-Forwarded-For header-value "%is"

Since the servers will receive requests directly from the balancer, the clients' addresses would remain unknown to them. You have guessed what to do: add the X-Forwarded-For header (the %is variable stands for the client's source IP address). Here we also specified that traffic is balanced to the farm FARM.

5. Create a policy for the interface that will receive incoming traffic

policy-map multi-match FARM-POLICY
  class SERVER-VIP
    loadbalance vip inservice
    loadbalance policy LB-POLICY
    loadbalance vip icmp-reply
    nat dynamic 10 vlan 100

Pay attention to the last line of the policy: it tells the balancer how to translate the sender address, namely into its own address from NAT pool 10 (10.10.10.11), which is defined on the interface.

And finally, the interface configuration itself, together with a default route. Let it be interface VLAN 100:

interface vlan 100
  ip address 10.10.10.10 255.255.255.0
  service-policy input FARM-POLICY
  nat-pool 10 10.10.10.11 10.10.10.11 netmask 255.255.255.255 pat
  no shutdown

ip route 0.0.0.0 0.0.0.0 10.10.10.1

6. What do we get as a result?

6.1. DNS record: domain.org IN A 10.10.10.5.

6.2. Clients send requests to the balancer.

6.3. The balancer distributes requests between active servers, changing the destination address to the real server address, and the source address to the one specified in the configuration (10.10.10.11).

6.4. The servers receive all requests from 10.10.10.11, but each request carries the X-Forwarded-For header with the real client address. The servers send their responses back to the balancer.

6.5. The balancer returns the received responses to the clients of the resource.
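On the server side, the practical consequence of steps 6.3 and 6.4 is that the application should read the client address from X-Forwarded-For, but only for connections coming from the balancer's NAT address; otherwise a client could forge the header. The sketch below is a hypothetical illustration in plain Python (no web framework assumed). Appending to an existing header in `insert_xff` is an assumption of the sketch, not documented ACE behaviour.

```python
BALANCER_NAT_IP = "10.10.10.11"  # every request reaches the servers from this address


def insert_xff(headers: dict[str, str], client_ip: str) -> dict[str, str]:
    """Balancer side (toy): add the client's source IP, as header-value "%is" does.

    If the client already sent X-Forwarded-For, the address is appended
    (an assumption of this sketch).
    """
    out = dict(headers)
    previous = out.get("X-Forwarded-For")
    out["X-Forwarded-For"] = f"{previous}, {client_ip}" if previous else client_ip
    return out


def client_address(peer_ip: str, headers: dict[str, str],
                   trusted_proxy: str = BALANCER_NAT_IP) -> str:
    """Server side: recover the real client address."""
    if peer_ip != trusted_proxy:
        # Not from the balancer: the header could be forged, so use the TCP peer.
        return peer_ip
    entries = [e.strip() for e in headers.get("X-Forwarded-For", "").split(",") if e.strip()]
    # The right-most entry is the one our balancer wrote; earlier ones came from the client.
    return entries[-1] if entries else peer_ip
```

Taking the right-most entry rather than the first one keeps a client from injecting an arbitrary address into the server's logs.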

On the customer's side, all that is needed is to provide the list of server addresses and change the DNS record. Naturally, the number of working servers can grow or shrink without affecting the service.

This functionality has one interesting property that can be put to good use.

So far we have looked at the case where ACE has a single interface. Now consider a two-interface option, with one interface connected to one provider and the second to another. Alternatively, you have your own AS, a couple of prefixes and connections to two providers (why there are so many conditions will become clear below), and you can route traffic for one subnet through one provider and for the other subnet through the second. (Some interesting aspects of BGP traffic control are discussed in "BGP: some features of traffic behavior".)

Let's look at what happens in such a situation.

1. Clients access the virtual address (thanks to the DNS record).

2. Traffic passes through provider No. 1 and loads its channel.

3. On its way from provider No. 1 to the balancer, the traffic can be "cleaned": behavioral and signature analysis cuts off DDoS attacks and all kinds of scans and intrusion attempts.

4. The balancer forwards requests to the server via provider No. 2. Its channel (ISP-2) stays free of DDoS traffic.

5. The server processes only legitimate requests and sends its responses to the balancer, which returns them to the clients.
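A back-of-the-envelope model shows why step 4 matters: the attack volume stops at the scrubbing stage, so only legitimate requests ever cross the ISP-2 link to the server. This hypothetical sketch assumes perfect detection (every malicious request is dropped) and counts only inbound request volume, ignoring responses; the `Request` type and the traffic mix are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    size_mb: float
    malicious: bool  # True for DDoS / scan traffic recognised by the scrubbing stage


def inbound_link_loads(requests: list[Request]) -> tuple[float, float]:
    """Inbound request volume on (ISP-1, ISP-2) in the two-provider scheme.

    ISP-1 carries everything addressed to the VIP, attack included. The
    scrubbing stage drops malicious requests before the balancer, so the
    balancer -> server leg over ISP-2 carries only legitimate requests.
    """
    isp1 = sum(r.size_mb for r in requests)
    isp2 = sum(r.size_mb for r in requests if not r.malicious)
    return isp1, isp2


if __name__ == "__main__":
    # Hypothetical mix: 10 MB of real requests buried in 990 MB of attack traffic
    traffic = [Request(1.0, False)] * 10 + [Request(1.0, True)] * 990
    print(inbound_link_loads(traffic))
```

In a real deployment detection is never perfect, so some attack traffic would still reach ISP-2; the model only illustrates the direction of the effect.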

Naturally, the scheme requires DDoS protection equipment (Arbor Peakflow, CISCO Detector / Guard). A good IPS will not hurt either.

This gives you DDoS protection on demand: whether it is needed temporarily or on an ongoing basis, the customer only has to change the DNS record.

Implementing the scheme requires only a few additional balancer settings. During an attack, the channel to provider No. 1 is loaded, while the channel to provider No. 2 remains "clean". The subtleties of traffic management are a separate topic; the goal here was to show how such a scheme can be implemented.

Good luck!