Efficient Cloud Queue Management (Azure Queue)

This is the fourth article in the series “Internal structure and architecture of the AtContent.com service”. It covers background job processing on Azure service instances (Worker Role).

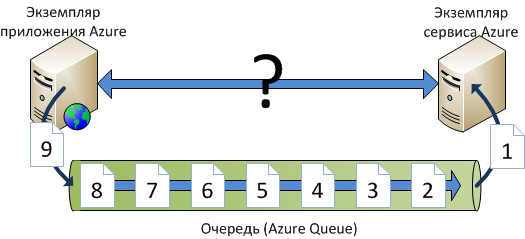

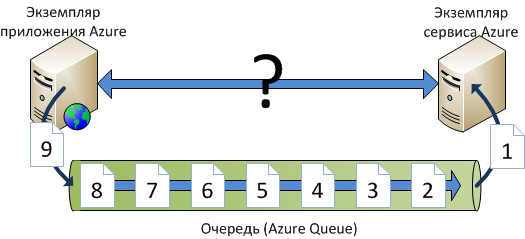

Azure Queues are the recommended primary communication channel between instances. Relying on this channel alone, however, does not make the most efficient use of service instances. In this article you will learn how to:

- minimize the delay between sending a job to an instance and the beginning of its processing

- minimize the number of transactions to Azure Queue

- increase the efficiency of job processing

As the diagram shows, the standard Azure SDK tools assume that instances interact exclusively through the queue (Azure Queue). The Azure service instance does not know when an application instance will send it a job through the queue, so it has to check the queue periodically, which causes several problems.

The first problem is the delay in processing tasks. A service instance has to check the queue for jobs periodically. If it checks too often, it generates a large number of transactions to Azure Queue, and if tasks are queued only rarely, most of those transactions are “idle”. If it checks less often, the interval between checks grows: a task that enters the queue right after a check is processed only at the next check, one full interval later. This introduces significant delays in message processing.

The second problem is idle transactions. Even when the queue is empty, the service instance still has to query it to check for messages, and every such query creates overhead.

With the standard approach that the SDK offers, you have to choose between transaction costs and processing delay. In some scenarios processing time is not critical and tasks arrive fairly regularly. In that case you can follow the SDK recommendations and process tasks by polling the queue periodically. But if tasks arrive irregularly, with surges and lulls, the standard approach becomes less efficient.
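The trade-off between idle transactions and delay can be illustrated with a small model. This is only a sketch under simplifying assumptions: `PollingModel` and `Simulate` are hypothetical names, time is measured in seconds, arrival times are sorted, and each poll takes at most one message from the queue. It does not call the Azure SDK.

```csharp
using System;
using System.Collections.Generic;

public static class PollingModel
{
    // Simulates a worker that polls every pollInterval seconds for duration seconds.
    // Returns the number of polls that found nothing and the worst waiting time
    // between a message's arrival and the poll that picked it up.
    public static (int IdlePolls, double WorstDelay) Simulate(
        IEnumerable<double> arrivalTimes, double pollInterval, double duration)
    {
        var arrivals = new Queue<double>(arrivalTimes); // assumed sorted ascending
        var pending = new Queue<double>();              // arrived but not yet processed
        int idlePolls = 0;
        double worstDelay = 0;

        for (int i = 1; i * pollInterval <= duration; i++)
        {
            double now = i * pollInterval;

            // Move everything that has arrived by now into the pending queue.
            while (arrivals.Count > 0 && arrivals.Peek() <= now)
                pending.Enqueue(arrivals.Dequeue());

            if (pending.Count == 0)
            {
                idlePolls++; // an "idle" transaction: the queue was empty
                continue;
            }

            // One message per poll, as in a simple interval-based loop.
            worstDelay = Math.Max(worstDelay, now - pending.Dequeue());
        }

        return (idlePolls, worstDelay);
    }
}
```

For two messages arriving at 0.5 s and 10.5 s within a 20-second window, polling every second gives 18 idle polls and a worst delay of 0.5 s, while polling every 10 seconds gives no idle polls but a worst delay of 9.5 s.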

You can solve these problems with the messaging mechanism between instances and roles, described in a previous article in the series ( http://habrahabr.ru/post/140461/ ). In short, it lets you send a message from one instance to another, or to all instances of a role. Messages can trigger handlers on the receiving instances, which makes it possible, for example, to synchronize instance state. Applied to queues, it lets the service instance start processing the queue immediately after a task is added. The instance no longer needs to poll the queue constantly, which eliminates “idle” transactions.

In more detail, the mechanism works as follows:

An Azure application instance adds the task to the Azure Queue and sends a message to the Azure service instance. The message, in turn, activates the handler. What the handler does next depends on its settings. If the settings contain a record stating that queue processing has already started, there is no point in starting it again. Otherwise, the handler starts processing and adds that record to the settings. It then takes tasks from the queue one after another until the queue is empty.

Handler settings can be stored in various ways: in the memory of the instance (which is not the most reliable option), in the instance's own storage, or in Azure Blob Storage.
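The "already started" record can be sketched with an in-memory flag, the simplest of the storage options listed above. This is an illustration, not the CPlase implementation: `QueueDrainer`, `OnNotified` and `Processed` are hypothetical names, and a `ConcurrentQueue<string>` stands in for the Azure Queue.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public sealed class QueueDrainer
{
    private readonly ConcurrentQueue<string> queue;   // stand-in for the Azure Queue
    private readonly Func<string, bool> handle;       // message processing logic
    private int running;                              // 0 = idle, 1 = processing started

    public int Processed { get; private set; }

    public QueueDrainer(ConcurrentQueue<string> queue, Func<string, bool> handle)
    {
        this.queue = queue;
        this.handle = handle;
    }

    // Called when a "task added" message arrives from an application instance.
    public void OnNotified()
    {
        while (true)
        {
            // If processing has already been started, do not start it again.
            if (Interlocked.CompareExchange(ref running, 1, 0) != 0) return;

            try
            {
                // Take tasks one after another until the queue is empty.
                while (queue.TryDequeue(out var message))
                {
                    if (handle(message)) Processed++;
                }
            }
            finally
            {
                Volatile.Write(ref running, 0); // clear the "started" record
            }

            // A task may have arrived after the last dequeue but before the flag
            // was cleared; its notification was skipped, so check once more.
            if (queue.IsEmpty) return;
        }
    }
}
```

The final check matters: without it, a task added just as the drain loop finishes could stay in the queue until the next notification. A flag kept in memory is lost when the instance restarts, which is why the more durable storage options may be preferable.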

For queue processing, the CPlase library has a special Queue class that lets you create queue handlers. The library also provides the IWorkerQueueHandler interface and extensions for it, which make working with queues convenient:

```csharp
using System;

// A queue handler implements the processing logic for one message.
public interface IWorkerQueueHandler
{
    bool HandleQueue(string Message);
}

public static class WorkerQueueHandlerExtensions
{
    // Turns "Namespace.Type, Assembly, ..." into "namespace-type".
    private static string CleanUpQueueName(string DirtyQueueName)
    {
        return DirtyQueueName.Substring(0, DirtyQueueName.IndexOf(","))
            .ToLowerInvariant()
            .Replace(".", "-");
    }

    // Queue name for a handler instance.
    public static string GetQueueName(this IWorkerQueueHandler Handler)
    {
        return CleanUpQueueName(Handler.GetType().AssemblyQualifiedName);
    }

    // Queue name for a handler type, when no instance is available.
    public static string GetQueueName(Type HandlerType)
    {
        return CleanUpQueueName(HandlerType.AssemblyQualifiedName);
    }
}
```

The interface is very simple: it has a single method that implements all the logic for processing a message from the queue. The extension has one purpose: to derive an acceptable queue name from the handler type. This frees the programmer from managing queue names for the various handlers.
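What counts as an acceptable name is defined by Azure Queue storage: 3 to 63 characters, only lowercase letters, digits and hyphens, starting and ending with a letter or digit, and no consecutive hyphens. The sketch below repeats the name derivation and adds a check against these rules. `QueueNameCheck`, `FromType` and `IsValid` are hypothetical names used for illustration and are not part of CPlase.

```csharp
using System;
using System.Text.RegularExpressions;

public static class QueueNameCheck
{
    // Letters and digits, with single hyphens allowed only between them.
    private static readonly Regex Valid =
        new Regex(@"\A[a-z0-9](?:-?[a-z0-9])*\z");

    // Same derivation as the extension: full type name, lowercased, dots to hyphens.
    public static string FromType(Type handlerType)
    {
        string name = handlerType.AssemblyQualifiedName;
        return name.Substring(0, name.IndexOf(','))
            .ToLowerInvariant()
            .Replace(".", "-");
    }

    // Checks the length limits and the allowed character pattern.
    public static bool IsValid(string queueName)
    {
        return queueName.Length >= 3
            && queueName.Length <= 63
            && Valid.IsMatch(queueName);
    }
}
```

For an ordinary top-level class the derived name passes the check. Generic or nested handler types would produce characters such as `` ` `` or `+`, and long namespaces could exceed 63 characters, so a check like this can catch such cases before the queue is created.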

The Queue class itself selects the required queue and creates it if it does not yet exist in Azure storage. It also adds tasks to the queue, which involves sending a message to an instance of the Azure service. As noted earlier, this uses the mechanism for exchanging messages between instances and roles.

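```csharp
// Excerpt from the Queue class. queueStorage and CreateOnceQueue are
// assumed to be defined elsewhere in the class.
public static CloudQueue GetQueue(string QueueName)
{
    // Create the queue in Azure storage if it does not exist yet.
    CreateOnceQueue(QueueName);
    return queueStorage.GetQueueReference(QueueName);
}

public static bool AddToQueue<QueueHandlerType>(string Task)
    where QueueHandlerType : IWorkerQueueHandler
{
    var Queue = GetQueue(WorkerQueueHandlerExtensions.GetQueueName(typeof(QueueHandlerType)));
    return AddToQueue<QueueHandlerType>(Queue, Task);
}

public static bool AddToQueue<QueueHandlerType>(CloudQueue Queue, string Task)
    where QueueHandlerType : IWorkerQueueHandler
{
    try
    {
        var Message = new CloudQueueMessage(Task);
        Queue.AddMessage(Message);
        // Notify the Worker Role so that it starts processing the queue right away.
        Internal.RoleCommunicatior.WorkerRoleCommand(typeof(QueueHandlerType));
        return true;
    }
    catch { return false; } // any failure is reported to the caller as false
}
```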

The benefit of this solution is easy to estimate if you roughly know how many tasks enter the queue and how they are distributed over time. For example, if your tasks arise mainly in the evening, between 20:00 and 23:00, then interval-based polling runs “idle” for most of the day: for 21 hours it polls the queue without result. If the check runs once a second, that is about 75,000 “idle” transactions per day (21 × 3,600 = 75,600). At a price of $0.01 per 10,000 transactions, this comes to about $0.075 per day, or $2.25 per month. With 100 different queues, the additional cost is about $225 per month.
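The same estimate can be written as a small calculation, so that you can substitute your own numbers. This is a sketch: `IdleCost` and its methods are hypothetical names, the price of $0.01 per 10,000 transactions is the one used in this article, and a month is taken as 30 days.

```csharp
using System;

public static class IdleCost
{
    // Number of polls per day that find an empty queue.
    public static long IdlePollsPerDay(double idleHoursPerDay, double pollIntervalSeconds)
    {
        return (long)(idleHoursPerDay * 3600 / pollIntervalSeconds);
    }

    // Monthly cost of idle polls across all queues, in dollars.
    public static decimal MonthlyCost(
        long idlePollsPerDay, decimal pricePer10k, int daysPerMonth, int queueCount)
    {
        return idlePollsPerDay / 10000m * pricePer10k * daysPerMonth * queueCount;
    }
}
```

With 21 idle hours and a one-second interval, `IdlePollsPerDay` returns 75,600. Without rounding down to 75,000, `MonthlyCost` gives $2.268 for one queue and $226.80 for 100 queues, which agrees with the rounded figures above.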

The other side of interval-based processing is delay. For some tasks such a delay is not significant. But we have tasks that must be processed as quickly as possible, for example the distribution of income between the author, the distributor and the service. This task is time-sensitive, since authors want to see the money they have earned in their account immediately, not some time later. Moreover, if the flow of jobs is very large, a one-second delay between processing runs limits throughput to 60 tasks per minute, and that assumes processing itself takes no time. At peak load the queue can therefore grow very quickly.

With interval-based processing you have to balance the cost of “idle” attempts to fetch a task against the processing delay at peak load. Our proposed solution removes the need to worry about intervals and idle transactions. It lets you process tasks from the queue without delay, with high efficiency and without additional costs for “idle” transactions.

Finally, the mechanism for working with queues is part of the open-source CPlase library, which will soon be published and available to everyone.

Read in the series:

- “AtContent.com. Internal structure and architecture”

- “A mechanism for exchanging messages between roles and instances”

- “Caching data on an instance and managing caching”

- “LINQ extensions for Azure Table Storage that implement Or and Contains operations”

- “Practical advice on dividing data into parts, generating PartitionKey and RowKey for Azure Table Storage”