Recommender Systems: SVD Part I

We continue to talk about recommender systems. Last time, we made the first attempt to determine the similarity between users and the similarity between products. Today we will approach the same task from the other side - we will try to educate the factors that characterize users and products. If Vasya from the previous post likes films about tractors and doesn't like films about pigs, and Peter, on the contrary, it would be just great to learn to understand which films are “about pigs” and recommend them to Peter, and which films are “about tractors”, and recommend them to Vasya.

I remind once again (and, apparently, I will remind you until the end of time) that we have a matrix consisting of ratings (likes, purchase facts, etc.) that users (matrix rows) assigned to products (matrix columns). Let's look at the matrix in which the ratings we know are recorded. As a rule, a single user will not be able to evaluate a significant share of products, and it is unlikely that there will be many products that are ready to evaluate a significant proportion of users. This means that the matrix R is sparse; Is there any way to take advantage of this?

in which the ratings we know are recorded. As a rule, a single user will not be able to evaluate a significant share of products, and it is unlikely that there will be many products that are ready to evaluate a significant proportion of users. This means that the matrix R is sparse; Is there any way to take advantage of this?

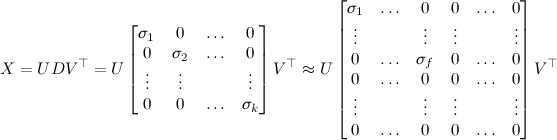

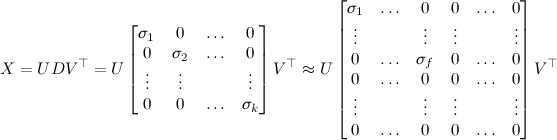

The main tool for us will be the so-called singular decomposition of the matrix R :

Singular decomposition is a fairly simple but very powerful tool. Actually, this is one of the main results of linear algebra from the practical point of view, and the result is already not new (SVD properties were studied at the latest in the 1930s), and it is even more surprising when university courses in linear algebra are quite detailed in which other aspects completely bypass the SVD side.

If you have three minutes, you can enjoy a vivid example of mathematical humor; humor, of course, is mathematical, so it’s not so funny, but the essence is lucid and the fans will surely like the music ... hmm, on the hub, it’s probably better to say what the fans of Fallout will like :

In case you still haven’t found three minutes, I’ll highlight the main property for us: if R is a matrix of large size but small rank f (in particular, sparse matrices are often of small rank), it can be decomposed into the product of the matrix

but small rank f (in particular, sparse matrices are often of small rank), it can be decomposed into the product of the matrix  and the matrix

and the matrix  , thereby drastically reducing the number of parameters, from NM to ( N + M ) f (to understand that this is a big progress, imagine that, as is usually the case in practice, N and M are measured in hundreds of thousands and millions, and f is less than a dozen )

, thereby drastically reducing the number of parameters, from NM to ( N + M ) f (to understand that this is a big progress, imagine that, as is usually the case in practice, N and M are measured in hundreds of thousands and millions, and f is less than a dozen )

SVD is very widely used in machine learning; in fact, if you want to bring something closer, it is possible that you will meet SVD somewhere along the way. A classic example of the application of SVD is noise reduction, for example, in images. Consider the (black and white) image as a matrix of X size , the elements of which are the intensities of each pixel. Now let's try to select f columns of pixels from the image, consider them “representative” and present each of the remaining columns as a linear combination of these. I will not go into mathematics now, but as a result, when you find the optimal representations of each column, it turns out that you presented the original matrix as a product of

, the elements of which are the intensities of each pixel. Now let's try to select f columns of pixels from the image, consider them “representative” and present each of the remaining columns as a linear combination of these. I will not go into mathematics now, but as a result, when you find the optimal representations of each column, it turns out that you presented the original matrix as a product of  matrices of size

matrices of size  and

and , that is, they just approximated the matrix X by a matrix of small rank f .

, that is, they just approximated the matrix X by a matrix of small rank f .

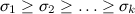

The main property of SVD is that SVD gives such an optimal approximation if you just leave exactly f of the first diagonal elements in the matrix D and zero the rest: In the diagonal matrix D , which is in the middle of the singular decomposition, the elements are ordered by size: so that nullify the last elements - this means nullify the smallest elements. If you choose f well , then as a result the image will lose weight as well, and there will be less noise on it. A f

in this case, it can be selected based on the size of the singular values of the matrix, i.e. of the very diagonal elements of the matrix D : it is desirable to discard as many as possible, but at the same time as small as possible such elements.

But we were distracted. In the case of recommendation systems, it turns out that we represent each user as a vector of f factors and represent each product as a vector of f factors

and represent each product as a vector of f factors  , and then, to predict the rating of user i to product j , we take their scalar product

, and then, to predict the rating of user i to product j , we take their scalar product . We can say that the vector of user factors shows how much the user likes or dislikes one or another factor, and the vector of product factors shows how much one factor or another is expressed in the product. Linear algebra tells us that for a sparse rating matrix, such a decomposition is often possible and has a meaningful meaning.

. We can say that the vector of user factors shows how much the user likes or dislikes one or another factor, and the vector of product factors shows how much one factor or another is expressed in the product. Linear algebra tells us that for a sparse rating matrix, such a decomposition is often possible and has a meaningful meaning.

It may turn out, by the way, that some factors will be easily understood by the human mind: for films, something like “comedy-drama”, “share of action”, “share of romance”, etc., can stand out, and user factors, accordingly, they will show how much the corresponding characteristics of the film are to their liking. But nothing substantial can stand out - there are no guarantees, formally we simply juggle with numbers.

Next time we will turn this basic idea - to bring the rating matrix closer to a low-rank matrix using SVD - into a well-posed optimization problem and learn how to solve it.

I remind once again (and, apparently, I will remind you until the end of time) that we have a matrix consisting of ratings (likes, purchase facts, etc.) that users (matrix rows) assigned to products (matrix columns). Let's look at the matrix

in which the ratings we know are recorded. As a rule, a single user will not be able to evaluate a significant share of products, and it is unlikely that there will be many products that are ready to evaluate a significant proportion of users. This means that the matrix R is sparse; Is there any way to take advantage of this?

in which the ratings we know are recorded. As a rule, a single user will not be able to evaluate a significant share of products, and it is unlikely that there will be many products that are ready to evaluate a significant proportion of users. This means that the matrix R is sparse; Is there any way to take advantage of this? The main tool for us will be the so-called singular decomposition of the matrix R :

Singular decomposition is a fairly simple but very powerful tool. Actually, this is one of the main results of linear algebra from the practical point of view, and the result is already not new (SVD properties were studied at the latest in the 1930s), and it is even more surprising when university courses in linear algebra are quite detailed in which other aspects completely bypass the SVD side.

If you have three minutes, you can enjoy a vivid example of mathematical humor; humor, of course, is mathematical, so it’s not so funny, but the essence is lucid and the fans will surely like the music ... hmm, on the hub, it’s probably better to say what the fans of Fallout will like :

In case you still haven’t found three minutes, I’ll highlight the main property for us: if R is a matrix of large size

but small rank f (in particular, sparse matrices are often of small rank), it can be decomposed into the product of the matrix

but small rank f (in particular, sparse matrices are often of small rank), it can be decomposed into the product of the matrix  and the matrix

and the matrix  , thereby drastically reducing the number of parameters, from NM to ( N + M ) f (to understand that this is a big progress, imagine that, as is usually the case in practice, N and M are measured in hundreds of thousands and millions, and f is less than a dozen )

, thereby drastically reducing the number of parameters, from NM to ( N + M ) f (to understand that this is a big progress, imagine that, as is usually the case in practice, N and M are measured in hundreds of thousands and millions, and f is less than a dozen )SVD is very widely used in machine learning; in fact, if you want to bring something closer, it is possible that you will meet SVD somewhere along the way. A classic example of the application of SVD is noise reduction, for example, in images. Consider the (black and white) image as a matrix of X size

, the elements of which are the intensities of each pixel. Now let's try to select f columns of pixels from the image, consider them “representative” and present each of the remaining columns as a linear combination of these. I will not go into mathematics now, but as a result, when you find the optimal representations of each column, it turns out that you presented the original matrix as a product of

, the elements of which are the intensities of each pixel. Now let's try to select f columns of pixels from the image, consider them “representative” and present each of the remaining columns as a linear combination of these. I will not go into mathematics now, but as a result, when you find the optimal representations of each column, it turns out that you presented the original matrix as a product of  matrices of size

matrices of size  and

and , that is, they just approximated the matrix X by a matrix of small rank f .

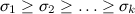

, that is, they just approximated the matrix X by a matrix of small rank f . The main property of SVD is that SVD gives such an optimal approximation if you just leave exactly f of the first diagonal elements in the matrix D and zero the rest: In the diagonal matrix D , which is in the middle of the singular decomposition, the elements are ordered by size: so that nullify the last elements - this means nullify the smallest elements. If you choose f well , then as a result the image will lose weight as well, and there will be less noise on it. A f

in this case, it can be selected based on the size of the singular values of the matrix, i.e. of the very diagonal elements of the matrix D : it is desirable to discard as many as possible, but at the same time as small as possible such elements.

But we were distracted. In the case of recommendation systems, it turns out that we represent each user as a vector of f factors

and represent each product as a vector of f factors

and represent each product as a vector of f factors  , and then, to predict the rating of user i to product j , we take their scalar product

, and then, to predict the rating of user i to product j , we take their scalar product . We can say that the vector of user factors shows how much the user likes or dislikes one or another factor, and the vector of product factors shows how much one factor or another is expressed in the product. Linear algebra tells us that for a sparse rating matrix, such a decomposition is often possible and has a meaningful meaning.

. We can say that the vector of user factors shows how much the user likes or dislikes one or another factor, and the vector of product factors shows how much one factor or another is expressed in the product. Linear algebra tells us that for a sparse rating matrix, such a decomposition is often possible and has a meaningful meaning. It may turn out, by the way, that some factors will be easily understood by the human mind: for films, something like “comedy-drama”, “share of action”, “share of romance”, etc., can stand out, and user factors, accordingly, they will show how much the corresponding characteristics of the film are to their liking. But nothing substantial can stand out - there are no guarantees, formally we simply juggle with numbers.

Next time we will turn this basic idea - to bring the rating matrix closer to a low-rank matrix using SVD - into a well-posed optimization problem and learn how to solve it.