AI Challenge 2011 Ants. Through the eyes of the participant Murashka (15th place)

The tournament attracted by its simplicity and gathered a wide audience. The idea came to the taste of high school students and the wise experience of a guru who remembered the 1972 world computer chess championship.

The algorithms used by the leaders were approximately the same, the basic ones were two - breadth-first search ( BFS ), to determine the nearest path to distant targets and minimax in close combat. The devil was hiding in the correct method of choosing goals and fine-tuning details.

BFS illustration for multiple sources (author BenJackson):

To find the details that influence the result, you had to do a lot of theoretical and experimental work.

For a quick implementation, have a simple, easily modifiable core algorithm.

To set up, quick and accurate tests to determine the usefulness of the innovation (70 percent of the seemingly useful changes had a negative effect).

But at the time of launch, I did not know all this and, based on a quick analysis of the forum, I began to struggle with the time limit. As a result, a quick, but cumbersome and not at all convenient for experimentation wide search option was born. The first version was ready in a few days, only knew how to collect food, but easily climbed into the Top 100 on the official server.

I wanted something big and clean, but, in the end, it was implemented by a truncated minimax and worked quite well.

Each turn, 5 cards were calculated on the assumption that the enemy would move with all ants in one direction or stand still. Then the map was divided into squares of 5 * 5 cells and my ants, falling into the attack zone (5 pieces maximum), moved in all possible ways. The results were collected by minimax into the assessment and the most favorable combination of moves was chosen. For more correct processing of joints, the 5 * 5 grid itself also shifted slightly each turn.

Now it was possible to equate the enemy ants closest to the heal with food, the rest of the attack algorithm was done by itself.

Details that allowed to significantly improve the game looked quite trivial:

I tried to implement the scheme proposed by Memetix (the final 10th place).

According to the algorithm, it was suggested that each cell on the map give value, according to the distance from the target. That would fit me nicely into the attack algorithm - I would just add value to the overall score and get the best combination with minimax. But nothing good happened right away - the collection of food was worse than the current version and it was not possible to defeat the bunching of ants at the intersection of the "fields" even after various dances with repulsion. There was no time left to finish the method. It was decided to leave as is and bring to mind the existing code.

The latest coefficients are selected. On a TCP server, 8 (4 + 4) copies with two different sets of constants hang around the clock. However, to determine who is better this does not help - constant timeouts due to the large number of players or when approaching the limit of 200 ants (teams go to the server for a long time). In addition, the spread in the class of bots does not allow the rating algorithm to correctly determine the rating. The test set of cards also shows conflicting results, so the slightly more aggressive version (due to the technique of displacing the mask 5 by 5 when calculating the attack) goes to the final. Also, at the last moment, a bonus is bolted for moving towards food at the time of the attack. Tests unanimously swore that it was useful.

The official server in chaos, most of the main participants resubmit with the latest changes.

4 games without surprises. The choice of rivals is random.

Games are very slow.

Starting with the 5th installment, the organizers have included the TrueSkill rating evaluation algorithm - the rating consists of two coefficients: mu - the rating itself and sigma - reflecting the confidence of the system in the rating. The overall rating is mu-3 * sigma. Opponents are selected with a close rating and with a lower sigma. In practice, this has led to the fact that due to large sigma top bots play much less often than others.

For Murashka, the 5th game ended up in the fact that, having passed under the cover of the fog of war, on a small poor map a bot, not included in the first two hundred, stole a single heal. Total -4 to mu and a place in the fifth hundred. The casino is open with chic.

The rating of some players is considered to be erroneous. Errors are once again corrected, the server is restarted.

Games go even slower.

Starter packs gladly played 20 games each other, the leaders look at them with envy and 6 games in the asset. The organizers change the algorithm for selecting opponents to even out the number of games, after which they include clipping to the 5000th place. And in the evening until the 3000th and extend the competition for a day and a half. Hallelujah!

Cut-off in 2000 and 1000 places.

The Russian teapotahedron team is one game away from xathis, all in anticipation of a change of leader ... not fate.

Goosebumps peacefully rustling in the 20th.

500 clipping.

teapotahedron goes down (finish in 5th place).

But the scenario of the previous day is repeated with the Ukrainian GreenTea. This time it’s successful - the change of leader really happened 2 hours before the server stopped. In addition, the rival selection algorithm failed again, selecting a participant outside the cut-off limit of 500, and allocated all server power to compensate for games he did not play - the entire top half hour carefully sucks his paw.

But a miracle did not happen, xathis confidently and deservedly regained its first place, with which I congratulate him.

Goosebump, who occupied the 14th place a couple of hours before the finish, was ahead of the Japanese Komaki by the last game in a full-time meeting. And at the same time, it was passed by the Russian SDil_ and meduz. Again the casino, but it could have been worse - 15th place for this set of cards is a good result.

Fast but cumbersome code complicated the modification and landed a fantasy flight.

A lot of time wasted trying to resolve the battle with no minimax.

Little attention is paid to the competent definition of goals and the distribution of ants between them. Ants tended to pile up in heaps opposite a group of opponents, instead of going down to where there were no enemies and grabbing a map.

Walking in line would definitely give an advantage, but how competently and quickly to implement it did not occur to me.

The initial distribution was not effective. The closer to the heal, the more often the distant target could change as a result of the ants twitching, not knowing where to go to them.

Catching an opponent with a head start in a few months is harder than it sounds.

A testing system to determine utility is very important.

On the last day, everything changes dramatically, and the final competition is very different from testing.

You need to get rid of small errors right away - they accumulate and can lead to the fact that a good strategy will stop working and will be discarded during testing.

The idea is great, the work is huge, the organizers deserve all thanks. It was not without errors, but we live in an imperfect world.

The controversial decision was to leave the bots from the starter pack in the competition. The organizers can be understood - I wanted to declare mass. But with more than modest computing power, it was inconvenient for real participants. However, the error was recognized and promised to filter for the future.

A set of cards with highly unstable results is also not entirely clear, because it is obvious that there are enough errors that TrueSkill needs to fix.

The sharp ups and downs in the ratings are probably also due to the set of cards and the features of the selection algorithm, which was not random enough. My version is that within a common set of final maps clusters have formed that are sharply beneficial to some bots and not beneficial to others. As soon as the rating system evaluated by TrueSkill came to an equilibrium state, the map selection algorithm came across the next such combination and mixed the table for 5-10 games. Sigma near the end shrank to a minimum, no longer affected anything and could not compensate for the bursts.

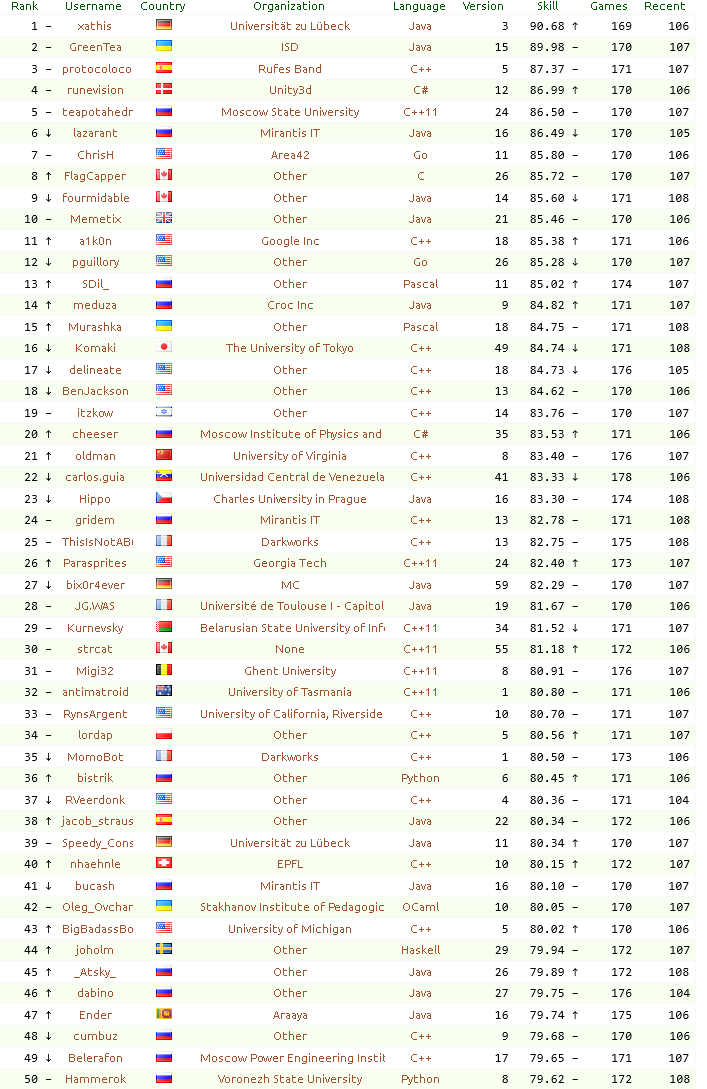

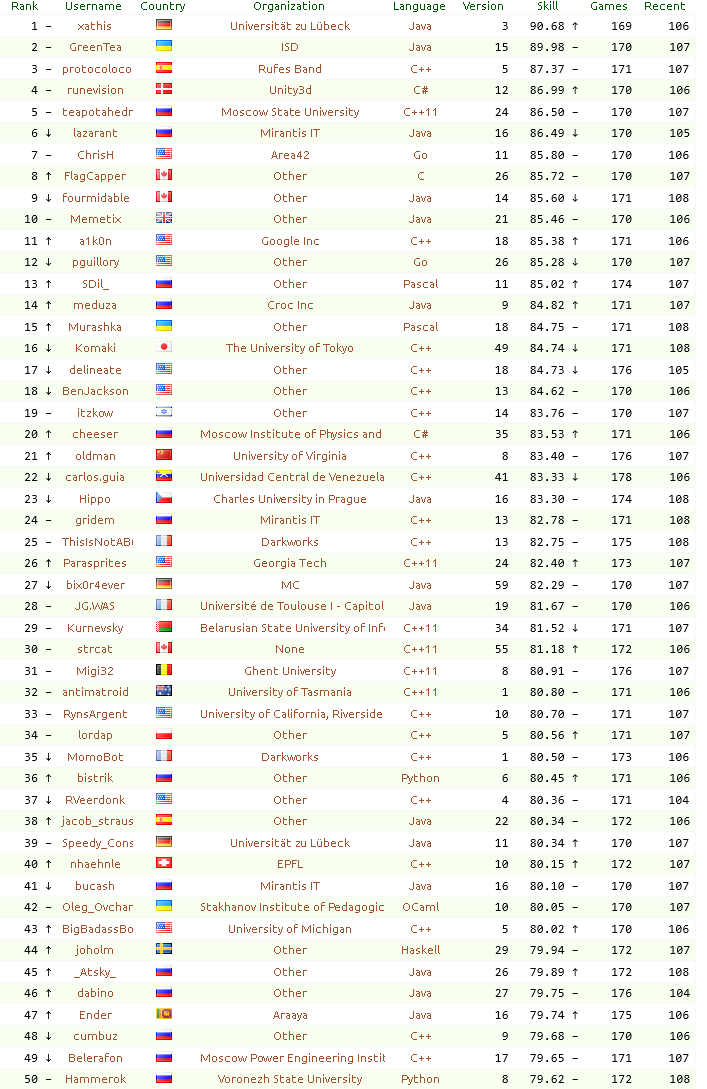

Final rating

Winner report

Lastly, a little Ant-Art performed by Murashka.

All with the upcoming and good luck in the competition!

The algorithms used by the leaders were approximately the same, the basic ones were two - breadth-first search ( BFS ), to determine the nearest path to distant targets and minimax in close combat. The devil was hiding in the correct method of choosing goals and fine-tuning details.

BFS illustration for multiple sources (author BenJackson):

To find the details that influence the result, you had to do a lot of theoretical and experimental work.

For a quick implementation, have a simple, easily modifiable core algorithm.

To set up, quick and accurate tests to determine the usefulness of the innovation (70 percent of the seemingly useful changes had a negative effect).

But at the time of launch, I did not know all this and, based on a quick analysis of the forum, I began to struggle with the time limit. As a result, a quick, but cumbersome and not at all convenient for experimentation wide search option was born. The first version was ready in a few days, only knew how to collect food, but easily climbed into the Top 100 on the official server.

Opponent collision algorithm

I wanted something big and clean, but, in the end, it was implemented by a truncated minimax and worked quite well.

Each turn, 5 cards were calculated on the assumption that the enemy would move with all ants in one direction or stand still. Then the map was divided into squares of 5 * 5 cells and my ants, falling into the attack zone (5 pieces maximum), moved in all possible ways. The results were collected by minimax into the assessment and the most favorable combination of moves was chosen. For more correct processing of joints, the 5 * 5 grid itself also shifted slightly each turn.

Now it was possible to equate the enemy ants closest to the heal with food, the rest of the attack algorithm was done by itself.

Details that allowed to significantly improve the game looked quite trivial:

- not stand on one’s heel if there’s nothing more to do or under attack

- don't crush each other

- retreat to your chil

- bypass attacked cells

- prioritize attacking enemies that are too close to the heal

- during the attack, move to food (otherwise they would hang opposite the enemy)

- in a one-on-one game, behave more aggressively.

2 weeks before the deadline

I tried to implement the scheme proposed by Memetix (the final 10th place).

According to the algorithm, it was suggested that each cell on the map give value, according to the distance from the target. That would fit me nicely into the attack algorithm - I would just add value to the overall score and get the best combination with minimax. But nothing good happened right away - the collection of food was worse than the current version and it was not possible to defeat the bunching of ants at the intersection of the "fields" even after various dances with repulsion. There was no time left to finish the method. It was decided to leave as is and bring to mind the existing code.

Day before the deadline

The latest coefficients are selected. On a TCP server, 8 (4 + 4) copies with two different sets of constants hang around the clock. However, to determine who is better this does not help - constant timeouts due to the large number of players or when approaching the limit of 200 ants (teams go to the server for a long time). In addition, the spread in the class of bots does not allow the rating algorithm to correctly determine the rating. The test set of cards also shows conflicting results, so the slightly more aggressive version (due to the technique of displacing the mask 5 by 5 when calculating the attack) goes to the final. Also, at the last moment, a bonus is bolted for moving towards food at the time of the attack. Tests unanimously swore that it was useful.

The official server in chaos, most of the main participants resubmit with the latest changes.

Finals

The first day

4 games without surprises. The choice of rivals is random.

Games are very slow.

Second day

Starting with the 5th installment, the organizers have included the TrueSkill rating evaluation algorithm - the rating consists of two coefficients: mu - the rating itself and sigma - reflecting the confidence of the system in the rating. The overall rating is mu-3 * sigma. Opponents are selected with a close rating and with a lower sigma. In practice, this has led to the fact that due to large sigma top bots play much less often than others.

For Murashka, the 5th game ended up in the fact that, having passed under the cover of the fog of war, on a small poor map a bot, not included in the first two hundred, stole a single heal. Total -4 to mu and a place in the fifth hundred. The casino is open with chic.

The rating of some players is considered to be erroneous. Errors are once again corrected, the server is restarted.

Games go even slower.

Day three

Starter packs gladly played 20 games each other, the leaders look at them with envy and 6 games in the asset. The organizers change the algorithm for selecting opponents to even out the number of games, after which they include clipping to the 5000th place. And in the evening until the 3000th and extend the competition for a day and a half. Hallelujah!

Day four

Cut-off in 2000 and 1000 places.

The Russian teapotahedron team is one game away from xathis, all in anticipation of a change of leader ... not fate.

Goosebumps peacefully rustling in the 20th.

Fifth day - final

500 clipping.

teapotahedron goes down (finish in 5th place).

But the scenario of the previous day is repeated with the Ukrainian GreenTea. This time it’s successful - the change of leader really happened 2 hours before the server stopped. In addition, the rival selection algorithm failed again, selecting a participant outside the cut-off limit of 500, and allocated all server power to compensate for games he did not play - the entire top half hour carefully sucks his paw.

But a miracle did not happen, xathis confidently and deservedly regained its first place, with which I congratulate him.

Goosebump, who occupied the 14th place a couple of hours before the finish, was ahead of the Japanese Komaki by the last game in a full-time meeting. And at the same time, it was passed by the Russian SDil_ and meduz. Again the casino, but it could have been worse - 15th place for this set of cards is a good result.

Mistakes

Fast but cumbersome code complicated the modification and landed a fantasy flight.

A lot of time wasted trying to resolve the battle with no minimax.

Little attention is paid to the competent definition of goals and the distribution of ants between them. Ants tended to pile up in heaps opposite a group of opponents, instead of going down to where there were no enemies and grabbing a map.

Walking in line would definitely give an advantage, but how competently and quickly to implement it did not occur to me.

The initial distribution was not effective. The closer to the heal, the more often the distant target could change as a result of the ants twitching, not knowing where to go to them.

Notes for the future

Catching an opponent with a head start in a few months is harder than it sounds.

A testing system to determine utility is very important.

On the last day, everything changes dramatically, and the final competition is very different from testing.

You need to get rid of small errors right away - they accumulate and can lead to the fact that a good strategy will stop working and will be discarded during testing.

Organization

The idea is great, the work is huge, the organizers deserve all thanks. It was not without errors, but we live in an imperfect world.

The controversial decision was to leave the bots from the starter pack in the competition. The organizers can be understood - I wanted to declare mass. But with more than modest computing power, it was inconvenient for real participants. However, the error was recognized and promised to filter for the future.

A set of cards with highly unstable results is also not entirely clear, because it is obvious that there are enough errors that TrueSkill needs to fix.

The sharp ups and downs in the ratings are probably also due to the set of cards and the features of the selection algorithm, which was not random enough. My version is that within a common set of final maps clusters have formed that are sharply beneficial to some bots and not beneficial to others. As soon as the rating system evaluated by TrueSkill came to an equilibrium state, the map selection algorithm came across the next such combination and mixed the table for 5-10 games. Sigma near the end shrank to a minimum, no longer affected anything and could not compensate for the bursts.

Final rating

Winner report

Lastly, a little Ant-Art performed by Murashka.

All with the upcoming and good luck in the competition!