Inside Tactoom.com: a NodeJS / NoSQL social blogging platform


It's time to show some of our cards and explain how Tactoom works on the inside. In this article I will describe the development and production setup of a web service built with:

NodeJS (fibers), MongoDB, Redis, ElasticSearch, Capistrano, Rackspace.

Introduction

Three weeks ago, David and I (DMiloshev) launched Tactoom.com, an “infosocial” network. You can read about what it is here.

With all the recent noise around NodeJS, many of you are probably wondering what this technology looks like in practice rather than in words.

NodeJS is by no means a panacea. It is just another technology, really no better than the others. To get good performance and scalability, you have to work hard for it, just as with anything else.

Application architecture

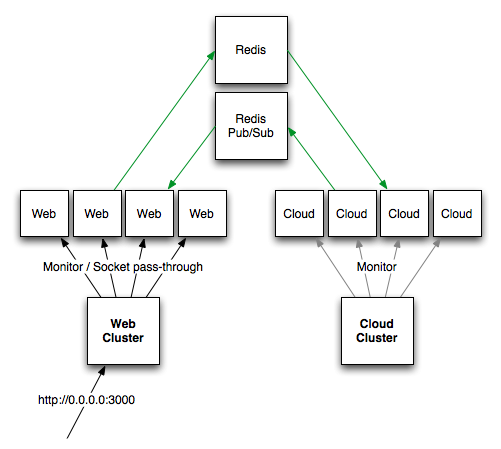

The NodeJS application is split into two types of processes:

1. Web process (http)

2. Cloud process (queues)

All processes are completely independent of each other and can run on different servers, even in different parts of the world. The application scales precisely by adding more of these processes. They communicate with each other exclusively through a centralized message server (Redis).

Web processes serve users' HTTP requests directly. Each process can handle many requests at once. Because of how the event loop works, the number of requests a single process can handle concurrently rises or falls with the CPU/IO ratio of the requests it is serving at that moment.

Cloud processes perform operations that are not directly tied to user requests: sending email, denormalizing data, search indexing, and so on. Like a Web process, a single Cloud process can handle many different kinds of tasks at the same time.

It is worth noting that the “atomicity” of tasks and queries matters a great deal here. You need to make sure that a heavy task or computation is split into many smaller parts, which are then distributed evenly across the other processes. This speeds the task up, improves fault tolerance, and reduces memory consumption and the blocking factor of each process and of the server as a whole.
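A minimal sketch of this splitting, assuming a hypothetical job format `{ type, ids }`: instead of one job that denormalizes data for every follower of a user, many small jobs are enqueued, each covering a fixed-size slice of IDs, so any free Cloud process can pick one up. `splitIntoJobs` and the `denormalizeFeed` job type are illustrative names, not Tactoom's code.

```javascript
// Split one large piece of work into many small, independent jobs.
// The job shape ({ type, ids }) is a hypothetical example.
function splitIntoJobs(type, ids, batchSize) {
  if (!Number.isInteger(batchSize) || batchSize <= 0) {
    throw new RangeError("batchSize must be a positive integer");
  }
  const jobs = [];
  for (let i = 0; i < ids.length; i += batchSize) {
    // Each job carries only its own slice, so a crash or restart
    // affects at most batchSize items.
    jobs.push({ type, ids: ids.slice(i, i + batchSize) });
  }
  return jobs;
}

// Usage: 10 follower IDs, 4 per job.
const followerIds = Array.from({ length: 10 }, (_, i) => i + 1);
const jobs = splitIntoJobs("denormalizeFeed", followerIds, 4);
console.log(jobs.map((j) => j.ids.length)); // [ 4, 4, 2 ]
```

Smaller batches spread the load more evenly and lose less work on failure, at the cost of more queue traffic.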

Web → Cloud

I try to structure Web processes so that their time is dominated by IO rather than CPU, which means focusing on serving HTTP responses quickly under high concurrency. In practice, the Web delegates CPU-heavy logic to the Cloud, waits for it to finish, and then receives the result. Thanks to NodeJS's asynchronous architecture, the Web process can serve other requests while it waits.
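The request/reply round trip can be sketched as follows. This is an illustration only: an in-memory broker stands in for Redis (in a real deployment the queue would be a Redis list and the reply would travel over Redis as well), and `InMemoryBroker`, `callCloud`, `cloudWorkOnce` and the `reply:N` channel naming are all hypothetical.

```javascript
const { EventEmitter } = require("events");

// In-memory stand-in for the Redis queue and reply channels.
class InMemoryBroker {
  constructor() {
    this.queues = new Map(); // queue name -> array of pending jobs
    this.replies = new EventEmitter(); // reply channel -> result event
  }
  push(queue, job) {
    if (!this.queues.has(queue)) this.queues.set(queue, []);
    this.queues.get(queue).push(job);
  }
  pop(queue) {
    const q = this.queues.get(queue);
    return q && q.length ? q.shift() : undefined;
  }
}

let nextId = 0;

// Web side: enqueue a CPU-heavy job and wait for the result without
// blocking the event loop; other requests keep being served meanwhile.
function callCloud(broker, queue, payload) {
  const replyTo = `reply:${++nextId}`;
  return new Promise((resolve) => {
    broker.replies.once(replyTo, resolve);
    broker.push(queue, { replyTo, payload });
  });
}

// Cloud side: take one job, do the heavy work, publish the result.
function cloudWorkOnce(broker, queue, handler) {
  const job = broker.pop(queue);
  if (!job) return false;
  broker.replies.emit(job.replyTo, handler(job.payload));
  return true;
}

// Usage: the Web process asks the Cloud to sum an array.
const broker = new InMemoryBroker();
const pending = callCloud(broker, "cpu-tasks", [1, 2, 3, 4]);
cloudWorkOnce(broker, "cpu-tasks", (nums) => nums.reduce((a, b) => a + b, 0));
pending.then((sum) => console.log(sum)); // 10
```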

Clustering

The architecture of the Web and Cloud processes is very similar, except that instead of an HTTP socket, a Cloud process “listens” to a Redis queue.

Node processes are clustered according to the following principles:

1. One supervisor process (node-cluster) runs on each physical server.

2. The supervisor's child processes are our Web and Cloud processes; their number always equals the number of CPU cores on the server.

3. The supervisor monitors the memory consumption of each child process and, if it exceeds a configured limit, restarts it (after waiting for that process to finish its current requests).
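The supervisor's decision logic can be sketched as a pure function. This does not reproduce node-cluster's API; the report format `{ pid, rssBytes, activeRequests }` and the action names are assumptions for illustration.

```javascript
const os = require("os");

// One child per CPU core (at least one, in case os.cpus() reports none).
function plannedWorkerCount() {
  return Math.max(1, os.cpus().length);
}

// reports: array of { pid, rssBytes, activeRequests } (hypothetical format).
// For each worker over the limit: drain it first if it still has requests
// in flight, otherwise restart it right away.
function restartDecisions(reports, limitBytes) {
  return reports
    .filter((r) => r.rssBytes > limitBytes)
    .map((r) => ({
      pid: r.pid,
      action: r.activeRequests > 0 ? "drain-then-restart" : "restart-now",
    }));
}

const MB = 1024 * 1024;
const decisions = restartDecisions(
  [
    { pid: 101, rssBytes: 300 * MB, activeRequests: 5 },
    { pid: 102, rssBytes: 850 * MB, activeRequests: 2 },
    { pid: 103, rssBytes: 820 * MB, activeRequests: 0 },
  ],
  800 * MB
);
console.log(plannedWorkerCount(), decisions); // 102 drains first, 103 restarts now
```

With Node's built-in `cluster` module, workers could report `process.memoryUsage().rss` to the master via `process.send()`, the draining step maps onto `worker.disconnect()` (which closes the worker's servers and lets existing connections finish), and `cluster.fork()` starts the replacement.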

Fibers

The entire high-level layer of the application is written with node-sync (fibers); I can hardly imagine developing it without. Implementing complex things such as the static-asset build in the “official” callback-driven paradigm is very hard, if not outright foolish. If you have never looked at the source of npm itself, I strongly recommend doing so: try to understand what is going on there and, most importantly, why. The holy wars and trolling that spring up around NodeJS's asynchronous paradigm almost every day amaze me, to put it mildly.

You can find out more about node-sync in my article:

node-sync - pseudo-synchronous programming on nodejs using fibers
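node-sync's own API is not reproduced here. As a rough modern analogue of the same “write it sequentially” effect (a swapped-in technique, not what Tactoom used), compare a nested callback version with one built on `util.promisify` and `async/await`. `getUser` and `getPosts` are hypothetical stand-ins for database calls.

```javascript
const util = require("util");

// Hypothetical callback-style data access functions.
function getUser(id, cb) {
  setImmediate(() => cb(null, { id, name: `user${id}` }));
}
function getPosts(userId, cb) {
  setImmediate(() => cb(null, [`post by ${userId}`]));
}

// Callback style: every step nests deeper and repeats error handling.
function loadProfileCb(id, cb) {
  getUser(id, (err, user) => {
    if (err) return cb(err);
    getPosts(user.id, (err2, posts) => {
      if (err2) return cb(err2);
      cb(null, { user, posts });
    });
  });
}

// Sequential style: same logic read top to bottom; errors propagate as throws.
const getUserP = util.promisify(getUser);
const getPostsP = util.promisify(getPosts);
async function loadProfile(id) {
  const user = await getUserP(id);
  const posts = await getPostsP(user.id);
  return { user, posts };
}

loadProfileCb(7, (err, profile) => console.log(err, profile.posts));
loadProfile(7).then((profile) => console.log(profile.posts));
```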

Web

The Web application's overall logic is built on the expressjs framework in the usual Express style, except that each request is wrapped in its own Fiber, inside which all operations are written in synchronous style.

Because some parts of expressjs, routing in particular, cannot be overridden, I had to take it out of npm and move it into the main project repository. The same goes for a number of other modules (especially those developed by LearnBoost), because contributing to their projects is very difficult and not always possible at all.

CSS is generated with stylus. It is genuinely very convenient.

Template engine: ejs (on both the server and the client).

File uploads: connect-form.

The Web is very fast, since all modules are loaded and initialized in process memory at startup. I try to keep the average response time of a Web process for any page under 300 ms (excluding image uploads, registration, etc.). When profiling, I was surprised to find that 70% of this time was taken by mongoose (a MongoDB ORM for NodeJS); more on that below.

i18n

For a long time I looked for a suitable internationalization solution for NodeJS, and my search ended with node-gettext plus a bit of tweaking. It works like clockwork: the server's NodeJS processes pick up locale files on the fly when they are updated.

Cache

The caching functionality, with all its logic, fits into two screens of code. Redis is used as the cache backend.
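A cache-aside helper of this kind could look roughly like the sketch below. To keep it self-contained, an in-memory `TtlStore` with expiry timestamps stands in for Redis (where one would use `SET key value EX ttl` and `GET key`), and everything is synchronous for brevity although a real Redis client is asynchronous. `TtlStore` and `cached` are hypothetical names.

```javascript
// In-memory stand-in for Redis with per-key expiry.
class TtlStore {
  constructor(now = () => Date.now()) {
    this.now = now;
    this.map = new Map(); // key -> { value, expiresAt }
  }
  get(key) {
    const entry = this.map.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.map.delete(key); // lazily drop expired entries
      return undefined;
    }
    return entry.value;
  }
  set(key, value, ttlSeconds) {
    this.map.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }
}

// Return the cached value, or compute, store and return a fresh one.
function cached(store, key, ttlSeconds, compute) {
  const hit = store.get(key);
  if (hit !== undefined) return hit;
  const value = compute();
  store.set(key, value, ttlSeconds);
  return value;
}

// Usage with a fake clock so expiry is deterministic.
let clock = 0;
const store = new TtlStore(() => clock);
let computations = 0;
const render = () => `page v${++computations}`;

console.log(cached(store, "page:/", 60, render)); // page v1 (miss)
console.log(cached(store, "page:/", 60, render)); // page v1 (hit)
clock += 61 * 1000;
console.log(cached(store, "page:/", 60, render)); // page v2 (expired)
```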

Memory

In Web processes, memory leaks like a river, because of mongoose, as it later turned out. Under average daytime load, one process grows to 800 MB within two hours, after which the supervisor restarts it.

Hunting down memory leaks in NodeJS is rather difficult; if you know any good techniques, let me know.

Data

Practice has shown that MongoDB's schema-less paradigm is ideal for the Tactoom model. The database itself behaves well (it weighs 376 MB, of which 122 MB is indexes), and data is selected only via indexes, so no query takes longer than 30 ms, even under high load (most queries take under 1 ms).

If there is interest, in the second part I can describe in more detail how we managed to “tame” MongoDB for a number of non-trivial tasks (and where we failed).

mongoosejs (mongodb ORM for nodejs)

It deserves a separate word. Take selecting a list of 20 users: querying and selecting the data in Mongo takes 2 ms, data transfer takes 10 ms, then mongoose does something else for 200 ms (not to mention memory), and only then do I get the objects. Rewritten on the lower-level node-mongodb-native driver, the whole thing takes 30 ms.

Gradually I had to rewrite almost everything on mongodb-native, which improved the overall performance of the system by a factor of 10.

Statics

All of Tactoom's static files are stored on Rackspace Cloud Storage. I use the static CDN domains X.infosocial.net, where X is 1..n. Each of these domains points via DNS to the container's internal domain in Cloud Storage, which lets browsers load static files in parallel. Each static file is stored in two copies (plain and gzip) and has a unique name with the version baked in. When a file's version changes, its address changes, and browsers download the new file.
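A minimal sketch of producing the two copies under a versioned name, assuming the version is a content hash and a hypothetical naming scheme `name.<hash>.ext` plus a `.gz` suffix for the gzip copy; the source does not say how Tactoom derives its versions.

```javascript
const crypto = require("crypto");
const zlib = require("zlib");
const path = require("path");

// Build the plain and gzip copies of one static file under a name that
// embeds a content hash, so any change to the content changes the URL.
function versionedCopies(filename, content) {
  const hash = crypto.createHash("sha256").update(content).digest("hex").slice(0, 10);
  const ext = path.extname(filename);
  const base = filename.slice(0, filename.length - ext.length);
  const versioned = `${base}.${hash}${ext}`;
  return {
    plain: { name: versioned, body: Buffer.from(content) },
    gzip: { name: `${versioned}.gz`, body: zlib.gzipSync(content) },
  };
}

const v1 = versionedCopies("css/main.css", "body{color:#333}");
const v2 = versionedCopies("css/main.css", "body{color:#000}");
console.log(v1.plain.name, v1.gzip.name);
console.log(v1.plain.name !== v2.plain.name); // true: new content, new URL
```

Because the name depends only on the content, unchanged files keep their URLs (and stay in browser caches), while changed files get new ones automatically.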

The application's static assets (client-side JS and CSS, images) are built by a home-grown mechanism that detects changed files (via git log), minifies them, makes a gzip copy, and uploads them to the CDN. The build script also tracks changed images and updates their addresses in the corresponding CSS files.
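The CSS-rewriting step might look like this sketch, assuming the build has a `Map` from local image paths to current CDN URLs. The function name and the `logo.3f2a.png` version in the example URL are made up for illustration.

```javascript
// Replace url(...) references in CSS with each image's current CDN address.
// Handles unquoted, 'single' and "double" quoted forms; paths missing from
// the mapping are left untouched.
function rewriteCssUrls(css, mapping) {
  return css.replace(/url\(\s*(['"]?)([^'")]+)\1\s*\)/g, (match, quote, ref) => {
    const cdnUrl = mapping.get(ref.trim());
    return cdnUrl ? `url(${quote}${cdnUrl}${quote})` : match;
  });
}

const urls = new Map([
  ["img/logo.png", "http://1.infosocial.net/img/logo.3f2a.png"],
]);
const css = 'h1{background:url("img/logo.png")} .x{background:url(img/other.png)}';
console.log(rewriteCssUrls(css, urls));
// h1{background:url("http://1.infosocial.net/img/logo.3f2a.png")} .x{background:url(img/other.png)}
```

A regular expression is enough for simple stylesheets; a real CSS parser would be safer if `url(` can appear inside comments or strings.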

A list (mapping) of the static addresses of all files is stored in Redis. Every Web process loads this list into memory at startup or when static versions are updated.

In fact, deploying any change to the statics is done with a single command that does everything by itself. Moreover, no restart is required, since the NodeJS applications pick up the changed static file addresses on the fly via Redis pub/sub.

User-uploaded statics are also stored on Rackspace, but unlike the application statics they have no versions; instead, they go through a canonicalization step that makes it possible to derive the CDN addresses of all of an image's sizes from the image's hash.

Consistent hashing is used to determine the host (cdn X) on which a particular static file is stored.
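The hot reload of the address map can be sketched like this. In place of Redis, a plain object plays the key holding the map and an `EventEmitter` plays the pub/sub channel; the channel name `statics:updated`, `StaticUrls` and `deployStatics` are hypothetical.

```javascript
const { EventEmitter } = require("events");

// Stand-ins: `store` plays the Redis key with the address map,
// `bus` plays a Redis pub/sub channel.
const store = { statics: { "js/app.js": "http://1.infosocial.net/js/app.v1.js" } };
const bus = new EventEmitter();

class StaticUrls {
  constructor() {
    this.reload(); // load once at startup
    bus.on("statics:updated", () => this.reload()); // reload on every deploy
  }
  reload() {
    // Swap in a whole new map so lookups never see a half-updated one.
    this.map = new Map(Object.entries(store.statics));
  }
  url(localPath) {
    const url = this.map.get(localPath);
    if (url === undefined) throw new Error(`unknown static file: ${localPath}`);
    return url;
  }
}

// The deploy command: upload new files, update the stored map, then notify.
function deployStatics(newMap) {
  store.statics = { ...store.statics, ...newMap };
  bus.emit("statics:updated");
}

const statics = new StaticUrls();
console.log(statics.url("js/app.js")); // http://1.infosocial.net/js/app.v1.js
deployStatics({ "js/app.js": "http://1.infosocial.net/js/app.v2.js" });
console.log(statics.url("js/app.js")); // http://1.infosocial.net/js/app.v2.js
```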
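A sketch of both ideas together: a consistent-hash ring with virtual nodes picks the cdn X host from the image hash, and every size of the image is addressed on that same host by a path derived from the hash alone. The `<hash>_<size>.jpg` naming, the 100 virtual nodes per host, and the example hash are assumptions, not Tactoom's actual scheme.

```javascript
const crypto = require("crypto");

// 32-bit position on the ring for any string.
function hash32(str) {
  return crypto.createHash("sha1").update(str).digest().readUInt32BE(0);
}

class HashRing {
  constructor(hosts, replicas = 100) {
    // Each host gets several virtual points to even out the distribution.
    this.points = [];
    for (const host of hosts) {
      for (let i = 0; i < replicas; i++) {
        this.points.push({ pos: hash32(`${host}#${i}`), host });
      }
    }
    this.points.sort((a, b) => a.pos - b.pos);
  }
  hostFor(key) {
    const h = hash32(key);
    // Binary search for the first point with pos >= h, wrapping to 0.
    let lo = 0;
    let hi = this.points.length;
    while (lo < hi) {
      const mid = (lo + hi) >> 1;
      if (this.points[mid].pos < h) lo = mid + 1;
      else hi = mid;
    }
    return this.points[lo % this.points.length].host;
  }
}

// Hypothetical canonical naming: all sizes on one host, path from hash + size.
function imageUrls(ring, imageHash, sizes) {
  const host = ring.hostFor(imageHash);
  return sizes.map((s) => `http://${host}/${imageHash}_${s}.jpg`);
}

const ring = new HashRing(["1.infosocial.net", "2.infosocial.net", "3.infosocial.net"]);
console.log(imageUrls(ring, "9f86d081884c7d65", [50, 200, 800]));
```

The point of consistent hashing here is that adding a host (say 4.infosocial.net) moves only the images whose ring positions now fall on the new host's points; every other image keeps its URL.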

Server architecture

Tactoom is spread across 3 isolated zones:

1. Rackspace: a platform for fast scaling and for storing statics

2. A platform in Europe: our physical servers are here

3. Secret (logs are rotated here, background calculations are run and statistics are collected)

Only one server faces the outside world: nginx, with ports 80 and 4000 open. The latter is used for COMET connections.

The remaining servers talk to each other over direct IPs and are closed off from the outside world with iptables.

:80

nginx proxies requests to the Web servers via its upstream configuration. There are currently two upstreams: tac_main and tac_media. Each contains a list of Web servers running node-cluster on port 3000, and each Web server has its own priority in the distribution of requests.

tac_main is a cluster of Web servers located close to the database; they serve most web pages to registered Tactoom users.

tac_media is a cluster of Web servers located close to the CDN. All image uploads and resizing go through them.

The webN and cloudN servers are shown to illustrate where I add servers during a Habr effect (a traffic spike from a Habrahabr post) and other pleasant events.

New servers come up within 10 minutes from a server image stored on the CDN.
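In nginx, such per-server priority is expressed with the `weight=` parameter on `server` lines inside an `upstream` block. To show what the weighting does, here is a sketch of smooth weighted round-robin, the scheme nginx uses for weighted balancing as far as I know. The server addresses and weights are made up.

```javascript
// Smooth weighted round-robin: on each pick, every server's current weight
// grows by its configured weight; the largest wins and is reduced by the
// total. Heavier servers get proportionally more requests, interleaved.
class WeightedRoundRobin {
  constructor(servers) {
    this.servers = servers.map((s) => ({ ...s, current: 0 }));
    this.total = servers.reduce((sum, s) => sum + s.weight, 0);
  }
  next() {
    let best = null;
    for (const s of this.servers) {
      s.current += s.weight;
      if (best === null || s.current > best.current) best = s;
    }
    best.current -= this.total;
    return best.address;
  }
}

// Hypothetical tac_main members.
const upstream = new WeightedRoundRobin([
  { address: "10.0.0.1:3000", weight: 5 },
  { address: "10.0.0.2:3000", weight: 1 },
  { address: "10.0.0.3:3000", weight: 1 },
]);
// Over 7 picks: .1 five times, .2 and .3 once each, interleaved.
console.log(Array.from({ length: 7 }, () => upstream.next()));
```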

:4000

This is a plain proxy_pass to the comet server, which runs Beseda, a NodeJS COMET application that I will cover in the second part.

tac1, tac2, data1

These are the main Tactoom servers: Xeon X3440 4x2.53 GHz, 16 GB RAM, 2x1500 GB RAID1.

A mongod process runs on each of them; they are all combined into a ReplicaSet with automatic failover and with reads distributed to the slaves.

tac1 hosts the main Web cluster and tac2 the Cloud cluster. Each cluster runs 8 NodeJS processes.

In the near future I will create another upstream, tac_search, to which only search queries will be routed. It will have its own Web cluster, which I will place on a server next to elasticsearch (more on that in the second part).

Conclusions

Quoting the slogan of the creators of NodeJS:

“Because nothing blocks, less-than-expert programmers are able to develop fast systems.”


- This is a lie. I've been using NodeJS for almost 2 years now, and I know from my own experience that developing “fast systems” on it takes no less experience (if not more) than with any other technology. In reality, with NodeJS's callback-driven paradigm and the quirks of JavaScript in general, you are more likely to make a mistake (and then spend a very long time hunting it down) than to gain performance.

- On the other hand, Ted Dziuba's trolling is also complete nonsense, for example his “Fibonacci numbers” example pulled out of thin air. Only someone who does not understand how the event loop works and why it exists at all would do that (which, incidentally, confirms point 1).

After my talk at DevConf this spring, I am often asked whether a new project should be built on NodeJS. My answer to everyone:

If you have a lot of time and are ready to invest it in a new, raw, and controversial technology, go ahead. But if you have deadlines, customers, or investors and little experience with server-side JS, you shouldn't.

As practice has shown, building a project on NodeJS is feasible. It works. But it cost me a lot. The node open-source community alone deserves a story of its own, which I still intend to write.

Part 2

The second part of the article will come out in the next few days. Here is a short list of what it will cover:

1. Search (elasticsearch)

2. Mail (google app engine)

3. Deployment (capistrano, npm)

4. Queues (redis, kue)

5. COMET server (beseda)

That is too much information for one article.

If I see interesting questions in the comments, I will answer them in the second part.

PS

- I won't be feeding the trolls. Critical comments that don't point to the commenter's own achievements will be ignored.

- We are looking for a front-end ninja, details here

- Let me remind you that Tactoom is in closed beta. Registration is limited: leave your email and you may soon receive an invite.

UPD 19.10:

The second part is delayed because I have a lot of work.