Experience 2 million headless sessions

- Transfer

Published June 4, 2018 in the browserless corporate blog We are

glad to announce that we have recently crossed the milestone of two million sessions served ! These are millions of generated screenshots, printed PDF and tested sites. We have done almost everything you can think of to do with a headless browser.

Although it is pleasant to achieve such a milestone, there are clearly many overlays and problems on the way . In connection with the huge amount of traffic received, I would like to take a step back and make general recommendations for launching headless browsers (and puppeteer ) in production.

Here are some tips.

In no way, if at all possible, never start the browser in headless mode . Especially on the same infrastructure as your application (see above). The headless browser is unpredictable, voracious, and breeds like Mr. Misyks from Rick and Morty. Almost everything that a browser can do (except for interpolating and running JavaScript) can be done using simple Linux tools. The Cheerio libraries and others offer an elegant Node API for extracting data with HTTP requests and scraping, if that is your goal.

For example, you can pick up a page (assuming it is some kind of HTML) and perform scraping with simple commands like this:

Obviously, the script does not cover all use cases, and if you are reading this article, then most likely you will have to use a headless browser. Therefore we will start.

We are faced with numerous users who are trying to keep the browser running, even if it is not used (with open connections). Although this may be a good strategy to speed up the launch of a session, it will crash after a few hours. In many ways, because browsers love to cache everything and gradually consume memory. As soon as you stop using the browser intensively - close it immediately!

In browserless, we usually fix this error ourselves for users, always setting a timer on the session and closing the browser when WebSocket is disabled. But if you are not using our service or a backup Docker image , then be sure to check for some kind of automatic closing of the browser, because it will be unpleasant when everything falls in the middle of the night.

3. Your friend

In Puppeteer, there are many nice methods like keeping DOM selectors and other things around Node. Although it is very convenient, you can easily shoot yourself in the foot, if something on the page makes this DOM node mutate . It’s not so cool, but in reality it’s better to do all the work on the browser side in the context of the browser . This usually means loading

For example, instead of something similar ( three actions async):

It is better to do this (one async action):

Another advantage of wrapping actions into a call

A simple rule of thumb is to count the number

So, we realized that the browser does not run well and should be done only when absolutely necessary. The next tip is to run only one session per browser. Although in reality it is possible to save resources by parallelizing the work through

Instead of this:

Better do this:

Each new browser instance gets clean

One of the main browserless features is the ability to accurately limit the parallelization and queue. So client applications just run

The best and easiest way is to take our Docker image and run it with the necessary parameters:

This limits the number of parallel requests to ten (including debugging sessions and more). The queue is configured variable

6. Do not forget

One of the most common problems that we have encountered is actions that trigger the loading of pages and then abruptly stop the scripts. This happens because the actions that launch

For example, this

But it works in another ( see demo ).

Read more about waitForNavigation here . This function has approximately the same interface parameters as the y

For Chrome to work correctly, you need a lot of dependencies. Really a lot. Even after installing everything you need to worry about things like fonts and phantom processes. Therefore, it is ideal to use some kind of container to put everything there. Docker is almost specifically created for this task, since you can limit the amount of available resources and isolate it. If you want to create your own

And to avoid zombie processes (common in Chrome), it’s better to use something like dumb-init to run properly :

If you want to learn more, take a look at our Dockerfile .

It is useful to remember that there are two JavaScript runtime environments (Node and a browser). This is great for separating tasks, but confusion inevitably occurs, because some methods will require an explicit transfer of references instead of closures or hoistings.

For example, take

Thus, instead of referring to

Better pass the parameter:

We are incredibly optimistic about the future of headless browsers and all the automation they allow to achieve. Using powerful tools like puppeteer and browserless, we hope that debugging and running headless automation in production will be easier and faster. Soon we will launch pay-as-you-go billing for accounts and features that will help you better cope with your headless work!

glad to announce that we have recently crossed the milestone of two million sessions served ! These are millions of generated screenshots, printed PDF and tested sites. We have done almost everything you can think of to do with a headless browser.

Although it is pleasant to achieve such a milestone, there are clearly many overlays and problems on the way . In connection with the huge amount of traffic received, I would like to take a step back and make general recommendations for launching headless browsers (and puppeteer ) in production.

Here are some tips.

1. Do not use a headless browser at all.

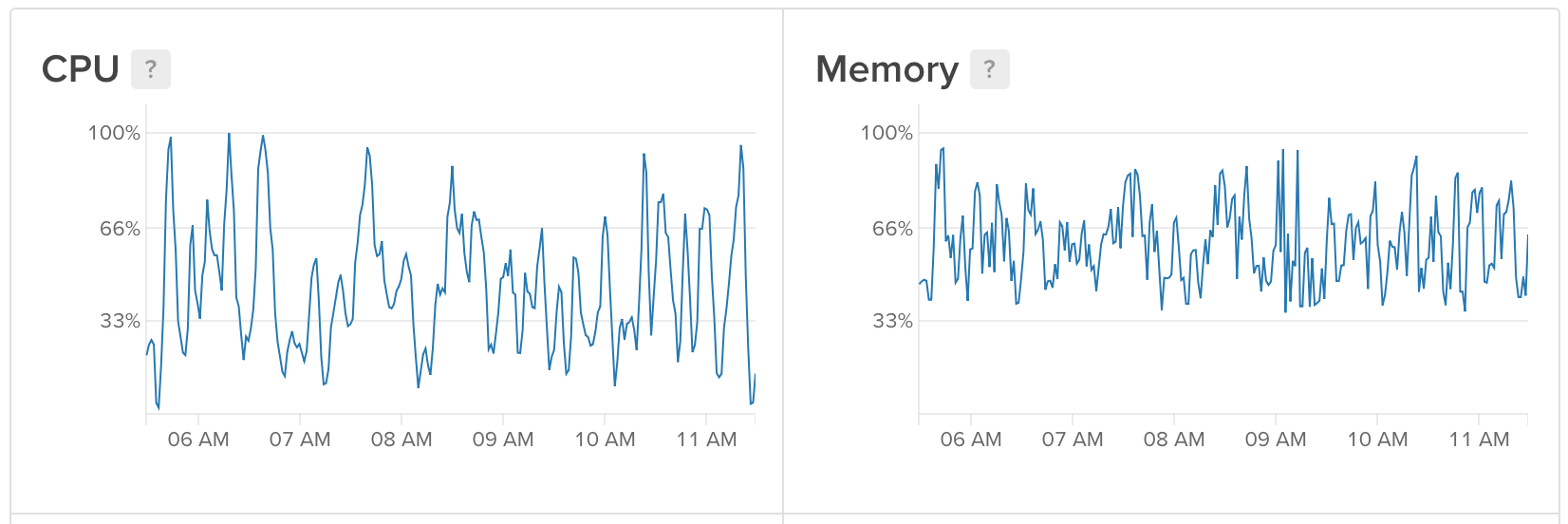

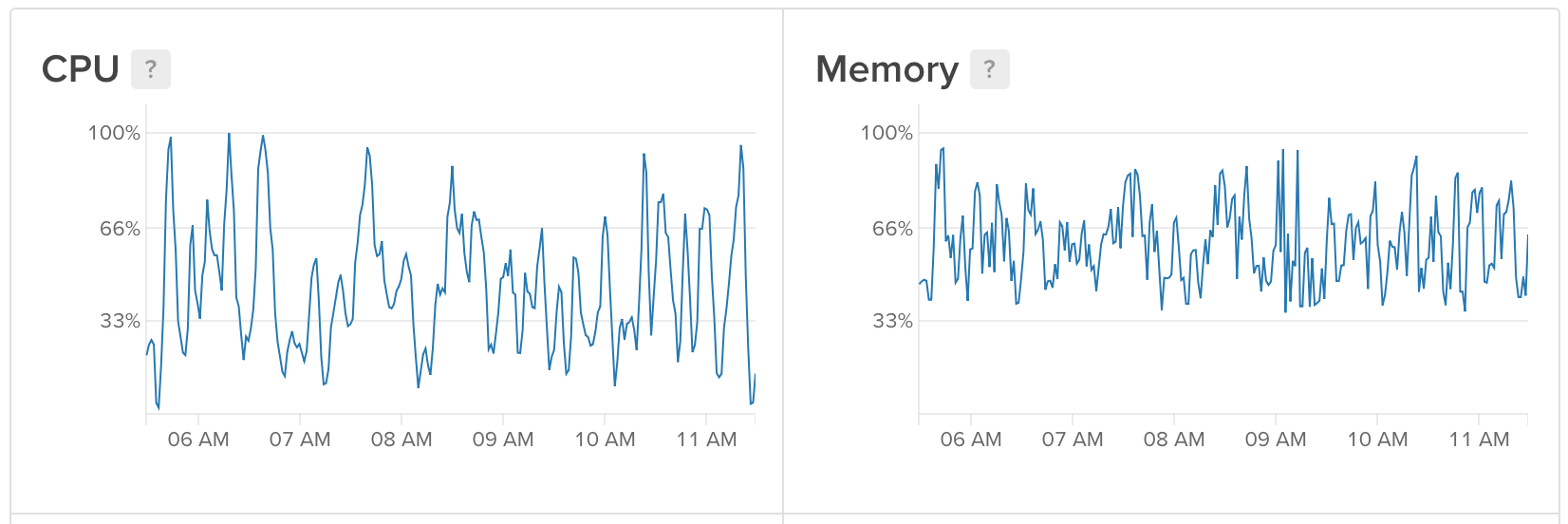

Variable Resource Consumption Headless Chrome

In no way, if at all possible, never start the browser in headless mode . Especially on the same infrastructure as your application (see above). The headless browser is unpredictable, voracious, and breeds like Mr. Misyks from Rick and Morty. Almost everything that a browser can do (except for interpolating and running JavaScript) can be done using simple Linux tools. The Cheerio libraries and others offer an elegant Node API for extracting data with HTTP requests and scraping, if that is your goal.

For example, you can pick up a page (assuming it is some kind of HTML) and perform scraping with simple commands like this:

import cheerio from'cheerio';

import fetch from'node-fetch';

asyncfunctiongetPrice(url) {

const res = await fetch(url);

const html = await res.test();

const $ = cheerio.load(html);

return $('buy-now.price').text();

}

getPrice('https://my-cool-website.com/');Obviously, the script does not cover all use cases, and if you are reading this article, then most likely you will have to use a headless browser. Therefore we will start.

2. Do not launch the headless browser unnecessarily

We are faced with numerous users who are trying to keep the browser running, even if it is not used (with open connections). Although this may be a good strategy to speed up the launch of a session, it will crash after a few hours. In many ways, because browsers love to cache everything and gradually consume memory. As soon as you stop using the browser intensively - close it immediately!

import puppeteer from'puppeteer';

asyncfunctionrun() {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto('https://www.example.com/');

// More stuff ...page.click() page.type()

browser.close(); // <- Always do this!

}In browserless, we usually fix this error ourselves for users, always setting a timer on the session and closing the browser when WebSocket is disabled. But if you are not using our service or a backup Docker image , then be sure to check for some kind of automatic closing of the browser, because it will be unpleasant when everything falls in the middle of the night.

3. Your friend page.evaluate

Be careful with transpilers like babel or typescript, as they like to create helper functions and assume that those are available with closures. That is, the .evaluate callback may not work correctly.

In Puppeteer, there are many nice methods like keeping DOM selectors and other things around Node. Although it is very convenient, you can easily shoot yourself in the foot, if something on the page makes this DOM node mutate . It’s not so cool, but in reality it’s better to do all the work on the browser side in the context of the browser . This usually means loading

page.evaulatefor all the work that needs to be done. For example, instead of something similar ( three actions async):

const $anchor = await page.$('a.buy-now');

const link = await $anchor.getProperty('href');

await $anchor.click();

return link;It is better to do this (one async action):

await page.evaluate(() => {

const $anchor = document.querySelector('a.buy-now');

const text = $anchor.href;

$anchor.click();

});Another advantage of wrapping actions into a call

evaluateis portability: you can run this code in the browser for testing instead of trying to rewrite the Node code. Of course, it is always recommended to use a debugger to reduce development time. A simple rule of thumb is to count the number

awaitor thenin code. If there is more than one, then it is probably better to run the code inside the call page.evaluate. The reason is that all the async actions go back and forth between the Node runtime and the browser, which means constant serialization and deserialization of JSON. Although there is not such a huge amount of parsing (because everything is supported by WebSockets), it still takes time, which is better to spend on something else.4. Parallelize browsers, not web pages.

So, we realized that the browser does not run well and should be done only when absolutely necessary. The next tip is to run only one session per browser. Although in reality it is possible to save resources by parallelizing the work through

pages, but if one page falls, it can crash the entire browser. In addition, it is not guaranteed that every page is perfectly clean (cookies and storage can become a headache, as we see ). Instead of this:

import puppeteer from'puppeteer';

// Launch one browser and capture the promiseconst launch = puppeteer.launch();

const runJob = async (url) {

// Re-use the browser hereconst browser = await launch;

const page = await browser.newPage();

await page.goto(url);

const title = await page.title();

browser.close();

return title;

};Better do this:

import puppeteer from'puppeteer';

const runJob = async (url) {

// Launch a clean browser for every "job"const browser = puppeteer.launch();

const page = await browser.newPage();

await page.goto(url);

const title = await page.title();

browser.close();

return title;

};Each new browser instance gets clean

--user-data-dir( unless otherwise noted ). That is, it is fully processed as a fresh new session. If Chrome falls for some reason, it will not pull other sessions with it either.5. Queue and limitation of parallel operation

One of the main browserless features is the ability to accurately limit the parallelization and queue. So client applications just run

puppeteer.connect, but they themselves do not think about the implementation of the queue. This prevents a huge number of problems, mainly with parallel Chrome instances, which consume all the available resources of your application. The best and easiest way is to take our Docker image and run it with the necessary parameters:

# Pull in Puppeteer@1.4.0 support

$ docker pull browserless/chrome:release-puppeteer-1.4.0

$ docker run -e "MAX_CONCURRENT_SESSIONS=10" browserless/chrome:release-puppeteer-1.4.0This limits the number of parallel requests to ten (including debugging sessions and more). The queue is configured variable

MAX_QUEUE_LENGTH. As a rule, you can perform approximately 10 parallel requests for each gigabyte of memory. The percentage of CPU usage may vary for different tasks, but basically you will need a lot of RAM.6. Do not forget page.waitForNavigation

One of the most common problems that we have encountered is actions that trigger the loading of pages and then abruptly stop the scripts. This happens because the actions that launch

pageloadoften cause “swallowing” of the subsequent work. To get around the problem, you usually need to trigger the page load action — and immediately wait for the download. For example, this

console.logdoes not work in one place ( see demo ):await page.goto('https://example.com');

await page.click('a');

const title = await page.title();

console.log(title);But it works in another ( see demo ).

await page.goto('https://example.com');

page.click('a');

await page.waitForNavigation();

const title = await page.title();

console.log(title);Read more about waitForNavigation here . This function has approximately the same interface parameters as the y

page.goto, but only with the “wait” part.7. Use Docker for all necessary

For Chrome to work correctly, you need a lot of dependencies. Really a lot. Even after installing everything you need to worry about things like fonts and phantom processes. Therefore, it is ideal to use some kind of container to put everything there. Docker is almost specifically created for this task, since you can limit the amount of available resources and isolate it. If you want to create your own

Dockerfile, check below all the necessary dependencies:# Dependencies needed for packages downstream

RUN apt-get update && apt-get install -y \ unzip \ fontconfig \ locales \ gconf-service \ libasound2 \ libatk1.0-0 \ libc6 \ libcairo2 \ libcups2 \ libdbus-1-3 \ libexpat1 \ libfontconfig1 \ libgcc1 \ libgconf-2-4 \ libgdk-pixbuf2.0-0 \ libglib2.0-0 \ libgtk-3-0 \ libnspr4 \ libpango-1.0-0 \ libpangocairo-1.0-0 \ libstdc++6 \ libx11-6 \ libx11-xcb1 \ libxcb1 \ libxcomposite1 \ libxcursor1 \ libxdamage1 \ libxext6 \ libxfixes3 \ libxi6 \ libxrandr2 \ libxrender1 \ libxss1 \ libxtst6 \ ca-certificates \ fonts-liberation \ libappindicator1 \ libnss3 \ lsb-release \ xdg-utils \ wgetAnd to avoid zombie processes (common in Chrome), it’s better to use something like dumb-init to run properly :

ADD https://github.com/Yelp/dumb-init/releases/download/v1.2.0/dumb-init_1.2.0_amd64 /usr/local/bin/dumb-init

RUN chmod +x /usr/local/bin/dumb-initIf you want to learn more, take a look at our Dockerfile .

8. Remember two different execution environments.

It is useful to remember that there are two JavaScript runtime environments (Node and a browser). This is great for separating tasks, but confusion inevitably occurs, because some methods will require an explicit transfer of references instead of closures or hoistings.

For example, take

page.evaluate. Deep in the depths of the protocol, there is a literal stringing of the function and its transfer to Chrome . Therefore, things like closures and lifts will not work at all . If you need to pass any references or values to the call to evaluate, simply add them as arguments that will be processed correctly. Thus, instead of referring to

selectorvia closures:const anchor = 'a';

await page.goto('https://example.com/');

// `selector` here is `undefined` since we're in the browser contextconst clicked = await page.evaluate(() =>document.querySelector(anchor).click());Better pass the parameter:

const anchor = 'a';

await page.goto('https://example.com/');

// Here we add a `selector` arg and pass in the reference in `evaluate`const clicked = await page.evaluate((selector) =>document.querySelector(selector).click(), anchor);page.evaluateOne or more arguments can be added

to the function , since it is variable here. Be sure to take advantage of this!Future

We are incredibly optimistic about the future of headless browsers and all the automation they allow to achieve. Using powerful tools like puppeteer and browserless, we hope that debugging and running headless automation in production will be easier and faster. Soon we will launch pay-as-you-go billing for accounts and features that will help you better cope with your headless work!