Four-dimensional rendering: features, problems, solutions

In the comments on the article “JavaScript Ray Tracer,” its author ankh1989 mentioned plans to write a ray tracer for four-dimensional space. Here I will try to share some of my thoughts on the topic.

So, suppose we have a scene in four-dimensional space. It consists of three-dimensional surfaces (polyhedra, spheres, cylinders, etc.) that are colored in some way and have reflective and scattering properties. We want to display it on a flat (two-dimensional) picture.

What can we do? The options are as follows:

1) Build a three-dimensional section of the scene, treat it as an ordinary 3D scene, and render it according to the laws of three-dimensional space.

2) Project 4D onto 3D (for example, with a parallel projection). Assume that the visible points of the projection inherit the properties of their originals, and again perform ordinary 3D rendering.

3) Perform a central projection of 4D onto 3D using a four-dimensional ray tracing algorithm. This yields a three-dimensional array of pixels, which must then somehow be projected onto 2D. The options here are:

3a) Choose a direction and, on each line running in that direction, find the first filled pixel (parallel orthogonal projection). The color of that pixel gives the color of the corresponding point of the final image.

3b) The same, but using a central projection.

3c, d) Take the projection from (3a, b), but instead of taking the color of the first pixel on the line, average the colors of all pixels that fall on the line.
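As a minimal sketch of the parallel variants (3a) and (3c), assume the 3D pixel array is stored as nested lists in which `volume[i][j]` is the line of voxels running along the chosen direction, and an empty voxel is `None`. The names `first_hit`, `average` and `flatten`, and this storage layout, are illustrative assumptions, not something taken from an existing renderer.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Color = Tuple[float, float, float]
Voxel = Optional[Color]  # None marks an empty voxel (assumed convention)


def first_hit(line: Sequence[Voxel]) -> Voxel:
    """Variant 3a: color of the first filled voxel on the line, or None."""
    for voxel in line:
        if voxel is not None:
            return voxel
    return None


def average(line: Sequence[Voxel]) -> Voxel:
    """Variant 3c: mean color of all filled voxels on the line, or None."""
    filled = [v for v in line if v is not None]
    if not filled:
        return None
    n = len(filled)
    return tuple(sum(c[i] for c in filled) / n for i in range(3))


def flatten(volume: Sequence[Sequence[Sequence[Voxel]]],
            pick: Callable[[Sequence[Voxel]], Voxel]) -> List[List[Voxel]]:
    """Collapse a 3D voxel array to a 2D image along its last axis."""
    return [[pick(line) for line in row] for row in volume]
```

Calling `flatten(volume, first_hit)` or `flatten(volume, average)` switches between the two variants; the central versions (3b, 3d) would differ only in how the lines through the volume are chosen.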

We discard the first two approaches as uninteresting (although they may be useful in some cases) and consider, for example, option 3b.

So, we have a camera located at the origin and pointed along the Ow axis, in the direction (0,0,0,1). It projects the point with coordinates (x, y, z, w) to (p, q, r) = (x / w, y / w, z / w). Only points with w > 0 are visible.

The second camera sits in the three-dimensional space somewhere on the Or axis, at the point (0, 0, -a), and projects the point (p, q, r) onto a two-dimensional screen at the point (u, v) = (p / (a + r), q / (a + r)). Substituting p, q, r from the first formula, we get (u, v) = (x / (z + a * w), y / (z + a * w)). This means that instead of two central projections we can make do with one: we only need to rotate the camera. The second projection then becomes orthogonal, along the axis perpendicular to both the screen and the camera axis. Thus, options (3a) and (3b) are equivalent.
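The substitution can be checked numerically. The sketch below implements both cameras and the combined formula; the function names are hypothetical, and the extra visibility test `a + r > 0` (the point lies in front of the second camera) is an assumption added here, since the text only states the condition w > 0.

```python
from typing import Optional, Tuple


def project_4d_to_3d(x: float, y: float, z: float, w: float
                     ) -> Optional[Tuple[float, float, float]]:
    """First camera: central projection along Ow; only w > 0 is visible."""
    if w <= 0:
        return None
    return (x / w, y / w, z / w)


def project_3d_to_2d(p: float, q: float, r: float, a: float
                     ) -> Optional[Tuple[float, float]]:
    """Second camera at (0, 0, -a) looking along the r axis."""
    if a + r <= 0:  # assumed: points behind the second camera are dropped
        return None
    return (p / (a + r), q / (a + r))


def project_direct(x: float, y: float, z: float, w: float, a: float
                   ) -> Optional[Tuple[float, float]]:
    """Single-step formula (u, v) = (x / (z + a*w), y / (z + a*w))."""
    denom = z + a * w
    if w <= 0 or denom <= 0:
        return None
    return (x / denom, y / denom)


def project_two_step(x: float, y: float, z: float, w: float, a: float
                     ) -> Optional[Tuple[float, float]]:
    """Compose the two cameras, for comparison with project_direct."""
    pqr = project_4d_to_3d(x, y, z, w)
    if pqr is None:
        return None
    return project_3d_to_2d(*pqr, a)
```

For any visible point, `project_two_step` and `project_direct` should agree up to floating-point rounding, which is exactly the statement that one projection is enough.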

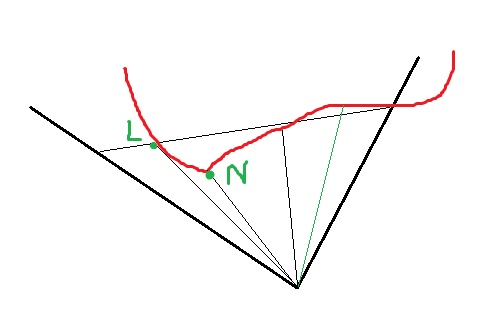

Now let's take a closer look at what these projections are. It is easy to see that each pixel of the screen receives information about the rays coming from a certain flat (planar) angle. These rays form a one-dimensional set, and we either take all of them and, for example, average them (variants 3c, d), or choose one of the rays that did not come from the void, for example the leftmost one (variants 3a, b).

I did not like the “leftmost point” option: the line OL touches the object, so what we would see is what a four-dimensional viewer would call the silhouette of the scene. Therefore I decided to try a less conventional option: consider the points of the scene that fall within the flat angle and take the color of the point closest to the camera. Such a nearest point (once the boundaries of the angle have been computed) can be found quickly for any basic object, after which tracing needs to be done only for that point. This gives one trace per pixel, which is quite fast.

What came of it?
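To illustrate how such a nearest point might be found for a sphere, here is a sketch under simplifying assumptions: the camera is at the origin and outside the sphere, pixel (u, v) collects the points with x = u(z + a·w) and y = v(z + a·w), and the boundaries of the angle are ignored, so the whole 2D plane through the camera is searched. All function names are hypothetical, and this is not necessarily how the renderer behind the pictures in this article does it.

```python
import math
from typing import Optional, Tuple

Vec4 = Tuple[float, float, float, float]


def dot(p: Vec4, q: Vec4) -> float:
    return sum(s * t for s, t in zip(p, q))


def scale(p: Vec4, k: float) -> Vec4:
    return tuple(s * k for s in p)


def sub(p: Vec4, q: Vec4) -> Vec4:
    return tuple(s - t for s, t in zip(p, q))


def add(p: Vec4, q: Vec4) -> Vec4:
    return tuple(s + t for s, t in zip(p, q))


def pixel_plane_basis(u: float, v: float, a: float) -> Tuple[Vec4, Vec4]:
    """Orthonormal basis of the plane of points that project to (u, v)."""
    e1 = (u, v, 1.0, 0.0)          # plane direction with (z, w) = (1, 0)
    e2 = (a * u, a * v, 0.0, 1.0)  # plane direction with (z, w) = (0, 1)
    b1 = scale(e1, 1.0 / math.sqrt(dot(e1, e1)))
    t = sub(e2, scale(b1, dot(e2, b1)))  # Gram-Schmidt step
    b2 = scale(t, 1.0 / math.sqrt(dot(t, t)))
    return b1, b2


def nearest_sphere_point(center: Vec4, radius: float,
                         u: float, v: float, a: float) -> Optional[Vec4]:
    """Point of the sphere inside the pixel's plane that is closest to the
    camera at the origin, or None if the plane misses the sphere."""
    b1, b2 = pixel_plane_basis(u, v, a)
    # Foot of the perpendicular dropped from the sphere center onto the plane.
    foot = add(scale(b1, dot(center, b1)), scale(b2, dot(center, b2)))
    offset = sub(center, foot)
    dist2 = dot(offset, offset)
    if dist2 > radius * radius:
        return None  # the plane does not intersect the sphere
    circle_r = math.sqrt(radius * radius - dist2)  # radius of the section
    foot_len = math.sqrt(dot(foot, foot))
    if foot_len <= circle_r:
        return None  # camera inside the section circle: not handled here
    # Move from the circle's center toward the camera by the circle's radius.
    return scale(foot, (foot_len - circle_r) / foot_len)
```

A full version would also clip the result to the angle's boundaries (for example w > 0) and compare the candidates from all objects in the scene, tracing only the closest one.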

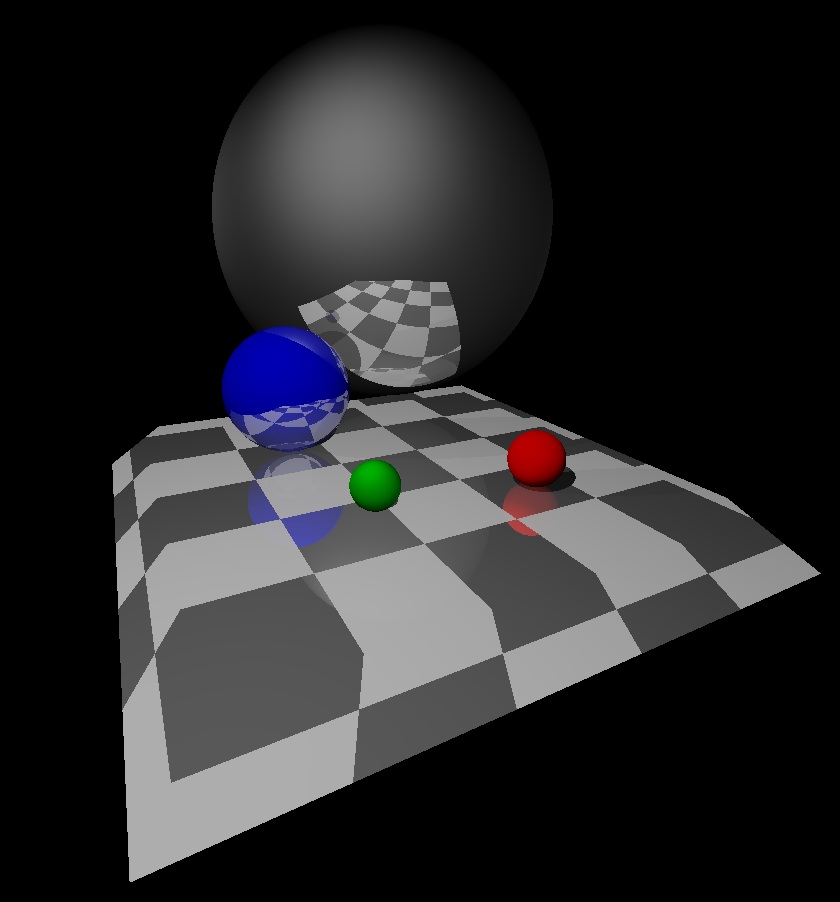

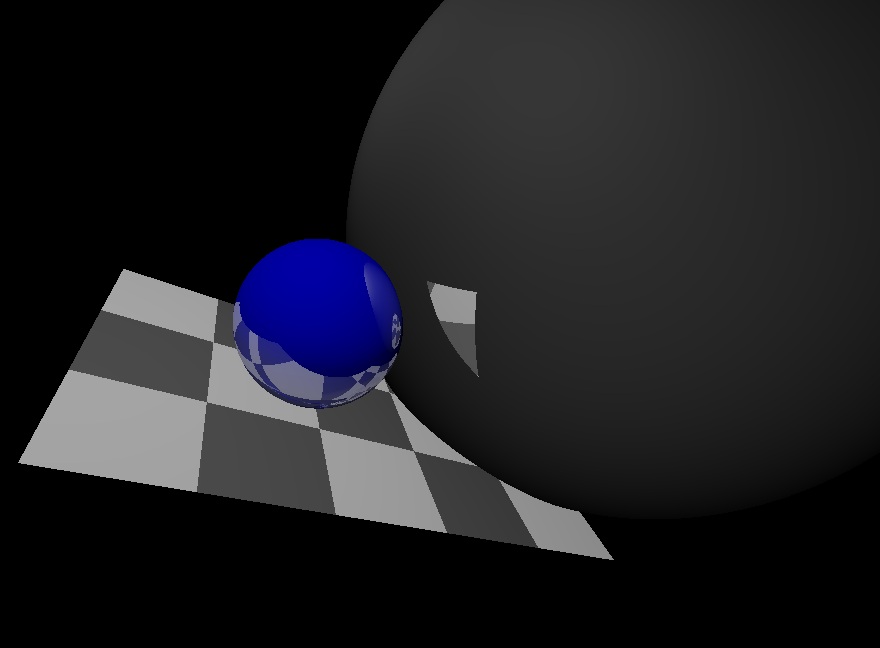

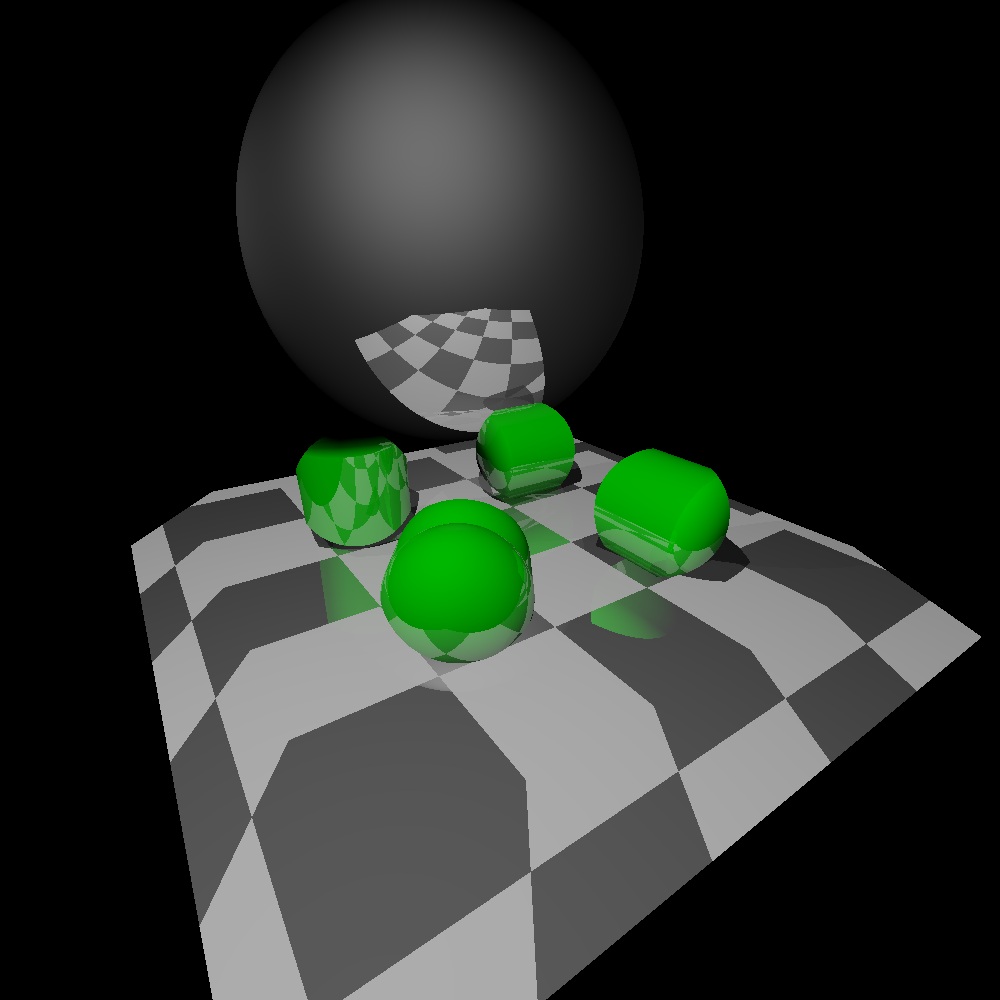

First, I placed all the objects of the scene (including the camera) in the single hyperplane w = 0. Naturally, the rendering result does not differ from a three-dimensional one (only the quality is worse; I did not try very hard):

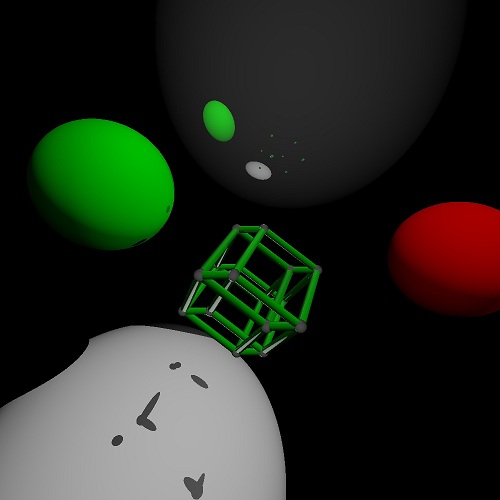

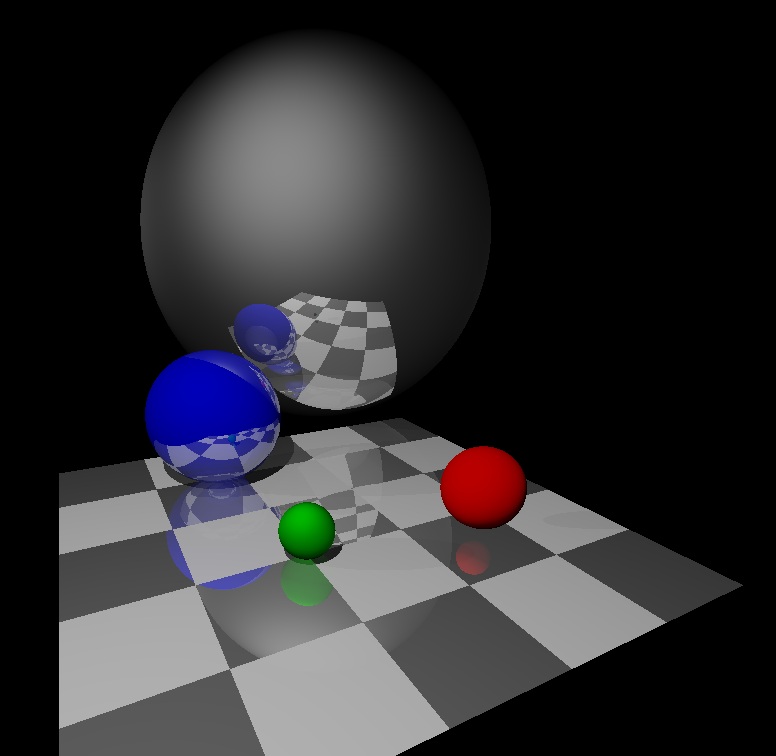

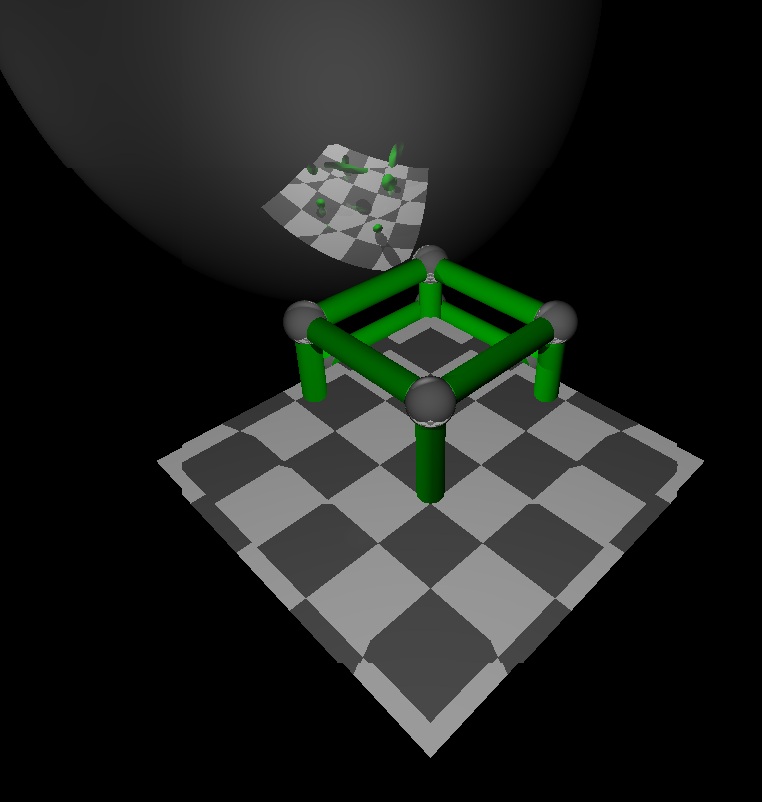

Then, without moving the objects, I began to rotate the camera in four dimensions. After the first rotation, the result was as follows:

First, the shadow of the green ball disappeared, and second, the reflections of the red and green balls in the large sphere disappeared! This happened because the points of the sphere closest to the camera changed: they are no longer the ones in which the balls were reflected.

In addition, the shape of the stand changed. Now it is clear that it is not flat but three-dimensional: it actually consists of 5 * 5 * 3 = 75 3D cubes.

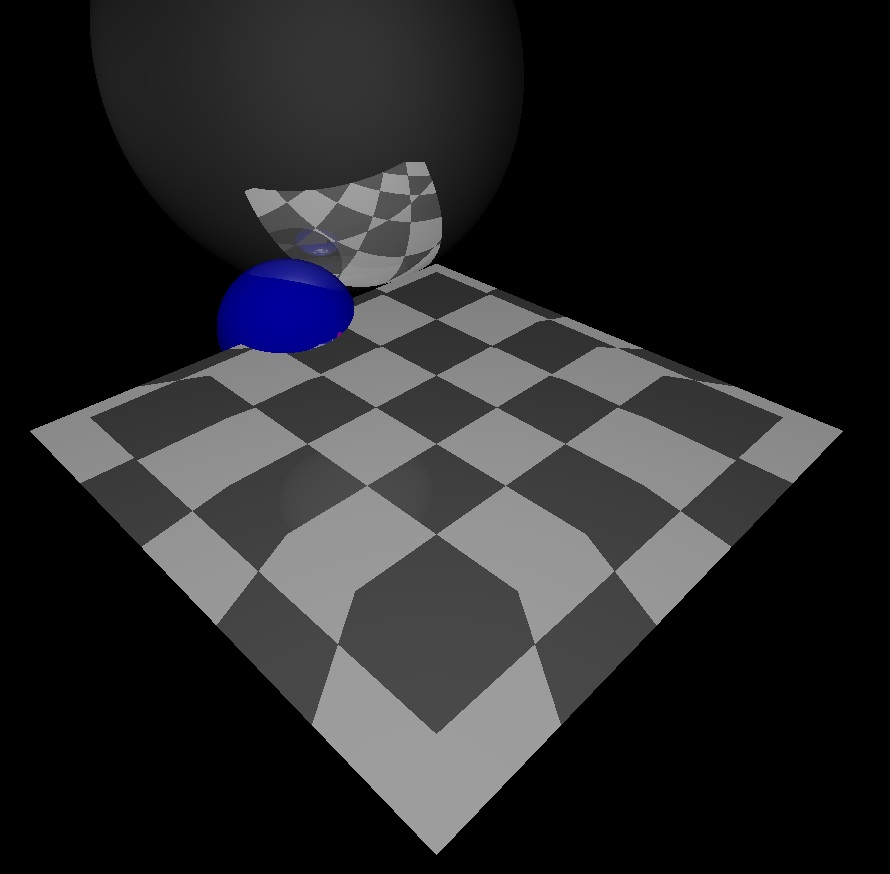

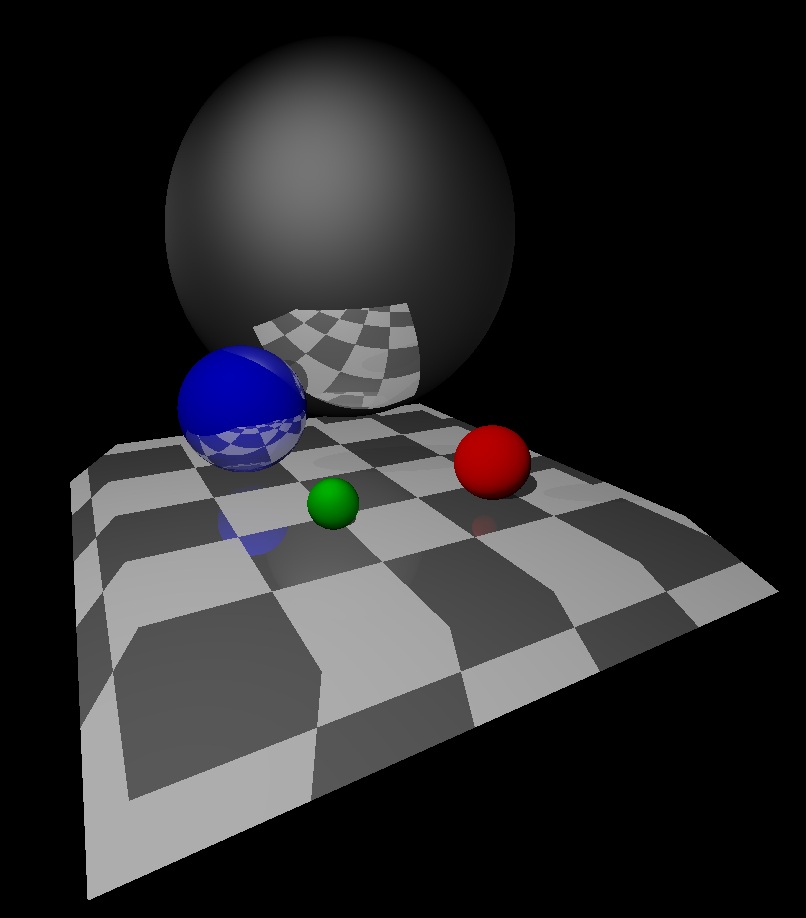

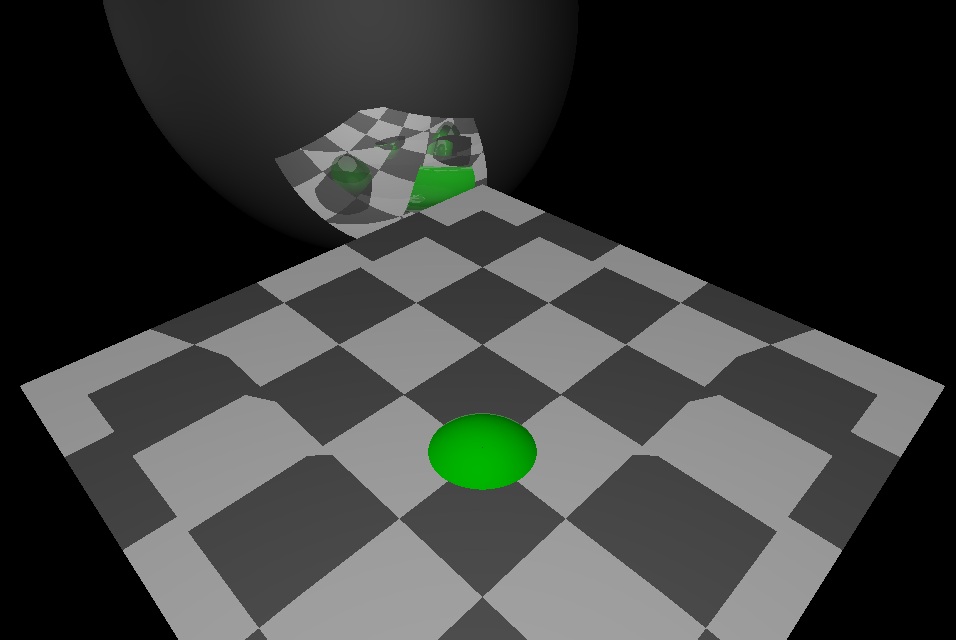

After the next rotation, the picture changed even more: two balls sank into the stand, and only one remained, itself half submerged.

And finally, a rotation of 90 degrees from the starting position: now we are looking along the w direction. Again we see one face of the stand, 3 * 5 in size.

Second experiment. We return the camera to its original position and move the balls along the w axis (in different directions). It can be seen that the reflections of the red and green balls in the big ball have disappeared, and in addition the reflection of the red ball in the plane has become much smaller. Something is also wrong with the shadows:

After the rotation, the reflections disappeared completely:

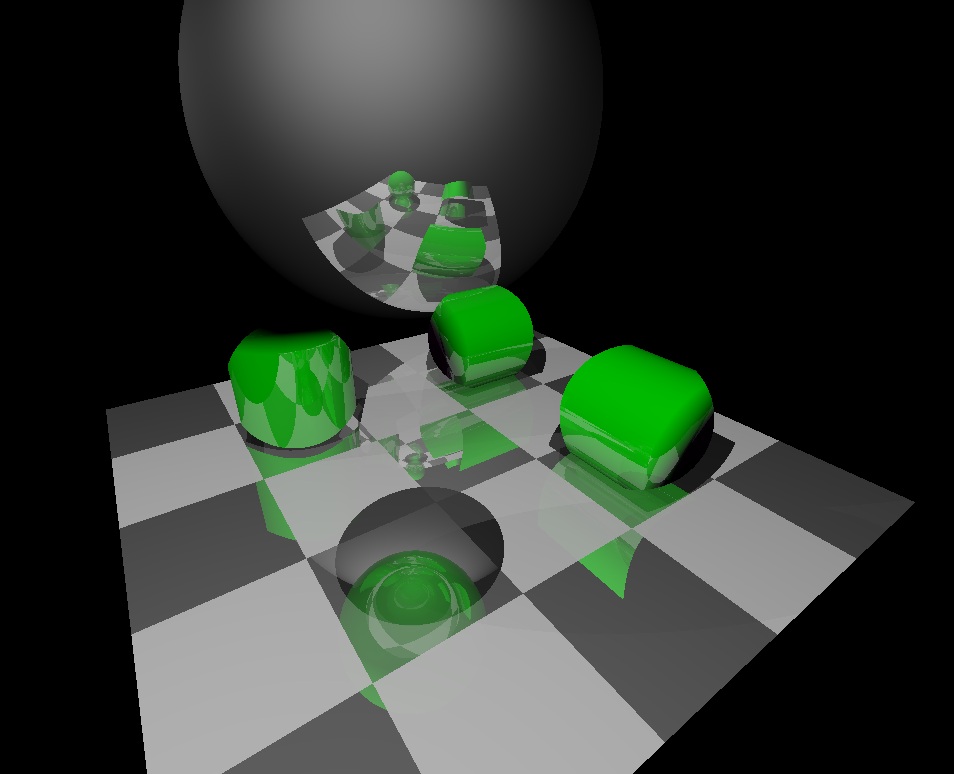

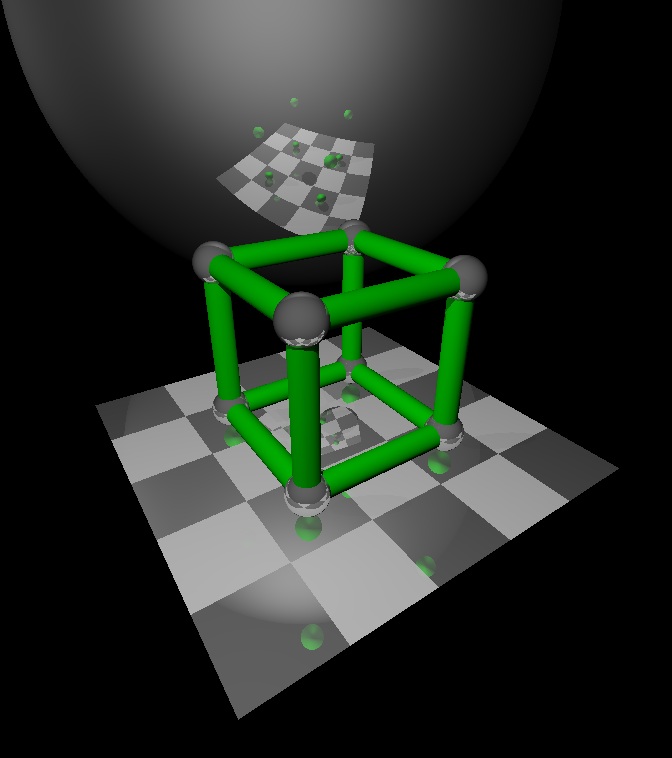

For the next scene, I used a new object: “tubes with a spherical cross-section.” If you place 4 such tubes in different orientations on the plane, this is what happens:

What the strange object in the foreground is, I did not figure out. In my opinion, it is a hole. Or a bug in the program.

After the first rotation, the reflections disappeared:

And after the second rotation the tubes sank, but the reflections returned!

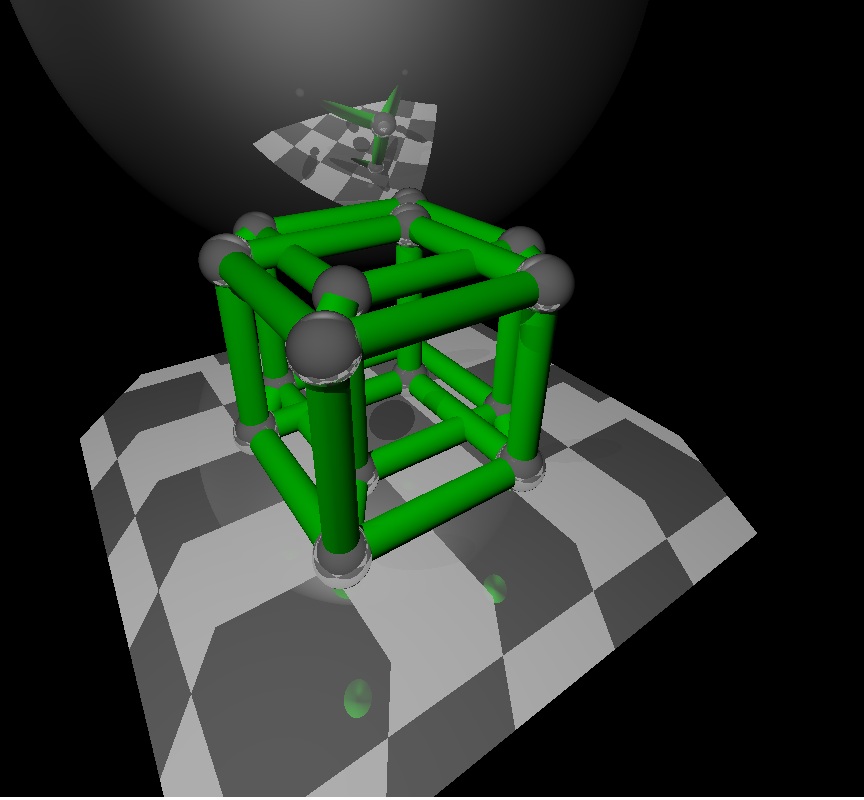

The fourth scene is an edge (wireframe) model of a tesseract, built from balls and spherical tubes. In the first frame only 8 balls are visible: we are looking from a side where they hide one another:

Pay attention to the reflection in the plane. It consists of 8 green spheres: these are the sections of the tubes that fall on the hyperplane w = 0.

After rotating the camera, all 16 vertices became visible, and the reflection in the sphere became unpredictable:

After the next rotation, the cube was half submerged:

And then it emerged from the other side, but now intersecting the mirror sphere:

And this is an attempt to place the cube in the space with the spheres and look at its shadow.

By and large, there is nothing much to see.

So, it is clear that with the chosen projection the result is not very predictable, and we see only part of the surface of the objects; if that surface has interesting details, they remain hidden from us. For more complete pictures it will probably be necessary to average over many rays. I hope that 100 rays per angle will be enough.
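The averaging idea could be sketched as follows. The rays for pixel (u, v) are parameterized by an angle theta in the (z, w) plane, using the combined projection formula (u, v) = (x / (z + a·w), y / (z + a·w)). The angle bounds `theta_min` and `theta_max`, the callback `trace` (which returns an RGB tuple or `None` for a ray that hits nothing) and the choice to skip empty rays are all assumptions made for illustration.

```python
import math
from typing import Callable, List, Optional, Tuple

Vec4 = Tuple[float, float, float, float]
Color = Tuple[float, float, float]


def ray_directions(u: float, v: float, a: float,
                   theta_min: float, theta_max: float,
                   count: int) -> List[Vec4]:
    """Unit directions of `count` rays spread evenly over the flat angle
    of pixel (u, v); each one satisfies x = u*(z + a*w), y = v*(z + a*w)."""
    directions = []
    for i in range(count):
        # Sample at the midpoint of each of the `count` equal sub-angles.
        theta = theta_min + (theta_max - theta_min) * (i + 0.5) / count
        z, w = math.cos(theta), math.sin(theta)
        s = z + a * w
        d = (u * s, v * s, z, w)
        norm = math.sqrt(sum(c * c for c in d))  # >= 1, so never zero
        directions.append(tuple(c / norm for c in d))
    return directions


def average_color(u: float, v: float, a: float,
                  trace: Callable[[Vec4], Optional[Color]],
                  theta_min: float, theta_max: float,
                  count: int = 100) -> Optional[Color]:
    """Mean color over the rays that hit something, or None if none did."""
    total = [0.0, 0.0, 0.0]
    hits = 0
    for direction in ray_directions(u, v, a, theta_min, theta_max, count):
        color = trace(direction)  # hypothetical 4D ray tracer callback
        if color is None:
            continue  # assumed: rays that come from the void are skipped
        for i in range(3):
            total[i] += color[i]
        hits += 1
    if hits == 0:
        return None
    return tuple(t / hits for t in total)
```

With `count=100` this costs a hundred traces per pixel instead of one, so it trades the speed of the nearest-point approach for a picture that shows more of each object's surface.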