Data Center Technologies: General Information on QFX Switches

This article covers the basic principles of data center networking and gives examples of hardware solutions from Juniper Networks. It does not offer a deep technical analysis, but it may be useful to anyone planning a first small data center, or evaluating alternative solutions and new features for an existing one.

Building communication networks in data centers is hardly a new topic. The field is developing rapidly, driven by the ever-growing demand for information storage and by the mass migration of services and technologies to the "cloud".

As a result, the requirements placed on data center networks grow every day. This drives the development of new technologies for network management, traffic processing and equipment operation, the optimization of existing solutions and, of course, a reduction in manual work in order to eliminate errors caused by the human factor.

The direction of traffic in the data center has changed as well. Earlier, large traffic flows ran vertically, from the servers up to the outside world. Now the servers exchange large volumes of data with each other, which has shifted the traffic mix in favor of "horizontal" exchange (often called east-west traffic).

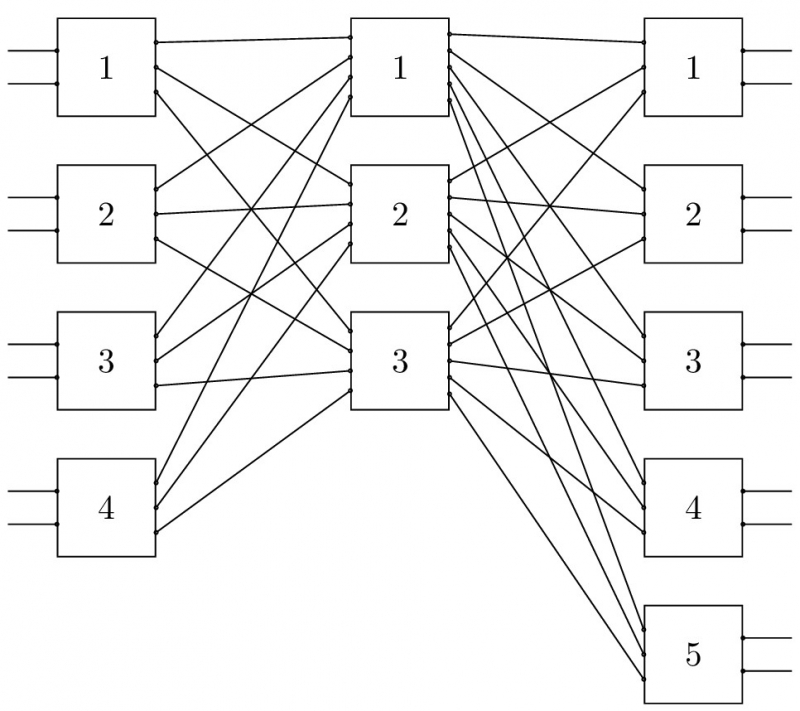

In 1953, Charles Clos solved the problem of optimizing connections in telephone switching networks. The "every with every" connection method was redundant and required a large amount of resources to implement. Clos's idea was to build the network in three levels: Entry, Intermediate and Exit. In such a scheme the number of physical connections drops sharply, while overall network performance remains sufficient for operation.

The same principle was taken as the basis for building data center networks, with a single change: the "input" and "output" levels were merged into one, since servers are both a source of information and its consumer. The result is a network built on the Spine - Leaf principle.
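The saving in physical connections can be illustrated with a little arithmetic. The sketch below is only an illustration: it compares a full mesh of access switches with a two-level scheme in which every access switch has one link to every intermediate switch. The function names and the fabric size (16 access switches, 4 intermediate ones) are hypothetical and not taken from any Juniper documentation.

```python
def full_mesh_links(switches: int) -> int:
    """Links needed to connect every switch directly to every other switch."""
    return switches * (switches - 1) // 2


def spine_leaf_links(leaves: int, spines: int) -> int:
    """Links needed when every leaf has exactly one link to every spine."""
    return leaves * spines


if __name__ == "__main__":
    leaves, spines = 16, 4  # hypothetical fabric size
    print("full mesh of access switches:", full_mesh_links(leaves))
    print("spine-leaf:", spine_leaf_links(leaves, spines))
```

The gap widens quickly as the number of access switches grows, because the full mesh grows quadratically while the two-level scheme grows linearly for a fixed number of intermediate switches.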

Spine-Leaf network connection scheme

The scheme is named by analogy with plants in nature. The leaves (Leaf) are attached to the trunk, or spine (Spine). As a result, we get one common structure.

Leaf. Access-level equipment to which the servers in the data center are connected. These are Top of Rack switches with a high port density. As the figure shows, every Leaf device has a separate link to each Spine-level switch. This provides a high level of redundancy and fault tolerance.

Spine. "Aggregation" equipment, usually high-performance devices with a high density of 25/40/50/100 Gbit/s ports. A distinctive feature of this equipment is its broad feature set and higher performance compared with the Leaf level.

As a result, we get a scheme in which traffic between servers flows through the Spine-level equipment. This may not seem optimal when both servers, the source and the receiver, are connected to the same Leaf switch. That observation is correct: in that case the traffic goes directly through the Leaf switch without rising to a higher level.
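The forwarding behavior just described can be sketched in a few lines. This is a simplified model, not how any particular switch implements forwarding: the function name, the server and switch names, and the idea of listing one candidate path per Spine are all assumptions made for illustration.

```python
from typing import Dict, List


def candidate_paths(src: str, dst: str,
                    server_to_leaf: Dict[str, str],
                    spines: List[str]) -> List[List[str]]:
    """Return the switch-level paths between two servers in a two-level fabric."""
    src_leaf = server_to_leaf[src]
    dst_leaf = server_to_leaf[dst]
    if src_leaf == dst_leaf:
        # Same Leaf: traffic is switched locally and never reaches the Spine.
        return [[src_leaf]]
    # Different Leaf switches: one path of equal length through each Spine.
    return [[src_leaf, spine, dst_leaf] for spine in spines]


if __name__ == "__main__":
    # Hypothetical placement of three servers on two Leaf switches.
    placement = {"srv-a": "leaf1", "srv-b": "leaf1", "srv-c": "leaf2"}
    print(candidate_paths("srv-a", "srv-b", placement, ["spine1", "spine2"]))
    print(candidate_paths("srv-a", "srv-c", placement, ["spine1", "spine2"]))
```

Every pair of servers on different Leaf switches is the same number of hops apart, and the number of available paths equals the number of Spine switches.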

QFX is a series of switches for building data center networks.

The abbreviation QFX does not have any logical meaning, so we will not dwell on these three letters. The equipment is developed and marketed by Juniper Networks, one of the leaders in the design and manufacture of telecommunications equipment.

Nowadays, any self-respecting manufacturer of telecommunications equipment, be it Cisco, Arista, Extreme or others, works on switching and routing in data centers.

Manufacturers of network software occupy an interesting position: their software can be used on equipment shipped without pre-installed software, or directly on server platforms. This direction is developing in close cooperation with manufacturers of telecommunications equipment, producing new products and technologies.

Let us return to Juniper Networks QFX equipment. The entire series was designed to meet the needs of data centers: the software is written with the necessary functionality in mind, and the hardware architecture takes into account the requirements for fault tolerance and redundancy.

The range of data center switches is presented in the following series:

- The QFX51xx series - Leaf level equipment.

- The QFX52xx series - equipment that can be used both at the Leaf level and at the Spine level.

- The QFX10k series - Spine level equipment.

Depending on the series, the equipment is built either on Broadcom chips or on Juniper's own silicon. Each option has its pros and cons.

Consider the QFX51xx series: the older QFX5100 and its updated version, the QFX5110. Both switches are based on Broadcom Trident 2+, but on different versions of it. As a result, the two switches do not behave identically in operation and, of course, differ in supported software features and port capacity. As noted earlier, this equipment is positioned mainly as a Leaf device, although the 51xx series can also serve as Spine devices in small data centers.

QFX51xx supports AC/DC, AC/AC and DC/DC power supply redundancy.

On the software side, the equipment is built using virtualization mechanisms. Unlike the company's other switch series, Junos OS here runs as a virtual machine under a KVM hypervisor on the device. This is what allows the solution to support the TISSU (Topology-Independent In-Service Software Upgrade) update technology: the upgrade process creates additional Junos OS virtual machines and switches between them with minimal service interruption.

The QFX5100 is built on an older version of the Trident 2+ chip and, in my humble opinion, has earned its retirement, giving way to its more capable sibling, the QFX5110.

The QFX5100 is made in several versions:

- QFX5100-48S - switch with 48 SFP+ ports and 6 QSFP+ ports.

- QFX5100-96S - switch with 96 SFP+ ports and 8 QSFP+ ports.

- QFX5100-24Q - switch with 24 QSFP+ ports.

- QFX5100-48T - switch with 48 10GBASE-T ports and 6 QSFP+ ports.

The QFX5110 is a newer model in the 51xx series, built on an updated version of the Trident 2+ chip. In my opinion, it is currently the best solution available from Juniper for the Leaf role.

The QFX5110 is made in several versions:

- QFX5110-48S - switch with 48 SFP+ ports and 4 QSFP28 ports.

- QFX5110-32Q - switch with 32 QSFP+ ports or 20 QSFP28 ports.

Overall, the series offers a high density of 10 Gbit/s ports for connecting end devices and rather "wide" 100 Gbit/s uplink ports. On a fully populated QFX5110-48S this gives 480 Gbit/s of access capacity against 400 Gbit/s of uplinks, that is, an uplink oversubscription of 1.2:1. I think this is a good figure.
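The oversubscription figure can be reproduced from the QFX5110-48S port counts listed above. The snippet is a minimal sketch; the function name and parameters are made up for this illustration, and it assumes every port is in use at its nominal speed.

```python
from fractions import Fraction


def oversubscription(access_ports: int, access_gbps: int,
                     uplink_ports: int, uplink_gbps: int) -> Fraction:
    """Ratio of total access bandwidth to total uplink bandwidth."""
    return Fraction(access_ports * access_gbps, uplink_ports * uplink_gbps)


if __name__ == "__main__":
    # QFX5110-48S: 48 x 10 Gbit/s access ports, 4 x 100 Gbit/s uplinks.
    ratio = oversubscription(48, 10, 4, 100)
    print(f"{float(ratio):.1f}:1")  # 1.2:1
```

A ratio of 1:1 would mean the uplinks can carry all access traffic at once; anything above that means the uplinks can become a bottleneck when every server transmits at full rate.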

A small comparative table:

Consider the QFX52xx series. It consists of two models, the QFX5200 and the QFX5210. The entire series is built on the first-generation Broadcom Tomahawk chip, which imposes its own limits on the functionality and performance of the solution. The series is positioned as a Spine device for small data centers, or as a Leaf device where 25 Gbit/s end equipment needs to be connected. This follows from the high density of QSFP28 ports and from the fact that the equipment is built on a merchant chip from Broadcom with limited functionality.

QFX52xx supports AC/DC, AC/AC and DC/DC power supply redundancy.

Everything said above about software on the QFX51xx hardware also holds for the QFX52xx series: Junos OS likewise runs as a virtual machine, and TISSU is supported.

The QFX5200 comes in two models: the older QFX5200-32C, built on the Broadcom Tomahawk chip, and the newer QFX5200-48Y, built on the Broadcom Tomahawk+ chip:

- QFX5200-32C - switch with 32 QSFP28 ports.

- QFX5200-48Y - switch with 48 SFP28 ports and 6 QSFP28 ports.

The QFX5210 is represented by a single model, which is essentially two QFX5200-32C switches in one and therefore has the same limitations:

- QFX5210-64C - switch with 64 QSFP28 ports.

In the end, we get a high density of 100 Gbit/s ports for relatively little money, which makes this equipment a good Spine solution for small and medium data centers. It is also worth noting that the equipment makes it possible to migrate the Leaf level to 25 Gbit/s.

Let's move on to the QFX10k series. The equipment of this series is built on Juniper's own silicon, the Q5 chip, with a capacity of 500 Gbit/s. The chipset can attach external memory to store RIB / FIB routing information. This makes it possible to build high-performance equipment and to introduce new functionality through software updates as it appears on the market. The QFX10k series switches are designed to meet all current market needs: for use at the Spine level in large data centers, and for building links between two geographically dispersed data centers (DCI, Data Center Interconnect).

QFX10k switches are available in fixed and modular designs.

The QFX10002 is a fixed-configuration 2RU switch. The series includes several models:

- QFX10002-36Q / 72Q - switch with 36 / 72 QSFP+ ports or 12 / 24 QSFP28 ports.

- QFX10002-60C - switch with 60 QSFP28 ports.

QFX100xx is a modular switch. These are large chassis that accept various types of line cards, providing support for various interface types (SFP, SFP+, SFP28, QSFP+, QSFP28). The equipment supports redundant components within a single chassis, such as control modules and line cards.

The series includes chassis that hold 8 or 16 line cards.

Today, QFX10k equipment holds one of the leading positions in the Spine segment for data center networks and competes closely with equipment from other manufacturers in that segment.

All of this equipment supports remote configuration via NETCONF, as well as statistics and analytics collection using Junos Telemetry.
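To give an idea of what NETCONF remote configuration looks like on the wire, the sketch below builds a standard `<get-config>` request for the running datastore using only the Python standard library. It only constructs the XML message; opening the SSH session and sending it are left out, and the function name and the message-id value are arbitrary choices for this example.

```python
import xml.etree.ElementTree as ET

# Base NETCONF namespace defined by the protocol specification.
NETCONF_NS = "urn:ietf:params:xml:ns:netconf:base:1.0"
ET.register_namespace("", NETCONF_NS)


def build_get_config(message_id: str = "101", source: str = "running") -> str:
    """Build a NETCONF <get-config> RPC that asks for one datastore."""
    rpc = ET.Element(f"{{{NETCONF_NS}}}rpc", {"message-id": message_id})
    get_config = ET.SubElement(rpc, f"{{{NETCONF_NS}}}get-config")
    src = ET.SubElement(get_config, f"{{{NETCONF_NS}}}source")
    ET.SubElement(src, f"{{{NETCONF_NS}}}{source}")
    return ET.tostring(rpc, encoding="unicode")


if __name__ == "__main__":
    print(build_get_config())
```

In practice a NETCONF client library would wrap this step, but the structure of the request stays the same: an `rpc` envelope with a message identifier and an operation element inside it.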

So far we have reviewed the physical topology of the network in its best-practice form, along with suitable equipment from Juniper. Comparable switches are available from other manufacturers, so you can use this review to choose what suits you best.

In conclusion, I propose to look at the logical options for building communication networks in data centers.

To date, I can distinguish two options for the logical construction of a network:

- Based on closed algorithms and protocols.

- Based on open algorithms and protocols.

We will stay with Juniper Networks as the example manufacturer.

In the first case, there are two main solutions for building networks in data centers:

- Virtual Chassis Fabric

- Junos Fusion Datacenter

Virtual Chassis Fabric. The solution is a development of Juniper's Virtual Chassis technology. It uses the same principle of combining switches into one common chassis using the internal VCCP protocol. VCCP is a reworked version of the open IS-IS protocol. As a result, the inner workings of the solution are hidden from the end user and left to the developers, which makes it harder to solve problems that arise during operation, but gives you a finished product.

- Single point of control through the main switch.

- Spine - Leaf architecture support.

- The maximum number of devices is 20 (4 Spine and 16 Leaf).

- Scales up to 1536 access ports at 10 Gbit/s with FCoE support in a single fabric.

- Junos Space management system.
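The port figure in the list above is consistent with simple multiplication. The sketch below assumes that all 16 Leaf members are 96-port switches, such as the QFX5100-96S described earlier; that pairing is my assumption for the sake of the arithmetic and is not stated as the source of the number.

```python
def fabric_access_ports(leaf_count: int, ports_per_leaf: int) -> int:
    """Total access ports in a fabric where only Leaf switches connect servers."""
    return leaf_count * ports_per_leaf


if __name__ == "__main__":
    # Assumption: 16 Leaf members with 96 access ports each.
    print(fabric_access_ports(16, 96))  # 1536
```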

Junos Fusion DataCenter. The solution is a new product of the company, designed specifically for use in data centers. Only QFX51xx / 10k series switches are supported. It is based on 802.1BR, NETCONF and JSON-RPC, and forms a single logical structure that can be controlled from a single console. The solution is under active development, and additional functionality is being introduced.

- Based on IEEE 802.1BR.

- Single point of control.

- Spine - Leaf technology support.

- The maximum number of devices is 65.

- Scales up to 6144 ports at 10 Gbit/s with FCoE support in a single fabric.

- 1 or 2 devices can be used at the Spine level.

- The Spine level is represented by QFX10k switches.

- The Leaf level is represented by QFX51xx switches.

- Local switching support at the Leaf level.

- Automatic VLAN configuration on Leaf level ports.

- Junos Space management system.

- MC-LAG support.

When the network is based on open algorithms and protocols, there is only one solution: IP Fabric. It allows equipment from any manufacturer to be used. IP Fabric operates exclusively at the third level of the OSI model. It has no common point of management and no single console, but its possibilities for scaling, as well as for troubleshooting, are quite extensive.

The network is divided into Underlay and Overlay levels. For the Underlay, small data centers use the familiar IGP protocols such as OSPF or IS-IS. If the data center has many racks and a large number of connected end devices, everyone's favorite iBGP / eBGP is used instead. In my opinion, the choice of underlay is a matter of taste and common sense.
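As an illustration of the eBGP variant, the sketch below enumerates the underlay peering sessions for a small fabric. It follows one common design pattern (a shared AS number for the Spine level and a separate private AS number per Leaf, as described for example in RFC 7938); this is an assumed design, not a Juniper recommendation taken from this article. The device names and AS numbers are hypothetical.

```python
from typing import List, Tuple

Session = Tuple[str, int, str, int]  # (spine name, spine ASN, leaf name, leaf ASN)


def ebgp_underlay_sessions(spines: int, leaves: int,
                           spine_asn: int = 65000,
                           first_leaf_asn: int = 65001) -> List[Session]:
    """One eBGP session per Spine-Leaf link; every Leaf gets its own ASN."""
    sessions: List[Session] = []
    for s in range(1, spines + 1):
        for l in range(1, leaves + 1):
            leaf_asn = first_leaf_asn + l - 1
            sessions.append((f"spine{s}", spine_asn, f"leaf{l}", leaf_asn))
    return sessions


if __name__ == "__main__":
    for session in ebgp_underlay_sessions(spines=2, leaves=3):
        print(session)
```

Because every session crosses an AS boundary, standard BGP loop prevention applies without extra mechanisms, which is one reason this layout is popular for larger fabrics.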

For the overlay, something like VXLAN is chosen, which is supported by most manufacturers of network solutions, together with MPLS technologies or, say, EVPN. Juniper equipment can be used to build such solutions alongside equipment from other manufacturers. There is nothing vendor-specific here and, I think, there cannot be.
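To make the overlay idea more concrete, here is a minimal sketch of the 8-byte VXLAN header that is placed in front of the original Ethernet frame inside a UDP datagram. The layout follows the VXLAN specification (RFC 7348); the function names are my own, and the outer Ethernet, IP and UDP headers are deliberately left out.

```python
import struct

VXLAN_UDP_PORT = 4789        # IANA-assigned destination UDP port for VXLAN
VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field carries a valid value


def pack_vxlan_header(vni: int) -> bytes:
    """Encode the 8-byte VXLAN header for a 24-bit network identifier."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags in the top byte, then 24 reserved bits.
    # Word 2: 24-bit VNI, then 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8


if __name__ == "__main__":
    header = pack_vxlan_header(5000)
    print(header.hex(), unpack_vni(header))
```

The 24-bit identifier is what lets an overlay carry far more isolated segments than the 12-bit VLAN tag allows, while the underlay only ever sees ordinary IP/UDP traffic.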

- Wide range of deployment options.

- Spine - Leaf technology support.

- Open selection of technologies and protocols.

- The ability to use solutions from different manufacturers.

- Highest scalability compared to other solutions.

- Operates only at the third level of the OSI model.

In this article, I gave a general overview of the principles of building networks for data centers, and showed how Juniper Networks equipment and solutions can be used to implement them.

All photographs courtesy of Juniper Networks, represented by engineer Bugakov Yevgeny. Special thanks to him.