Exploring JS execution speed and the page rendering algorithm

Measuring the speed of JS or page rendering is a thankless task. A test reflects reality only when it is run under identical conditions and compares things with the same functionality. Ask which is faster, a truck or a sports car, and everyone will immediately say the sports car. But what if the race is across a field, with a trailer of manure in tow? The winner in each case is the one best suited to the specific task.

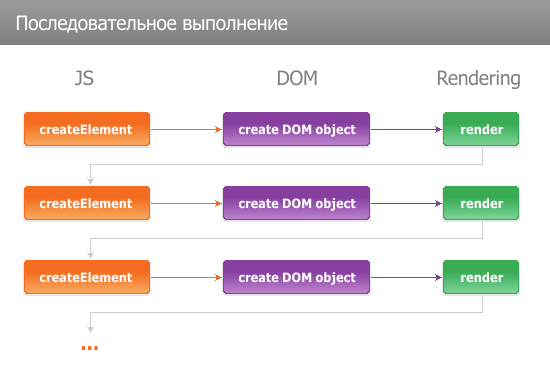

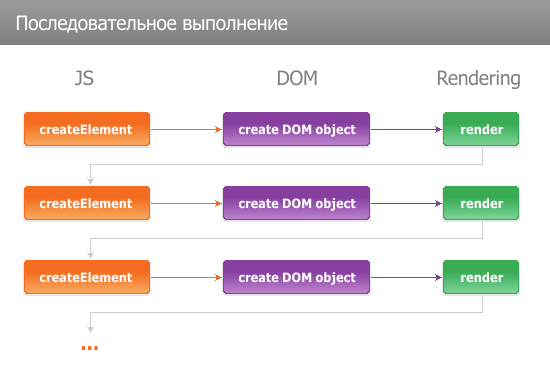

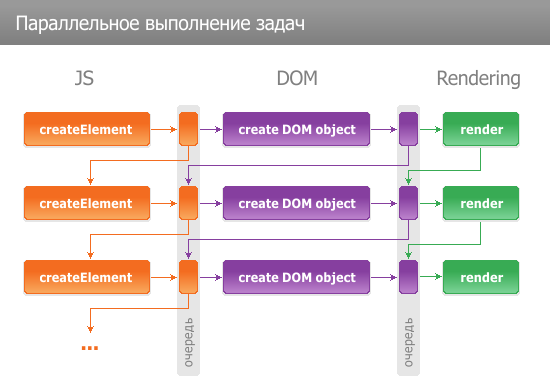

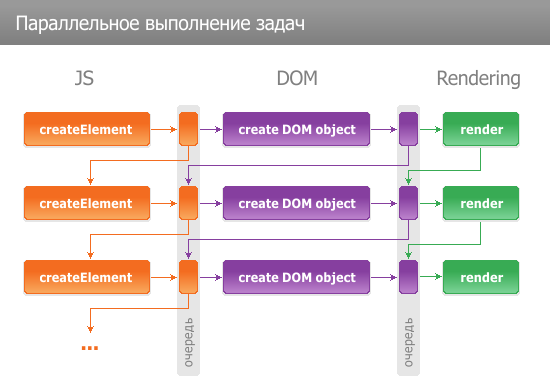

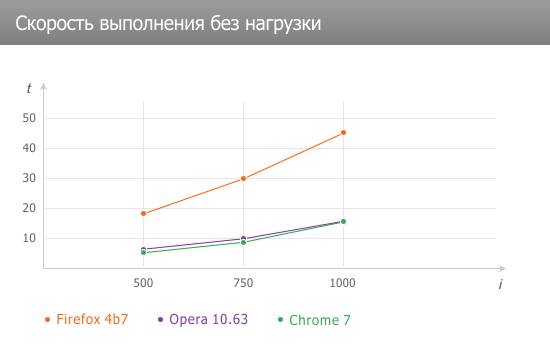

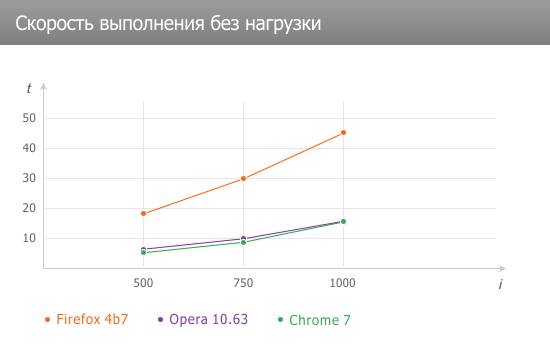

This article contains some hypotheses and some facts. There will be no fanboy speeches or calls to switch browsers.

So, our test subjects:

- Firefox 4 beta 7

- Opera 10.63

- Chrome 7

I did not test IE9: I only have it installed in a virtual machine, which brings a speed penalty and a noticeable spread in the measured values.

JS engines: optimized and optimized, but never quite optimized

The race for JS engine speed has done a lot of good: it made browsers more responsive, gave developers more control over how pages are displayed, and made animation pleasant to look at. The list of benefits could go on for a long time. We have heard about miracles of optimization on well-known benchmarks such as SunSpider, Dromaeo, the V8 Benchmark Suite and others. But what do all these benchmarks actually measure? JS execution speed? Maybe. And maybe not.

Building a DOM: how hard can it be?

Let's take a simple, spherical-horse-in-a-vacuum example: a script creates DOM nodes in a loop and then adds them to the document.

I'll jump ahead a little. Since JavaScript engines can exist separately from the browser, I assume that executing our test script breaks down into three stages:

- Executing the JS function that creates a node (for brevity, “JS”)

- Creating the DOM node in the browser (“DOM”)

- Rendering the node (“Rendering”)

Sequential execution

Obviously, the simplest way to implement the display of elements is to execute the commands sequentially: first the JS function runs, its command produces an instance of a DOM node, and that node is sent for rendering.

This is not a bad way to implement it. Its main drawback is that the JS engine cannot be “cut off” from the other functional parts of the browser. However, there is an easy way around this.

Executing tasks in turn

Let's pull a trick and separate our layers with execution queues. The JS engine simply queues a set of commands, hands the task on to the DOM object factory, and goes to sleep. The factory executes all the commands, building up a set of objects ready for rendering, puts them in the rendering queue, and passes the baton to the rendering system. Voila!

In such an architecture, it is quite simple to “cut off” one part of the browser from the other; communication takes place through the task queue.

Full concurrency

In the previous variant, the stages still run one after another. But what if we make three parallel systems? The DOM object factory watches the job queue and, as soon as a command appears, immediately creates the DOM node and sends it to the rendering queue.

Scary? No, very interesting!
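The three schemes can be compared with a toy model. The sketch below is only an illustration under strong assumptions: every stage step costs exactly one "tick", and the function names (`sequential`, `queuedInTurn`, `fullyConcurrent`) are made up for this example. It does not describe how any real browser is built. For each scheme it records the order of the steps, the tick at which the JS loop finishes, and the tick at which the last node is rendered.

```javascript
// Toy model: each step of a stage (JS, DOM, Rendering) costs one tick.

// Scheme 1: every node goes through all three stages before the next one starts.
function sequential(n) {
  const trace = [];
  let tick = 0;
  for (let i = 0; i < n; i++) {
    for (const stage of ["JS", "DOM", "Rendering"]) {
      trace.push(stage + i);
      tick++;
    }
  }
  return { trace, jsDoneTick: tick, allDoneTick: tick };
}

// Scheme 2: JS fills a queue and stops; the DOM factory drains it, then the renderer.
function queuedInTurn(n) {
  const trace = [];
  const domQueue = [];
  const renderQueue = [];
  let tick = 0;
  for (let i = 0; i < n; i++) {
    trace.push("JS" + i);
    domQueue.push(i);
    tick++;
  }
  const jsDoneTick = tick; // the JS engine "goes to sleep" here
  while (domQueue.length > 0) {
    const i = domQueue.shift();
    trace.push("DOM" + i);
    renderQueue.push(i);
    tick++;
  }
  while (renderQueue.length > 0) {
    trace.push("Rendering" + renderQueue.shift());
    tick++;
  }
  return { trace, jsDoneTick, allDoneTick: tick };
}

// Scheme 3: three systems work in parallel; each handles at most one item per tick.
function fullyConcurrent(n) {
  const trace = [];
  const domQueue = [];
  const renderQueue = [];
  let nextJs = 0;
  let rendered = 0;
  let tick = 0;
  let jsDoneTick = 0;
  while (rendered < n) {
    tick++;
    // Consumers run before producers, so an item advances one stage per tick.
    if (renderQueue.length > 0) {
      trace.push("Rendering" + renderQueue.shift());
      rendered++;
    }
    if (domQueue.length > 0) {
      const i = domQueue.shift();
      trace.push("DOM" + i);
      renderQueue.push(i);
    }
    if (nextJs < n) {
      trace.push("JS" + nextJs);
      domQueue.push(nextJs);
      nextJs++;
      if (nextJs === n) jsDoneTick = tick;
    }
  }
  return { trace, jsDoneTick, allDoneTick: tick };
}

for (const scheme of [sequential, queuedInTurn, fullyConcurrent]) {
  const result = scheme(3);
  console.log(scheme.name, result.jsDoneTick, result.allDoneTick);
}
// sequential 9 9
// queuedInTurn 3 9
// fullyConcurrent 3 5
```

In this model a timer wrapped around the JS loop would see 9 ticks in the first scheme but only 3 in the other two, even though the page is not fully rendered at that point.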

Testing

Now let's measure the JS execution speed for each of these architectures. Even before testing, it is clear that in the first case we would measure the speed of all three stages, while in the second and third we would measure only the speed of filling the queues. That would not reflect reality!

To put the three approaches on an equal footing, I made a simple benchmark (RapidShare / Yandex / pasteBin). It creates clones of several template elements, chosen at random. If a clone is the last one in its row, it is given a width that makes it fill all the remaining free space. The elements are floated, so until they are rendered it is not known what position and width they have. In effect, I reduced everything to sequential execution of commands.
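The benchmark itself is only available through the links above, so the following is a hypothetical reconstruction of the idea rather than the original code. The function name `runBenchmark`, the template sizes, and the way the end of a row is detected (the next clone landing at a different `offsetTop`) are all assumptions. It also assumes the container is the offset parent of the clones and that the clones have no margins, borders, or padding.

```javascript
// Hypothetical sketch of the benchmark loop; not the author's original script.
// doc: a Document, container: the element that receives the floated clones.
function runBenchmark(doc, container, iterations, withLoad) {
  // A few template elements ("blanks") of assumed sizes.
  const blanks = [40, 70, 110].map((width) => {
    const el = doc.createElement("div");
    el.style.cssFloat = "left";
    el.style.height = "20px";
    el.style.width = width + "px";
    return el;
  });

  // Stretch an element so that it reaches the right edge of the container.
  const fillRow = (el) => {
    el.style.width = container.clientWidth - el.offsetLeft + "px";
  };

  const start = Date.now();
  let prev = null;
  for (let i = 0; i < iterations; i++) {
    const blank = blanks[Math.floor(Math.random() * blanks.length)];
    const clone = blank.cloneNode(true);
    container.appendChild(clone);
    // The "brake": reading offsetTop needs the real position of the clone.
    if (withLoad && prev !== null && clone.offsetTop !== prev.offsetTop) {
      fillRow(prev); // the new clone wrapped, so prev was last in its row
    }
    prev = clone;
  }
  if (withLoad && prev !== null) fillRow(prev); // the very last clone ends its row too
  return Date.now() - start; // elapsed milliseconds
}

// In a browser, with a hypothetical container element:
// runBenchmark(document, document.getElementById("stage"), 1000, true);
```

With `withLoad` set to `false` the loop only creates and appends nodes; with `true`, every iteration asks for layout information that cannot be known before the element has been positioned.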

Testing really surprised me.

First, let's look at the test results without load.

| Browser / iterations | 500 | 750 | 1000 |
|---|---|---|---|
| Firefox | 19 | 30 | 45 |
| Opera | 6 | 10 | 14 |
| Chrome | 5 | 9 | 14 |

Firefox is about three times slower than Opera and Chrome. Chrome is slightly faster than Opera, but the gap shrinks as the number of iterations grows.

Now let's turn on the "brake".

| Browser / iterations | 500 | 750 | 1000 |
|---|---|---|---|
| Firefox | 950 | 2350 | 4800 |
| Opera | 610 | 1250 | 2100 |
| Chrome | 5700 | 20500 | ~55000 |

I could not believe my eyes. At a thousand iterations, Chrome sinks into deep thought and then asks me to stop tormenting it and to end its suffering by stopping the script. Now that is a spoke in the wheel!

Opera wins the competition outright, with Firefox second.

A bit of analysis

If we take the ratio of the results with load to the results without it, an interesting picture emerges. Firefox becomes 50 to 100 times slower. The slowdown grows almost linearly with the number of iterations. The picture and the browser's behavior fit the first scheme, sequential execution of the stages, very well. Visually, Firefox does not render the page until the loop completes.

For Opera, the slowdown under load is 100 to 150 times. Opera renders the page while the script is running, which looks very much like the scheme with three stages running in parallel.

Chrome's slowdown ranges from 1,000 to 4,000 times. Chrome does not render the page until the loop completes, which looks very much like the stages being executed in sequence.
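The slowdown factors quoted here can be recomputed directly from the two tables above. This is a small sketch that divides each loaded result by the corresponding unloaded one; the variable and function names are made up for the example, and the Chrome value at 1000 iterations is the approximate one from the table.

```javascript
// Timings copied from the two tables above, for 500, 750 and 1000 iterations.
const withoutLoad = {
  Firefox: [19, 30, 45],
  Opera: [6, 10, 14],
  Chrome: [5, 9, 14],
};
const withLoad = {
  Firefox: [950, 2350, 4800],
  Opera: [610, 1250, 2100],
  Chrome: [5700, 20500, 55000], // the last value is approximate (~55000)
};

// Ratio "with load / without load" per iteration count, rounded to an integer.
function slowdown(loaded, unloaded) {
  return loaded.map((time, i) => Math.round(time / unloaded[i]));
}

for (const browser of Object.keys(withoutLoad)) {
  console.log(browser, slowdown(withLoad[browser], withoutLoad[browser]).join(" / "));
}
// Firefox 50 / 78 / 107
// Opera 102 / 125 / 150
// Chrome 1140 / 2278 / 3929
```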

It makes for an amusing picture.

Conclusion

This article is partly theoretical, and the architectures presented in it have not been confirmed in practice.

When writing scripts that analyze the sizes of elements, be careful and vigilant. Differences in rendering architecture can lead to a dramatic loss of speed.
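One common way to be careful is to keep size reads and style writes apart, so that the browser is not asked for fresh layout information right after every change. The sketch below contrasts the two patterns. The function names and the `extra` parameter are hypothetical, and whether the batched variant would have helped in the browsers tested here is an assumption: it was not measured in this article.

```javascript
// Interleaved: each width is read right after the previous element was changed,
// so layout information may have to be recomputed on every pass of the loop.
function widenInterleaved(elements, extra) {
  for (const el of elements) {
    const width = el.offsetWidth;           // read
    el.style.width = width + extra + "px";  // write
  }
}

// Batched: take all measurements first, then apply all changes.
function widenBatched(elements, extra) {
  const widths = elements.map((el) => el.offsetWidth);  // reads only
  elements.forEach((el, i) => {
    el.style.width = widths[i] + extra + "px";          // writes only
  });
}
```

Both functions leave the elements with the same widths as long as changing one element does not affect the measured width of another; only the order of reads and writes differs.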

UPD. Added a link to the benchmark on Yandex.

Many people ask which program I use to make the charts: Corel Photopaint X5, by hand.

UPD2. Added a link to pasteBin. Thanks, friends!