Call cost

It is believed that the overhead of invoking methods and organizing the execution process should not exceed 15% of the application’s execution time, otherwise you should seriously consider refactoring the application and optimizing its logic. Armed with such thoughts, I came across a method

An interesting point here is the comparison of elements - to provide flexibility, it is carried out by a separate class that implements the interface

So we have a standard implementation of Hoar’s quick sort, which uses a method

Since we will exclusively investigate the call of the comparison operation, all changes to this function will be made only on lines 1, 12, 16, 53, and 61, which are marked with identifying comments in the listing.

To begin with, we estimate the contribution of the overhead to calling the operation of comparing two numbers in the duration of the sorting process. To do this, in the above function, we change the calls "

What could slow down the work so much? Obviously, the point is the excessive complexity of the comparison process (and this despite the fact that the operation itself is reduced to subtracting two numbers!):

In this case, the first two points are the difference between the CIL instruction

Well, the fourth point is called by the standard implementation of comparer (all checks on are

We will remove the layers one at a time, start with a call

Measurements show winning 15% of the time. And this despite the fact that we are considering an array of integers and the call

Thanks to the transfer of the actual type to the sorting function, when the generic parameters are expanded, the real type of comparer will be taken into account and a more efficient instruction will be used to call the comparison operation

On a note:In the STL library from C ++, all predicates are transferred in this way - the type goes through the template parameter. Additionally, thanks to this trick, the C ++ compiler gets full information about the types and, as a rule, performs inlining of the predicate code, thereby completely eliminating the cost of invoking the method. My experiences with disassembling the results of .Net JIT have shown that, contrary to all the tricks, no deployment takes place here. Apparently this is either related to generics, or it works exclusively for static methods.

Note # 2: It was not possible to save on transfer of comparer using an empty structure - size (

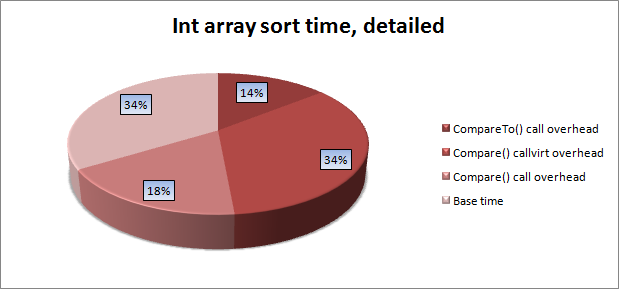

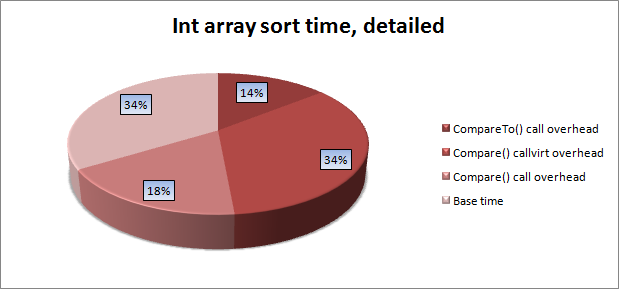

If we reduce the data into a single diagram, we get the following distribution of the sorting execution time by the standard method:

The obvious conclusion is that when developing heavy computational libraries, it makes sense to make frequently called predicates structures and transfer their type through generic parameters.

But our applications do not live by numbers :) The question of influence has arisen in the context of working with arrays of objects, check? Easy! We take such a simple class as a prototype:

And we conduct similar simple experiments, but with an array of objects, as a result, we get a slightly different situation:

And if the ratio between the parts of the comparison process remained unchanged, then their contribution to the total time decreased markedly. A very lucky question arises: what is the matter, what is slowing down? A quick glance at the sorting function code is enough to understand: the work of the only different piece of code slowed down - the exchange of values between array elements. But we are working with a reference type, only pointers are rearranged, and they are "at heart" of the number! Since it’s interesting, it means we’ll see that it slows down there, reading the CIL does not give much benefit - the exchange code is absolutely identical except for using instructions

Since CIL did not help, it means the exit from JIT, so we will look at the assembler :) Here we are in a lot of trouble - regardless of the configuration (Debug / Release), the JIT compiler looked at its environment and, depending on the presence of a debugger attached to the process generates a different code. Therefore, to access the real code through the Disassembly window, we will launch the application without debugging and set breakpoints in the form of calls

In listings, it immediately catches the eye of a call to some function to write to an array of objects, and this call was absent while we were watching CIL. Obviously, this function does more than just copy the address, and in addition to the costs of organizing this call, we get something else, which means that the details lie in the implementation of the CLR. Indeed, after reading the sources of the public version of CLR 2.0 (SSCLI 2.0 package), we find the following code:

As you can see from this, when writing an element to an array of objects, in addition to the standard check of the boundaries of the array, type compatibility is also checked and, if necessary, appropriate conversions are performed. It is worth noting that being a string typed, C # itself also contains type control, but since the information already arrives in the CIL instruction in the form

What conclusions can be drawn from all this? I will not offer hardcore optimizations, but it makes sense to avoid virtual calls inside large loops, especially in miniature predicate functions. In practice, this is for example:

Of course, these are not the only possible conclusions, but you have to leave the reader room for thoughts :)

QuickSortfrom the standard class ArraySortHelper<T>used to sort arrays in .Net. An interesting point here is the comparison of elements - to provide flexibility, it is carried out by a separate class that implements the interface

IComparer<T>. Armed with a variety of thoughts and a studio, it was decided to assess how much such flexibility costs and what could be done with it - under the cut is an analysis of the costs of comparing elements during the operation of QuickSort.So we have a standard implementation of Hoar’s quick sort, which uses a method

Compare(T x, T y)from an object that implements the interface to compare array elements IComparer<T>. For our experiments, using a reflector, we get the code for the sorting method in the following form:Copy Source | Copy HTML- static public void Sort<T, TValue>(T[] keys, TValue[] values, int left, int right, IComparer<T> comparer)

- {

- do

- {

- int index = left;

- int num2 = right;

- T y = keys[index + ((num2 - index) >> 1)];

- do

- {

- try

- {

- /* Cmp 1 */ while (comparer.Compare(keys[index], y) < 0)

- {

- index++;

- }

- /* Cmp 2 */ while (comparer.Compare(y, keys[num2]) < 0)

- {

- num2--;

- }

- }

- catch (IndexOutOfRangeException)

- {

- throw new ArgumentException(null, "keys");

- }

- catch (Exception)

- {

- throw new InvalidOperationException();

- }

- if (index > num2)

- {

- break;

- }

- if (index < num2)

- {

- T local2 = keys[index];

- keys[index] = keys[num2];

- keys[num2] = local2;

- if (values != null)

- {

- TValue local3 = values[index];

- values[index] = values[num2];

- values[num2] = local3;

- }

- }

- index++;

- num2--;

- }

- while (index <= num2);

- if ((num2 - left) <= (right - index))

- {

- if (left < num2)

- {

- /* Call 1 */ Sort<T, TValue>(keys, values, left, num2, comparer);

- }

- left = index;

- }

- else

- {

- if (index < right)

- {

- /* Call 2 */ Sort<T, TValue>(keys, values, index, right, comparer);

- }

- right = num2;

- }

- }

- while (left < right);

- }

Since we will exclusively investigate the call of the comparison operation, all changes to this function will be made only on lines 1, 12, 16, 53, and 61, which are marked with identifying comments in the listing.

Part one. Experiments with arrays of numbers (int)

To begin with, we estimate the contribution of the overhead to calling the operation of comparing two numbers in the duration of the sorting process. To do this, in the above function, we change the calls "

comparer.Compare(a, b)" to expressions of the form " a - b". We measure the working time of both versions and see ... We see a terrible picture - almost two-thirds of the time is spent on organizing the call of the predicate of the comparison number:

What could slow down the work so much? Obviously, the point is the excessive complexity of the comparison process (and this despite the fact that the operation itself is reduced to subtracting two numbers!):

- Check object

compareronnull - Search for a method

Comparein the virtual table of an object - Method call

Comparefor objectcomparer - Method call

CompareTo - Actually comparison of numbers

In this case, the first two points are the difference between the CIL instruction

callvirtand call. Let me remind you that callvirt, due to the presence of a check on null, it is generated to call all non-static class methods, regardless of their virtuality. Well, the fourth point is called by the standard implementation of comparer (all checks on are

nullnaturally removed by the JIT compiler when substituting intinstead T):Copy Source | Copy HTML- class GenericComparer<T> : IComparer<T> where T : IComparable<T>

- {

- public int Compare(T x, T y)

- {

- if (x != null)

- {

- if (y != null)

- {

- return x.CompareTo(y);

- }

- return 1;

- }

- if (y != null)

- {

- return -1;

- }

- return 0;

- }

- }

We will remove the layers one at a time, start with a call

CompareTo, since this is done elementarily - by writing our comparer of the following form:Copy Source | Copy HTML- class IntComparer : IComparer<int>

- {

- public int Compare(int x, int y)

- {

- return x - y;

- }

- }

Measurements show winning 15% of the time. And this despite the fact that we are considering an array of integers and the call

CompareTofor them, as for all methods for all structures, is non-virtual. The next layer, the difference between callvirtand callwhen called, is more difficult to verify, especially within the same requirements for the flexibility of the sort function. However, nothing is impossible - when working through an instance of a structure, all methods of all structures are called using an operation call, including the implementation of methods inherited from interfaces. Thanks to this, we can do the following optimization:Copy Source | Copy HTML- static public void SortNoVirt<T, TValue, TCmp>(T[] keys, TValue[] values, int left, int right, TCmp comparer) where TCmp : IComparer<T>

- {

- // ...

- }

-

- struct IntComparerNoVirt : IComparer<int>

- {

- public int Compare(int x, int y)

- {

- return x - y;

- }

- }

Thanks to the transfer of the actual type to the sorting function, when the generic parameters are expanded, the real type of comparer will be taken into account and a more efficient instruction will be used to call the comparison operation

call. Measurements of time show an increase in productivity of about 35% of the execution time of a “standard” call, while the increase from the subsequent replacement of the call Compareby subtraction is 18%. On a note:In the STL library from C ++, all predicates are transferred in this way - the type goes through the template parameter. Additionally, thanks to this trick, the C ++ compiler gets full information about the types and, as a rule, performs inlining of the predicate code, thereby completely eliminating the cost of invoking the method. My experiences with disassembling the results of .Net JIT have shown that, contrary to all the tricks, no deployment takes place here. Apparently this is either related to generics, or it works exclusively for static methods.

Note # 2: It was not possible to save on transfer of comparer using an empty structure - size (

sizeof) structures without fields in C # turned out to be equal to one (and not to zero, as I wanted). In VC ++, the size of an empty structure is also equal to one, while the standard says that the size of an empty class (structure) must be greater than zero. This is done because of the popular design for calculating the length of the " sizeof(array) / sizeof(array[0])" array . In C # /. Net, this is apparently done for binary compatibility when interacting with code written in C ++. If we reduce the data into a single diagram, we get the following distribution of the sorting execution time by the standard method:

The obvious conclusion is that when developing heavy computational libraries, it makes sense to make frequently called predicates structures and transfer their type through generic parameters.

Part two. What will happen to the objects?

But our applications do not live by numbers :) The question of influence has arisen in the context of working with arrays of objects, check? Easy! We take such a simple class as a prototype:

Copy Source | Copy HTML- class IntObj : IComparable<IntObj>

- {

- public int value;

-

- public int CompareTo(IntObj other)

- {

- return value - other.value;

- }

- }

And we conduct similar simple experiments, but with an array of objects, as a result, we get a slightly different situation:

And if the ratio between the parts of the comparison process remained unchanged, then their contribution to the total time decreased markedly. A very lucky question arises: what is the matter, what is slowing down? A quick glance at the sorting function code is enough to understand: the work of the only different piece of code slowed down - the exchange of values between array elements. But we are working with a reference type, only pointers are rearranged, and they are "at heart" of the number! Since it’s interesting, it means we’ll see that it slows down there, reading the CIL does not give much benefit - the exchange code is absolutely identical except for using instructions

*.i4for numbers and *.reffor objects.Since CIL did not help, it means the exit from JIT, so we will look at the assembler :) Here we are in a lot of trouble - regardless of the configuration (Debug / Release), the JIT compiler looked at its environment and, depending on the presence of a debugger attached to the process generates a different code. Therefore, to access the real code through the Disassembly window, we will launch the application without debugging and set breakpoints in the form of calls

Debugger.Break();. The following are listings with the code that is generated to exchange values in two cells of the array:In listings, it immediately catches the eye of a call to some function to write to an array of objects, and this call was absent while we were watching CIL. Obviously, this function does more than just copy the address, and in addition to the costs of organizing this call, we get something else, which means that the details lie in the implementation of the CLR. Indeed, after reading the sources of the public version of CLR 2.0 (SSCLI 2.0 package), we find the following code:

Copy Source | Copy HTML- /****************************************************************************/

- /* assigns 'val to 'array[idx], after doing all the proper checks */

-

- HCIMPL3(void, JIT_Stelem_Ref_Portable, PtrArray* array, unsigned idx, Object *val)

- {

- if (!array)

- {

- FCThrowVoid(kNullReferenceException);

- }

- if (idx >= array->GetNumComponents())

- {

- FCThrowVoid(kIndexOutOfRangeException);

- }

-

- if (val)

- {

- MethodTable *valMT = val->GetMethodTable();

- TypeHandle arrayElemTH = array->GetArrayElementTypeHandle();

-

- if (arrayElemTH != TypeHandle(valMT) && arrayElemTH != TypeHandle(g_pObjectClass))

- {

- TypeHandle::CastResult result = ObjIsInstanceOfNoGC(val, arrayElemTH);

- if (result != TypeHandle::CanCast)

- {

- HELPER_METHOD_FRAME_BEGIN_2(val, array);

-

- // This is equivalent to ArrayStoreCheck(&val, &array);

- #ifdef STRESS_HEAP

- // Force a GC on every jit if the stress level is high enough

- if (g_pConfig->GetGCStressLevel() != 0

- #ifdef _DEBUG

- && !g_pConfig->FastGCStressLevel()

- #endif

- )

- GCHeap::GetGCHeap()->StressHeap();

- #endif

-

- #if CHECK_APP_DOMAIN_LEAKS

- // If the instance is agile or check agile

- if (!arrayElemTH.IsAppDomainAgile() && !arrayElemTH.IsCheckAppDomainAgile() && g_pConfig->AppDomainLeaks())

- {

- val->AssignAppDomain(array->GetAppDomain());

- }

- #endif

- if (!ObjIsInstanceOf(val, arrayElemTH))

- {

- COMPlusThrow(kArrayTypeMismatchException);

- }

-

- HELPER_METHOD_FRAME_END();

- }

- }

-

- // The performance gain of the optimized JIT_Stelem_Ref in

- // jitinterfacex86.cpp is mainly due to calling JIT_WriteBarrierReg_Buf.

- // By calling write barrier directly here,

- // we can avoid translating in-line assembly from MSVC to gcc

- // while keeping most of the performance gain.

- HCCALL2(JIT_WriteBarrier, (Object **)&array->m_Array[idx], val);

-

- }

- else

- {

- // no need to go through write-barrier for NULL

- ClearObjectReference(&array->m_Array[idx]);

- }

- }

- HCIMPLEND

As you can see from this, when writing an element to an array of objects, in addition to the standard check of the boundaries of the array, type compatibility is also checked and, if necessary, appropriate conversions are performed. It is worth noting that being a string typed, C # itself also contains type control, but since the information already arrives in the CIL instruction in the form

System.Object, the CLR checks it again for reliability.Part three. Similarity of conclusions

What conclusions can be drawn from all this? I will not offer hardcore optimizations, but it makes sense to avoid virtual calls inside large loops, especially in miniature predicate functions. In practice, this is for example:

- implementing predicates in the form of structures, rather than classes, with subsequent prediction of type information through generic parameters

- replacement of small utilitarian class methods with static extension methods, which, due to their static nature, will be called to bypass the virtual table

Of course, these are not the only possible conclusions, but you have to leave the reader room for thoughts :)