OpenGL ES 2.0 Basics on the iPhone 3GS

One of the nicest innovations in the iPhone 3GS is its faster, more powerful graphics hardware with support for OpenGL ES 2.0. Unfortunately, Apple provides very little information on how to actually use these new capabilities. For almost all of its APIs Apple has excellent documentation with code samples, but its OpenGL examples have always left, to put it mildly, much to be desired. Moreover, beginners with OpenGL ES 2.0 are offered neither basic examples nor an Xcode template. To take advantage of the advanced graphics capabilities, you will have to learn them on your own. Do not make the mistake of thinking that OpenGL ES 2.0 is a slightly modified version of OpenGL ES 1.1 with a couple of new features. The differences are fundamental! The fixed-function pipeline is gone, and now, just to draw a simple triangle on the screen, you need a deeper understanding of the basics of computer graphics, including shaders.

Given the lack of documentation, I decided to write the simplest possible iPhone application using OpenGL ES 2.0. Readers may find it a useful starting point for their own applications. I considered a rotating teapot and other models, but in the end I decided not to get into the details of model loading. Instead, I simply updated the OpenGL ES 1.1 application that comes with the Xcode template. The full final code can be downloaded here.

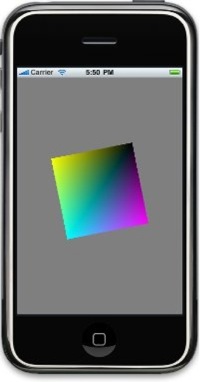

Nothing exciting: just a spinning square. However, it is enough to learn the basics of setting up OpenGL ES 2.0, creating shaders, and hooking them into a program so they can be used. And at the end we will look at an effect that is impossible in OpenGL ES 1.1. Ready?

Initialization

Initializing OpenGL ES 2.0 is practically no different from OpenGL ES 1.1. The only difference is that you have to request the new API version when you create the context:

```objc
context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
```

Everything else, including the EAGLView and the creation of the so-called back buffers, stays the same as before, so I will not dwell on these points.

Remember that once you have initialized an OpenGL ES 2.0 context, you cannot call functions that belong only to OpenGL ES 1.1. Attempting to use them will crash the program, because the fixed-function state they rely on does not exist in an ES 2.0 context. So if you want to take advantage of the 3GS graphics while staying compatible with earlier models, you need to check what the device supports. Then activate either OpenGL ES 1.1 or 2.0, with separate rendering code for each version. A simple way to check is to try to create the ES 2.0 context first: initWithAPI: returns nil on devices that do not support the requested API.
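The fallback described above can be sketched in plain C as follows. This is a minimal illustration, not the article's code. It assumes a hypothetical callback, TryCreateContextFn, that wraps context creation; on iOS it would call initWithAPI: and report whether the result was non-nil. The fake_3gs and fake_3g functions are illustrative stand-ins for real devices.

```c
/* Renderer chosen at startup. */
typedef enum { RENDERER_NONE, RENDERER_ES1, RENDERER_ES2 } RendererKind;

/* Hypothetical hook: tries to create a context for API version 2 or 1 and
   returns nonzero on success. On iOS it would wrap
   [[EAGLContext alloc] initWithAPI:...], which returns nil when the
   requested API is not supported. */
typedef int (*TryCreateContextFn)(int apiVersion);

/* Prefer OpenGL ES 2.0 and fall back to ES 1.1 on older devices. */
RendererKind choose_renderer(TryCreateContextFn tryCreate)
{
    if (tryCreate(2))
        return RENDERER_ES2;   /* shader-based rendering code */
    if (tryCreate(1))
        return RENDERER_ES1;   /* fixed-function rendering code */
    return RENDERER_NONE;      /* no usable context at all */
}

/* Illustrative stand-ins for devices, used only to exercise the logic. */
int fake_3gs(int apiVersion) { return apiVersion == 2 || apiVersion == 1; }
int fake_3g(int apiVersion)  { return apiVersion == 1; }
```

Trying ES 2.0 first means newer hardware always gets the shader path. Older devices silently get the ES 1.1 code, which must be kept as a separate renderer.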

Shader Creation

OpenGL ES 1.1 uses a fixed-function pipeline to render polygons. To draw an object on the screen in OpenGL ES 2.0, you have to write shaders: small programs that run on the graphics hardware. Their job is to transform the input data (vertices and state) into an image on the screen. Shaders are written in the OpenGL ES Shading Language (GLSL), which will feel familiar to anyone used to C. Of course, to use all of its features you will need to study some details. Below I cover only the basic concepts and how they relate to each other.

There are two types of shaders: vertex shaders run once per vertex, and fragment shaders run once per pixel. Technically, fragment shaders run once per fragment, which may not correspond to a pixel, for example when antialiasing. For now, we can safely assume that fragment shaders run once for each rendered pixel.

A vertex shader computes the position of a vertex in clip space. It can optionally compute other values for later use by the fragment shader.

- A vertex shader accepts two kinds of input: uniforms and attributes. Uniforms are values set once from the main program. They apply to every vertex the vertex shader processes in a single draw call. For example, the world-view transformation matrix is a uniform.

- Attributes can vary from vertex to vertex within a single draw call. Position, normal, and color are all typical attributes.

As output, the vertex shader produces two kinds of data:

- The position, written to the built-in variable gl_Position: the position of the vertex in clip space (later mapped into viewport space).

- Varyings. Variables declared with the varying qualifier are interpolated between the vertices. The interpolated values are passed as input to the fragment shader.
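Interpolating a varying across a triangle can be modelled with barycentric weights, as in the simplified C sketch below. It is not what the GPU literally runs: the names Color, barycentric and interpolate_varying are hypothetical, and real hardware also applies perspective correction, which is omitted here. The sketch only shows how one value per vertex becomes a blended value per fragment.

```c
/* Stands in for a GLSL vec4 varying such as v_color. */
typedef struct { float r, g, b, a; } Color;

/* Barycentric weights of point (px, py) with respect to the triangle
   (x0, y0), (x1, y1), (x2, y2). The weights always sum to 1. */
void barycentric(float px, float py,
                 float x0, float y0, float x1, float y1, float x2, float y2,
                 float *w0, float *w1, float *w2)
{
    float area = (x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0);
    *w1 = ((px - x0) * (y2 - y0) - (x2 - x0) * (py - y0)) / area;
    *w2 = ((x1 - x0) * (py - y0) - (px - x0) * (y1 - y0)) / area;
    *w0 = 1.0f - *w1 - *w2;
}

/* Blend the three per-vertex values with the weights (linear, no
   perspective correction). */
Color interpolate_varying(Color c0, Color c1, Color c2,
                          float w0, float w1, float w2)
{
    Color out;
    out.r = w0 * c0.r + w1 * c1.r + w2 * c2.r;
    out.g = w0 * c0.g + w1 * c1.g + w2 * c2.g;
    out.b = w0 * c0.b + w1 * c1.b + w2 * c2.b;
    out.a = w0 * c0.a + w1 * c1.a + w2 * c2.a;
    return out;
}
```

At a vertex, the weights are (1, 0, 0), so the fragment gets exactly that vertex's color. Halfway along an edge, the fragment gets an even mix of the edge's two endpoint colors.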

Fragment shaders compute the color of a fragment (pixel) and write it to gl_FragColor. Their inputs are the varyings produced by the vertex shader. The fragment's window-space position is also available through the built-in gl_FragCoord. The color computation can be as simple as outputting a constant color, or it can be a texture lookup by uv coordinates, or a complex operation that takes the lighting conditions into account. In a typical program, the vertex shader simply transforms the vertex position from model space into clip space. It also passes the vertex color on, to be interpolated for the fragment shader. That is exactly what our vertex shader does:

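```glsl
uniform mat4 u_mvpMatrix;   // model-view-projection matrix, same for all vertices
attribute vec4 a_position;  // per-vertex position
attribute vec4 a_color;     // per-vertex color
varying vec4 v_color;       // interpolated and passed to the fragment shader

void main()
{
    gl_Position = u_mvpMatrix * a_position;  // model space -> clip space
    v_color = a_color;
}
```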
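The single line of math in this vertex shader can be made concrete with a CPU equivalent in C. This is an illustrative sketch: Mat4, Vec4 and mat4_mul_vec4 are hypothetical names. The column-major layout (element at row r, column c stored in m[c*4 + r]) is what glUniformMatrix4fv expects when its transpose argument is GL_FALSE. The square supplies only x and y per vertex, so GL fills the missing components of the vec4 attribute with z = 0 and w = 1.

```c
/* Column-major 4x4 matrix: element (row r, column c) is m[c * 4 + r]. */
typedef struct { float m[16]; } Mat4;
typedef struct { float x, y, z, w; } Vec4;

/* CPU equivalent of: gl_Position = u_mvpMatrix * a_position; */
Vec4 mat4_mul_vec4(const Mat4 *mat, Vec4 v)
{
    const float *m = mat->m;
    Vec4 out;
    out.x = m[0] * v.x + m[4] * v.y + m[8]  * v.z + m[12] * v.w;
    out.y = m[1] * v.x + m[5] * v.y + m[9]  * v.z + m[13] * v.w;
    out.z = m[2] * v.x + m[6] * v.y + m[10] * v.z + m[14] * v.w;
    out.w = m[3] * v.x + m[7] * v.y + m[11] * v.z + m[15] * v.w;
    return out;
}
```

For example, a pure translation matrix stores its offset in m[12] and m[13]. A vertex at (0.5, 0.5) with w = 1 is therefore shifted by exactly that offset, which is also why w = 1 matters for positions.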

Our fragment shader is elementary: it takes the color from the vertex shader and applies it to the fragment. All of this may sound vague, but that is the beauty of shaders: nothing is preset. The rendering depends only on what you want (and on what the hardware can do).

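```glsl
precision mediump float;    // GLSL ES fragment shaders have no default float precision

varying vec4 v_color;       // interpolated color from the vertex shader

void main()
{
    gl_FragColor = v_color;
}
```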

Shader Compilation

We now have our shaders. How do we make them work? It takes a few steps, the first of which is compiling and linking.

At runtime, you load the source code of both the vertex shader and the fragment shader, then compile each one with a couple of OpenGL calls. Once the shaders are compiled, you create a shader program, attach the shaders to it, and link them together. Linking connects the outputs of the vertex shader (its varyings) to the matching inputs of the fragment shader. Both compile and link errors can be diagnosed, and OpenGL provides a log message describing the cause.

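```c
/* Compile one shader: type is GL_VERTEX_SHADER or GL_FRAGMENT_SHADER,
   source is its NUL-terminated GLSL text, errorMsg is a char buffer. */
const GLuint shader = glCreateShader(type);
glShaderSource(shader, 1, (const GLchar**)&source, NULL);
glCompileShader(shader);

/* Check the compile status before using the shader. */
GLint success;
glGetShaderiv(shader, GL_COMPILE_STATUS, &success);
if (success == 0)
    glGetShaderInfoLog(shader, sizeof(errorMsg), NULL, errorMsg);

/* Once both shaders compile, create the program, attach them and link. */
m_shaderProgram = glCreateProgram();
glAttachShader(m_shaderProgram, vertexShader);
glAttachShader(m_shaderProgram, fragmentShader);
glLinkProgram(m_shaderProgram);

/* Check the link status the same way. */
glGetProgramiv(m_shaderProgram, GL_LINK_STATUS, &success);
if (success == 0)
    glGetProgramInfoLog(m_shaderProgram, sizeof(errorMsg), NULL, errorMsg);
```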
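The compile step needs each shader's source as a NUL-terminated C string, since glShaderSource is called with a NULL length array. A minimal loader in plain C might look like this. It assumes the shaders are stored as separate text files. The function name load_shader_source is hypothetical, and on iOS the path would come from the application bundle.

```c
#include <stdio.h>
#include <stdlib.h>

/* Read a whole text file into a NUL-terminated, heap-allocated string that
   can be passed to glShaderSource. Returns NULL on failure; the caller
   frees the result with free(). */
char *load_shader_source(const char *path)
{
    FILE *file = fopen(path, "rb");
    if (file == NULL)
        return NULL;

    if (fseek(file, 0, SEEK_END) != 0) { fclose(file); return NULL; }
    long size = ftell(file);
    if (size < 0) { fclose(file); return NULL; }
    rewind(file);

    char *source = malloc((size_t)size + 1);
    if (source == NULL) { fclose(file); return NULL; }

    size_t bytesRead = fread(source, 1, (size_t)size, file);
    fclose(file);
    source[bytesRead] = '\0';   /* terminate so no length array is needed */
    return source;
}
```

The returned pointer is what the compile snippet calls source. The shader copies the string during glShaderSource, so it can be freed right after that call.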

If you don't like the idea of compiling and linking programs at runtime, you are not alone. Ideally, this step would be done offline, just as the Objective-C source of the main program is compiled ahead of time. Unfortunately, Apple's priority is openness and the freedom to change the format in the future, so we are forced to compile and link at runtime. This is not only annoying but, in theory, can also be fairly slow when there are many shaders. Nobody wants to lose extra seconds at application launch, but for now that is the price of working with shaders on the iPhone.

Binding

We are almost ready to use the shaders, but first we need to specify how to set up their inputs. The vertex shader expects the mvp (model-view-projection) matrix to be set. It also needs a stream of vertex data with positions and colors.

To do this, we query the shader program for each parameter by name. The query returns a handle (location), which we use to set the values right before rendering the model. If a name is not found, or the variable is unused and was optimized away, the query returns -1.

```c
m_a_positionHandle = glGetAttribLocation(m_shaderProgram, "a_position");
m_a_colorHandle    = glGetAttribLocation(m_shaderProgram, "a_color");
m_u_mvpHandle      = glGetUniformLocation(m_shaderProgram, "u_mvpMatrix");
```
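The mvp matrix bound to u_mvpMatrix has to be computed by the application itself. OpenGL ES 2.0 has no matrix stack, so glRotatef, glOrthof and glMatrixMode are gone. Below is a small sketch of the matrices a spinning square needs, stored column-major. All names (Mat4, mat4_identity, mat4_rotation_z, mat4_ortho, mat4_mul) are hypothetical. The projection is the same matrix glOrthof builds in ES 1.1, and the rotation takes a precomputed cosine and sine.

```c
/* Column-major 4x4 matrix: element (row r, column c) is m[c * 4 + r]. */
typedef struct { float m[16]; } Mat4;

Mat4 mat4_identity(void)
{
    Mat4 r = {{1, 0, 0, 0,  0, 1, 0, 0,  0, 0, 1, 0,  0, 0, 0, 1}};
    return r;
}

/* Rotation around the Z axis (the square spins in the XY plane), given the
   cosine and sine of the angle, e.g. cosf(angle) and sinf(angle) from <math.h>. */
Mat4 mat4_rotation_z(float c, float s)
{
    Mat4 r = mat4_identity();
    r.m[0] = c;  r.m[1] = s;     /* first column  */
    r.m[4] = -s; r.m[5] = c;     /* second column */
    return r;
}

/* Orthographic projection, the same matrix glOrthof(l, r, b, t, n, f) builds. */
Mat4 mat4_ortho(float l, float r, float b, float t, float n, float f)
{
    Mat4 o = mat4_identity();
    o.m[0]  = 2.0f / (r - l);
    o.m[5]  = 2.0f / (t - b);
    o.m[10] = -2.0f / (f - n);
    o.m[12] = -(r + l) / (r - l);
    o.m[13] = -(t + b) / (t - b);
    o.m[14] = -(f + n) / (f - n);
    return o;
}

/* out = a * b, i.e. b is applied to a vertex first, then a. */
Mat4 mat4_mul(const Mat4 *a, const Mat4 *b)
{
    Mat4 out;
    for (int c = 0; c < 4; ++c)
        for (int r = 0; r < 4; ++r) {
            float sum = 0.0f;
            for (int k = 0; k < 4; ++k)
                sum += a->m[k * 4 + r] * b->m[c * 4 + k];
            out.m[c * 4 + r] = sum;
        }
    return out;
}
```

Each frame you would compute mvp = mat4_mul(&projection, &rotation) and upload it with glUniformMatrix4fv and GL_FALSE. In OpenGL ES 2.0 the transpose argument must be GL_FALSE, which is why the matrix is kept column-major from the start.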

Using the Shaders

Finally, we can render some polygons with our shaders. All that is needed is to activate the shader program...

```c
glUseProgram(m_shaderProgram);
```

...and set up the inputs using the handles we queried earlier:

```c
/* Per-vertex positions: 2 floats (x, y) per vertex. */
glVertexAttribPointer(m_a_positionHandle, 2, GL_FLOAT, GL_FALSE, 0, squareVertices);
glEnableVertexAttribArray(m_a_positionHandle);
/* Per-vertex colors: 4 floats (r, g, b, a) per vertex. */
glVertexAttribPointer(m_a_colorHandle, 4, GL_FLOAT, GL_FALSE, 0, squareColors);
glEnableVertexAttribArray(m_a_colorHandle);
/* The model-view-projection matrix, shared by all vertices. */
glUniformMatrix4fv(m_u_mvpHandle, 1, GL_FALSE, (GLfloat*)&mvp.m[0]);
```

Now we just call any of the drawing functions familiar from OpenGL ES 1.1 (glDrawArrays or glDrawElements):

```c
glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);
```

If everything went well, a correctly rendered model will appear on the screen. In our case it is just a square, but it can serve as the basis for rendering your own models with the transformations, shaders and effects you need.
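The draw calls above use squareVertices and squareColors, which are not shown. Below is one possible definition, assuming float data laid out to match the glVertexAttribPointer calls: 2 floats per position and 4 per color. The coordinates and colors are modelled on the square in the Xcode OpenGL ES template, but treat the values as illustrative. The hypothetical helper strip_triangles shows why four vertices are enough: GL_TRIANGLE_STRIP turns them into two triangles.

```c
/* 4 vertices, 2 floats (x, y) each: matches size = 2 in glVertexAttribPointer. */
static const float squareVertices[] = {
    -0.5f, -0.33f,
     0.5f, -0.33f,
    -0.5f,  0.33f,
     0.5f,  0.33f,
};

/* 4 floats (r, g, b, a) per vertex: matches size = 4 and GL_FLOAT. */
static const float squareColors[] = {
    1.0f, 1.0f, 0.0f, 1.0f,
    0.0f, 1.0f, 1.0f, 1.0f,
    0.0f, 0.0f, 0.0f, 0.0f,
    1.0f, 0.0f, 1.0f, 1.0f,
};

/* Triangles produced by GL_TRIANGLE_STRIP: triangle i uses vertices i, i+1
   and i+2; odd triangles swap their first two indices so that every
   triangle keeps the same winding. Returns the number of triangles written. */
int strip_triangles(int vertexCount, int out[][3], int maxTriangles)
{
    int count = 0;
    for (int i = 0; i + 2 < vertexCount && count < maxTriangles; ++i, ++count) {
        if (i % 2 == 0) { out[count][0] = i;     out[count][1] = i + 1; }
        else            { out[count][0] = i + 1; out[count][1] = i;     }
        out[count][2] = i + 2;
    }
    return count;
}
```

For the four vertices above this yields the triangles (0, 1, 2) and (2, 1, 3). Together they cover the square, which is why glDrawArrays is called with a count of 4.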

A Little Bonus at the End

I realize it is not very exciting to go through all this just to get the same rotating square as in the OpenGL ES 1.1 template. Of course, it is a necessary and important first step, but still... So, to show how easy it is to create different effects with GLSL, here is a modified fragment (pixel) shader. This version checks whether the pixel is on an even or an odd line. It renders the even lines fully transparent, which creates a striped effect on the screen. Achieving a similar result in OpenGL ES 1.1 would have been very difficult, but in 2.0 it takes just a couple of simple lines.

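```glsl
// Body of main(); gl_FragCoord.y is the window-space y (pixel centers at 0.5, 1.5, ...).
float odd = floor(mod(gl_FragCoord.y, 2.0));                // 0.0 on even rows, 1.0 on odd rows
gl_FragColor = vec4(v_color.x, v_color.y, v_color.z, odd);  // the row parity becomes alpha
```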
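The per-row alpha that this shader computes can be checked with a small C model. This is a sketch with hypothetical helper names. It uses GLSL's definition mod(x, y) = x - y * floor(x / y), and it replaces floor with truncation, which is only valid because gl_FragCoord is never negative. Keep in mind that the alpha value only makes rows see-through if blending is enabled, for example glEnable(GL_BLEND) with glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA). The article does not show that setup, so treat it as an assumption.

```c
/* GLSL floor() for the non-negative values used here: truncating toward
   zero equals flooring when x >= 0 (gl_FragCoord is never negative). */
static float floor_nonneg(float x) { return (float)(long)x; }

/* GLSL defines mod(x, y) as x - y * floor(x / y). */
static float glsl_mod(float x, float y) { return x - y * floor_nonneg(x / y); }

/* Alpha produced by the striped shader for a fragment whose window-space
   y coordinate is fragY (pixel centers sit at 0.5, 1.5, 2.5, ...). */
float stripe_alpha(float fragY)
{
    return floor_nonneg(glsl_mod(fragY, 2.0f));   /* 0.0 or 1.0 */
}
```

Row 0 (center 0.5) gets alpha 0 and row 1 (center 1.5) gets alpha 1, and the pattern repeats every two rows. Because gl_FragCoord has its origin at the lower-left corner of the window, rows are counted from the bottom of the view.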

Armed with this sample code and an idea of what shaders can do, you can now write your own shaders and add interesting, unique visual effects to your games.

The source code for this lesson can be downloaded here.

The original article in English is available here.