As machines get smarter, they start to learn much as we do.

- Translation

Research suggests that computer models known as neural networks, which are used in an ever-increasing number of applications, can learn to recognize patterns in data using the same algorithms as the human brain.

The brain solves its canonical problem, learning, by adjusting its many connections according to an unknown set of rules. To uncover these rules, scientists began developing computer models 30 years ago that try to reproduce the learning process. Today, a growing number of experiments make it clear that these models behave very much like a real brain when performing certain tasks. Researchers say this similarity points to a basic correspondence between the learning algorithms of brains and computers.

The algorithm used by the computer model is called the Boltzmann machine. It was invented by Geoffrey Hinton and Terry Sejnowski in 1983 [in fact, in 1985; translator’s note]. It looks very promising as a simple theoretical explanation of several processes occurring in the brain: development, memory formation, recognition of objects and sounds, and the cycle of sleep and wakefulness.

“This is the best opportunity we have today for understanding the brain,” said Sue Becker, a professor of psychology, neuroscience and behavior at McMaster University in Hamilton, Ontario. “I don’t know of a model that describes a wider range of phenomena related to learning and brain structure.”

Hinton, a pioneer in the field of AI, has always wanted to understand the rules by which the brain strengthens or weakens a connection, that is, its learning algorithm. “I decided that in order to understand something, you have to build it,” he says. Following the reductionist approach of physicists, he plans to create simple computer models of the brain that use different learning algorithms and see “which ones work,” says Hinton, who works part time at the University of Toronto as a computer science professor and part time at Google.

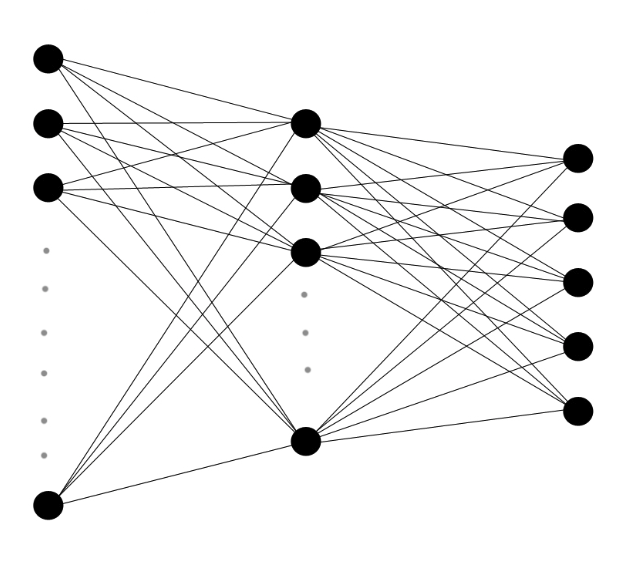

Multilayer neural networks consist of layers of artificial neurons with weighted connections between them. Incoming data sends a cascade of signals through the layers, and the algorithm determines how the weight of each connection changes.

In the 1980s and 1990s, Hinton, the great-great-grandson of the nineteenth-century logician George Boole, whose work forms the basis of modern computer science, invented several machine learning algorithms. These algorithms, which control how a computer learns from data, are used in computer models called “artificial neural networks”: webs of interconnected virtual neurons that transmit signals to their neighbors by switching on or off, or “firing.” When data is fed into the network, it sets off a cascade of firing activity, and based on the pattern of that activity the algorithm chooses to increase or decrease the weights of the connections, or synapses, between each pair of neurons.
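The on/off firing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the logistic firing probability, the function names `sigmoid` and `fire_layer`, and the toy weights are all choices made here, not details given in the article.

```python
import math
import random

def sigmoid(x):
    """Logistic function: maps a summed input to a firing probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def fire_layer(inputs, weights, biases, rng):
    """Return the on/off (1/0) states of one layer of stochastic neurons.

    weights[j][i] is the connection weight from input i to neuron j.
    """
    states = []
    for w_row, b in zip(weights, biases):
        total = b + sum(w * x for w, x in zip(w_row, inputs))
        # The neuron fires with a probability that grows with its weighted input.
        states.append(1 if rng.random() < sigmoid(total) else 0)
    return states

rng = random.Random(0)
pixels = [1, 0, 1]                            # hypothetical 3-value input
w1 = [[2.0, -1.0, 2.0], [-2.0, 1.0, -2.0]]    # hypothetical weights for 2 neurons
b1 = [0.0, 0.0]
hidden = fire_layer(pixels, w1, b1, rng)      # a list of two 0/1 states
```

Stacking several calls to `fire_layer`, each fed by the previous layer’s states, gives the cascade of signals through the layers; the learning algorithm’s job is then to adjust the numbers in `weights`.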

For decades, many of Hinton’s computer models languished. But thanks to advances in processing power, in our understanding of the brain and in the algorithms themselves, neural networks are playing an ever-larger role in neuroscience. Sejnowski, head of the Computational Neurobiology Laboratory at the Salk Institute for Biological Studies in La Jolla, California, says: “Thirty years ago we had very rough ideas; now we are starting to test some of them.”

Brain machines

Hinton’s early attempts to reproduce the brain were limited. Computers could run his learning algorithms on small neural networks, but scaling the models up very quickly overloaded the processors. In 2005, Hinton discovered that if neural networks were divided into layers and the algorithms were run separately on each layer, roughly mirroring the structure and development of the brain, the process became more efficient.

Although Hinton published his discovery in two well-known journals, neural networks had fallen out of fashion by that time, and he “struggled to get people interested,” said Li Deng, a lead researcher at Microsoft Research. However, Deng knew Hinton and decided to try out his “deep learning” method in 2009, quickly recognizing its potential. In the years since, these learning algorithms have been put to practical use in a growing number of applications, such as the Google Now personal assistant and the voice search feature on Microsoft Windows phones.

One of the most promising algorithms, the Boltzmann machine, is named after the 19th-century Austrian physicist Ludwig Boltzmann, who developed the branch of physics that deals with large numbers of particles, known as statistical mechanics. Boltzmann discovered an equation that gives the probability that a gas of molecules has a particular energy when it reaches equilibrium. If you replace the molecules with neurons, the network converges on the same equation.
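To make the equation concrete, here is a small sketch that enumerates every on/off state of a three-neuron network and assigns each one its equilibrium probability, p(s) = exp(-E(s)/T) / Z. The article does not spell out the energy function; the form used here (negative sum of weights between co-active units, plus bias terms) is the standard textbook one for Boltzmann machines, and the weights are invented for illustration.

```python
import itertools
import math

def energy(state, weights, biases):
    """Energy of a binary state: lower when strongly connected units are on together."""
    n = len(state)
    pair_term = sum(weights[i][j] * state[i] * state[j]
                    for i in range(n) for j in range(i + 1, n))
    bias_term = sum(b * s for b, s in zip(biases, state))
    return -(pair_term + bias_term)

def boltzmann_distribution(weights, biases, temperature=1.0):
    """Equilibrium probability of every on/off state: exp(-E/T) divided by the total Z."""
    states = list(itertools.product([0, 1], repeat=len(biases)))
    unnormalized = [math.exp(-energy(s, weights, biases) / temperature) for s in states]
    z = sum(unnormalized)
    return {s: u / z for s, u in zip(states, unnormalized)}

# Hypothetical symmetric weights: units 0 and 1 excite each other, 0 and 2 inhibit.
weights = [[0.0, 2.0, -1.0],
           [2.0, 0.0, 0.0],
           [-1.0, 0.0, 0.0]]
biases = [0.0, 0.0, 0.0]
probs = boltzmann_distribution(weights, biases)
most_likely = max(probs, key=probs.get)   # (1, 1, 0): the lowest-energy state
```

Exhaustive enumeration only works for tiny networks, since the number of states doubles with every neuron; larger machines have to estimate these probabilities by sampling.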

The network’s synapses start out with a random distribution of weights, and the weights are gradually adjusted according to a fairly simple procedure: the firing pattern generated while the machine is being fed data (such as images or sounds) is compared with the machine’s random firing activity when no data is being fed in.

Geoffrey Hinton believes that the best approach to understanding the learning processes in the brain would be to build computers that learn in the same way.

Each virtual synapse tracks both sets of statistics. If the neurons it connects fire in close sequence more often when receiving data than during random operation, the weight of the synapse is increased by an amount proportional to the difference. But if the two neurons fire together more often during random operation, the synapse connecting them is considered too strong and is weakened.
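The update rule just described can be written down directly. In this sketch the function names, the learning rate of 0.1, and the two tiny sets of recorded states are hypothetical; only the rule itself (change the weight in proportion to data-driven co-firing minus free-running co-firing) comes from the text.

```python
def pair_frequency(samples, i, j):
    """Fraction of recorded states in which units i and j are both on."""
    return sum(s[i] * s[j] for s in samples) / len(samples)

def update_weight(weight, data_samples, free_samples, i, j, learning_rate=0.1):
    """Shift one synapse weight in proportion to the difference between the two statistics."""
    difference = pair_frequency(data_samples, i, j) - pair_frequency(free_samples, i, j)
    return weight + learning_rate * difference

# States of two neurons recorded while data is fed in ...
data_driven = [(1, 1), (1, 1), (1, 0), (0, 0)]
# ... and while the network runs on its own with no input.
free_running = [(1, 1), (0, 1), (1, 0), (0, 0)]

# Co-firing is 0.5 with data and 0.25 without, so the weight rises by 0.1 * 0.25.
new_weight = update_weight(0.0, data_driven, free_running, 0, 1)
```

Swapping the two sample sets yields a negative change of the same size, which is the case where the synapse is judged too strong and weakened.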

The most commonly used version of the Boltzmann machine works best after “training,” in which it processes thousands of examples of data, one layer at a time. First, the bottom layer of the network receives raw data in the form of images or sounds, and, in the manner of retinal cells, its neurons fire if they detect contrasts in their patch of the data, such as a switch from light to dark. Their firing may in turn cause neurons connected to them to fire, depending on the weight of the synapse between them. As the firing of pairs of virtual neurons is repeatedly compared with the background statistics, meaningful connections between neurons gradually emerge and strengthen. The weights of the synapses are refined, and categories of sounds and images become embedded in the connections. Each subsequent layer is trained in a similar way, using data from the layer below it.
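A rough sketch of this layer-by-layer training follows. It rests on several assumptions the article does not state: the “most commonly used version” is taken to be the restricted Boltzmann machine, the free-running statistics are approximated by a single reconstruction step (a shortcut known as contrastive divergence), and the class `RBM`, the function `train_stack`, the layer sizes and the toy data are all hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample(probabilities, rng):
    """Turn firing probabilities into on/off states."""
    return [1 if rng.random() < p else 0 for p in probabilities]

class RBM:
    """One pair of layers: visible units below, hidden units above, no links within a layer."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.w = [[rng.gauss(0.0, 0.1) for _ in range(n_visible)] for _ in range(n_hidden)]
        self.b_hidden = [0.0] * n_hidden
        self.b_visible = [0.0] * n_visible

    def hidden_probs(self, visible):
        return [sigmoid(b + sum(w * v for w, v in zip(row, visible)))
                for row, b in zip(self.w, self.b_hidden)]

    def visible_probs(self, hidden):
        return [sigmoid(self.b_visible[i]
                        + sum(self.w[j][i] * hidden[j] for j in range(len(hidden))))
                for i in range(len(self.b_visible))]

    def train_step(self, visible, learning_rate=0.1):
        # Statistics while the data is clamped on the visible units.
        h_data = self.hidden_probs(visible)
        # Stand-in for free-running statistics: one reconstruction of the input.
        v_model = self.visible_probs(sample(h_data, self.rng))
        h_model = self.hidden_probs(v_model)
        for j in range(len(self.w)):
            for i in range(len(visible)):
                self.w[j][i] += learning_rate * (h_data[j] * visible[i]
                                                 - h_model[j] * v_model[i])
            self.b_hidden[j] += learning_rate * (h_data[j] - h_model[j])
        for i in range(len(visible)):
            self.b_visible[i] += learning_rate * (visible[i] - v_model[i])

def train_stack(data, layer_sizes, epochs, rng):
    """Train each layer in turn on the output of the layer below it."""
    layers = []
    inputs = data
    for n_hidden in layer_sizes:
        rbm = RBM(len(inputs[0]), n_hidden, rng)
        for _ in range(epochs):
            for example in inputs:
                rbm.train_step(example)
        layers.append(rbm)
        # The trained layer's activity becomes the data for the next layer up.
        inputs = [rbm.hidden_probs(example) for example in inputs]
    return layers

rng = random.Random(0)
toy_data = [[1, 1, 0, 0], [0, 0, 1, 1]] * 10   # two made-up 4-pixel patterns
stack = train_stack(toy_data, layer_sizes=[3, 2], epochs=20, rng=rng)
```

The key design point is in `train_stack`: no layer ever sees the raw data except the first, and each layer is finished before the next one starts, which is what keeps the computation manageable.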

If you feed an image of a car to a neural network trained to detect specific objects in images, the bottom layer fires if it detects a contrast that would indicate an edge or an endpoint. These signals travel up to higher-level neurons that detect corners, parts of wheels, and so on. At the top level, neurons fire only in response to the image of a car.

“The magic of what happens in the network is that it can generalize,” says Yann LeCun, director of the Center for Data Science at New York University. “If you show it a car it has not seen before, and if the car has some shapes and features in common with the cars it was shown during training, it can determine that this is a car.”

Neural networks have recently picked up speed thanks to Hinton’s layer-by-layer training regimen, the use of high-speed graphics processing chips, and explosive growth in the number of images and voice recordings available for training. The networks can now correctly recognize 88% of the words in English speech, while the average person recognizes 96%. They can identify cars and thousands of other objects in images with similar accuracy, and they have come to dominate machine learning competitions over the past few years.

Building a brain

No one knows how to directly determine the rules by which the brain learns, but there are many suggestive parallels between the behavior of the brain and that of the Boltzmann machine.

Both learn without supervision, using only the patterns that exist in the data. “Your mother doesn’t tell you a million times what is shown in a picture,” says Hinton. “You have to learn to recognize things without advice from others. After you have learned the categories, you are told their names. So kids learn about dogs and cats, and then they learn that dogs are called ‘dogs’ and cats are called ‘cats.’”

The adult brain is not as flexible as a young one, just as a Boltzmann machine that has been trained on 100,000 images of cars will not change much after seeing one more. Its synapses already have the weights needed to categorize cars. But learning does not end there. New information can still be integrated into the structure of both the brain and the Boltzmann machine.

Over the past two decades, studies of brain activity during sleep have provided the first evidence that the brain uses a Boltzmann-like algorithm to incorporate new information and memories into its structure. Neuroscientists have long known that sleep plays an important role in memory consolidation and helps integrate new information. In 1995, Hinton and colleagues suggested that sleep serves as the baseline in the algorithm, representing the activity of neurons in the absence of input data.

“During sleep, you just figure out the base rate of the neurons,” says Hinton. “You find out how correlated their firing is when the system is running by itself. And then, if the neurons are correlated more than that, simply increase the weights between them. And if they are correlated less, decrease the weights.”

At the level of synapses, “the algorithm can be implemented in several ways,” said Sejnowski, an adviser to the presidential administration on the BRAIN Initiative, a research effort with $100 million in funding aimed at developing new techniques for studying the brain.

He says the simplest way for the brain to run the Boltzmann algorithm is to switch from building up synapses during the day to paring them back at night. Giulio Tononi, head of the Center for Sleep and Consciousness at the University of Wisconsin-Madison, has found that gene expression in synapses changes in a way consistent with this hypothesis: genes involved in synapse growth are more active during the day, and genes involved in synapse reduction are more active at night.

Alternatively, “the baseline can be calculated during sleep, and then changes relative to it can be made during the day,” says Sejnowski. His lab is building detailed computer models of synapses and the networks they sustain in order to determine how they gather statistics during wakefulness and sleep, and when synaptic strengths change to reflect the difference.

Brain problems

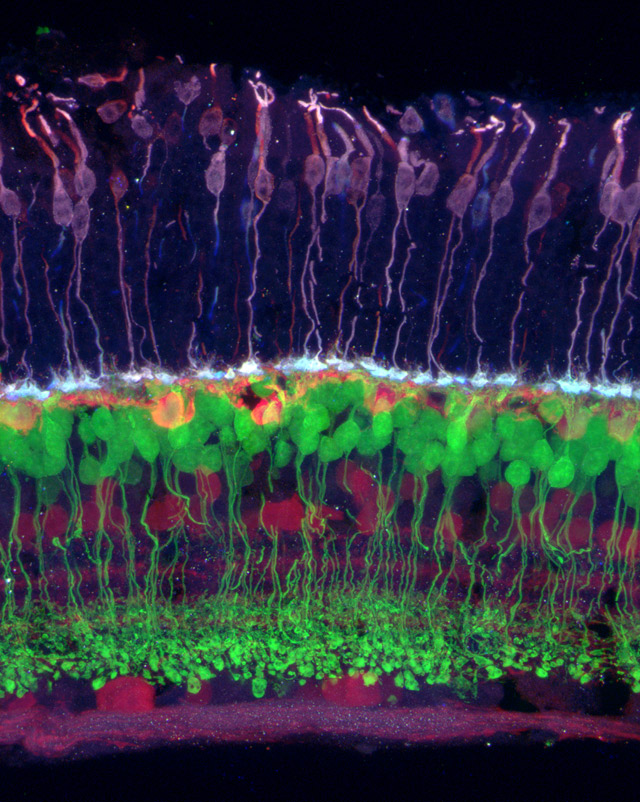

An image of the retina in which different cell types are indicated by different colors. The color-sensitive cells (purple) connect to horizontal cells (orange), which connect to bipolar cells (green), and those in turn connect to the retinal ganglion cells (purple).

The Boltzmann algorithm may turn out to be one of many that the brain uses to adjust its synapses. In the 1990s, several independent groups developed a theoretical model of how the visual system efficiently encodes the flood of information hitting the retina. The theory postulated that a process of “sparse coding,” similar to image compression, takes place in the lower layers of the visual cortex, allowing the later stages of the visual system to work more efficiently.

The model’s predictions are gradually passing more and more rigorous tests. In a paper published in PLOS Computational Biology, computational neuroscientists from Britain and Australia found that when neural networks using a sparse coding algorithm called Products of Experts, invented by Hinton in 2002, process the same abnormal visual data that live cats receive (for example, both the cats and the neural networks see only striped images), their neurons develop almost identical abnormal connections.

“By the time information reaches the visual cortex, the brain, we believe, represents it as a sparse code,” says Bruno Olshausen, a computational neuroscientist and director of the Redwood Center for Theoretical Neuroscience at the University of California, Berkeley, who helped develop sparse coding theory. “It’s as if a Boltzmann machine is sitting in your head and trying to understand the connections between the elements of the sparse code.”

Olshausen and his team used neural network models of the higher layers of the visual cortex to show how the brain is able to maintain a stable perception of visual input even as the image moves. In another study, they found that the activity of neurons in the visual cortex of cats watching a black-and-white film is described very well by a Boltzmann machine.

One possible application of this work is the creation of neural prostheses, such as an artificial retina. If you understand how “information is formatted in the brain, you can understand how to stimulate the brain to make it think that it sees an image,” says Olshausen.

Sejnowski says that understanding the algorithms by which synapses grow and shrink will allow researchers to alter them and study how the functioning of the neural network breaks down. “Then they can be compared to known problems in people,” he says. “Almost all mental disorders can be explained by problems with synapses. If we can better understand synapses, we can understand how the brain functions normally, how it processes information, how it learns, and what goes wrong if you have, say, schizophrenia.”

The neural network approach to studying the brain contrasts sharply with that of the Human Brain Project, the much-publicized plan of the Swiss neuroscientist Henry Markram to create an accurate simulation of the human brain using a supercomputer. Unlike Hinton’s approach, which starts with a highly simplified model and grows gradually more complex, Markram wants to include as much data as possible from the outset, down to individual molecules, and hopes that full functionality and consciousness will emerge as a result.

The project has received $1.3 billion in funding from the European Commission, but Hinton believes the mega-simulation will fail, bogged down by too many moving parts that no one yet understands.

In addition, Hinton does not believe the brain can be understood from images of it alone. Such data should instead be used to create and refine algorithms. “It requires theoretical thinking and exploring the space of learning algorithms to come up with a theory like the Boltzmann machine,” he says. The next step for Hinton is to develop algorithms for training even more brain-like neural networks, in which synapses connect neurons within a single layer and not just between different layers. “The main goal is to understand the benefits that can be gained by making the computation at each stage more complex,” he says.

The hypothesis is that more connections will lead to more feedback loops, which, according to Olshausen, most likely help the brain “fill in the missing parts.” Higher layers intervene in the work of lower-layer neurons that are dealing with partial information. “It’s all closely tied to consciousness,” he says.

The human brain is still much more complex than any model. It is larger, denser and more efficient, it has more interconnections and more complex neurons, and it works with several algorithms at once. Olshausen estimates that we understand about 15% of the activity of the visual cortex. Although the models are progressing, neuroscience is still “like physics before Newton,” he says. Nevertheless, he is confident that work building on these algorithms will someday explain the brain’s central puzzle: how data from the senses are transformed into a subjective sense of reality. Consciousness, says Olshausen, “is something that emerges from a really, really complicated Boltzmann machine.”