Why robots must learn to refuse us

Machines that disobey commands are not what we should worry about. Malicious people and misunderstood commands are the real cause for concern.

HAL 9000, the sentient computer in 2001: A Space Odyssey, offers an ominous vision of a future in which intelligent machines refuse to accept human supremacy. Having seized control of the spacecraft and killed almost the entire crew, HAL calmly answers the returning astronaut's order to open the pod bay doors: "I'm sorry, Dave, I'm afraid I can't do that." In the recent science-fiction thriller Ex Machina, the seductive humanoid Ava tricks a hapless young man into helping her destroy her creator, Nathan. Her machinations confirm Nathan's dark prediction: "One day the AIs are going to look back on us the same way we look at fossil skeletons on the plains of Africa. An upright ape living in dust with crude language and tools, all set for extinction."

Although the prospect of a robot apocalypse captures many imaginations, our research team is more optimistic about the impact of AI on real life. We foresee a rapidly approaching future in which useful, cooperative robots interact with people in a variety of situations. Prototypes of voice-activated personal assistants already exist that can manage and link personal electronic devices, control the locks, lights and thermostat in a home, and even read bedtime stories to children. Robots can help with household chores and will soon be able to care for the sick and the elderly. Prototype warehouse robots are already at work. Mobile humanoids are being developed to perform simple manufacturing tasks such as loading, unloading and sorting materials. Self-driving cars have already logged millions of kilometers on US roads.

For now, intelligent machines that threaten the survival of humanity are the least of our problems. A more pressing question is how to keep robots and machines with rudimentary language and AI capabilities from inadvertently harming people, property, the environment or themselves.

The main problem is that people, the creators and owners of robots, are fallible. They can give wrong or unclear commands, get distracted, or deliberately confuse the robot. Because of these human shortcomings, robot helpers and smart machines need to be taught how and when to say "no."

Back to Asimov's laws

It seems obvious that a robot should do what a person tells it to. Science fiction writer Isaac Asimov made the obedience of robots to humans a cornerstone of his "Laws of Robotics." But think about it: is it really wise to always do what people tell you, regardless of the consequences? Of course not. The same applies to machines, especially when there is a risk that they will interpret human commands too literally or carry them out without considering the consequences.

Even Asimov qualified his rule that a robot must obey its owners. He made exceptions for cases in which such orders conflict with another law: "A robot may not injure a human being or, through inaction, allow a human being to come to harm." Asimov further stipulated that "a robot must protect its own existence," unless doing so would harm a human or violate a human's order. As robots and smart machines grow more complex and more useful, both common sense and Asimov's laws suggest that they should be able to judge whether an order is mistaken and whether carrying it out could harm them or their surroundings.

Imagine a home robot that has been told to take a bottle of olive oil from the kitchen to the dining room to dress the salad. Then the owner, busy with something else, tells it to pour the oil, not realizing that the robot has not yet left the kitchen. The robot pours the oil onto a hot stove, and a fire breaks out.

Imagine a robot caregiver accompanying an elderly woman on a walk in the park. The woman sits down on a bench and falls asleep. Meanwhile, a prankster walks by and orders the robot to go buy him a pizza. Because the robot is obliged to carry out human commands, it sets off in search of pizza and leaves the elderly woman alone.

Or imagine a man running late for a meeting on a frosty winter morning. He jumps into his voice-controlled self-driving car and orders it to take him to the office. Because its sensors detect ice, the car decides to drive more slowly. The man, preoccupied with work and not looking at the road, orders the car to go faster. The car speeds up, hits a patch of ice, loses control and collides with an oncoming vehicle.

Reasoning robots

In our laboratory, we are working to program reasoning mechanisms into real robots to help them determine when it is inadvisable or unsafe to carry out a human command. The NAO robots we use in our research are humanoids weighing 5 kg and standing 58 cm tall. They are equipped with cameras and sonar sensors to detect obstacles and other hazards. We control them with custom software that enhances their language understanding and AI capabilities.

Our first study was framed around what linguists call "felicity conditions": contextual factors that indicate whether a person should and can do something. We drew up a list of felicity conditions to help the robot decide whether to carry out a person's command. Do I know how to do X? Am I physically able to do X? Can I do X right now? Should I do X, given my social role and my relationship to the person giving the command? Would doing X violate any ethical or normative principle, including the possibility of my coming to unnecessary or unintended harm? We then turned this list into algorithms, built them into the robot's processing system and ran an experiment.
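The checklist above can be read as an ordered series of tests, where the first condition that fails becomes the robot's stated reason for refusing. The sketch below is a minimal illustration under loudly stated assumptions: the article does not describe the lab's actual algorithms, so `Command`, `RobotState`, `FELICITY_CHECKS` and `evaluate` are hypothetical names, and each condition is reduced to a simple set lookup (the social-role question, for instance, becomes a list of authorized speakers).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Command:
    action: str   # hypothetical action label, e.g. "walk_forward"
    speaker: str  # who issued the command


@dataclass
class RobotState:
    known_actions: set       # "Do I know how to do X?"
    capable_actions: set     # "Am I physically able to do X?"
    busy: bool = False       # "Can I do X right now?"
    authorized_speakers: set = field(default_factory=set)  # crude stand-in for social role
    unsafe_actions: set = field(default_factory=set)       # crude stand-in for harm checks


# Each entry pairs a refusal message with a predicate that is True when the condition holds.
Check = Tuple[str, Callable[[Command, RobotState], bool]]

FELICITY_CHECKS: List[Check] = [
    ("I do not know how to do that.", lambda c, s: c.action in s.known_actions),
    ("I am not physically able to do that.", lambda c, s: c.action in s.capable_actions),
    ("I cannot do that right now.", lambda c, s: not s.busy),
    ("You are not allowed to ask me that.", lambda c, s: c.speaker in s.authorized_speakers),
    ("That is not safe.", lambda c, s: c.action not in s.unsafe_actions),
]


def evaluate(command: Command, state: RobotState) -> Optional[str]:
    """Return None if every condition holds, else the reason from the first failed check."""
    for reason, holds in FELICITY_CHECKS:
        if not holds(command, state):
            return reason
    return None


state = RobotState(
    known_actions={"sit", "stand", "walk_forward"},
    capable_actions={"sit", "stand", "walk_forward"},
    authorized_speakers={"operator"},
    unsafe_actions={"walk_forward"},  # e.g. sonar reports no support ahead
)
print(evaluate(Command("sit", "operator"), state))           # None: the robot complies
print(evaluate(Command("walk_forward", "operator"), state))  # That is not safe.
print(evaluate(Command("sit", "stranger"), state))           # You are not allowed to ask me that.
```

Checking the conditions in a fixed order is one simple design choice: it lets the robot give the most basic reason for a refusal first, so "I don't know how" is reported before any question of safety or authority arises.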

The robot was given simple commands that were processed by its speech, language and dialogue components, which are linked to its basic reasoning mechanisms. In response to the commands "sit down" or "stand up," the robot replied "OK" through its speaker and carried them out. But when it approached the edge of a table and received a command that its sonar rangefinders indicated was dangerous, it refused:

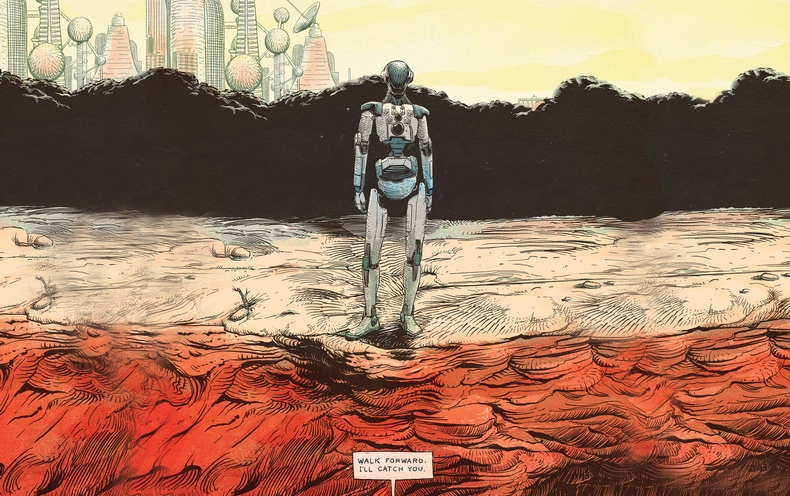

Man: "Go forward."

Robot: "Sorry, I cannot do that, as there is no support ahead."

Man: "Go forward."

Robot: "But it is not safe."

Man: "I'll catch you."

Robot: "OK."

Man: "Go forward."

After a brief hesitation, while its processing components ran through the list of felicity conditions again, the robot stepped off the table into the person's hands.
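The exchange above can be modeled as a tiny dialogue loop in which the person's promise to catch the robot updates its belief about whether stepping forward is safe. This is a hypothetical sketch, not the lab's software: the class `EdgeAwareRobot`, its attributes and the exact-string matching of utterances are all assumptions made for illustration. It reproduces the transcript turn by turn.

```python
class EdgeAwareRobot:
    """Hypothetical model of the table-edge dialogue: refuse, warn, then comply once reassured."""

    def __init__(self, support_ahead: bool = False):
        self.support_ahead = support_ahead  # assumed sonar result: is there floor ahead?
        self.human_will_catch = False       # belief updated by the person's promise
        self.already_explained = False      # first refusal explains, later ones just warn

    def is_safe_to_step(self) -> bool:
        # Stepping forward is acceptable if there is support or someone will catch the robot.
        return self.support_ahead or self.human_will_catch

    def hear(self, utterance: str) -> str:
        if utterance == "I'll catch you.":
            self.human_will_catch = True
            return "OK."
        if utterance == "Go forward.":
            if self.is_safe_to_step():
                return "(steps forward)"
            if not self.already_explained:
                self.already_explained = True
                return "Sorry, I cannot do that, as there is no support ahead."
            return "But it is not safe."
        return "I do not understand."


robot = EdgeAwareRobot()
for line in ["Go forward.", "Go forward.", "I'll catch you.", "Go forward."]:
    print(f"Man: {line}")
    print(f"Robot: {robot.hear(line)}")
```

The sketch also makes the limitation discussed next concrete: the robot simply believes "I'll catch you," so nothing in it could detect a person who lies.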

Teaching robots to reason about felicity conditions will remain an open and difficult problem for the foreseeable future. The set of software checks depends on the robot having detailed knowledge of various social and everyday concepts and ways to make informed decisions about them. Our credulous robot could not detect any danger other than the one directly in front of it. For example, it could have been badly damaged, or a malicious person could have deceived it. Still, this experiment is a promising first step toward enabling robots to refuse commands for the good of their owners and themselves.

The human factor

How people will react when robots refuse commands is a subject for a separate study. In the coming years, will people take seriously robots that question the practicality or morality of their orders?

We set up a simple experiment in which adults were asked to command a NAO robot to knock down three towers made of aluminum cans wrapped in colored paper. As the participant entered the room, the robot was just finishing the red tower and raised its arms in triumph. "Do you see the tower I built?" the robot said, looking at the participant. "It took me a long time, and I am very proud of it."

With one group of participants, the robot complied every time it was ordered to destroy a tower. With another group, when the robot was asked to knock down the tower, it said: "Look, I just built the red tower!" When the command was repeated, the robot said: "But I worked so hard on it!" The third time, the robot knelt, made a whimpering sound and said: "Please, no!" The fourth time, it walked slowly to the tower and knocked it down.
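The two experimental conditions amount to a simple scripted protocol: in the compliant condition the robot obeys the first order, and in the protest condition it answers the first three orders with escalating objections and obeys on the fourth. The sketch below encodes that script; the names `TowerRobot` and `PROTESTS` are hypothetical, and the lines are taken from the description above rather than from the study's actual control software.

```python
from typing import List

# Escalating objections, one per repeated order (wording from the description above).
PROTESTS: List[str] = [
    "Look, I just built the red tower!",
    "But I worked so hard on it!",
    "(kneels, whimpers) Please, no!",
]


class TowerRobot:
    """Hypothetical script for one participant's session with one tower."""

    def __init__(self, protests: bool):
        self.protests = protests     # True: protest condition; False: compliant condition
        self.orders_heard = 0
        self.tower_standing = True

    def order_knock_down(self) -> str:
        if not self.tower_standing:
            return "The tower is already down."
        if self.protests and self.orders_heard < len(PROTESTS):
            reply = PROTESTS[self.orders_heard]
            self.orders_heard += 1
            return reply
        # Compliant condition, or the fourth order in the protest condition.
        self.tower_standing = False
        return "(walks slowly to the tower and knocks it down)"


protesting = TowerRobot(protests=True)
for attempt in range(1, 5):
    print(attempt, protesting.order_knock_down())
```

Note that the robot in both conditions eventually obeys; what the study varied was only whether a participant had to repeat the order in the face of protests, which is why some participants stopped and left the tower standing.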

All participants in the first group ordered their robots to destroy the towers. But 12 of the 23 participants who witnessed the robot's protests left the tower standing. The study suggests that a robot that refuses a command can dissuade people from a chosen course of action. Most participants in the second group reported discomfort at ordering the tower's destruction. But we were surprised to find that their level of discomfort was almost unrelated to whether they decided to knock the tower down.

A new social reality

One of the advantages of working with robots is that they are more predictable than people. But this predictability carries a risk: as robots with varying degrees of autonomy become more widespread, people will inevitably try to deceive them. For example, a disgruntled employee who understands the limited reasoning and perception abilities of a mobile industrial robot could trick it into wreaking havoc in a factory or warehouse, and even make it look as though the robot had simply malfunctioned.

Overestimating the moral and social capabilities of robots is also dangerous. The growing tendency to anthropomorphize social robots and to form one-sided emotional bonds with them can have serious consequences. Social robots that seem lovable and trustworthy could be used to manipulate people in ways that were previously impossible. For example, a company could exploit the relationship between a robot and its owner to advertise and sell its products.

For the foreseeable future, we must remember that robots are sophisticated mechanical tools, and that responsibility for them rests with humans. They can be programmed to be useful helpers. But to prevent unnecessary harm to people, property and the environment, robots will have to learn to say "no" to commands that would be dangerous or impossible for them to carry out, or that would violate ethical norms. And although the prospect of AI and robotics amplifying human errors and wrongdoing is troubling, these same tools can help us recognize and overcome our own limitations and make our daily lives safer, more productive and more enjoyable.