How we developed IT at Leroy Merlin: rebuilding an engine on the go

Four years ago, each store maintained its own customer database, and the website had yet another one.

Previously in this series: three years ago, we decided that we needed to do our own development in Russia. Two years ago, we began writing our own code instead of modifying a fork of the parent company's code. Today's story is about how we moved from one large legacy monolith to a set of small microservices connected by a kind of bus (an orchestrator).

The simplest use case: place an order on the site and pick it up in a physical Leroy Merlin store in Russia. Previously, online store orders were processed in a separate application altogether, under a different scheme. Now we needed an omnichannel storefront so that any order could be placed through any interface: a cashier's desk in a store, a mobile application, an in-store terminal, the website, whatever. If you put Linux on a microwave, let it be the microwave. The main thing is that any interface can call the backend API, say that such-and-such an order needs to be placed, and get a clear answer in return. The second use case was requests for a product's availability and properties from its card.
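
As a rough illustration of the "any interface can call the API" idea, here is a minimal sketch in Python. The names `OrderApi`, `OrderResponse`, and `place_order`, as well as the SKU strings, are assumptions made for this example and do not come from the actual system. The point it shows is that the channel is only recorded, never restricted, and every caller gets an explicit accepted or rejected answer.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, Optional


@dataclass
class OrderResponse:
    accepted: bool
    order_id: Optional[int] = None
    reason: str = ""


class OrderApi:
    """Hypothetical single entry point for orders from any channel."""

    def __init__(self) -> None:
        self._next_id = count(1)
        self.orders: Dict[int, dict] = {}

    def place_order(self, channel: str, items: Dict[str, int]) -> OrderResponse:
        # The channel is recorded, not validated: any interface may call in.
        if not items:
            return OrderResponse(False, reason="order has no items")
        bad = [sku for sku, qty in items.items() if qty <= 0]
        if bad:
            return OrderResponse(False, reason=f"invalid quantity for {bad[0]}")
        order_id = next(self._next_id)
        self.orders[order_id] = {"channel": channel, "items": dict(items)}
        return OrderResponse(True, order_id=order_id)


api = OrderApi()
print(api.place_order("website", {"sku-100": 2}))
print(api.place_order("microwave", {"sku-200": 1}))
print(api.place_order("mobile_app", {}))
```

A real service would sit behind HTTP and persist orders; the sketch keeps everything in memory to stay focused on the contract.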

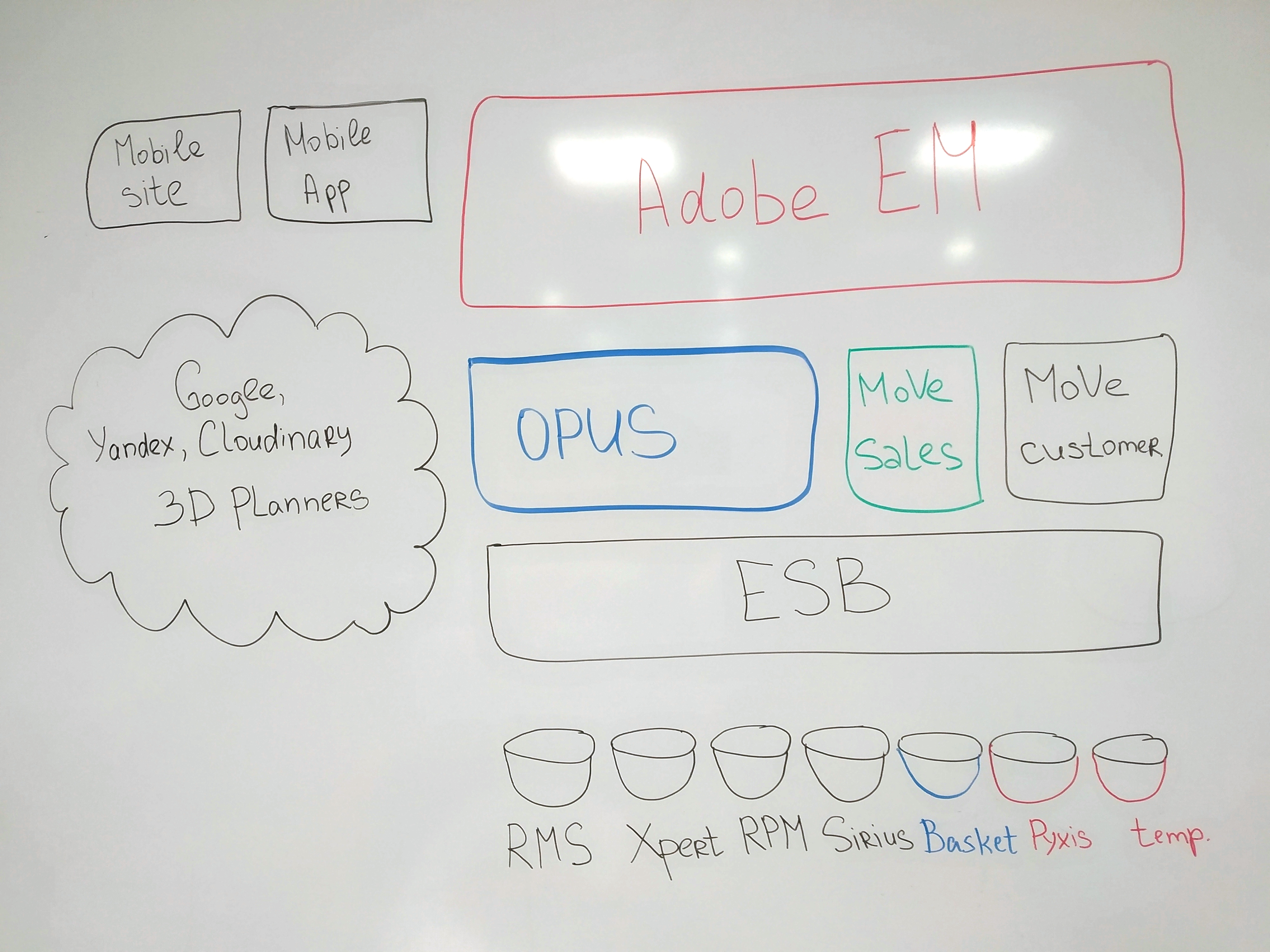

On the front end (we will write about it soon) we have a monster, AEM, and behind it on the back end were two large applications: OPUS and MoVe. The first is a database of the properties of each product (from dimensions to descriptions); the second is responsible for checkout, that is, it is the cash desk monolith. That is a great simplification, of course.

What was wrong with OPUS?

OPUS is a large distributed database. More precisely, it is software that provides an interface for accessing the database: it queries the database and, for example, runs searches or simply exposes an API so that clients do not go to the database directly. This software runs and is maintained in France. Its second feature, as we have already said, is that the queue of change requests for it is very long, and we are not the highest priority compared with the French business unit.

It was very hard to work out how our developers could make changes without the team in France, and approvals took a very long time. A feature took six months to release. At first we wanted to do our own development with their review, and then we moved to our own development, our own infrastructure, our own tests, and our own everything. Along the way we threw out almost a third of the legacy code.

But back to OPUS. Since the system stores current information on availability, characteristics, transactions, and other things, we queried it for every little thing. For the site specifically, this meant that if the user had three products in the basket, the database had to be queried from every page, because the data has to be checked for freshness. Even if you query once to fill a cache and then update it smartly, there were still quirks. And whenever a catalog page was opened, all product specifications were taken from OPUS.
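
The "query once for the cache, then update it smartly" idea can be sketched as a read-through cache with a freshness window. This is only an illustration: `ReadThroughCache`, the 30-second TTL, and `fetch_from_remote` are assumptions for the example, and the article does not say how the real cache was invalidated.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ReadThroughCache:
    """Serve product data from a local cache; refetch only when it is stale."""

    def __init__(self, fetch: Callable[[str], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch          # stand-in for a slow call to the remote system
        self._ttl = ttl_seconds      # how long an entry counts as fresh (assumed)
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get(self, sku: str) -> Any:
        now = self._clock()
        entry = self._entries.get(sku)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]          # fresh enough: no remote call
        value = self._fetch(sku)     # missing or stale: go to the source once
        self._entries[sku] = (now, value)
        return value


calls = []


def fetch_from_remote(sku: str) -> dict:
    # Hypothetical remote lookup; here it only records that it was called.
    calls.append(sku)
    return {"sku": sku, "in_stock": True}


cache = ReadThroughCache(fetch_from_remote, ttl_seconds=30.0)
for _ in range(3):                   # e.g. three page views with the same basket
    cache.get("sku-100")
print(len(calls))                    # number of remote calls actually made
```

The trade-off is the usual one: a longer TTL means fewer remote calls but a higher chance of showing stale availability.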

The logical next step was to query OPUS less often and build our own database (more precisely, microservices with their own databases). The system as a whole was called Russian PUB.

Then we built an orchestrator that can store aggregates, that is, the data already collected for building pages. When the user switches the page view from cards to a list, it is still the same aggregate; only the front end differs.

In other words, we left the original OPUS in place (it is still in France), but our almost complete mirror pulls data from it and cuts the database into pieces that are ready for assembly in the orchestrator. The orchestrator collects and stores the aggregates that other systems need (or fetches them quickly and then starts storing them). As a result, this part works as it should. About five percent of the original functionality of the French OPUS is still in use. Soon we will replace it completely.
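
To make the aggregate idea concrete, here is a minimal sketch, assuming two hypothetical microservices (`description` and `price`) and an `Orchestrator` class invented for this example. It assembles an aggregate once, keeps it, and lets two different views render the same stored data. Invalidation is deliberately left out.

```python
from typing import Callable, Dict


class Orchestrator:
    """Collects pieces from several services into one stored aggregate."""

    def __init__(self, services: Dict[str, Callable[[str], dict]]) -> None:
        self._services = services            # hypothetical per-topic microservices
        self._aggregates: Dict[str, dict] = {}

    def aggregate(self, sku: str) -> dict:
        if sku not in self._aggregates:      # assemble once, then keep it
            merged: dict = {"sku": sku}
            for name, service in self._services.items():
                merged[name] = service(sku)
            self._aggregates[sku] = merged
        return self._aggregates[sku]


def render_card(agg: dict) -> str:
    # Card view: same aggregate, one presentation.
    return f"[{agg['description']['title']}] {agg['price']['amount']} RUB"


def render_list_row(agg: dict) -> str:
    # List view: same aggregate, another presentation.
    return f"{agg['sku']} | {agg['description']['title']} | {agg['price']['amount']}"


orchestrator = Orchestrator({
    "description": lambda sku: {"title": "Steel chain, 1 m"},
    "price": lambda sku: {"amount": 120},
})
agg = orchestrator.aggregate("sku-100")
print(render_card(agg))
print(render_list_row(agg))
```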

What was wrong with MoVe?

Nothing special, except for the fact that we decided to throw out all the code, because it:

- Was ancient and built on an old stack.

- Handled the specifics of each Leroy Merlin region in a chain of IFs.

- Was so difficult to read and maintain that the best refactoring method was "write it again and document it properly right away."

Which is what we did. Only we did not rewrite it as a monolith: we started building a microservice for each individual function around it. Each time a microservice went live, the corresponding part of MoVe's functionality was smoothly taken over. And so on, one by one, until MoVe had no functionality left. That is, it still runs, but somewhere in a vacuum and without any data flowing through it.
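
This kind of function-by-function takeover is often called the strangler fig pattern (that name is ours, not the article's). A minimal sketch, assuming a hypothetical `FunctionRouter` and made-up function names such as `print_receipt`: calls go to a microservice if one has been registered for that function, and fall through to the legacy monolith otherwise.

```python
from typing import Callable, Dict, Iterable


class FunctionRouter:
    """Routes each function to a new service if migrated, else to the monolith."""

    def __init__(self, legacy: Callable[[str, dict], str]) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Callable[[dict], str]] = {}

    def migrate(self, function_name: str, handler: Callable[[dict], str]) -> None:
        # From now on this function is served by the new microservice.
        self._migrated[function_name] = handler

    def call(self, function_name: str, payload: dict) -> str:
        handler = self._migrated.get(function_name)
        if handler is not None:
            return handler(payload)
        return self._legacy(function_name, payload)

    def legacy_is_idle(self, all_functions: Iterable[str]) -> bool:
        # True once every known function has been taken over.
        return all(name in self._migrated for name in all_functions)


def legacy_monolith(function_name: str, payload: dict) -> str:
    # Stand-in for the old application.
    return f"legacy:{function_name}"


router = FunctionRouter(legacy_monolith)
router.migrate("print_receipt", lambda payload: "microservice:print_receipt")
print(router.call("print_receipt", {}))
print(router.call("apply_discount", {}))
```

Once `legacy_is_idle` returns true for the full list of functions, the monolith receives no traffic, which matches the "still runs, but in a vacuum" state described above.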

Since we built the platform from pieces, the project was named Lego.

Lego completely replaced this middle layer. To clarify the terms: the real back end is the legacy bus, file systems, databases, and other things close to the infrastructure level. The large applications around it and the microservices with business logic are the middle layer. Presentation is the front end.

Why did all this need rewriting?

Because for the 15 years since opening in Russia we had lived with a separate customer database for each instance, and this suited no one. There was no synchronization either. In other countries they still live like that.

The parent company in France built the common logistics, and we reused the Pixis system: a single repository of receipts, that is, customer orders, in which one store can see the orders of another store. But this did not fully solve the omnichannel order problem, so we had to consolidate the database and process orders in one place. That was the main reason.

The second reason was the federal law on cash registers: with the development (and testing) timelines of a common system for all countries, we would have been paying fine after fine.

A roughly similar approach had already been tried in Brazil: they launched Leroy Merlin there without any software from the parent company, and they succeeded. That was before our decision to split off. By the way, they commit a lot to inner source, and their development is very fast.

In practice, Pixis allowed an order to be placed only from the cash register. We changed the situation in three stages:

- First we built a mobile application for store employees, which greatly simplifies their work. On top of it, we began building an ecosystem in which interfaces are separated from logic.

- While everything was being set up, online orders were entered into the cash desk by hand.

- We rolled out microservices one by one, and they replaced everything in the middle.

Why did we need to start with the store app? Because, again, our processes differ from those in France. For example, a customer decides to buy six meters and ten centimeters of chain in a store. The sales assistant cuts it and hands over a document stating the length and the price. The customer takes this slip to the cashier and pays there. Logically, the sale should be made by the sales assistant rather than at the cash desk, but in practice the interesting part starts at the cash desk, where you need to have both the goods and the slip.

Ultimately, we are heading toward being a platform for placing orders. For example, on top of our main system we have already added tradesman services (you buy a kitchen and order installation from an external tradesman, whom we find and whose work we guarantee) and a marketplace (buying directly from a supplier across a wider range). Soon there should also be an affiliate program so that our blocks can be embedded anywhere, something like Amazon stores embedded in blogs, only more versatile.

How was the replacement decision made?

Step I. Refine the business model.

We checked, and indeed: the model used in Russia, low prices every day, is successful. Leroy Merlin in Europe is significantly more expensive, but in Russia this is our niche: a home improvement store where you can reliably find goods at the best price.

Step II. Create a customer scenario.

That is, design the processes the way we want customers to interact with us, seen from the customer's point of view. In other words, form a single vision of who we want to be in a few years and what that looks like architecturally.

Step III. Build an architecture.

Break this vision down into concrete technical specifications and a more detailed architecture. This came to 110 projects, which we divided into five categories over five years.

Then we formed specialized teams. Most often these were our own people plus a contractor. At first we suffered a lot from this: when going to production, we did not really understand how to digest such a large volume of changes. Then we started building a common approach to tasks and gradually increased the share of in-house development.

Where an error would be critical, we worked NASA-style: failure is simply not an option. This covers everything to do with money transactions.

Where failing was acceptable as long as we recovered quickly, we used an approach close to Google's SRE: iterative, with some blunders, but projects could be delivered as fast as possible. Much of our work is done this way now, and it is a great way to develop.

The third approach is innovation. We set up a sandbox for ideas so that the first MVPs can be built quickly with internal resources and tested fast and cheaply. This is real "try fast, fail fast". It let the people who came up with a promising project get a budget and the authority to run it.

The second important focus was geographic expansion. Back then we were opening 20 stores a year (now a little fewer). Six thousand employees, many regions. We had to rewrite the entire supply chain and quickly develop processes for bringing up store infrastructure.

In 2017, we decided to become a platform for construction orders: this is a promising strategy for several years to come.

For geographic growth, we needed a large IT back office to support the growth of the company and of the supply chain. For omnichannel (a unified order), we needed a different level of SLA for internal systems, real-time processing, microservices, and synchronization between hundreds of subsystems. This is a different level of IT maturity altogether. For the platform, the speed of change matters as well.

When this was just starting, everyone thought that agile would save the world. With contractors, though, agile may not be as effective. Hence the desire to hire 200 people into the IT department.

We looked at how quickly we could implement everything without damaging the brand. Some things could be written quickly, but the supporting service was not ready in time. For example, if there is no stock information, there is no way to accept online payment, because nothing guarantees that the goods will be reserved. We broke the chain of interdependencies into several smaller ones. We now know that cycles need to be shorter, because the team's competencies still matter. Today we build features in small pieces and collect feedback, and only the current year is in the plans. The strategy is long-term, but feature planning goes one year ahead at most, across many separate product teams.
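
The stock example above, where online payment depends on being able to reserve the goods, can be sketched as follows. This is an illustration under stated assumptions: `Stock`, `pay_online`, and the `charge` callback are hypothetical names, and a missing SKU stands in for "no stock information".

```python
from typing import Callable, Dict, Optional


class Stock:
    """In-memory stand-in for a stock service."""

    def __init__(self, on_hand: Dict[str, int]) -> None:
        self._on_hand = dict(on_hand)

    def available(self, sku: str) -> Optional[int]:
        return self._on_hand.get(sku)        # None means "no stock information"

    def reserve(self, sku: str, qty: int) -> bool:
        available = self._on_hand.get(sku)
        if available is None or qty <= 0 or available < qty:
            return False
        self._on_hand[sku] = available - qty
        return True

    def release(self, sku: str, qty: int) -> None:
        self._on_hand[sku] = self._on_hand.get(sku, 0) + qty


def pay_online(stock: Stock, sku: str, qty: int, charge: Callable[[], bool]) -> str:
    # Reserve first: without a reservation we must not take the money.
    if not stock.reserve(sku, qty):
        return "rejected"
    if not charge():
        stock.release(sku, qty)              # payment failed: give the goods back
        return "payment_failed"
    return "paid"


stock = Stock({"sku-100": 3})
print(pay_online(stock, "sku-100", 2, charge=lambda: True))
print(pay_online(stock, "sku-100", 2, charge=lambda: True))
print(pay_online(stock, "sku-999", 1, charge=lambda: True))
```

The dependency is visible in the code: the payment feature cannot ship before a stock service with a working `reserve` exists, which is the kind of interdependency the planning had to account for.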