Big data - big responsibility, big stress and big money

The term Big Data is spoiled by modern fantastic exaggeration of new things. As AI enslaves people and the blockchain builds an ideal economy - so big data will let you know absolutely everything about everyone and see the future.

But reality, as always, is more boring and pragmatic. There is no magic in big data — as there is nowhere — there simply is so much information and connections between different data that it takes too long to process and analyze everything in the old ways.

New methods are emerging. New professions are with them. The dean of Big Data analytics department at GeekBrains Sergey Shirkin told what kind of profession they are, where they are needed, what they need to do and what they need to be able to do. What tools are used and how much do they usually pay to specialists.

What is Big Data?

The question “what to call big data” is rather confusing. Even in scientific journals, descriptions differ. Somewhere, millions of observations are considered “ordinary” data, and somewhere else hundreds of thousands are called large, because each of the observations has a thousand signs. Therefore, they decided to conditionally divide the data into three parts - small, medium and large - according to the simplest principle: the volume that they occupy.

Small data is a few gigabytes. Medium - everything about a terabyte. Big data is about a petabyte. But this did not remove the confusion. Therefore, the criterion is even simpler: everything that does not fit on the same server is big data.

Small, medium, and big data have different operating principles. Big data is usually stored in a cluster on several servers at once. Because of this, even simple actions are more complicated.

For example, a simple task is to find the average value of a quantity. If this is small data, we simply add up and divide by the quantity. And in big data we cannot collect all the information from all servers at once. It's complicated. Often, you don’t need to pull data to yourself, but send a separate program to each server. After the work of these programs, intermediate results are formed, and the average value is determined by them.

Sergey Shirkin

What big data companies

The first with big data began to work mobile operators and search engines. Search engines became more and more inquiries, and the text is heavier than numbers. Working with a paragraph of text takes longer than with a financial transaction. The user expects the search engine to complete the request in a split second - it is unacceptable for it to work even for half a minute. Therefore, the search engines first began to work with parallelization when working with data.

A little later, various financial organizations and retail joined. The transactions themselves are not so voluminous, but big data appears due to the fact that there are a lot of transactions.

The amount of data is growing at all. For example, banks had a lot of data before, but they did not always require principles of work, as with large ones. Then banks began to work more with customer data. They began to come up with more flexible deposits, loans, different tariffs, and they began to analyze transactions more closely. This already required quick ways to work.

Now banks want to analyze not only internal information, but also external information. They want to receive data from the same retail, they want to know what a person spends money on. Based on this information, they are trying to make commercial offers.

Now all the information is connected with each other. Retail, banks, telecom operators and even search engines - everyone is now interested in each other's data.

What a Big Data Specialist Should Be

Since the data is located on a cluster of servers, a more complex infrastructure is used to work with them. This puts a big burden on the person who works with her - the system must be very reliable.

Making one server reliable is easy. But when there are several of them, the probability of falling increases in proportion to the number, and the responsibility of the data engineer who works with this data also grows.

The analyst must understand that he can always receive incomplete or even incorrect data. He wrote the program, trusted in its results, and then found out that due to the fall of one server out of a thousand, part of the data was disconnected, and all conclusions were incorrect.

Take, for example, a text search. Let's say all the words are arranged in alphabetical order on several servers (if we speak very simply and conditionally). And one of them disconnected, all the words in the letter “K” disappeared. The search stopped giving out the word “Cinema”. All the news of the news disappears, and the analyst makes a false conclusion that people are no longer interested in movie theaters.

Therefore, a specialist in big data should know the principles of work from the lowest levels - servers, ecosystems, task planners - to the highest level programs - machine learning libraries, statistical analysis, and more. He must understand the principles of iron, computer equipment and everything that is configured on top of it.

For the rest, you need to know everything the same as when working with small data. We need mathematics, we need to be able to program, and we know especially well the algorithms of distributed computing, to be able to apply them to the usual principles of working with data and machine learning.

What tools are used

Since the data is stored on the cluster, a special infrastructure is needed to work with it. The most popular ecosystem is Hadoop. A lot of different systems can work in it: special libraries, planners, tools for machine learning, and much more. But first of all, this system is needed to cope with large amounts of data due to distributed computing.

For example, we are looking for the most popular tweet among data broken on a thousand servers. On one server, we would just make a table and that’s it. Here we can drag all the data to ourselves and recount. But this is not right, because for a very long time.

Therefore, there is a Hadoop with Map Reduce paradigms and the Spark framework. Instead of pulling data to themselves, they send program sections to this data. Work goes in parallel, in a thousand threads. Then we get a selection of thousands of servers based on which you can choose the most popular tweet.

Map Reduce is an older paradigm; Spark is newer. With its help, data is extracted from clusters, and machine learning models are built in it.

What professions there are in the field of big data

The two main professions are analysts and data engineers.

The analyst primarily works with information. He is interested in tabular data, he is engaged in models. His responsibilities include aggregation, purification, addition and visualization of data. That is, the analyst is the link between raw information and business.

The analyst has two main areas of work. First, he can transform the information received, draw conclusions and present it in an understandable way.

The second is that analysts develop applications that will work and produce the result automatically. For example, make a forecast on the securities market every day.

Data engineer- This is a lower level specialty. This is a person who must ensure the storage, processing and delivery of information to the analyst. But where there is supply and cleaning - their responsibilities may overlap.

The data engineer gets all the hard work. If the systems failed, or one of the servers disappeared from the cluster, it connects. This is a very responsible and stressful job. The system can shut down both on weekends and after hours, and the engineer must take prompt action.

These are two main professions, but there are others. They appear when parallel computing algorithms are added to tasks related to artificial intelligence. For example, an NLP engineer. This is a programmer who is engaged in natural language processing, especially in cases where you need not just to find words, but to grasp the meaning of the text. Such engineers write programs for chatbots and conversational systems, voice assistants and automated call centers.

There are situations when it is necessary to classify billions of pictures, make moderation, filter out the excess and find a similar one. These professions overlap more with computer vision.

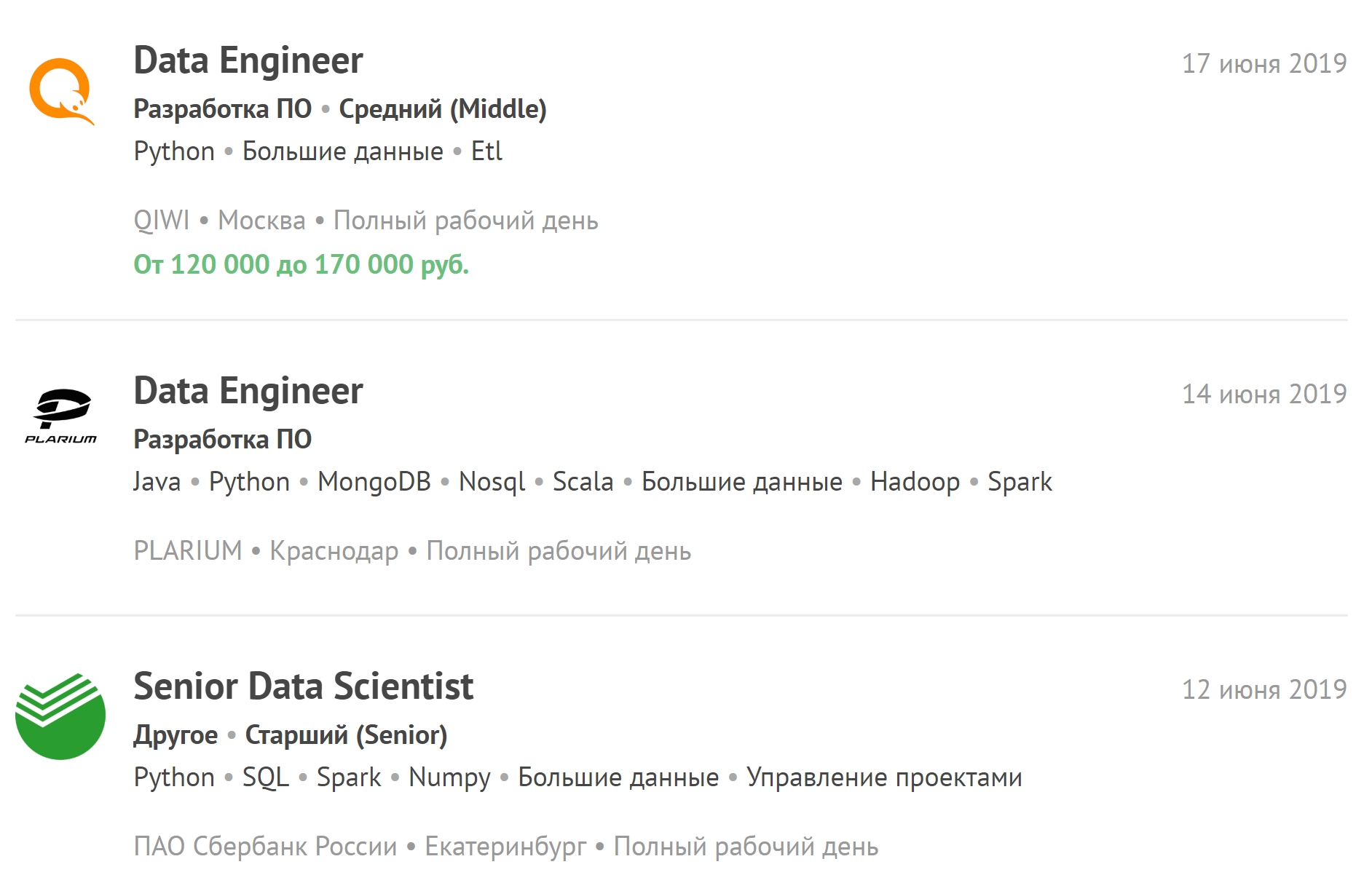

You can look at the latest vacancies related to big data and subscribe to new vacancies.

How long does the training take

We have been studying for a year and a half. They are divided into six quarters. In some, there is an emphasis on programming, in others - on working with databases, and in the third - on mathematics.

In contrast, for example, from the faculty of AI, there is less mathematics. There is no such strong emphasis on mathematical analysis and linear algebra. Knowledge of distributed computing algorithms is needed more than the principles of matanalysis.

But a year and a half is enough for real work with big data only if the person had experience with ordinary data and in general in IT. The remaining students after graduation are recommended to work with small and medium data. Only after this can specialists be allowed to work with large ones. After training, you should work as a data scientist - apply machine learning on different amounts of data.

When a person gets a job in a big company - even if he had experience - most often he will not be allowed to go to big data right away, because the price of an error there is much higher. Errors in the algorithms may not be detected immediately, and this will lead to large losses.

What salary is considered adequate for specialists on big data

Now there is a very large personnel shortage among data engineers. The work is difficult, a lot of responsibility falls on a person, a lot of stress. Therefore, a specialist with average experience receives about two hundred thousand. Junior - from one hundred to two hundred.

A data analyst may have a slightly lower starting salary. But there is no work in excess of working time, and he will not be called during non-working hours due to emergency cases.

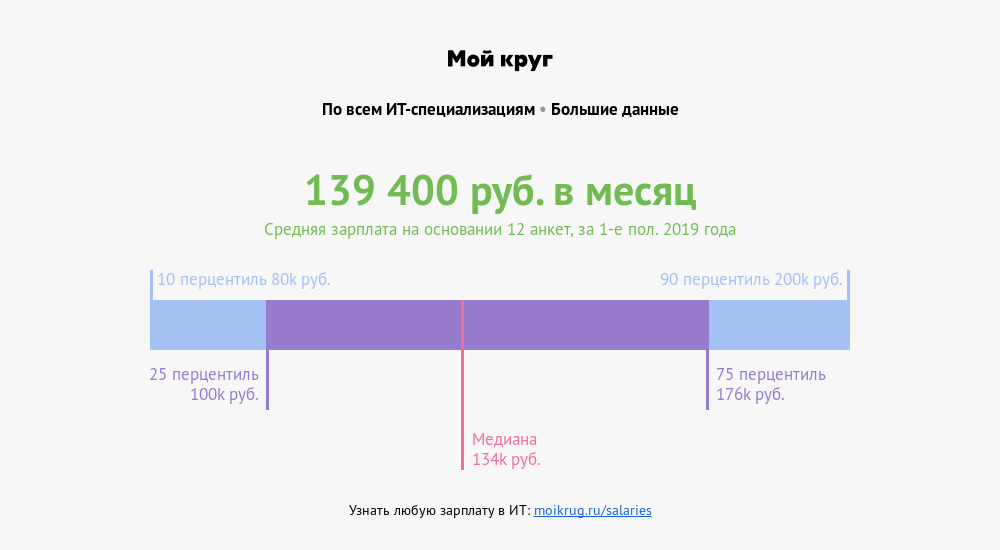

According to the “My Circle” salary cultivator, the average salary of specialists whose professions are associated with big data is 139,400 rubles . A quarter of specialists earn more than 176,000 rubles. A tenth - more than 200,000 rubles.

How to prepare for interviews

No need to delve into just one subject. At interviews they ask questions about statistics, machine learning, programming. They may ask about data structures, algorithms, and cases from real life: the server crashed, an accident happened - how to fix it? There may be questions on the subject area - something that is closer to business.

And if a person is too deep into one mathematics, and during the interview did not do a simple programming task, then the chances of finding a job are reduced. It is better to have an average level in each direction than to show yourself well in one, and fail completely in the other.

There is a list of questions asked at 80 percent of the interviews. If this is machine learning, they will definitely ask about gradient descent. If statistics - you will need to talk about correlation and hypothesis testing. Programming is likely to give a small task of medium complexity. And you can easily get your hands on tasks - just solve them more.

Where to gain experience on your own

Python can be pulled up on Pitontutyu , work with the database - on SQL-EX . There are given tasks for which in practice they learn to make requests.

Higher Mathematics - Mathprofi . There you can get clear information on mathematical analysis, statistics and linear algebra. And if it’s bad with the school curriculum, that is, youclever.org .

Distributed computing will work out only in practice. Firstly, this requires infrastructure, and secondly, algorithms can quickly become obsolete. Now something new is constantly appearing.

What trends does the community discuss

Another area is gradually gaining strength, which can lead to a rapid increase in the amount of data - the Internet of Things (IoT). Data of this kind comes from the sensors of devices connected in a network, and the number of sensors at the beginning of the next decade should reach tens of billions.

The devices are very different - from household appliances to vehicles and industrial machines, a continuous flow of information from which will require additional infrastructure and a large number of highly qualified specialists. This means that in the near future there will be an acute shortage of data engineers and big data analysts.