The Autonomous Future of DBMS

Who do you think would better configure PostgreSQL - DBA or ML algorithm? And if the second, then is it time for us to think about what to do when cars replace us. Or it won’t come to that, and important decisions should still be made by people. Probably, the level of isolation and requirements for transaction stability should remain with the administrator. But indexes can soon be trusted to determine the machine yourself.

Andy Pavlo on HighLoad ++ spoke about the DBMS of the future, which you can "touch" right now. If you missed this speech or prefer to receive information in Russian - under the cut is the translation of the speech.

It will be about the Carnegie Mellon University project on the creation of autonomous DBMS. The term “autonomous” means a system that can automatically deploy, configure, configure itself without any human intervention. It may take about ten years to develop something like this, but that is what Andy and his students are doing. Of course, machine learning algorithms are needed to create an autonomous DBMS, however, in this article we will focus only on the engineering side of the topic. Consider how to design software to make it standalone.

About the speaker: Andy Pavlo, associate professor at Carnegie Mellon University, under his leadership creates a "self-managed" DBMS PelotonDB , as well as ottertune , which helps to tune PostgreSQL and MySQL configs using machine learning. Andy and his team are now true leaders in self-managing databases.

The reason we want to create an autonomous DBMS is obvious. Managing these DBMS tools is a very expensive and time-consuming process. The average DBA salary in the United States is approximately 89 thousand dollars a year. Translated into rubles, 5.9 million rubles a year are obtained. This really large amount you pay people to just keep an eye on your software. About 50% of the total cost of using the database is paid for by the work of such administrators and related staff.

When it comes to really big projects, such as we discuss on HighLoad ++ and which use tens of thousands of databases, the complexity of their structure goes beyond human perception. Everyone approaches this problem superficially and tries to achieve maximum performance by investing minimal effort in tuning the system.

The idea of autonomous DBMSs is not new; their history dates back to the 1970s, when relational databases first began to be created. Then they were called self-adaptive databases (Self-Adaptive Databases), and with their help they tried to solve the classic problems of database design, over which people still struggle to this day. This is the choice of indexes, the partition and construction of the database schema, as well as data placement. At that time, tools were developed that helped database administrators deploy the DBMS. These tools, in fact, worked just like their modern counterparts work today.

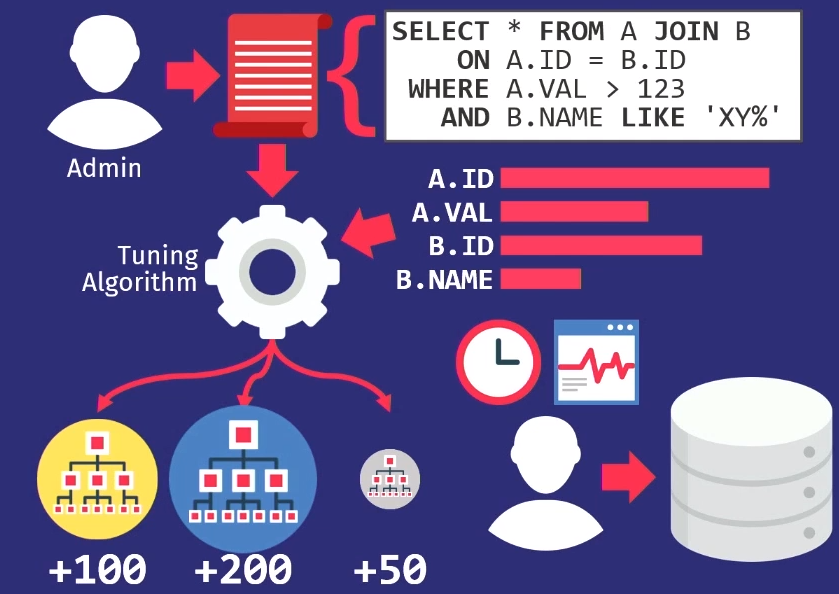

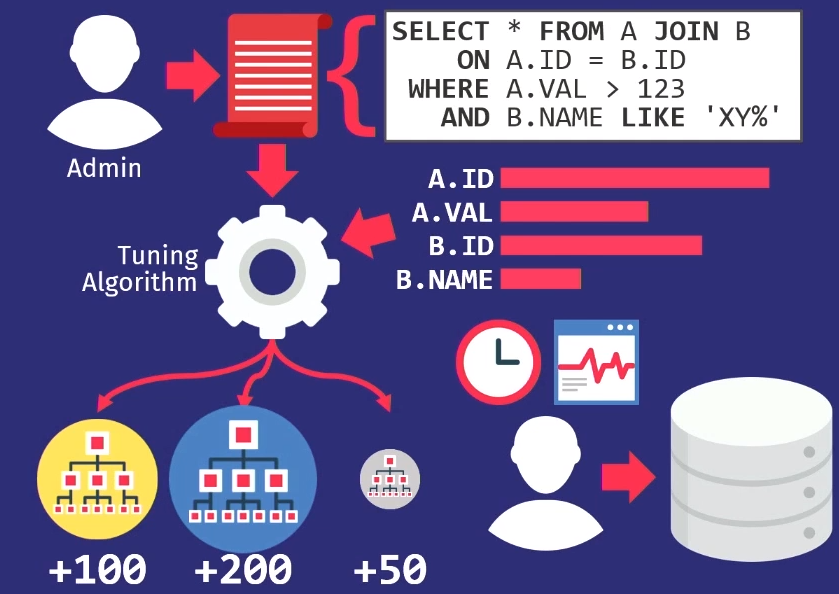

Administrators track requests executed by the application. They then pass this query stack to the tuning algorithm, which builds an internal model of how the application should use the database.

If you create a tool that helps you automatically select indexes, then build charts from which you can see how often each column is accessed. Then transfer this information to the search algorithm, which will look through many different locations - will try to determine which columns can be indexed in the database. The algorithm will use the internal cost model to show that this particular one will give better performance compared to other indexes. Then the algorithm will give a suggestion about what changes in the indices should be made. At this moment, it’s time to participate, consider this proposal and not only decide whether it is right, but also choose the right time for its implementation.

DBAs should know how the application is used when there is a drop in user activity. For example, on Sunday at 3:00 a.m. the lowest level of database queries, so at this time you can reload the indexes.

As I said, all the design tools of the time worked the same way - this is a very old problem . The scientific supervisor of my scientific supervisor wrote an article about automatic index selection in 1976.

In the 1990s, people, in fact, worked on the same problem, only the name changed from adaptive to self-tuning databases.

The algorithms got a little better, the tools got a little better, but at a high level they worked the same way as before. The only company at the forefront of the movement of self-tuning systems was Microsoft Research with their automatic administration project. They developed truly wonderful solutions, and in the late 90s and early 00s they again presented a set of recommendations for setting up their database.

The key idea that Microsoft put forward was different from what it was in the past - instead of having the customization tools support their own models, they actually simply reused the query optimizer cost model to help determine the benefits of one index against another. If you think about it, it makes sense. When you need to know whether a single index can really speed up queries, it does not matter how large it is if the optimizer does not select it. Therefore, the optimizer is used to find out if he will actually choose something.

In 2007, Microsoft Research published an article that set out a retrospective of research over ten years. And it covers well all the complex tasks that arose on every segment of the path.

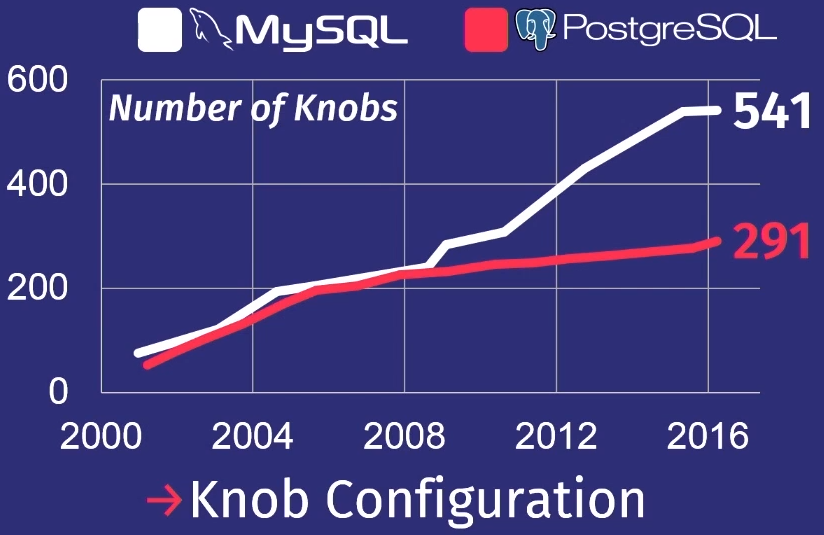

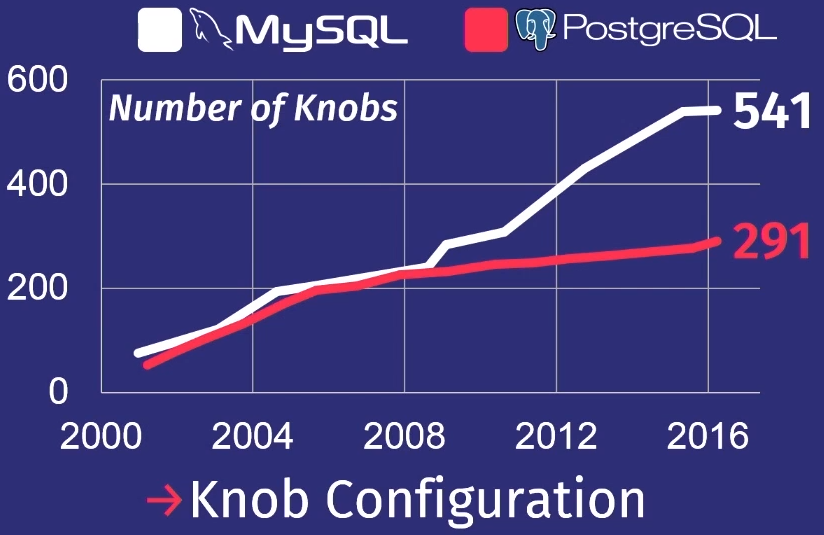

Another task that has been highlighted in the era of self-tuning databases is how to make automatic adjustments to regulators. A database controller is some kind of configuration parameter that changes the behavior of the database system at runtime. For example, a parameter that is present in almost every database is the size of the buffer. Or, for example, you can manage settings such as blocking policies, the frequency of disk cleanup, and the like. Due to the significant increase in the complexity of DBMS regulators in recent years, this topic has become relevant.

To show how bad things are, I’ll give a review that my student did after studying a lot of PostgreSQL and MySQL releases.

Of course, not all regulators actually control the task execution process. Some, for example, contain file paths or network addresses, so only a person can configure them. But a few dozen of them can really affect performance. No man can hold so much in his head.

Further we find ourselves in the era of the 2010s, in which we are to this day. I call it the era of cloud databases. During this time, much work has been done to automate the deployment of a large number of databases in the cloud.

The main thing that worries major cloud providers is how to host a tenant or migrate from one to another. How to determine how much resources each tenant will need, and then try to distribute them among the machines so as to maximize productivity or meet SLA with minimal cost.

Amazon, Microsoft and Google solve this problem, but mainly at the operational level. Only recently, cloud service providers began to think about the need to configure individual database systems. This work is not visible to ordinary users, but it determines the high level of the company.

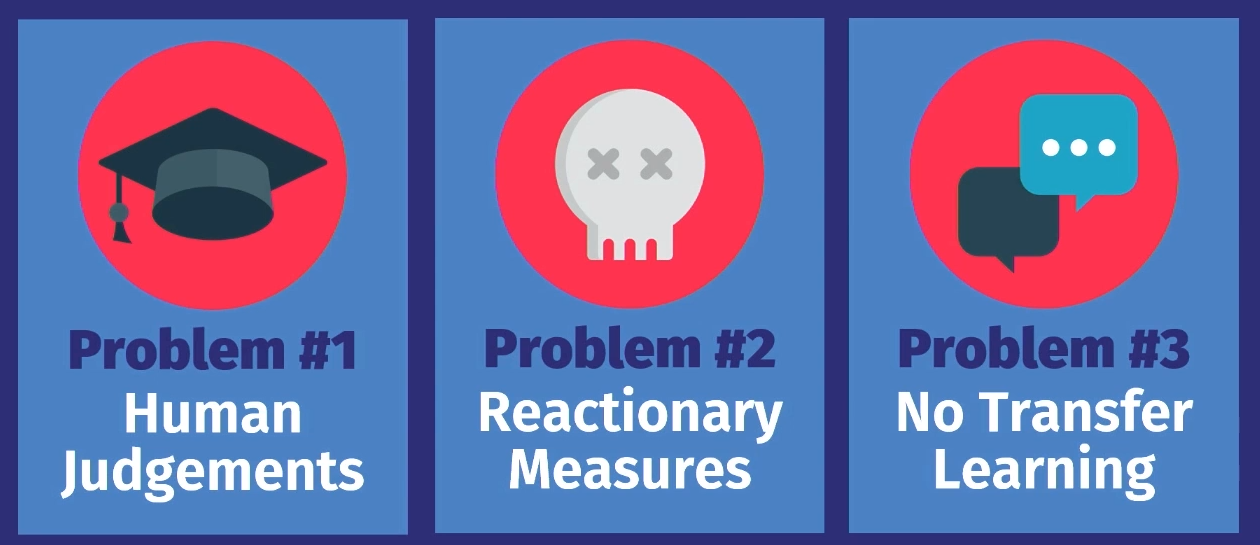

Why today we cannot have a truly autonomous system of self-government? There are three reasons for this.

First, all of these tools, except for the distribution of workloads from cloud service providers, are only advisory in nature . That is, on the basis of the calculated option, a person must make a final, subjective decision as to whether such a proposal is correct. Moreover, it is necessary to observe the operation of the system for some time to decide whether the decision made remains correct as the service develops. And then apply the knowledge to your own internal decision-making model in the future. This can be done for one database, but not for tens of thousands.

The next problem is that any action is only a reaction to something. In all the examples that we examined, the work goes with data on the past workload. There is a problem, records about it are transferred to the instrument, and he says: “Yes, I know how to solve this problem.” But the solution only concerns a problem that has already occurred. The tool does not predict future events and accordingly does not offer preparatory actions. A person can do this, and does it manually, but tools cannot.

The final reason is that none of the solutions has knowledge transfer.. Here is what I mean: for example, let's take a tool that worked in one application on the first database instance, if you put it in another same application on another database instance, it could, based on the knowledge gained when working with the first database data help set up a second database. In fact, all tools start working from scratch, they need to re-get all the data about what is happening. Man works in a completely different way. If I know how to configure one application in a certain way, I can see the same patterns in another application and, possibly, configure it much faster. But not one of these algorithms, not one of these tools still works this way.

Why am I sure it's time for change? The answer to this question is about the same as the question of why super-arrays of data or machine learning have become popular. Equipment is becoming better quality : production resources are increasing, storage capacity is growing, hardware capacity is increasing, which speeds up calculations for learning machine learning models.

Advanced software tools have become available to us. Previously, you needed to be an expert in MATLAB or low-level linear algebra to write some machine learning algorithms. Now we have Torch and Tenso Flow, which make ML available, and, of course, we have learned to better understand the data. People know what kind of data may be needed for decision-making in the future, therefore they do not discard as much data as before.

The goal of our research is to close this circle in autonomous DBMSs. We can, like the previous tools, propose solutions, but instead of relying on the person - whether the decision is right when exactly you need to deploy it - the algorithm will do this automatically. And then with the help of feedback, he will study and become better over time.

I want to talk about the projects that we are currently working at at Carnegie Mellon University. In them, we approach the problem in two different ways.

In the first - OtterTune - we look for ways to tune the database, treating them as black boxes. That is, ways to tune existing DBMSs without controlling the internal part of the system and observing only the response.

The Peloton project is about creating new databases from scratch , from scratch, given the fact that the system should work autonomously. What adjustments and optimization algorithms need to be laid down - which cannot be applied to existing systems.

Let's consider both projects in order.

The existing system adjustment project that we have developed is called OtterTune.

Imagine that the database is configured as a service. The idea is that you download the runtime metrics of heavy database operations that eat up all the resources, and the recommended configuration of regulators comes in response, which, in our opinion, will increase productivity. It can be a delay time, bandwidth or any other characteristic that you specify - we will try to find the best option.

The main thing that is new in the OtterTune project is the ability to use data from previous sessionssettings and improve the efficiency of the next sessions. For example, take the PostgreSQL configuration, which has an application that we have never seen before. But if it has certain characteristics or uses the database in the same way as the databases that we have already seen in our applications, then we already know how to configure this application more efficiently.

At a higher level, the algorithm of work is as follows.

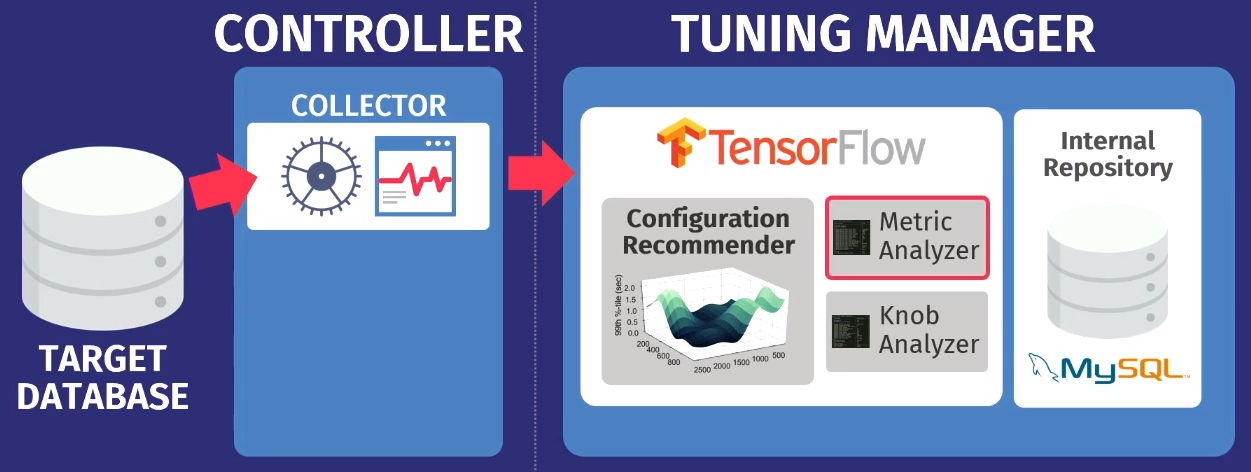

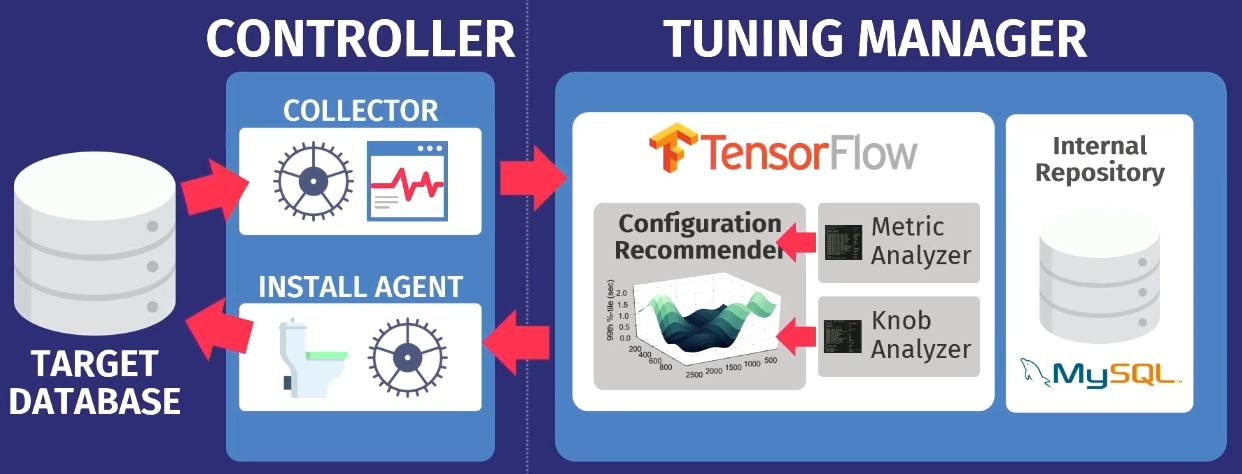

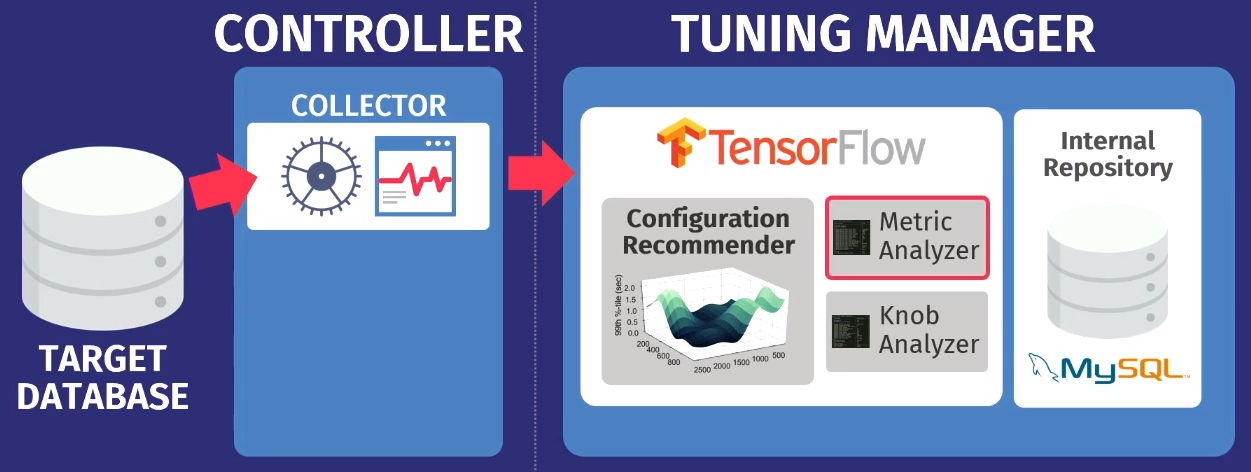

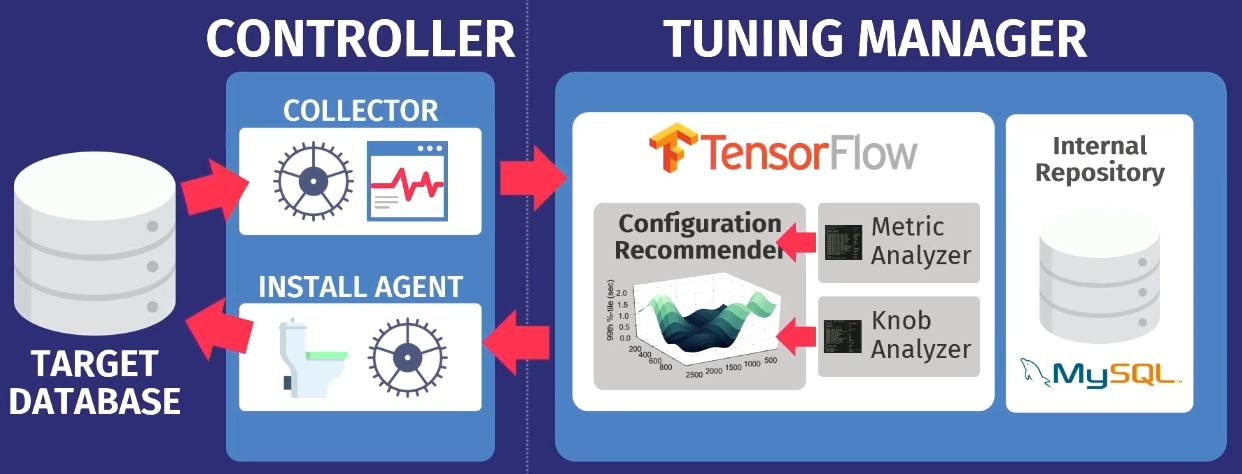

Let's say there is a target database: PostgreSQL, MySQL, or VectorWise. You must install the controller in the same domain, which will perform two tasks.

The first is performed by the so-called collector - a tool that collects data about the current configuration, i.e. query execution time metrics from applications to the database. The data collected by the collector is loaded into the Tuning Manager, a tuning service. It doesn’t matter if the database works locally or in the cloud. After downloading, the data is stored in our own internal repository, which stores all the test setup sessions ever made.

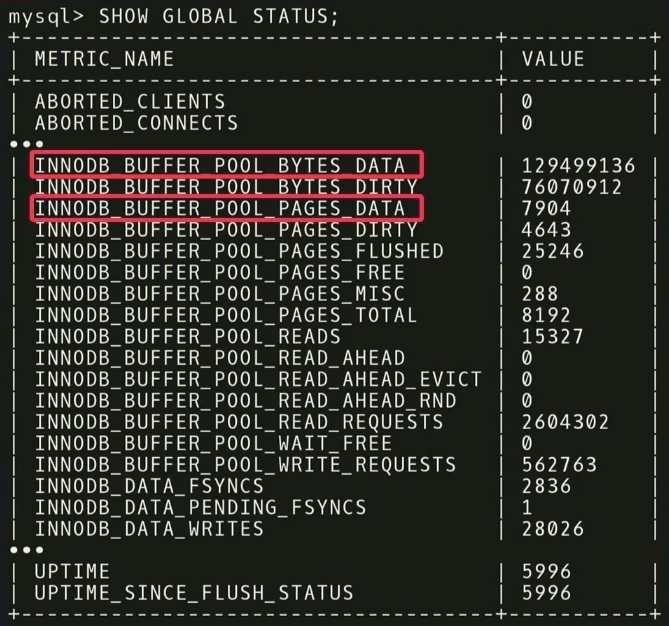

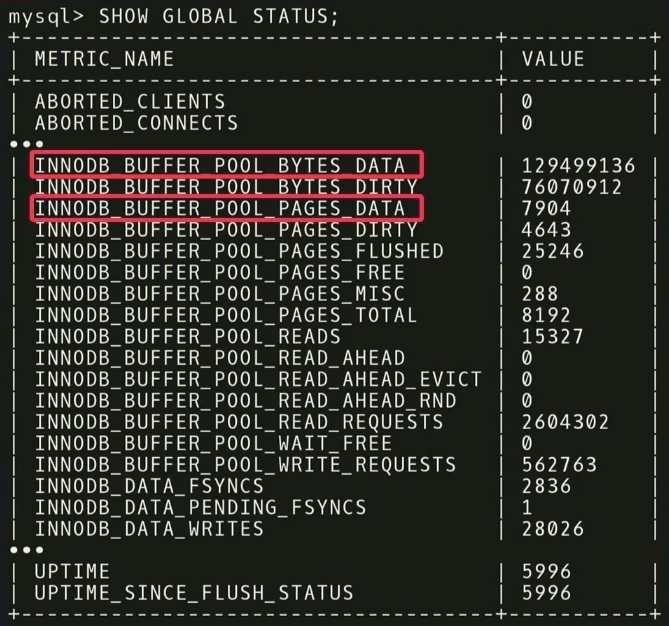

Before giving recommendations, you need to perform two steps. First, you need to look at the runtime metrics and find out which ones are actually important. In the example below, the indicators that MySQL returns to the command

For example, there are two metrics:

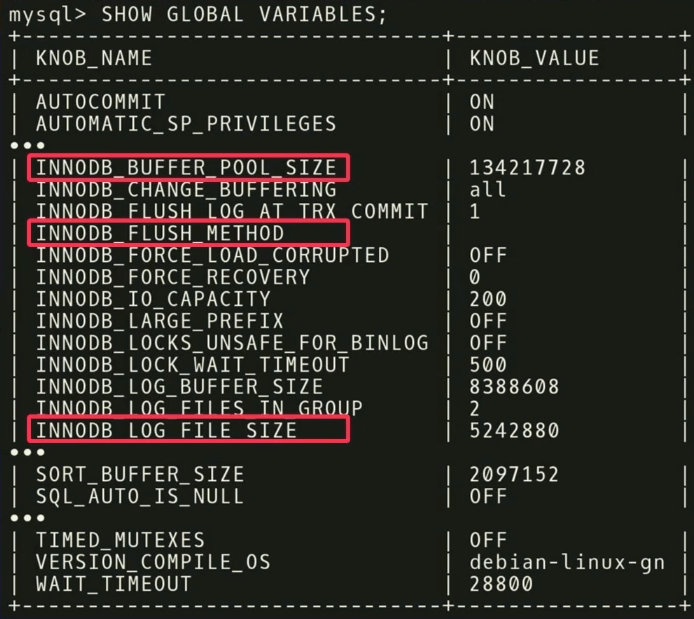

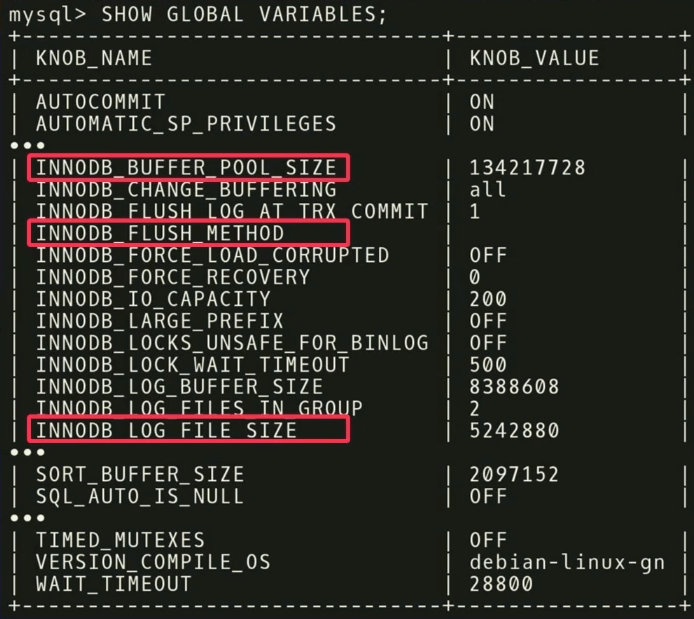

In the second stage, we do the same, only with regard to regulators. In MySQL 500 controllers, and, of course, not all of them are really significant, but different ones are important for different applications. It is necessary to conduct another statistical analysis to find out which regulators really affect the target function.

In our example, we found out that there are three regulators

There are other interesting points related to regulators. In the screenshot there is a knob with a name

After analysis, the data is transferred to our configuration algorithm using the Gaussian process model - a rather old method. You have probably heard of deep learning, we are doing something similar, but without deep networks. We use GPflow- a package for working with models of the Gaussian process, developed in Russia based on TensorFlow. The algorithm issues a recommendation that should improve the objective function; this data is transferred back to the installation agent working inside the controller. The agent applies the changes by performing a reset — unfortunately, it will have to restart the database — and then the process repeats again. Some more runtime metrics are collected, transferred to the algorithm, an analysis of the possibility of improving and increasing productivity is carried out, a recommendation is issued, and so on, again and again.

A key feature of OtterTune is that algorithms only need information about runtime metrics as input. We do not need to see your data and user requests. We just need to track read and write operations. This is a powerful argument - data belonging to you or your customers will not be disclosed to third parties. We do not need to see any requests, the algorithm works based only on runtime metrics, because it gives recommendations for regulators, and not for physical design.

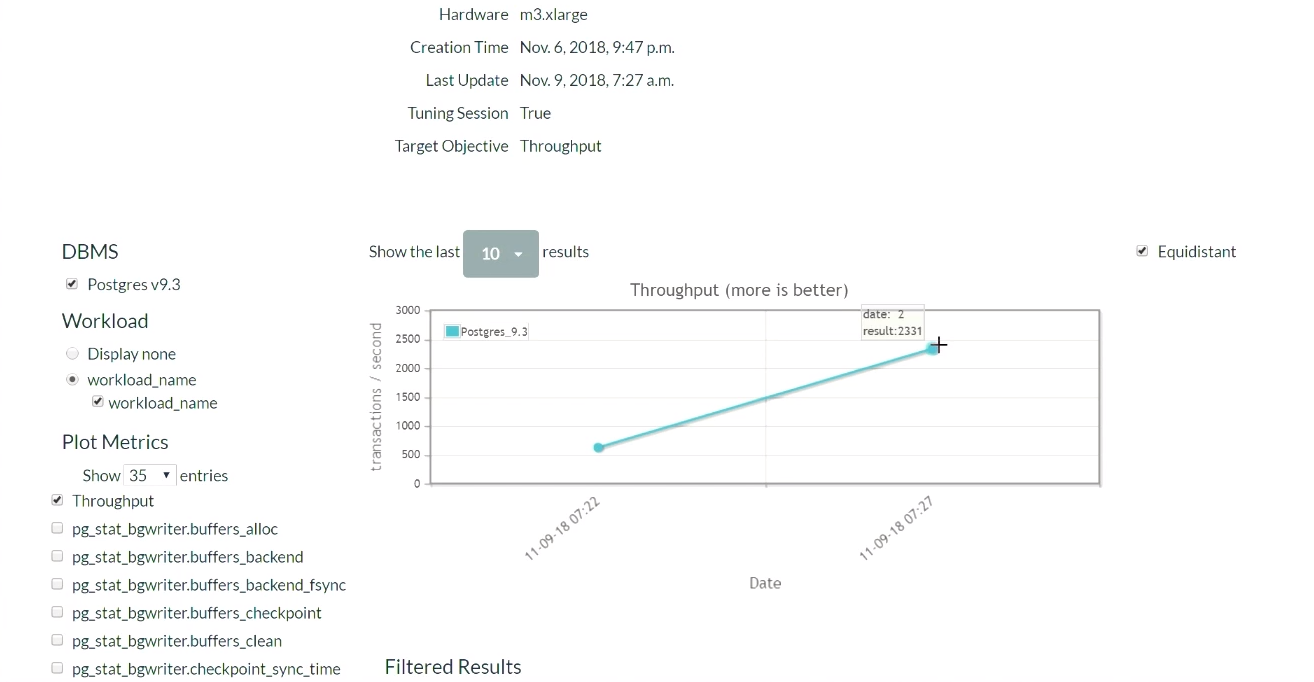

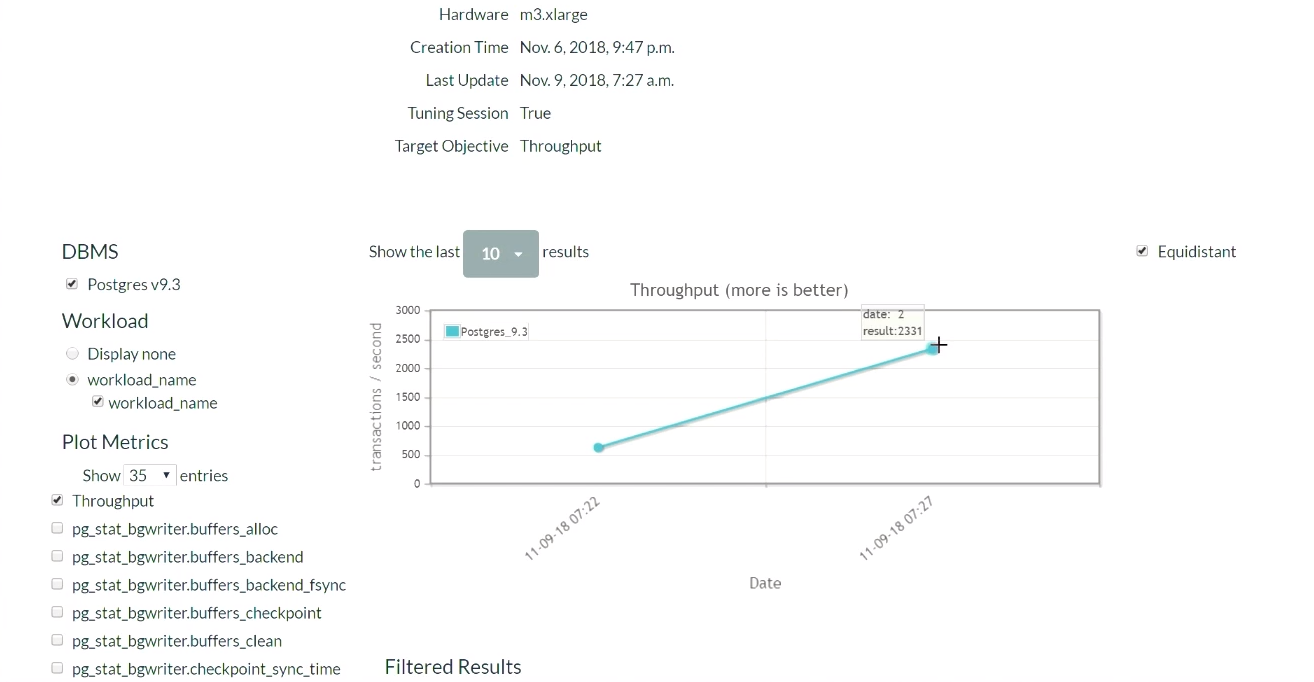

Let's take a look at the OtterTune demo. On the project’s website, we’ll run Postgres 9.6 and load the system with the TPC-C test. Let's start with the initial PostgreSQL configuration, which is deployed when installed on Ubuntu.

First, run the TPC-C test for five minutes, collect the necessary runtime metrics, upload them to the OtterTune service, get recommendations, apply the changes, and then repeat the process. We will come back to this later. The database system runs on one computer, the Tensor Flow service on another, and loads the data here.

Five minutes later, we refresh the page (a demonstration of this part of the results begins at this moment ). When we first started, in the default configuration for PostgreSQL, there were 623 transactions per second. Then, after receiving the recommendation and applying the changes once, the number of transactions increased to 2300 per second. It is worth recognizing that this demonstration has already been launched several times, so the system already has a set of previously collected data. That is why the solution is so fast. What would happen if the system did not have such previously collected data? This algorithm is a kind of step-by-step function, and gradually it would get to this level.

After some time and five iterations, the best result was 2600. We went from 600 transactions per second, and were able to reach a value of 2600. A small drop appeared because the algorithm decided to try a different way of adjusting the regulators after it achieved good results. The result was a margin, so a large drop in performance did not happen. Having received a negative result, the algorithm reconfigured and began to look for other ways of regulation.

We conclude that you should not be afraid of starting a bad strategy into work, because the algorithm will explore the solution space and try on various configurations to achieve the conditions of the SLA agreement. Although you can always configure the service so that the algorithm selects only improving solutions. And over time, you will receive all the best and best results.

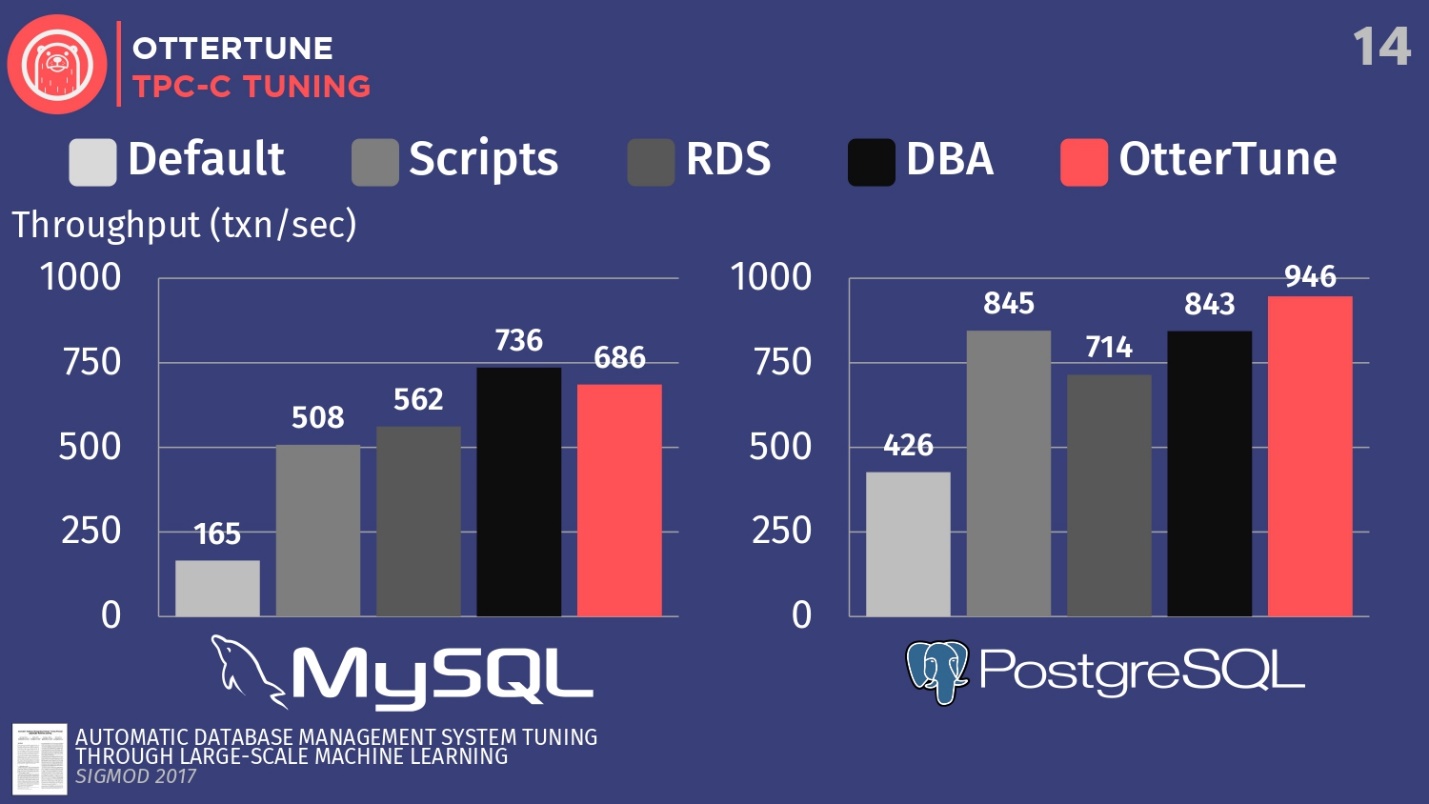

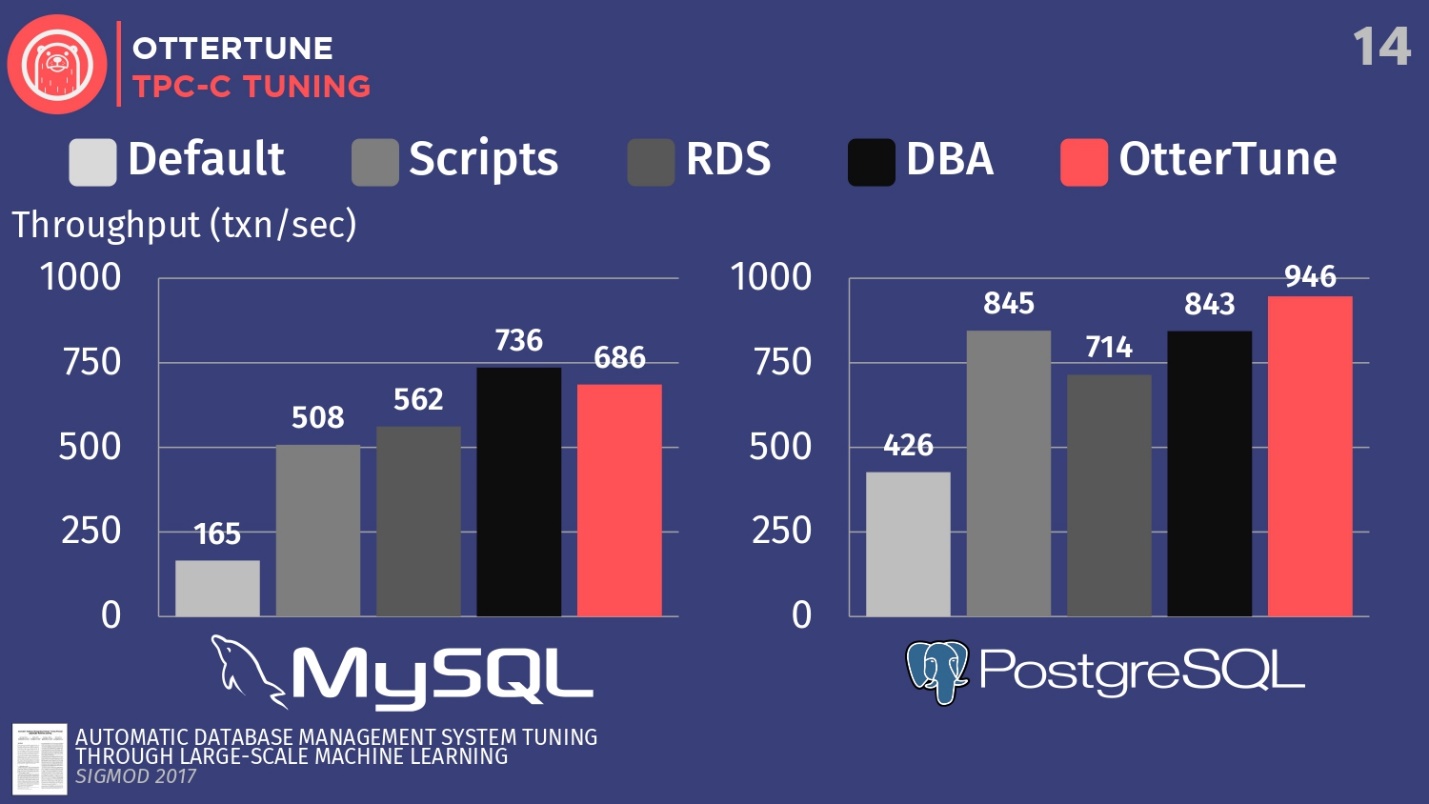

Now back to the topic of our conversation. I will tell you about the existing results from an article published in Sigmod. We configured MySQL and PostgreSQL for TPC-C using OtterTune, in order to increase throughput.

Compare the configurations of these DBMSs, deployed by default during the first installation on Ubuntu. Next, we’ll run some open source configuration scripts that you can get from Percona and some other consulting firms working with PostgreSQL. These scripts use heuristic procedures, such as the rule that you must set a certain buffer size for your hardware. We also have a configuration from Amazon RDS, which already has Amazon presets for the equipment you are working on. Then compare this with the result of manually setting up expensive DBAs, but with the condition that they have 20 minutes and the ability to set any parameters you want. And the last step is to launch OtterTune.

For MySQL, you can see that the default configuration is far behind, scripts work a little better, RDS is a little better. In this case, the best result was shown by the database administrator - the leading MySQL administrator from Facebook.

OtterTune lost to man . But the fact is that there is a certain regulator that disables the synchronization of log cleaning, and this is not important for Facebook. However, we have denied access to this OtterTune regulator because the algorithms do not know if you agree to lose the last five milliseconds of data. In our opinion, this decision should be made by a person. Perhaps Facebook agrees with such losses, we do not know this. If we adjust this regulator in the same way, we can compete with the person.

In the case of PostgreSQL, configuration scripts work well. RDS does a little worse. But, it is worth noting that OtterTune indicators in this surpassed the person. The histogram shows the results obtained after the database was set up by the Wisconsin Senior PostgreSQL Expert Advisor. In this example, OtterTune was able to find the optimal balance between the size of the log file and the size of the buffer pool, balancing the amount of memory used by these two components and ensuring the best performance.

The main conclusion is that the OtterTune service uses such algorithms and machine learning that we can achieve the same or better performance compared to the very, very expensive DBAs. And this applies not only to one instance of the database, we can scale the work to tens of thousands of copies, because it is just software, just data.

The second project that I would like to talk about is called Peloton. This is a completely new data system that we build from scratch at Carnegie Mellon. We call it a self-managing DBMS.

The idea is to find out what changes for the better can be made if you control the entire software stack. How to make the settings better than OtterTune can do, due to the knowledge about each fragment of the system, about the entire program cycle.

How it will work: we integrated reinforced machine learning componentsinto the database system, and we can observe all aspects of its behavior at runtime, and then give recommendations. And we are not limited to recommendations on adjusting regulators, as happens in the OtterTune service, we would like to perform the entire standard set of actions that I spoke about earlier: selecting indexes, choosing partition schemes, vertical and horizontal scaling, etc.

The name of the Peloton system is likely to change. I don’t know how in Russia, but in our USA, the term “ peloton” means “fearless” and “finish”, and in French it means “platoon”. But in the US there is a cycling companyPeloton, which has a lot of money. Every time a mention of them appears, for example, the opening of a new store, or a new advertisement on TV, all my friends write to me: “Look, they stole your idea, stole your name.” The ads show beautiful people who ride their exercise bikes, and we simply cannot compete with this. And recently, Uber announced a new resource planner called Peloton, so we can no longer call it our system. But we do not have a new name yet, so in this story I will still use the current version of the name.

Consider how this system works at a high level. For example, take the target database, I repeat, this is our software, this is what we work with. We collect the same workload history that I showed earlier. The difference is that we are going to generate forecasting models that allow us to predict what future workload cycles will be, what future workload requirements will be. That is why we call this system a self-managing DBMS.

An unmanned vehicle looks in front of itself and can see what is located in front of it on the road, can predict how to get to its destination. A stand-alone database system works in the same way. You should be able to look to the future and draw conclusions about how the workload will look in a week or an hour. Then we pass this predicted data to the planning component - we call it the brain - running on Tensor Flow.

The process echoes the work of AlphaGo from London as part of the Google Deep Mind project, at the top level it all works in a similar scenario: Monte Carlo searches the tree, the search result is various actions that must be performed to achieve the desired goal.

The following algorithm approximately determines the operation scheme:

Do not constantly resort to the metaphor of an unmanned car, but that’s how they work. This is called the planning horizon.

After looking at the horizon on the road, we set ourselves an imaginary point to reach, and then we begin to plan a sequence of actions to reach this point on the horizon: accelerate, slow down, turn left, turn right, etc. Then we mentally discard all actions except the first one that need to be performed, perform it, and then repeat the process again. UAVs run such an algorithm 30 times per second. For databases, this process is a bit slower, but the idea remains the same.

We decided to create our own database system from scratch, rather than building something on top of PostgreSQL orMySQL because, to be honest, they are too slow compared to what we would like to do. PostgreSQL is beautiful, I love it and use it in my university courses, but it takes too much time to create indexes, because all the data comes from disks.

In analogies with automobiles, an autonomous DBMS on PostgreSQL can be compared with an unmanned wagon. The truck will be able to recognize the dog in front of the road and go around it, but not if it ran out onto the roadway directly in front of the car. Then a collision is inevitable, because the truck is not sufficiently maneuverable. We decided to create a system from scratch in order to be able to apply changes as quickly as possible and find out what the correct configuration is.

Now we have solved the first problem and published an articleabout a combination of deep learning and classical linear regression for automatic selection and prediction of workloads.

But there is a bigger problem, for which we do not yet have a good solution - action catalogs . The question is not how to choose actions, because guys from Microsoft have already done this. The question is how to determine whether one action is better than another, in terms of what happens before deployment and after deployment. How to reverse an action if the index created by a person’s command is not optimal, how can you cancel this action and indicate the reason for the cancellation. In addition, there are a number of other tasks in terms of the interaction of our own system with the outside world, for which we do not yet have a solution, but we are working on them.

By the way, I’ll tell an entertaining story about a well-known database company. This company had an automatic index selection tool, and the tool had a problem. One client constantly canceled all indexes that the tool recommended and applied. This cancellation occurred so often that the tool hung. He did not know what the further strategy of behavior should be, because any solution offered to a person received a negative assessment. When the developers turned to the client and asked: “Why do you cancel all recommendations and suggestions on the indices?”, The client replied that he simply did not like their names. People are stupid, but you have to deal with them. And for this problem, I also have no solution.

Given two different approaches to creating autonomous database systems, let’s talk now about how to design a DBMS so that it is autonomous.

Let us dwell on three topics:

Once again, back to the key points: the database system must provide the correct information to machine learning algorithms for the subsequent adoption of better decisions. The amount of useless data that we transmit should be reduced in order to increase the speed of receiving responses.

I have already said that any regulator that requires a person to make a value judgment in autonomous components should be marked as prohibited. It is necessary to mark this parameter in the settings

The algorithm may find that when disabling synchronization in the log, the speed will increase significantly and apply such a change, however, this may not be the right decision in your project.

As for regulators, which should be set automatically, additional metadata is needed on how algorithms can change such regulators.

This includes parameters such as:

For different ranges of values, it is desirable to give the algorithm a range that it can use. If there is not enough memory on the machine, then it is logical to increase the value of the regulator in 10 kilobyte intervals, but when working on a machine with a large amount of memory, an increase step of 10 gigabytes is possible. Thus, the space of solutions is reduced, and this allows the algorithms to converge faster to the answer.

It is necessary to somehow mark each regulator, which is limited by hardware resources. There are nuances, however, this must be done. For example, you need to limit the total amount of memory that applications or database systems can use so that it does not exceed the total available amount of memory.

The problem is that sometimes it makes sense to lay redundant collateral, such as with processor cores. How the algorithm will actually work with this, we just do not know yet. But we want to be able to operate with metadata about what, say, this controller refers to memory, this one to disk, and so on. Because then we can understand within which ranges we need to stay.

We believe that it is necessary to remove all the regulators that can configure themselves, if any. This problem does not occur with open source software, it relates more to vendor software.

Suppose, in the process of work, an additional external factor is discovered that is not taken into account in machine learning algorithms. So, we need to build new models taking into account this, which in itself is an additional workload: we cannot know in advance what the query will return and, again, we need to build models that predict what will happen next. If your system has something like Oracle auto-adjust knobs, then they can change their values whenever they like, and you cannot simulate this in advance.

I repeat, this model of behavior is observed only in the commercial sphere, and to maintain autonomy, all such regulators must be disabled or removed.

As for metrics, we want to uncover the hardware capabilities that underlie the database system. We are talking about the capabilities of the processor, memory speed, disk speed, network speed and the like. There should be something like an internal directory, or an information scheme that would show what the processor looks like. The reason is simple: when you deploy PostgreSQL on a specific machine and on hardware, one controller configuration will be optimal, and when using more powerful equipment, the optimal controller configuration can be very different.

By providing hardware information to our algorithms, you can use machine learning methods and extrapolate what you can achieve on this computer and what on another computer.

If there are any subcomponents that can be customized, you must be sure that all the correct metrics are provided for each individual component. As an example, consider an example of a bad system in which this does not happen properly. I am talking about RocksDB, known as MyRocks in MySQL.

RocksDB allows you to customize different families of columns. You can define several families of columns per table, and then configure the parameters for each family individually. To find out if the family is set up correctly, you need to look at the metrics and understand how the hardware was actually used. You need to make a request to the RocksDB information scheme to get statistics on column families.

To determine the performance of a DBMS, it is important not to display the families of columns and how to configure them, but the number of read and write operations performed by the system. But MyRocks does not show such information on column families. The only way to get this information - take a look at the global statistics of family columns:

In the commercial field, things are a little better than in companies that use open source. Although the latter improve this aspect from release to release, so far no one has reached the ideal.

Besides the obvious downtime, the problem is that you need to simulate the length of downtime . MySQL has a regulator that sets the size of the log file. And depending on the current and future values, downtime will change. If the size of the log file is currently 5 GB, and I change the value to 10 GB, I can start working immediately after the system restarts, because the actual file size does not change. But if you need to change 5 GB for one, then the downtime will increase - it will take a lot of time to compress the file.

It is necessary to simulate this whole process, and thus more and more time is spent on the adoption of changes. For this reason, we are building our system from scratch - PostgreSQL does not allow this.

Notifications are a very important element of work. You need to know what is happening in the models. For example, that the work slowed down because the index is being built now, or because the index just built was not optimal.

Difficulties also arise when horizontally scaling a workload. As the load increases, the system will slow down, but you still need to work with it - in the future, the system will have to comply with the SLA level. At the moment, we do not yet know how we will resolve this issue.

And the last thing I want to talk about. As you know, many data systems operate in replicated environments to achieve high availability. Therefore, naturally, the desire to profitably use additional resources to collect more data for training. This requires replicas to be different and have different configurations.

Suppose you work in a system with one master database and two replicas, and solve the problem of selecting indexes. An excellent index has been selected on the master, but in the replicas you can try other indexes, suddenly some will be better than in the master. Imagine that the index found in the first replica turned out to be really the best - it gives higher performance than on the index in the master database, respectively, you can move it and use it further on the master.

However, do not forget that it happens that the replicas may have configurations that are not able to satisfy the application requirements for the workload.

Let's say the second replica uses an index with less good performance. This is bad, because if the master fails, you will need to switch to such a replica, and we will get a 5-minute delay. All this results in downtime, and the whole idea of high system availability is lost.

To avoid losses, you need to recognize the configuration as non-liquid at an early stage, and either roll it back, turn it off and restart, returning it to the correct configuration, or simply cancel the action and try to catch up with the lost as soon as possible. Since it is known that no DBMS allows you to have different configurations at the level that we are talking about here, the work must be done in an environment based on machine learning.

I am going to end my report with criticism towards Oracle . In 2017, Oracle said it released the world's first self-government DBMS. Larry Ellison, CEO and founder of Oracle, went on stage and said that "this is the most important thing that Oracle has done in 20 years."

Такая несправедливость немного разозлила меня. Не то, чтобы я хотел получить от них признание, но, по крайней мере, они могли бы сказать, что такая идея возникла у нас намного раньше. Мы представили статью на конференции CIDR за несколько месяцев до того, как они опубликовали свои идеи. Я послал Ларри электронное письмо, в котором написал: «Эй, я видел твое объявление, но было немного обидно, что ты ничего не сказал о нас. Конечно, мы некоторое время не работали над этим проектом, но как ты можешь говорить, что Oracle стал первым в мире в этой области?» Он не ответил на мое письмо, это нормально — он никогда не отвечает на мои письма.

However, let's take a look and see what the system presented by Oracle really is. According to them, five key ideas make the system autonomous.

First, this automatic optimized fix is a pretty good thing, but I would not call it self-management, because the point is that when a fresh version is released, changes are made without any downtime.

The next three ideas are automatic indexing, recovery, and scaling.. In fact, these are the same tools that I spoke about earlier, which came from the era of self-adjustment in the 2000s. The same tools that Oracle did ten years ago, now launched in a managed environment. The same limitations that I mentioned earlier apply to them: these are reactionary measures, the absence of transfer training - there is no way to get knowledge from any action or change for further improvement.

Therefore, I argue that such a system is not self-governing. We can argue that for DBMS the term self-government means, but this is a rather philosophical question.

The fifth point - automatic query tuning. If you are familiar with database literature, this is called adaptive query processing. The basic idea is this: take a complex query with many JOINs. We skip through the optimizer and get a query execution plan. After launching an optimized request into operation, doubts about the quality of optimization are possible. If this happens, you need to stop working with such a request, return to the optimizer and request a new plan. And then you need to continue from the stopping point or restart the request with a better plan.

The idea is not new and is not an Oracle development. Microsoft in 2017 announced SQL Server equipped with the same feature. IBM DB2 in the early 00s had something similar, called "LEO" - a learning optimizer. And even earlier, in the 1970s, that's exactly how Ingres query optimizer worked. He processed data tuples, because then it was not possible to make JOIN, and worked with tables numbering about a hundred tuples. Therefore, for that time the idea was applicable.

In conclusion, I would like to say that I think that it will become possible to achieve DBMS autonomy in the next decade. It will take a lot of research, many problems have not been solved, and they still need to be worked on. What we do is pretty exciting.

I want to give all those who work with such systems parting words - every time you add a new function, you must clearly understand how this function will be controlled by the machine. Do not think that there is a person who knows how to configure the regulator for any application. You should always think about how the information will be processed, how the system will restart, etc., if the parameters are controlled by software.

Andy Pavlo on HighLoad ++ spoke about the DBMS of the future, which you can "touch" right now. If you missed this speech or prefer to receive information in Russian - under the cut is the translation of the speech.

It will be about the Carnegie Mellon University project on the creation of autonomous DBMS. The term “autonomous” means a system that can automatically deploy, configure, configure itself without any human intervention. It may take about ten years to develop something like this, but that is what Andy and his students are doing. Of course, machine learning algorithms are needed to create an autonomous DBMS, however, in this article we will focus only on the engineering side of the topic. Consider how to design software to make it standalone.

About the speaker: Andy Pavlo, associate professor at Carnegie Mellon University, under his leadership creates a "self-managed" DBMS PelotonDB , as well as ottertune , which helps to tune PostgreSQL and MySQL configs using machine learning. Andy and his team are now true leaders in self-managing databases.

The reason we want to create an autonomous DBMS is obvious. Managing these DBMS tools is a very expensive and time-consuming process. The average DBA salary in the United States is approximately 89 thousand dollars a year. Translated into rubles, 5.9 million rubles a year are obtained. This really large amount you pay people to just keep an eye on your software. About 50% of the total cost of using the database is paid for by the work of such administrators and related staff.

When it comes to really big projects, such as we discuss on HighLoad ++ and which use tens of thousands of databases, the complexity of their structure goes beyond human perception. Everyone approaches this problem superficially and tries to achieve maximum performance by investing minimal effort in tuning the system.

You can save a round sum if you configure the DBMS at the application and environment level to ensure maximum performance.

Self-adaptive databases, 1970–1990

The idea of autonomous DBMSs is not new; their history dates back to the 1970s, when relational databases first began to be created. Then they were called self-adaptive databases (Self-Adaptive Databases), and with their help they tried to solve the classic problems of database design, over which people still struggle to this day. This is the choice of indexes, the partition and construction of the database schema, as well as data placement. At that time, tools were developed that helped database administrators deploy the DBMS. These tools, in fact, worked just like their modern counterparts work today.

Administrators track requests executed by the application. They then pass this query stack to the tuning algorithm, which builds an internal model of how the application should use the database.

If you create a tool that helps you automatically select indexes, then build charts from which you can see how often each column is accessed. Then transfer this information to the search algorithm, which will look through many different locations - will try to determine which columns can be indexed in the database. The algorithm will use the internal cost model to show that this particular one will give better performance compared to other indexes. Then the algorithm will give a suggestion about what changes in the indices should be made. At this moment, it’s time to participate, consider this proposal and not only decide whether it is right, but also choose the right time for its implementation.

DBAs should know how the application is used when there is a drop in user activity. For example, on Sunday at 3:00 a.m. the lowest level of database queries, so at this time you can reload the indexes.

As I said, all the design tools of the time worked the same way - this is a very old problem . The scientific supervisor of my scientific supervisor wrote an article about automatic index selection in 1976.

Self-tuning databases, 1990–2000

In the 1990s, people, in fact, worked on the same problem, only the name changed from adaptive to self-tuning databases.

The algorithms got a little better, the tools got a little better, but at a high level they worked the same way as before. The only company at the forefront of the movement of self-tuning systems was Microsoft Research with their automatic administration project. They developed truly wonderful solutions, and in the late 90s and early 00s they again presented a set of recommendations for setting up their database.

The key idea that Microsoft put forward was different from what it was in the past - instead of having the customization tools support their own models, they actually simply reused the query optimizer cost model to help determine the benefits of one index against another. If you think about it, it makes sense. When you need to know whether a single index can really speed up queries, it does not matter how large it is if the optimizer does not select it. Therefore, the optimizer is used to find out if he will actually choose something.

In 2007, Microsoft Research published an article that set out a retrospective of research over ten years. And it covers well all the complex tasks that arose on every segment of the path.

Another task that has been highlighted in the era of self-tuning databases is how to make automatic adjustments to regulators. A database controller is some kind of configuration parameter that changes the behavior of the database system at runtime. For example, a parameter that is present in almost every database is the size of the buffer. Or, for example, you can manage settings such as blocking policies, the frequency of disk cleanup, and the like. Due to the significant increase in the complexity of DBMS regulators in recent years, this topic has become relevant.

To show how bad things are, I’ll give a review that my student did after studying a lot of PostgreSQL and MySQL releases.

Over the past 15 years, the number of regulators in PostgreSQL has increased 5 times, and for MySQL - 7 times.

Of course, not all regulators actually control the task execution process. Some, for example, contain file paths or network addresses, so only a person can configure them. But a few dozen of them can really affect performance. No man can hold so much in his head.

Cloud DB, 2010–…

Further we find ourselves in the era of the 2010s, in which we are to this day. I call it the era of cloud databases. During this time, much work has been done to automate the deployment of a large number of databases in the cloud.

The main thing that worries major cloud providers is how to host a tenant or migrate from one to another. How to determine how much resources each tenant will need, and then try to distribute them among the machines so as to maximize productivity or meet SLA with minimal cost.

Amazon, Microsoft and Google solve this problem, but mainly at the operational level. Only recently, cloud service providers began to think about the need to configure individual database systems. This work is not visible to ordinary users, but it determines the high level of the company.

Summing up the 40-year research of databases with autonomous and non-autonomous systems, we can conclude that this work is still not enough.

Why today we cannot have a truly autonomous system of self-government? There are three reasons for this.

First, all of these tools, except for the distribution of workloads from cloud service providers, are only advisory in nature . That is, on the basis of the calculated option, a person must make a final, subjective decision as to whether such a proposal is correct. Moreover, it is necessary to observe the operation of the system for some time to decide whether the decision made remains correct as the service develops. And then apply the knowledge to your own internal decision-making model in the future. This can be done for one database, but not for tens of thousands.

The next problem is that any action is only a reaction to something. In all the examples that we examined, the work goes with data on the past workload. There is a problem, records about it are transferred to the instrument, and he says: “Yes, I know how to solve this problem.” But the solution only concerns a problem that has already occurred. The tool does not predict future events and accordingly does not offer preparatory actions. A person can do this, and does it manually, but tools cannot.

The final reason is that none of the solutions has knowledge transfer.. Here is what I mean: for example, let's take a tool that worked in one application on the first database instance, if you put it in another same application on another database instance, it could, based on the knowledge gained when working with the first database data help set up a second database. In fact, all tools start working from scratch, they need to re-get all the data about what is happening. Man works in a completely different way. If I know how to configure one application in a certain way, I can see the same patterns in another application and, possibly, configure it much faster. But not one of these algorithms, not one of these tools still works this way.

Why am I sure it's time for change? The answer to this question is about the same as the question of why super-arrays of data or machine learning have become popular. Equipment is becoming better quality : production resources are increasing, storage capacity is growing, hardware capacity is increasing, which speeds up calculations for learning machine learning models.

Advanced software tools have become available to us. Previously, you needed to be an expert in MATLAB or low-level linear algebra to write some machine learning algorithms. Now we have Torch and Tenso Flow, which make ML available, and, of course, we have learned to better understand the data. People know what kind of data may be needed for decision-making in the future, therefore they do not discard as much data as before.

The goal of our research is to close this circle in autonomous DBMSs. We can, like the previous tools, propose solutions, but instead of relying on the person - whether the decision is right when exactly you need to deploy it - the algorithm will do this automatically. And then with the help of feedback, he will study and become better over time.

I want to talk about the projects that we are currently working at at Carnegie Mellon University. In them, we approach the problem in two different ways.

In the first - OtterTune - we look for ways to tune the database, treating them as black boxes. That is, ways to tune existing DBMSs without controlling the internal part of the system and observing only the response.

The Peloton project is about creating new databases from scratch , from scratch, given the fact that the system should work autonomously. What adjustments and optimization algorithms need to be laid down - which cannot be applied to existing systems.

Let's consider both projects in order.

Ottertune

The existing system adjustment project that we have developed is called OtterTune.

Imagine that the database is configured as a service. The idea is that you download the runtime metrics of heavy database operations that eat up all the resources, and the recommended configuration of regulators comes in response, which, in our opinion, will increase productivity. It can be a delay time, bandwidth or any other characteristic that you specify - we will try to find the best option.

The main thing that is new in the OtterTune project is the ability to use data from previous sessionssettings and improve the efficiency of the next sessions. For example, take the PostgreSQL configuration, which has an application that we have never seen before. But if it has certain characteristics or uses the database in the same way as the databases that we have already seen in our applications, then we already know how to configure this application more efficiently.

At a higher level, the algorithm of work is as follows.

Let's say there is a target database: PostgreSQL, MySQL, or VectorWise. You must install the controller in the same domain, which will perform two tasks.

The first is performed by the so-called collector - a tool that collects data about the current configuration, i.e. query execution time metrics from applications to the database. The data collected by the collector is loaded into the Tuning Manager, a tuning service. It doesn’t matter if the database works locally or in the cloud. After downloading, the data is stored in our own internal repository, which stores all the test setup sessions ever made.

Before giving recommendations, you need to perform two steps. First, you need to look at the runtime metrics and find out which ones are actually important. In the example below, the indicators that MySQL returns to the command

SHOW_GLOBAL_STATUSon InnoDB. Not all of them are useful for our analysis. It is known that in machine learning a large amount of data is not always good. Because then even more data is required to separate the grain from the chaff. As in this case, it is important to get rid of entities that do not really matter .

For example, there are two metrics:

INNODB_BUFFER_POOL_BYTES_DATAand INNODB_BUFFER_POOL_PAGES_DATA. In fact, this is the same metric, but in different units. You can conduct a statistical analysis, see that the metrics are highly correlated, and conclude that using both is redundant for analysis. If you discard one of them, the dimension of the learning task will decrease and the time to receive an answer will be reduced. In the second stage, we do the same, only with regard to regulators. In MySQL 500 controllers, and, of course, not all of them are really significant, but different ones are important for different applications. It is necessary to conduct another statistical analysis to find out which regulators really affect the target function.

In our example, we found out that there are three regulators

INNODB_BUFFER_POOL_SIZE, FLUSH_METHODand they LOG_FILE_SIZEhave the greatest impact on performance. They reduce the delay time for a transactional workload. There are other interesting points related to regulators. In the screenshot there is a knob with a name

TIMED_MUTEXES. If you refer to the working documentation of MySQL, in section 45.7 it will be indicated that this regulator is out of date. But the machine learning algorithm cannot read the documentationtherefore does not know about it. He knows that there is a regulator that can be turned on or off, and it will take a long time to understand that this does not affect anything. But you can make calculations in advance and find out that the regulator does nothing, and do not waste time setting it up. After analysis, the data is transferred to our configuration algorithm using the Gaussian process model - a rather old method. You have probably heard of deep learning, we are doing something similar, but without deep networks. We use GPflow- a package for working with models of the Gaussian process, developed in Russia based on TensorFlow. The algorithm issues a recommendation that should improve the objective function; this data is transferred back to the installation agent working inside the controller. The agent applies the changes by performing a reset — unfortunately, it will have to restart the database — and then the process repeats again. Some more runtime metrics are collected, transferred to the algorithm, an analysis of the possibility of improving and increasing productivity is carried out, a recommendation is issued, and so on, again and again.

A key feature of OtterTune is that algorithms only need information about runtime metrics as input. We do not need to see your data and user requests. We just need to track read and write operations. This is a powerful argument - data belonging to you or your customers will not be disclosed to third parties. We do not need to see any requests, the algorithm works based only on runtime metrics, because it gives recommendations for regulators, and not for physical design.

Let's take a look at the OtterTune demo. On the project’s website, we’ll run Postgres 9.6 and load the system with the TPC-C test. Let's start with the initial PostgreSQL configuration, which is deployed when installed on Ubuntu.

First, run the TPC-C test for five minutes, collect the necessary runtime metrics, upload them to the OtterTune service, get recommendations, apply the changes, and then repeat the process. We will come back to this later. The database system runs on one computer, the Tensor Flow service on another, and loads the data here.

Five minutes later, we refresh the page (a demonstration of this part of the results begins at this moment ). When we first started, in the default configuration for PostgreSQL, there were 623 transactions per second. Then, after receiving the recommendation and applying the changes once, the number of transactions increased to 2300 per second. It is worth recognizing that this demonstration has already been launched several times, so the system already has a set of previously collected data. That is why the solution is so fast. What would happen if the system did not have such previously collected data? This algorithm is a kind of step-by-step function, and gradually it would get to this level.

After some time and five iterations, the best result was 2600. We went from 600 transactions per second, and were able to reach a value of 2600. A small drop appeared because the algorithm decided to try a different way of adjusting the regulators after it achieved good results. The result was a margin, so a large drop in performance did not happen. Having received a negative result, the algorithm reconfigured and began to look for other ways of regulation.

We conclude that you should not be afraid of starting a bad strategy into work, because the algorithm will explore the solution space and try on various configurations to achieve the conditions of the SLA agreement. Although you can always configure the service so that the algorithm selects only improving solutions. And over time, you will receive all the best and best results.

Now back to the topic of our conversation. I will tell you about the existing results from an article published in Sigmod. We configured MySQL and PostgreSQL for TPC-C using OtterTune, in order to increase throughput.

Compare the configurations of these DBMSs, deployed by default during the first installation on Ubuntu. Next, we’ll run some open source configuration scripts that you can get from Percona and some other consulting firms working with PostgreSQL. These scripts use heuristic procedures, such as the rule that you must set a certain buffer size for your hardware. We also have a configuration from Amazon RDS, which already has Amazon presets for the equipment you are working on. Then compare this with the result of manually setting up expensive DBAs, but with the condition that they have 20 minutes and the ability to set any parameters you want. And the last step is to launch OtterTune.

For MySQL, you can see that the default configuration is far behind, scripts work a little better, RDS is a little better. In this case, the best result was shown by the database administrator - the leading MySQL administrator from Facebook.

OtterTune lost to man . But the fact is that there is a certain regulator that disables the synchronization of log cleaning, and this is not important for Facebook. However, we have denied access to this OtterTune regulator because the algorithms do not know if you agree to lose the last five milliseconds of data. In our opinion, this decision should be made by a person. Perhaps Facebook agrees with such losses, we do not know this. If we adjust this regulator in the same way, we can compete with the person.

This example shows how we try to be conservative in that the final decision must be made by the person. Because there are certain aspects of databases that the ML algorithm is not aware of.

In the case of PostgreSQL, configuration scripts work well. RDS does a little worse. But, it is worth noting that OtterTune indicators in this surpassed the person. The histogram shows the results obtained after the database was set up by the Wisconsin Senior PostgreSQL Expert Advisor. In this example, OtterTune was able to find the optimal balance between the size of the log file and the size of the buffer pool, balancing the amount of memory used by these two components and ensuring the best performance.

The main conclusion is that the OtterTune service uses such algorithms and machine learning that we can achieve the same or better performance compared to the very, very expensive DBAs. And this applies not only to one instance of the database, we can scale the work to tens of thousands of copies, because it is just software, just data.

Peloton

The second project that I would like to talk about is called Peloton. This is a completely new data system that we build from scratch at Carnegie Mellon. We call it a self-managing DBMS.

The idea is to find out what changes for the better can be made if you control the entire software stack. How to make the settings better than OtterTune can do, due to the knowledge about each fragment of the system, about the entire program cycle.

How it will work: we integrated reinforced machine learning componentsinto the database system, and we can observe all aspects of its behavior at runtime, and then give recommendations. And we are not limited to recommendations on adjusting regulators, as happens in the OtterTune service, we would like to perform the entire standard set of actions that I spoke about earlier: selecting indexes, choosing partition schemes, vertical and horizontal scaling, etc.

The name of the Peloton system is likely to change. I don’t know how in Russia, but in our USA, the term “ peloton” means “fearless” and “finish”, and in French it means “platoon”. But in the US there is a cycling companyPeloton, which has a lot of money. Every time a mention of them appears, for example, the opening of a new store, or a new advertisement on TV, all my friends write to me: “Look, they stole your idea, stole your name.” The ads show beautiful people who ride their exercise bikes, and we simply cannot compete with this. And recently, Uber announced a new resource planner called Peloton, so we can no longer call it our system. But we do not have a new name yet, so in this story I will still use the current version of the name.

Consider how this system works at a high level. For example, take the target database, I repeat, this is our software, this is what we work with. We collect the same workload history that I showed earlier. The difference is that we are going to generate forecasting models that allow us to predict what future workload cycles will be, what future workload requirements will be. That is why we call this system a self-managing DBMS.

The basic idea of a self-managing DBMS is similar to the idea of a car with automatic control.

An unmanned vehicle looks in front of itself and can see what is located in front of it on the road, can predict how to get to its destination. A stand-alone database system works in the same way. You should be able to look to the future and draw conclusions about how the workload will look in a week or an hour. Then we pass this predicted data to the planning component - we call it the brain - running on Tensor Flow.

The process echoes the work of AlphaGo from London as part of the Google Deep Mind project, at the top level it all works in a similar scenario: Monte Carlo searches the tree, the search result is various actions that must be performed to achieve the desired goal.

The following algorithm approximately determines the operation scheme:

- The source data is a set of required actions, for example, deleting an index, adding an index, vertical and horizontal scaling, and the like.

- A sequence of actions is generated, which ultimately leads to the achievement of the maximum objective function.

- All criteria except the first are discarded, and the changes are applied.

- The system looks at the resulting effect, then the process repeats again and again.

Do not constantly resort to the metaphor of an unmanned car, but that’s how they work. This is called the planning horizon.

After looking at the horizon on the road, we set ourselves an imaginary point to reach, and then we begin to plan a sequence of actions to reach this point on the horizon: accelerate, slow down, turn left, turn right, etc. Then we mentally discard all actions except the first one that need to be performed, perform it, and then repeat the process again. UAVs run such an algorithm 30 times per second. For databases, this process is a bit slower, but the idea remains the same.

We decided to create our own database system from scratch, rather than building something on top of PostgreSQL orMySQL because, to be honest, they are too slow compared to what we would like to do. PostgreSQL is beautiful, I love it and use it in my university courses, but it takes too much time to create indexes, because all the data comes from disks.

In analogies with automobiles, an autonomous DBMS on PostgreSQL can be compared with an unmanned wagon. The truck will be able to recognize the dog in front of the road and go around it, but not if it ran out onto the roadway directly in front of the car. Then a collision is inevitable, because the truck is not sufficiently maneuverable. We decided to create a system from scratch in order to be able to apply changes as quickly as possible and find out what the correct configuration is.

Now we have solved the first problem and published an articleabout a combination of deep learning and classical linear regression for automatic selection and prediction of workloads.

But there is a bigger problem, for which we do not yet have a good solution - action catalogs . The question is not how to choose actions, because guys from Microsoft have already done this. The question is how to determine whether one action is better than another, in terms of what happens before deployment and after deployment. How to reverse an action if the index created by a person’s command is not optimal, how can you cancel this action and indicate the reason for the cancellation. In addition, there are a number of other tasks in terms of the interaction of our own system with the outside world, for which we do not yet have a solution, but we are working on them.

By the way, I’ll tell an entertaining story about a well-known database company. This company had an automatic index selection tool, and the tool had a problem. One client constantly canceled all indexes that the tool recommended and applied. This cancellation occurred so often that the tool hung. He did not know what the further strategy of behavior should be, because any solution offered to a person received a negative assessment. When the developers turned to the client and asked: “Why do you cancel all recommendations and suggestions on the indices?”, The client replied that he simply did not like their names. People are stupid, but you have to deal with them. And for this problem, I also have no solution.

Designing an autonomous DBMS

Given two different approaches to creating autonomous database systems, let’s talk now about how to design a DBMS so that it is autonomous.

Let us dwell on three topics:

- how to adjust the regulators,

- how to collect internal metrics,

- how to design actions.

Once again, back to the key points: the database system must provide the correct information to machine learning algorithms for the subsequent adoption of better decisions. The amount of useless data that we transmit should be reduced in order to increase the speed of receiving responses.

I have already said that any regulator that requires a person to make a value judgment in autonomous components should be marked as prohibited. It is necessary to mark this parameter in the settings

PG_SETTINGSor in any other configuration file in order to prevent the algorithm from accessing this regulator.With regard to parameters such as file paths, isolation levels, transaction stability requirements, etc. decisions must be made by man, not by algorithm.

The algorithm may find that when disabling synchronization in the log, the speed will increase significantly and apply such a change, however, this may not be the right decision in your project.

As for regulators, which should be set automatically, additional metadata is needed on how algorithms can change such regulators.

This includes parameters such as:

- Range of values . From minimum to maximum, when it comes to numerical variables, or sets of values for variables of enumerated types.

- Boolean flags to enable or disable options . If you have knobs for enabling and disabling options, you should not use -1 or 0, it is better to make a separate Boolean flag.

- Work with ranges of values , especially for variable intervals. For example, you need to set some buffer size. If it is a 64-bit variable, then you can set the range from 0 or 2 64 . But not all individual values from this interval make sense, or provide new information for the algorithm.

For different ranges of values, it is desirable to give the algorithm a range that it can use. If there is not enough memory on the machine, then it is logical to increase the value of the regulator in 10 kilobyte intervals, but when working on a machine with a large amount of memory, an increase step of 10 gigabytes is possible. Thus, the space of solutions is reduced, and this allows the algorithms to converge faster to the answer.

It is necessary to somehow mark each regulator, which is limited by hardware resources. There are nuances, however, this must be done. For example, you need to limit the total amount of memory that applications or database systems can use so that it does not exceed the total available amount of memory.

The problem is that sometimes it makes sense to lay redundant collateral, such as with processor cores. How the algorithm will actually work with this, we just do not know yet. But we want to be able to operate with metadata about what, say, this controller refers to memory, this one to disk, and so on. Because then we can understand within which ranges we need to stay.

We believe that it is necessary to remove all the regulators that can configure themselves, if any. This problem does not occur with open source software, it relates more to vendor software.

Suppose, in the process of work, an additional external factor is discovered that is not taken into account in machine learning algorithms. So, we need to build new models taking into account this, which in itself is an additional workload: we cannot know in advance what the query will return and, again, we need to build models that predict what will happen next. If your system has something like Oracle auto-adjust knobs, then they can change their values whenever they like, and you cannot simulate this in advance.

I repeat, this model of behavior is observed only in the commercial sphere, and to maintain autonomy, all such regulators must be disabled or removed.

As for metrics, we want to uncover the hardware capabilities that underlie the database system. We are talking about the capabilities of the processor, memory speed, disk speed, network speed and the like. There should be something like an internal directory, or an information scheme that would show what the processor looks like. The reason is simple: when you deploy PostgreSQL on a specific machine and on hardware, one controller configuration will be optimal, and when using more powerful equipment, the optimal controller configuration can be very different.

By providing hardware information to our algorithms, you can use machine learning methods and extrapolate what you can achieve on this computer and what on another computer.

If there are any subcomponents that can be customized, you must be sure that all the correct metrics are provided for each individual component. As an example, consider an example of a bad system in which this does not happen properly. I am talking about RocksDB, known as MyRocks in MySQL.

RocksDB allows you to customize different families of columns. You can define several families of columns per table, and then configure the parameters for each family individually. To find out if the family is set up correctly, you need to look at the metrics and understand how the hardware was actually used. You need to make a request to the RocksDB information scheme to get statistics on column families.

To determine the performance of a DBMS, it is important not to display the families of columns and how to configure them, but the number of read and write operations performed by the system. But MyRocks does not show such information on column families. The only way to get this information - take a look at the global statistics of family columns:

ROCKSDB_BITES_READ, ROCKSDB_BITES_WRITTEN. Such information is of little use to us, because it is aggregated, and we configure each family separately. We do not know what contribution our particular family of columns makes to the overall result in terms of the number of read and write operations. We need to collect a lot of data in order to then extract it from the aggregated aggregate value.

Пожалуйста, запомните, что никакие действия или новые функции, которые вы добавляете в систему управления базы данных не должны требовать перезапуска системы для вступления в силу.

In the commercial field, things are a little better than in companies that use open source. Although the latter improve this aspect from release to release, so far no one has reached the ideal.

Besides the obvious downtime, the problem is that you need to simulate the length of downtime . MySQL has a regulator that sets the size of the log file. And depending on the current and future values, downtime will change. If the size of the log file is currently 5 GB, and I change the value to 10 GB, I can start working immediately after the system restarts, because the actual file size does not change. But if you need to change 5 GB for one, then the downtime will increase - it will take a lot of time to compress the file.

It is necessary to simulate this whole process, and thus more and more time is spent on the adoption of changes. For this reason, we are building our system from scratch - PostgreSQL does not allow this.

Notifications are a very important element of work. You need to know what is happening in the models. For example, that the work slowed down because the index is being built now, or because the index just built was not optimal.

Difficulties also arise when horizontally scaling a workload. As the load increases, the system will slow down, but you still need to work with it - in the future, the system will have to comply with the SLA level. At the moment, we do not yet know how we will resolve this issue.

And the last thing I want to talk about. As you know, many data systems operate in replicated environments to achieve high availability. Therefore, naturally, the desire to profitably use additional resources to collect more data for training. This requires replicas to be different and have different configurations.

Suppose you work in a system with one master database and two replicas, and solve the problem of selecting indexes. An excellent index has been selected on the master, but in the replicas you can try other indexes, suddenly some will be better than in the master. Imagine that the index found in the first replica turned out to be really the best - it gives higher performance than on the index in the master database, respectively, you can move it and use it further on the master.

However, do not forget that it happens that the replicas may have configurations that are not able to satisfy the application requirements for the workload.

Let's say the second replica uses an index with less good performance. This is bad, because if the master fails, you will need to switch to such a replica, and we will get a 5-minute delay. All this results in downtime, and the whole idea of high system availability is lost.

To avoid losses, you need to recognize the configuration as non-liquid at an early stage, and either roll it back, turn it off and restart, returning it to the correct configuration, or simply cancel the action and try to catch up with the lost as soon as possible. Since it is known that no DBMS allows you to have different configurations at the level that we are talking about here, the work must be done in an environment based on machine learning.

Oracle autonomous database

I am going to end my report with criticism towards Oracle . In 2017, Oracle said it released the world's first self-government DBMS. Larry Ellison, CEO and founder of Oracle, went on stage and said that "this is the most important thing that Oracle has done in 20 years."

Такая несправедливость немного разозлила меня. Не то, чтобы я хотел получить от них признание, но, по крайней мере, они могли бы сказать, что такая идея возникла у нас намного раньше. Мы представили статью на конференции CIDR за несколько месяцев до того, как они опубликовали свои идеи. Я послал Ларри электронное письмо, в котором написал: «Эй, я видел твое объявление, но было немного обидно, что ты ничего не сказал о нас. Конечно, мы некоторое время не работали над этим проектом, но как ты можешь говорить, что Oracle стал первым в мире в этой области?» Он не ответил на мое письмо, это нормально — он никогда не отвечает на мои письма.

However, let's take a look and see what the system presented by Oracle really is. According to them, five key ideas make the system autonomous.

First, this automatic optimized fix is a pretty good thing, but I would not call it self-management, because the point is that when a fresh version is released, changes are made without any downtime.

The next three ideas are automatic indexing, recovery, and scaling.. In fact, these are the same tools that I spoke about earlier, which came from the era of self-adjustment in the 2000s. The same tools that Oracle did ten years ago, now launched in a managed environment. The same limitations that I mentioned earlier apply to them: these are reactionary measures, the absence of transfer training - there is no way to get knowledge from any action or change for further improvement.

Therefore, I argue that such a system is not self-governing. We can argue that for DBMS the term self-government means, but this is a rather philosophical question.

The fifth point - automatic query tuning. If you are familiar with database literature, this is called adaptive query processing. The basic idea is this: take a complex query with many JOINs. We skip through the optimizer and get a query execution plan. After launching an optimized request into operation, doubts about the quality of optimization are possible. If this happens, you need to stop working with such a request, return to the optimizer and request a new plan. And then you need to continue from the stopping point or restart the request with a better plan.

The idea is not new and is not an Oracle development. Microsoft in 2017 announced SQL Server equipped with the same feature. IBM DB2 in the early 00s had something similar, called "LEO" - a learning optimizer. And even earlier, in the 1970s, that's exactly how Ingres query optimizer worked. He processed data tuples, because then it was not possible to make JOIN, and worked with tables numbering about a hundred tuples. Therefore, for that time the idea was applicable.

As for systems with self-government in the form in which they exist today, I would not call it autonomy.

In conclusion, I would like to say that I think that it will become possible to achieve DBMS autonomy in the next decade. It will take a lot of research, many problems have not been solved, and they still need to be worked on. What we do is pretty exciting.

I want to give all those who work with such systems parting words - every time you add a new function, you must clearly understand how this function will be controlled by the machine. Do not think that there is a person who knows how to configure the regulator for any application. You should always think about how the information will be processed, how the system will restart, etc., if the parameters are controlled by software.

HighLoad ++ does not stand still, we are already packing our bags and moving to HighLoad ++ Siberia in Novosibirsk. There will be no foreign speakers there, but the program of 39 reports is very strong, does not allow repetition, which means that all highload developers in a good way should not wait for November and come with us. We also began to prepare

Moscow HighLoad ++ , today we are conducting a brainstorming session . If in this article you did not have enough details about machine learning, then pay attention to UseData Conf - the conference is September 16, application submission is open. Subscribe to the newsletter and you will know about all our interests.