Breakfast report with Charles Weatherly, author of the cult book Etudes for Programmers

Breakfast with Charles Weatherly, author of the cult book Etudes for Programmers, lasted four hours. In the end, the waitress asked us from a restaurant in Palo Alto, saying that there was a long line at the restaurant, and we were sitting here from eight in the morning. During this time, we discussed a lot of interesting things: Charles’s work in the Livermore Laboratory and Oracle, object-oriented and functional programming, compilers and hardware description languages, bookmarks to processors, neural network inefficiency and the undeservedly forgotten Prolog, Charles’s visit to Russia, text processing with a state machine in the hardware coprocessor and the creation of video games on FPGAs by schoolchildren.

The content of four hours with Charles Weatherly is enough for fifty articles on Habré, therefore I will list mainly topics, after which I will give some details about three of them:

Here we examined the recent disputes on Habré about OOP. Charles is campaigning for both OOP and functional programming, where applicable. I showed Charles an example I saw in two projects of unsuccessful class design for representing nodes of a parsing tree and optimizations on this tree , after which Charles said that of course, tree transformation algorithms should not be scattered into small classes in this way, but instead, the parsing tree should be quickly throw in a control flow graph, on which to use table driven transformations based on static single assignment, with a few exceptions. Charles enlightened me about the work of the Muchnik, Bob Morgan, and Randy Allen on vectorization:

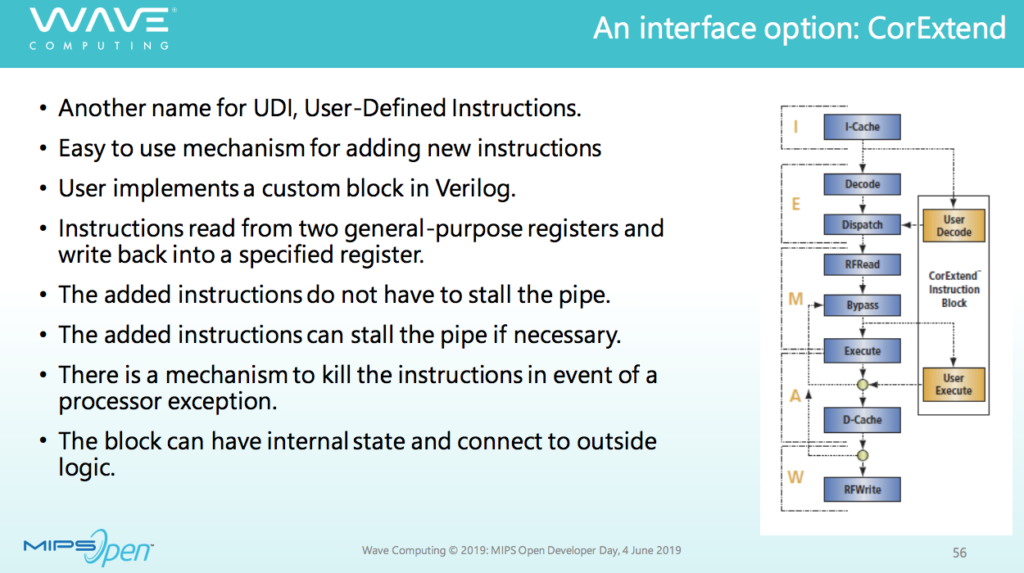

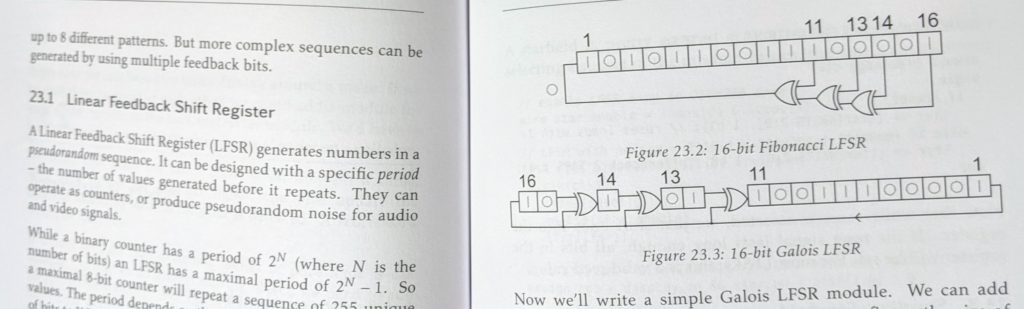

Then I told Charles that the day after tomorrow we will be in the companyseminar in Las Vegas at an electronics design automation conference , and I need his advice on a good example of a coprocessor based on the CorExtend / UDI protocol - User Defined Instructions. This protocol is used in MIPS cores. CorExtend / UDI allows you to embed a block in the processor that decodes and executes additional instructions to the main system of instructions that can be determined by the system designer on a chip. The block can be synthesized and become part of the microcircuit or be configured in the FPGA / FPGA.

Additional instructions move along the processor pipeline along with the main ones. They receive data from general registers visible to the programmer and can return the result to the register. These instructions can also save some state in the coprocessor. They can be killed by exceptions if an exception occurs, for example, in the pipeline following this instruction: The

day after tomorrow, in a presentation at a seminar, I am going to use an example with a simple convolution instruction for a neural network. But the acceleration achieved in this case is not impressive - only twice. Is it possible to make a better example?

Charles immediately came up with a much better example: hardware lexical analysis. A state machine can be placed in the coprocessor, which will determine the numbers, identifiers and comments in the text stream. It will save by storing state between individual commands that transmit text from registers to the machine. The result of the current analysis (marked up text) will also be returned to the register.

Charles also told me the story of the instructions for parsing text since IBM / 360 translate test and CDC STAR. He also told me that such a coprocessor can be used for machine learning, for pre-cleaning the data stream before applying machine learning algorithms to it.

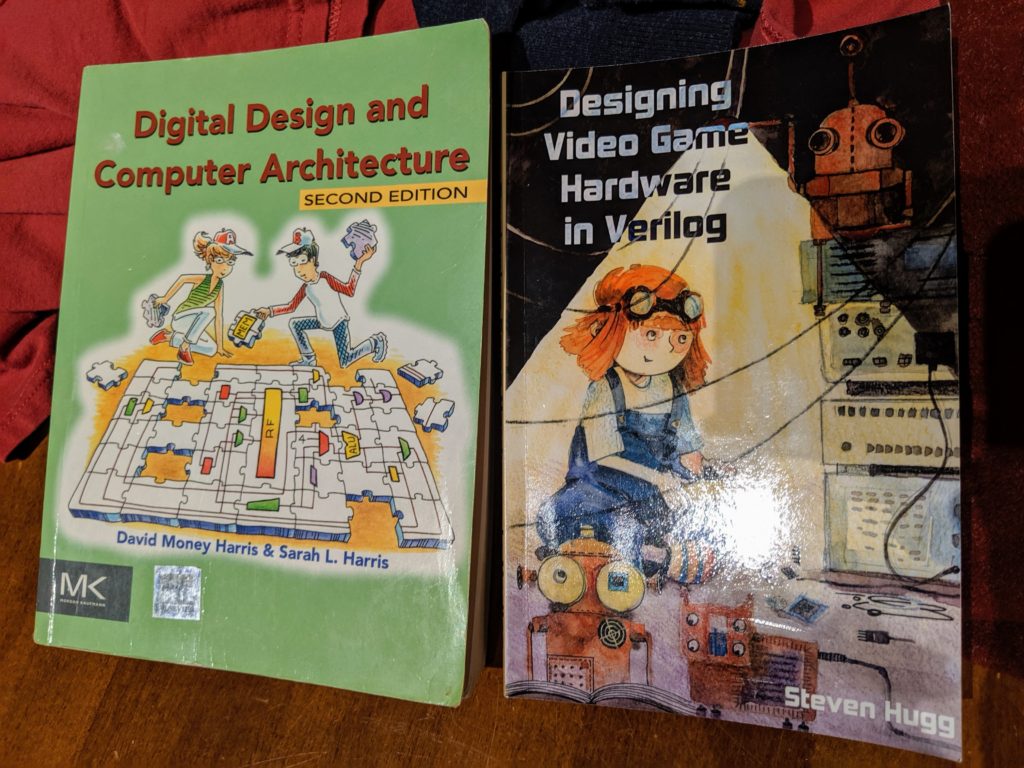

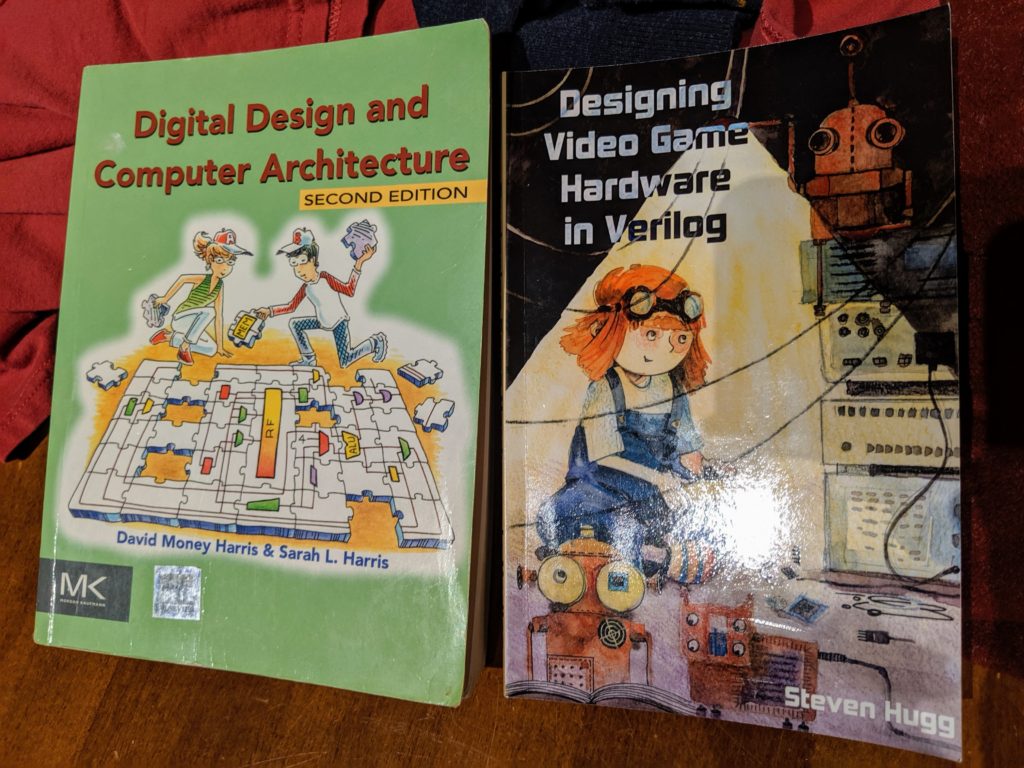

Then I told Charles Saga how a group of engineers and teachers translated and implemented in various Russian universities the textbook of David Harris and Sarah Harris “Digital circuitry and architecture of a computer” (see posts on Habr. 1 , 2 ,3). Now, with the combined efforts of MIET, RUSNANO, teachers of MEPhI and other universities, we are planning a summer school at MIET where advanced schoolchildren project video games on the FPGA with output to a graphic screen (section Between Physics and Programming ). For this, ideas from the book Designing Video Game Hardware in Verilog by Steven Hugg, December 15, 2018 are used:

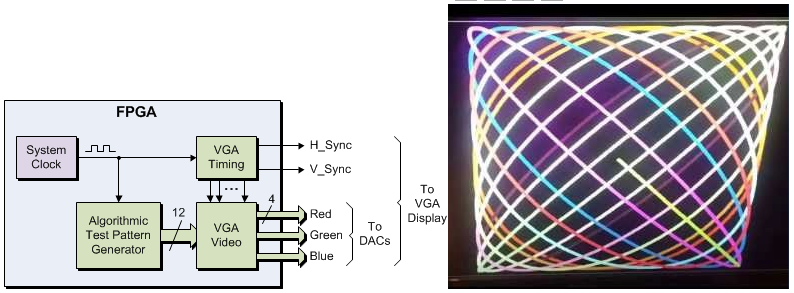

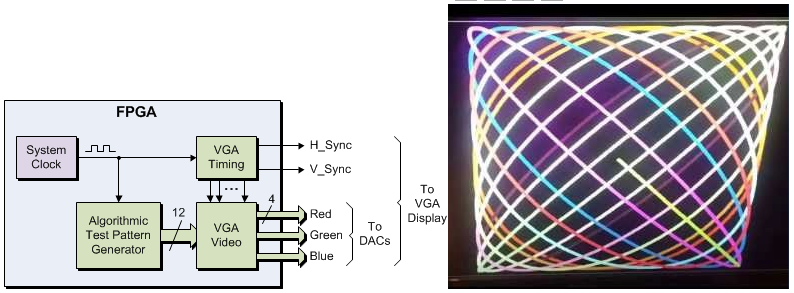

Games can be developed both in the form of purely hardware finite state machines, or in a combination of hardware graphics on FPGAs with programs on a simple schoolMIPS processor core, which is described in the posts of Stanislav Zhelnio on Habr and the wiki on schoolMIPS on GitHub . On FPGAs, it’s pretty easy to do scan generation for VGA, display the map from the memory and moving sprites with figures:

Charles suggested, in addition to games with tanks and races, to make a competition of finite automata (animals) that move the player to the target on the map (globe). Objects on the map have a “smell” - positive (food) or negative (electricity that can strike). Pupils can write finite machines on veril that see the environment, embed them in code that draws graphics and supports the map, and then compete with who has the code better:

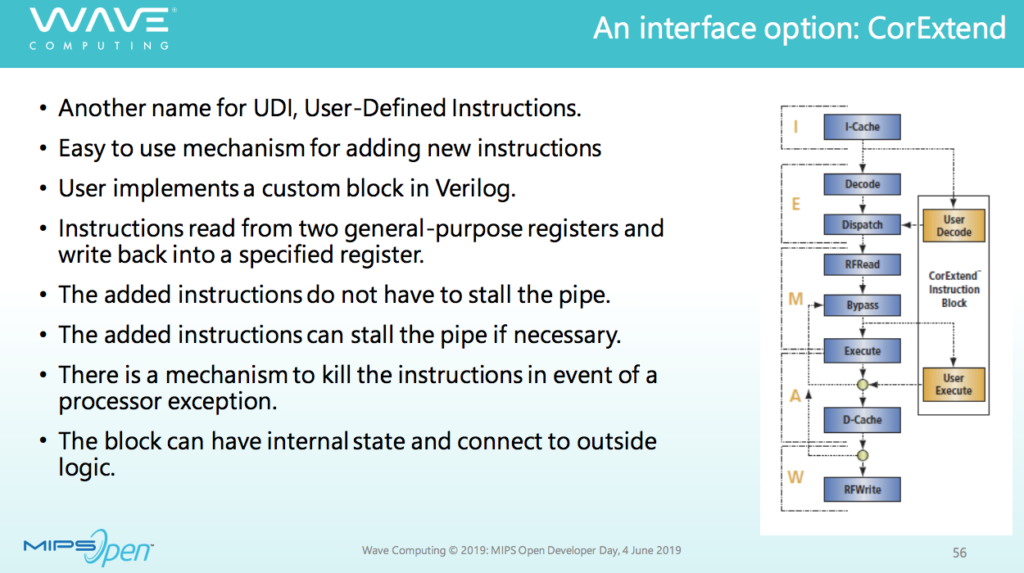

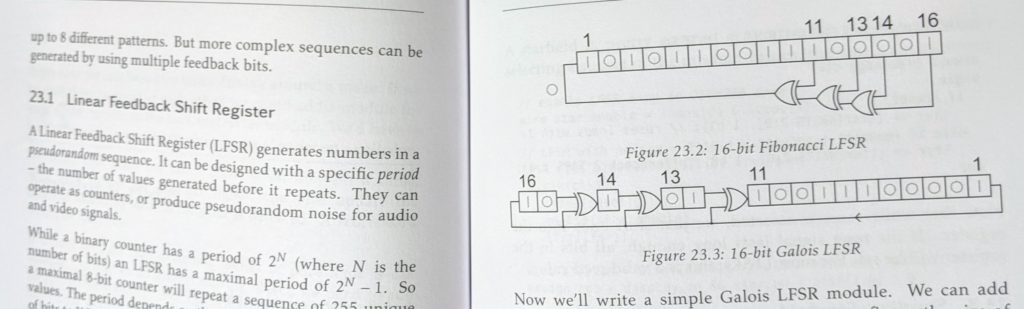

You can use LFSR hardware blocks to generate pseudo-random behavior elements:

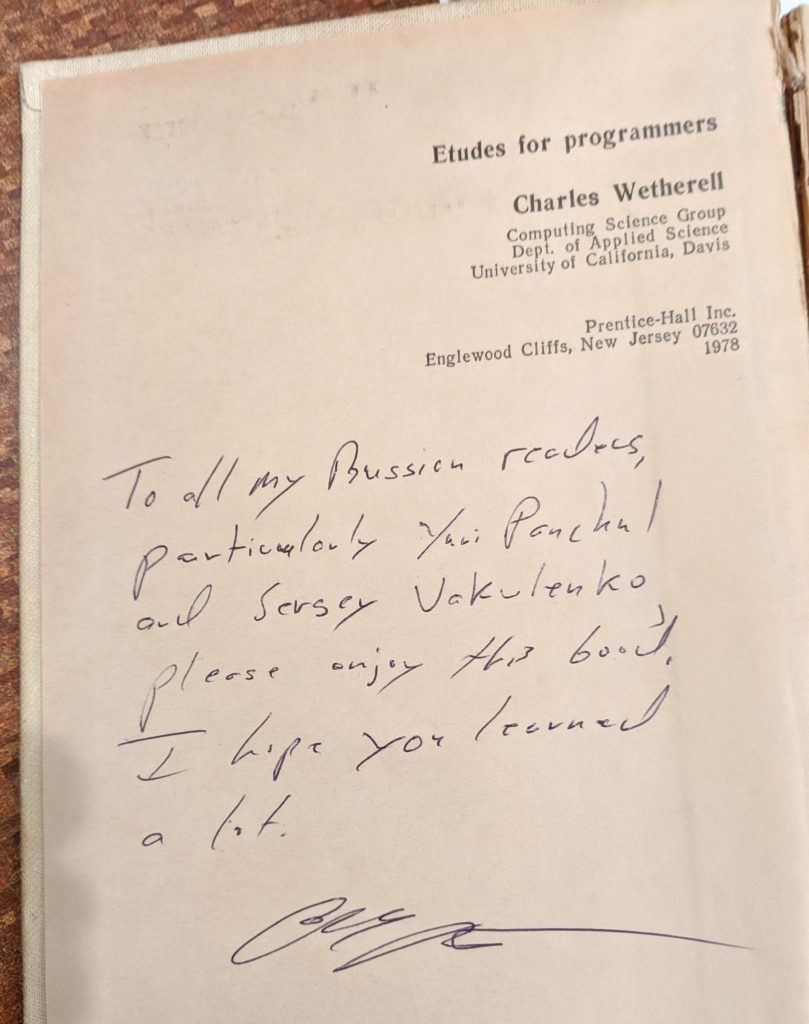

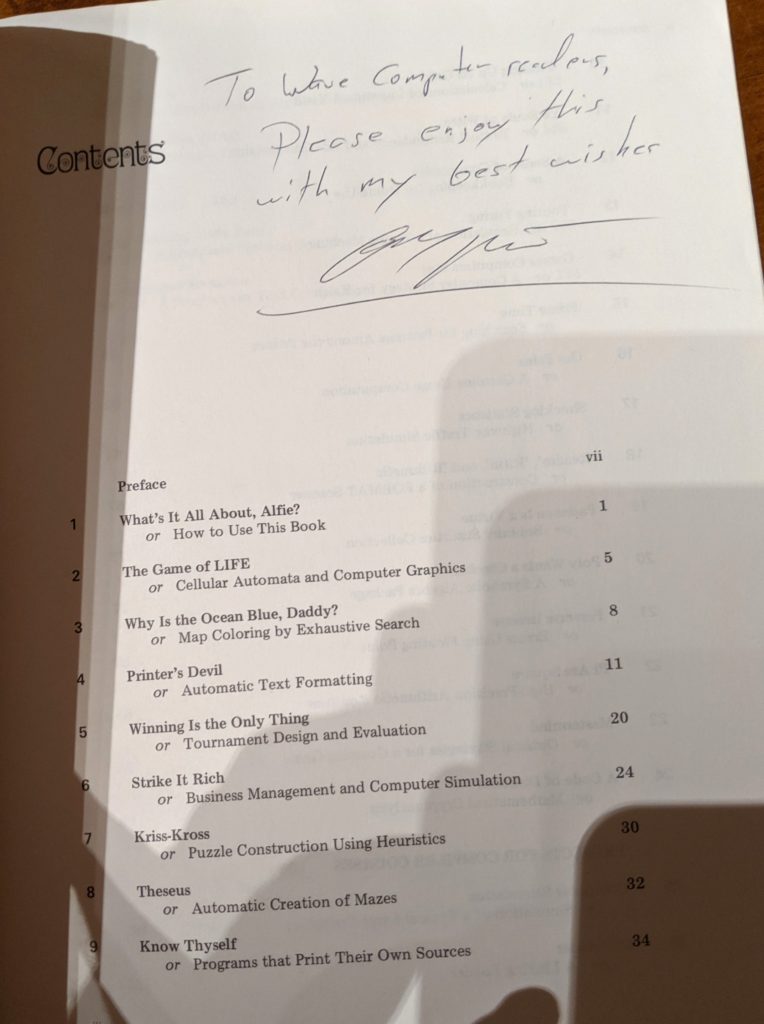

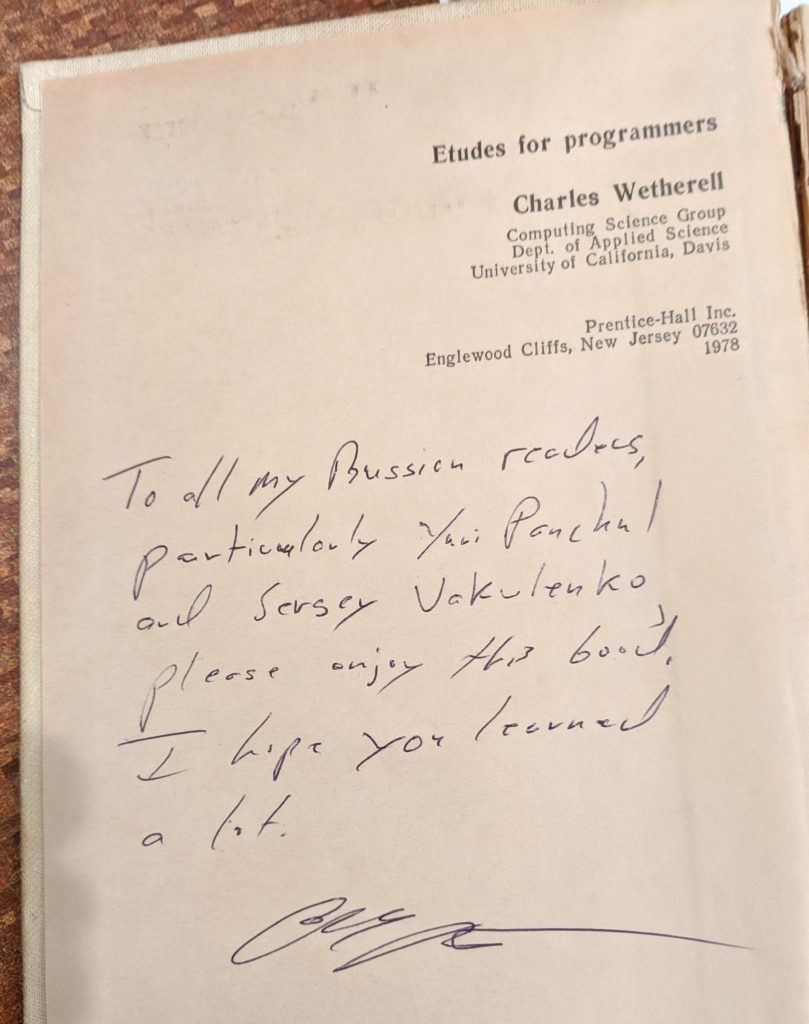

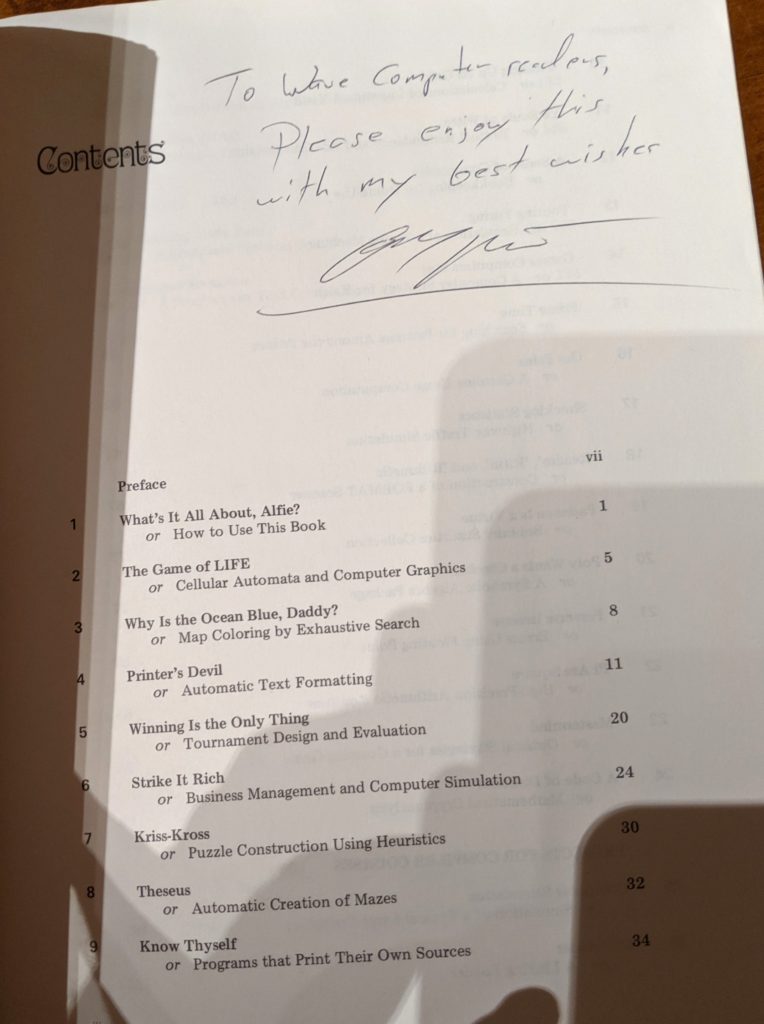

In the end, Charles left two autographs - for Russian readers (I borrowed a Russian book from Sergei Vakulenko) and readers in our company Wave Computing, from whose internal library I borrowed the original book in English:

The content of four hours with Charles Weatherly is enough for fifty articles on Habré, therefore I will list mainly topics, after which I will give some details about three of them:

- Object-oriented and functional programming. Single assignment, function values, get rid of mutations, get rid of timing.

- Data structures and compiler algorithms. Muchnik SSA and the book on optimizations. Bob Morgan (Compass) building optimizing compilers. Vectoring compilers and Randy Allen (my Wave colleague and Charles colleague about other companies).

- The evolution of the Yacc parser, the Ada language internals (DIANA), and the VHDL frontend in Synopsys.

- Attributive grammars and unsuccessful, in my opinion, their use in the MIPT training manual on the Theory of Implementation of Programming Languages (TRNP).

- JOVIAL programming language and Ada standardization. IDL language.

- Programming at the Livermore Computing Laboratory for physicists and chemists on the CDC 7600 and Cray-1. Livermore Fortran is an extension of Fortran-77 with structures and dynamic memory allocation. The use of microfiches, including for the automatic search and production of animations. Harry Nelson And how the Rubik's Cube got into the laboratory before it became known.

- Soviet clone Cray-1 Electronics SS BIS. The Fortran compiler in IPM and the C compiler that we worked on at MIPT.

- Reverse engineering a random number generator in Synopsys VCS. Congruential generator with register shift. LSFR.

- Neural network inefficiency and the undeservedly forgotten Prolog language.

- Application of methods from Prolog for static analysis of program text.

- Including analysis of processor code written in Verilog or VHDL in order to find bookmarks in it. A bookmark scattered in different parts of the processor description at the register transfer level. Finding "redundant" code that does something outside the specification. For example, a finite state machine that is waiting for a key phrase, text in registers visible to the programmer, after which the processor switches to privileged mode.

- Hybrid code analysis methods - dynamic execution with subsequent static investigation of the state space from a certain execution point.

- List of Hakmem from MIT.

- Most programmers in life use only five algorithms - quick sort, binary search, hashing, list insertion, and something else (AVL binary tree insertion?).

- The history of Unix troff at Bell Labs.

- Charles Wazerell's work in Oracle on SQL.

- A good example of using a hardware coprocessor for MIPS CorExtend / UDI is User Defined Instructions. Adding instructions to the processor for quick lexical analysis, with a state machine inside the coprocessor and maintaining state between individual instructions. Background since IBM / 360 translate test and CDC STAR.

- Using a hardware coprocessor to pre-clean the data stream before applying machine learning algorithms to it.

- Game Rogue, Scientific American in the states and the USSR.

- Summer School of Young Programmers in Novosibirsk and mosquitoes in it (according to my memories and stories of colleagues Charles Weatherly)

- How Charles spent 36 hours in Moscow and two weeks in St. Petersburg. Hermitage. In St. Petersburg universities, he did not give lectures.

- He suggested Charles to go to a summer school at MIET / Zelenograd in July or somewhere else in the fall (Moscow State University? MIPT? ITMO?).

- Education for schoolchildren and younger students. The need to exit the template (for example, sequential programming) and learning Verilog on the FPGA as one way to exit such a template.

- The use of microcircuits with a small degree of integration before FPGA exercises, so that a schoolchild or student intuitively understands that Verilog code is a description of an electronic circuit, not a program (a chain of instructions).

- An example for RTL on the FPGA for the summer school at MIET / Zelenograd in July is a self-learning finite state machine that calculates the opponent’s trends in the game “stone paper scissors”.

- Another example is the competition of finite state machines (animals) that move a player to a goal on a map (globe). Objects on the map have a “smell” - positive (food) or negative (electricity that can strike). Designing a card in FPGA, output and sprite of a player on VGA using the scan generation module.

Here we examined the recent disputes on Habré about OOP. Charles is campaigning for both OOP and functional programming, where applicable. I showed Charles an example I saw in two projects of unsuccessful class design for representing nodes of a parsing tree and optimizations on this tree , after which Charles said that of course, tree transformation algorithms should not be scattered into small classes in this way, but instead, the parsing tree should be quickly throw in a control flow graph, on which to use table driven transformations based on static single assignment, with a few exceptions. Charles enlightened me about the work of the Muchnik, Bob Morgan, and Randy Allen on vectorization:

Then I told Charles that the day after tomorrow we will be in the companyseminar in Las Vegas at an electronics design automation conference , and I need his advice on a good example of a coprocessor based on the CorExtend / UDI protocol - User Defined Instructions. This protocol is used in MIPS cores. CorExtend / UDI allows you to embed a block in the processor that decodes and executes additional instructions to the main system of instructions that can be determined by the system designer on a chip. The block can be synthesized and become part of the microcircuit or be configured in the FPGA / FPGA.

Additional instructions move along the processor pipeline along with the main ones. They receive data from general registers visible to the programmer and can return the result to the register. These instructions can also save some state in the coprocessor. They can be killed by exceptions if an exception occurs, for example, in the pipeline following this instruction: The

day after tomorrow, in a presentation at a seminar, I am going to use an example with a simple convolution instruction for a neural network. But the acceleration achieved in this case is not impressive - only twice. Is it possible to make a better example?

Charles immediately came up with a much better example: hardware lexical analysis. A state machine can be placed in the coprocessor, which will determine the numbers, identifiers and comments in the text stream. It will save by storing state between individual commands that transmit text from registers to the machine. The result of the current analysis (marked up text) will also be returned to the register.

Charles also told me the story of the instructions for parsing text since IBM / 360 translate test and CDC STAR. He also told me that such a coprocessor can be used for machine learning, for pre-cleaning the data stream before applying machine learning algorithms to it.

Then I told Charles Saga how a group of engineers and teachers translated and implemented in various Russian universities the textbook of David Harris and Sarah Harris “Digital circuitry and architecture of a computer” (see posts on Habr. 1 , 2 ,3). Now, with the combined efforts of MIET, RUSNANO, teachers of MEPhI and other universities, we are planning a summer school at MIET where advanced schoolchildren project video games on the FPGA with output to a graphic screen (section Between Physics and Programming ). For this, ideas from the book Designing Video Game Hardware in Verilog by Steven Hugg, December 15, 2018 are used:

Games can be developed both in the form of purely hardware finite state machines, or in a combination of hardware graphics on FPGAs with programs on a simple schoolMIPS processor core, which is described in the posts of Stanislav Zhelnio on Habr and the wiki on schoolMIPS on GitHub . On FPGAs, it’s pretty easy to do scan generation for VGA, display the map from the memory and moving sprites with figures:

Charles suggested, in addition to games with tanks and races, to make a competition of finite automata (animals) that move the player to the target on the map (globe). Objects on the map have a “smell” - positive (food) or negative (electricity that can strike). Pupils can write finite machines on veril that see the environment, embed them in code that draws graphics and supports the map, and then compete with who has the code better:

You can use LFSR hardware blocks to generate pseudo-random behavior elements:

In the end, Charles left two autographs - for Russian readers (I borrowed a Russian book from Sergei Vakulenko) and readers in our company Wave Computing, from whose internal library I borrowed the original book in English:

Only registered users can participate in the survey. Please come in.

If Charles Weatherly is invited to Russia, then where should he speak first of all?

- 8.7% at MSU 10

- 22.8% At MIPT 26

- 4.3% in Skolkovo 5

- 14% In Zelenograd 16

- 12.2% of ITMO 14

- 28.9% In Siberia 33

- 8.7% Elsewhere (indicate in the comments) 10