Beyond Moore: who else formulated the laws of scaling computer systems

We discuss two more rules that are also beginning to lose relevance.

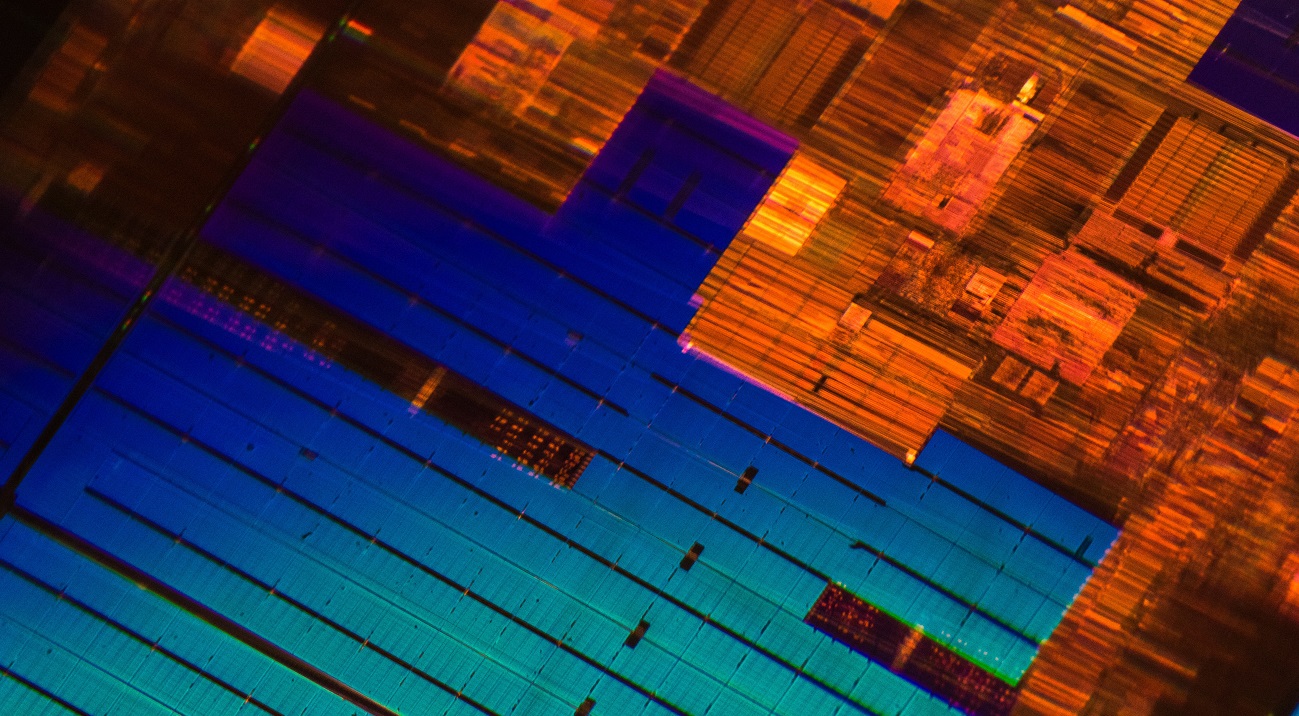

Photo: Laura Ockel / Unsplash

Moore's law was formulated more than fifty years ago, and for most of that time it has held true. Even today, each transition from one process node to the next roughly doubles the density of transistors on a chip. The problem is that the pace at which new process nodes are developed is slowing down.

For example, Intel postponed mass production of its 10-nanometer Ice Lake processors for a long time. Although the company will begin shipping devices next month, the architecture was announced about two and a half years ago. Also, last August the integrated circuit manufacturer GlobalFoundries, which worked with AMD, stopped developing its 7-nm process technology (we discussed the reasons for this decision in our blog on Habré).

Journalists and executives of large IT companies have been predicting the death of Moore's law for years. Even Gordon Moore himself once declared that the rule he formulated would cease to apply. However, Moore's law is not the only rule that processor manufacturers have used as a benchmark and that is now losing relevance.

Dennard's scaling law

It was formulated in 1974 by Robert Dennard, the engineer who invented dynamic random-access memory (DRAM), together with his colleagues at IBM. The rule is as follows:

"By reducing the size of the transistor and increasing the processor clock speed, we can easily increase its performance."

Dennard's rule established the shrinking of feature size (the process node) as the main indicator of progress in the microprocessor industry. However, Dennard scaling stopped working around 2006. The number of transistors on chips keeps growing, but this no longer translates into a significant increase in device performance.

For example, representatives of TSMC (a semiconductor manufacturer) say that moving from the 7-nm process to 5-nm will raise processor clock speeds by only 15%.

The slowdown in frequency growth is caused by leakage currents, which Dennard did not take into account in the 1970s. As transistors shrink and frequency rises, the current heats the chip more strongly, which can damage it. Manufacturers therefore have to balance the power dissipated by the processor. As a result, since 2006 the clock frequency of mass-market chips has plateaued at around 4-5 GHz.
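To see why the frequency hit a wall, it helps to look at the textbook form of Dennard scaling, which the article does not spell out. A common way to express it uses the dynamic (switching) power of CMOS logic, P = C·V²·f. The sketch below assumes Dennard's idealized factors: shrinking linear dimensions by a factor k divides capacitance and voltage by k, multiplies frequency by k, and divides area by k². It compares that ideal case with one where supply voltage can no longer be lowered, which is what leakage forces in practice. It models only the voltage floor, not the leakage power itself, and the function names and the choice k = 1.4 are illustrative assumptions.

```python
def dynamic_power(capacitance: float, voltage: float, frequency: float) -> float:
    """Switching (dynamic) power of CMOS logic: P = C * V^2 * f."""
    return capacitance * voltage ** 2 * frequency


def power_density_after_shrink(k: float, scale_voltage: bool) -> float:
    """Power density after a linear shrink by factor k, relative to a
    baseline where C, V, f and area are all normalized to 1.

    Idealized Dennard assumptions (illustrative): capacitance scales by 1/k,
    frequency by k, area by 1/k**2, and voltage by 1/k only if scale_voltage.
    """
    capacitance = 1.0 / k
    voltage = 1.0 / k if scale_voltage else 1.0
    frequency = k
    area = 1.0 / k ** 2
    return dynamic_power(capacitance, voltage, frequency) / area


if __name__ == "__main__":
    k = 1.4  # assumed: roughly one node step (about 0.7x linear shrink)
    print(f"ideal Dennard scaling: {power_density_after_shrink(k, True):.2f}x")
    print(f"voltage cannot shrink: {power_density_after_shrink(k, False):.2f}x")
```

Under ideal scaling the power density stays at 1.00x, so each shrink allowed higher clocks "for free". Once voltage stops scaling, the same step raises power density by k² (1.96x here), which is why frequencies had to stop climbing to keep heat under control.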

Photo: Jason Leung / Unsplash

Today, engineers are working on new technologies that could solve this problem and increase the performance of microchips. For example, researchers from Australia are developing a metal-air transistor capable of operating at frequencies of several hundred gigahertz. The transistor consists of two metal electrodes that act as the source and drain and are separated by 35 nm. Electrons pass between them through field emission.

According to the developers, their device will make it possible to stop "chasing" ever-smaller process nodes and to focus instead on building high-performance 3D structures with a large number of transistors per chip.

Koomey's law

It was formulated in 2011 by Stanford professor Jonathan Koomey. Together with colleagues from Microsoft, Intel and Carnegie Mellon University, he analyzed data on the energy consumption of computing systems going back to the ENIAC computer, built in 1946. He reached the following conclusion:

"The number of computations per kilowatt-hour of energy at peak load doubles every year and a half."

He also noted that the energy consumption of computers has grown over the years.

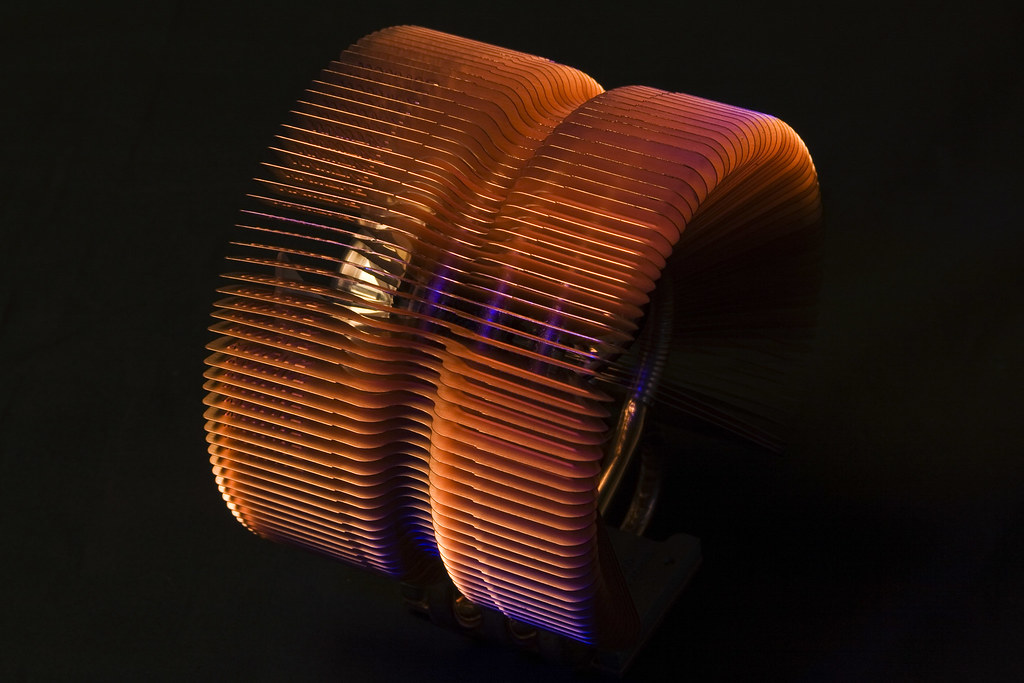

In 2015, Koomey returned to his work and updated the study with new data. He found that the trend he had described had slowed down: the average chip performance per kilowatt-hour of energy now doubled roughly every three years. The change was caused by the difficulty of cooling chips (page 4): as transistors shrink, it becomes harder to remove heat.
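The difference between doubling every 1.5 years and every three years is larger than it sounds, because the growth is exponential. The sketch below compares the two doubling periods cited above over a ten-year window. It assumes each period stays constant for the whole decade, and the function name is a hypothetical helper for illustration.

```python
def efficiency_gain(years: float, doubling_period_years: float) -> float:
    """Growth factor in computations per kWh after `years`, assuming
    efficiency doubles every `doubling_period_years` (held constant)."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    for period in (1.5, 3.0):
        gain = efficiency_gain(10, period)
        print(f"doubling every {period} years -> about {gain:.0f}x per decade")
```

With a 1.5-year doubling period a decade brings roughly a 100-fold improvement (about 102x), while a three-year period yields only about 10x over the same ten years.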

Photo: Derek Thomas / CC BY-ND

New chip cooling technologies are under development, but it is too early to talk about their mass adoption. For example, researchers from a university in New York have proposed using 3D laser printing to apply a thin heat-conducting layer of titanium, tin and silver to the die. This material conducts heat 7 times better than other thermal interface materials (thermal paste and polymers).

Despite all these factors, according to Koomey, the theoretical limit of energy efficiency is still far away. He cites physicist Richard Feynman, who noted back in 1985 that processor energy efficiency could improve by a factor of 100 billion. As of 2011, it had improved by only a factor of about 40 thousand.
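A rough way to gauge "still far away" is to ask how many more doublings fit between the two figures above. The sketch below assumes both numbers are improvement factors measured from the same baseline and that the doubling period stays constant; that is a deliberate simplification, so the result is an extrapolation rather than a forecast. The function name is a hypothetical helper for illustration.

```python
import math


def years_to_limit(limit_factor: float, achieved_factor: float,
                   doubling_period_years: float) -> float:
    """Years until efficiency reaches `limit_factor`, given it has already
    improved by `achieved_factor` and keeps doubling every
    `doubling_period_years` (a naive extrapolation)."""
    remaining = limit_factor / achieved_factor
    doublings = math.log2(remaining)
    return doublings * doubling_period_years


if __name__ == "__main__":
    feynman_limit = 1e11  # 100 billion, as cited in the article
    achieved_2011 = 4e4   # about 40 thousand by 2011, as cited in the article
    print(f"remaining headroom: {feynman_limit / achieved_2011:.1e}x")
    for period in (1.5, 3.0):
        years = years_to_limit(feynman_limit, achieved_2011, period)
        print(f"doubling every {period} years: ~{years:.0f} more years")
```

The remaining headroom is a factor of about 2.5 million, or roughly 21 doublings: about 32 years at the original pace, or about 64 years at the slower pace Koomey observed in 2015.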

The IT industry is accustomed to rapid growth in computing power, so engineers are looking for ways to extend Moore's law and overcome the limitations described by Koomey's and Dennard's rules. In particular, companies and research institutes are searching for a replacement for traditional transistor technology and silicon. We'll talk about some of the possible alternatives next time.
