Backup, Part 2: Overview and Testing rsync-based backup tools

This article continues the backup series:

- Backup, part 1: Why do you need a backup, an overview of methods, technologies

- Backup, Part 2: Overview and Testing rsync-based backup tools

- Backup, Part 3: Overview and Testing duplicity, duplicati

- Backup, Part 4: Overview and Testing zbackup, restic, borgbackup

- Backup, Part 5: Testing Bacula and Veeam Backup for Linux

- Backup, Part 6: Comparing Backup Tools

- Backup, Part 7: Conclusions

As we already wrote in the first article, there are a very large number of backup programs based on rsync.

Of those that are most suitable for our conditions, I will consider 3: rdiff-backup, rsnapshot and burp.

Test File Sets

Test file sets will be the same for all candidates, including future articles.

The first set: 10 GB of media files plus about 50 MB of PHP source code for the site. File sizes range from a few kilobytes for the source code to tens of megabytes for the media files. The goal is to simulate a site with static content.

The second set: obtained from the first by renaming a subdirectory that holds 5 GB of media files. The goal is to study how the backup system behaves when a directory is renamed.

The third set: obtained from the first by deleting 3 GB of media files and adding 3 GB of new media files. The goal is to study how the backup system behaves during a typical site update.
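The way the second and third sets are derived can be sketched at a toy scale. This is only an illustration under assumptions: the directory names (`src`, `media_a`, `media_b`, `media_renamed`), the file counts and the file sizes are hypothetical and were not used in the actual tests.

```python
import os
import tempfile
from pathlib import Path


def make_first_set(root: Path, media_files: int = 6, size: int = 1024) -> None:
    """Create a tiny stand-in for the first set: PHP sources plus media files."""
    (root / "src").mkdir(parents=True)
    (root / "src" / "index.php").write_text("<?php echo 'hello';\n")
    for sub in ("media_a", "media_b"):
        (root / sub).mkdir()
        for i in range(media_files // 2):
            (root / sub / f"{i}.bin").write_bytes(os.urandom(size))


def to_second_set(root: Path) -> None:
    """Second set: rename one media subdirectory, contents untouched."""
    (root / "media_a").rename(root / "media_renamed")


def to_third_set(root: Path, size: int = 1024) -> None:
    """Third set: delete some media files and add the same number of new ones."""
    victims = sorted((root / "media_b").glob("*.bin"))
    for path in victims:
        path.unlink()
    for i in range(len(victims)):
        (root / "media_b" / f"new_{i}.bin").write_bytes(os.urandom(size))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        site = Path(tmp) / "site"
        make_first_set(site)
        to_second_set(site)
        to_third_set(site)
        for item in sorted(p.relative_to(site).as_posix() for p in site.rglob("*") if p.is_file()):
            print(item)
```

The transformations work in place, so a backup can be taken after each step.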

Getting Results

Each backup is run at least 3 times, and each run is accompanied by dropping the file system caches with the commands sync and echo 3 > /proc/sys/vm/drop_caches on both the test server and the backup server. Monitoring software, netdata, is installed on the server that is the source of the backups; it is used to assess the load that the backup process puts on that server.
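A minimal sketch of a cache reset followed by a timed run might look like the following. It is an assumption about how such a helper could be written, not the script used for the tests: the function names are hypothetical, the cache reset needs root, and it only affects the machine it runs on, so the backup server would need the same reset separately.

```python
import subprocess
import sys
import time
from pathlib import Path

DROP_CACHES = Path("/proc/sys/vm/drop_caches")


def drop_caches() -> None:
    """Flush dirty pages, then drop the page cache, dentries and inodes (root only)."""
    subprocess.run(["sync"], check=True)
    DROP_CACHES.write_text("3\n")


def timed_run(command: list[str], reset_caches: bool = True) -> float:
    """Run one backup command and return its wall-clock duration in seconds."""
    if reset_caches:
        drop_caches()
    started = time.monotonic()
    subprocess.run(command, check=True)
    return time.monotonic() - started


if __name__ == "__main__":
    # Harmless placeholder command; a real run would pass the backup tool's command line.
    seconds = timed_run([sys.executable, "-c", "pass"], reset_caches=False)
    print(f"run took {seconds:.3f} s")
```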

I also assume that the backup storage server is slower than the main server but has larger disks with a relatively low random write speed, which is the most common situation for backups. Since the backup server should not carry any load other than the backups themselves, I will not monitor it with netdata.

The servers on which I test the various backup systems have also changed.

They now have the following characteristics:

CPU


sysbench --threads=2 --time=30 --cpu-max-prime=20000 cpu run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 2

Initializing random number generator from current time

Prime numbers limit: 20000

Initializing worker threads...

Threads started!

CPU speed:

events per second: 1081.62

General statistics:

total time: 30.0013s

total number of events: 32453

Latency (ms):

min: 1.48

avg: 1.85

max: 9.84

95th percentile: 2.07

sum: 59973.40

Threads fairness:

events (avg/stddev): 16226.5000/57.50

execution time (avg/stddev): 29.9867/0.00

RAM, reading

sysbench --threads=4 --time=30 --memory-block-size=1K --memory-scope=global --memory-total-size=100G --memory-oper=read memory run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 4

Initializing random number generator from current time

Running memory speed test with the following options:

block size: 1KiB

total size: 102400MiB

operation: read

scope: global

Initializing worker threads...

Threads started!

Total operations: 104857600 (5837637.63 per second)

102400.00 MiB transferred (5700.82 MiB/sec)

General statistics:

total time: 17.9540s

total number of events: 104857600

Latency (ms):

min: 0.00

avg: 0.00

max: 66.08

95th percentile: 0.00

sum: 18544.64

Threads fairness:

events (avg/stddev): 26214400.0000/0.00

execution time (avg/stddev): 4.6362/0.12

RAM, writing

sysbench --threads=4 --time=30 --memory-block-size=1K --memory-scope=global --memory-total-size=100G --memory-oper=write memory run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 4

Initializing random number generator from current time

Running memory speed test with the following options:

block size: 1KiB

total size: 102400MiB

operation: write

scope: global

Initializing worker threads...

Threads started!

Total operations: 91414596 (3046752.56 per second)

89272.07 MiB transferred (2975.34 MiB/sec)

General statistics:

total time: 30.0019s

total number of events: 91414596

Latency (ms):

min: 0.00

avg: 0.00

max: 1022.90

95th percentile: 0.00

sum: 66430.91

Threads fairness:

events (avg/stddev): 22853649.0000/945488.53

execution time (avg/stddev): 16.6077/1.76

Disk on the data source server

sysbench --threads=4 --file-test-mode=rndrw --time=60 --file-block-size=4K --file-total-size=1G fileio run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 4

Initializing random number generator from current time

Extra file open flags: (none)

128 files, 8MiB each

1GiB total file size

Block size 4KiB

Number of IO requests: 0

Read/Write ratio for combined random IO test: 1.50

Periodic FSYNC enabled, calling fsync() each 100 requests.

Calling fsync() at the end of test, Enabled.

Using synchronous I/O mode

Doing random r/w test

Initializing worker threads...

Threads started!

File operations:

reads/s: 4587.95

writes/s: 3058.66

fsyncs/s: 9795.73

Throughput:

read, MiB/s: 17.92

written, MiB/s: 11.95

General statistics:

total time: 60.0241s

total number of events: 1046492

Latency (ms):

min: 0.00

avg: 0.23

max: 14.45

95th percentile: 0.94

sum: 238629.34

Threads fairness:

events (avg/stddev): 261623.0000/1849.14

execution time (avg/stddev): 59.6573/0.00

Disk on the backup storage server

sysbench --threads=4 --file-test-mode=rndrw --time=60 --file-block-size=4K --file-total-size=1G fileio run

sysbench 1.0.17 (using system LuaJIT 2.0.4)

Running the test with following options:

Number of threads: 4

Initializing random number generator from current time

Extra file open flags: (none)

128 files, 8MiB each

1GiB total file size

Block size 4KiB

Number of IO requests: 0

Read/Write ratio for combined random IO test: 1.50

Periodic FSYNC enabled, calling fsync() each 100 requests.

Calling fsync() at the end of test, Enabled.

Using synchronous I/O mode

Doing random r/w test

Initializing worker threads...

Threads started!

File operations:

reads/s: 11.37

writes/s: 7.58

fsyncs/s: 29.99

Throughput:

read, MiB/s: 0.04

written, MiB/s: 0.03

General statistics:

total time: 73.8868s

total number of events: 3104

Latency (ms):

min: 0.00

avg: 78.57

max: 3840.90

95th percentile: 297.92

sum: 243886.02

Threads fairness:

events (avg/stddev): 776.0000/133.26

execution time (avg/stddev): 60.9715/1.59

Network speed between servers

iperf3 -c backup

Connecting to host backup, port 5201

[ 4] local x.x.x.x port 59402 connected to y.y.y.y port 5201

[ ID] Interval Transfer Bandwidth Retr Cwnd

[ 4] 0.00-1.00 sec 419 MBytes 3.52 Gbits/sec 810 182 KBytes

[ 4] 1.00-2.00 sec 393 MBytes 3.30 Gbits/sec 810 228 KBytes

[ 4] 2.00-3.00 sec 378 MBytes 3.17 Gbits/sec 810 197 KBytes

[ 4] 3.00-4.00 sec 380 MBytes 3.19 Gbits/sec 855 198 KBytes

[ 4] 4.00-5.00 sec 375 MBytes 3.15 Gbits/sec 810 182 KBytes

[ 4] 5.00-6.00 sec 379 MBytes 3.17 Gbits/sec 765 228 KBytes

[ 4] 6.00-7.00 sec 376 MBytes 3.15 Gbits/sec 810 180 KBytes

[ 4] 7.00-8.00 sec 379 MBytes 3.18 Gbits/sec 765 253 KBytes

[ 4] 8.00-9.00 sec 380 MBytes 3.19 Gbits/sec 810 239 KBytes

[ 4] 9.00-10.00 sec 411 MBytes 3.44 Gbits/sec 855 184 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bandwidth Retr

[ 4] 0.00-10.00 sec 3.78 GBytes 3.25 Gbits/sec 8100 sender

[ 4] 0.00-10.00 sec 3.78 GBytes 3.25 Gbits/sec receiver

Testing methodology

- A file system with the first test set is prepared on the test server and, if necessary, the repository is initialized on the backup storage server. The backup is started and its duration is measured.

- The files on the test server are migrated to the second test set. The backup is started and its duration is measured.

- The test server is migrated to the third test set. The backup is started and its duration is measured.

- The resulting third test set is taken as the new first set; the first three steps are repeated 2 more times.

- The data is entered into a summary table, and the netdata graphs are added.

- A report is prepared for each backup method separately.
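The procedure above can be sketched as a small driver loop. This is a sketch under assumptions: `run_cycles`, the step names and the callables are hypothetical, and the real preparation and backup commands would be plugged in where the placeholders are.

```python
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_cycles(
    steps: List[Step],
    backup: Callable[[], float],
    cycles: int = 3,
) -> Dict[str, List[float]]:
    """Apply each data-set step, back up after it, and collect durations per set."""
    results: Dict[str, List[float]] = {name: [] for name, _ in steps}
    for _ in range(cycles):
        for name, prepare in steps:
            prepare()                        # bring the test server to this set
            results[name].append(backup())   # measured backup duration, seconds
    return results


if __name__ == "__main__":
    log: List[str] = []
    demo_steps = [(name, lambda n=name: log.append(n)) for name in ("first", "second", "third")]
    # The placeholder backup returns a constant; a real one would time the tool.
    table = run_cycles(demo_steps, backup=lambda: 1.0)
    for set_name, durations in table.items():
        print(set_name, durations)
```

Each key of the returned dictionary corresponds to one row of the timing tables, and each list entry to one column.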

Expected results

Since all 3 candidates are based on the same technology (rsync), the results are expected to be close to those of plain rsync, including all of its advantages, namely:

- Files in the repository will be stored "as is".

- The repository will grow only by the difference between backups.

- There will be a relatively high load on the network during data transfer and a low load on the processor.

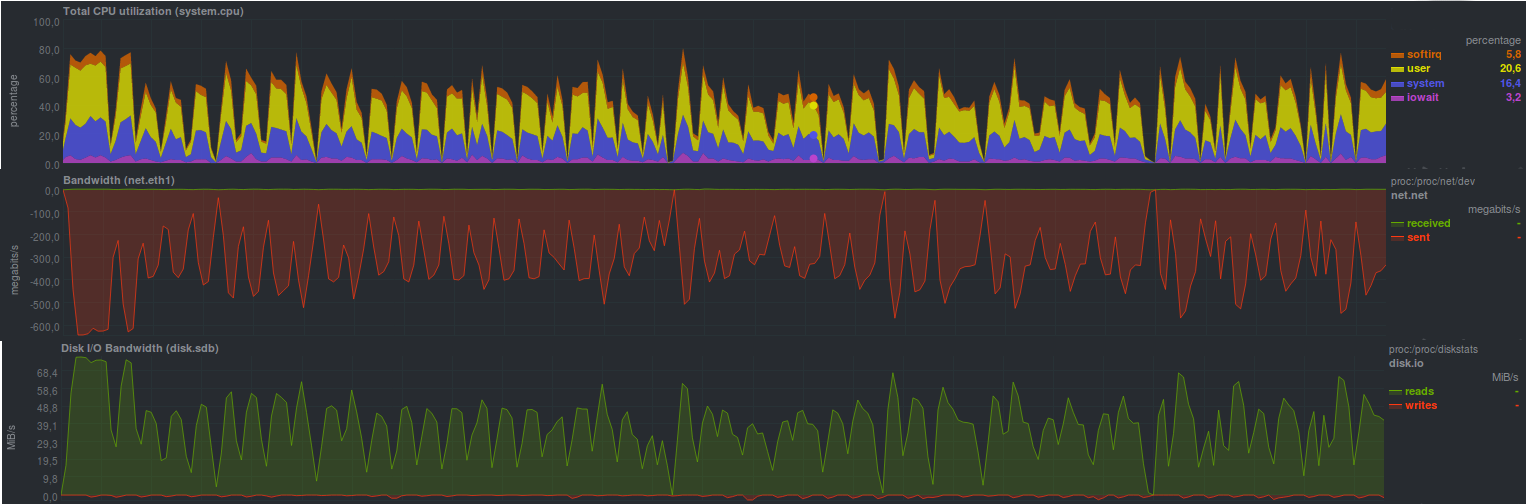

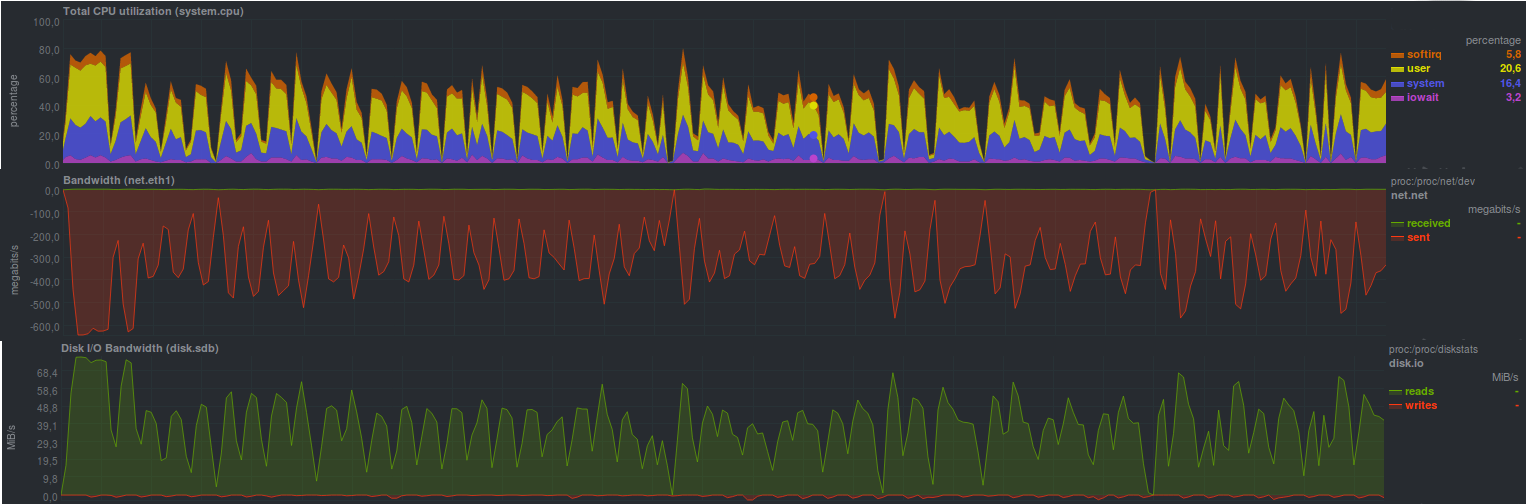

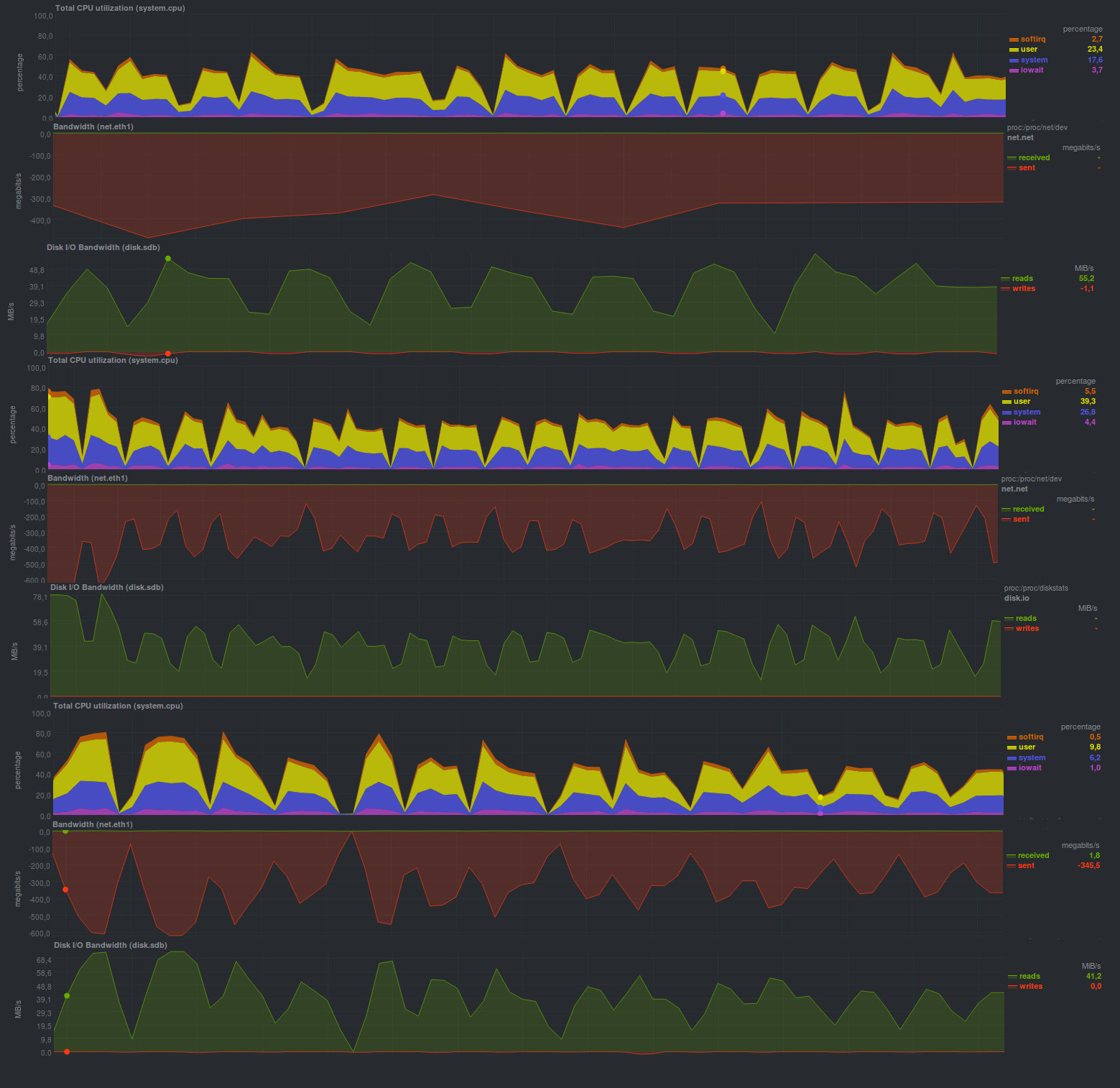

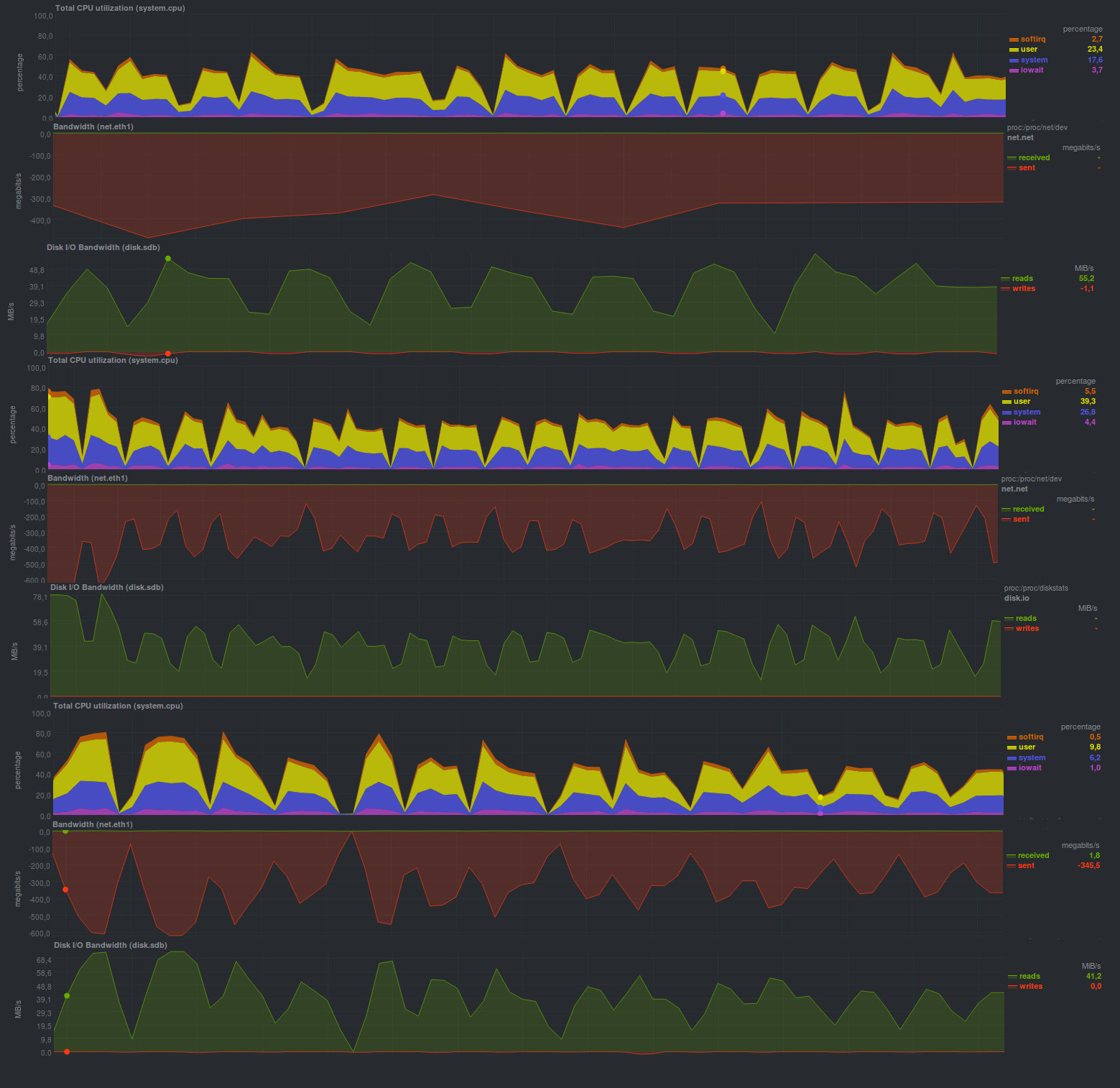

A test run of plain rsync will be used as the reference. Its results are as follows.

The bottleneck was the HDD-based disk on the backup storage server, which is clearly visible in the graphs as a sawtooth pattern.

Data was copied in 4 minutes and 15 seconds.
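A quick calculation shows how far the reference run is from saturating the link. It assumes that the 4 minutes 15 seconds refer to the full first set (about 10 GB plus 50 MB), treats 1 GB as 1024 MiB, and uses the 3.25 Gbit/s measured by iperf3.

```python
def mib_per_second(size_gb: float, seconds: float) -> float:
    """Average throughput in MiB/s, treating 1 GB as 1024 MiB."""
    return size_gb * 1024 / seconds


data_gb = 10 + 50 / 1024          # ~10 GB of media plus ~50 MB of sources (assumed)
rsync_seconds = 4 * 60 + 15       # 4m15s from the reference run
link_mib_s = 3.25e9 / 8 / 2**20   # iperf3 result, 3.25 Gbit/s, converted to MiB/s

effective = mib_per_second(data_gb, rsync_seconds)
print(f"rsync effective rate: {effective:.1f} MiB/s")
print(f"link capacity:        {link_mib_s:.1f} MiB/s")
print(f"link utilisation:     {effective / link_mib_s:.0%}")
```

Under these assumptions the copy averages about 40 MiB/s, roughly a tenth of what the network can carry, which is consistent with the disk on the backup server being the limiting factor.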


Testing rdiff-backup

The first candidate is rdiff-backup, a Python script that backs up one directory to another. The current backup is stored “as is”, while earlier backups are stored incrementally in a special subdirectory, which saves space.

We will test the typical mode of operation: the client initiates the backup on its own, and on the server side a process is launched to receive the data.
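The idea of keeping the latest copy whole and storing older versions as increments can be illustrated with a toy reverse delta. This is not rdiff-backup's actual delta format or algorithm; it swaps in `difflib.SequenceMatcher` from the Python standard library, and the function names are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, Tuple, Union

Op = Union[Tuple[str, int, int], Tuple[str, bytes]]


def reverse_delta(new: bytes, old: bytes) -> List[Op]:
    """Describe how to rebuild `old` from `new`, storing only the bytes `new` lacks."""
    ops: List[Op] = []
    matcher = SequenceMatcher(None, new, old, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            ops.append(("copy", i1, i2))      # reuse a range of the current file
        else:
            ops.append(("data", old[j1:j2]))  # bytes that exist only in the old version
    return ops


def apply_delta(new: bytes, ops: List[Op]) -> bytes:
    """Rebuild the old version from the current file and the stored delta."""
    out = bytearray()
    for op in ops:
        if op[0] == "copy":
            out += new[op[1]:op[2]]
        else:
            out += op[1]
    return bytes(out)


if __name__ == "__main__":
    current = b"<?php echo 'hello, new world';"
    previous = b"<?php echo 'hello, world';"
    delta = reverse_delta(current, previous)
    print(apply_delta(current, delta) == previous)
```

With this layout the newest version can be restored by a plain copy, while older versions need the current file plus the chain of stored deltas.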

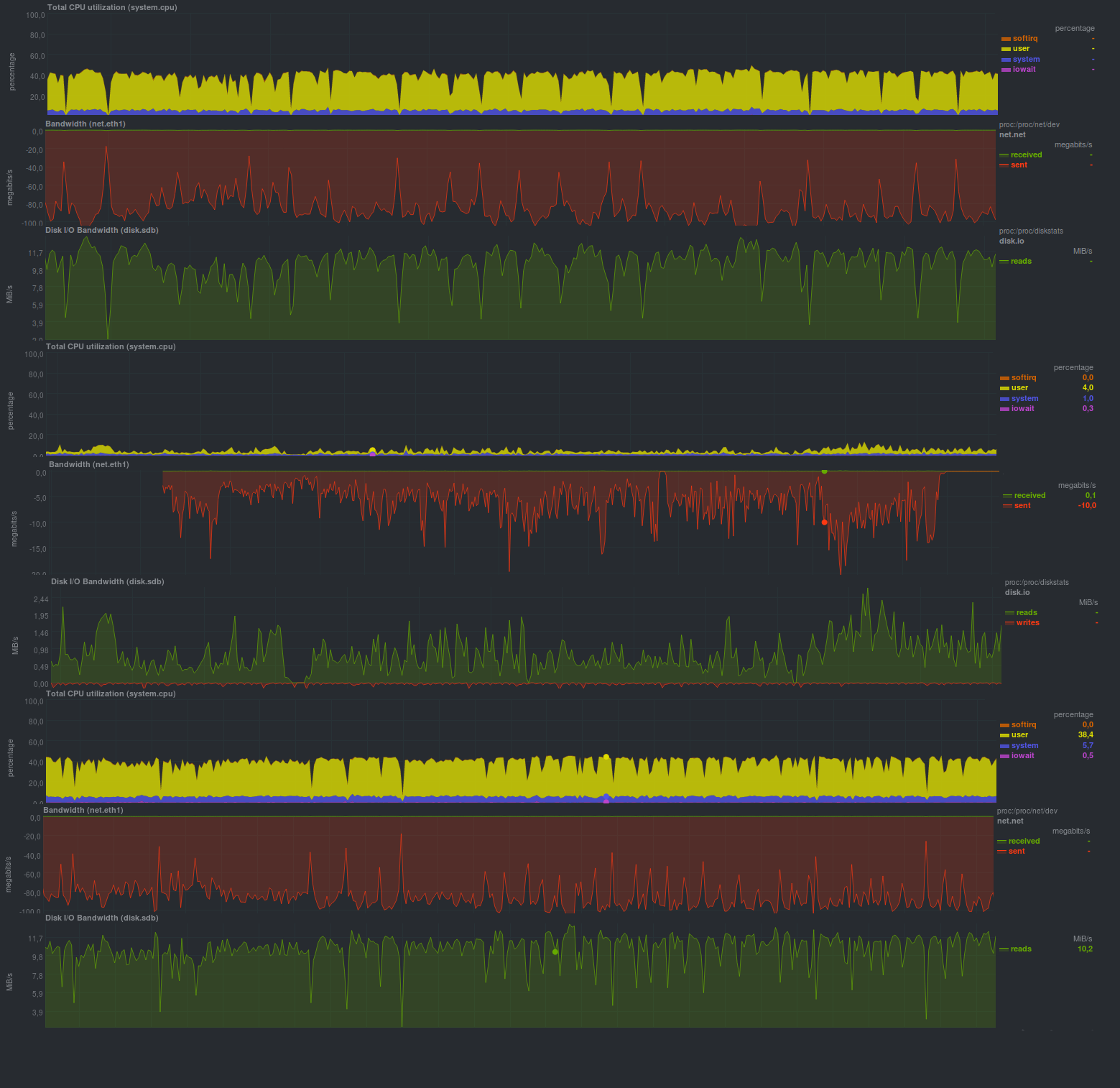

Let's see what it is capable of in our conditions.

The running time of each test run:

| | First run | Second run | Third run |
|---|---|---|---|
| First set | 16m32s | 16m26s | 16m19s |
| Second set | 2h5m | 2h10m | 2h8m |
| Third set | 2h9m | 2h10m | 2h10m |

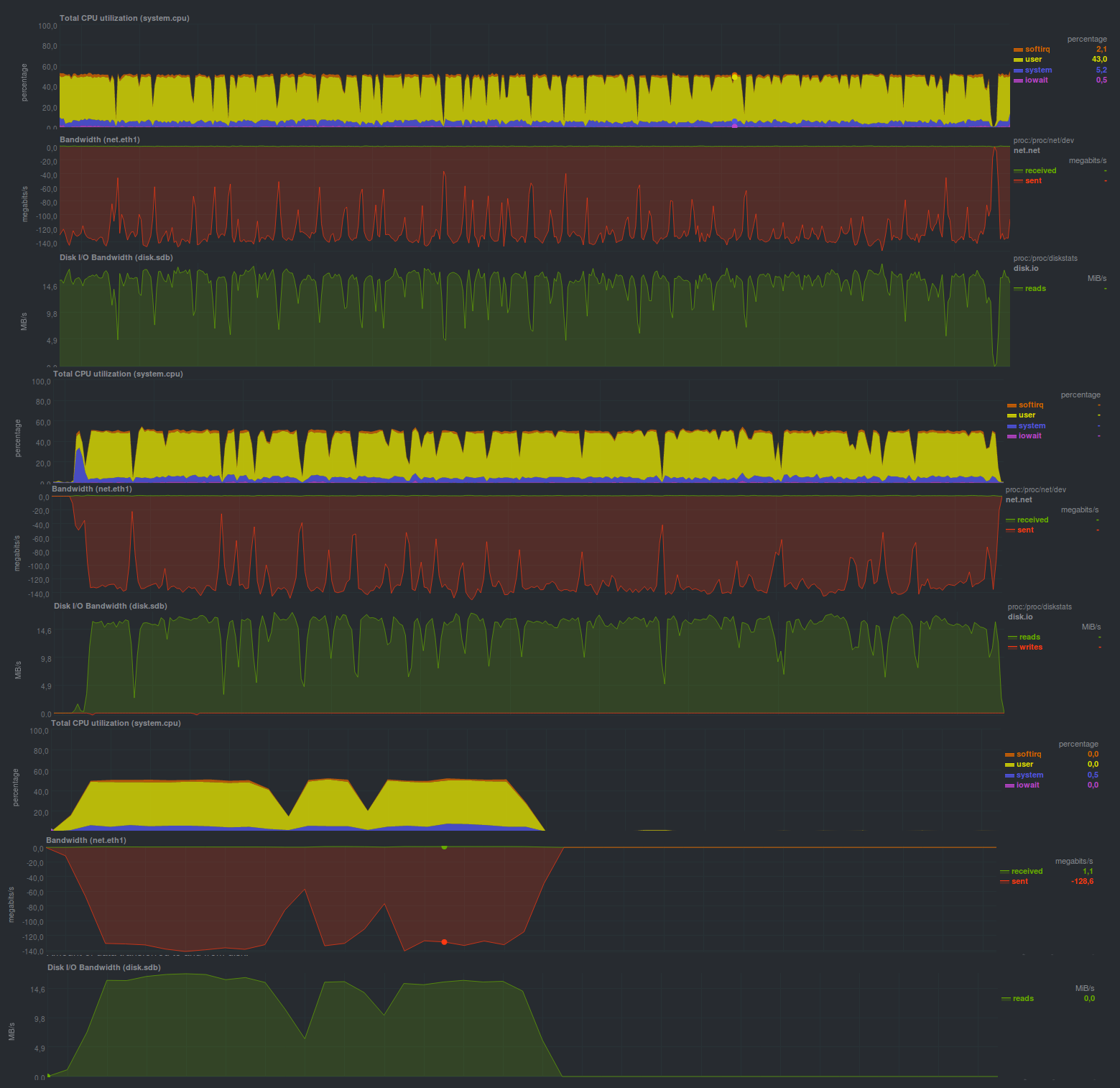

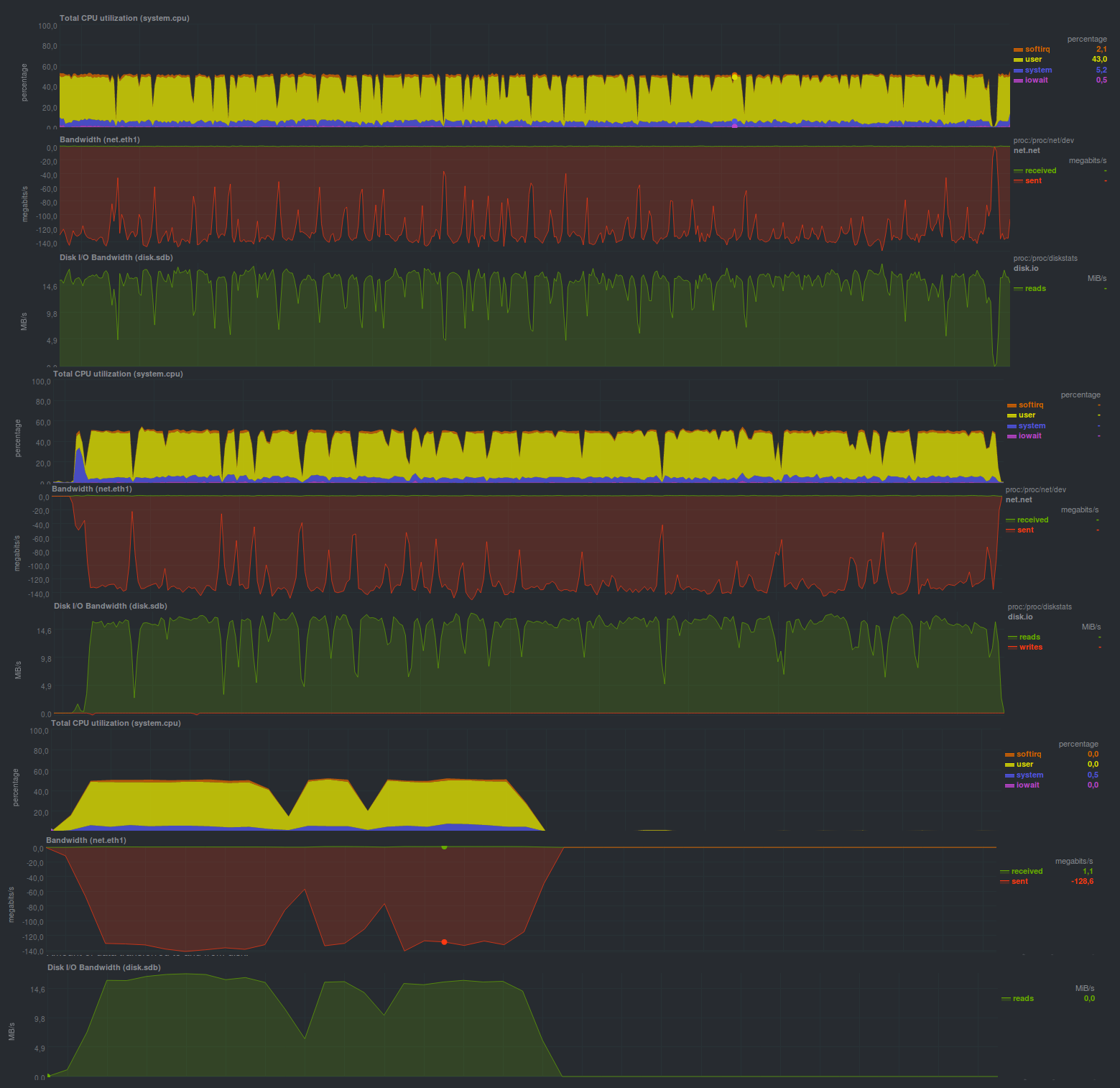

rdiff-backup reacts very badly to any large change in the data, and it also does not fully utilize the network.

Testing rsnapshot

The second candidate, rsnapshot, is a Perl script whose main requirement for working efficiently is hard link support, which it uses to save disk space: files that have not changed since the previous backup are hard-linked to the existing copy instead of being stored again.

The logic of the backup process is also inverted: the server itself actively “visits” its clients and pulls the data.
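The hard link approach described above can be sketched in a few lines. This is an illustration of the technique only, not rsnapshot's implementation: the `snapshot` function and the directory names are hypothetical, and files are compared byte by byte for simplicity.

```python
import filecmp
import os
import shutil
import tempfile
from pathlib import Path
from typing import Optional


def snapshot(source: Path, dest: Path, previous: Optional[Path] = None) -> None:
    """Copy source into dest, hard-linking files that match the previous snapshot."""
    dest.mkdir(parents=True, exist_ok=True)
    for path in source.rglob("*"):
        rel = path.relative_to(source)
        target = dest / rel
        if path.is_dir():
            target.mkdir(parents=True, exist_ok=True)
            continue
        target.parent.mkdir(parents=True, exist_ok=True)
        old = previous / rel if previous else None
        if old and old.is_file() and filecmp.cmp(path, old, shallow=False):
            os.link(old, target)        # unchanged: share the inode, no extra space
        else:
            shutil.copy2(path, target)  # new or changed: store a full copy


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        site = base / "site"
        site.mkdir()
        (site / "logo.png").write_bytes(b"unchanged")
        (site / "index.php").write_text("v1")
        snapshot(site, base / "daily.1")
        (site / "index.php").write_text("v2")
        snapshot(site, base / "daily.0", previous=base / "daily.1")
        same = os.stat(base / "daily.0" / "logo.png").st_ino == os.stat(base / "daily.1" / "logo.png").st_ino
        print("logo.png shared between snapshots:", same)
```

Every snapshot directory looks like a complete copy, yet unchanged files occupy disk space only once. A file that was merely moved to a different path has no match at its new location in the previous snapshot and is copied in full.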

The test results are as follows:

| | First run | Second run | Third run |
|---|---|---|---|
| First set | 4m22s | 4m19s | 4m16s |
| Second set | 2m6s | 2m10s | 2m6s |
| Third set | 1m18s | 1m10s | 1m10s |

It worked very, very fast, much faster than rdiff-backup and very close to pure rsync.

Testing burp

Another option is burp, a C implementation built on top of librsync. It has a client-server architecture with client authorization, as well as a web interface (not included in the base package). Another interesting feature is the ability to take backups without giving clients the right to restore them.

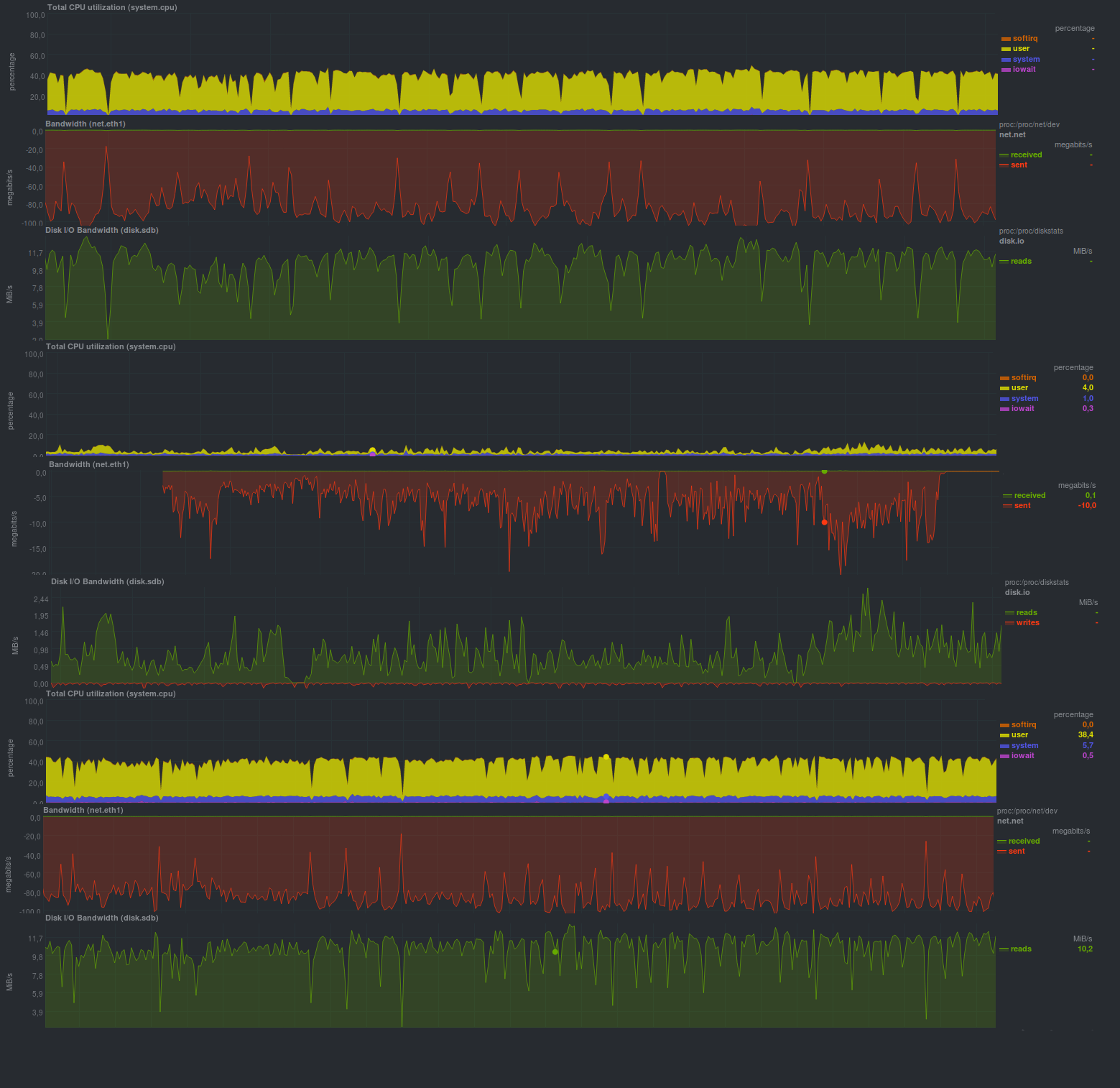

Let's look at the performance.

| | First run | Second run | Third run |
|---|---|---|---|
| First set | 11m21s | 11m10s | 10m56s |
| Second set | 5m37s | 5m40s | 5m35s |
| Third set | 3m33s | 3m24s | 3m40s |

It ran roughly 2.5 to 3 times slower than rsnapshot, which is still quite fast and certainly faster than rdiff-backup. The graphs are slightly sawtooth-shaped: performance is again limited by the disk subsystem of the backup storage server, although this is less pronounced than with rsnapshot.
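To compare the candidates against the plain rsync reference, the durations from the tables can be converted to seconds and divided by the reference time. The sketch below uses the first run on the first set for each tool and assumes that the 4m15s reference also refers to the first set; `to_seconds` is a hypothetical helper.

```python
import re


def to_seconds(text: str) -> int:
    """Convert durations written like '2h5m' or '16m32s' into seconds."""
    units = {"h": 3600, "m": 60, "s": 1}
    parts = re.findall(r"(\d+)([hms])", text)
    return sum(int(value) * units[unit] for value, unit in parts)


REFERENCE = to_seconds("4m15s")  # plain rsync run

first_set = {
    "rdiff-backup": "16m32s",
    "rsnapshot": "4m22s",
    "burp": "11m21s",
}

for tool, duration in first_set.items():
    ratio = to_seconds(duration) / REFERENCE
    print(f"{tool}: {ratio:.2f}x the rsync reference")
```

On these numbers rsnapshot lands within a few percent of plain rsync, while burp and rdiff-backup take several times longer.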

Results

The repositories of all candidates were approximately the same size: they grew first to 10 GB, then to 15 GB, then to 18 GB, and so on, which is a consequence of how rsync works. It is also worth noting that all candidates are single-threaded (CPU utilization is about 50% on a dual-core machine). All 3 candidates made it possible to restore the latest backup “as is”, that is, to restore files without any third-party programs, including those used to create the repositories. This, too, is inherited from rsync.
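The growth pattern follows directly from the test sets, as a short calculation shows. It assumes that a renamed directory is treated as new files and that deleted files remain in the older backups; `repository_sizes` is a hypothetical helper.

```python
def repository_sizes(initial_gb: float, changes_gb: list[float]) -> list[float]:
    """Cumulative repository size when each backup adds only the changed data."""
    sizes = [initial_gb]
    for delta in changes_gb:
        sizes.append(sizes[-1] + delta)
    return sizes


# First set: 10 GB. The renamed 5 GB directory is seen as new files: +5 GB.
# Third set: 3 GB of new media files: +3 GB (deleted files stay in older backups).
print(repository_sizes(10, [5, 3]))  # prints [10, 15, 18]
```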

Conclusions

The more complex the backup system and the more features it has, the slower it works. Still, for not very demanding projects any of them will do, except possibly rdiff-backup.

Announcement

This note continues the backup series.

- Backup, Part 1: Why do you need a backup, an overview of methods and technologies

- Backup, Part 2: Overview and testing of rsync-based backup tools

- Backup, Part 3: Overview and testing of duplicity, duplicati

- Backup, Part 4: Overview and testing of zbackup, restic, borgbackup

- Backup, Part 5: Testing Bacula and Veeam Backup for Linux

- Backup: at the request of readers, a review of AMANDA, UrBackup, BackupPC

- Backup, Part 6: Comparing backup tools

- Backup, Part 7: Conclusions

Authors: Finnix