Bluetooth LE is not so scary, or How to improve the user experience without much effort

Recently, our team designed and implemented a feature for transferring money over the air using Bluetooth LE. I want to tell you how we did it and what tools Apple gives us. Many developers think Bluetooth is difficult, because it is a rather low-level protocol and there are not many experts in it. But it is not that scary, and in fact it is very simple to use! And the features you can build on Bluetooth LE are certainly interesting and will make your application stand out among competitors.

Let's first understand what kind of technology this is and how it differs from classic Bluetooth.

What is Bluetooth LE?

Why did the Bluetooth developers call this technology Low Energy, of all things? After all, power consumption had already dropped several times over with each new version of Bluetooth. The answer lies in this battery.

Its diameter is only 2 cm, and its capacity is about 220 mAh. When engineers developed Bluetooth LE, they wanted a device with such a battery to work for several years. And they did it! Bluetooth LE devices with such a battery can work for a year. How many of you still turn off Bluetooth on your phone the old-fashioned way to save energy, as you did in 2000? You do this in vain: the savings amount to less than 10 seconds of phone use per day. And you disable a lot of functionality, such as Handoff, AirDrop and others.

What did the engineers achieve by developing Bluetooth LE? Did they refine the classic protocol? Make it more energy efficient? Simply optimize every process? No. They completely redesigned the architecture of the Bluetooth stack, so that a device now needs less time on the air, occupying the channel, to be visible to all other devices. This, in turn, saves a good deal of energy. The new architecture also allows any new device to be standardized, so developers from all over the world can communicate with it and easily write new applications to manage it. In addition, the principle of self-discovery is built into the architecture: when connecting to a device, you do not need to enter any PIN codes, and if your application can communicate with this device,

- Less time on air.

- Less power consumption.

- New architecture.

- Reduced connection time.

How did engineers manage to make such a huge leap in energy efficiency?

The frequency remained the same: 2.4 GHz, unlicensed and free to use in many countries. But the connection delay became shorter: 15-30 ms instead of 100 ms with classic Bluetooth. The working distance remained the same: 100 m. The transmission interval changed, though not by much: instead of 0.625 ms, it became 3 ms.

But these changes alone could not reduce energy consumption tenfold. Of course, something had to suffer, and that was speed: instead of 24 Mbps, it became 0.27 Mbps. You will probably say that this is a ridiculous speed for 2018.

Where is Bluetooth LE used?

This technology is not young: it first appeared in the iPhone 4s and has already conquered many areas. Bluetooth LE is used across smart home devices and wearable electronics. There are now even chips the size of a coffee bean.

And how is this technology applied in software?

Since Apple was the first to integrate Bluetooth LE into their devices and start using it, by now they have made good progress and built the technology into their ecosystem. You can now meet it in services such as AirDrop, Quick Start, password sharing and Handoff. Even the notifications on the watch are delivered via Bluetooth LE. In addition, Apple has published documentation on how to get notifications from all applications delivered to your own devices.

What are the roles of devices within Bluetooth LE?

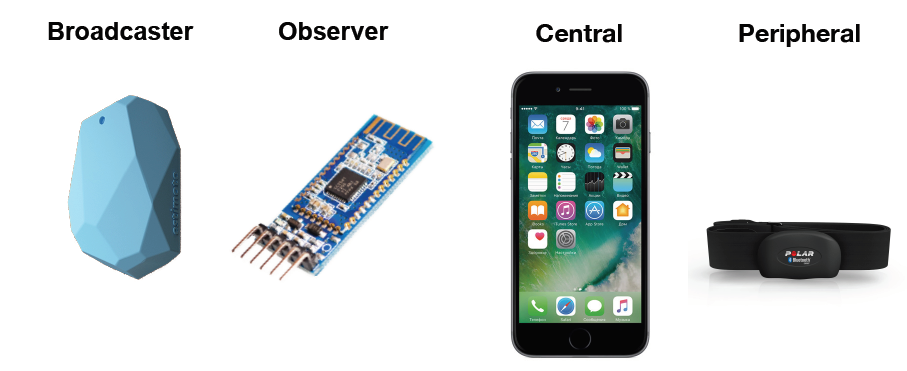

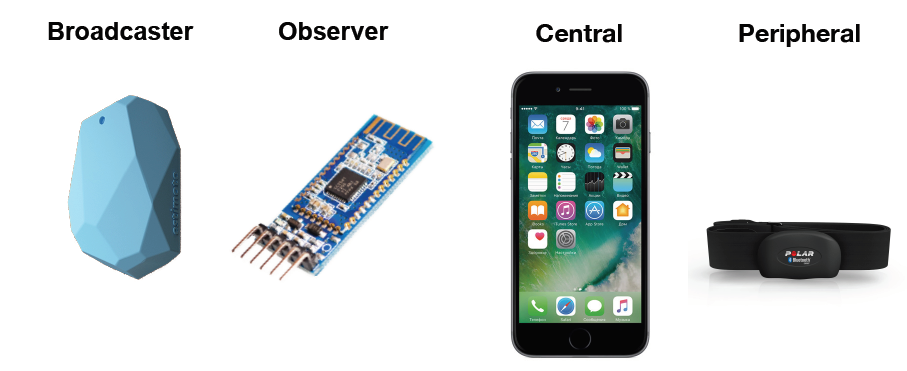

Broadcaster. Sends messages to everyone who is nearby; you cannot connect to this device. iBeacons and indoor navigation work on this principle.

Observer. Listens to what is happening around it and receives data only from public messages. Does not create connections.

Central and Peripheral are more interesting. Why weren't they simply called Client and Server? Judging by the names, that would seem logical. But no.

Because the Peripheral actually acts as the server. It is a peripheral device that consumes less power and connects to the more powerful Central. The Peripheral can announce that it is nearby and which services it has. Only one device can connect to it, and the Peripheral holds some data. The Central can scan the air in search of devices, send connection requests, connect to any number of devices, and read, write and subscribe to data on the Peripheral.

What do we as developers have access to in the Apple ecosystem?

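What is available to us?

iOS / macOS:

- Peripheral and Central.

- Background mode.

- State restoration.

- Connection interval 15 ms.

watchOS / tvOS:

- watchOS 4+ / tvOS 9+.

- Central only.

- Maximum two connections.

- Apple Watch Series 2+ / Apple TV 4+.

- Shutdown when entering the background.

- Connection interval 30 ms.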

The most important difference is the connection interval. What does it affect? To answer this question, you first need to understand how the Bluetooth LE protocol works and why such a small difference in absolute values is very important.

How the protocol works

How does the search and connection process work?

The Peripheral announces its presence at the rate set by the advertisement interval. Its packet is very small and contains only a few identifiers of the services the device provides, as well as the device name. The interval can be quite large and can vary with the current status of the device, the power saving mode and other settings. Apple advises developers of external devices to tie the length of the interval to the accelerometer: increase the interval when the device is not in use, and decrease it when the device is active so that it can be found quickly. The advertisement interval does not correlate with the connection interval and is determined by the device itself, depending on power consumption and its settings. In the Apple ecosystem it is inaccessible and unknown to us; it is completely controlled by the system.

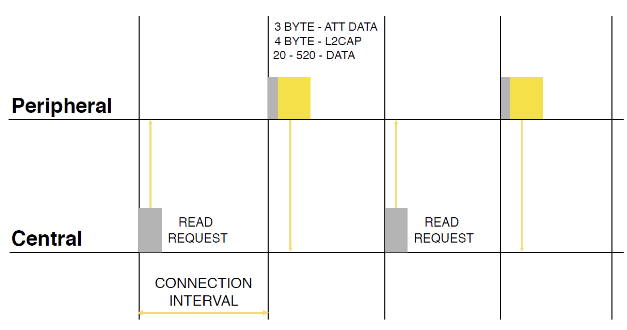

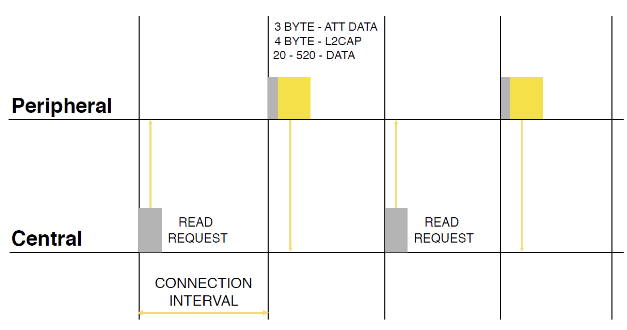

After we find the device, we send a connection request, and here the connection interval enters the scene: the time after which the second device can respond to the request. That is what happens when connecting, but what about reading and writing?

The connection interval also matters when reading data: halving it increases the data transfer rate. But you need to understand that if the two devices do not support the same interval, the larger of the two is selected.
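To make the effect of the interval concrete, here is a back-of-the-envelope sketch. It assumes a deliberately simplified model in which a fixed number of packets is exchanged per connection interval; the function name `throughput` and the 20-byte payload are illustrative choices, and real links behave less neatly.

```swift
import Foundation

/// Rough upper bound, in bytes per second, for a link that moves
/// `packetsPerInterval` packets of `payload` bytes every connection interval.
/// This is a simplified model, not a measurement.
func throughput(payload: Int, intervalMs: Double, packetsPerInterval: Int = 1) -> Double {
    return Double(payload * packetsPerInterval) * 1000.0 / intervalMs
}

let slow = throughput(payload: 20, intervalMs: 30)   // 30 ms connection interval
let fast = throughput(payload: 20, intervalMs: 15)   // 15 ms connection interval
print("30 ms:", slow, "B/s; 15 ms:", fast, "B/s")
```

Under this model the interval is the only thing that changes between the two calls, which is why such a small difference in absolute values matters so much.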

Let's look at what a packet sent by the Peripheral consists of.

The MTU (maximum transmission unit) of such a packet is determined during the connection process and varies from device to device and between operating systems. In protocol version 4.0, the MTU was about 30 bytes, and the payload did not exceed 20 bytes. In version 4.2 everything changed: now you can transfer about 520 bytes. Unfortunately, only devices newer than the iPhone 5s support this version of the protocol. The overhead is 7 bytes regardless of the MTU: this covers the ATT and L2CAP headers. Writing works in much the same way.
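Since the payload per packet is so small, anything larger has to be cut into pieces. The sketch below shows one way to do that, assuming the 7 bytes of header overhead mentioned above; the helper name `chunks(of:packetSize:)` and the 27-byte packet size are assumptions made for illustration. On a real device you would ask CoreBluetooth for the limit (for example via `maximumWriteValueLength(for:)` on `CBPeripheral`) instead of hard-coding it.

```swift
import Foundation

/// ATT + L2CAP header overhead per packet, as described in the text.
let headerOverhead = 7

/// Splits `data` into chunks that each fit into one packet.
/// `packetSize` is the full packet size, headers included.
func chunks(of data: Data, packetSize: Int) -> [Data] {
    let payloadSize = packetSize - headerOverhead
    precondition(payloadSize > 0, "packet too small to carry any payload")
    var result: [Data] = []
    var offset = 0
    while offset < data.count {
        let end = min(offset + payloadSize, data.count)
        result.append(data.subdata(in: offset..<end))
        offset = end
    }
    return result
}

// 1000 zero bytes stand in for real data; 27 - 7 leaves 20 bytes per packet.
let pieces = chunks(of: Data(count: 1_000), packetSize: 27)
print(pieces.count)
```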

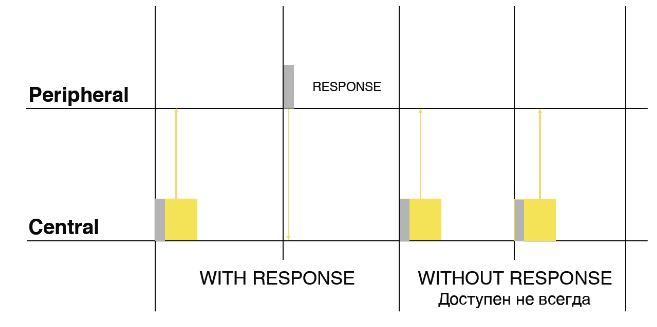

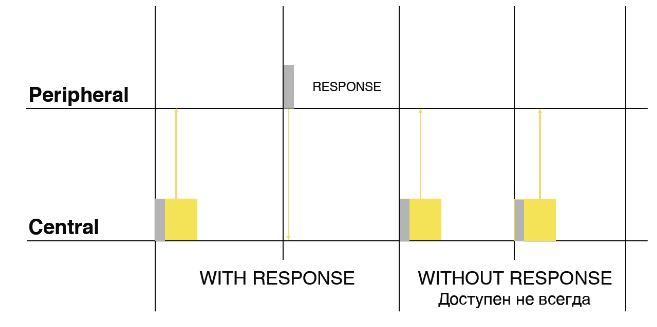

There are only two write modes: with response and without. Writing without response speeds up data transfer significantly, since there is no waiting interval before the next write. But this mode is not always available: not on all devices and not on all systems. Access to it may be limited by the system itself, because it is considered less energy-efficient. In iOS, you can check before writing whether this mode is available.
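Here is a minimal sketch of choosing between the two modes. It assumes the check in question is the `canSendWriteWithoutResponse` property of `CBPeripheral` (available since iOS 11); the function name `send(_:to:on:)` is made up for this example.

```swift
import CoreBluetooth

/// Writes `data` using the fastest mode that is currently allowed.
/// Returns false when the characteristic cannot be written at all.
func send(_ data: Data, to characteristic: CBCharacteristic, on peripheral: CBPeripheral) -> Bool {
    let properties = characteristic.properties
    if properties.contains(.writeWithoutResponse), peripheral.canSendWriteWithoutResponse {
        // No acknowledgement: faster, but the system may throttle us.
        peripheral.writeValue(data, for: characteristic, type: .withoutResponse)
        return true
    }
    if properties.contains(.write) {
        // Acknowledged write: the result arrives in
        // peripheral(_:didWriteValueFor:error:).
        peripheral.writeValue(data, for: characteristic, type: .withResponse)
        return true
    }
    return false
}
```

When the property is false, the system later calls `peripheralIsReady(toSendWriteWithoutResponse:)` on the peripheral's delegate, which is a natural place to resume a queued transfer.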

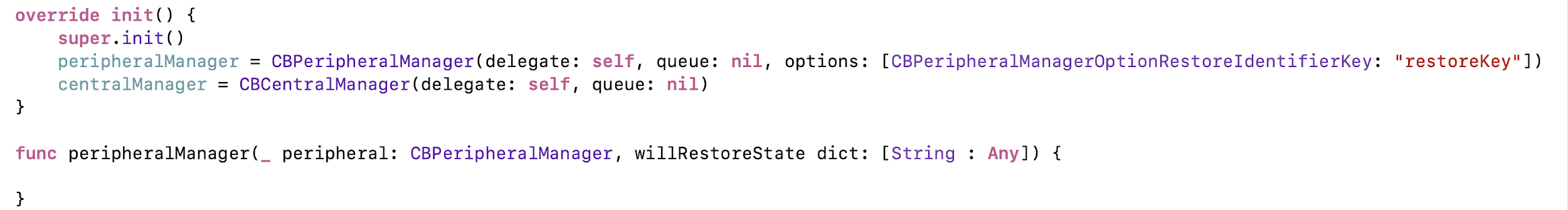

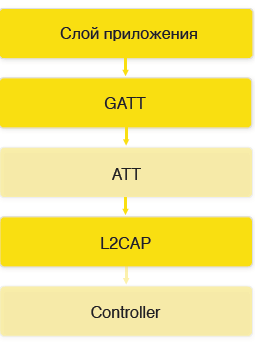

Now let's look at what the protocol consists of.

The protocol consists of 5 layers. The application layer is your logic, written on top of CoreBluetooth. GATT (Generic Attribute Profile) is used to exchange the services and characteristics that live on the devices. ATT (Attribute Protocol) is used to manage your characteristics and transfer your data. L2CAP is a low-level data exchange protocol. The Controller is the BT chip itself.

You are probably wondering what GATT is and how we can work with it.

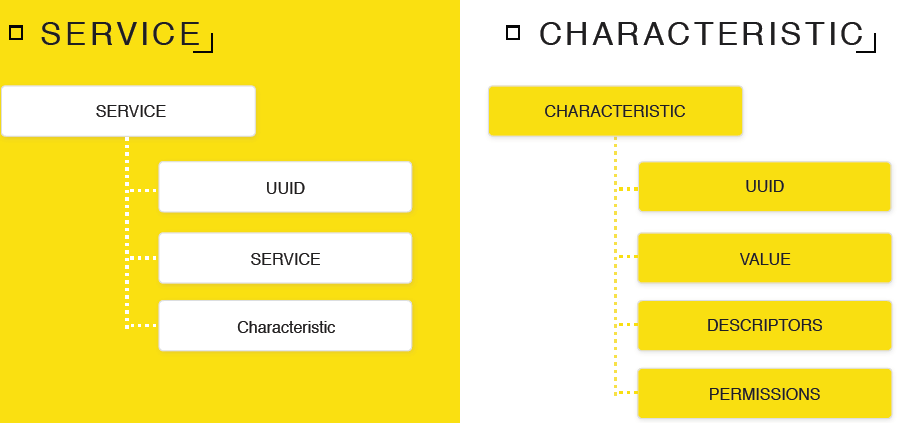

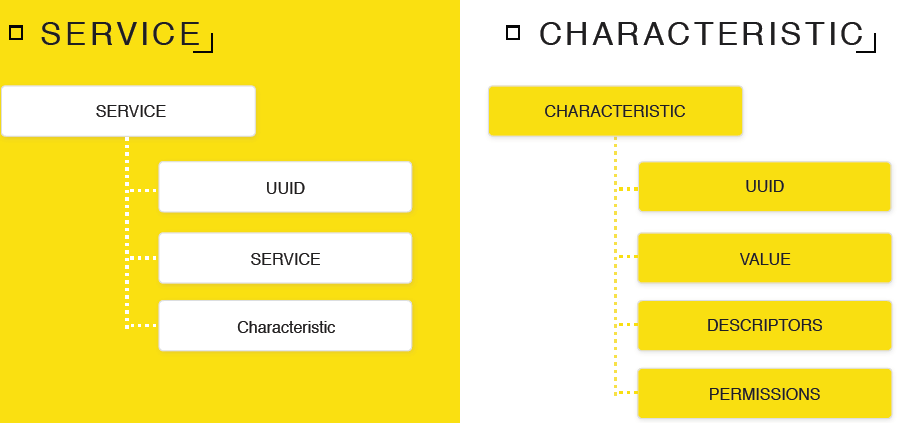

GATT consists of characteristics and services. A characteristic is an object in which your data is stored, like a variable. A service is a group in which your characteristics live, like a namespace. The service has a name, a UUID, which you choose yourself. A service may also include other services.

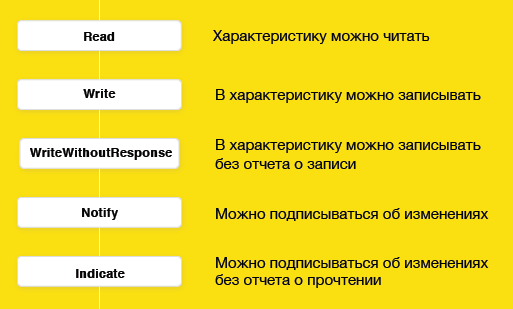

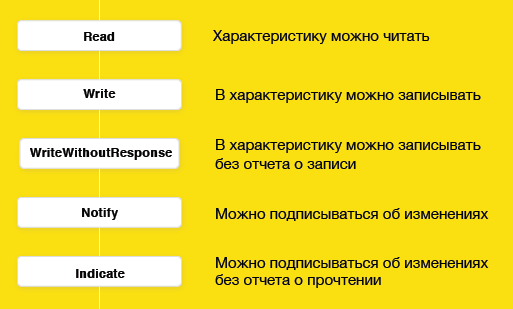

The characteristic also has its own UUID, which is effectively its name. The value of the characteristic is NSData; this is where you write and store data. Descriptors describe your characteristic: you can state what data you expect in it, or what the data means. There are many descriptors in the Bluetooth protocol, but so far only two are available on Apple systems: the human-readable description and the data format. Characteristics also have access levels (permissions):

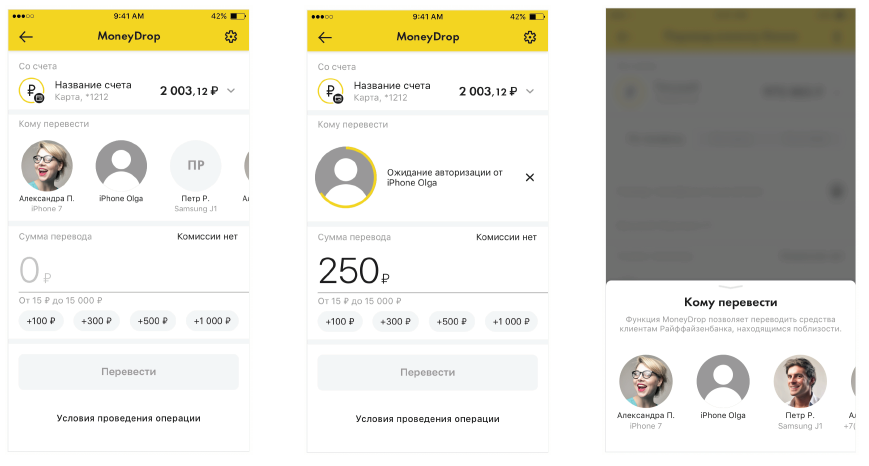

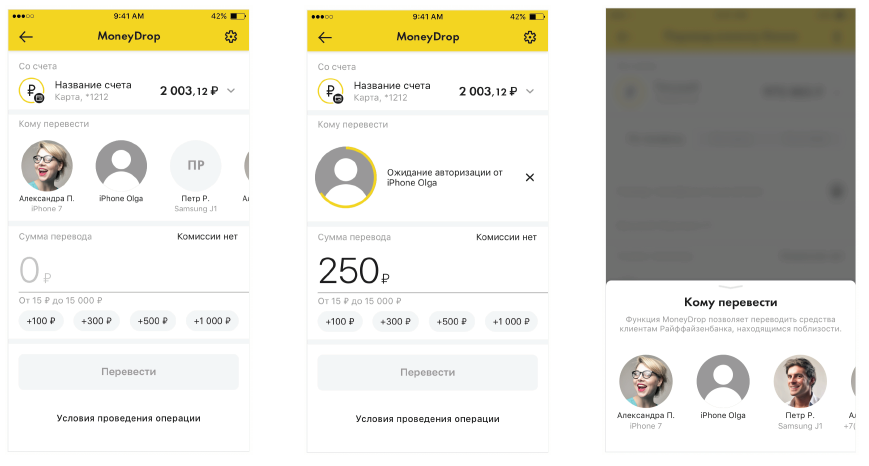

Let's try it ourselves

We had an idea to make it possible to transfer money over the air without requiring anything from the recipient. Imagine you are puzzling over a very interesting task, writing the perfect code, and a colleague suggests going for coffee. You are so absorbed in the task that you can't leave, so you ask him to buy you a cup of delicious cappuccino. He brings you the coffee, and you need to pay him back. You can transfer by phone number, which works fine. But here's an awkward situation: you don't know his number. Three years of working together, and you never exchanged numbers :)

Therefore, we decided to make it possible to transfer money to people who are nearby, without entering any user data. Like in AirDrop. Just select a user and send the amount. Let's see what we need for this.

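We need the sender to be able to:

- Find all the devices that are nearby and support our service.

- Read the details.

- Send the recipient a message that the money has been sent successfully.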

The recipient, in turn, must be able to tell nearby senders that it has a service with the necessary data, and be able to receive messages from the sender. I don't think it is worth describing how a transfer by account details works at our bank. Now let's try to implement this.

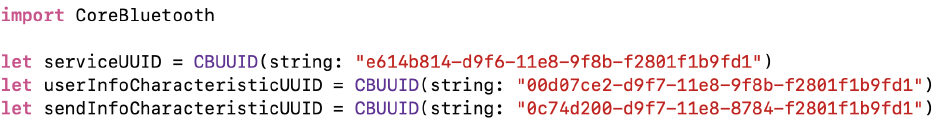

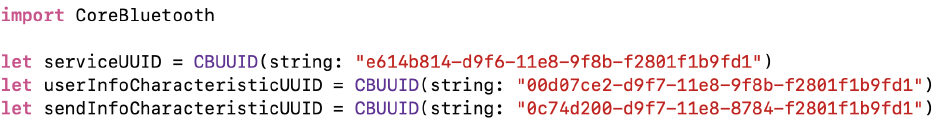

First you need to come up with names for our service and characteristics. As I said, these are UUIDs. We simply generate them and store them on both the Peripheral and the Central, so that they are the same on both devices.

You are free to use any UUIDs except those of the form XXXXXXXX-0000-1000-8000-00805F9B34FB: they are reserved for different companies. You can buy such a number yourself, and then no one else will use it. It will cost $2,500.
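As a small illustration, the sketch below hard-codes three identifiers and checks that none of them falls into the reserved range. The UUID values and the names `serviceUUID`, `detailsUUID` and `receiptUUID` are hypothetical; generate your own (for example with `uuidgen`).

```swift
import Foundation

/// Suffix shared by every UUID in the reserved range described above.
let reservedSuffix = "-0000-1000-8000-00805F9B34FB"

/// Returns true when `uuid` belongs to the reserved range.
func isReservedBluetoothUUID(_ uuid: UUID) -> Bool {
    // uuidString is always upper-case, so a plain suffix check is enough.
    return uuid.uuidString.hasSuffix(reservedSuffix)
}

// Hypothetical identifiers, generated once and shipped in both roles.
let serviceUUID = UUID(uuidString: "7A1D0001-6B2E-4C5F-9A3B-1F2E3D4C5B6A")!
let detailsUUID = UUID(uuidString: "7A1D0002-6B2E-4C5F-9A3B-1F2E3D4C5B6A")!
let receiptUUID = UUID(uuidString: "7A1D0003-6B2E-4C5F-9A3B-1F2E3D4C5B6A")!

for uuid in [serviceUUID, detailsUUID, receiptUUID] {
    precondition(!isReservedBluetoothUUID(uuid), "pick a different UUID")
}
```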

Next, we need to create the managers: one to send funds, the other to receive them. You just need to specify delegates. The Central will send, the Peripheral will receive. We create both, because the same person can be the sender at one time and the recipient at another.
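A minimal sketch of this step might look as follows. The class name `MoneyDropBluetooth` is hypothetical, and a single object serving as the delegate for both managers is just one possible layout.

```swift
import CoreBluetooth

/// Owns both roles: the Central sends money, the Peripheral receives it.
final class MoneyDropBluetooth: NSObject, CBCentralManagerDelegate, CBPeripheralManagerDelegate {
    private var central: CBCentralManager!
    private var peripheral: CBPeripheralManager!

    override init() {
        super.init()
        // A nil queue means delegate callbacks arrive on the main queue.
        central = CBCentralManager(delegate: self, queue: nil)
        peripheral = CBPeripheralManager(delegate: self, queue: nil)
    }

    // Both state callbacks are required by the delegate protocols.
    // Scanning or advertising may start only once the state is .poweredOn.
    func centralManagerDidUpdateState(_ central: CBCentralManager) {
        print("central powered on:", central.state == .poweredOn)
    }

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {
        print("peripheral powered on:", peripheral.state == .poweredOn)
    }
}
```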

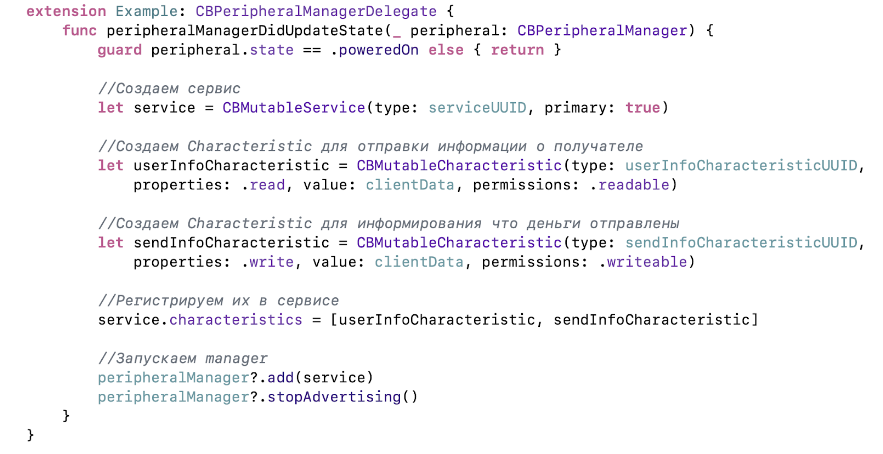

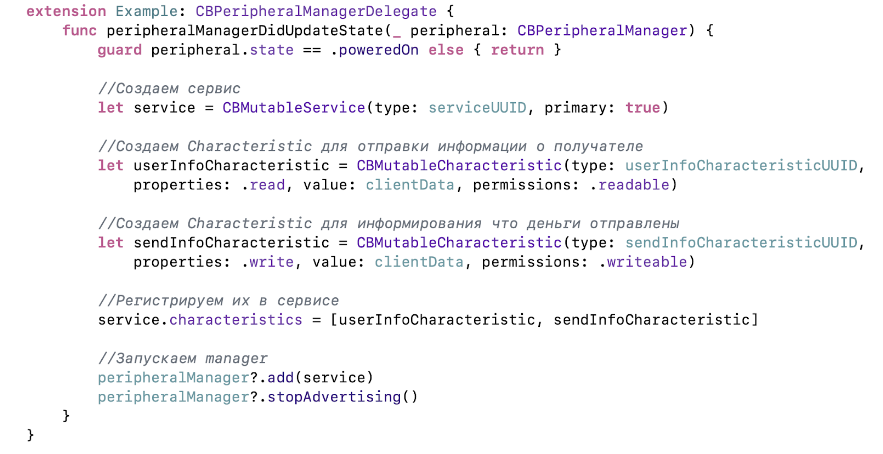

Now we need to make the recipient discoverable and write the recipient's details into our characteristic.

First, create a service. We set its UUID and mark it as primary, that is, the main service of this device. A good example is a heart rate monitor: the current heart rate is its main service, while the battery status is secondary information.

Next, we create two characteristics: one for reading the recipient's details, the other for writing, so that the recipient can learn that money has been sent. We register them in our service, add the service to the manager, then start advertising and specify the UUID of the service, so that all nearby devices can learn about our service before connecting. This data goes into the packet that the Peripheral sends while advertising.
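The steps above can be sketched like this. The UUIDs and the function names `makeService(details:)` and `publish(_:with:)` are assumptions for the example, not the names from our project.

```swift
import CoreBluetooth

// Hypothetical UUIDs; use the ones generated for your own service.
let serviceUUID = CBUUID(string: "7A1D0001-6B2E-4C5F-9A3B-1F2E3D4C5B6A")
let detailsUUID = CBUUID(string: "7A1D0002-6B2E-4C5F-9A3B-1F2E3D4C5B6A")
let receiptUUID = CBUUID(string: "7A1D0003-6B2E-4C5F-9A3B-1F2E3D4C5B6A")

/// Builds the primary service: one readable and one writable characteristic.
func makeService(details: Data) -> CBMutableService {
    // A characteristic created with a static value has to be read-only.
    let detailsCharacteristic = CBMutableCharacteristic(
        type: detailsUUID, properties: [.read], value: details, permissions: [.readable])
    // value is nil here: incoming writes are delivered to the delegate.
    let receiptCharacteristic = CBMutableCharacteristic(
        type: receiptUUID, properties: [.write], value: nil, permissions: [.writeable])

    let service = CBMutableService(type: serviceUUID, primary: true)
    service.characteristics = [detailsCharacteristic, receiptCharacteristic]
    return service
}

/// Call this once the peripheral manager reports .poweredOn.
func publish(_ service: CBMutableService, with manager: CBPeripheralManager) {
    manager.add(service)
    // Only the service UUID goes into the advertising packet.
    manager.startAdvertising([CBAdvertisementDataServiceUUIDsKey: [service.uuid]])
}
```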

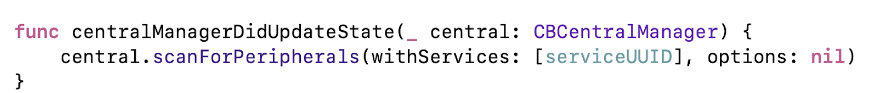

The recipient is ready, so let's move on to the sender. We start the search and connect.

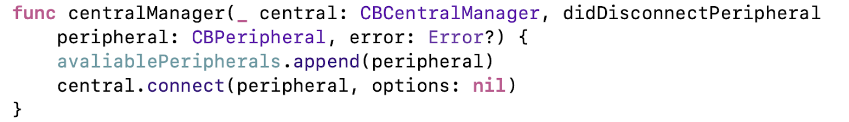

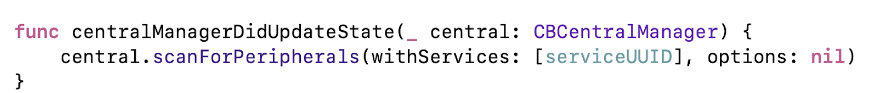

Once the manager is powered on, we start searching for devices with our service. When we find them, they arrive in a delegate method and we connect immediately. Important: you need to keep a strong reference to every Peripheral you work with, otherwise it will be deallocated and the connection will be lost.
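Here is a minimal sketch of the search and connection, assuming a hypothetical `Sender` class and service UUID. The dictionary is one simple way to hold the strong references mentioned above.

```swift
import CoreBluetooth

final class Sender: NSObject, CBCentralManagerDelegate {
    // Hypothetical UUID; it must match the one the recipient advertises.
    private let serviceUUID = CBUUID(string: "7A1D0001-6B2E-4C5F-9A3B-1F2E3D4C5B6A")
    private var central: CBCentralManager!
    // Strong references to every peripheral we are working with.
    private var recipients: [UUID: CBPeripheral] = [:]

    override init() {
        super.init()
        central = CBCentralManager(delegate: self, queue: nil)
    }

    func centralManagerDidUpdateState(_ central: CBCentralManager) {
        guard central.state == .poweredOn else { return }
        // Only peripherals advertising our service are reported.
        central.scanForPeripherals(withServices: [serviceUUID], options: nil)
    }

    func centralManager(_ central: CBCentralManager,
                        didDiscover peripheral: CBPeripheral,
                        advertisementData: [String: Any],
                        rssi RSSI: NSNumber) {
        recipients[peripheral.identifier] = peripheral
        central.connect(peripheral, options: nil)
    }
}
```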

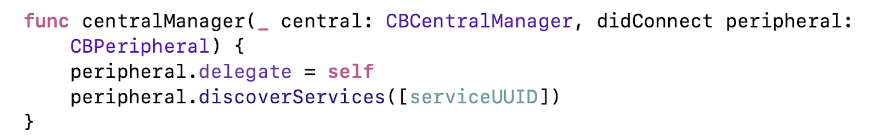

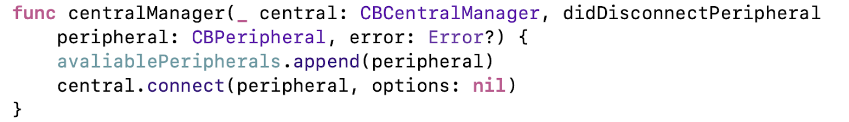

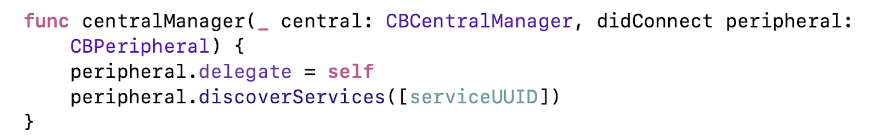

After a successful connection, we set the delegate that will work with this device and request the service we need from it.
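A sketch of this step, with a hypothetical `ConnectionHandler` class and service UUID:

```swift
import CoreBluetooth

final class ConnectionHandler: NSObject, CBCentralManagerDelegate, CBPeripheralDelegate {
    // Hypothetical UUID of our service.
    private let serviceUUID = CBUUID(string: "7A1D0001-6B2E-4C5F-9A3B-1F2E3D4C5B6A")

    func centralManagerDidUpdateState(_ central: CBCentralManager) {}

    func centralManager(_ central: CBCentralManager, didConnect peripheral: CBPeripheral) {
        // From now on, results for this device arrive via CBPeripheralDelegate.
        peripheral.delegate = self
        // Ask only for the service we care about; nil would request all of them.
        peripheral.discoverServices([serviceUUID])
    }

    func centralManager(_ central: CBCentralManager,
                        didFailToConnect peripheral: CBPeripheral,
                        error: Error?) {
        print("connection failed:", error?.localizedDescription ?? "unknown error")
    }
}
```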

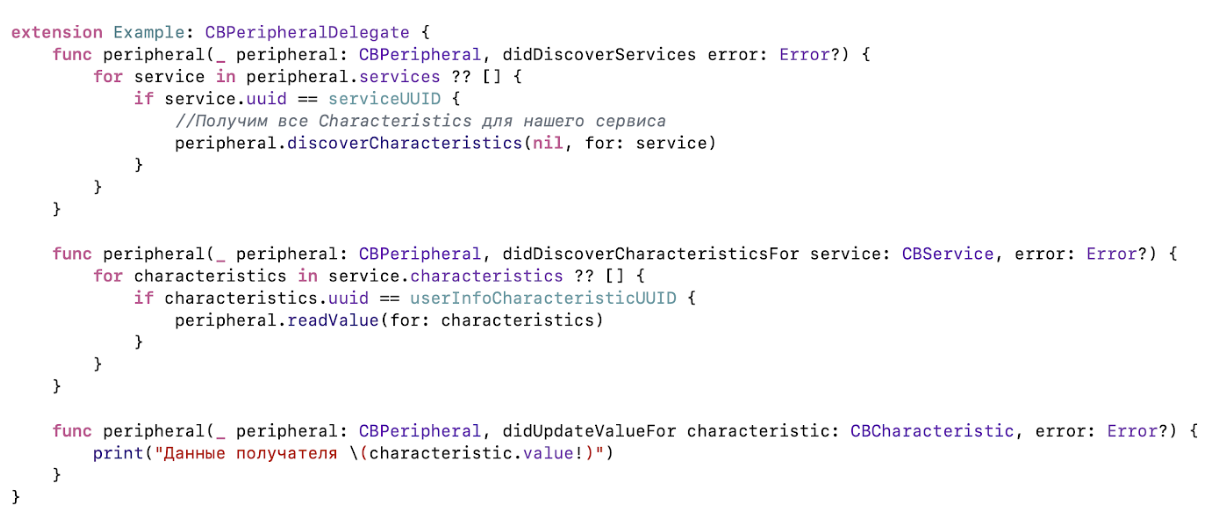

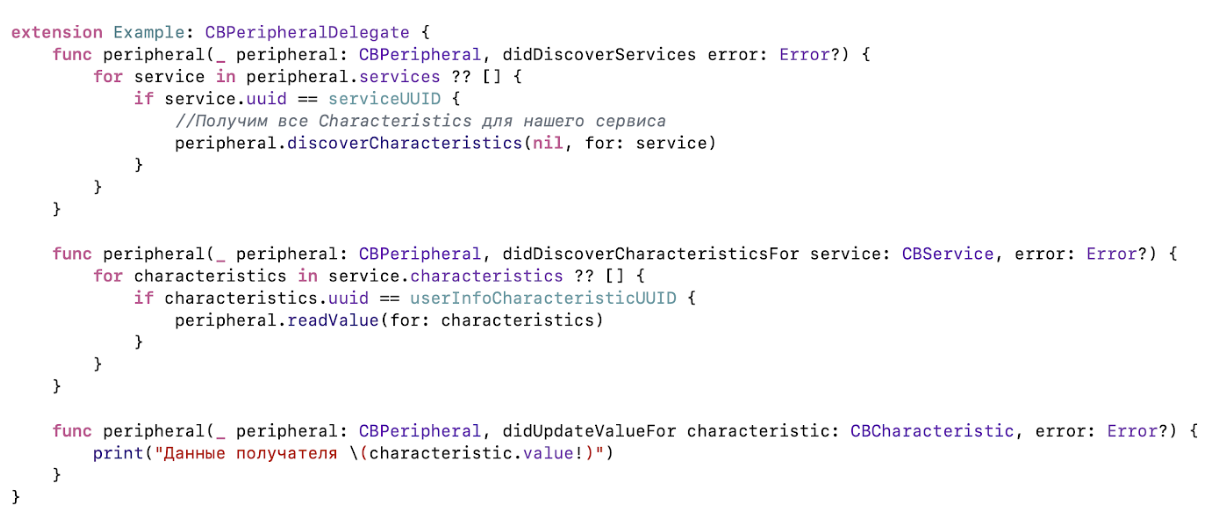

We have successfully connected to the recipient; now we need to read its details.

After connecting, we already requested the services from the device. Once they arrive, a delegate method is called with the list of services available on this device. We find the right one and request its characteristics. The result arrives in another delegate method, where we find, by UUID, the characteristic that stores the data for the transfer. We try to read it, and the value arrives, once again, in a delegate method. All services, characteristics and their values are cached by the system, so there is no need to request them again every time.
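The chain of delegate calls can be sketched like this. The class name `DetailsReader`, the `onDetails` callback and the UUIDs are assumptions made for the example.

```swift
import CoreBluetooth

final class DetailsReader: NSObject, CBPeripheralDelegate {
    // Hypothetical UUIDs of our service and of the readable characteristic.
    private let serviceUUID = CBUUID(string: "7A1D0001-6B2E-4C5F-9A3B-1F2E3D4C5B6A")
    private let detailsUUID = CBUUID(string: "7A1D0002-6B2E-4C5F-9A3B-1F2E3D4C5B6A")

    /// Called with the raw details once they have been read.
    var onDetails: ((Data) -> Void)?

    // Step 1: the services have arrived; pick ours and ask for its characteristics.
    func peripheral(_ peripheral: CBPeripheral, didDiscoverServices error: Error?) {
        guard error == nil,
              let service = peripheral.services?.first(where: { $0.uuid == serviceUUID })
        else { return }
        peripheral.discoverCharacteristics([detailsUUID], for: service)
    }

    // Step 2: the characteristics have arrived; find ours by UUID and read it.
    func peripheral(_ peripheral: CBPeripheral,
                    didDiscoverCharacteristicsFor service: CBService,
                    error: Error?) {
        guard error == nil,
              let characteristic = service.characteristics?.first(where: { $0.uuid == detailsUUID })
        else { return }
        peripheral.readValue(for: characteristic)
    }

    // Step 3: the value has arrived.
    func peripheral(_ peripheral: CBPeripheral,
                    didUpdateValueFor characteristic: CBCharacteristic,
                    error: Error?) {
        guard error == nil,
              characteristic.uuid == detailsUUID,
              let data = characteristic.value
        else { return }
        onDetails?(data)
    }
}
```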

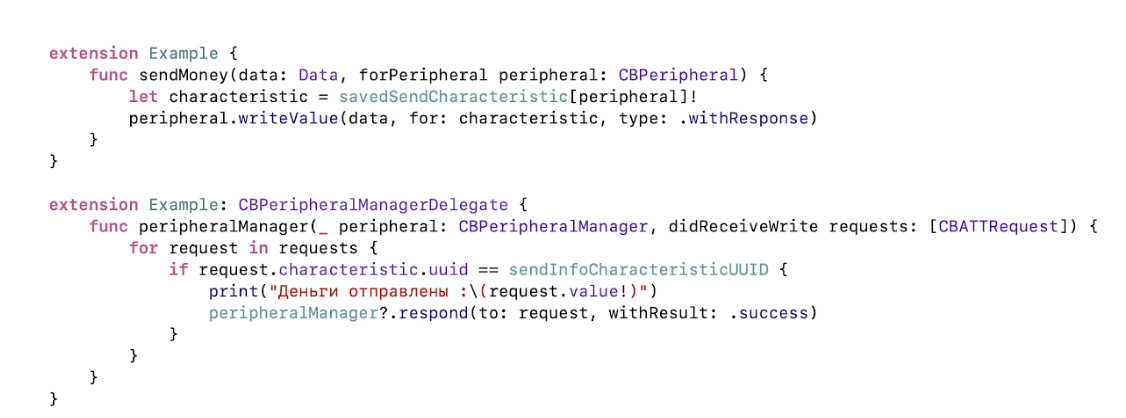

That's it, we have sent the money for coffee, and it's time to show the recipient a nice notification so that he can expect the rubles in his account. To do this, we need to implement sending a message.

We take the characteristic we need; in this case we took it from a stored value. But before that, you need to obtain it from the device, as we did earlier. Then we simply write the data to that characteristic.
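A minimal sketch of the write, assuming the characteristic was stored during discovery. The `ReceiptWriter` class and its property names are hypothetical.

```swift
import CoreBluetooth

final class ReceiptWriter: NSObject, CBPeripheralDelegate {
    /// Stored earlier, when the writable characteristic was discovered.
    var receiptCharacteristic: CBCharacteristic?

    /// Tells the recipient that the money has been sent.
    func sendReceipt(_ message: Data, to peripheral: CBPeripheral) {
        guard let characteristic = receiptCharacteristic else { return }
        peripheral.writeValue(message, for: characteristic, type: .withResponse)
    }

    // Called after a write with response completes or fails.
    func peripheral(_ peripheral: CBPeripheral,
                    didWriteValueFor characteristic: CBCharacteristic,
                    error: Error?) {
        if let error = error {
            print("write failed:", error.localizedDescription)
        }
    }
}
```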

After that, on the other device, we receive a write request in a delegate method. Here you can read the data sent to you and respond with an error, for example that access is denied or that the characteristic does not exist. Everything works, but only if both devices are turned on and the applications are active. And we need to work in the background!
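On the receiving side, the handler could be sketched as follows. The `Recipient` class, the `onReceipt` callback and the choice of error code are assumptions for illustration.

```swift
import CoreBluetooth

final class Recipient: NSObject, CBPeripheralManagerDelegate {
    // Hypothetical UUID of the writable characteristic.
    private let receiptUUID = CBUUID(string: "7A1D0003-6B2E-4C5F-9A3B-1F2E3D4C5B6A")

    /// Called with the payload of every accepted write.
    var onReceipt: ((Data) -> Void)?

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {}

    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didReceiveWrite requests: [CBATTRequest]) {
        guard let first = requests.first else { return }

        // The batch is treated as one request: either all writes are valid or none.
        let valid = requests.allSatisfy {
            $0.characteristic.uuid == receiptUUID && $0.value != nil
        }
        guard valid else {
            peripheral.respond(to: first, withResult: .requestNotSupported)
            return
        }

        for request in requests {
            if let value = request.value { onReceipt?(value) }
        }
        // A single response, sent for the first request, answers the whole batch.
        peripheral.respond(to: first, withResult: .success)
    }
}
```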

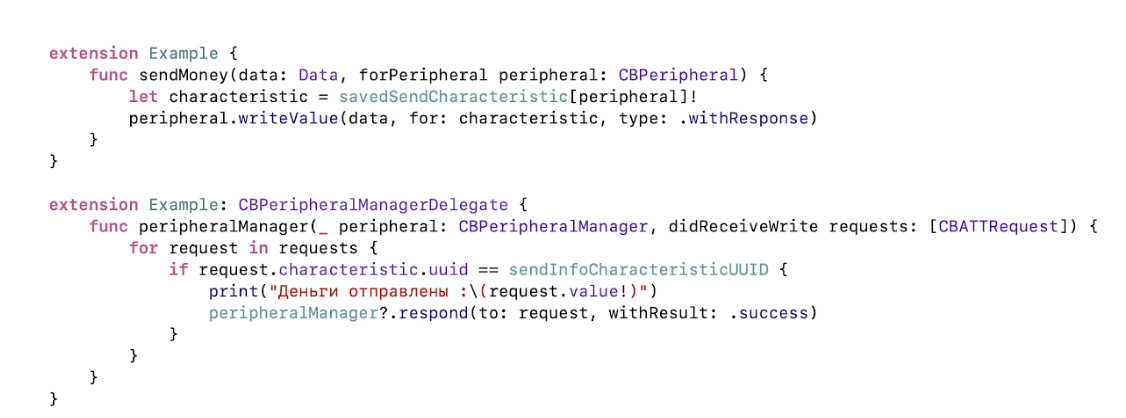

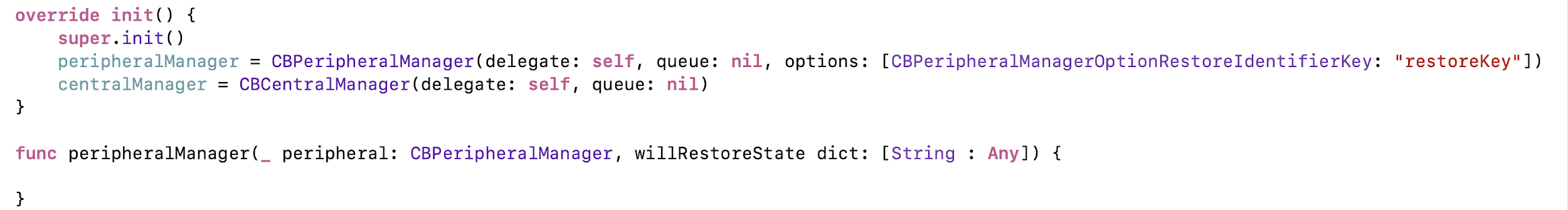

Apple allows you to use Bluetooth in the background. To do this, you need to declare in Info.plist which mode you want to use: Peripheral or Central.

Next, you need to pass a restoration key to the manager and implement a delegate method. Now background mode is available to us. If the application is suspended or unloaded from memory, then when the desired Peripheral is found or when a Central connects, the application wakes up and the manager is restored with your key.
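For the Central role this might be sketched as below. It assumes the Info.plist already lists `bluetooth-central` (and `bluetooth-peripheral` for the other role) under `UIBackgroundModes`; the class name and the restoration identifier string are hypothetical.

```swift
import CoreBluetooth

final class BackgroundSender: NSObject, CBCentralManagerDelegate {
    private var central: CBCentralManager!
    // Peripherals handed back by the system after a relaunch.
    private var restoredPeripherals: [CBPeripheral] = []

    override init() {
        super.init()
        central = CBCentralManager(
            delegate: self,
            queue: nil,
            // Hypothetical identifier; it has to stay the same between launches.
            options: [CBCentralManagerOptionRestoreIdentifierKey: "moneydrop.central"])
    }

    // Called before centralManagerDidUpdateState when the app is relaunched
    // in the background to handle a Bluetooth event.
    func centralManager(_ central: CBCentralManager, willRestoreState dict: [String: Any]) {
        if let peripherals = dict[CBCentralManagerRestoredStatePeripheralsKey] as? [CBPeripheral] {
            restoredPeripherals = peripherals
        }
    }

    func centralManagerDidUpdateState(_ central: CBCentralManager) {}
}
```

The Peripheral role follows the same pattern with `CBPeripheralManagerOptionRestoreIdentifierKey` and `peripheralManager(_:willRestoreState:)`.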

Everything is fine and ready for release. But then the designers come running and say: “We want to add user photos so that people can find each other more easily.” What to do? You can write only about 500 bytes into our characteristic, and on some devices just 20 :(

To solve this problem, we had to go deeper.

Until now we have been talking to devices at the GATT / ATT level. But starting with iOS 11 we have access to the L2CAP protocol. In this case, however, you have to take care of the data transfer yourself. Packets are sent with a 2 KB MTU, nothing needs to be re-encoded, and a regular NSStream is used. According to Apple, data rates reach 394 kbps.

Suppose you already pass your service's data from the Peripheral to the Central through ordinary characteristics, and now you need to open a channel. You open it on the Peripheral and get back a PSM, the number of the channel to connect to, which you then have to pass to the Central using those same characteristics. The number is dynamic: the system itself chooses which PSM to open at the moment. After that, the Central can connect to the Peripheral and exchange data in whatever format suits you. Let's look at how to do this.

First, open an encrypted channel on the Peripheral. You can also do it without encryption, which speeds up the transfer a little.

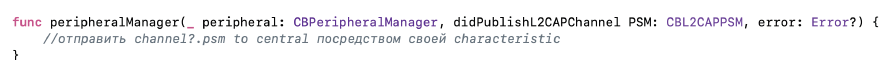

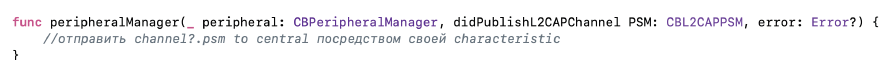

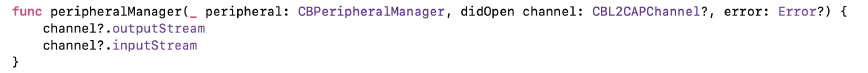

Next, in a delegate method, we receive the PSM and send it to the other device.
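Publishing the channel and receiving the PSM can be sketched like this. The `PhotoPublisher` class, the `onPSM` callback and the little-endian encoding of the PSM are assumptions; how the bytes then reach the Central (for example through a readable characteristic) is up to your protocol.

```swift
import CoreBluetooth

final class PhotoPublisher: NSObject, CBPeripheralManagerDelegate {
    private var manager: CBPeripheralManager!

    /// Called with the PSM encoded as two bytes, ready to be shared
    /// with the Central through a characteristic.
    var onPSM: ((Data) -> Void)?

    override init() {
        super.init()
        manager = CBPeripheralManager(delegate: self, queue: nil)
    }

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {
        guard peripheral.state == .poweredOn else { return }
        // Pass false to open the channel without encryption.
        peripheral.publishL2CAPChannel(withEncryption: true)
    }

    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didPublishL2CAPChannel PSM: CBL2CAPPSM,
                           error: Error?) {
        guard error == nil else { return }
        // CBL2CAPPSM is a UInt16; the byte order is our own convention.
        let bytes = withUnsafeBytes(of: PSM.littleEndian) { Data($0) }
        onPSM?(bytes)
    }
}
```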

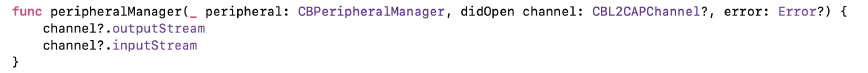

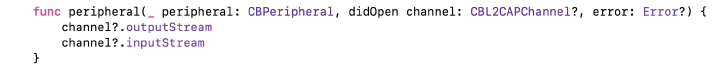

After the other device connects, a method is called in which we can get the NSStream objects we need for the transfer from the channel.
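A sketch of that method on the Peripheral side, assuming a hypothetical `PhotoChannelOwner` class that simply accumulates whatever arrives on the input stream:

```swift
import CoreBluetooth

final class PhotoChannelOwner: NSObject, CBPeripheralManagerDelegate, StreamDelegate {
    // Keep the channel alive for as long as its streams are in use.
    private var channel: CBL2CAPChannel?
    private(set) var received = Data()

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {}

    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didOpen channel: CBL2CAPChannel?,
                           error: Error?) {
        guard error == nil, let channel = channel else { return }
        self.channel = channel
        open(channel.inputStream)
        open(channel.outputStream)
    }

    private func open(_ stream: Stream) {
        stream.delegate = self
        stream.schedule(in: .main, forMode: .default)
        stream.open()
    }

    // Regular stream handling: read whenever bytes are available.
    func stream(_ aStream: Stream, handle eventCode: Stream.Event) {
        guard eventCode.contains(.hasBytesAvailable),
              let input = aStream as? InputStream else { return }
        var buffer = [UInt8](repeating: 0, count: 2048)
        let count = input.read(&buffer, maxLength: buffer.count)
        if count > 0 {
            received.append(contentsOf: buffer[0..<count])
        }
    }
}
```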

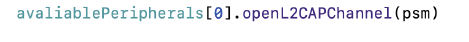

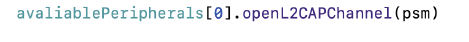

With the Central it's even easier: we just connect to the channel with the desired number ...

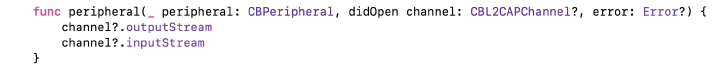

... and after that we get the streams we need. You can send absolutely any data of any size through them and build your own protocol on top of L2CAP. This is how we implemented the transfer of recipient photos.
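On the Central side this could look roughly as follows. The `PhotoReceiver` class is hypothetical, and the two-byte little-endian PSM encoding is an assumption of this sketch rather than something CoreBluetooth prescribes.

```swift
import CoreBluetooth

final class PhotoReceiver: NSObject, CBPeripheralDelegate {
    // Keep the channel alive for as long as its streams are in use.
    private var channel: CBL2CAPChannel?

    /// `psmData` is the two-byte value read from the recipient's characteristic.
    func connect(to peripheral: CBPeripheral, psmData: Data) {
        let bytes = [UInt8](psmData)
        guard bytes.count == 2 else { return }
        let psm = CBL2CAPPSM(bytes[0]) | (CBL2CAPPSM(bytes[1]) << 8)
        peripheral.delegate = self
        peripheral.openL2CAPChannel(psm)
    }

    func peripheral(_ peripheral: CBPeripheral,
                    didOpen channel: CBL2CAPChannel?,
                    error: Error?) {
        guard error == nil, let channel = channel else { return }
        self.channel = channel
        // From here on it is ordinary stream work: schedule, open, read, write.
        for stream in [channel.inputStream as Stream, channel.outputStream as Stream] {
            stream.schedule(in: .main, forMode: .default)
            stream.open()
        }
    }
}
```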

But there are pitfalls, of course.

Let's look at the pitfalls of working in the background. Since both the Peripheral and the Central roles are available to you, you might think that in the background you can determine which devices are nearby and which are active. In theory that should be the case, but Apple introduced a restriction: phones in the background, whether Central or Peripheral, are not visible to other phones that are also in the background. Phones in the background are also not visible from non-iOS devices. Let's look at why this happens.

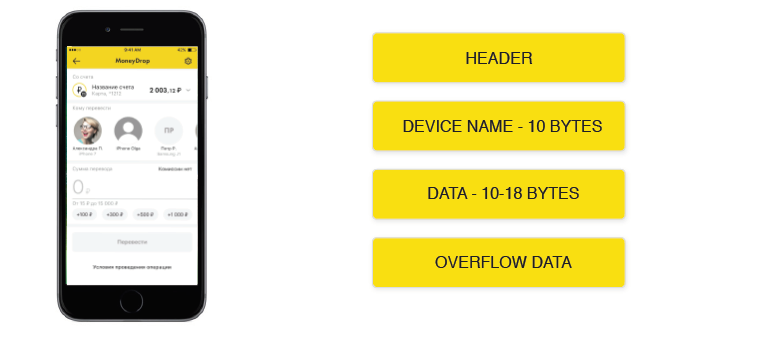

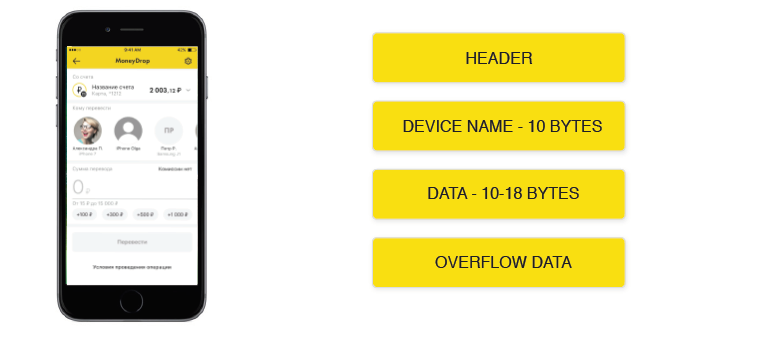

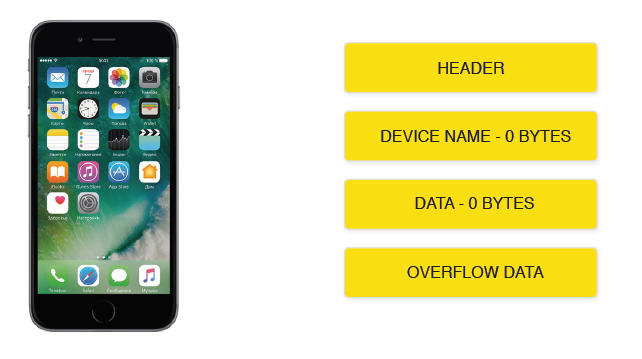

When your device is active, it sends a regular advertising packet, which may contain the device name and the list of services this device provides. The overflow data holds everything that did not fit.

When the application goes into the background, the device stops transmitting the name and moves the list of supported services into the overflow data. When an iOS device scans while its application is active, it reads this data; once the application switches to the background, it ignores it. Therefore, from the background you will not be able to see applications that are also in the background. Other Apple operating systems always ignore overflow data, so if you search for devices that support your service, you will get an empty array. But if you connect to every nearby device and request its supported services, the list may contain your service, and you can work with it.

Then we were getting ready to hand the build over for testing, fixing minor bugs and optimizing. And suddenly, at some point, we began to get this error in the console:

The worst thing was that no delegate method was called, so we could not even handle this error for the user. Just a message in the log, and then silence: everything froze. We had made no major changes, so we started rolling back commit by commit. We found that at some point we had optimized the code and changed the way data was written. The problem was that not all clients had been updated, which caused this error.

Happy that we had fixed everything, we rushed to hand it over for testing, and it came back almost immediately: “Your fancy photos do not work. They all arrive incomplete.” We tried it ourselves, and indeed: sometimes, on different devices, at different times, the photos arrived broken. We started looking for the reason.

And then we saw the previous error again. We immediately thought it was the version mismatch. But after completely removing the old version from all test devices, the error still reproduced. We were sad ...

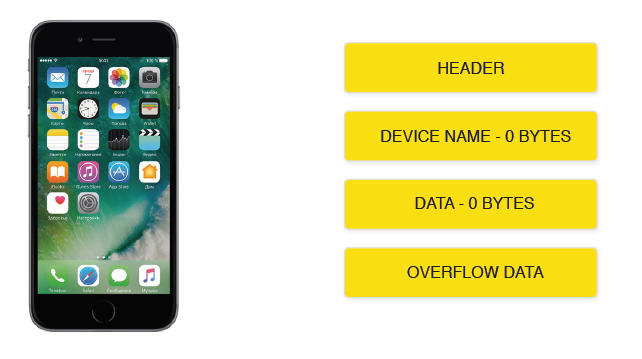

We started looking for a debugging tool. The first thing we came across was Apple's Bluetooth Explorer. It is a powerful program that can do a lot, but for debugging Bluetooth LE it has only one small tab for finding devices and reading characteristics. And we needed to analyze L2CAP.

Then we found LightBlue Explorer. It turned out to be a pretty decent program, though with a design straight from iOS 7. It can do the same things as Bluetooth Explorer, and it can also subscribe to characteristics. And it is more stable. All good, but again no L2CAP.

And then we remembered the well-known Wireshark sniffer.

It turned out to be familiar with Bluetooth LE: it can read L2CAP, but only under Windows. That is not so scary, though: surely we could find a Windows machine somewhere. The biggest drawback is that the program only works with one specific device. That is, you had to find that device somewhere in an official store. And as you can imagine, a large company is unlikely to approve buying some obscure device at a flea market. We even started browsing overseas online stores.

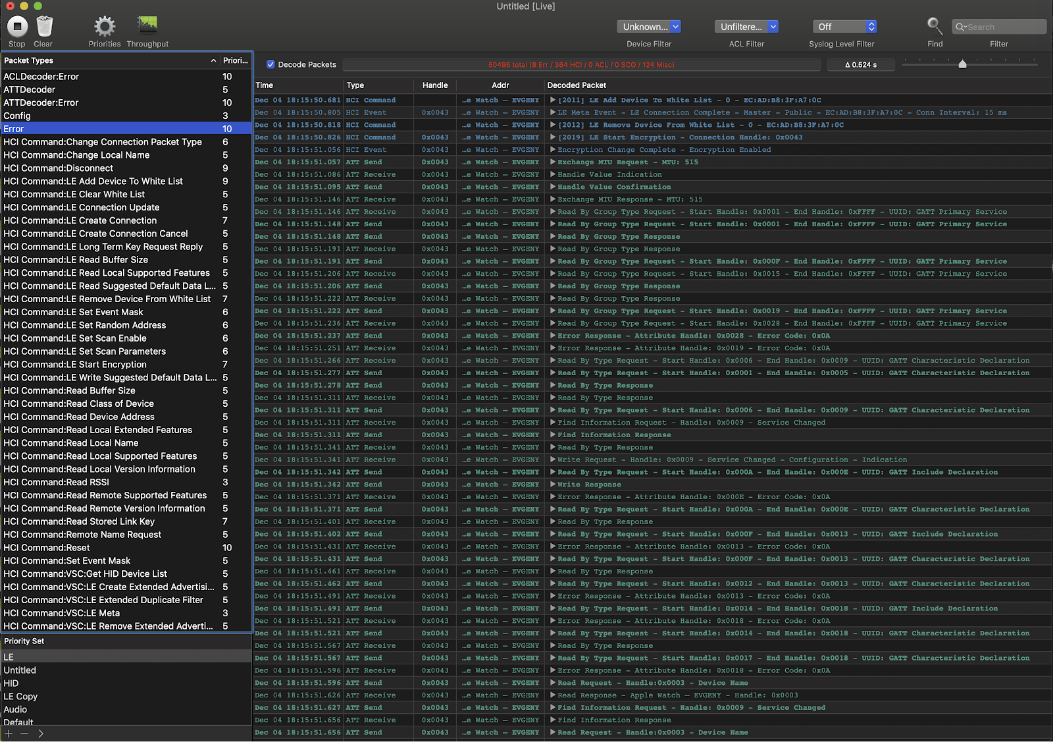

But then we found the PacketLogger program in Additional Tools for Xcode. It lets you watch the traffic on an OS X device. Why not port our MoneyDrop to OS X? We already had a separate library. We just replaced UIImage with NSImage, and everything was up and running in 10 minutes.

Finally, we could read the packets exchanged between devices. It immediately became clear that one of the characteristics was being written while data was being transmitted over L2CAP. Because the channel was fully occupied with the photo transfer, iOS ignored the write, and after being ignored the sender tore down the channel. After the fix, the problems with photo transfer were gone.

That's all, thanks for reading :)


Let's first understand what kind of technology it is and what is its difference from the classic Bluetooth.

What is Bluetooth LE?

Why did the Bluetooth developers name this technology namely Low Energy? After all, with each new version of Bluetooth, power consumption was already many times lower. The answer lies in this battery.

Its diameter is only 2 cm, and the capacity is about 220 mA * h. When engineers developed Bluetooth LE, they wanted the device with such a battery to work for several years. And they did it! Bluetooth LE devices with such a battery can work for a year. How many of you still turn off the Bluetooth on your phone in the old fashioned way to save energy, as you did in 2000? In vain you do this - the savings will be less than 10 seconds of the phone per day. And you disable very large functionality, such as Handoff, AirDrop and others.

What did the engineers achieve by developing Bluetooth LE? Have they refined the classic protocol? Made it more energy efficient? Just optimized all the processes? Not. They completely redesigned the architecture of the Bluetooth stack and achieved the fact that now, to be visible to all other devices, you need less time to be on the air and occupy the channel. In turn, this allowed a good saving on energy consumption. And with the new architecture, any new device can now be standardized, thanks to which developers from all over the world can communicate with the device and, therefore, easily write new applications to manage it. In addition, the principle of self-discovery is laid in the architecture: when connecting to a device, you do not need to enter any PIN codes, and if your application can communicate with this device,

- Less time on air.

- Less power consumption.

- New architecture.

- Reduced connection time.

How did engineers manage to make such a huge leap in energy efficiency?

The frequency remained the same: 2.4 GHz, not certified and free for use in many countries. But the connection delay has become less: 15-30 ms instead of 100 ms with classic Bluetooth. The working distance remained the same - 100 m. The transmission interval was not strong, but changed - instead of 0.625 ms, it became 3 ms.

But because of this, energy consumption could not be reduced tenfold. Of course, something had to suffer. And this is the speed: instead of 24 Mbps, it became 0.27 Mbps. You will probably say that this is ridiculous speed for 2018.

Where is Bluetooth LE used?

This technology is not young, it first appeared in the iPhone 4s. And already managed to conquer many areas. Bluetooth LE is used in all smart home devices and wearable electronics. Now there are even chips the size of coffee beans.

And how is this technology applied in software?

Since Apple was the first to integrate Bluetooth into their device and start using it, by now they have made good progress and integrated technology into their ecosystem. And now you can meet this technology in services such as AirDrop, Devices quick start, Share passwords, Handoff. And even the notifications in the watch are made via Bluetooth LE. In addition, Apple has made publicly available documentation on how to make sure that notifications from all applications come to your own devices. What are the roles of devices within Bluetooth LE?

Brodcaster. Sends messages to everyone who is nearby, you cannot connect to this device. By this principle, iBeacons and indoor navigation work.

Observer.Listens to what is happening around, and receives data only from public messages. Does not create connections.

But with Central and Peripheral more interesting. Why they were not called simply Server-Client? Logically, judging by the name. But no.

Because Peripheral actually acts as a server. This is a peripheral device that consumes less power and connects to the more powerful Central. Peripheral can inform you that it is nearby and what services it has. Only one device can connect to it, and Peripheral has some data. And Central can scan the air in search of devices, send connection requests, connect to any number of devices, can read, write and subscribe to data from Peripheral.

What do we as developers have access to in the Apple ecosystem?

What is available to us?

iOS / Mac OS:

- Peripheral and Central.

- Background mode.

- Recovery of state.

- Connection interval 15 ms.

watchOS / tvOS:

- watchOS 4+ / tvOS 9+.

- Central only.

- Maximum two connections.

- Apple watch series 2+ / AppleTv 4+.

- Shutdown when entering the background.

- Connection interval 30 ms.

The most important difference is the connection interval. What does it affect? To answer this question, you first need to understand how the Bluetooth LE protocol works and why such a small difference in absolute values is very important.

How the protocol works

How is the search and connection process?

Peripheral announces its presence at the frequency of the advertisement interval, its package is very small and contains only a few service identifiers provided by the device, as well as the name of the device. The interval can be quite large and can vary depending on the current status of the device, power saving mode and other settings. Apple advises developers of external devices to tie the length of the interval to the accelerometer: increase the interval if the device is not used, and when it is active, decrease to quickly find the device. Advertisement-interval does not correlate with the connection interval and is determined by the device itself, depending on power consumption and its settings. It is inaccessible and unknown to us in the Apple ecosystem; it is completely controlled by the system.

After we find the device, we send a connection request, and here the connection interval enters the scene - the time after which the second device can respond to the request. But this is when connecting, but what happens when reading / writing?

The connection interval also appears when reading data - reducing it by 2 times increases the data transfer rate. But you need to understand that if both devices do not support the same interval, then the maximum of them will be selected.

Let's look at what a package of information that Peripheral passes consists of.

MTU (maximum transmission unit) of such a package is determined during the connection process and varies from device to device and depending on the operating system. In protocol version 4.0, the MTU was about 30, and the size of the payload did not exceed 20 bytes. In version 4.2, everything has changed, now you can transfer about 520 bytes. But, unfortunately, only devices younger than the iPhone 5s support this version of the protocol. The size of the overhead, regardless of the size of the MTU, is 7 bytes: this includes ATT and L2CAP headers. With the record, in general, a similar situation.

There are only two modes: with answer and without. Unanswered mode significantly speeds up data transfer, since there is no waiting interval before the next recording. But this mode is not always available, not on all devices and not on all systems. Access to this recording mode may be limited by the system itself, because it is considered less energy-efficient. In iOS, there is a method in which you can check before recording whether this mode is available.

Now let's look at what the protocol consists of.

The protocol consists of 5 levels. The application layer is your logic, described on top of CoreBluetooth. GATT (Generic Attributes Layer) is used to exchange services and characteristics that are on the devices. ATT (Attributes Layer)used to manage your characteristics and transfer your data. L2CAP is a low-level data exchange protocol. Controller is the BT chip itself.

You probably ask what GATT is and how we can work with it?

GATT consists of features and services. A characteristic is an object in which your data is stored, like a variable. And a service is a group in which your characteristics are located, like a namespace. The service has a name - UUID, you choose it yourself. A service may contain a subsidiary service.

The characteristic also has its own UUID - in fact, a name. The value of the characteristic is NSData, here you can record and store data.Descriptors are a description of your characteristic, you can describe what data you expect in this characteristic, or what they mean. There are many descriptors in the Bluetooth protocol, but so far only two are available on Apple systems: the human description and the data format. Also has access levels (Permissions) to your specifications:

Let's try it yourself

We had an idea to make it possible to transfer money by air without requiring anything from the recipient. Imagine, you are puzzling over a very interesting task, writing the perfect code, and here a colleague suggests going for coffee. And you are so passionate about the task that you can’t go away, and ask him to buy you a cup of delicious cappuccino. He brings you coffee, and you need to return the money to him. You can translate by phone number, it works fine. But here's an awkward situation - you don't know his number. Well, like this,

I’ve been working for three years, but haven’t exchanged numbers :) Therefore, we decided to make it possible to transfer money to those who are nearby, without entering any user data. Like in AirDrop. Just select a user and send the amount he needs. Let's see what we need for this.

PUSH mapping

We need the sender:

- I could find all the devices that are nearby and support our service.

- I could read the details.

- And he could send a message to the recipient that he had successfully sent him the money.

The recipient, in turn, must be able to inform the surrounding senders that he has a service with the necessary data, and be able to receive messages from the sender. I think it’s not worth describing how the process of transferring money by details at our bank takes place. Now let's try to implement this.

First you need to come up with the names of our service and characteristics. As I said, this is the UUID. We simply generate them and save them on Peripheral and Central so that they are the same on both devices.

You are free to use any UUIDs, except those ending like this: XXXXXXXX- 0000-1000-8000-00805F9B34FB - they are reserved for different companies. You yourself can buy such a number and no one will use it. It will cost $ 2500.

Next, we will need to create managers: one to transfer funds, the other to receive. You just need to specify delegates. We will transmit Central, receive Peripheral. We create both, because both the sender and the receiver can be one person at different times.

Now we need to make it possible to detect the recipient and write down the details of the recipient in our characteristic.

First, we create a service. We give it a UUID and mark it as primary, that is, as the main service of this device. A good example is a heart rate monitor: the current heart rate is its primary service, while battery status is secondary information.

Next, we create two characteristics: one for reading the recipient's details, and a second one for writing, so that the recipient learns that money has been sent. We register them in our service, add the service to the manager, and start advertising with the service UUID, so that all nearby devices can learn about our service before connecting to it. This data goes into the packet that Peripheral broadcasts while advertising.
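The recipient side described above can be sketched as follows. The UUID values, the class name RecipientAdvertiser and the shape of the requisites payload are assumptions for illustration.

```swift
import CoreBluetooth

final class RecipientAdvertiser: NSObject, CBPeripheralManagerDelegate {
    // Hypothetical UUIDs; they must match the ones compiled into the sender.
    static let serviceUUID = CBUUID(string: "6E0A0001-7C4B-4F0E-9A3D-2B1C5D8E9F10")
    static let requisitesUUID = CBUUID(string: "6E0A0002-7C4B-4F0E-9A3D-2B1C5D8E9F10")
    static let notificationUUID = CBUUID(string: "6E0A0003-7C4B-4F0E-9A3D-2B1C5D8E9F10")

    private var manager: CBPeripheralManager!
    private let requisites: Data

    init(requisites: Data) {
        self.requisites = requisites
        super.init()
        manager = CBPeripheralManager(delegate: self, queue: nil)
    }

    /// Builds the primary service with one readable and one writable characteristic.
    static func makeService(requisites: Data) -> CBMutableService {
        // A non-nil value is cached by the system, so this characteristic must be read-only.
        let readable = CBMutableCharacteristic(type: requisitesUUID, properties: [.read],
                                               value: requisites, permissions: [.readable])
        // The value is nil because the sender supplies the data in a write request.
        let writable = CBMutableCharacteristic(type: notificationUUID, properties: [.write],
                                               value: nil, permissions: [.writeable])
        let service = CBMutableService(type: serviceUUID, primary: true)
        service.characteristics = [readable, writable]
        return service
    }

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {
        // Services can only be added once Bluetooth is powered on.
        guard peripheral.state == .poweredOn else { return }
        peripheral.add(Self.makeService(requisites: requisites))
        peripheral.startAdvertising(
            [CBAdvertisementDataServiceUUIDsKey: [Self.serviceUUID]])
    }
}
```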

The recipient is ready, so let's move on to the sender: start scanning and connect.

Once the manager is powered on, we start scanning for devices with our service. When we find them, we receive them in the delegate method and connect immediately. Important: you must keep a strong reference to every Peripheral you work with, otherwise it will be deallocated and the connection lost.

After a successful connection, we set the delegate that will work with this device and request the service we need from the device.
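A sketch of the scan-and-connect flow just described. The class name ScanningSender and the UUID value are assumptions; the dictionary exists only to hold the strong references mentioned above.

```swift
import CoreBluetooth

final class ScanningSender: NSObject, CBCentralManagerDelegate, CBPeripheralDelegate {
    // Hypothetical UUID; it must match the one advertised by the recipient.
    static let serviceUUID = CBUUID(string: "6E0A0001-7C4B-4F0E-9A3D-2B1C5D8E9F10")

    private var manager: CBCentralManager!
    // Strong references: Core Bluetooth does not retain discovered peripherals for us.
    private(set) var peripherals: [UUID: CBPeripheral] = [:]

    override init() {
        super.init()
        manager = CBCentralManager(delegate: self, queue: nil)
    }

    func centralManagerDidUpdateState(_ central: CBCentralManager) {
        // Scanning is only allowed once Bluetooth is powered on.
        guard central.state == .poweredOn else { return }
        central.scanForPeripherals(withServices: [Self.serviceUUID], options: nil)
    }

    func centralManager(_ central: CBCentralManager, didDiscover peripheral: CBPeripheral,
                        advertisementData: [String: Any], rssi RSSI: NSNumber) {
        peripherals[peripheral.identifier] = peripheral  // keep it alive
        central.connect(peripheral, options: nil)
    }

    func centralManager(_ central: CBCentralManager, didConnect peripheral: CBPeripheral) {
        peripheral.delegate = self
        // Ask only for our service; the answer arrives in a CBPeripheralDelegate callback.
        peripheral.discoverServices([Self.serviceUUID])
    }

    func centralManager(_ central: CBCentralManager, didDisconnectPeripheral peripheral: CBPeripheral,
                        error: Error?) {
        peripherals[peripheral.identifier] = nil  // release it when we are done
    }
}
```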

We have successfully connected to the recipient; now we need to read its details.

After connecting, we requested the services from the device. Once they arrive, a delegate method is called with the services available on the device. We find the right one and request its characteristics. The result arrives in another delegate method, where we find by UUID the characteristic that stores the transfer details. We try to read it, and the value again arrives in a delegate method. The system caches all services, characteristics and their values, so there is no need to request them again each time.
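The chain of delegate calls described above can be sketched like this. The class name RequisitesReader, the onRequisites callback and the UUID values are assumptions; the object is expected to be set as the delegate of the connected peripheral.

```swift
import CoreBluetooth

final class RequisitesReader: NSObject, CBPeripheralDelegate {
    // Hypothetical UUIDs; they must match the recipient's service definition.
    static let serviceUUID = CBUUID(string: "6E0A0001-7C4B-4F0E-9A3D-2B1C5D8E9F10")
    static let requisitesUUID = CBUUID(string: "6E0A0002-7C4B-4F0E-9A3D-2B1C5D8E9F10")

    /// Called with the raw details once they have been read.
    var onRequisites: ((Data) -> Void)?

    // Step 1: services arrived; find ours and ask for its characteristics.
    func peripheral(_ peripheral: CBPeripheral, didDiscoverServices error: Error?) {
        guard error == nil,
              let service = peripheral.services?.first(where: { $0.uuid == Self.serviceUUID })
        else { return }
        peripheral.discoverCharacteristics([Self.requisitesUUID], for: service)
    }

    // Step 2: characteristics arrived; find the one with the details and read it.
    func peripheral(_ peripheral: CBPeripheral,
                    didDiscoverCharacteristicsFor service: CBService, error: Error?) {
        guard error == nil,
              let characteristic = service.characteristics?
                  .first(where: { $0.uuid == Self.requisitesUUID })
        else { return }
        peripheral.readValue(for: characteristic)
    }

    // Step 3: the value arrived.
    func peripheral(_ peripheral: CBPeripheral,
                    didUpdateValueFor characteristic: CBCharacteristic, error: Error?) {
        guard error == nil,
              characteristic.uuid == Self.requisitesUUID,
              let data = characteristic.value
        else { return }
        onRequisites?(data)
    }
}
```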

That's all: we have sent the money for coffee, and it is time to show the recipient a nice notification so that he can expect rubles in his account. To do this, we need to implement sending a message.

On the sender, we take the characteristic we need; in this case we took it from a stored value. Before that, of course, you need to obtain it from the device, as we did earlier. Then we simply write the data to that characteristic.
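A sketch of the write on the sender side. It assumes the characteristic was discovered earlier and stored, that it was declared with the .write property (so the write is acknowledged), and that this object is already the peripheral's delegate. PaymentNotifier and onDelivered are hypothetical names, and the message format is left to you.

```swift
import CoreBluetooth

final class PaymentNotifier: NSObject, CBPeripheralDelegate {
    /// Called with true when the recipient acknowledged the write.
    var onDelivered: ((Bool) -> Void)?

    /// Writes the message to a previously discovered characteristic.
    func notify(_ peripheral: CBPeripheral, through characteristic: CBCharacteristic,
                message: String) {
        // .withResponse asks the recipient to confirm or reject the write.
        peripheral.writeValue(Data(message.utf8), for: characteristic, type: .withResponse)
    }

    // The confirmation (or the error) for a .withResponse write arrives here.
    func peripheral(_ peripheral: CBPeripheral,
                    didWriteValueFor characteristic: CBCharacteristic, error: Error?) {
        onDelivered?(error == nil)
    }
}
```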

After that, on the other device, we receive a write request in the delegate method. Here you can read the data sent to you and respond with an error, for example that access is denied or that the characteristic does not exist. Everything will work, but only if both devices are on and both applications are active. And we need to work in the background!
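Before moving on to background mode, here is a sketch of the write-request handler on the recipient side. WriteRequestHandler, onMessage and the UUID value are assumptions. The batch of requests is answered exactly once, using the first request.

```swift
import CoreBluetooth

final class WriteRequestHandler: NSObject, CBPeripheralManagerDelegate {
    // Hypothetical UUID of the writable characteristic.
    static let notificationUUID = CBUUID(string: "6E0A0003-7C4B-4F0E-9A3D-2B1C5D8E9F10")

    /// Called with the payload of every accepted write.
    var onMessage: ((Data) -> Void)?

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {}

    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didReceiveWrite requests: [CBATTRequest]) {
        guard let first = requests.first else { return }

        // If any request targets an unknown characteristic, reject the whole batch.
        let allKnown = requests.allSatisfy { $0.characteristic.uuid == Self.notificationUUID }
        guard allKnown else {
            peripheral.respond(to: first, withResult: .attributeNotFound)
            return
        }

        for request in requests {
            if let data = request.value { onMessage?(data) }
        }
        // A single response, sent for the first request, answers the batch.
        peripheral.respond(to: first, withResult: .success)
    }
}
```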

Apple allows you to use Bluetooth in the background. To do this, you declare in Info.plist which mode you want to use, Peripheral or Central (the bluetooth-peripheral and bluetooth-central values of the UIBackgroundModes key).

Next, you specify a restoration identifier when creating the manager and implement the restoration delegate method. Now background mode is available to us. If the application is suspended or unloaded from memory, it is woken up when the desired Peripheral is found or when a Central connects, and the manager is restored with your identifier.
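For the Central role, restoration might be wired up roughly as follows. The identifier string and the class name RestorableSender are assumptions, and the sketch presumes the Central background mode is already declared in Info.plist; without it, creating a manager with a restoration identifier is not allowed on iOS.

```swift
import CoreBluetooth

final class RestorableSender: NSObject, CBCentralManagerDelegate {
    // Hypothetical identifier; any string that stays stable between launches will do.
    static let restoreIdentifier = "com.example.moneydrop.central"

    private var manager: CBCentralManager!
    // Peripherals handed back by the system must be retained again.
    private(set) var restoredPeripherals: [CBPeripheral] = []

    func start() {
        manager = CBCentralManager(
            delegate: self, queue: nil,
            options: [CBCentralManagerOptionRestoreIdentifierKey: Self.restoreIdentifier])
    }

    // Called before centralManagerDidUpdateState when the app is relaunched by the system.
    func centralManager(_ central: CBCentralManager, willRestoreState dict: [String: Any]) {
        let peripherals = dict[CBCentralManagerRestoredStatePeripheralsKey] as? [CBPeripheral]
        restoredPeripherals = peripherals ?? []
    }

    func centralManagerDidUpdateState(_ central: CBCentralManager) {
        // Resume scanning or reconnecting here once the state is .poweredOn.
    }
}
```

The Peripheral role has a matching option, CBPeripheralManagerOptionRestoreIdentifierKey, and its own willRestoreState delegate method.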

Everything is fine and ready for release. But then the designers come running and say: “We want to show users' photos so that people can find each other more easily.” What to do? Our characteristic can hold only about 500 bytes, and on some devices as few as 20 :(

Go deeper

To solve this problem, we had to go deeper.

So far we have talked to devices at the GATT / ATT level. But starting with iOS 11, we have access to the L2CAP protocol. In this case, however, you have to take care of the data transfer yourself. Packets are sent with a 2 KB MTU, nothing needs to be re-encoded, and an ordinary NSStream is used. The data rate is up to 394 kbps, according to Apple.

Suppose you transfer some of your service's data from Peripheral to Central as ordinary characteristics, and now you need to open a channel. You open it on Peripheral and get a PSM in return: this is the number of the channel to connect to, and you have to pass it to Central through those same characteristics. The number is dynamic; the system itself chooses which PSM to open at the moment. After that, Central can connect to Peripheral and exchange data in whatever format suits you. Let's look at how to do this.

First, open an encrypted channel on Peripheral. You can also do it without encryption, which speeds up the transfer a little.

Next, in the delegate method, we get the PSM and send it to the other device.

After the other device connects, a method is called in which we can get the NSStream we need for the transfer from the channel.
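The three Peripheral-side steps above can be sketched as follows, assuming a deployment target of iOS 11 or later. PhotoChannelPublisher and encodePSM are hypothetical names, and the two-byte little-endian encoding of the PSM is just one possible convention for passing it through a characteristic.

```swift
import CoreBluetooth

/// Packs the PSM into two little-endian bytes (an assumed wire format).
func encodePSM(_ psm: CBL2CAPPSM) -> Data {
    return Data([UInt8(psm & 0xFF), UInt8(psm >> 8)])
}

final class PhotoChannelPublisher: NSObject, CBPeripheralManagerDelegate {
    private var manager: CBPeripheralManager!
    /// Payload to expose through a characteristic so that Central learns the PSM.
    private(set) var psmPayload: Data?
    // Keep the channel referenced for as long as its streams are in use.
    private var channel: CBL2CAPChannel?

    func start() {
        manager = CBPeripheralManager(delegate: self, queue: nil)
    }

    func peripheralManagerDidUpdateState(_ peripheral: CBPeripheralManager) {
        guard peripheral.state == .poweredOn else { return }
        // Pass false to open the channel without encryption.
        peripheral.publishL2CAPChannel(withEncryption: true)
    }

    // The system picked a PSM for us.
    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didPublishL2CAPChannel PSM: CBL2CAPPSM, error: Error?) {
        guard error == nil else { return }
        psmPayload = encodePSM(PSM)
    }

    // Central connected to the published channel.
    func peripheralManager(_ peripheral: CBPeripheralManager,
                           didOpen channel: CBL2CAPChannel?, error: Error?) {
        guard error == nil, let channel = channel else { return }
        self.channel = channel
        for stream in [channel.inputStream as Stream, channel.outputStream as Stream] {
            stream.schedule(in: .main, forMode: .default)
            stream.open()
        }
    }
}
```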

With Central, it’s even easier, we just connect to the channel with the desired number ...

... and after that we get the streams we need. You can send absolutely any data of any size through them and build your own protocol on top of L2CAP. This is how we implemented the transfer of recipients' photos.
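The Central side might look roughly like this, again assuming iOS 11 or later. PhotoChannelReceiver and decodePSM are hypothetical names; the PSM is assumed to arrive as two little-endian bytes read from a characteristic, and the received bytes are simply accumulated without any framing.

```swift
import CoreBluetooth

/// Unpacks a PSM from two little-endian bytes (an assumed wire format).
func decodePSM(_ data: Data) -> CBL2CAPPSM? {
    guard data.count == 2 else { return nil }
    let bytes = [UInt8](data)
    return CBL2CAPPSM(bytes[0]) | (CBL2CAPPSM(bytes[1]) << 8)
}

final class PhotoChannelReceiver: NSObject, CBPeripheralDelegate, StreamDelegate {
    // Keep the channel referenced for as long as its streams are in use.
    private var channel: CBL2CAPChannel?
    private(set) var received = Data()

    /// Connects to the channel whose number was read from a characteristic.
    func open(on peripheral: CBPeripheral, psmData: Data) {
        guard let psm = decodePSM(psmData) else { return }
        peripheral.delegate = self
        peripheral.openL2CAPChannel(psm)
    }

    func peripheral(_ peripheral: CBPeripheral, didOpen channel: CBL2CAPChannel?, error: Error?) {
        guard error == nil, let channel = channel else { return }
        self.channel = channel
        channel.inputStream.delegate = self
        channel.inputStream.schedule(in: .main, forMode: .default)
        channel.inputStream.open()
    }

    // Drains whatever the stream currently has into the buffer.
    func stream(_ aStream: Stream, handle eventCode: Stream.Event) {
        guard eventCode.contains(.hasBytesAvailable),
              let input = aStream as? InputStream else { return }
        var buffer = [UInt8](repeating: 0, count: 2048)
        while input.hasBytesAvailable {
            let count = input.read(&buffer, maxLength: buffer.count)
            guard count > 0 else { break }
            received.append(buffer, count: count)
        }
    }
}
```

A real photo transfer would also need its own framing on top, for example a length prefix, so that the receiver knows when one image ends.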

But there are pitfalls; how could there not be.

Pitfalls

Let's look at the pitfalls of working in the background. Since both the Peripheral and Central roles are available to you, you might think that in the background you can determine which nearby devices are in the background and which are active. In theory that should be the case, but Apple introduced a restriction: phones in the background, whether Central or Peripheral, are not visible to other phones that are also in the background. Phones in the background are also not visible to non-iOS devices. Let's look at why this happens.

When your application is active, the device sends a regular advertising packet, which may contain the device name and the list of services the device provides. Overflow data is everything that did not fit.

When the application goes into the background, the device stops transmitting the name and moves the list of supported services to overflow data. An iOS device that scans while its application is active reads this data, but once the scanning application is in the background, it ignores it. That is why, from the background, you cannot see applications that are also in the background. Non-Apple operating systems always ignore overflow data, so if you search for devices that support your service, you will get an empty list. But if you connect to each nearby device and request its services, the list may contain your service, and you can work with it.

Then we were getting ready to hand the feature over for testing, fixing minor bugs and optimizing. And suddenly, at some point, we began to see this error in the console:

CoreBluetooth[WARNING] Unknown error: 124

The worst thing was that no delegate method was called, so we could not even handle the error for the user. Just a message in the log and then silence; everything froze. No major changes had been made, so we started rolling back commit by commit. We found that at some point we had optimized the code and changed the way data was written. The problem was that not all clients had been updated, which is why the error occurred.
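One way to guard against this kind of mismatch is to derive the write type from what the characteristic actually declares instead of hard-coding it. This is a sketch under the assumption that the cause was a write type that did not match the characteristic's properties; the function name writeType(for:) is made up.

```swift
import CoreBluetooth

/// Picks a write type that the characteristic actually supports, or nil if it is not writable.
func writeType(for properties: CBCharacteristicProperties) -> CBCharacteristicWriteType? {
    if properties.contains(.write) { return .withResponse }
    if properties.contains(.writeWithoutResponse) { return .withoutResponse }
    return nil
}
```

On the sender, the result would be passed as the type argument of writeValue(_:for:type:), with characteristic.properties as the input.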

.write != .writeWithoutResponse

Happy that we had fixed everything, we rushed to hand it over to testing, and it came back almost immediately: “Your fancy photos do not work. They all arrive only partially loaded.” We tried it ourselves, and indeed: sometimes, on different devices and at different times, broken photos arrived. We began to look for the reason.

And then we saw the previous error again. We immediately assumed it was down to mismatched versions. But after completely removing the old version from all test devices, the error still reproduced. We were sad ...

CoreBluetooth[WARNING] Unknown error: 722

CoreBluetooth[WARNING] Unknown error: 249

CoreBluetooth[WARNING] Unknown error: 312

We started looking for a debugging tool. The first thing we came across was Apple's Bluetooth Explorer. It is a powerful program that can do a lot, but for debugging Bluetooth LE it has only one small tab for finding devices and reading characteristics. And we needed to analyze L2CAP.

Then we found LightBlue Explorer. It turned out to be a pretty decent program, though with a design from the iOS 7 era. It can do the same things as Bluetooth Explorer and can also subscribe to characteristics. And it is more stable. All good, but again no L2CAP.

And then we remembered the well-known Wireshark sniffer.

It turned out to be familiar with Bluetooth LE: it can read L2CAP, but only under Windows. That alone is not a problem; surely we could find a Windows machine. The biggest drawback is that the program works only with one specific device. That is, we would have to find that device somewhere in an official store. And you understand that a large company is unlikely to approve buying some obscure device at a flea market. We even started browsing overseas online stores.

But then we found the PacketLogger program in Additional Tools for Xcode. It lets you watch the traffic passing through an OS X device. So why not port our MoneyDrop to OS X? We already had it as a separate library. We just replaced UIImage with NSImage, and everything was up and running in 10 minutes.

Finally, we could read the packets the devices exchanged. It immediately became clear that during the L2CAP transfer, one of the characteristics was being written. Because the channel was fully occupied by the photo transfer, iOS ignored the write, and the sender, having been ignored, dropped the channel. After the fix, there were no more problems with photo transfer.

That's all, thanks for reading :)

Useful links

WWDC / CoreBluetooth:

- https://developer.apple.com/videos/play/wwdc2017/712/

- WWDC 2012 Session 703 Core Bluetooth 101

- WWDC 2012 Session 705 Advanced Core Bluetooth

Bluetooth

- https://www.bluetooth.com/specifications/gatt

- Getting Started with Bluetooth Low Energy O'Reilly

YouTube

- Arrow Electronics → Bluetooth Low Energy Series