Full Self-Driving Computer Development

- Transfer

Translation of the first part of the Tesla Autonomy Investor Day presentation on the development of Full Self-Driving Computer for Tesla autopilot. We fill in the gap between the thesis reviews for the presentation and its content.

The text of the presentation is translated close to the original. Questions to the speaker - selectively with abbreviations.

Host: Hello everyone. Sorry for being late. Welcome to our first day of autonomous driving. I hope we can do this more regularly to keep you updated on our developments.

About three months ago, we were preparing for the fourth quarter earnings report with Ilon and other executives. I then said that the biggest gap in conversations with investors, between what I see inside the company and what its external perception is, is our progress in autonomous driving. And this is understandable, for the last couple of years we talked about increasing the production of Model 3, around which there was a lot of debate. In fact, a lot has happened in the background.

We worked on a new chip for autopilot, completely redesigned the machine vision neural network, and finally began to release the Full Self-Driving Computer (FSDC). We thought it was a good idea to just open the veil, invite everyone and tell about everything that we have done over the past two years.

About three years ago we wanted to use, we wanted to find the best chip for autonomous driving. We found that there is no chip that was designed from the ground up for neural networks. Therefore, we invited my colleague Pete Bannon, vice president of integrated circuit design, to develop such a chip for us. He has about 35 years of experience in chip development. Including 12 years at PASemi, which was later acquired by Apple. He worked on dozens of different architectures and implementations, and was the chief designer of the Apple iPhone 5, shortly before joining Tesla. Also joins us on Elon Musk. Thanks.

Ilon:Actually, I was going to introduce Pete, but since they have already done this, I will add that he is simply the best systems and integrated circuit architect in the world that I know. It is an honor that you and your team at Tesla. Please just tell us about the incredible work that you have done.

Pete:Thanks Ilon. I am pleased to be here this morning and it is really nice to tell you about all the work that my colleagues and I have done here in Tesla over the past three years. I will tell you a little about how it all began, and then I will introduce you to the FSDC computer and tell you a little how it works. We will delve into the chip itself and consider some of the details. I will describe how the specialized neural network accelerator that we designed works and then shows some results, and I hope that by that time you will not fall asleep yet.

I was hired in February 2016. I asked Ilon if he was ready to spend as much as needed to create this specialized system, he asked: “Will we win?”, I answered: “Well, yes, of course,” then he said “I’m in business” and it all started . We hired a bunch of people and started thinking about what a chip designed specifically for fully autonomous driving would look like. We spent eighteen months developing the first version, and in August 2017 released it for production. We got the chip in December, it worked, and actually worked very well on the first try. In April 2018, we made several changes and released version B zero Rev. In July 2018, the chip was certified, and we started full-scale production. In December 2018, the autonomous driving stack was launched on new equipment, and we were able to proceed with the conversion of company cars and testing in the real world. In March 2019 we started installing a new computer in models S and X, and in April - in Model 3.

So, the whole program, from hiring the first employees to the full launch in all three models of our cars, took a little more than three years. This is perhaps the fastest system development program I have ever participated in. And it really speaks of the benefits of high vertical integration, allowing you to do parallel design and accelerate deployment.

In terms of goals, we were completely focused solely on the requirements of Tesla, and this greatly simplifies life. If you have one single customer, you do not need to worry about others. One of the goals was to keep power below 100 watts so that we could convert existing machines. We also wanted to lower costs to provide redundancy for greater security.

At the time we poked our fingers at the sky, I argued that driving a car would require a neural network performance of at least 50 trillion operations per second. Therefore, we wanted to get at least as much, and better, more. Batch sizes determine the number of items you work with at the same time. For example, Google TPUs have a packet size of 256, and you need to wait until you have 256 items to process before you can get started. We did not want to wait and developed our engine with a package size of one. As soon as the image appears, we immediately process it to minimize delay and increase security.

We needed a graphics processor to do some post-processing. At first, it occupied quite a lot, but we assumed that over time it would become smaller, since neural networks are getting better and better. And it really happened. We took risks by putting a rather modest graphics processor in the design, and that turned out to be a good idea.

Security is very important, if you do not have a protected car, you cannot have a safe car. Therefore, much attention is paid to security and, of course, security.

In terms of chip architecture, as Ilon mentioned earlier, in 2016 there was no accelerator originally created for neural networks. Everyone simply added instructions to their CPU, GPU or DSP. No one did development with 0. Therefore, we decided to do it ourselves. For other components, we purchased standard IP industrial CPUs and GPUs, which allowed us to reduce development time and risks.

Another thing that was a bit unexpected for me was the ability to use existing commands in Tesla. Tesla had excellent teams of developers of power supplies, signal integrity analysis, housing design, firmware, system software, circuit board development, and a really good system validation program. We were able to use all this to speed up the program.

This is how it looks. On the right you see the connectors for video coming from the car’s cameras. Two autonomous driving computers in the center of the board, on the left - the power supply and control connectors. I love it when a solution comes down to its basic elements. You have a video, a computer and power, simple and clear. Here is the previous Hardware 2.5 solution, which included the computer, and which we installed the last two years. Here is a new design for an FSD computer. They are very similar. This, of course, is due to the limitations of the car modernization program. I would like to point out that this is actually a rather small computer. It is placed behind the glove compartment, and does not occupy half of the trunk.

As I said earlier, there are two completely independent computers on the board. They are highlighted in blue and green. On the sides of each SoC you can see DRAM chips. At the bottom left you see FLASH chips that represent the file system. There are two independent computers that boot and run under their own operating system.

Ilon: The general principle is that if any part fails, the machine can continue to move. The camera, the power circuit, one of the Tesla computer chips fails - the machine continues to move. The probability of failure of this computer is significantly lower than the likelihood that the driver will lose consciousness. This is a key indicator, at least an order of magnitude.

Pete:Yes, therefore, one of the things we do to maintain the computer is redundant power supplies. The first chip runs on one power source, and the second on another. The same is for cameras, half of the cameras on the power supply are marked in blue, the other half on green. Both chips receive all the video and process it independently.

From a driving point of view, the sequence is to collect a lot of information from the world around you, we have not only cameras, but also radar, GPS, maps, gyro stabilizer (IMU), ultrasonic sensors around the car. We have a steering angle, we know what the acceleration of a car should be like. All of this comes together to form a plan. When the plan is ready, the two computers exchange their versions of the plan to make sure they match.

Assuming the plan is the same, we issue control signals and drive. Now that you are moving with the new controls, you certainly want to test it. We verify that the transmitted control signals coincide with what we intended to transmit to the actuators in the car. Sensors are used to verify that control is actually taking place. If you ask the car to accelerate, or slow down, or turn right or left, you can look at the accelerometers and make sure that this is really happening. There is significant redundancy and duplication of both our data and our data monitoring capabilities.

Let's talk about the chip. It is packaged in a 37.5 mm BGA with 1600 pins, most of which are power and ground. If you remove the cover, you can see the substrate and the crystal in the center. If you separate the crystal and turn it over, you will see 13,000 C4 bumps scattered across the surface. Below are the twelve metal layers of the integrated circuit. This is a 14-nanometer FinFET CMOS process measuring 260 mm.sq., a small circuit. For comparison, a conventional cell phone chip is about 100 mm2. A high-performance graphics processor will be about 600-800 mm.kv. So we are in the middle. I would call it the golden mean, this is a convenient size for assembly. There are 250 million logic elements and 6 billion transistors, which, although I have been working on this all this time, amaze me.

I would just go around the chip and explain all its parts. I will go in the same order as the pixel coming from the camera. In the upper left corner you can see the camera interface. We can take 2.5 billion pixels per second, which is more than enough for all available sensors. A network that distributes data from a memory system to memory controllers on the right and left edges of the chip. We use standard LPDDR4 operating at a speed of 4266 gigabits per second. This gives us a maximum throughput of 68 gigabytes per second. This is a pretty good bandwidth, but not excessive, we are trying to stay in the middle ground. The image processing processor has a 24-bit internal pipeline, which allows us to fully use the HDR sensors that are in the car. It performs advanced Tone mapping,

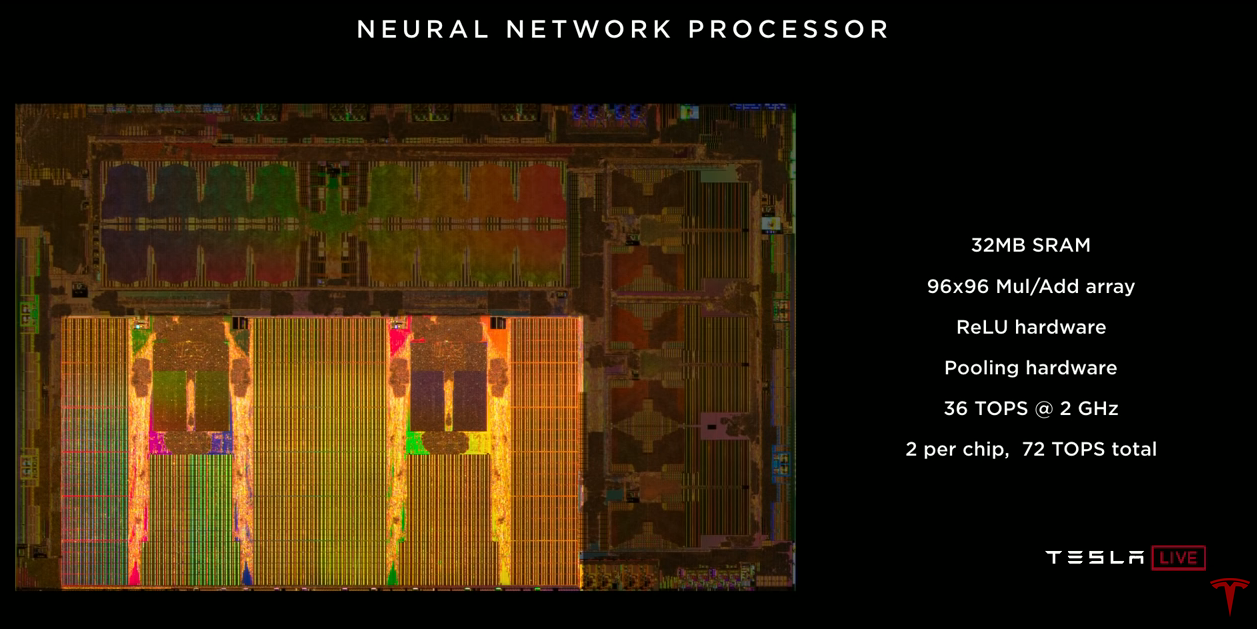

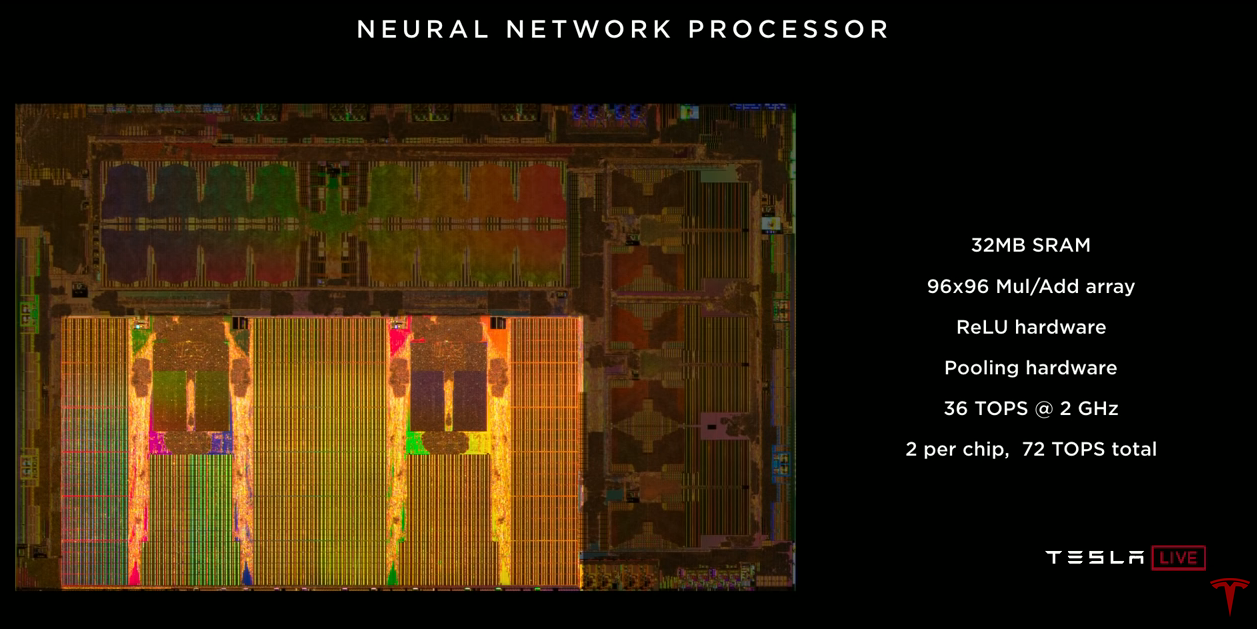

The neural network accelerator itself. There are two on the chip. Each of them has 32 megabytes of SRAM for storing temporary results. This minimizes the amount of data that we need to transfer to the chip and vice versa, which helps reduce power consumption. Each contains an array of 96x96 multipliers with accumulation, which allows us to do almost 10,000 MUL / ADD operations per cycle. There is a dedicated ReLU accelerator, a pooling accelerator. Each of them provides 36 trillion operations per second operating at a frequency of 2 GHz. Two accelerators on a chip give 72 trillion operations per second, which is noticeably higher than the target of 50 trillion.

The video encoder, the video from which we use in the car for many tasks, including outputting images from the rear view camera, video recording, and also for recording data to the cloud, Stuart and Andrew will talk about this later. A rather modest graphics processor is located on the chip. It supports 32 and 16 bit floating point numbers. Also 12 64-bit general-purpose A72 processors. They operate at a frequency of 2.2 GHz, which is approximately 2.5 times higher than the performance of the previous solution. The security system contains two processors that operate in lockstep mode. This system makes the final decision whether it is safe to transmit control signals to the vehicle’s drives. This is where the two planes come together, and we decide whether it’s safe to move forward. And finally, a security system whose task is to ensure

I told you many different performance indicators, and I think it would be useful to look at the future. We will consider a neural network from our (narrow) camera. It takes 35 billion operations. If we use all 12 CPUs to process this network, we can do 1.5 frames per second, it is very slow. Absolutely not enough to drive a car. If we used GPUs with 600 GFLOPs for the same network, we would get 17 frames per second, which is still not enough to drive a car with 8 cameras. Our neural network accelerator can produce 2100 frames per second. You can see that the amount of computation in the CPU and GPU is negligible compared to the neural network accelerator.

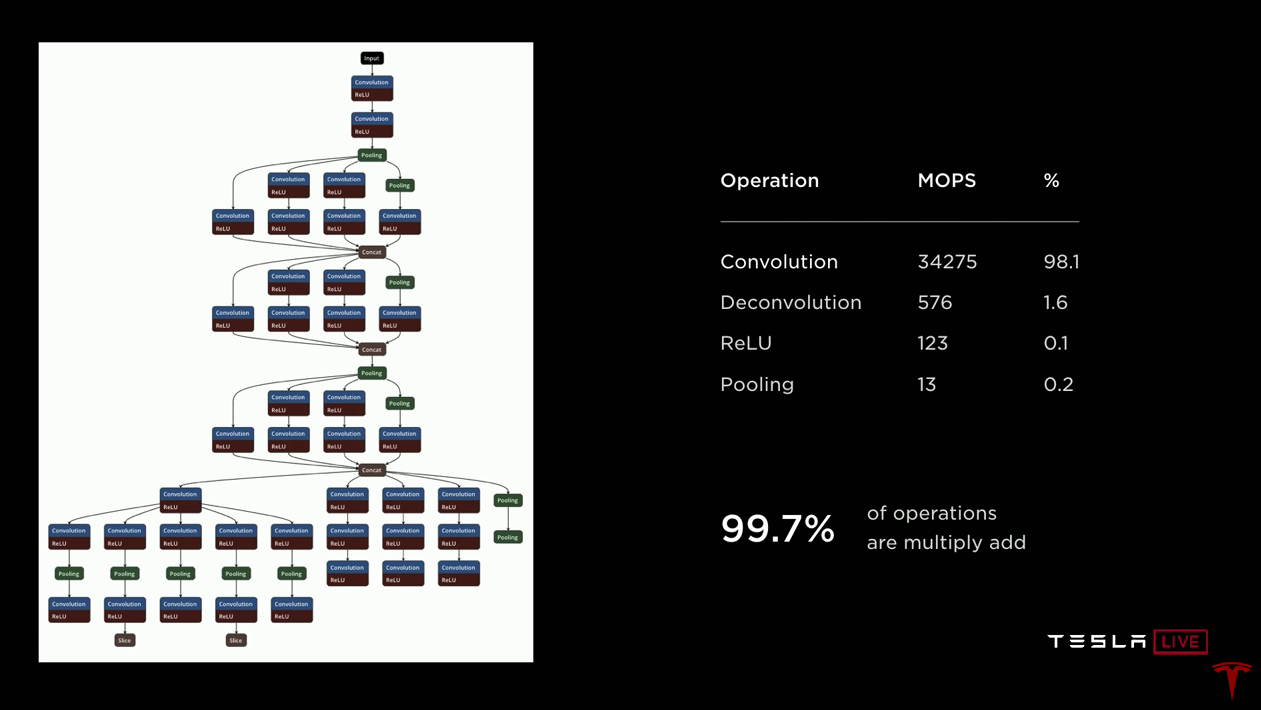

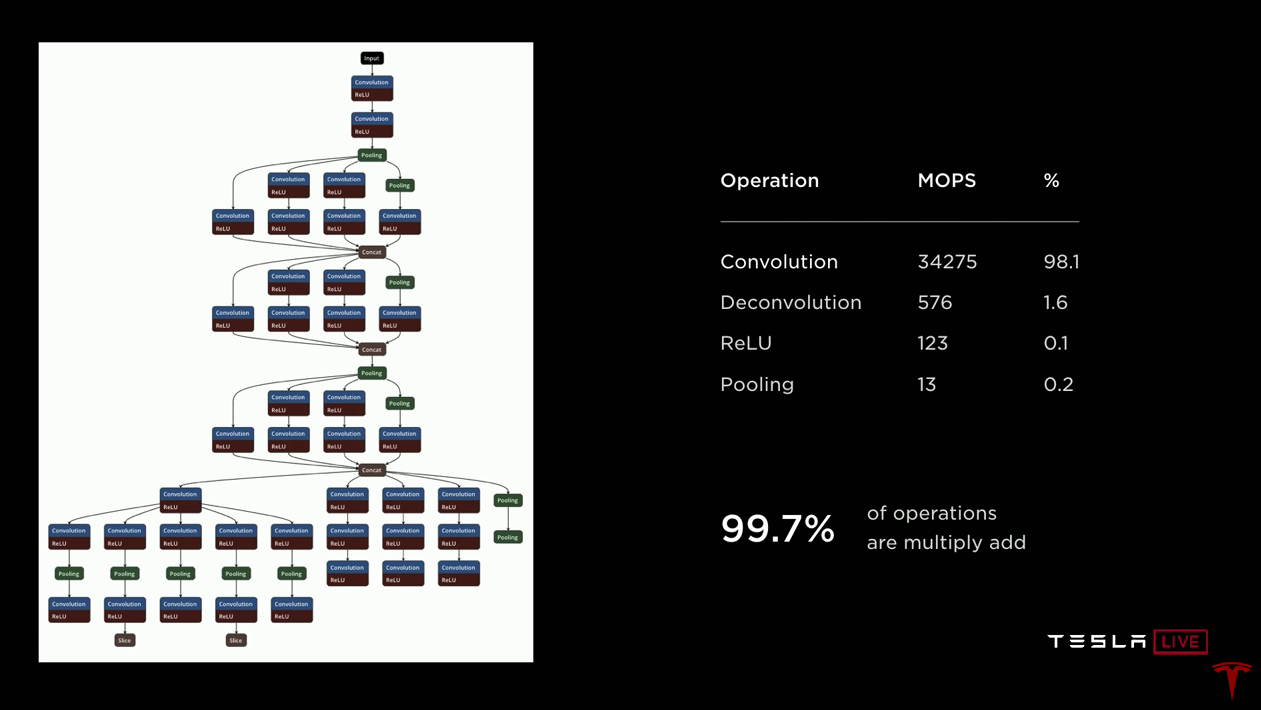

Let's move on to talking about a neural network accelerator. I’ll only drink some water. On the left here is a drawing of a neural network to give you an idea of what is going on. The data arrives at the top and passes through each of the blocks. Data is transmitted along the arrows to various blocks, which are usually convolutions or reverse convolutions with activation functions (ReLUs). Green blocks combine layers. It is important that the data received by one block is then used by the next block, and you no longer need it - you can throw it away. So all this temporary data is created and destroyed when passing through the network. There is no need to store them outside the chip in DRAM. Therefore, we only store them in SRAM, and in a few minutes I will explain why this is very important.

If you look at the right side, you will see that in this network of 35 billion operations, almost all are convolutions, essentially scalar products, the rest are deconvolution (reverse convolutions), also based on the scalar product, and then ReLU and pooling are relatively simple operations. Therefore, if you are developing an accelerator, you focus on implementing scalar products based on addition with accumulation and optimize them. But imagine that you accelerate this operation by 10,000 times and 100% turn into 0.1%. Suddenly, ReLU and pooling operations become very significant. Therefore, our implementation includes dedicated accelerators for ReLU processing and pooling.

The chip operates in a limited heat budget. We needed to be very careful about how we burn power. We want to maximize the amount of arithmetic we can do. Therefore, we chose 32 bit integer addition, it consumes 9 times less energy than floating point addition. And we chose 8-bit integer multiplication, which also consumes significantly less energy than other multiplication operations, and probably has enough accuracy to get good results. As for the memory. Accessing external DRAM is about a hundred times more expensive in terms of power consumption than using local SRAM. It is clear that we want to make the most of local SRAM.

From a management point of view, here is the data that was published in an article by Mark Horowitz, where he critically examined how much energy it takes to execute a single instruction on a regular integer processor. And you see that the addition operation consumes only 0.15% of the total power. Everything else is overhead for management and more. Therefore, in our design we strive to get rid of all this as much as possible. What really interests us is arithmetic.

So here is the design that we have finished. You can see that in it the main place is occupied by 32 megabytes of SRAM, they are left, right, in the center and at the bottom. All calculations are performed in the upper central part. Each cycle, we read 256 bytes of activation data from the SRAM array, 128 bytes of weights from the SRAM array and combine them in a 96x96 array, which performs 9000 additions with accumulation per cycle at 2 GHz. This is only 36.8 trillion. operations. Now that we are done with the scalar product, we upload the data, pass it through the dedicated ReLU, optionally through pulling, and finally put it in the write buffer, where all the results are aggregated. And then we write 128 bytes per clock back to SRAM. And all this happens continuously. We work with scalar products, while we upload the previous results, perform pulling and write the results back to memory. If you stack it all at 2 GHz, you will need 1 terabyte per second of SRAM bandwidth to support operation. And iron provides this. One terabyte per second of bandwidth per accelerator, two accelerators per chip - two terabytes per second.

The accelerator has a relatively small set of commands. There is a DMA read operation for loading data from memory, a DMA write operation for uploading results back to memory. Three convolution instructions (convolution, deconvolution, inner-product). Two relatively simple operations are shift and elementwise operation (eltwise). And of course, the stop operation when the calculations are finished.

We had to develop a neural network compiler. We took a neural network trained by our development team, in the form in which it was used in the old hardware. When you compile it for use on a new accelerator, the compiler performs fusion of layers, which allows us to increase the number of calculations for each call to SRAM. It also performs smoothing of memory accesses. We perform channel padding to reduce conflicts between memory banks. Memory allocation also takes SRAM banks into account. This is the case when conflict processing could be implemented in hardware. But with software implementation, we save on hardware due to some software complexity. We also automatically insert DMA operations so that the data arrives for calculations on time, without stopping the processing. At the end, we generate the code, weights data, compress and add a CRC checksum for reliability. The neural network is loaded into SRAM at startup and is there all the time.

Thus, to start the network, you specify the address of the input buffer, which contains a new image that has just arrived from the camera; set the address of the output buffer; set the pointer to the network weight; go. The accelerator “goes into itself” and will sequentially pass through the entire neural network, usually for one or two million clock cycles. Upon completion, you receive an interrupt and can post-process the results.

Turning to the results. Our goal was to meet 100 watts. Measurements on cars that drive with a full stack of autopilot have shown that we dissipate 72 watts. This is slightly larger than in the previous project, but a significant performance improvement is a good excuse. Of these 72 watts, about 15 watts is consumed in neural networks. The cost of this solution is about 80% of what we paid before. In terms of performance, we took the neural network (narrow) of the camera, which I already mentioned, with 35 billion operations, we launched it on old equipment and received 110 frames per second. We took the same data and the same network, compiled it for the new FSD computer and, using all four accelerators, we can process 2300 frames per second.

Ilon:I think this is perhaps

Pete’s most significant slide : I’ve never worked on a project where the productivity improvement was over 3. So it was pretty fun. Compared with the nvidia Drive Xavier solution, there the chip provides 21 trillion. operations, while our FSDC with two chips - 144 trillion. operations.

So, in conclusion, I think we have created a solution that delivers outstanding performance of 144 trillion. operations for processing a neural network. It has outstanding power characteristics. We managed to squeeze all this productivity into the heat budget that we had. This allows you to implement a duplication solution. The computer has a moderate cost, and what is really important, the FSDC will provide a new level of safety and autonomy in Tesla cars, without affecting their cost and mileage. We all look forward to it.

Ilon: If you have questions about equipment, ask them right now.

The reason I asked Pete to do a detailed, much more detailed than perhaps most people would have appreciated diving into a Tesla FSD computer is as follows. At first glance it seems incredible how it could happen that Tesla, which had never designed such chips before, created the best chip in the world. But this is exactly what happened. And not just the best by a small margin, but the best by a huge margin. All Tesla manufactured right now have this computer. We switched from the Nvidia solution for S and X about a month ago and switched Model 3 about ten days ago. All cars produced have all the necessary hardware and everything necessary for fully autonomous driving. I will say it again: all Tesla cars produced now, have everything you need for full autonomous driving. All you have to do is improve the software. Later today, you can drive cars with a version for developers of improved autonomous driving software. You will see for yourself. Questions.

Q: do you have the ability to use activation functions other than ReLU?

Pete: yes, we have a sigmoid, for example

Q: maybe it was worth switching to a more compact manufacturing process, maybe 10 nm or 7 nm?

Pete: at the time we started to design, not all IPs we wanted to get were available in 10 nm

Ilon: it is worth noting that we completed this design about a year and a half ago and started the next generation. Today we are not talking about the next generation, but we are already halfway. All that is obvious for the next generation chip, we do.

Q: The computer is designed to work with cameras. Can I use it with lidar?

Ilon:Lidar is a disastrous decision, and anyone who relies on lidar is doomed. Doomed. Expensive. Dear sensors that are not needed. It's like having a bunch of expensive unnecessary apps. One small one is nothing, but a bunch is already a nightmare. This is stupid, you will see.

Q: Can you assess the impact of energy consumption on mileage?

Pete: For Model 3, the target consumption is 250 watts per mile.

Ilon:Depends on the nature of driving. In the city, the effect will be much greater than on the highway. You drive in the city for an hour and you have a hypothetical solution that consumes 1 kW. You will lose 6km on Model 3. If the average speed is 25km / h, then you lose 25%. Consumption of the system has a huge impact on mileage in a city where we think that there will be a large part of the robotax market, so power is extremely important.

Q: How reliable is your technology in terms of IP, are you not going to give out IP for free?

Pete: We have filed a dozen patents for this technology. This is essentially linear algebra, which I don’t think you can patent. (Ilon laughs)

Q:your microcircuit can do something, maybe encrypt all the weights so that your intellectual property remains inside, and no one can just steal it.

Ilon: Oh, I would like to meet someone who can do this. I would hire him in an instant. This is a very difficult problem. Even if you can extract the data, you will need a huge amount of resources to use it somehow.

The big sustainable advantage for us is the fleet. No one has a fleet. Weights are constantly updated and improved based on billions of miles traveled. Tesla has a hundred times more cars with the hardware necessary for training than all the others combined. By the end of this quarter, we will have 500,000 vehicles with 8 cameras and 12 ultrasonic sensors. In a year we will have more than a million cars with FSDC. This is just a huge data advantage. This is similar to how the Google search engine has a huge advantage, because people use it, and people actually train Google with their queries.

Host:One thing to remember about our FSD computer is that it can work with much more complex neural networks for much more accurate image recognition. It's time to talk about how we actually get these images and how we analyze them. Here we have a senior director of AI - Andrey Karpaty, who will explain all this to you. Andrei is a PhD from Stanford University, where he studied computer science with an emphasis on recognition and deep learning.

Ilon: Andrey, why don’t you just start, come on. Many doctors came out of Stanford, it doesn’t matter. Andrey is teaching a computer vision course at Stanford, which is much more important. Please tell about yourself.

(Translation turned out to be rather not a quick matter, I don’t know if I have enough for the second part about the neural network training system, although it seems to me the most interesting.)

The text of the presentation is translated close to the original. Questions to the speaker - selectively with abbreviations.

Host: Hello everyone. Sorry for being late. Welcome to our first day of autonomous driving. I hope we can do this more regularly to keep you updated on our developments.

About three months ago, we were preparing for the fourth quarter earnings report with Ilon and other executives. I then said that the biggest gap in conversations with investors, between what I see inside the company and what its external perception is, is our progress in autonomous driving. And this is understandable, for the last couple of years we talked about increasing the production of Model 3, around which there was a lot of debate. In fact, a lot has happened in the background.

We worked on a new chip for autopilot, completely redesigned the machine vision neural network, and finally began to release the Full Self-Driving Computer (FSDC). We thought it was a good idea to just open the veil, invite everyone and tell about everything that we have done over the past two years.

About three years ago we wanted to use, we wanted to find the best chip for autonomous driving. We found that there is no chip that was designed from the ground up for neural networks. Therefore, we invited my colleague Pete Bannon, vice president of integrated circuit design, to develop such a chip for us. He has about 35 years of experience in chip development. Including 12 years at PASemi, which was later acquired by Apple. He worked on dozens of different architectures and implementations, and was the chief designer of the Apple iPhone 5, shortly before joining Tesla. Also joins us on Elon Musk. Thanks.

Ilon:Actually, I was going to introduce Pete, but since they have already done this, I will add that he is simply the best systems and integrated circuit architect in the world that I know. It is an honor that you and your team at Tesla. Please just tell us about the incredible work that you have done.

Pete:Thanks Ilon. I am pleased to be here this morning and it is really nice to tell you about all the work that my colleagues and I have done here in Tesla over the past three years. I will tell you a little about how it all began, and then I will introduce you to the FSDC computer and tell you a little how it works. We will delve into the chip itself and consider some of the details. I will describe how the specialized neural network accelerator that we designed works and then shows some results, and I hope that by that time you will not fall asleep yet.

I was hired in February 2016. I asked Ilon if he was ready to spend as much as needed to create this specialized system, he asked: “Will we win?”, I answered: “Well, yes, of course,” then he said “I’m in business” and it all started . We hired a bunch of people and started thinking about what a chip designed specifically for fully autonomous driving would look like. We spent eighteen months developing the first version, and in August 2017 released it for production. We got the chip in December, it worked, and actually worked very well on the first try. In April 2018, we made several changes and released version B zero Rev. In July 2018, the chip was certified, and we started full-scale production. In December 2018, the autonomous driving stack was launched on new equipment, and we were able to proceed with the conversion of company cars and testing in the real world. In March 2019 we started installing a new computer in models S and X, and in April - in Model 3.

So, the whole program, from hiring the first employees to the full launch in all three models of our cars, took a little more than three years. This is perhaps the fastest system development program I have ever participated in. And it really speaks of the benefits of high vertical integration, allowing you to do parallel design and accelerate deployment.

In terms of goals, we were completely focused solely on the requirements of Tesla, and this greatly simplifies life. If you have one single customer, you do not need to worry about others. One of the goals was to keep power below 100 watts so that we could convert existing machines. We also wanted to lower costs to provide redundancy for greater security.

At the time we poked our fingers at the sky, I argued that driving a car would require a neural network performance of at least 50 trillion operations per second. Therefore, we wanted to get at least as much, and better, more. Batch sizes determine the number of items you work with at the same time. For example, Google TPUs have a packet size of 256, and you need to wait until you have 256 items to process before you can get started. We did not want to wait and developed our engine with a package size of one. As soon as the image appears, we immediately process it to minimize delay and increase security.

We needed a graphics processor to do some post-processing. At first, it occupied quite a lot, but we assumed that over time it would become smaller, since neural networks are getting better and better. And it really happened. We took risks by putting a rather modest graphics processor in the design, and that turned out to be a good idea.

Security is very important, if you do not have a protected car, you cannot have a safe car. Therefore, much attention is paid to security and, of course, security.

In terms of chip architecture, as Ilon mentioned earlier, in 2016 there was no accelerator originally created for neural networks. Everyone simply added instructions to their CPU, GPU or DSP. No one did development with 0. Therefore, we decided to do it ourselves. For other components, we purchased standard IP industrial CPUs and GPUs, which allowed us to reduce development time and risks.

Another thing that was a bit unexpected for me was the ability to use existing commands in Tesla. Tesla had excellent teams of developers of power supplies, signal integrity analysis, housing design, firmware, system software, circuit board development, and a really good system validation program. We were able to use all this to speed up the program.

This is how it looks. On the right you see the connectors for video coming from the car’s cameras. Two autonomous driving computers in the center of the board, on the left - the power supply and control connectors. I love it when a solution comes down to its basic elements. You have a video, a computer and power, simple and clear. Here is the previous Hardware 2.5 solution, which included the computer, and which we installed the last two years. Here is a new design for an FSD computer. They are very similar. This, of course, is due to the limitations of the car modernization program. I would like to point out that this is actually a rather small computer. It is placed behind the glove compartment, and does not occupy half of the trunk.

As I said earlier, there are two completely independent computers on the board. They are highlighted in blue and green. On the sides of each SoC you can see DRAM chips. At the bottom left you see FLASH chips that represent the file system. There are two independent computers that boot and run under their own operating system.

Ilon: The general principle is that if any part fails, the machine can continue to move. The camera, the power circuit, one of the Tesla computer chips fails - the machine continues to move. The probability of failure of this computer is significantly lower than the likelihood that the driver will lose consciousness. This is a key indicator, at least an order of magnitude.

Pete:Yes, therefore, one of the things we do to maintain the computer is redundant power supplies. The first chip runs on one power source, and the second on another. The same is for cameras, half of the cameras on the power supply are marked in blue, the other half on green. Both chips receive all the video and process it independently.

From a driving point of view, the sequence is to collect a lot of information from the world around you, we have not only cameras, but also radar, GPS, maps, gyro stabilizer (IMU), ultrasonic sensors around the car. We have a steering angle, we know what the acceleration of a car should be like. All of this comes together to form a plan. When the plan is ready, the two computers exchange their versions of the plan to make sure they match.

Assuming the plan is the same, we issue control signals and drive. Now that you are moving with the new controls, you certainly want to test it. We verify that the transmitted control signals coincide with what we intended to transmit to the actuators in the car. Sensors are used to verify that control is actually taking place. If you ask the car to accelerate, or slow down, or turn right or left, you can look at the accelerometers and make sure that this is really happening. There is significant redundancy and duplication of both our data and our data monitoring capabilities.

Let's talk about the chip. It is packaged in a 37.5 mm BGA with 1600 pins, most of which are power and ground. If you remove the cover, you can see the substrate and the crystal in the center. If you separate the crystal and turn it over, you will see 13,000 C4 bumps scattered across the surface. Below are the twelve metal layers of the integrated circuit. This is a 14-nanometer FinFET CMOS process measuring 260 mm.sq., a small circuit. For comparison, a conventional cell phone chip is about 100 mm2. A high-performance graphics processor will be about 600-800 mm.kv. So we are in the middle. I would call it the golden mean, this is a convenient size for assembly. There are 250 million logic elements and 6 billion transistors, which, although I have been working on this all this time, amaze me.

I would just go around the chip and explain all its parts. I will go in the same order as the pixel coming from the camera. In the upper left corner you can see the camera interface. We can take 2.5 billion pixels per second, which is more than enough for all available sensors. A network that distributes data from a memory system to memory controllers on the right and left edges of the chip. We use standard LPDDR4 operating at a speed of 4266 gigabits per second. This gives us a maximum throughput of 68 gigabytes per second. This is a pretty good bandwidth, but not excessive, we are trying to stay in the middle ground. The image processing processor has a 24-bit internal pipeline, which allows us to fully use the HDR sensors that are in the car. It performs advanced Tone mapping,

The neural network accelerator itself. There are two on the chip. Each of them has 32 megabytes of SRAM for storing temporary results. This minimizes the amount of data that we need to transfer to the chip and vice versa, which helps reduce power consumption. Each contains an array of 96x96 multipliers with accumulation, which allows us to do almost 10,000 MUL / ADD operations per cycle. There is a dedicated ReLU accelerator, a pooling accelerator. Each of them provides 36 trillion operations per second operating at a frequency of 2 GHz. Two accelerators on a chip give 72 trillion operations per second, which is noticeably higher than the target of 50 trillion.

The video encoder, the video from which we use in the car for many tasks, including outputting images from the rear view camera, video recording, and also for recording data to the cloud, Stuart and Andrew will talk about this later. A rather modest graphics processor is located on the chip. It supports 32 and 16 bit floating point numbers. Also 12 64-bit general-purpose A72 processors. They operate at a frequency of 2.2 GHz, which is approximately 2.5 times higher than the performance of the previous solution. The security system contains two processors that operate in lockstep mode. This system makes the final decision whether it is safe to transmit control signals to the vehicle’s drives. This is where the two planes come together, and we decide whether it’s safe to move forward. And finally, a security system whose task is to ensure

I told you many different performance indicators, and I think it would be useful to look at the future. We will consider a neural network from our (narrow) camera. It takes 35 billion operations. If we use all 12 CPUs to process this network, we can do 1.5 frames per second, it is very slow. Absolutely not enough to drive a car. If we used GPUs with 600 GFLOPs for the same network, we would get 17 frames per second, which is still not enough to drive a car with 8 cameras. Our neural network accelerator can produce 2100 frames per second. You can see that the amount of computation in the CPU and GPU is negligible compared to the neural network accelerator.

Let's move on to talking about a neural network accelerator. I’ll only drink some water. On the left here is a drawing of a neural network to give you an idea of what is going on. The data arrives at the top and passes through each of the blocks. Data is transmitted along the arrows to various blocks, which are usually convolutions or reverse convolutions with activation functions (ReLUs). Green blocks combine layers. It is important that the data received by one block is then used by the next block, and you no longer need it - you can throw it away. So all this temporary data is created and destroyed when passing through the network. There is no need to store them outside the chip in DRAM. Therefore, we only store them in SRAM, and in a few minutes I will explain why this is very important.

If you look at the right side, you will see that in this network of 35 billion operations, almost all are convolutions, essentially scalar products, the rest are deconvolution (reverse convolutions), also based on the scalar product, and then ReLU and pooling are relatively simple operations. Therefore, if you are developing an accelerator, you focus on implementing scalar products based on addition with accumulation and optimize them. But imagine that you accelerate this operation by 10,000 times and 100% turn into 0.1%. Suddenly, ReLU and pooling operations become very significant. Therefore, our implementation includes dedicated accelerators for ReLU processing and pooling.

The chip operates in a limited heat budget. We needed to be very careful about how we burn power. We want to maximize the amount of arithmetic we can do. Therefore, we chose 32 bit integer addition, it consumes 9 times less energy than floating point addition. And we chose 8-bit integer multiplication, which also consumes significantly less energy than other multiplication operations, and probably has enough accuracy to get good results. As for the memory. Accessing external DRAM is about a hundred times more expensive in terms of power consumption than using local SRAM. It is clear that we want to make the most of local SRAM.

From a management point of view, here is the data that was published in an article by Mark Horowitz, where he critically examined how much energy it takes to execute a single instruction on a regular integer processor. And you see that the addition operation consumes only 0.15% of the total power. Everything else is overhead for management and more. Therefore, in our design we strive to get rid of all this as much as possible. What really interests us is arithmetic.

So here is the design that we have finished. You can see that in it the main place is occupied by 32 megabytes of SRAM, they are left, right, in the center and at the bottom. All calculations are performed in the upper central part. Each cycle, we read 256 bytes of activation data from the SRAM array, 128 bytes of weights from the SRAM array and combine them in a 96x96 array, which performs 9000 additions with accumulation per cycle at 2 GHz. This is only 36.8 trillion. operations. Now that we are done with the scalar product, we upload the data, pass it through the dedicated ReLU, optionally through pulling, and finally put it in the write buffer, where all the results are aggregated. And then we write 128 bytes per clock back to SRAM. And all this happens continuously. We work with scalar products, while we upload the previous results, perform pulling and write the results back to memory. If you stack it all at 2 GHz, you will need 1 terabyte per second of SRAM bandwidth to support operation. And iron provides this. One terabyte per second of bandwidth per accelerator, two accelerators per chip - two terabytes per second.

The accelerator has a relatively small set of commands. There is a DMA read operation for loading data from memory, a DMA write operation for uploading results back to memory. Three convolution instructions (convolution, deconvolution, inner-product). Two relatively simple operations are shift and elementwise operation (eltwise). And of course, the stop operation when the calculations are finished.

We had to develop a neural network compiler. We took a neural network trained by our development team, in the form in which it was used in the old hardware. When you compile it for use on a new accelerator, the compiler performs fusion of layers, which allows us to increase the number of calculations for each call to SRAM. It also performs smoothing of memory accesses. We perform channel padding to reduce conflicts between memory banks. Memory allocation also takes SRAM banks into account. This is the case when conflict processing could be implemented in hardware. But with software implementation, we save on hardware due to some software complexity. We also automatically insert DMA operations so that the data arrives for calculations on time, without stopping the processing. At the end, we generate the code, weights data, compress and add a CRC checksum for reliability. The neural network is loaded into SRAM at startup and is there all the time.

Thus, to start the network, you specify the address of the input buffer, which contains a new image that has just arrived from the camera; set the address of the output buffer; set the pointer to the network weight; go. The accelerator “goes into itself” and will sequentially pass through the entire neural network, usually for one or two million clock cycles. Upon completion, you receive an interrupt and can post-process the results.

Turning to the results. Our goal was to meet 100 watts. Measurements on cars that drive with a full stack of autopilot have shown that we dissipate 72 watts. This is slightly larger than in the previous project, but a significant performance improvement is a good excuse. Of these 72 watts, about 15 watts is consumed in neural networks. The cost of this solution is about 80% of what we paid before. In terms of performance, we took the neural network (narrow) of the camera, which I already mentioned, with 35 billion operations, we launched it on old equipment and received 110 frames per second. We took the same data and the same network, compiled it for the new FSD computer and, using all four accelerators, we can process 2300 frames per second.

Ilon:I think this is perhaps

Pete’s most significant slide : I’ve never worked on a project where the productivity improvement was over 3. So it was pretty fun. Compared with the nvidia Drive Xavier solution, there the chip provides 21 trillion. operations, while our FSDC with two chips - 144 trillion. operations.

So, in conclusion, I think we have created a solution that delivers outstanding performance of 144 trillion. operations for processing a neural network. It has outstanding power characteristics. We managed to squeeze all this productivity into the heat budget that we had. This allows you to implement a duplication solution. The computer has a moderate cost, and what is really important, the FSDC will provide a new level of safety and autonomy in Tesla cars, without affecting their cost and mileage. We all look forward to it.

Ilon: If you have questions about equipment, ask them right now.

The reason I asked Pete to do a detailed, much more detailed than perhaps most people would have appreciated diving into a Tesla FSD computer is as follows. At first glance it seems incredible how it could happen that Tesla, which had never designed such chips before, created the best chip in the world. But this is exactly what happened. And not just the best by a small margin, but the best by a huge margin. All Tesla manufactured right now have this computer. We switched from the Nvidia solution for S and X about a month ago and switched Model 3 about ten days ago. All cars produced have all the necessary hardware and everything necessary for fully autonomous driving. I will say it again: all Tesla cars produced now, have everything you need for full autonomous driving. All you have to do is improve the software. Later today, you can drive cars with a version for developers of improved autonomous driving software. You will see for yourself. Questions.

Q: do you have the ability to use activation functions other than ReLU?

Pete: yes, we have a sigmoid, for example

Q: maybe it was worth switching to a more compact manufacturing process, maybe 10 nm or 7 nm?

Pete: at the time we started to design, not all IPs we wanted to get were available in 10 nm

Ilon: it is worth noting that we completed this design about a year and a half ago and started the next generation. Today we are not talking about the next generation, but we are already halfway. All that is obvious for the next generation chip, we do.

Q: The computer is designed to work with cameras. Can I use it with lidar?

Ilon:Lidar is a disastrous decision, and anyone who relies on lidar is doomed. Doomed. Expensive. Dear sensors that are not needed. It's like having a bunch of expensive unnecessary apps. One small one is nothing, but a bunch is already a nightmare. This is stupid, you will see.

Q: Can you assess the impact of energy consumption on mileage?

Pete: For Model 3, the target consumption is 250 watts per mile.

Ilon:Depends on the nature of driving. In the city, the effect will be much greater than on the highway. You drive in the city for an hour and you have a hypothetical solution that consumes 1 kW. You will lose 6km on Model 3. If the average speed is 25km / h, then you lose 25%. Consumption of the system has a huge impact on mileage in a city where we think that there will be a large part of the robotax market, so power is extremely important.

Q: How reliable is your technology in terms of IP, are you not going to give out IP for free?

Pete: We have filed a dozen patents for this technology. This is essentially linear algebra, which I don’t think you can patent. (Ilon laughs)

Q:your microcircuit can do something, maybe encrypt all the weights so that your intellectual property remains inside, and no one can just steal it.

Ilon: Oh, I would like to meet someone who can do this. I would hire him in an instant. This is a very difficult problem. Even if you can extract the data, you will need a huge amount of resources to use it somehow.

The big sustainable advantage for us is the fleet. No one has a fleet. Weights are constantly updated and improved based on billions of miles traveled. Tesla has a hundred times more cars with the hardware necessary for training than all the others combined. By the end of this quarter, we will have 500,000 vehicles with 8 cameras and 12 ultrasonic sensors. In a year we will have more than a million cars with FSDC. This is just a huge data advantage. This is similar to how the Google search engine has a huge advantage, because people use it, and people actually train Google with their queries.

Host:One thing to remember about our FSD computer is that it can work with much more complex neural networks for much more accurate image recognition. It's time to talk about how we actually get these images and how we analyze them. Here we have a senior director of AI - Andrey Karpaty, who will explain all this to you. Andrei is a PhD from Stanford University, where he studied computer science with an emphasis on recognition and deep learning.

Ilon: Andrey, why don’t you just start, come on. Many doctors came out of Stanford, it doesn’t matter. Andrey is teaching a computer vision course at Stanford, which is much more important. Please tell about yourself.

(Translation turned out to be rather not a quick matter, I don’t know if I have enough for the second part about the neural network training system, although it seems to me the most interesting.)