How we evaluated the quality of documentation

Hello, Habr! My name is Lesha, and I am a systems analyst on one of Alfa-Bank's product teams. I am currently working on a new internet bank for legal entities and individual entrepreneurs.

When you are an analyst, especially in a channel like this, you get nowhere without documentation and a lot of careful work on it. And documentation is the thing that always raises a lot of questions. Why is the web application not described? Why does the specification say how the service should work when it does not work that way at all? Why can only two people understand the specification, one of whom wrote it?

Still, documentation cannot be ignored, for obvious reasons. So, to make our lives easier, we decided to assess the quality of our documentation. How exactly we did this and what conclusions we reached is below the cut.

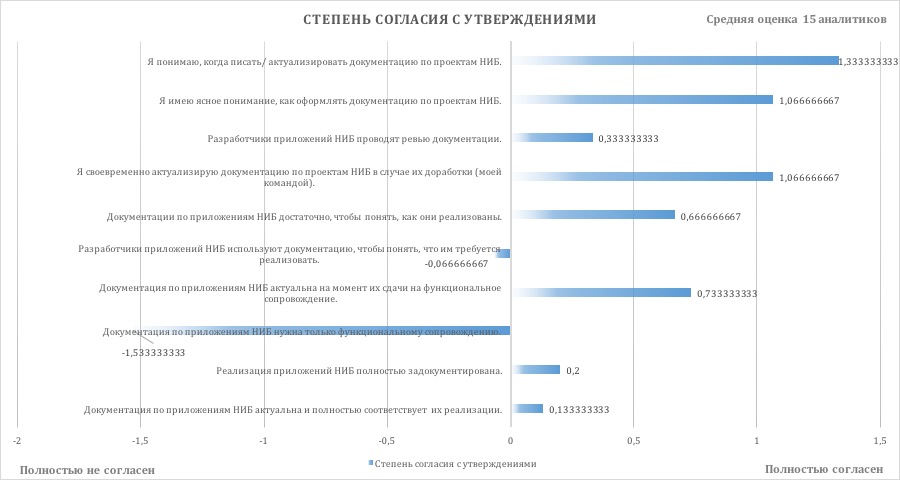

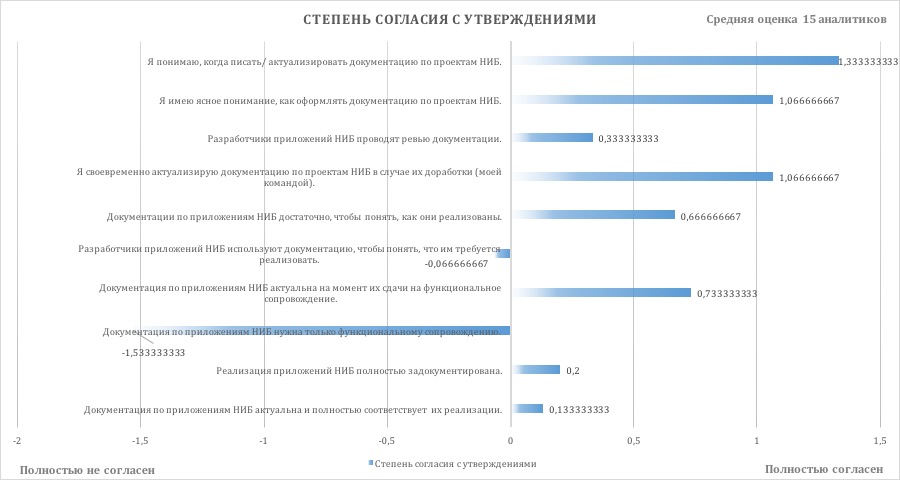

Documentation Quality

To avoid repeating “New Internet Bank” several dozen times, I will write NIB. More than a dozen teams are now developing the NIB for entrepreneurs and legal entities. Each of them either creates documentation from scratch for a new service or web application, or changes the existing documentation. With this approach, can the documentation be of high quality, even in principle?

To define documentation quality, we identified three main characteristics.

- It must be complete. This sounds like stating the obvious, but it is worth noting: the documentation should describe every element of the implemented solution in detail.

- It should be relevant, that is, up to date: it should match the current implementation of the solution.

- It should be clear, so that the person using it understands how the solution is implemented.

In short: complete, relevant, and understandable documentation.

Poll

To assess the quality of the documentation, we decided to survey those who work with it directly: the NIB analysts. Respondents were asked to rate 10 statements on a scale of 1 to 5 (from “completely disagree” to “completely agree”).

The statements reflected the characteristics of good documentation and the survey authors' own opinions about the NIB documents.

- The documentation on NIB applications is relevant and fully consistent with their implementation.

- The implementation of the NIB applications is fully documented.

- Documentation on NIB applications is only needed for functional support.

- The documentation on NIB applications is relevant at the time they are handed over to functional support.

- NIB application developers use documentation to understand what they need to implement.

- The documentation for the NIB applications is enough to understand how they are implemented.

- I update the documentation on NIB projects in a timely manner when they are modified (by my team).

- NIB application developers review documentation.

- I have a clear understanding of how to document NIB projects.

- I understand when to write / update documentation on NIB projects.

Clearly, a bare score from 1 to 5 could not reveal the necessary details, so respondents could also leave a comment on each item.

We did all this through the corporate Slack: we simply sent the system analysts an invitation to take the survey. There were 15 analysts (9 from Moscow and 6 from St. Petersburg). After the survey was completed, we calculated an average rating for each of the 10 statements and then normalized it.
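To make the averaging step concrete, here is a minimal sketch in Python. The exact normalization is not described above, so the sketch rests on an assumption: the average is shifted by the midpoint of the scale (3), so that scores run from -2 (completely disagree) to 2 (completely agree). That would be consistent with the range of the values quoted in the results. The function name and the sample ratings are hypothetical and are not real survey data.

```python
from statistics import mean

# Assumption: ratings are centered on the midpoint of the 1-to-5 scale.
SCALE_MIN, SCALE_MAX = 1, 5
SCALE_MIDPOINT = (SCALE_MIN + SCALE_MAX) / 2  # 3.0


def normalized_score(ratings):
    """Average the ratings for one statement and shift them to the -2..2 range."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(not SCALE_MIN <= r <= SCALE_MAX for r in ratings):
        raise ValueError("ratings must be between 1 and 5")
    return mean(ratings) - SCALE_MIDPOINT


# Hypothetical answers from 15 analysts to a single statement.
sample_ratings = [3] * 12 + [4] * 3
print(round(normalized_score(sample_ratings), 2))  # prints 0.2
```

With this convention, a positive score means respondents lean toward agreement, a negative one toward disagreement, and a value near zero means there is no clear position.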

Here is what we got.

The survey showed that although analysts tend to believe that the implementation of NIB applications is fully documented, their agreement is far from unequivocal (0.2). As a concrete example, they pointed out that a number of databases and queues from existing solutions are not covered by documentation. A developer is in a position to tell the analyst that not everything is documented. But the statement that developers review documentation did not receive clear support either (0.33). So the risk of incomplete descriptions of implemented solutions remains.

Relevance is a simpler story: although again there is no unequivocal agreement (0.13), analysts still tend to consider the documentation relevant. The comments showed that problems with relevance occur more often on the frontend than in the middle layer. Admittedly, nobody wrote anything about the backend.

As for whether the analysts themselves understand when to write and update documentation, agreement was much more uniform (1.33), and the same goes for how to format it (1.07). The inconvenience noted here is the lack of uniform rules for maintaining documentation. So, to avoid everyone going their own way, analysts have to work from examples of existing documentation. Hence a useful request: create a standard for maintaining documents and develop templates for their parts.

The documentation on NIB applications is relevant at the time of handover to functional support (0.73). That is understandable, because up-to-date documentation is one of the criteria for handing a project over to functional support. It is also sufficient for understanding the implementation (0.67), although questions sometimes remain.

What the respondents did disagree with (almost unanimously) is that documentation on NIB applications is, in principle, needed only for functional support (-1.53). Analysts were mentioned most often as consumers of the documentation; the other team members (developers) were mentioned much less often. Moreover, analysts lean toward the view that developers do not use the documentation to understand what they need to implement, though far from unanimously (-0.06). This, by the way, is to be expected when code development and documentation writing proceed in parallel.

What we ended up with and why we need these numbers

To improve the quality of our documents, we decided to do the following:

- Ask developers to review the written documents.

- Update the documentation promptly wherever possible, starting with the frontend.

- Create and adopt a standard for documenting NIB projects, so that everyone can quickly understand which elements of the system to describe and how. And develop the corresponding templates.

All this should help raise the quality of our documents to a new level.

At least I hope so.