Auto Tiering features in Qsan XCubeSAN storage

Continuing our look at technologies that accelerate I/O operations in storage systems, begun in the previous article, we cannot skip such a popular option as Auto Tiering. Although the underlying idea is much the same across storage vendors, we will examine the specifics of the tiering implementation using Qsan storage as an example.

Despite the variety of data kept on a storage system, that data can be divided into several groups by relevance (frequency of use). The most popular ("hot") data needs the fastest possible access, while less popular ("cold") data can be processed with lower priority.

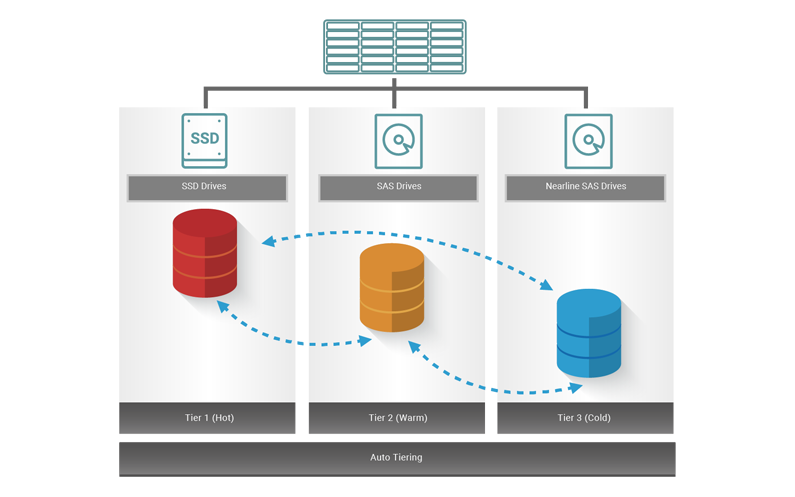

Tiering is used to organize such a scheme. Here the array consists not of a single type of disk but of several groups of drives that form separate storage tiers. A special algorithm automatically moves data between tiers to maximize overall performance.

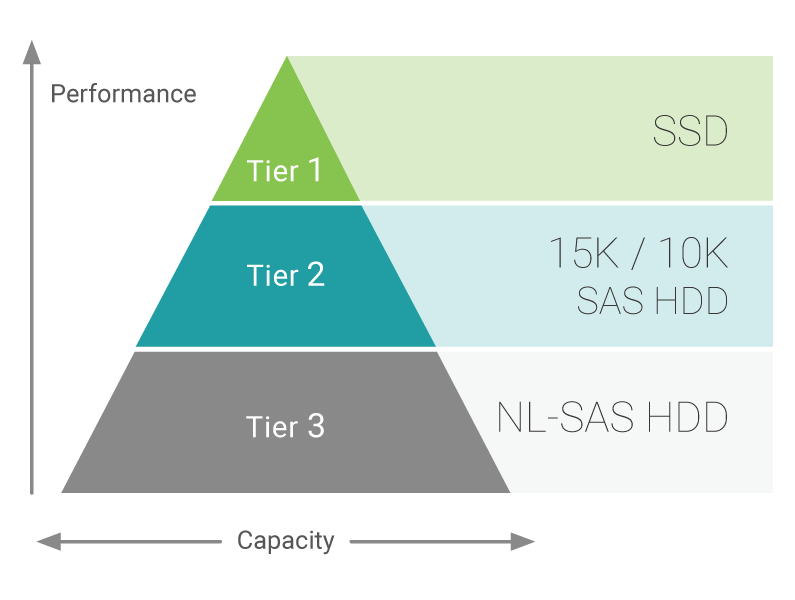

Qsan storage systems support up to three storage tiers:

- Tier 1: SSD, maximum performance

- Tier 2: HDD SAS 10K / 15K, high performance

- Tier 3: HDD NL-SAS 7.2K, maximum capacity

An Auto Tiering pool can contain all three tiers or any two of them. Within each tier, drives are combined into familiar RAID groups, and for maximum flexibility each tier can use a different RAID level. For example, nothing prevents you from building a structure such as 4x SSD RAID10 + 6x HDD 10K RAID5 + 12x HDD 7.2K RAID6.
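To make the mixed layout concrete, here is a minimal sketch that estimates usable capacity per tier for a 4x SSD RAID10 + 6x HDD 10K RAID5 + 12x HDD 7.2K RAID6 pool. The drive sizes are assumptions, since the article gives only drive counts and RAID levels. The `TierGroup` class is likewise a hypothetical model, not a Qsan API. The usable fractions are the standard ones: mirroring keeps half the drives for data, RAID5 gives up one drive's worth of capacity to parity, and RAID6 gives up two.

```python
from dataclasses import dataclass


@dataclass
class TierGroup:
    """One RAID group inside an Auto Tiering pool (illustrative model only)."""
    tier: int          # 1 = fastest
    media: str
    raid_level: str
    drives: int
    drive_tb: float    # hypothetical drive size in TB, not from the article

    def usable_tb(self) -> float:
        if self.raid_level in ("RAID1", "RAID10"):
            data_drives = self.drives // 2   # mirroring: half the drives hold data
        elif self.raid_level == "RAID5":
            data_drives = self.drives - 1    # one drive's worth of parity
        elif self.raid_level == "RAID6":
            data_drives = self.drives - 2    # two drives' worth of parity
        else:
            raise ValueError(f"unsupported RAID level: {self.raid_level}")
        return data_drives * self.drive_tb


# The mixed layout from the text; drive sizes are assumptions.
pool = [
    TierGroup(1, "SSD", "RAID10", 4, 1.92),
    TierGroup(2, "HDD 10K", "RAID5", 6, 1.2),
    TierGroup(3, "HDD 7.2K", "RAID6", 12, 8.0),
]

for group in pool:
    print(f"Tier {group.tier} ({group.media}, {group.raid_level}): "
          f"{group.usable_tb():.2f} TB usable")
print(f"Pool total: {sum(g.usable_tb() for g in pool):.2f} TB")
```

With these assumed sizes, the SSD tier holds only about 4% of the pool. This is why the system has to decide carefully which data earns a place there.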

Once volumes (virtual disks) are created in the Auto Tiering pool, the system begins collecting statistics on all I/O operations in the background. To do this, the space is divided into 1 GB blocks (so-called sub-LUNs). Each time a block is accessed, it is assigned a coefficient of 1, which then decreases over time. After 24 hours without I/O requests to that block, the coefficient will have dropped to 0.5, and it continues to fall with each subsequent hour.
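The statistics described above can be modelled roughly as follows. This is a minimal sketch that assumes the coefficient decays exponentially with a 24-hour half-life and is updated once per idle hour. The article states only the endpoints (1 on access, 0.5 after 24 idle hours, still falling afterwards), so the exact curve Qsan uses may differ. The sketch also takes "1 GB" as 2^30 bytes. `ActivityTracker`, `sub_lun_index` and `HOURLY_FACTOR` are hypothetical names.

```python
SUB_LUN_BYTES = 1 << 30      # 1 GB sub-LUN granularity (assumed to mean 2**30 bytes)
HALF_LIFE_HOURS = 24         # coefficient falls to 0.5 after 24 idle hours (from the article)
# Assumption: exponential decay, applied once per hour.
HOURLY_FACTOR = 0.5 ** (1 / HALF_LIFE_HOURS)


def sub_lun_index(byte_offset: int) -> int:
    """Return the index of the 1 GB sub-LUN containing a byte offset."""
    return byte_offset // SUB_LUN_BYTES


class ActivityTracker:
    """Hypothetical per-volume activity statistics."""

    def __init__(self) -> None:
        self.scores: dict[int, float] = {}

    def record_io(self, byte_offset: int) -> None:
        # Any access (re)assigns the sub-LUN a coefficient of 1.
        self.scores[sub_lun_index(byte_offset)] = 1.0

    def tick_hour(self) -> None:
        # Once per hour, every coefficient decays by the same factor.
        for idx in self.scores:
            self.scores[idx] *= HOURLY_FACTOR


tracker = ActivityTracker()
tracker.record_io(5 * SUB_LUN_BYTES + 4096)   # lands in sub-LUN 5
for _ in range(24):
    tracker.tick_hour()
print(round(tracker.scores[5], 3))            # 0.5 after a full idle day
```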

At a set time (by default, every day at midnight), the sub-LUNs are ranked by activity according to their coefficients. Based on this ranking, the system decides which blocks to move and in which direction, and then actually relocates the data between tiers.
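A simplified version of the ranking step might look like this. The sketch sorts sub-LUNs by score and fills tiers from fastest to slowest until each tier's capacity, counted in sub-LUNs, is used up. It then lists only the blocks whose tier changes. `plan_relocation` is a hypothetical helper, and Qsan's real algorithm may weigh additional factors.

```python
def plan_relocation(
    scores: dict[int, float],
    current_tier: dict[int, int],
    tier_capacity: dict[int, int],
) -> list[tuple[int, int, int]]:
    """Rank sub-LUNs by activity and return (sub_lun, from_tier, to_tier) moves.

    scores:        activity coefficient per sub-LUN
    current_tier:  tier each sub-LUN occupies now (1 = fastest)
    tier_capacity: capacity of each tier, counted in sub-LUNs
    """
    # Hottest first; ties broken by sub-LUN index so the plan is deterministic.
    ranked = sorted(scores, key=lambda s: (-scores[s], s))

    # One slot per sub-LUN of capacity, fastest tier first.
    slots = [t for t in sorted(tier_capacity) for _ in range(tier_capacity[t])]
    if len(ranked) > len(slots):
        raise ValueError("pool capacity is smaller than the data to place")

    target = dict(zip(ranked, slots))
    # Only blocks whose tier changes need to be moved.
    return [
        (s, current_tier[s], target[s])
        for s in ranked
        if current_tier[s] != target[s]
    ]


moves = plan_relocation(
    scores={0: 0.2, 1: 1.0, 2: 0.5, 3: 0.9},
    current_tier={0: 1, 1: 2, 2: 2, 3: 2},
    tier_capacity={1: 2, 2: 4},
)
print(moves)  # [(1, 2, 1), (3, 2, 1), (0, 1, 2)]
```

In this example, sub-LUN 0 is demoted even though it already sits on SSD. With only two SSD slots, the two hottest blocks take them.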

Qsan storage systems offer fine-grained control over the tiering process through a variety of parameters, so the resulting performance of the array can be tuned very flexibly.

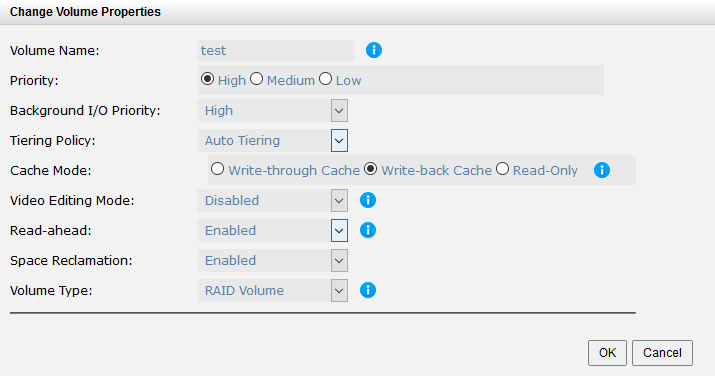

Policies, set separately for each volume, determine the initial placement of data and the preferred direction of its movement:

- Auto Tiering - the default policy. Initial placement and the direction of movement are determined automatically, i.e. “hot” data rises to the highest tier and “cold” data moves down. Initial placement is chosen based on the free space in each tier. Keep in mind, though, that the system primarily tries to make maximum use of the fastest drives, so if there is free space, data will be placed in the upper tiers. This policy suits most scenarios in which demand for data cannot be predicted in advance.

- Start high, and then Auto Tiering - differs from the previous policy only in the initial placement of data (on the fastest tier).

- The highest level - data always tends to occupy the fastest tier. If it is moved down in the process, it is moved back up as soon as possible. This policy suits data that requires the fastest access.

- Minimum level - data always tends to occupy the lowest tier. This policy is ideal for rarely used data (for example, archives).

- No movement - the system automatically determines the initial placement of data and does not move it afterwards. However, statistics continue to be collected in case relocation is needed later.
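The effect of each policy can be summarized in two small functions: one for where new data lands and one for where a sub-LUN is steered at relocation time. This is an illustrative sketch only. The enum member names are our own labels for the policies above. `ranked_tier` stands for the tier the activity ranking would assign to the sub-LUN. For brevity, the "highest" and "minimum" targets ignore tier capacity. For Auto Tiering and No movement, the real initial placement is chosen by the system. The sketch approximates it with the article's remark that free space on faster tiers is used first.

```python
from enum import Enum


class Policy(Enum):
    """Our own labels for the per-volume policies described in the article."""
    AUTO_TIERING = "Auto Tiering"
    START_HIGH_THEN_AUTO = "Start high, and then Auto Tiering"
    HIGHEST = "The highest level"
    LOWEST = "Minimum level"
    NO_MOVEMENT = "No movement"


def initial_tier(policy: Policy, free: dict[int, int]) -> int:
    """Pick a tier for newly written data; free maps tier -> free sub-LUNs."""
    with_space = [t for t in sorted(free) if free[t] > 0]
    if not with_space:
        raise RuntimeError("pool is full")
    if policy is Policy.LOWEST:
        return with_space[-1]
    # Simplification: every other policy starts on the fastest tier with room.
    return with_space[0]


def target_tier(policy: Policy, current: int, ranked_tier: int,
                tiers: list[int]) -> int:
    """Tier a sub-LUN should occupy after the next relocation run.

    tiers: tiers present in the pool, fastest first.
    """
    if policy is Policy.NO_MOVEMENT:
        return current        # stays put; statistics are still collected
    if policy is Policy.HIGHEST:
        return tiers[0]       # always pulled back to the fastest tier
    if policy is Policy.LOWEST:
        return tiers[-1]      # always pushed to the slowest tier
    return ranked_tier        # both Auto Tiering variants follow activity


print(target_tier(Policy.HIGHEST, current=3, ranked_tier=2, tiers=[1, 2, 3]))  # 1
```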

Note that although a policy is set when each volume is created, it can be changed on the fly any number of times during the system's life cycle.

Besides the policies, the tiering mechanism lets you configure the frequency and pace of data movement between tiers. You can schedule relocation daily or on specific days of the week, and you can shorten the statistics collection interval to a few hours (the minimum interval is 2 hours). If the duration of a relocation run needs to be limited, you can set a time frame (a relocation window). The relocation speed is also configurable, with three modes: fast, medium and slow.
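The scheduling options can be captured in a small configuration object. The sketch below is hypothetical: the field names and the `in_window` helper are not Qsan's. It encodes only what the article states: daily or weekday-specific runs, a minimum interval of 2 hours, an optional relocation window, and three speed modes. The defaults match those given in the article (maximum speed, once a day at midnight). The window check also handles windows that cross midnight.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Optional

MIN_INTERVAL_HOURS = 2  # minimum interval stated in the article


class Speed(Enum):
    FAST = "fast"
    MEDIUM = "medium"
    SLOW = "slow"


@dataclass(frozen=True)
class RelocationSchedule:
    """Hypothetical model of the relocation settings (not Qsan's field names)."""
    days: FrozenSet[int] = frozenset(range(7))  # 0 = Monday ... 6 = Sunday; all = daily
    interval_hours: int = 24                    # statistics collection interval
    start_hour: int = 0                         # default run at midnight
    window_hours: Optional[int] = None          # optional cap on run duration
    speed: Speed = Speed.FAST                   # default: maximum speed

    def __post_init__(self) -> None:
        if self.interval_hours < MIN_INTERVAL_HOURS:
            raise ValueError(f"interval must be at least {MIN_INTERVAL_HOURS} hours")
        if not 0 <= self.start_hour <= 23:
            raise ValueError("start_hour must be 0-23")
        if self.window_hours is not None and not 1 <= self.window_hours <= 24:
            raise ValueError("window_hours must be 1-24")
        if not self.days or not self.days <= frozenset(range(7)):
            raise ValueError("days must be a non-empty subset of 0-6")

    def in_window(self, hour: int) -> bool:
        """True if a run started at start_hour may still be moving data at this hour."""
        if self.window_hours is None:
            return True
        elapsed = (hour - self.start_hour) % 24   # wraps correctly past midnight
        return elapsed < self.window_hours


# Weekend-only, slow relocation between 22:00 and 02:00.
night = RelocationSchedule(days=frozenset({5, 6}), start_hour=22,
                           window_hours=4, speed=Speed.SLOW)
print(night.in_window(1), night.in_window(3))  # True False
```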

If data must be relocated immediately, the administrator can start relocation manually at any time.

Clearly, the more often and the faster data moves between tiers, the more closely the storage system adapts to current operating conditions. At the same time, remember that relocation is an additional load (primarily on the disks), so data should not be shuffled around without real need. It is better to schedule relocation for periods of minimum load. If the storage system must deliver high performance around the clock, it is worth reducing the relocation speed to a minimum.

The abundance of tiering settings will certainly please advanced users. However, those encountering this technology for the first time have nothing to worry about. It is entirely reasonable to trust the default settings (Auto Tiering policy, relocation at maximum speed once a day at night) and, as statistics accumulate, to adjust individual parameters to achieve the desired result.

When comparing tiering with SSD caching, another popular technology for increasing performance, keep in mind that their algorithms work on different principles.

| | SSD caching | Auto tiering |
|---|---|---|
| Time to effect | Almost instant, but the effect becomes noticeable only after the cache “warms up” (minutes to hours) | After statistics are collected (from 2 hours, ideally a day), plus the time to move the data |
| Duration of effect | Until the data is replaced by a new portion (minutes to hours) | As long as demand for the data persists (a day or more) |
| Best suited for | Quick performance boosts over short periods (databases, virtualization environments) | Sustained performance gains over long periods (file, web, mail servers) |

Another feature of tiering is that it can be used not only in “SSD + HDD” configurations but also in “fast HDD + slow HDD” configurations or with all three tiers at once. This is fundamentally impossible with SSD caching.

Testing

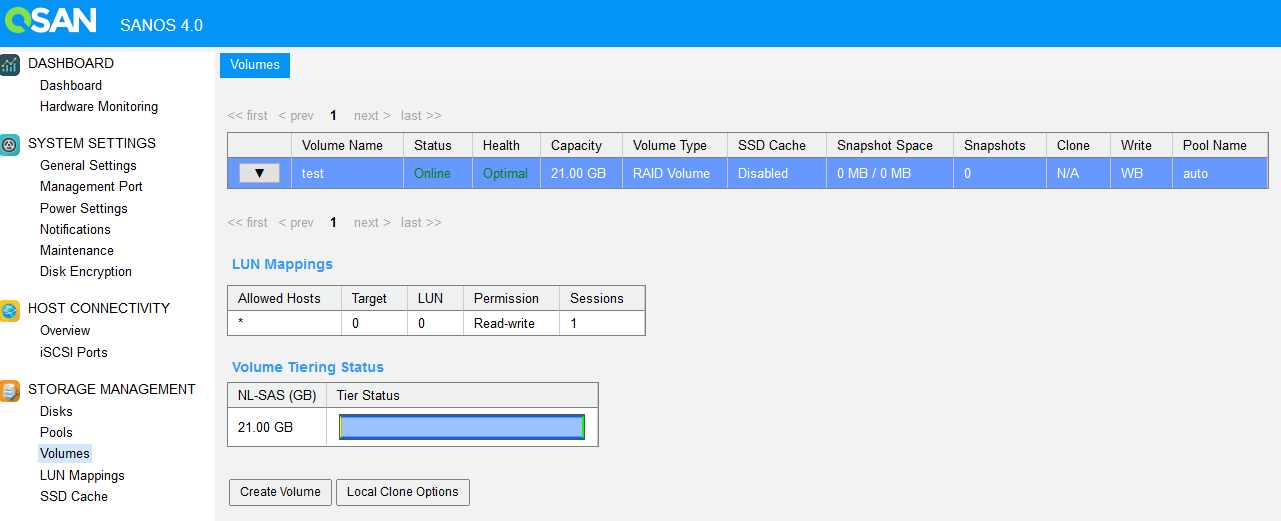

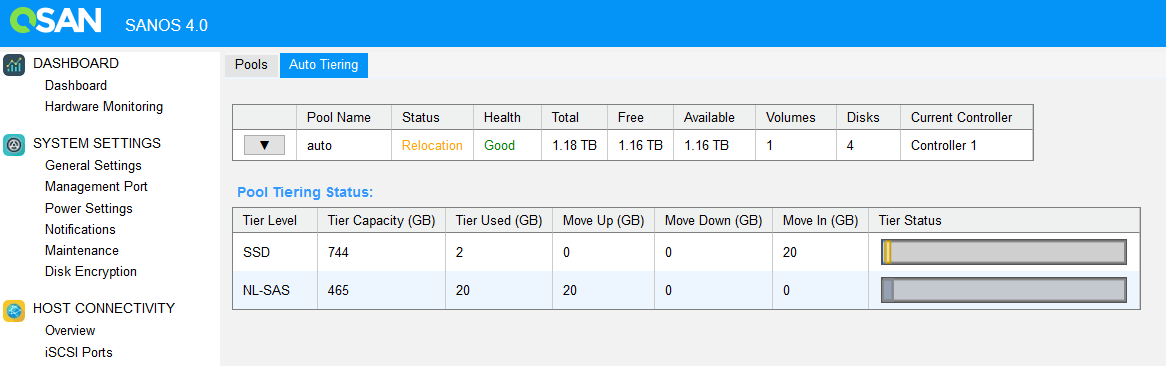

To test the tiering algorithms, we ran a simple experiment. We created a two-tier pool of SSD (RAID1) + HDD 7.2K (RAID1) and placed a volume on it with the "Minimum level" policy. In other words, the data should always reside on the slow disks.

The management interface clearly shows how data is distributed between tiers.

After filling the volume with data, we changed its placement policy to Auto Tiering and ran an IOmeter test.

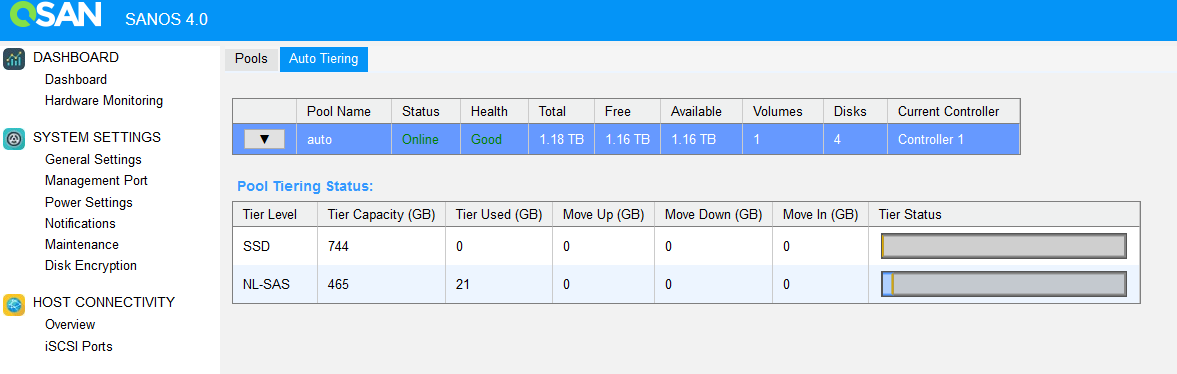

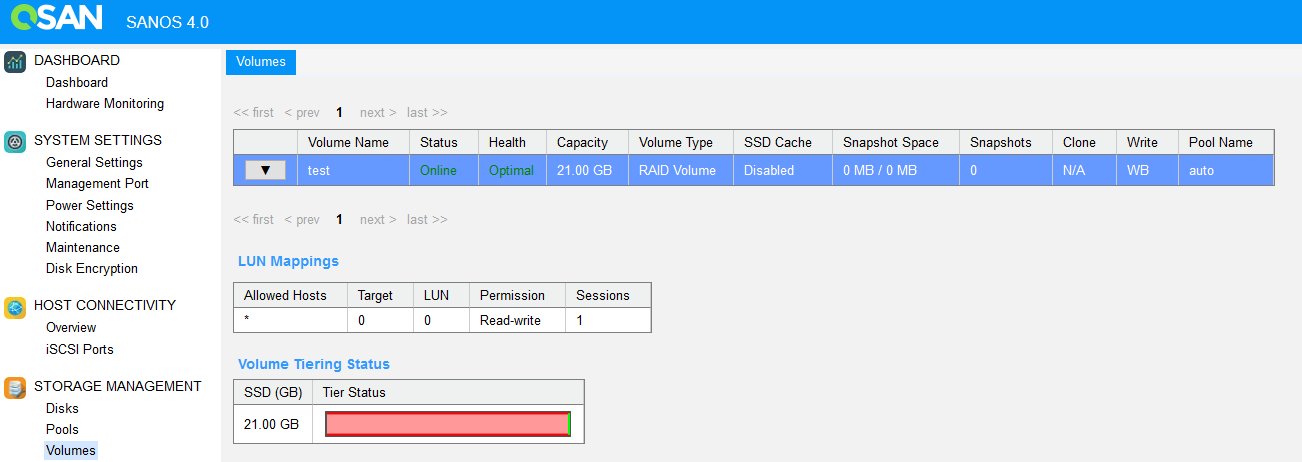

After several hours of testing, once the system had accumulated statistics, the relocation process began.

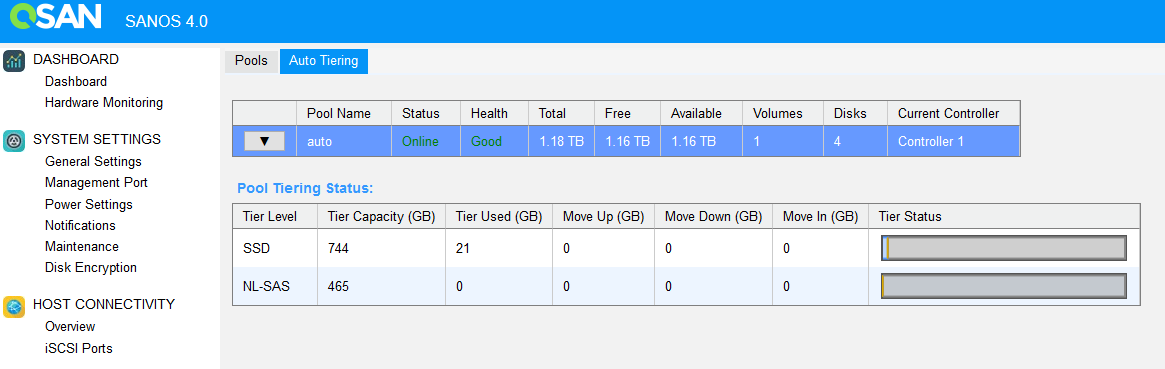

When the relocation finished, our test volume had moved entirely to the upper tier (SSD).

Verdict

Auto Tiering is an excellent technology that increases storage performance at minimal cost in money and time by making more intensive use of high-speed drives. With Qsan, the only investment is a license. It is purchased once and for all, with no limits on capacity, number of disks, shelves, etc. The feature offers settings rich enough to satisfy almost any business need, and visualization of the processes in the interface lets you manage the device effectively.