Word2vec in pictures

- Translation

“Everything conceals a pattern that is part of our universe. It has symmetry, elegance and beauty, qualities that every true artist who captures the world grasps first of all. This pattern can be found in the turning of the seasons, in the way sand flows along a slope, in the tangled branches of the creosote bush, in the pattern of its leaves.

We try to copy this pattern in our lives and our society, and so we love rhythm, song, dance, the various forms that make us happy and comfort us. Yet one can also discern the danger lurking in the search for absolute perfection, for it is clear that the perfect pattern is fixed and unchanging. And, in approaching perfection, all things move toward death.” - Dune (1965)

I believe the concept of embeddings is one of the most remarkable ideas in machine learning. If you have ever used Siri, Google Assistant, Alexa, Google Translate, or even a smartphone keyboard with next-word prediction, then you have already benefited from embedding-based natural language processing models. Over the past decades, the use of embeddings in neural models has developed considerably (recent developments include contextualized word embeddings in cutting-edge models such as BERT and GPT2).

Word2vec is an efficient method for creating word embeddings, developed in 2013. Beyond working with words, some of its concepts have proven effective for building recommendation engines and for making sense of data even in commercial, non-language tasks. Companies such as Airbnb, Alibaba, Spotify, and Anghami have used this technology in their recommendation engines.

In this article, we will look at the concept of embeddings and the mechanics of generating them with word2vec. Let's start with an example to get familiar with representing things as vectors. Did you know that a list of five numbers (a vector) can say a lot about your personality?

Personality embeddings: what are you like?

“I give you the desert chameleon, whose ability to blend into the sand tells you everything you need to know about the roots of ecology and the foundations of personal identity.” - Children of Dune

On a scale of 0 to 100, how introverted or extraverted are you (where 0 is the most introverted and 100 the most extraverted)? Have you ever taken a personality test such as MBTI or, better yet, the Big Five? You are given a list of questions and then scored on several axes, introversion/extraversion being one of them.

An example of Big Five test results. The test really does say a lot about a person and has been shown to predict academic, personal and professional success. You can take it here, for example.

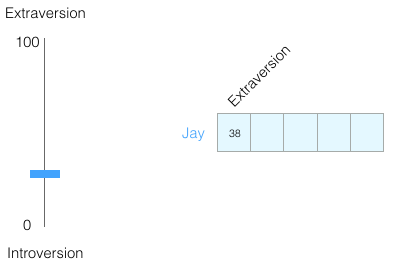

Suppose I scored 38 out of 100 on introversion/extraversion. This can be represented as follows:

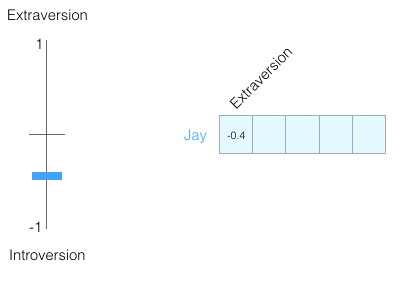

Or on a scale of −1 to +1:

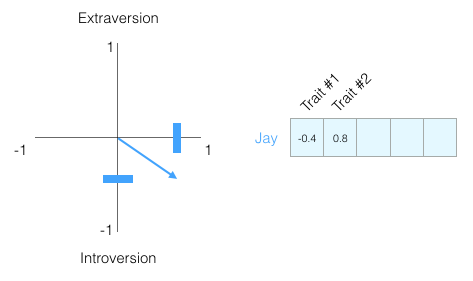

How well do we know a person from this single number? Not very well. Humans are complex creatures. So let's add one more dimension: another trait score from the test.

You can think of these two dimensions as a point on a graph or, better yet, as a vector from the origin to that point. We have great tools for working with vectors that will come in handy very soon.

I am not showing which personality traits are on the chart so that you don't get attached to specific traits and instead get used to thinking of the vector as a representation of a person's personality as a whole.

Now we can say that this vector partially represents my personality. This kind of representation is useful when comparing different people. Suppose I get hit by a red bus and need to be replaced by someone with a similar personality. Which of the two people in the following chart is more like me?

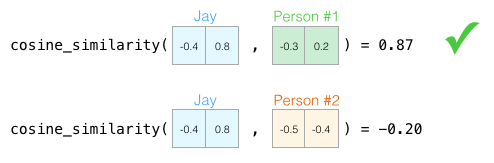

When working with vectors, a common way to calculate a similarity score is cosine similarity:

Person No. 1 is more like me in personality. Vectors pointing in the same direction have a higher cosine similarity; the score depends on the angle between the vectors, not on their lengths.

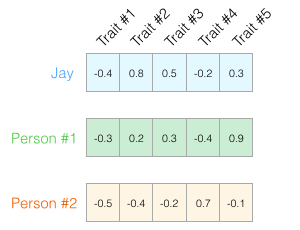
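To make the comparison concrete, here is a minimal sketch of cosine similarity in plain Python: the dot product of two vectors divided by the product of their lengths. The coordinates are hypothetical values chosen for illustration, not the exact numbers behind the chart above.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 2D personality vectors on a -1..+1 scale.
me = [-0.4, 0.8]
person_1 = [-0.3, 0.2]
person_2 = [-0.5, -0.4]

print(round(cosine_similarity(me, person_1), 2))  # 0.87
print(round(cosine_similarity(me, person_2), 2))  # -0.21

# Doubling a vector's length leaves the score unchanged: only direction matters.
print(round(cosine_similarity(me, [2 * x for x in person_1]), 2))  # 0.87
```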

Then again, two dimensions aren't enough to capture how different people are. Decades of psychology research have led to five major traits (and plenty of sub-traits). So let's use all five dimensions:

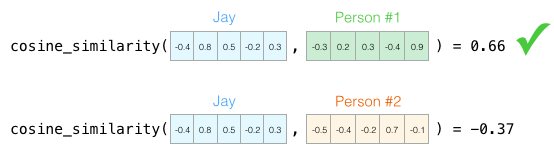

The problem with five dimensions is that we can no longer draw neat little arrows in 2D. This is a common challenge in machine learning, where we often have to think in higher-dimensional space. The good news is that cosine similarity works with any number of dimensions:

Cosine similarity works for any number of dimensions. In five dimensions, the scores are more meaningful because they are calculated from a higher-resolution representation of the people being compared.

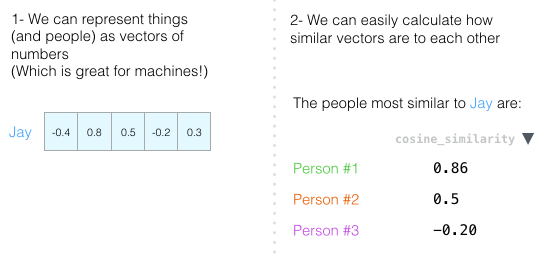
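The same formula extends to five (or five hundred) dimensions. The sketch below, assuming NumPy is available, normalizes every vector to unit length so that a single matrix product scores one person against many candidates at once. The function name and all trait values are made up for illustration.

```python
import numpy as np

def most_similar(query, candidates):
    """Return (index, scores) of the candidate row with the highest cosine similarity."""
    query = np.asarray(query, dtype=float)
    candidates = np.asarray(candidates, dtype=float)
    # Normalize every vector to unit length; a dot product is then the cosine.
    q = query / np.linalg.norm(query)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    scores = c @ q
    return int(np.argmax(scores)), scores

# Hypothetical five-trait profiles on a -1..+1 scale.
me = [-0.4, 0.8, 0.5, -0.2, 0.3]
people = [
    [-0.3, 0.2, 0.3, -0.4, 0.9],
    [-0.5, -0.4, -0.2, 0.7, -0.1],
    [-0.4, 0.7, 0.4, -0.1, 0.2],
]

best, scores = most_similar(me, people)
print(best)  # 2
print(scores.round(2))
```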

At the end of this section, I want to restate two central ideas:

- People (and other objects) can be represented as vectors of numbers (which is great for machines!).

- We can easily calculate how similar vectors are to each other.

Word embeddings

“The gift of words is the gift of deception and illusion.” - Children of Dune

With this understanding, we can move on to trained word vectors (also called word embeddings) and look at some of their interesting properties.

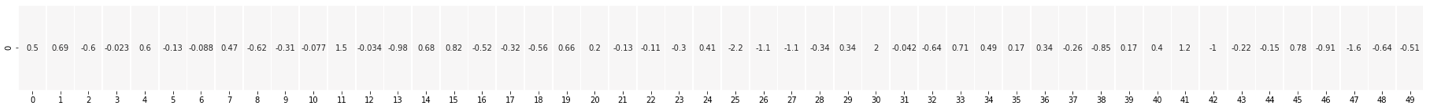

Here is the word embedding for “king” (a GloVe vector trained on Wikipedia):

[ 0.50451 , 0.68607 , -0.59517 , -0.022801, 0.60046 , -0.13498 , -0.08813 , 0.47377 , -0.61798 , -0.31012 , -0.076666, 1.493 , -0.034189, -0.98173 , 0.68229 , 0.81722 , -0.51874 , -0.31503 , -0.55809 , 0.66421 , 0.1961 , -0.13495 , -0.11476 , -0.30344 , 0.41177 , -2.223 , -1.0756 , -1.0783 , -0.34354 , 0.33505 , 1.9927 , -0.04234 , -0.64319 , 0.71125 , 0.49159 , 0.16754 , 0.34344 , -0.25663 , -0.8523 , 0.1661 , 0.40102 , 1.1685 , -1.0137 , -0.21585 , -0.15155 , 0.78321 , -0.91241 , -1.6106 , -0.64426 , -0.51042 ]

It's a list of 50 numbers, and we can't tell much just by looking at them. Let's visualize them so we can compare this vector with others. First, put all the numbers in one row:

Colorize the cells by their values (red for close to 2, white for close to 0, blue for close to −2):

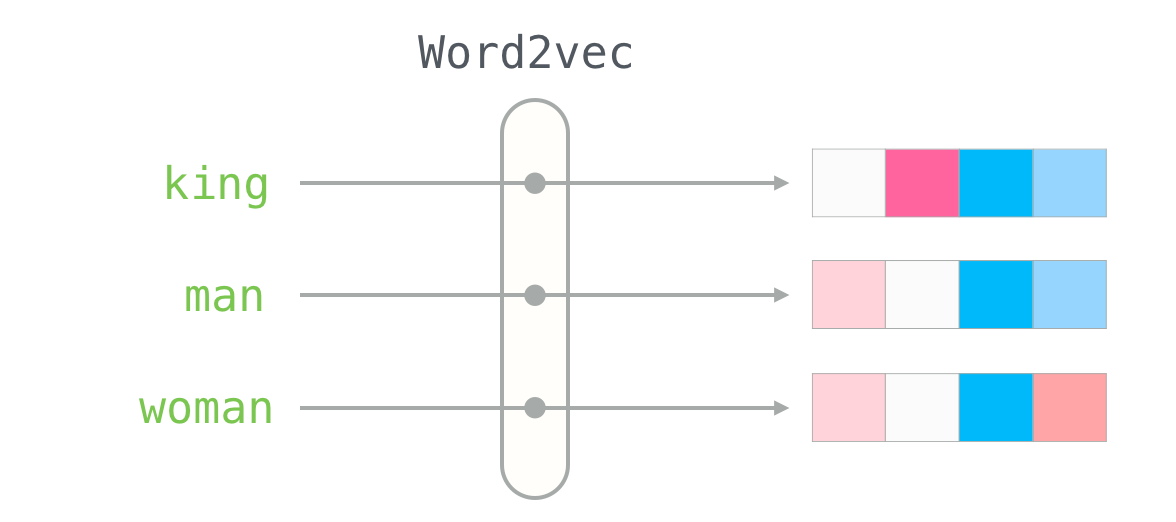

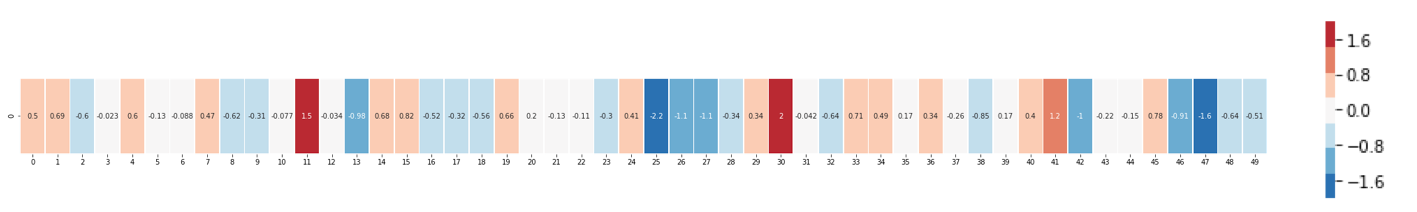
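The color coding is just a mapping from each number to a color. The exact colormap behind the figures isn't stated, so this sketch assumes a simple linear blend: white at 0, fading to pure red at +2 and pure blue at −2, with values beyond ±2 clipped. The function name value_to_rgb is hypothetical.

```python
def value_to_rgb(value, limit=2.0):
    """Map a number to an (r, g, b) tuple: blue at -limit, white at 0, red at +limit."""
    # Clip to [-limit, limit] and scale to [-1, 1].
    t = max(-1.0, min(1.0, value / limit))
    # The further from 0, the less of the other two channels remains.
    fade = int(round(255 * (1 - abs(t))))
    if t >= 0:
        return (255, fade, fade)  # white -> red
    return (fade, fade, 255)      # white -> blue

# First five values of the "king" vector above.
king_first_five = [0.50451, 0.68607, -0.59517, -0.022801, 0.60046]
print([value_to_rgb(v) for v in king_first_five])
```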

Now let's ignore the numbers and look only at the colors to compare “king” with other words:

See how “man” and “woman” are much more similar to each other than either of them is to “king”? That tells us something. These vector representations capture quite a bit of the information, meaning and associations of these words.

Here is another list of examples (compare columns with similar colors):

There are several things to notice:

- A single red column runs through all of these words. That is, the words are similar along this particular dimension (and we don't know what it encodes).

- You can see that “woman” and “girl” are very similar. The same goes for “man” and “boy”.

- “Boy” and “girl” are also similar to each other in some dimensions in which they differ from “woman” and “man”. Could these be encoding a vague notion of youth? Possibly.

- All but the last word represent people. I added an object (water) to show the differences between categories. For example, you can see a blue column that runs all the way down and stops before the embedding for “water”.

- There are clear dimensions where “king” and “queen” are similar to each other and different from all the others. Could these be encoding a vague concept of royalty?

Analogies

“Words can carry any load we wish. All that is required is agreement on a tradition upon which we build concepts.” - God Emperor of Dune

The famous examples that show an incredible property of embeddings are analogies. We can add and subtract word vectors and get interesting results. The most famous example is the formula “king - man + woman”:

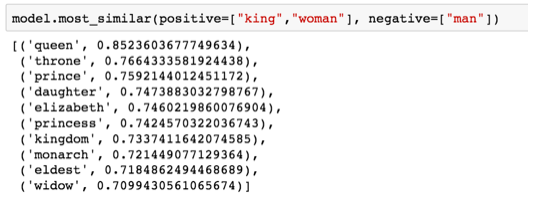

Using the Gensim library in Python, we can add and subtract word vectors, and the library finds the words closest to the resulting vector. The image shows a list of the most similar words, each with its cosine similarity. We can visualize this analogy as we did before:

The vector resulting from “king - man + woman” is not exactly equal to “queen”, but “queen” is the closest word to it among the 400,000 word embeddings in this collection.
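The arithmetic behind the analogy can be sketched with NumPy: add and subtract the vectors, then return the remaining word whose vector has the highest cosine similarity with the result, skipping the words used in the query (Gensim's KeyedVectors.most_similar(positive=..., negative=...) also leaves them out). The three-dimensional vectors here are invented toy values, not GloVe embeddings. Gensim's own implementation may differ in details, for instance in working with length-normalized vectors.

```python
import numpy as np

# Hypothetical 3-dimensional "embeddings" invented for illustration only;
# real GloVe vectors have 50+ dimensions and no labeled axes.
vectors = {
    "king":  np.array([0.9, 0.8, 0.5]),
    "queen": np.array([0.9, -0.7, 0.5]),
    "man":   np.array([0.1, 0.8, 0.6]),
    "woman": np.array([0.1, -0.8, 0.6]),
    "water": np.array([-0.5, 0.0, -0.9]),
}

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(positive, negative, vectors):
    """Return the word closest to sum(positive) - sum(negative), excluding the query words."""
    target = sum(vectors[w] for w in positive) - sum(vectors[w] for w in negative)
    candidates = [w for w in vectors if w not in positive and w not in negative]
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(analogy(["king", "woman"], ["man"], vectors))  # queen
```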

Having examined trained word embeddings, let's study how training works. But before moving on to word2vec, we need to look at the conceptual ancestor of word embeddings: the neural language model.

Language model

“The prophet is not subject to the illusions of past, present and future. The fixity of language determines such linear distinctions. Prophets hold the key to the lock in a language. For them, the mechanical image remains only an image and nothing more.

Their universe does not have the properties of a mechanical universe. A linear sequence of events is imposed by the observer. Cause and effect? That is a different matter entirely. The prophet utters fateful words. You glimpse an event that should happen ‘according to the logic of things.’ But the prophetic instant releases something of infinite portent and power. The universe undergoes a spiritual shift.” - God Emperor of Dune

One example of NLP (Natural Language Processing) in action is next-word prediction on a smartphone keyboard. Billions of people use it hundreds of times a day.

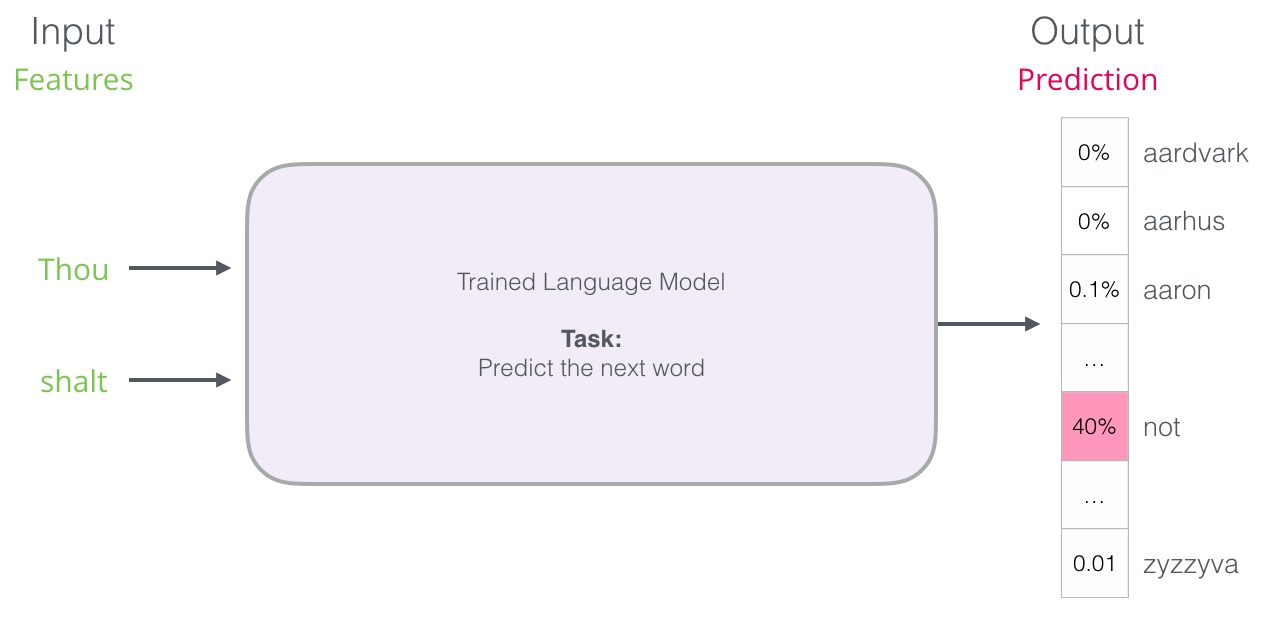

Predicting the next word is a task a language model can handle. It can take a list of words (say, two words) and try to predict the word that follows them.

In the screenshot above, the model took these two green words (thou shalt) and returned a list of suggestions (“not” being the most likely):

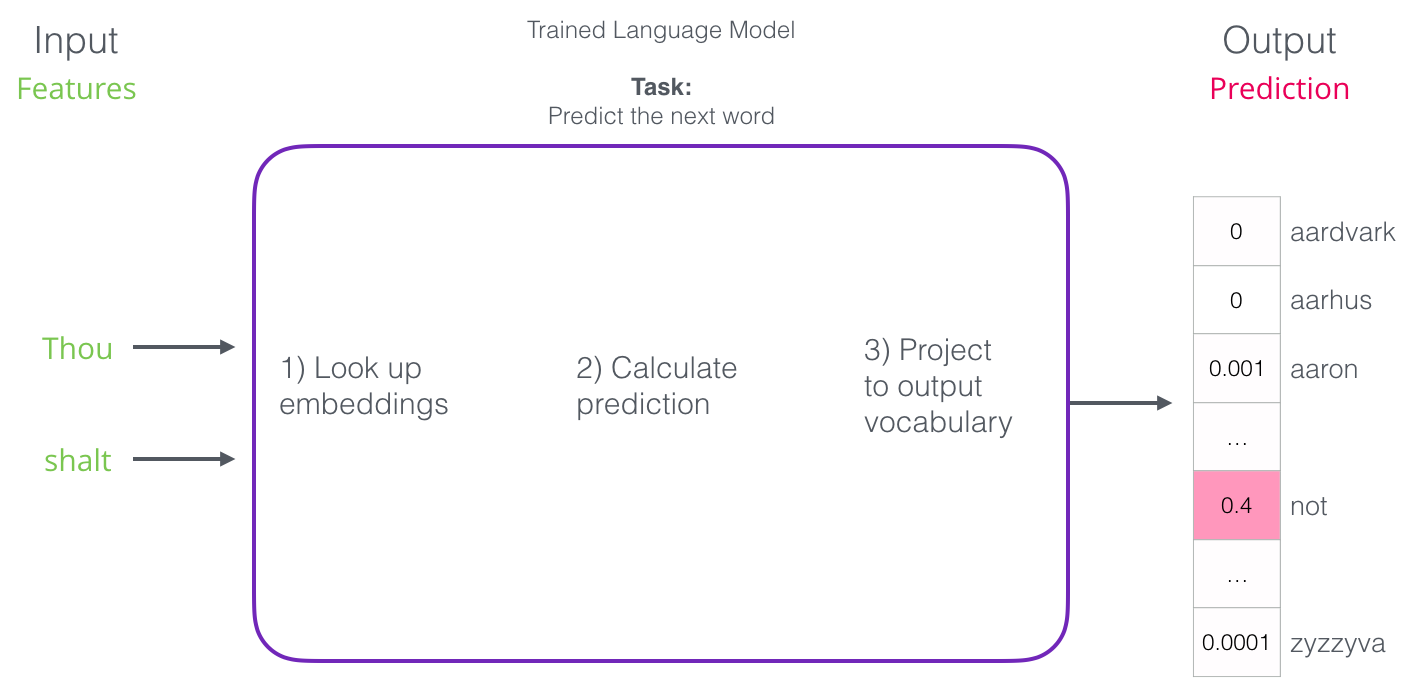

We can imagine the model as a black box:

In practice, though, the model doesn't output just one word. It outputs a probability score for virtually every word it knows (the model's “vocabulary” ranges from a few thousand to over a million words). The keyboard application then finds the words with the highest scores and shows them to the user.

A neural language model outputs a probability for every word it knows. We show the probabilities as percentages here, but in the actual output vector 40% would be represented as 0.4.

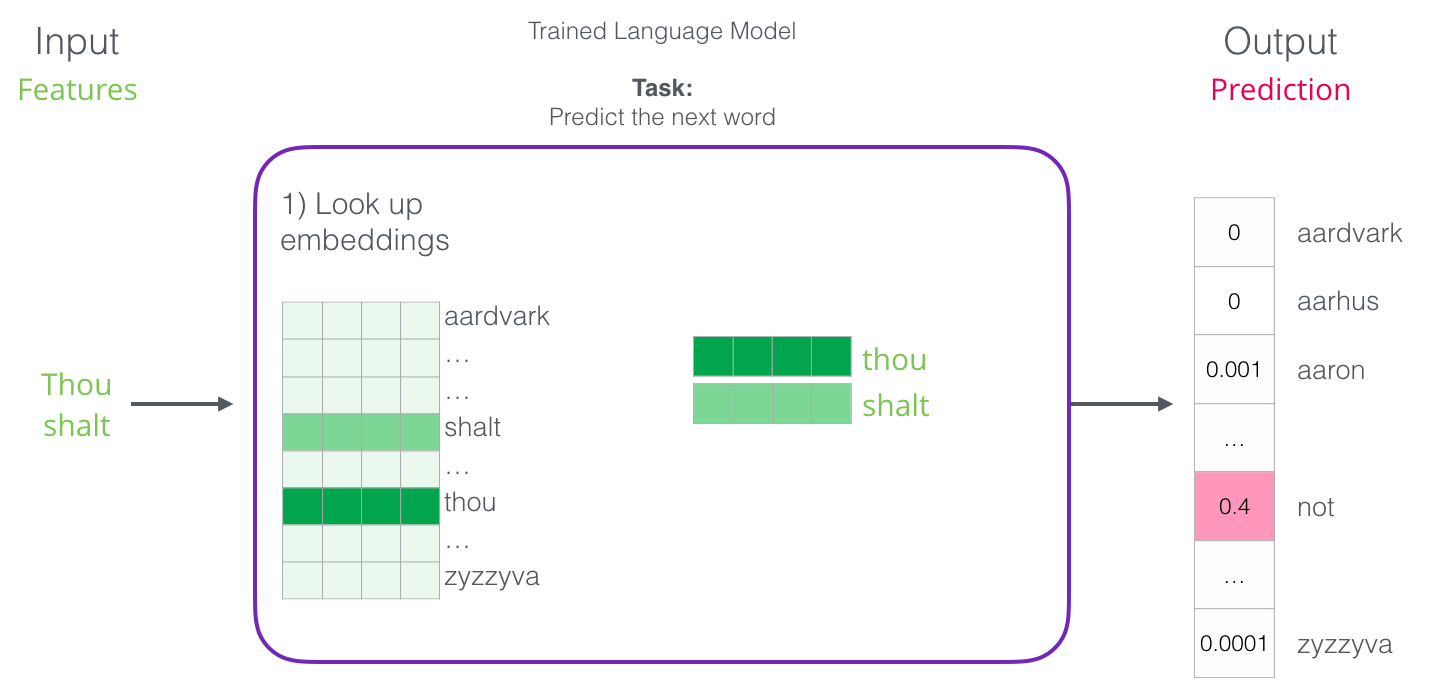
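Turning a model's raw scores into probabilities like these is usually done with a softmax. Here is a minimal sketch, assuming NumPy. The five-word vocabulary and the scores are hypothetical, whereas a real keyboard model scores thousands of words or more.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

# Hypothetical tiny vocabulary and raw scores a model might produce after "thou shalt".
vocab = ["not", "be", "have", "the", "taco"]
logits = np.array([3.0, 1.5, 1.0, 0.2, -2.0])

probs = softmax(logits)
top = np.argsort(probs)[::-1][:3]  # indices of the three most probable words
print([(vocab[i], round(float(probs[i]), 2)) for i in top])
```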

After training, early neural language models (Bengio 2003) calculated a prediction in three steps:

The first step is the most relevant for us, since we are discussing embeddings. One result of training is a matrix containing an embedding for every word in our vocabulary. To make a prediction, we simply look up the embeddings of the input words and run the prediction:
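The three steps can be sketched as a tiny forward pass: look up and concatenate the input embeddings, compute a hidden layer, then project onto the whole vocabulary and apply a softmax. This is a simplified sketch in the spirit of Bengio et al. (2003). It assumes NumPy, a tanh hidden layer, no bias terms and random (untrained) weights; the sizes and names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes; a real model would be far larger.
vocab = ["thou", "shalt", "not", "make", "a", "machine"]
word_to_id = {w: i for i, w in enumerate(vocab)}
vocab_size, embedding_size, context_len, hidden_size = len(vocab), 8, 2, 16

# Parameters: random here, learned during training in a real model.
embeddings = rng.normal(scale=0.1, size=(vocab_size, embedding_size))
W_hidden = rng.normal(scale=0.1, size=(context_len * embedding_size, hidden_size))
W_out = rng.normal(scale=0.1, size=(hidden_size, vocab_size))

def predict_next(words):
    # Step 1: look up the embedding of each input word and concatenate them.
    x = np.concatenate([embeddings[word_to_id[w]] for w in words])
    # Step 2: compute a hidden representation.
    h = np.tanh(x @ W_hidden)
    # Step 3: score every word in the vocabulary and normalize with softmax.
    logits = h @ W_out
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()

probs = predict_next(["thou", "shalt"])
print(vocab[int(np.argmax(probs))])  # untrained, so this guess is arbitrary
```

Note that step 3 touches every word in the vocabulary, which becomes important later when we look at training cost.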

Now let's look at the training process and find out how this embedding matrix is created.

Language model training

“A process cannot be understood by stopping it. Understanding must move with the process, merge with its flow and flow with it.” - Dune

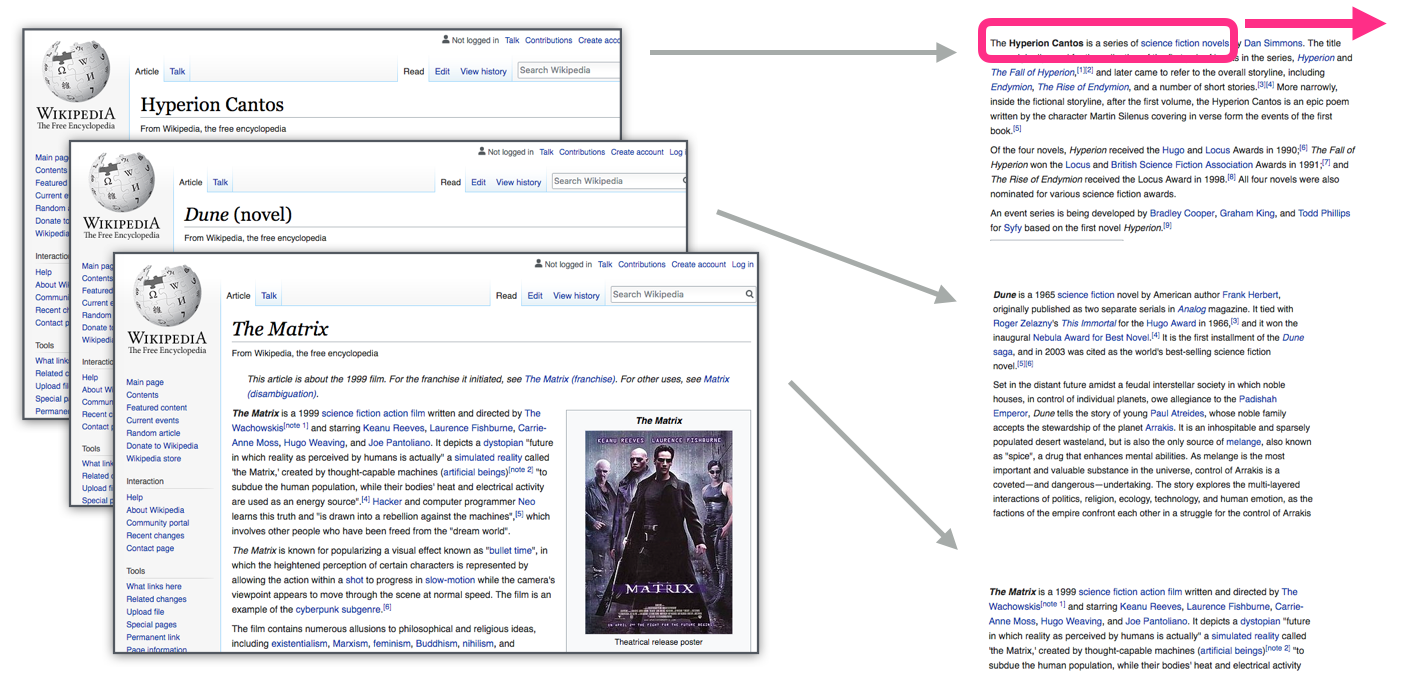

Language models have a huge advantage over most other machine learning models: they can be trained on running text, which we have in abundance. Think of all the books, articles, Wikipedia content, and other forms of text data we have. Contrast this with other machine learning models that need hand-crafted features and specially collected data.

“You shall know a word by the company it keeps” - J.R. Firth

Words get their embeddings from the words that tend to appear near them. The mechanics are as follows:

- We get a lot of text data (say, all Wikipedia articles)

- We have a window (for example, of three words) that we slide across all of that text.

- The sliding window generates training samples for our model.

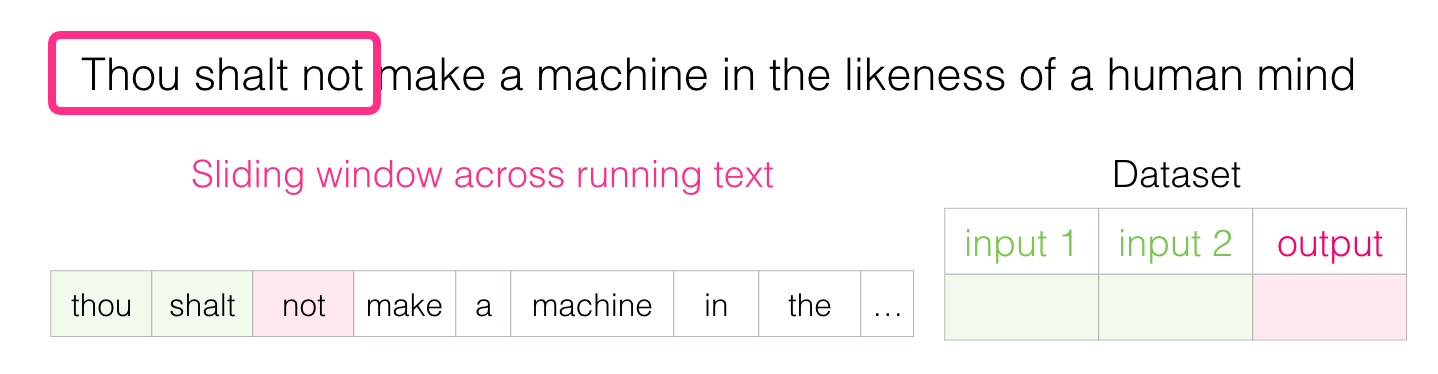

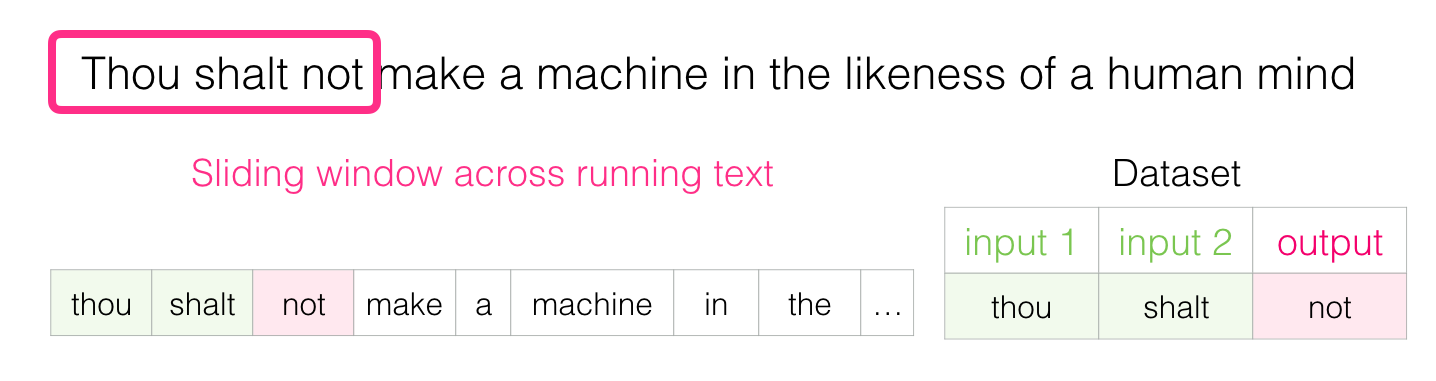

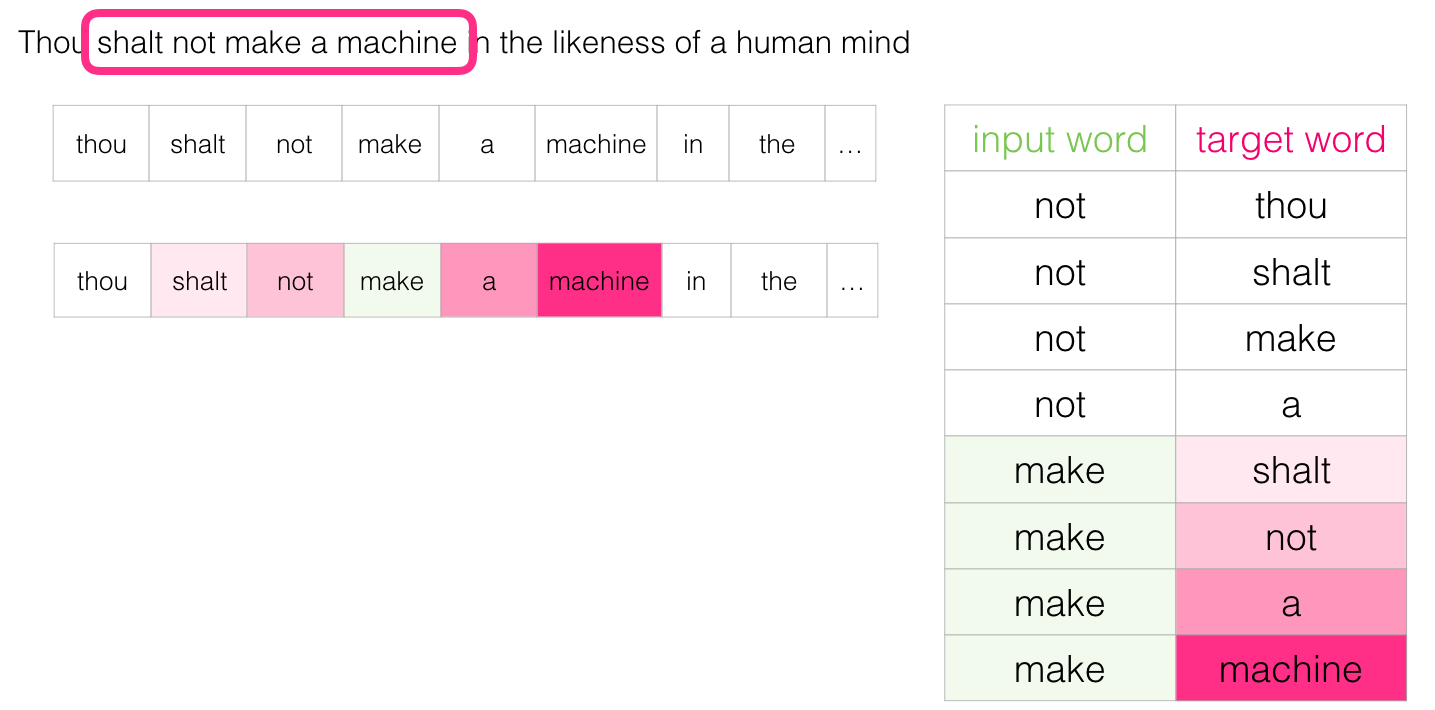

As this window slides across the text, we (virtually) generate a dataset that we then use to train the model. To see how that works, let's look at how the sliding window processes this phrase:

“Thou shalt not make a machine in the likeness of a human mind” - Dune

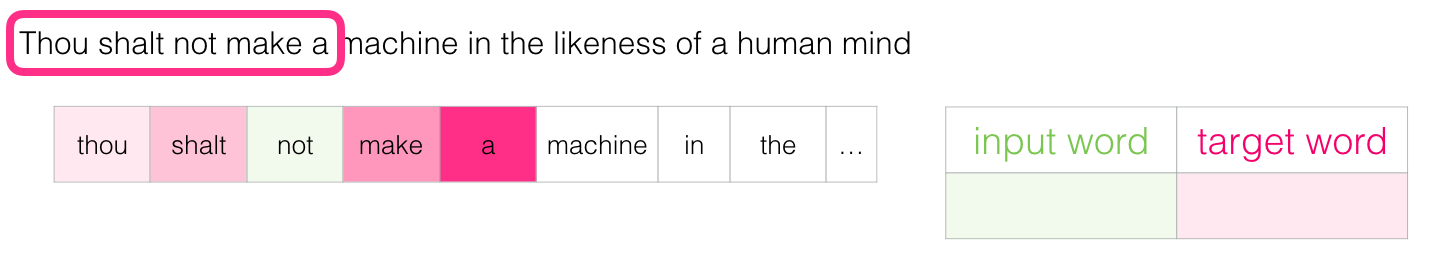

When we start, the window is located on the first three words of the sentence:

We take the first two words as features and the third word as the label:

We have now generated the first sample in the dataset, which we can later use to train a language model.

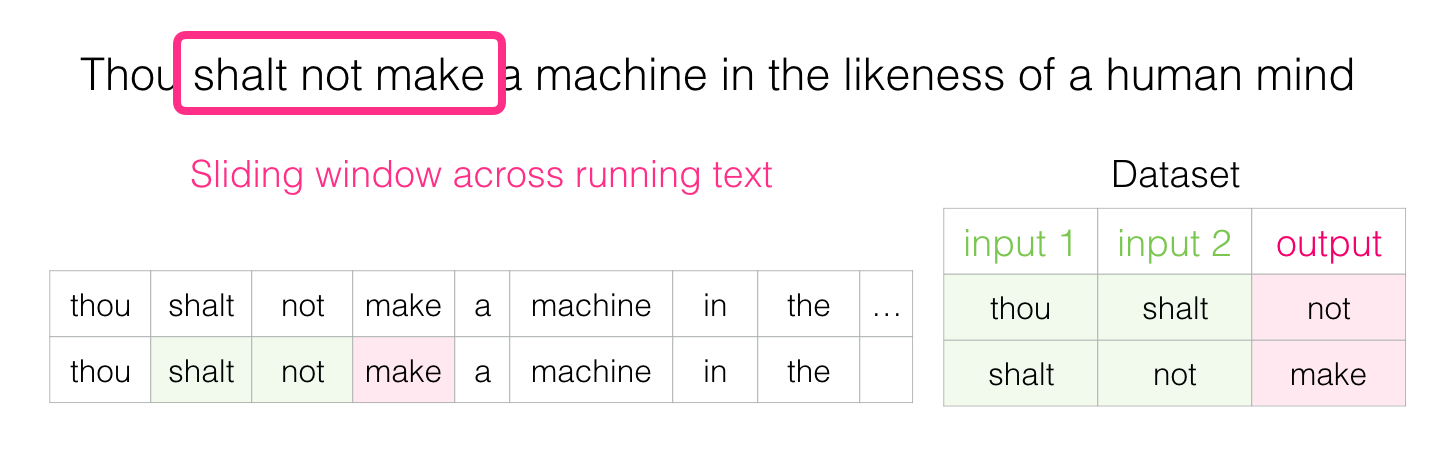

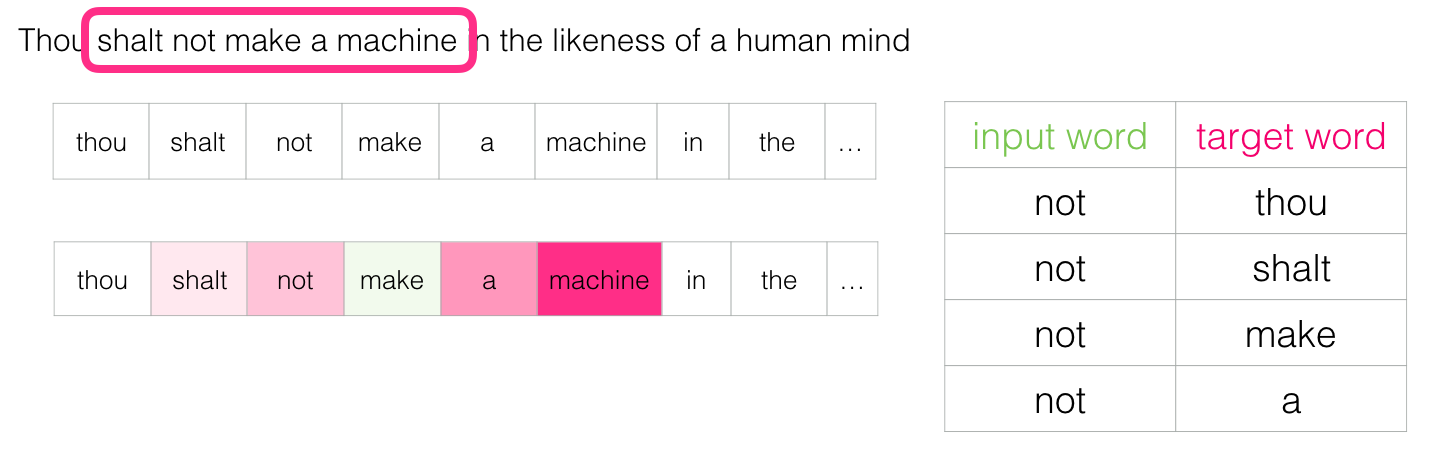

Then we slide the window to the next position and create a second sample:

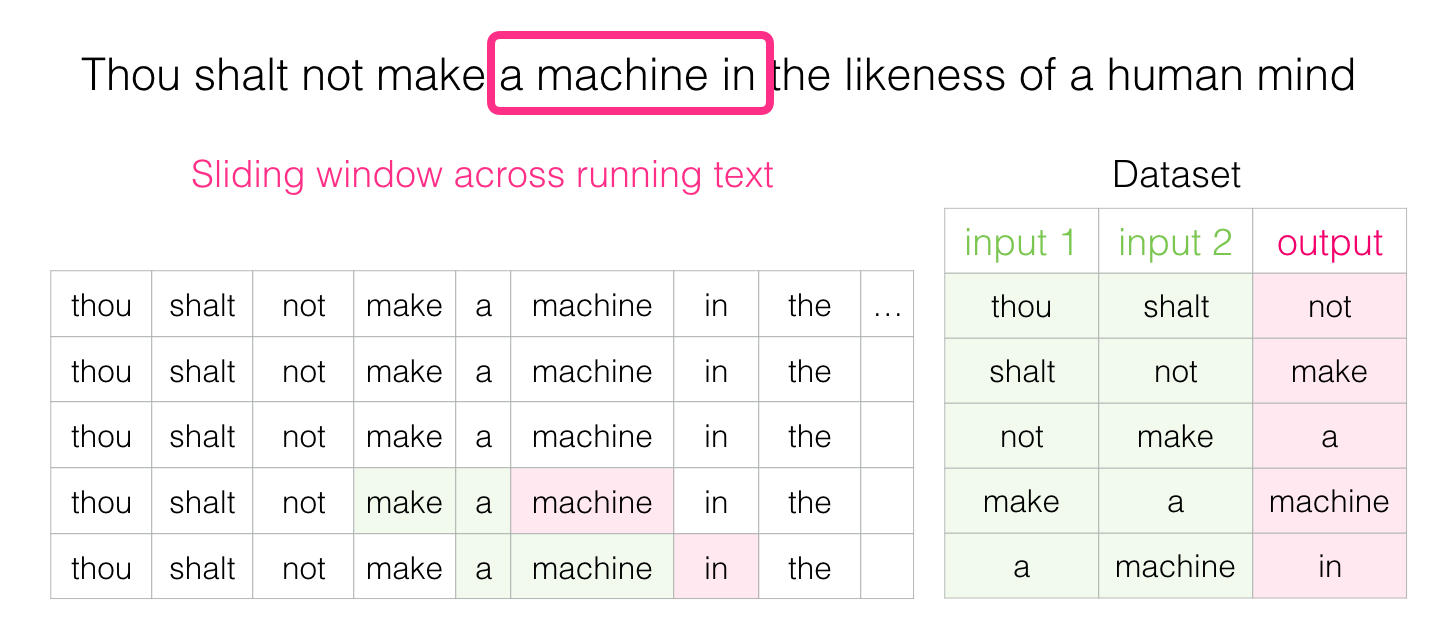

And pretty soon we have a larger dataset:
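The windowing described above can be sketched in a few lines of Python. The example uses the Dune sentence in its common English wording, “Thou shalt not make a machine in the likeness of a human mind”, and a window of three words: two features and one label. The function name is hypothetical.

```python
def next_word_samples(tokens, window=3):
    """Slide a window of `window` words; the first window-1 words are features, the last is the label."""
    samples = []
    for start in range(len(tokens) - window + 1):
        chunk = tokens[start:start + window]
        samples.append((tuple(chunk[:-1]), chunk[-1]))
    return samples

sentence = "thou shalt not make a machine in the likeness of a human mind"
samples = next_word_samples(sentence.split())
for features, label in samples[:3]:
    print(features, "->", label)
# ('thou', 'shalt') -> not
# ('shalt', 'not') -> make
# ('not', 'make') -> a
```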

In practice, models are usually trained while the window is sliding. Logically, though, the “dataset generation” phase is separate from the training phase. Before neural network approaches, a technique called N-grams was commonly used to train language models (see Chapter 3 of the book “Speech and Language Processing”). To see how the switch from N-grams to neural models plays out in real products, here's a 2015 post on the blog of SwiftKey, the developer of my favorite Android keyboard, which introduces its neural language model and compares it with the previous N-gram model. I like this example because it shows how the algorithmic properties of embeddings can be described in marketing language.

Look both ways

“A paradox is a sign that we should try to consider what lies behind it. If a paradox troubles you, it means you are striving for the absolute. Relativists see a paradox simply as an interesting, perhaps amusing, sometimes frightening, but very instructive thought.” - God Emperor of Dune

Based on what you know from earlier, fill in the blank:

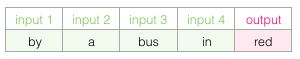

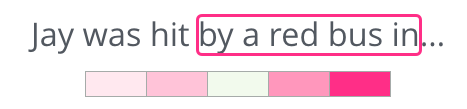

The context here is the five words before the blank (and an earlier mention of “bus”). I'm sure most of you guessed that the missing word is “bus”. But if I give you one more word, the one after the blank, would that change your answer?

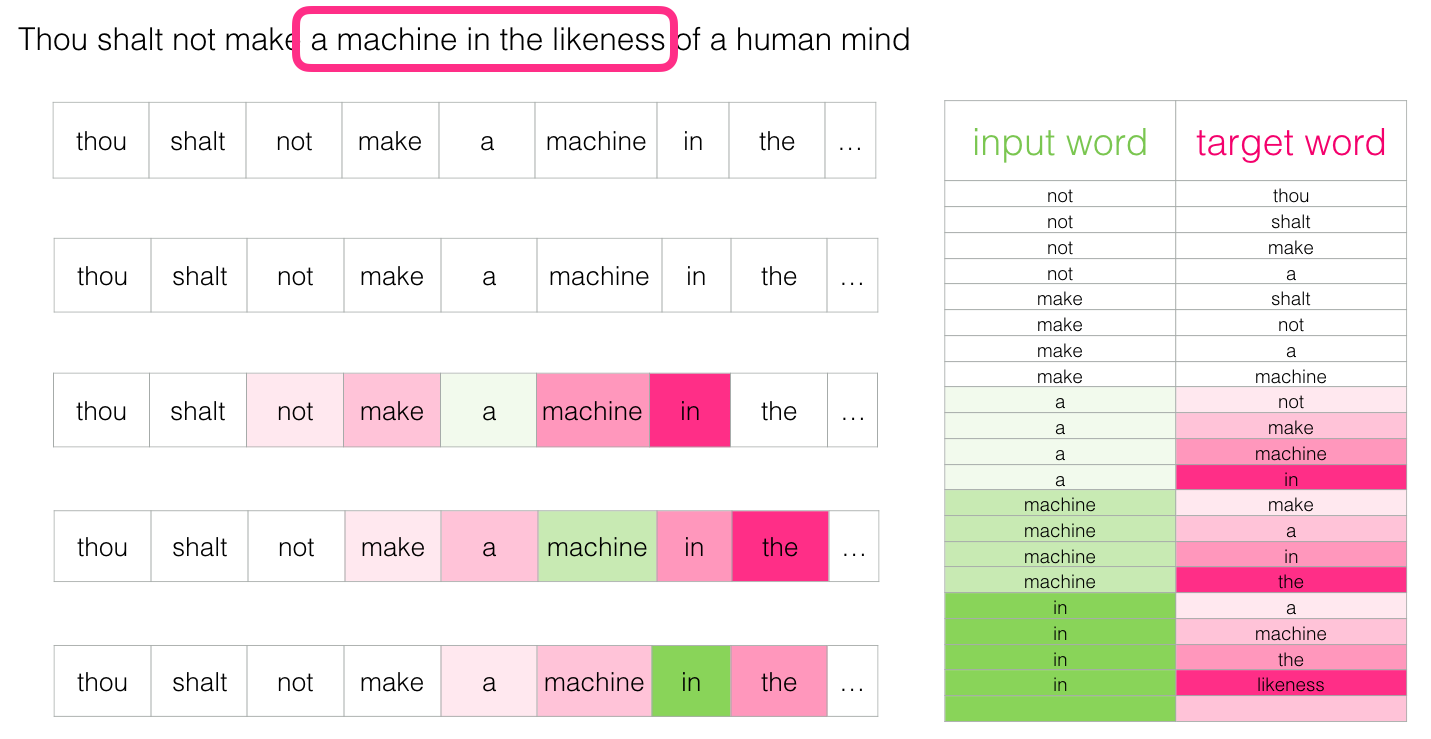

This changes the situation completely: now the missing word is most likely “red”. Clearly, the words both before and after the blank carry information. It turns out that taking both directions into account (words to the left and to the right) leads to better embeddings. Let's see how to set up model training for this.

Skip-gram

“When an absolutely infallible choice is unknown, the intellect gets a chance to work with limited data in an arena where mistakes are not only possible but also necessary.” - Chapterhouse: Dune

Instead of looking only at the two words before the target word, we can also look at the two words after it.

Then the dataset for training the model looks like this:

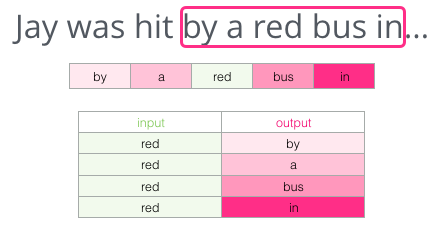

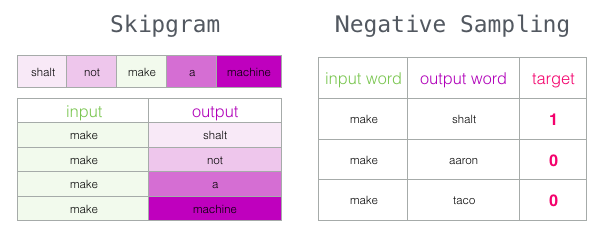

This is called the CBOW (Continuous Bag of Words) architecture and is described in one of the word2vec papers [pdf]. Another architecture, which also shows excellent results, works a little differently: it tries to guess the neighboring words from the current word. Its sliding window looks something like this:

The green slot holds the input word, and each pink box represents a possible output. The pink boxes have different shades because this sliding window actually creates four separate samples in our training dataset:

This method is called the skip-gram architecture. You can visualize the sliding window as follows:

The following four samples are added to the training dataset:

Then we slide the window to the next position:

This generates four more examples:

Soon we have many more samples:
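Both ways of building the dataset can be sketched in plain Python, assuming a window of two words on each side and the same Dune sentence. skipgram_pairs emits one (input, context) pair per neighbor, while cbow_samples groups the neighbors into a single (context, target) sample. Both names are hypothetical, and real implementations add refinements this sketch omits (the original word2vec C code, for example, randomly shrinks the window for each word).

```python
def skipgram_pairs(tokens, window=2):
    """(input, context) pairs: each word predicts up to `window` neighbors on each side."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

def cbow_samples(tokens, window=2):
    """(context words, target) samples: neighbors on both sides predict the middle word."""
    samples = []
    for i, target in enumerate(tokens):
        context = tokens[max(0, i - window):i] + tokens[i + 1:i + window + 1]
        samples.append((context, target))
    return samples

tokens = "thou shalt not make a machine in the likeness of a human mind".split()
pairs = skipgram_pairs(tokens)
print([p for p in pairs if p[0] == "not"])  # four skip-gram samples for "not"
print(cbow_samples(tokens)[2])              # one CBOW sample for "not"
```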

Revisiting the training process

“Muad'Dib learned quickly because he was first taught how to learn. And the very first lesson was the basic belief that he could learn, which is the foundation of everything. It's amazing how many people don't believe they can learn, and how many more believe that learning is very difficult.” - Dune

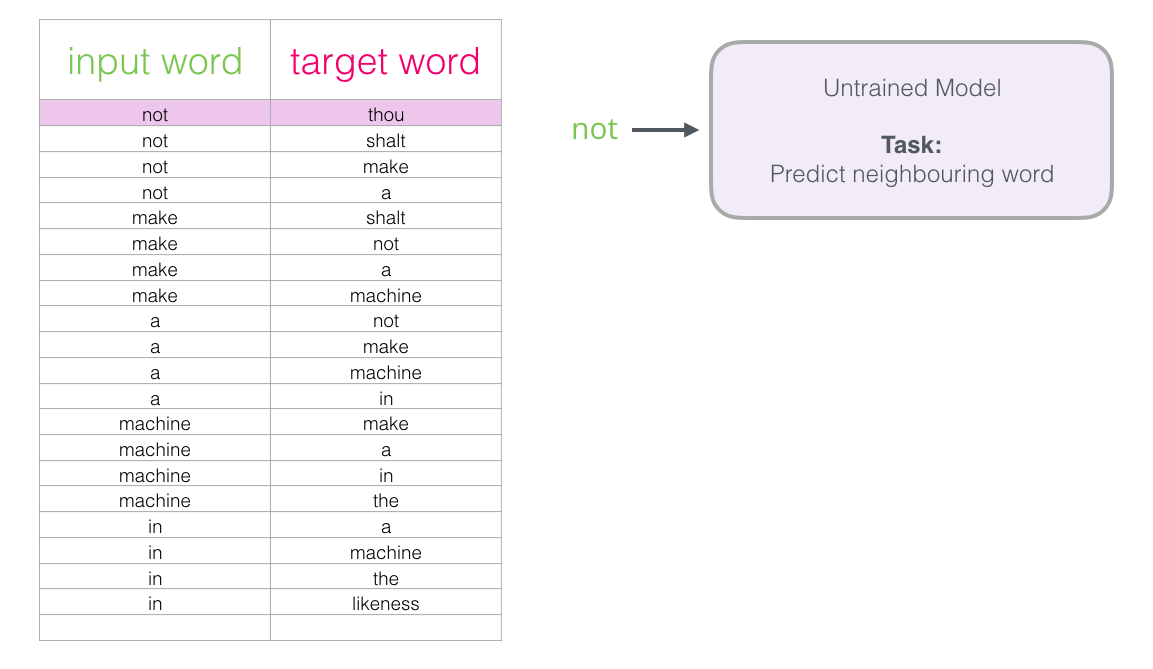

Now that we have the skip-gram dataset, we use it to train a basic neural language model that predicts a neighboring word.

Let's start with the first sample in our dataset. We take the feature and feed it to the untrained model, asking it to predict a neighboring word.

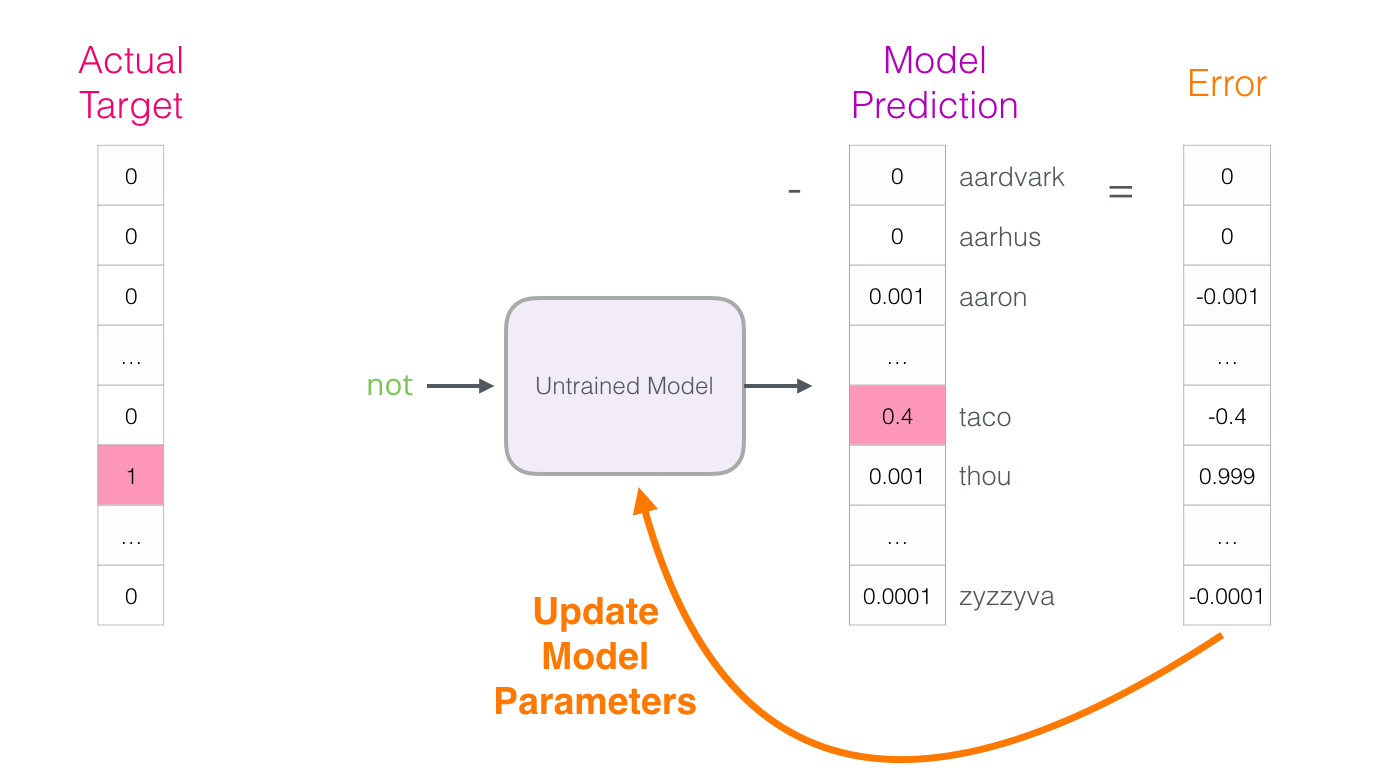

The model runs through its three steps and outputs a prediction vector (with a probability for each word in its vocabulary). Since the model is untrained, its prediction at this stage is probably wrong. That's fine. We know which word it should have predicted: the label cell in the row we're currently using to train the model:

The “target vector” is one in which the target word has a probability of 1 and all other words have a probability of 0.

How far off was the model? We subtract the prediction vector from the target vector to get an error vector:

This error vector can now be used to update the model, so that next time it is more likely to give an accurate result on the same input.
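The target vector and the error vector are simple to compute. A minimal sketch with NumPy, using a hypothetical five-word vocabulary and a made-up prediction from an untrained model:

```python
import numpy as np

vocab = ["aaron", "make", "not", "taco", "thou"]
prediction = np.array([0.10, 0.30, 0.20, 0.25, 0.15])  # hypothetical untrained output

# One-hot target: 1 for the correct neighbor, 0 everywhere else.
target = np.zeros(len(vocab))
target[vocab.index("thou")] = 1.0

error = target - prediction
print(dict(zip(vocab, error.round(2))))
```

For a softmax output trained with cross-entropy loss, this error vector is exactly the negative of the loss gradient with respect to the model's raw scores, which is why it is a natural signal for updating the model.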

This concludes the first training step. We do the same with the next sample in the dataset, then the next, until we have covered all the samples. That concludes the first epoch of training. We repeat this for several epochs and end up with a trained model: we can extract the embedding matrix from it and use it in other applications.

We've learned a lot, but to fully understand how word2vec really learns, we are still missing a couple of key ideas.

Negative sampling

“Trying to understand Muad'Dib without understanding his mortal enemies, the Harkonnens, is like trying to understand Truth without understanding Falsehood. It is an attempt to know the Light without knowing the Darkness. It cannot be.” - Dune

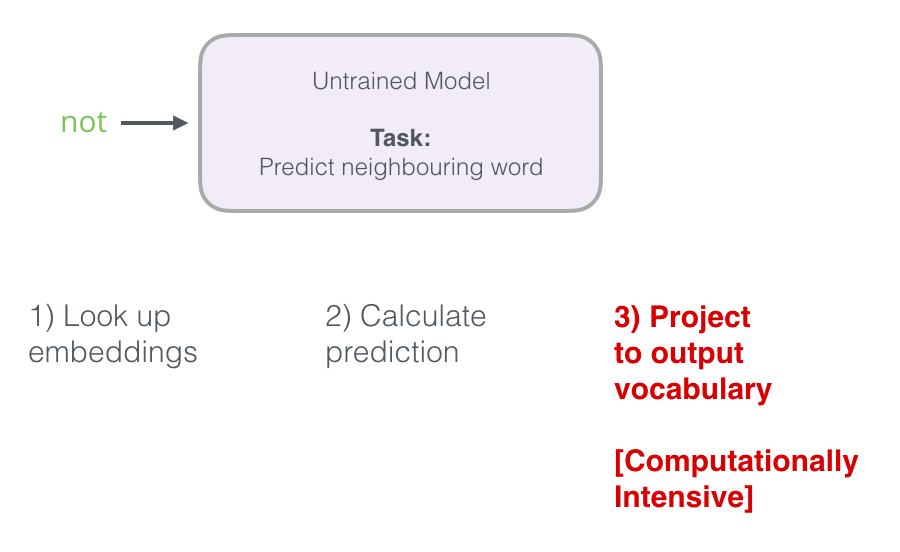

Recall the three steps this neural model uses to calculate a prediction:

The third step is very expensive computationally, especially since we do it for every sample in the dataset (tens of millions of times). We need to do something to improve performance.

One way is to split our goal into two steps:

- Create high-quality word embeddings (without worrying about next-word prediction).

- Use these high-quality embeddings to train a language model (to do next-word prediction).

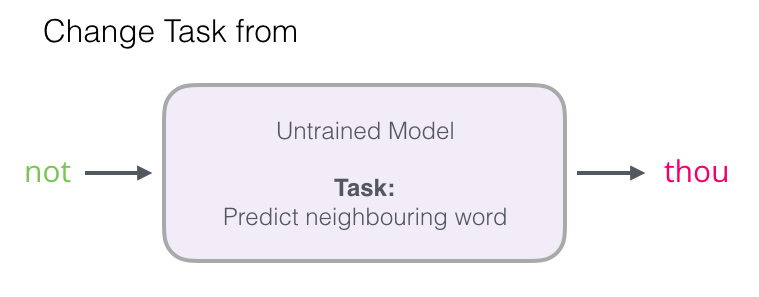

In this article we focus on the first step. To improve performance, we can move away from predicting a neighboring word ...

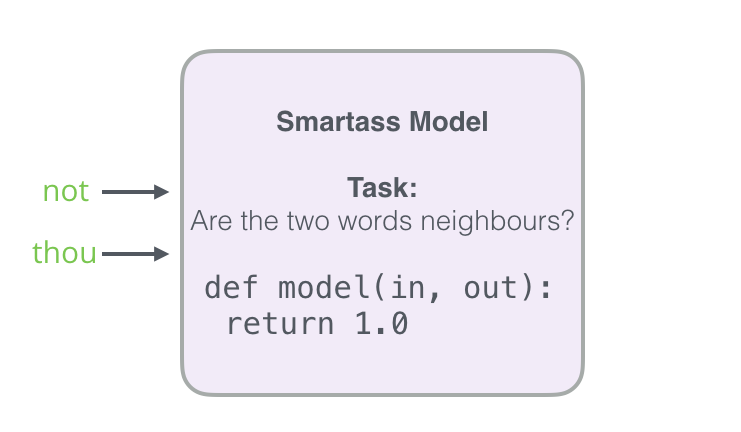

... and switch to a model that takes an input word and an output word and outputs a score indicating how likely they are to be neighbors (from 0 to 1).

This simple switch replaces the neural network with a logistic regression model, so the calculations become much simpler and faster.

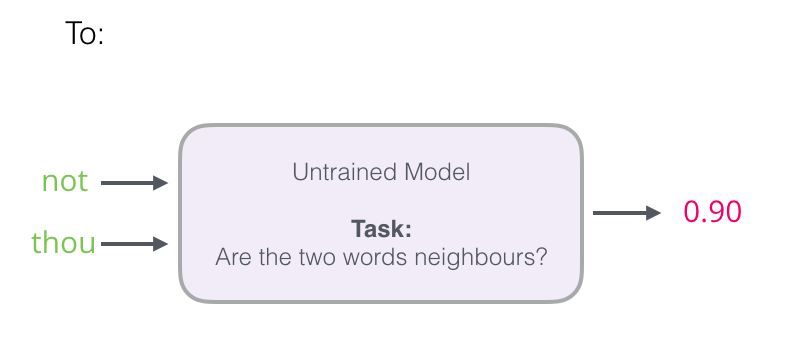

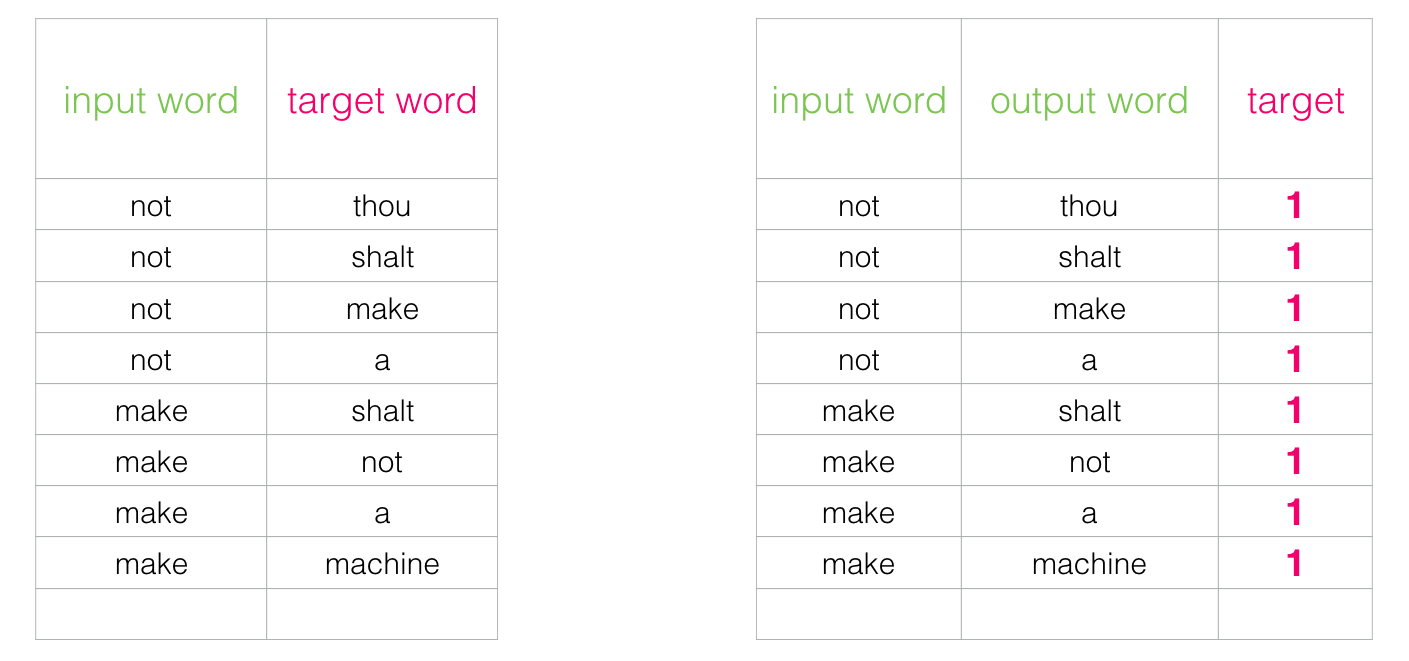

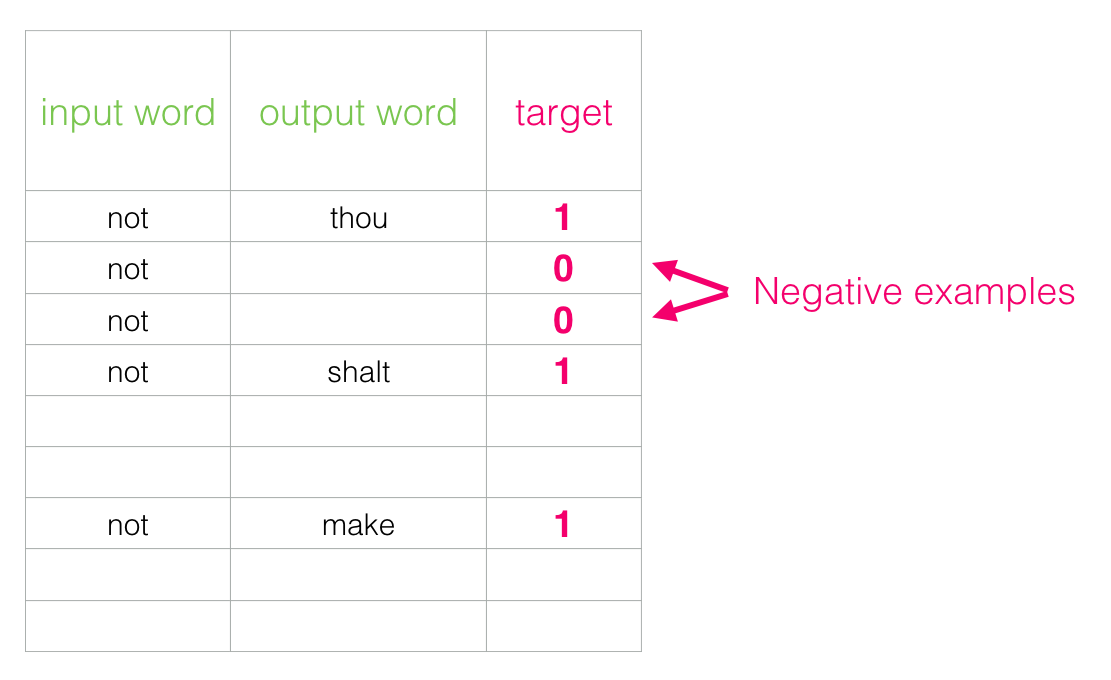

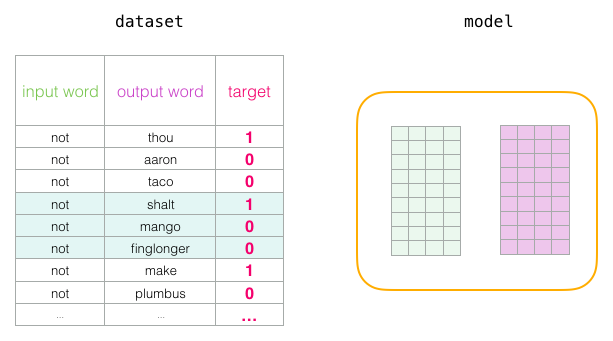

This switch also requires changing the structure of our dataset: the label is now a new column with values 0 or 1. In our table all the labels are 1, because every word we added is a neighbor.
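With this change the model no longer needs a full output layer: it scores one (input, output) pair at a time. A minimal sketch of that logistic-regression-style score follows, assuming NumPy and random 4-dimensional vectors (the sizes, names and values are hypothetical). The score is the sigmoid of the dot product of the two words' vectors, so it always lies between 0 and 1.

```python
import numpy as np

def neighbor_probability(input_vec, output_vec):
    """Logistic-regression-style score: sigmoid of the dot product, in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-np.dot(input_vec, output_vec)))

# Dataset rows now carry a label column: 1 means "these two words are neighbors".
rows = [("not", "thou", 1), ("not", "shalt", 1), ("not", "make", 1), ("not", "a", 1)]

rng = np.random.default_rng(0)
vocab = sorted({w for inp, out, _ in rows for w in (inp, out)})
input_vecs = {w: rng.normal(scale=0.1, size=4) for w in vocab}   # hypothetical vectors
output_vecs = {w: rng.normal(scale=0.1, size=4) for w in vocab}

for inp, out, label in rows:
    p = neighbor_probability(input_vecs[inp], output_vecs[out])
    print(inp, out, label, round(float(p), 3))
```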

Such a model can be computed at incredible speed: millions of samples in minutes. But there is one loophole to close. If all our examples are positive (target: 1), the model can cheat by always returning 1. It would show 100% accuracy while learning nothing and producing garbage embeddings.

To fix this, we need to introduce negative samples into the dataset: pairs of words that are definitely not neighbors. For these, the model must return 0. Now the model has to work for its accuracy, and the calculations still run at great speed.

For each sample in the dataset, we add negative examples labeled 0.

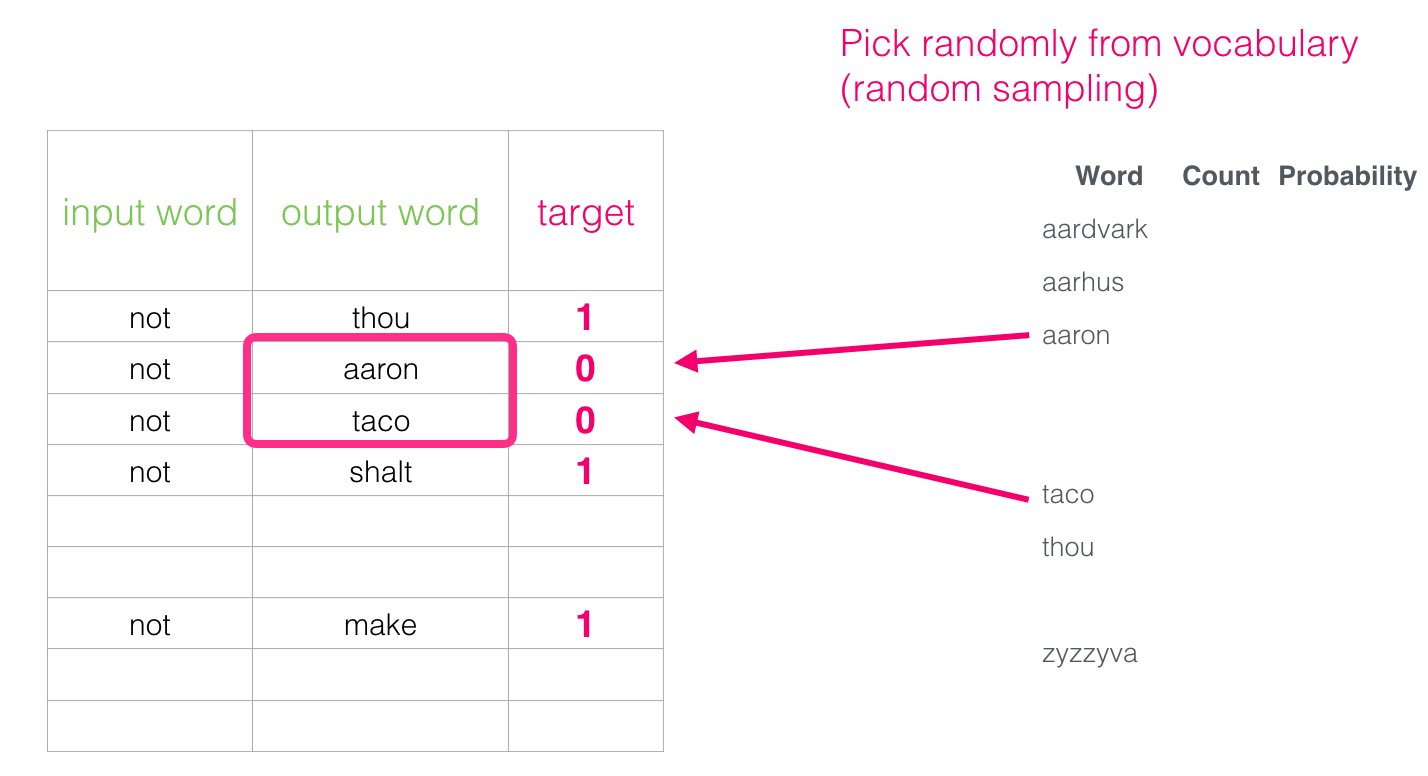

But what should we use as output words? We pick words at random:

This idea was inspired by noise-contrastive estimation [pdf]. We contrast the actual signal (positive examples of neighboring words) with noise (randomly selected words that are not neighbors). This gives an excellent trade-off between computational and statistical efficiency.

Skip-gram with Negative Sampling (SGNS)

We have now looked at two central ideas of word2vec: together, they are called “skip-gram with negative sampling”.
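Here is a sketch of adding negative samples in plain Python. The text above says the words are chosen at random. The word2vec paper by Mikolov et al. (2013) samples them from the unigram distribution raised to the 3/4 power, so frequent words are drawn often but less dominantly than their raw counts suggest, and this sketch follows that choice. The helper names, the fixed seed, and the decision to skip the input word and the true neighbor are assumptions of this sketch.

```python
import random
from collections import Counter

def make_noise_sampler(tokens, power=0.75, seed=0):
    """Return a function that draws words with probability proportional to count**power."""
    counts = Counter(tokens)
    words = list(counts)
    weights = [counts[w] ** power for w in words]
    rng = random.Random(seed)

    def sample(k, exclude):
        drawn = []
        while len(drawn) < k:
            w = rng.choices(words, weights=weights)[0]
            if w not in exclude:  # skip the input word and the true neighbor
                drawn.append(w)
        return drawn

    return sample

def add_negatives(positive_pairs, sample, k=2):
    """Label each positive pair 1 and add k random (input, noise word, 0) rows after it."""
    rows = []
    for inp, out in positive_pairs:
        rows.append((inp, out, 1))
        rows.extend((inp, neg, 0) for neg in sample(k, exclude={inp, out}))
    return rows

tokens = "thou shalt not make a machine in the likeness of a human mind".split()
sample = make_noise_sampler(tokens)
rows = add_negatives([("not", "thou")], sample, k=2)
for row in rows:
    print(row)
```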

Learning word2vec

“A machine cannot foresee every problem that matters to a living person. There is a big difference between discrete space and a continuous continuum. We live in one space, and machines exist in the other.” - God Emperor of Dune

Having examined the basic ideas of skip-gram and negative sampling, we can proceed to a closer look at the word2vec learning process.

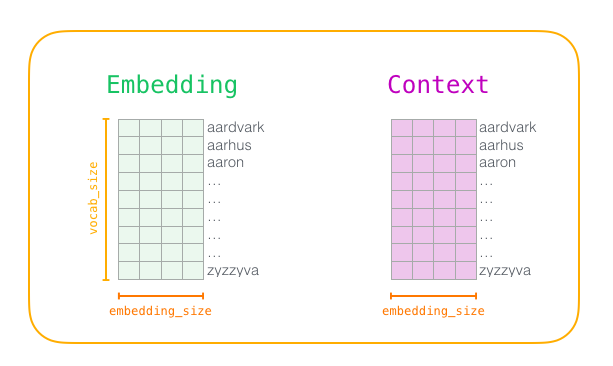

Before training starts, we preprocess the text we train the model on. In this step, we determine the size of our vocabulary (we will call it vocab_size; think of it as, say, 10,000) and which words belong to it.

At the beginning of training, we create two matrices: Embedding and Context. These two matrices hold an embedding for every word in our vocabulary (so vocab_size is one of their dimensions). The second dimension is the length of each embedding (embedding_size is commonly set to 300, but earlier we looked at an example with 50 dimensions).

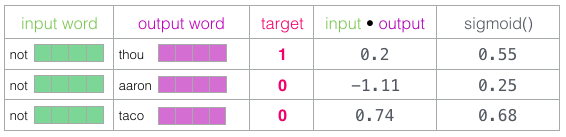

First, we initialize these matrices with random values. Then we start the training process. In each training step, we take one positive example and its associated negative examples. Here is our first group:

Now we have four words: the input word not and the output/context words thou (the actual neighbor), aaron and taco (the negative examples). We look up their embeddings: for the input word in the Embedding matrix, and for the context words in the Context matrix (even though both matrices contain an embedding for every word in our vocabulary).

Then we take the dot product of the input embedding with each of the context embeddings. Each result is a number that indicates how similar the input and context embeddings are.

Now we need a way to turn these scores into something that looks like probabilities: they all need to be positive and lie between 0 and 1. This is a great task for the sigmoid, the logistic function.

The result of the sigmoid can be treated as the model's output for these samples. As you can see, taco has the highest score and aaron still has the lowest score, both before and after the sigmoid.

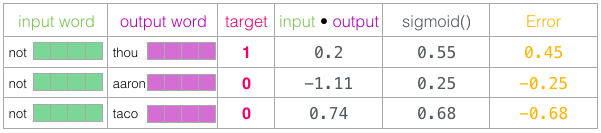

tacothe highest score, while u aaronstill have the lowest score both before and after sigmoid. When the untrained model made a forecast and having a real target mark for comparison, let's calculate how many errors are in the model forecast. To do this, simply subtract the sigmoid score from the target labels.

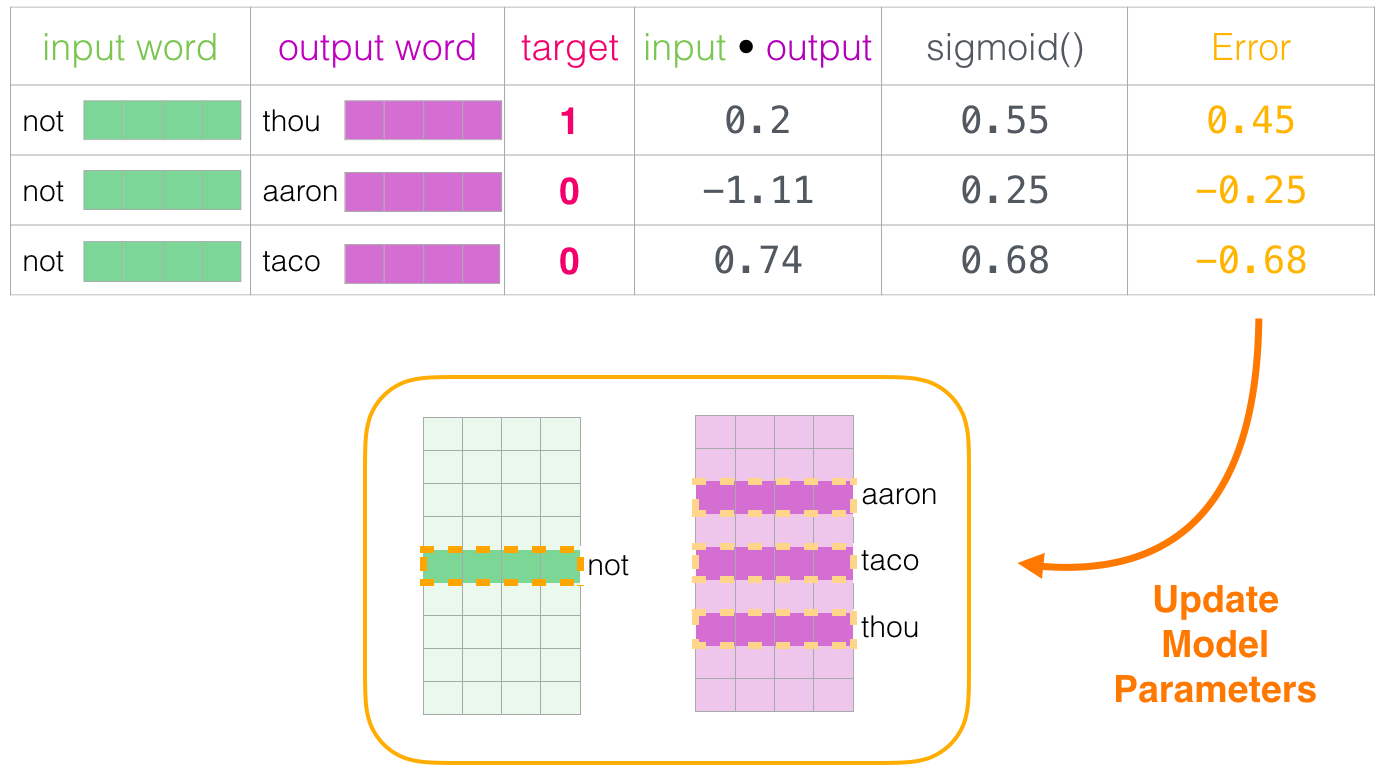

error = target - sigmoid_scores

Here comes the “learning” part of “machine learning”. We can now use this error score to adjust the embeddings of not, thou, aaron and taco so that the next time we make this calculation, the result is closer to the target scores.

This completes one training step. We have slightly improved the embeddings of the words involved in it (not, thou, aaron and taco). Now we move on to the next step (the next positive sample and its associated negative samples) and repeat the process.
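One training step as described above (look up, dot product, sigmoid, error, update) can be sketched with NumPy. This assumes plain stochastic gradient ascent on the log-likelihood of the labels and distinct context word ids. The learning rates, matrix sizes and initialization are illustrative assumptions, not the values used by the reference implementation. The usage part repeats a single group many times purely to show the scores moving toward their targets.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sgns_step(embedding, context, input_id, context_ids, labels, lr=0.025):
    """One SGNS update for one input word and its positive + negative context words.

    embedding, context: (vocab_size, embedding_size) matrices, updated in place.
    labels: 1.0 for the true neighbor, 0.0 for each negative sample.
    """
    v_in = embedding[input_id]       # look up the input word in Embedding
    v_ctx = context[context_ids]     # look up the context words in Context (a copy)
    scores = v_ctx @ v_in            # dot product of the input with each context word
    error = labels - sigmoid(scores)  # error = target - sigmoid_scores
    # Gradient of the log-likelihood, computed from the old vectors before updating.
    grad_in = error @ v_ctx
    context[context_ids] += lr * np.outer(error, v_in)
    embedding[input_id] += lr * grad_in
    return error

# Usage with a hypothetical four-word vocabulary and tiny random matrices.
rng = np.random.default_rng(0)
vocab = ["not", "thou", "aaron", "taco"]
ids = {w: i for i, w in enumerate(vocab)}
vocab_size, embedding_size = len(vocab), 8
embedding = rng.uniform(-0.5, 0.5, (vocab_size, embedding_size)) / embedding_size
context = rng.uniform(-0.5, 0.5, (vocab_size, embedding_size)) / embedding_size

context_ids = np.array([ids["thou"], ids["aaron"], ids["taco"]])
labels = np.array([1.0, 0.0, 0.0])
for _ in range(200):
    sgns_step(embedding, context, ids["not"], context_ids, labels, lr=0.5)
print(sigmoid(context[context_ids] @ embedding[ids["not"]]).round(2))
```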

The embeddings continue to improve as we cycle through the entire dataset several times. We can then stop the process, discard the Context matrix, and use the trained Embedding matrix for the next task.

Window size and number of negative samples

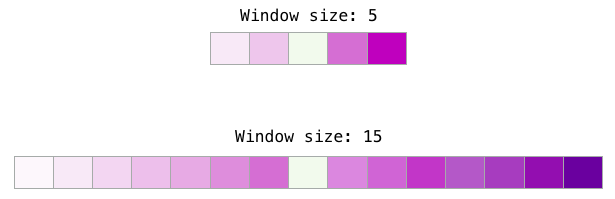

Two key hyperparameters in the word2vec training process are the window size and the number of negative samples.

Different window sizes suit different tasks. It has been observed that smaller window sizes (2–15) lead to embeddings where a high similarity score between two words indicates that the words are interchangeable (note that antonyms are often interchangeable if we only look at their surrounding words: “good” and “bad”, for example, often appear in similar contexts). Larger window sizes (15–50, or even more) lead to embeddings where similarity is more indicative of relatedness. In practice, you will often have to provide annotations that guide the embedding process toward a sense of similarity that is useful for your task. In Gensim, the default window size is 5; the window is the maximum distance between the input word and a context word, so it covers up to five words on each side of the input word.

The number of negative samples is another factor in the training process. The original paper recommends 5–20. It also notes that 2–5 samples seem to be enough when you have a large enough dataset. In Gensim, the default is 5 negative samples.

Conclusion

“If your behavior falls outside your standards, then you are a living person, not an automaton.” - God Emperor of Dune

I hope you now have a sense of word embeddings and of the essence of the word2vec algorithm. I also hope that articles mentioning “skip-gram with negative sampling” (SGNS), such as the recommender-system work mentioned above, will now feel more familiar.

References and Further Reading

- “Distributed Representations of Words and Phrases and their Compositionality” [pdf]

- “Efficient Estimation of Word Representations in Vector Space” [pdf]

- “A Neural Probabilistic Language Model” [pdf]

- “Speech and Language Processing” by Dan Jurafsky and James Martin is a leading resource for NLP. Word2vec is covered in chapter six.

- “Neural Network Methods in Natural Language Processing” by Yoav Goldberg is a great book on natural language processing.

- Chris McCormick has written several great blog posts about Word2vec. He has also just released an e-book, “The Inner Workings of word2vec”.

- Want to read the code? Here are two options:

  - Gensim's Python implementation of word2vec

  - Mikolov's original implementation in C, and, better yet, this version with detailed comments from Chris McCormick

- Evaluation of distributional models of compositional semantics

- “On word embeddings”, part 2

- "Dune"