Operating Systems: Three Easy Pieces. Part 1: Intro (translation)

Introduction to Operating Systems

Hello, Habr! I would like to present a series of translated articles based on a book that I find very interesting: OSTEP. The book looks in some depth at how Unix-like operating systems work: processes, various schedulers, memory, and the other components that make up a modern OS. The original materials are available here. Please note that this is an amateur and fairly free translation, but I hope I have preserved the overall meaning.

Laboratory work on this subject can be found here:

- original: pages.cs.wisc.edu/~remzi/OSTEP/Homework/homework.html

- original: github.com/remzi-arpacidusseau/ostep-code

- my personal adaptation: github.com/bykvaadm/OS/tree/master/ostep

Other parts:

- Part 1: Intro

- Part 2: Abstraction: the process

- Part 3: Introduction to the Process API

- Part 4: Introduction to the Scheduler

- Part 5: MLFQ Scheduler

You can also take a look at my Telegram channel =)

How a program runs

What happens when a program runs? A running program does one simple thing: it executes instructions. Every second, the processor fetches millions, possibly even billions, of instructions from RAM, decodes each one (that is, works out what kind of instruction it is), and executes it. An instruction might add two numbers, access memory, check a condition, jump to a function, and so on. When one instruction completes, the processor moves on to the next, and so it continues, instruction by instruction, until the program finishes.

This description is, of course, simplified. To run faster, modern processors use many tricks: they execute instructions out of order, speculatively compute likely results, run several instructions at the same time, and so on.

Von Neumann Computing Model

The simplified mode of operation described above resembles the Von Neumann model of computing. Von Neumann was one of the pioneers of computing systems and also one of the founders of game theory. While a program is running, many other things are going on: other processes and supporting logic are at work, and their main purpose is to make the system easy to start, run, and maintain.

There is a body of software responsible for making it easy to run programs (and even for letting you run several programs at the same time), for allowing programs to share memory, and for letting them interact with different devices. This body of software is called the operating system. Its job is to make sure the system works correctly and efficiently, and that it is easy to use.

Operating system

An operating system (OS for short) is a set of interrelated programs designed to manage a computer's resources and to organize the interaction between the user and the computer.

The OS achieves this primarily through its most important technique: virtualization. The OS takes a physical resource (a processor, memory, a disk, and so on) and transforms it into a more general, more powerful, and easier-to-use virtual form of itself. For this reason, as a very rough approximation, you can think of the operating system as a virtual machine.

To let users tell the operating system what to do, and thus use the capabilities of the virtual machine (launching a program, allocating memory, accessing a file, and so on), the operating system provides an interface called an API (application programming interface) that programs can call. A typical operating system offers a few hundred system calls.

Finally, because virtualization allows many programs to run (sharing the CPU), to access their own instructions and data at the same time (sharing memory), and to access disks (sharing I/O devices), the operating system is also called a resource manager. Each processor, disk, and region of memory is a system resource, so one of the operating system's roles is to manage these resources: efficiently, fairly, or according to some other criterion, depending on what the operating system is designed for.

CPU virtualization

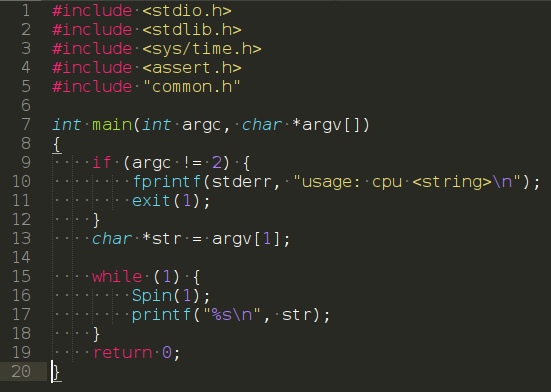

Consider the following program:

(https://www.youtube.com/watch?v=zDwT5fUcki4)

It does nothing special. All it really does is call the spin() function, which loops, repeatedly checking the time, and returns once one second has passed. The program then prints the string that the user passed as an argument, and repeats this forever.

Run this program and pass it the character “A” as an argument. The result is not very interesting: the system simply runs a program that periodically prints “A”.

Now let's run several instances of the same program at once, each printing a different letter to make them easy to tell apart. This time the result is slightly different: even though we have only one processor, the programs appear to run simultaneously. How does this happen? The operating system, with some help from the hardware, creates an illusion: the illusion that the system has many virtual processors. It turns one physical processor into a seemingly unlimited number of virtual ones and so lets programs appear to run at the same time. This illusion is called CPU virtualization.

This picture raises many questions. For example, if several programs want to run at the same time, which one should run? Questions like this are answered by the policies of the OS. Policies are used in many places in the OS to answer questions of this kind, alongside the basic mechanisms that the OS implements. Hence the role of the OS as a resource manager.

Memory virtualization

Now let's look at memory. The model of physical memory in modern systems is simple: memory is an array of bytes. To read from memory, you specify the address of the cell you want to access. To write or update data, you specify both the address and the data to store there.

Memory is accessed constantly while a program runs. A program keeps all of its data structures in memory and accesses them by executing various instructions. The instructions themselves are also stored in memory, so memory is accessed on every instruction fetch as well.

Calling malloc()

Consider the following program, which allocates a region of memory using the malloc() call (https://youtu.be/jnlKRnoT1m0):

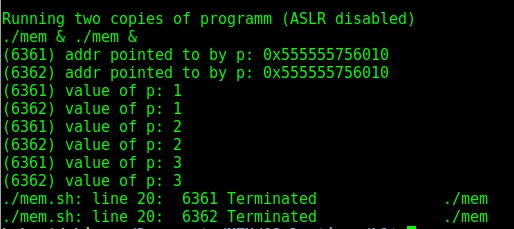

The program does several things. First, it allocates some memory (line 7), then prints the address of the allocated memory (line 9), and then writes zero to the first slot of that memory. Next, the program enters a loop in which it increments the value stored at the address held in the variable “p”. It also prints its own process identifier (PID), which is unique for each running process.

Starting several copies gives an interesting result. If you do nothing special and simply start several copies, the addresses will be different. That does not match our theory! The reason is that modern distributions enable address space randomization by default. If you turn it off, we get the expected result: the memory addresses printed by two simultaneously running programs are the same.

The conclusion is that two independent programs each work with their own private address space, which the operating system maps onto physical memory. Memory accesses within one program therefore do not affect the others, and each program behaves as if it had its own piece of physical memory entirely at its disposal. In reality, physical memory is a shared resource managed by the operating system.

Concurrency

Another important topic in operating systems is concurrency. The term refers to the problems that arise when many things are being worked on at the same time within the same program. Concurrency problems arise even within the operating system itself. In the earlier examples of CPU and memory virtualization, we saw that the OS juggles many things at once: it runs one process, then a second, and so on. It turns out that this behavior can lead to problems. Modern multithreaded programs, for example, run into exactly these difficulties.

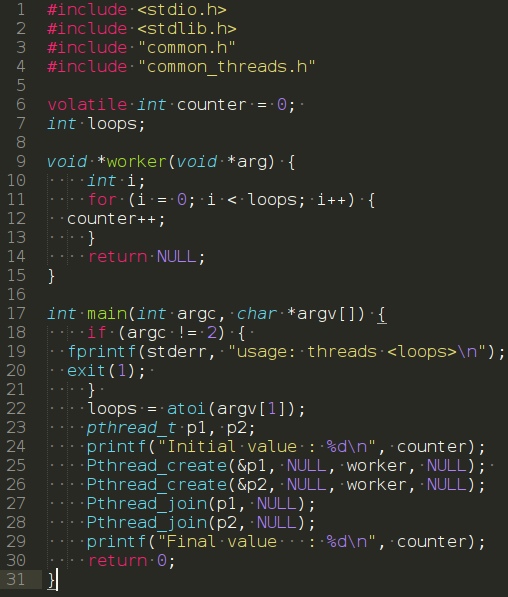

Consider the following program:

In its main function, the program creates two threads using the Pthread_create() call. In this example, a thread can be thought of as a function running in the same memory space alongside other functions, with more than one of them active at a time. Each thread starts running the worker() function, which simply increments a shared variable in a loop.

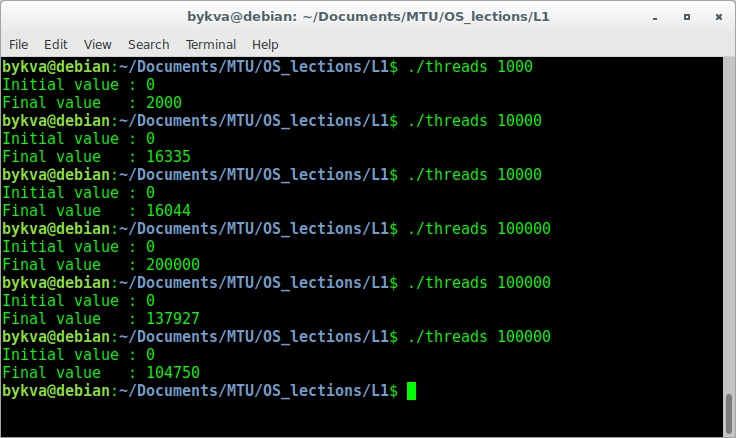

Run this program with the argument 1000. As you might guess, the result should be 2000, since each thread increments the variable 1000 times. However, things are not that simple. Let's run the program with a much larger number of iterations.

If we pass in, say, 100000, we expect to see 200000 in the output. However, running the program with 100000 several times, we not only fail to get the correct answer, we also get different incorrect answers. The explanation is that incrementing the number takes three operations: loading the number from memory, incrementing it, and writing it back. Because these instructions are not executed atomically (all at once, as a single indivisible step), strange things can happen. In programming, this problem is called a race condition: forces outside your control can, at an unpredictable moment, affect the outcome of any of your operations.