Kubernetes 1.14: Overview of Key Innovations

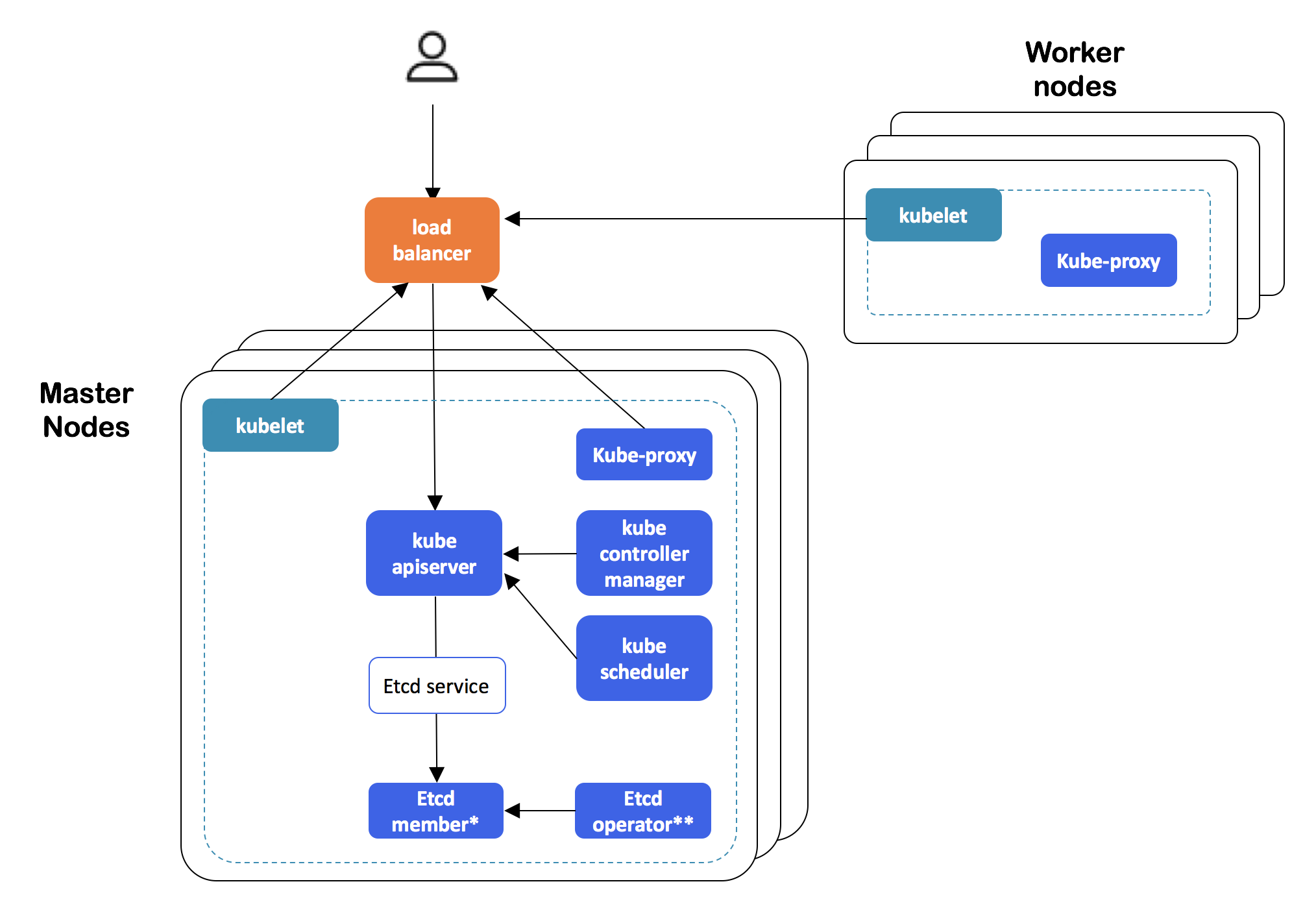

Kubernetes 1.14 is due to be released tonight. Following the tradition established on our blog, we cover the key changes in the new version of this wonderful Open Source product.

The information used to prepare this material is taken from the Kubernetes enhancements tracking table, CHANGELOG-1.14, and related issues, pull requests, and Kubernetes Enhancement Proposals (KEPs).

UPDATE (March 26): The official release announcement has also appeared on the K8s blog.

Let's start with an important introduction from SIG cluster-lifecycle: dynamic fault-tolerant Kubernetes clusters (more precisely, self-hosted HA deployments) can now be created using the familiar (from single-node clusters) `kubeadm` commands (`init` and `join`). In short, to make this possible:

- certificates used by the cluster are moved into secrets;

- to allow running the etcd cluster inside the K8s cluster (i.e., to remove the external dependency that existed until now), the etcd-operator is used;

- recommended settings for an external load balancer that provides a fault-tolerant configuration are documented (removing this dependency is planned for the future, but not at this stage).

Kubernetes HA cluster architecture created with kubeadm

For implementation details, see the design proposal. This feature has been long awaited: the alpha version was expected back in K8s 1.9 but has only appeared now.

API

The team

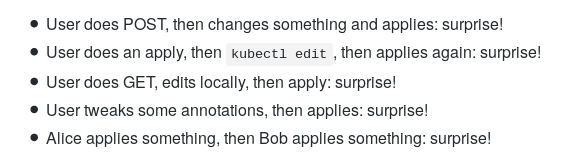

applyand generally declarative management of objects are taken out kubectlof apiserver. The developers themselves briefly explain their decision by saying that kubectl apply- a fundamental part of working with configurations in Kubernetes, however, is “full of bugs and difficult to fix”, in connection with which this functionality needs to be brought back to normal form and transferred to the control plane. Simple and illustrative examples of the problems that exist today:

Implementation details are in KEP . The current availability is the alpha version (promotion to beta is planned for the next release of Kubernetes).

Available in alpha is the ability to use an OpenAPI v3 schema to create and publish OpenAPI documentation for CustomResources (CR); the schema is used for server-side validation of user-defined K8s resources (CustomResourceDefinition, CRD). Publishing OpenAPI for CRDs allows clients (for example, `kubectl`) to perform validation on their side (within `kubectl create` and `kubectl apply`) and to display documentation for the schema (`kubectl explain`). Details are in the KEP.

Pre-existing logs are now opened with the `O_APPEND` flag (rather than `O_TRUNC`) to avoid losing logs in some situations and to make it easier for external rotation utilities to truncate logs.

Also in the context of the Kubernetes API, the `runtime_handler` field has been added to `PodSandbox` and `PodSandboxStatus` to carry information about the pod's `RuntimeClass` (read more about it in our article on the Kubernetes 1.12 release, where this class first appeared in alpha), and Admission Webhooks can now declare which `AdmissionReview` versions they support. Finally, Admission Webhooks rules can now be limited in scope to namespaced or cluster-scoped resources.

Storage

`PersistentLocalVolumes`, in beta since the K8s 1.10 release, has been declared stable (GA): this feature gate can no longer be disabled and will be removed in Kubernetes 1.17.

The ability to use Downward API environment variables (for example, the pod name) in the names of directories mounted as `subPath` has been developed further in the form of a new field, `subPathExpr`, which now defines the desired directory name. The feature first appeared in Kubernetes 1.11 but remains in alpha in 1.14.

As in the previous Kubernetes release, many significant changes have been introduced for the rapidly evolving CSI (Container Storage Interface).
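To make the `subPathExpr` field described above more concrete, here is a minimal Python sketch. It builds a pod fragment as a plain dictionary and models the `$(VAR)` expansion with a toy function. The pod name `demo`, the volume name `workdir`, the paths, and the helper `expand_subpath_expr` are all hypothetical and chosen for illustration; the real expansion is performed by Kubelet, not by code like this.

```python
import re

# Pod fragment as a plain dict; names such as "demo", "workdir" and
# "/var/log/demo" are hypothetical and chosen only for illustration.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [{
            "name": "app",
            "image": "busybox",
            "env": [{
                "name": "POD_NAME",
                # Downward API: expose the pod's own name as an env variable.
                "valueFrom": {"fieldRef": {"fieldPath": "metadata.name"}},
            }],
            "volumeMounts": [{
                "name": "workdir",
                "mountPath": "/logs",
                # Expanded per pod from the container environment.
                "subPathExpr": "$(POD_NAME)",
            }],
        }],
        "volumes": [{"name": "workdir", "hostPath": {"path": "/var/log/demo"}}],
    },
}


def expand_subpath_expr(expr, env):
    """Toy model of $(VAR) expansion; unknown variables are left untouched."""
    return re.sub(
        r"\$\(([A-Za-z_][A-Za-z0-9_]*)\)",
        lambda m: env.get(m.group(1), m.group(0)),
        expr,
    )


mount = pod["spec"]["containers"][0]["volumeMounts"][0]
resolved = expand_subpath_expr(mount["subPathExpr"], {"POD_NAME": "demo"})
print(resolved)
```

With this layout, each pod would write into its own subdirectory of the shared volume, named after the pod.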

CSI

Support for resizing CSI volumes has become available (in alpha). To use it, you need to enable the `ExpandCSIVolumes` feature gate, and the specific CSI driver must support this operation.

Another alpha feature for CSI is the ability to refer to CSI volumes directly (i.e., without using PV/PVC) in the pod specification. This removes the restriction that CSI can only be used for remote data storage, opening the door to the world of local ephemeral volumes. To use it (see the example in the documentation), you must enable the `CSIInlineVolume` feature gate.

There has also been progress in the CSI-related “internals” of Kubernetes, which are less noticeable to end users (system administrators). At the moment, developers are forced to maintain two versions of each storage plugin: one the “old-fashioned” way, inside the K8s code base (in-tree), and another as part of the new CSI (read more about it, for example, here). This causes understandable inconvenience that needs to be addressed as CSI itself stabilizes. Simply deprecating the API of the in-tree plugins is not possible because of the corresponding Kubernetes policy.

As a result, the process of migrating the internal code of plugins implemented in-tree to CSI plugins has reached alpha. Thanks to it, developers will only need to maintain one version of their plugins, while compatibility with the old APIs is preserved and they can be deprecated in the usual way. It is expected that by the next Kubernetes release (1.15) all cloud provider plugins will be migrated, and the implementation will reach beta and be enabled by default in K8s installations. See the design proposal for details. This migration also resulted in dropping the volume limits defined by specific cloud providers (AWS, Azure, GCE, Cinder).

In addition, support for block devices with CSI (`CSIBlockVolume`) has been promoted to beta.

Nodes / Kubelet

An alpha version of a new Kubelet endpoint has been introduced, designed to return metrics for core resources. Generally speaking, whereas Kubelet used to get container usage statistics from cAdvisor, this data now comes from the container runtime through the CRI (Container Runtime Interface), although compatibility with older versions of Docker is preserved. Previously, the statistics collected by Kubelet were exposed through a REST API; now an endpoint located at `/metrics/resource/v1alpha1` is used. The developers' long-term strategy is to minimize the set of metrics provided by Kubelet. By the way, these metrics are now called “resource metrics” rather than “core metrics”, and are described as “first-class resources, such as cpu, and memory”.

An interesting nuance: despite the clear performance advantage of a gRPC endpoint over various uses of the Prometheus format (see the results of one of the benchmarks below), the authors preferred the Prometheus text format because of the clear leadership of this monitoring system in the community.

“gRPC is not compatible with major monitoring pipelines. The endpoint will only be useful for delivering metrics to Metrics Server or to monitoring components that integrate directly with it. When using caching in Metrics Server, the performance of the Prometheus text format is good enough for us to prefer Prometheus over gRPC, given the widespread use of Prometheus in the community. When the OpenMetrics format becomes more stable, we can get closer to gRPC performance with a proto-based format.”

One of the comparative performance tests of the gRPC and Prometheus formats in the new Kubelet metrics endpoint. More graphs and other details can be found in the KEP.
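Since the new endpoint serves the Prometheus text format, any consumer has to parse lines of the form `name{labels} value`. The Python sketch below handles a small subset of that format. The sample payload is hypothetical (the metric names and values are illustrative, not captured from a real Kubelet), and the parser deliberately ignores timestamps and escaping.

```python
# Hypothetical sample of what such an endpoint might return; the metric
# names and values here are illustrative, not captured from a real Kubelet.
SAMPLE = """\
# HELP node_cpu_usage_seconds_total Cumulative cpu time consumed by the node
# TYPE node_cpu_usage_seconds_total counter
node_cpu_usage_seconds_total 1234.5
container_memory_working_set_bytes{container="app",pod="demo"} 8388608
"""


def parse_prometheus_text(text):
    """Parse a small subset of the Prometheus text format.

    Returns a list of (name, labels, value) tuples. Timestamps, escaped
    characters and commas inside label values are not handled.
    """
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, value = line.rsplit(" ", 1)
        labels = {}
        if "{" in series:
            name, raw = series.split("{", 1)
            for pair in raw.rstrip("}").split(","):
                if pair:
                    key, val = pair.split("=", 1)
                    labels[key] = val.strip('"')
        else:
            name = series
        samples.append((name, labels, float(value)))
    return samples


metrics = parse_prometheus_text(SAMPLE)
for name, labels, value in metrics:
    print(name, labels, value)
```

A production consumer would more likely rely on an existing Prometheus client library than on a hand-written parser like this one.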

Among other changes:

- Kubelet now accepts the `pid=<number>` parameter in the `--system-reserved` and `--kube-reserved` options, guaranteeing that the specified number of PIDs will be reserved for the system as a whole or for Kubernetes system daemons, respectively. The feature is activated by enabling the feature gate called `SupportNodePidsLimit`.

- Kubelet now tries (once) to stop containers in an unknown state before restart and delete operations.

- When `PodPresets` is used, the same information is now added to init containers as to regular containers.

- Kubelet started using `usageNanoCores` from the CRI stats provider, and network statistics were added for nodes and containers on Windows.

- Operating system and architecture information is now recorded in the `kubernetes.io/os` and `kubernetes.io/arch` labels of Node objects (moved from beta to GA).

- The ability to specify a system user group for containers in a pod (`RunAsGroup`, which appeared in K8s 1.11) has progressed to beta (enabled by default).

- du and find used in cAdvisor are replaced by Go implementations.
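Regarding the PID reservation from the first item of the list above: the values of `--system-reserved` and `--kube-reserved` are comma-separated `key=value` lists, and `pid` is one more key in them. The Python sketch below only illustrates the shape of such a value; the helper `parse_reserved` and the numbers are hypothetical, and the summation is a simplified illustration rather than Kubelet's actual accounting.

```python
def parse_reserved(value):
    """Split a 'key=value,key=value' flag value into a dict of strings."""
    reserved = {}
    for item in value.split(","):
        key, _, amount = item.partition("=")
        if not key or not amount:
            raise ValueError(f"malformed entry: {item!r}")
        reserved[key.strip()] = amount.strip()
    return reserved


# Hypothetical values, chosen for illustration only.
system_reserved = parse_reserved("cpu=500m,memory=1Gi,pid=1000")
kube_reserved = parse_reserved("pid=500")

# Simplified view: total number of PIDs set aside on the node.
reserved_pids = int(system_reserved["pid"]) + int(kube_reserved["pid"])
print(reserved_pids)
```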

CLI

The `-k` flag has been added to cli-runtime and kubectl for integration with kustomize (which, by the way, is now developed in a separate repository), i.e. for processing additional YAML files from special kustomization directories (see the KEP for details on their use).

An example of a simple use of the kustomization file (a more complicated use of kustomize within overlays is also possible).
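To give a rough idea of what a kustomization does, the Python sketch below models two real kustomization fields, `namePrefix` and `commonLabels`, applied to a single resource. Everything else is an assumption made for illustration: the ConfigMap, its names, and the helper `apply_kustomization` are hypothetical, and real kustomize does considerably more (for example, it also updates selectors and name references). With kubectl 1.14 a kustomization directory is passed as `kubectl apply -k <directory>`.

```python
import copy

# Hypothetical kustomization, mirroring fields of a kustomization.yaml file.
kustomization = {
    "namePrefix": "staging-",
    "commonLabels": {"env": "staging"},
}

# Hypothetical base resource that the kustomization refers to.
base = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": "app-config", "labels": {"app": "demo"}},
    "data": {"LOG_LEVEL": "info"},
}


def apply_kustomization(resource, kustomization):
    """Toy model: apply namePrefix and commonLabels to metadata only."""
    result = copy.deepcopy(resource)  # leave the base untouched
    meta = result["metadata"]
    meta["name"] = kustomization.get("namePrefix", "") + meta["name"]
    meta.setdefault("labels", {}).update(kustomization.get("commonLabels", {}))
    return result


customized = apply_kustomization(base, kustomization)
print(customized["metadata"])
```

The key idea is that the base manifest stays unchanged, while environment-specific adjustments live in the kustomization.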

In addition:

- The kubectl logo and its documentation have been updated: see kubectl.docs.kubernetes.io.

- A new command, `kubectl create cronjob`, has been added; its name speaks for itself.

- In `kubectl logs`, the `-f` (`--follow`, for streaming logs) and `-l` (`--selector`, for a label query) flags can now be combined.

- kubectl has learned to copy files selected with a wildcard.

- The `--all` flag was added to the `kubectl wait` command to select all resources of the specified type in the namespace.

- The plugin mechanism for kubectl has been declared stable.

Other

The following features have reached stable (GA) status:

- Support for Windows nodes (including Windows Server 2019), which makes it possible to schedule Windows Server containers in Kubernetes (see also the KEP);

- `ReadinessGate`, used in the pod specification to define additional conditions taken into account in pod readiness;

- Support for huge pages (feature gate called `HugePages`);

- CustomPodDNS;

- PriorityClass API, Pod Priority & Preemption.
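The readiness gates mentioned in the list above can be summarized as a simple rule: a pod is considered ready only when its containers are ready and every condition listed in its readiness gates has the status `True`. The Python sketch below models that rule; the function `pod_is_ready` and the condition type `example.com/lb-registered` are hypothetical, and the real evaluation is done by Kubernetes itself.

```python
def pod_is_ready(containers_ready, readiness_gates, conditions):
    """Toy model of pod readiness with readiness gates.

    containers_ready: whether all containers report ready
    readiness_gates:  list of condition types from the pod specification
    conditions:       mapping of condition type -> status from the pod status
    """
    gates_ok = all(conditions.get(gate) == "True" for gate in readiness_gates)
    return containers_ready and gates_ok


# "example.com/lb-registered" is a hypothetical condition type.
gates = ["example.com/lb-registered"]
print(pod_is_ready(True, gates, {}))
print(pod_is_ready(True, gates, {"example.com/lb-registered": "True"}))
```

A missing condition counts as not satisfied here, so an external controller has to set it explicitly before the pod can become ready.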

Other changes introduced in Kubernetes 1.14:

- The default RBAC policy no longer grants unauthenticated users access to the `discovery` and `access-review` APIs.

- Official support for CoreDNS is provided only for Linux, so when kubeadm is used to deploy CoreDNS in a cluster, it must run only on Linux nodes (nodeSelectors are used to enforce this restriction).

- The default CoreDNS configuration now uses the forward plugin instead of proxy. In addition, a readinessProbe has been added to CoreDNS, which prevents load balancing to pods that are not ready to serve.

- In kubeadm, the `init` and `upload-certs` phases can now upload the certificates required to join a new control-plane node to the kubeadm-certs secret (the `--experimental-upload-certs` flag is used).

- For Windows installations, alpha support has appeared for gMSA (Group Managed Service Account), special Active Directory accounts that can be used by containers.

- For GCE, mTLS encryption has been enabled between etcd and kube-apiserver.

- Updates to the software used and depended upon: Go 1.12.1, CSI 1.1, CoreDNS 1.3.1, support for Docker 18.09 in kubeadm; the minimum supported Docker API version is now 1.26.
