The situation: virtual GPUs perform on par with bare-metal solutions

In February, a high-performance computing (HPC) conference was held at Stanford. VMware representatives said there that, for GPU workloads, a system based on a modified ESXi hypervisor is about as fast as bare-metal solutions.

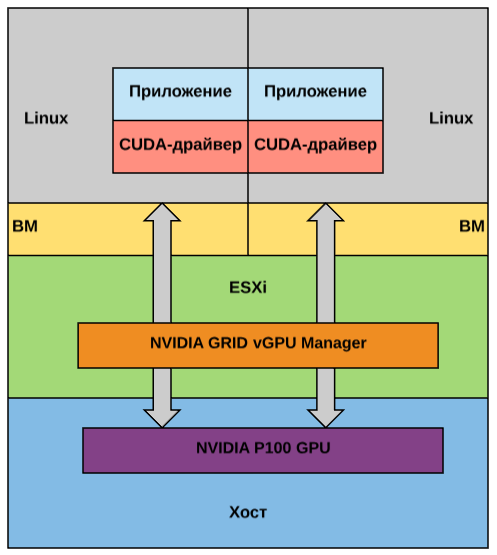

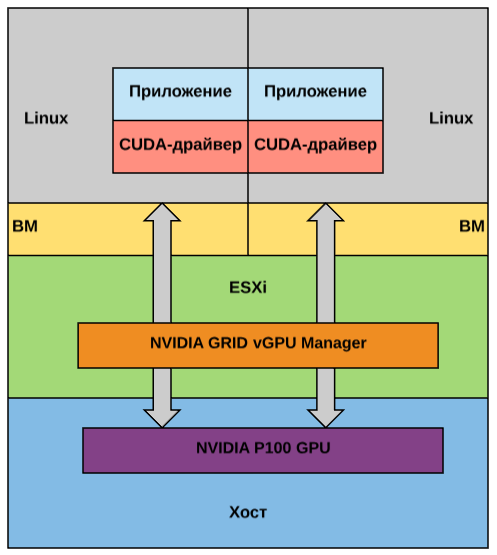

Below, we cover the technologies that made this possible.

/ photo Victorgrigas CC BY-SA

Performance issue

According to analysts, about 70% of data center workloads are virtualized. The remaining 30% still run on bare metal, without hypervisors. For the most part, these are heavily loaded applications that rely on GPUs, such as neural network training.

Experts attribute this to the fact that the hypervisor, as an intermediate abstraction layer, can reduce the performance of the whole system. Studies from five years ago report slowdowns of around 10%. As a result, companies and data center operators have been in no hurry to move HPC workloads to virtual environments.

But virtualization technologies keep improving. At the conference a month ago, VMware said that the ESXi hypervisor does not noticeably hurt GPU performance: computing speed may drop by about three percent, which is comparable to bare metal.

How it works

To improve the performance of HPC systems with GPUs, VMware made a number of changes to how the hypervisor works. In particular, it disabled the vMotion feature, which is used for load balancing and normally migrates virtual machines (VMs) between servers or GPUs. With vMotion disabled, each VM is pinned to a specific GPU, which reduces the overhead of data exchange.

Another key component of the system is DirectPath I/O. It lets the CUDA parallel-computing driver interact with virtual machines directly, bypassing the hypervisor. When several VMs need to run on the same GPU, the GRID vGPU solution is used. It divides the card's memory into several segments, but does not divide the compute cycles.
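The sharing model described above (memory split into segments, compute left undivided) can be illustrated with a toy model. This is only a sketch under stated assumptions: the `VGpuSlice` class, the `partition_gpu_memory` function, the equal-sized segments, and the 16 GiB card are all hypothetical and are not part of any VMware or NVIDIA API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VGpuSlice:
    """One VM's share of a physical GPU in this toy model (hypothetical type)."""
    vm_name: str
    memory_mib: int        # dedicated memory segment for this VM
    compute_shared: bool   # compute cycles are shared, not partitioned


def partition_gpu_memory(total_memory_mib: int, vm_names: list[str]) -> list[VGpuSlice]:
    """Split GPU memory into equal fixed segments, one per VM.

    Assumption: segments are equal in size. Compute is deliberately not
    divided, so every slice is marked as sharing the GPU's compute cycles.
    """
    if not vm_names:
        raise ValueError("at least one VM is required")
    segment = total_memory_mib // len(vm_names)
    return [VGpuSlice(name, segment, compute_shared=True) for name in vm_names]


# Hypothetical 16 GiB card shared by two VMs.
slices = partition_gpu_memory(16384, ["vm-a", "vm-b"])
for s in slices:
    print(s.vm_name, s.memory_mib, "MiB")
```

In this model each VM can rely on its own memory segment, while its share of compute depends on what the other VMs are doing at the moment.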

With two virtual machines sharing one GPU, the scheme of operation looks as follows:

Results and forecasts

The company tested the hypervisor by training a TensorFlow-based language model. The performance penalty was only 3-4% compared to bare metal. In return, the system could allocate resources on demand, depending on the current load.
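For readers who want to reproduce this kind of comparison, a figure like "3-4%" is usually computed as the relative slowdown of the virtualized run against the bare-metal run. The sketch below assumes the metric is wall-clock training time. The function name and the timings are hypothetical and are not VMware's measurements.

```python
def overhead_percent(bare_metal_seconds: float, virtualized_seconds: float) -> float:
    """Relative slowdown of the virtualized run versus bare metal, in percent."""
    if bare_metal_seconds <= 0:
        raise ValueError("bare-metal time must be positive")
    return (virtualized_seconds - bare_metal_seconds) / bare_metal_seconds * 100.0


# Hypothetical timings for the same training job on both setups.
print(round(overhead_percent(1000.0, 1035.0), 1))  # 3.5
```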

The IT giant also ran tests with containers. The company's engineers trained neural networks to recognize images, with the resources of one GPU shared among four container VMs. As a result, the performance of each individual machine dropped by 17% compared to a single VM with full access to the GPU. However, the number of images processed per second tripled. Such systems are expected to find use in data analysis and computer modeling.
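The two figures are consistent with each other, as a quick calculation shows. The sketch assumes that "performance" here means throughput in images per second and that all four VMs run at the same time. The function name is hypothetical.

```python
def aggregate_speedup(vm_count: int, per_vm_slowdown: float) -> float:
    """Total throughput of vm_count VMs sharing one GPU,
    relative to a single VM that owns the whole GPU.

    per_vm_slowdown is the fractional loss of each VM (0.17 means 17%).
    """
    if vm_count < 1:
        raise ValueError("vm_count must be at least 1")
    if not 0.0 <= per_vm_slowdown < 1.0:
        raise ValueError("per_vm_slowdown must be in [0, 1)")
    return vm_count * (1.0 - per_vm_slowdown)


# Four VMs, each 17% slower than a VM with exclusive GPU access.
print(round(aggregate_speedup(4, 0.17), 2))  # 3.32
```

Four VMs running at 83% each give roughly 3.3 times the throughput of one VM, which matches the reported threefold increase.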

Among the potential problems VMware may face, experts point to a rather narrow target audience: only a small number of companies currently work with high-performance systems. That said, Statista notes that by 2021, 94% of workloads in the world's data centers will be virtualized. Analysts also forecast that the HPC market will grow from $32 billion to $45 billion between 2017 and 2022.

/ photo Global Access Point PD

Similar solutions

Comparable solutions are being developed by other large IT companies, namely AMD and Intel.

AMD bases its GPU virtualization on SR-IOV (single-root input/output virtualization). This technology gives VMs access to part of the system's hardware capabilities. The solution can split a graphics processor among 16 users, with equal performance for each virtualized system.

Intel's technology is based on the Citrix XenServer 7 hypervisor. It combines a standard GPU driver with a virtual machine, which lets the VM deliver 3D applications and desktops to the devices of hundreds of users.

Future technology

Virtual GPU developers are betting on the adoption of AI systems and the growing popularity of high-performance solutions in the business technology market. They expect the need to process large volumes of data to drive demand for vGPUs.

Manufacturers are now looking for a way to combine CPU and GPU functionality in a single core to speed up graphics, mathematical calculations, logical operations, and data processing. If such cores reach the market, they will change the approach to virtualizing resources and distributing them among workloads in virtual and cloud environments.

What to read on the topic in our corporate blog:

- Why companies use virtual machines, not containers

- “How is VMware doing?”: Overview of New Solutions

- VMware vSphere 6.7: How New Hypervisor Features Are Beneficial
