Binary format reverse engineering using Korg SNG files as an example. Part 2

In the previous article, I described my line of reasoning when parsing an unknown binary data format. Using the hex editor Synalyze It!, I showed how to parse the header of a binary file and mark up its main data blocks. Since in the SNG format these blocks form a hierarchy, I was able to use recursion in the grammar to build a human-readable tree view of them automatically.

In this article, I will describe a similar approach that I used to parse the music data itself. Using the built-in features of the hex editor, I will create a prototype converter to the common and simple MIDI format. Along the way we will run into a number of pitfalls and puzzle over the seemingly simple task of converting time values. Finally, I'll explain how the results and the grammar of the binary file can be used to generate part of the code for the future converter.

Parsing music data

So, it's time to figure out how music data is stored in .SNG files. I touched on this in the previous article. The synthesizer documentation states that an SNG file can contain up to 128 “songs”, each of which consists of 16 tracks and one master track (for recording global events and changes to master effects). Unlike the MIDI format, where music events simply follow one another with a specific time delta, the SNG format is organized into musical measures.

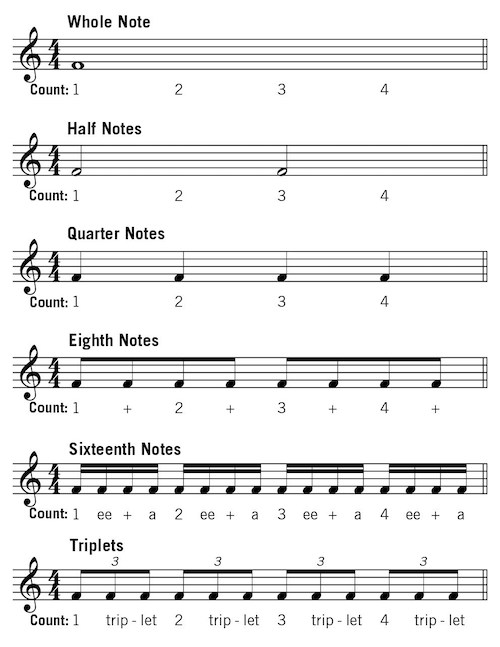

A measure is a kind of container for a sequence of notes. Its size is given by the time signature. For example, 4/4 means that the measure contains 4 beats, each equal in duration to a quarter note. Simply put, such a measure holds 4 quarter notes, or 2 half notes, or 8 eighth notes.

Here's how it looks in musical notation:

Measures in the SNG file are used for editing tracks in the synthesizer's built-in sequencer. Through the menu, you can delete, add and duplicate measures anywhere in a track. You can also loop measures or change their time signature. Finally, you can simply start recording a track from any measure.

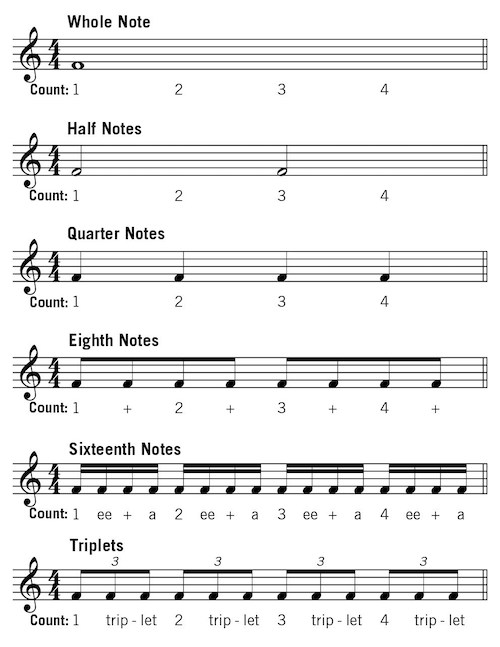

Let's see how all this is stored in the binary file. The common container for "songs" is the SGS1 block. The data of each song is stored in SDT1 blocks:

The SPR1 and BMT1 blocks store the general settings of the song (tempo, metronome settings) and the settings of individual tracks (patches, effects, arpeggiator settings, etc.). We are interested in the TRK1 block, which contains the music events. But we need to go down a couple more levels of the hierarchy, to the MTK1 block.

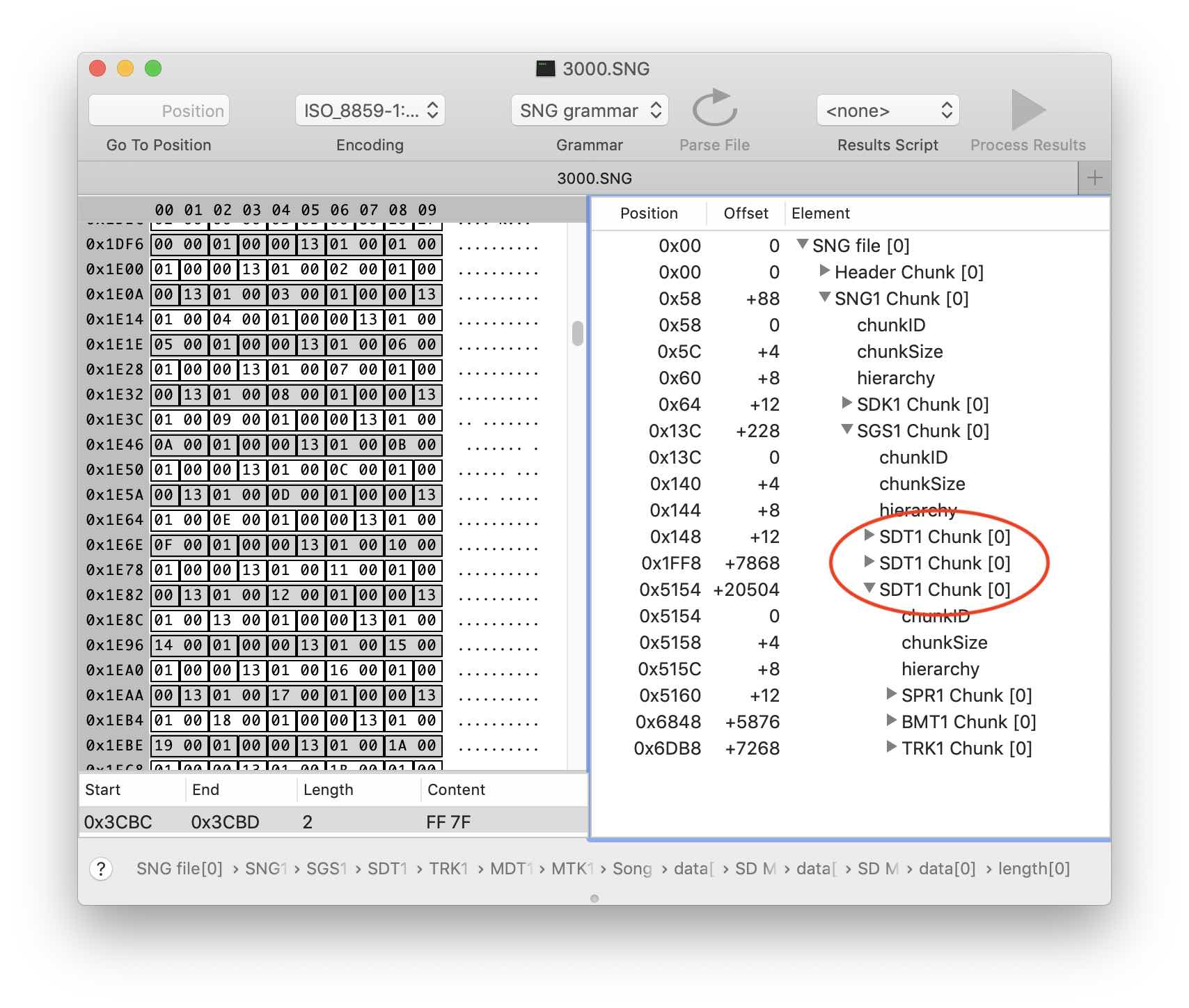

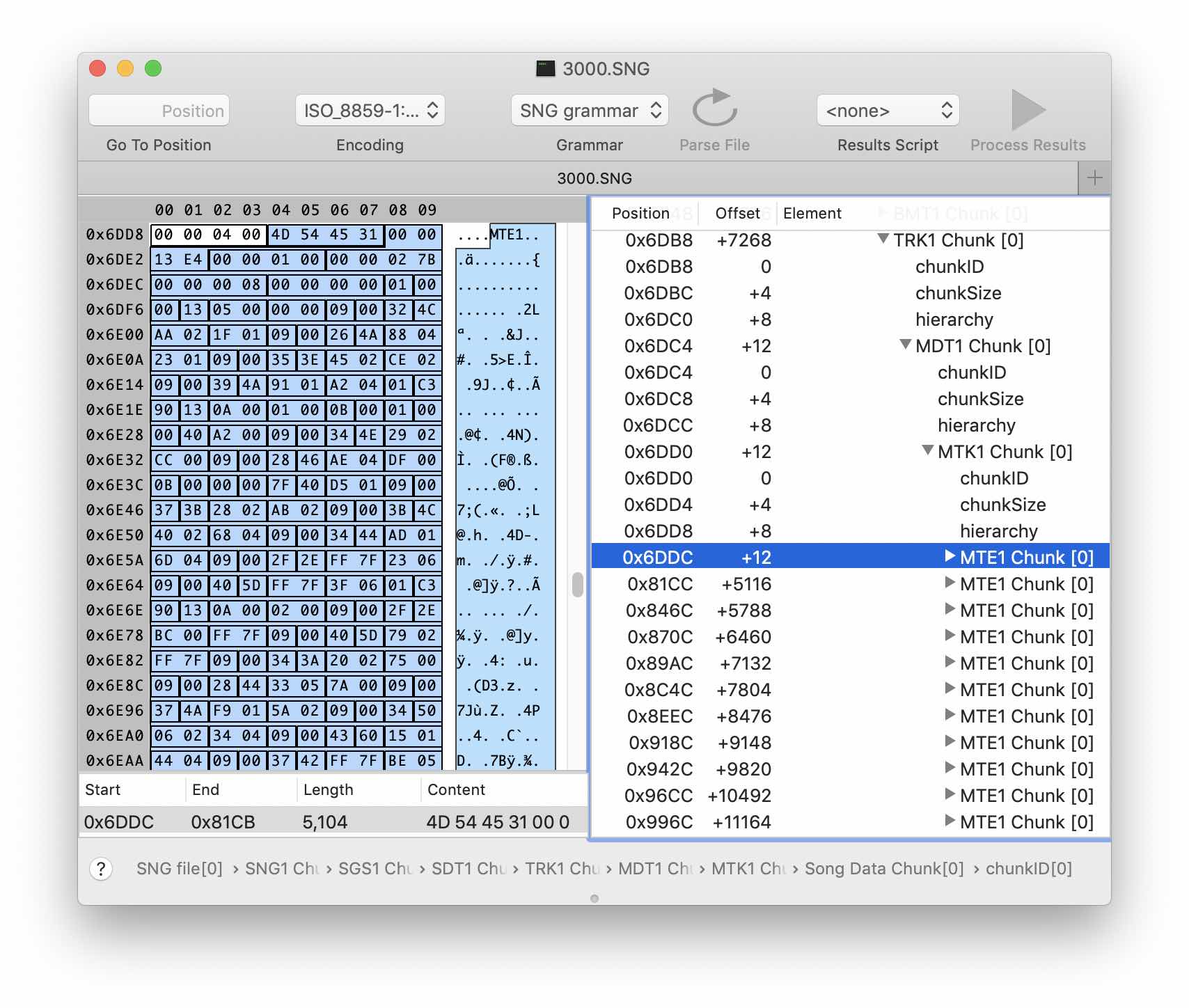

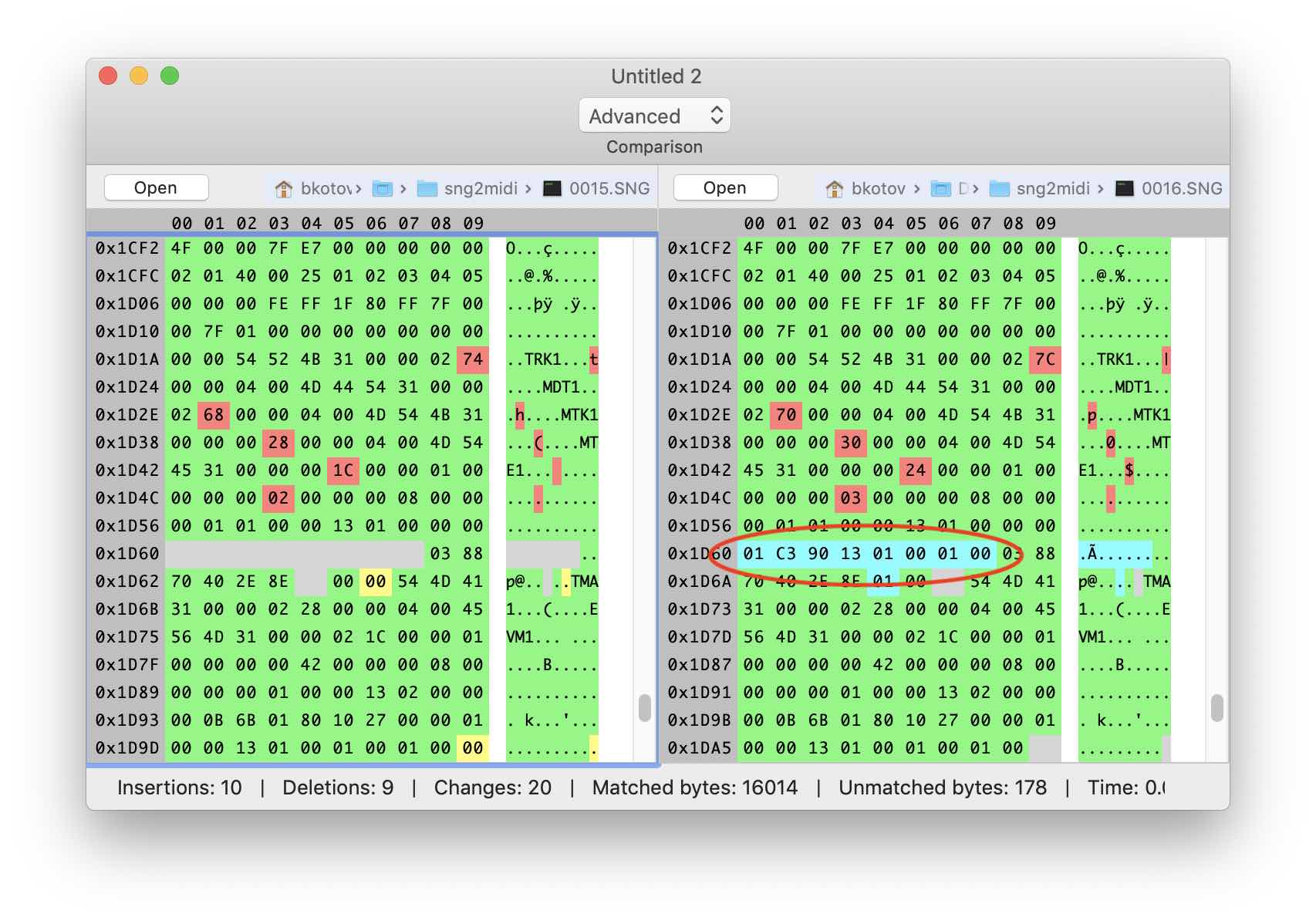

Finally, we have found our tracks: they are the MTE1 blocks. Let's record a short empty track on the synthesizer and another, slightly longer one, to understand how information about measures is stored in binary form.

It seems that measures are stored as eight-byte structures. Let's add a couple of notes:

So, we can assume that all events are stored in the same form. The beginning of the MTE1 block contains some still unknown information, followed by a sequence of eight-byte structures that runs to the end of the block. Open the grammar editor and create an event structure with a size of 8 bytes.

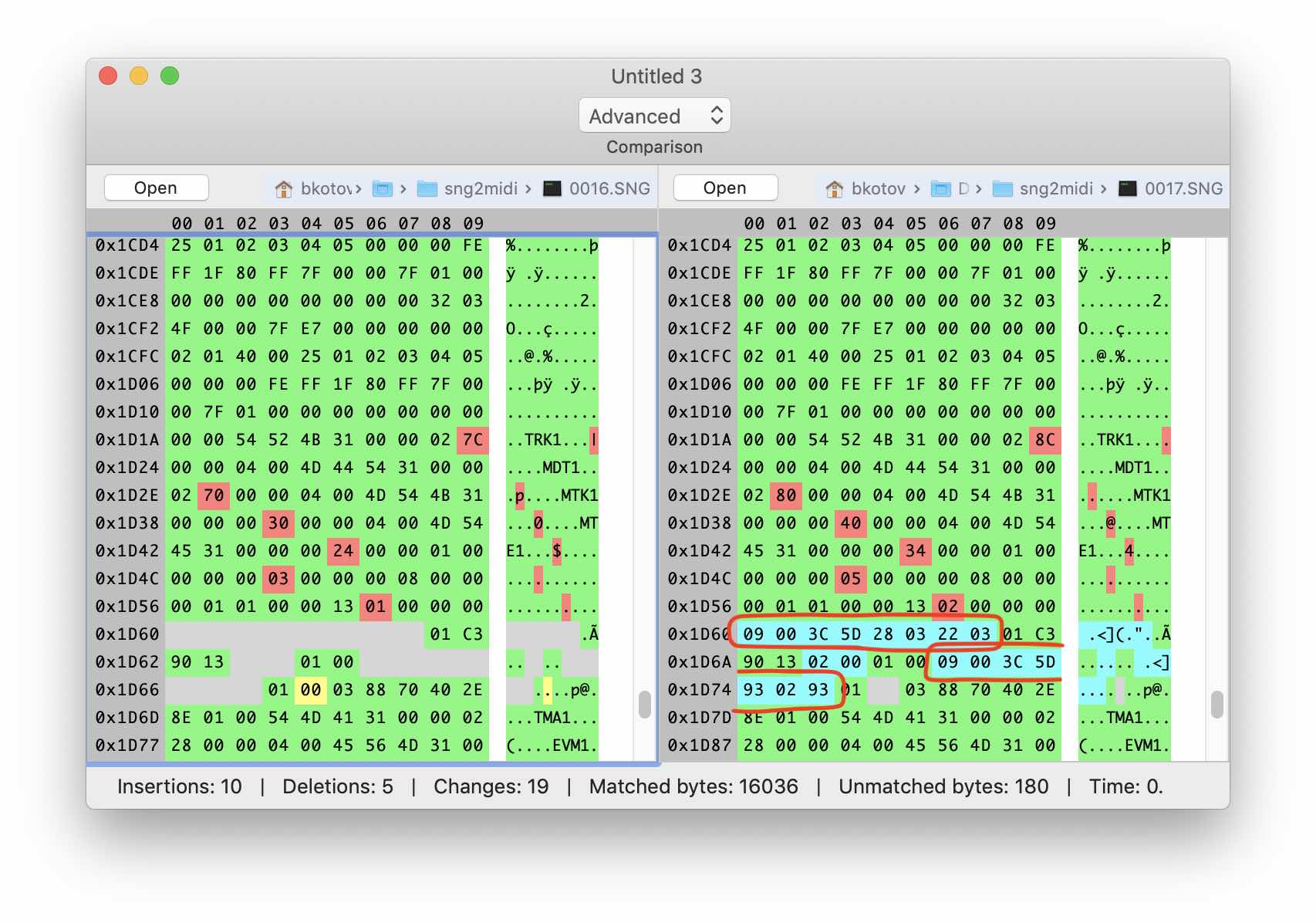

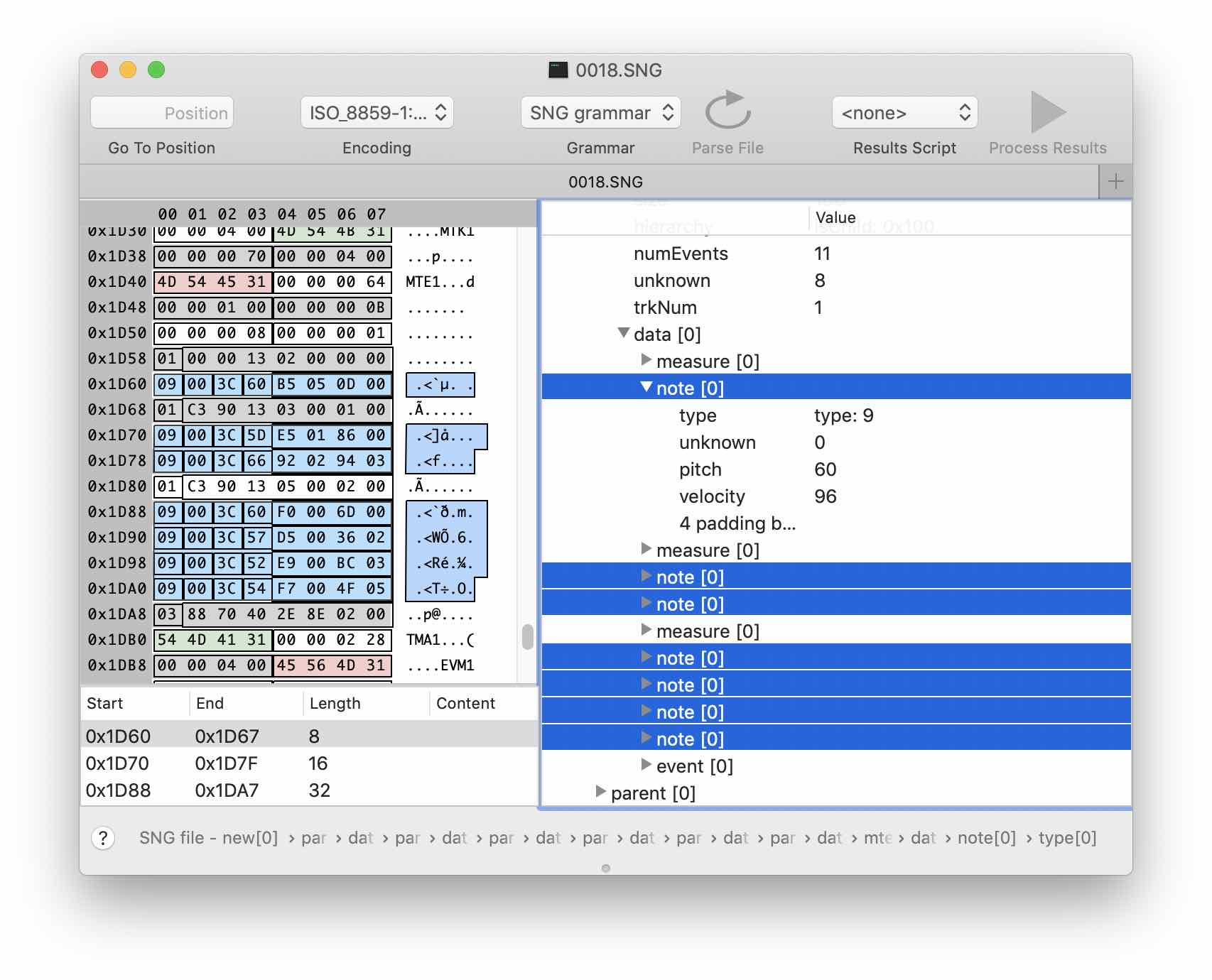

Add an mte1Chunk structure that inherits from childChunk and place a reference to event in its data structure. We specify that event can repeat an unlimited number of times. Next, through experiments, we work out the size and purpose of the few bytes that precede the track's event stream. I got the following:

The beginning of the MTE1 block stores the number of events in the track, the track number and, presumably, the size of an event. After applying the grammar, the block looks like this:

Let's move on to the event stream. After analyzing several files with different sequences of notes, the following picture emerges:

| # | Type | Binary representation |
|---|---|---|
| 1 | Measure 1 | 01 00 00 ... |
| 2 | Note | 09 00 3C ... |
| 3 | Note | 09 00 3C ... |
| 4 | Note | 09 00 3C ... |
| 5 | Measure 2 | 01 C3 90 ... |
| 6 | Note | 09 00 3C ... |
| 7 | End of Track | 03 88 70 ... |

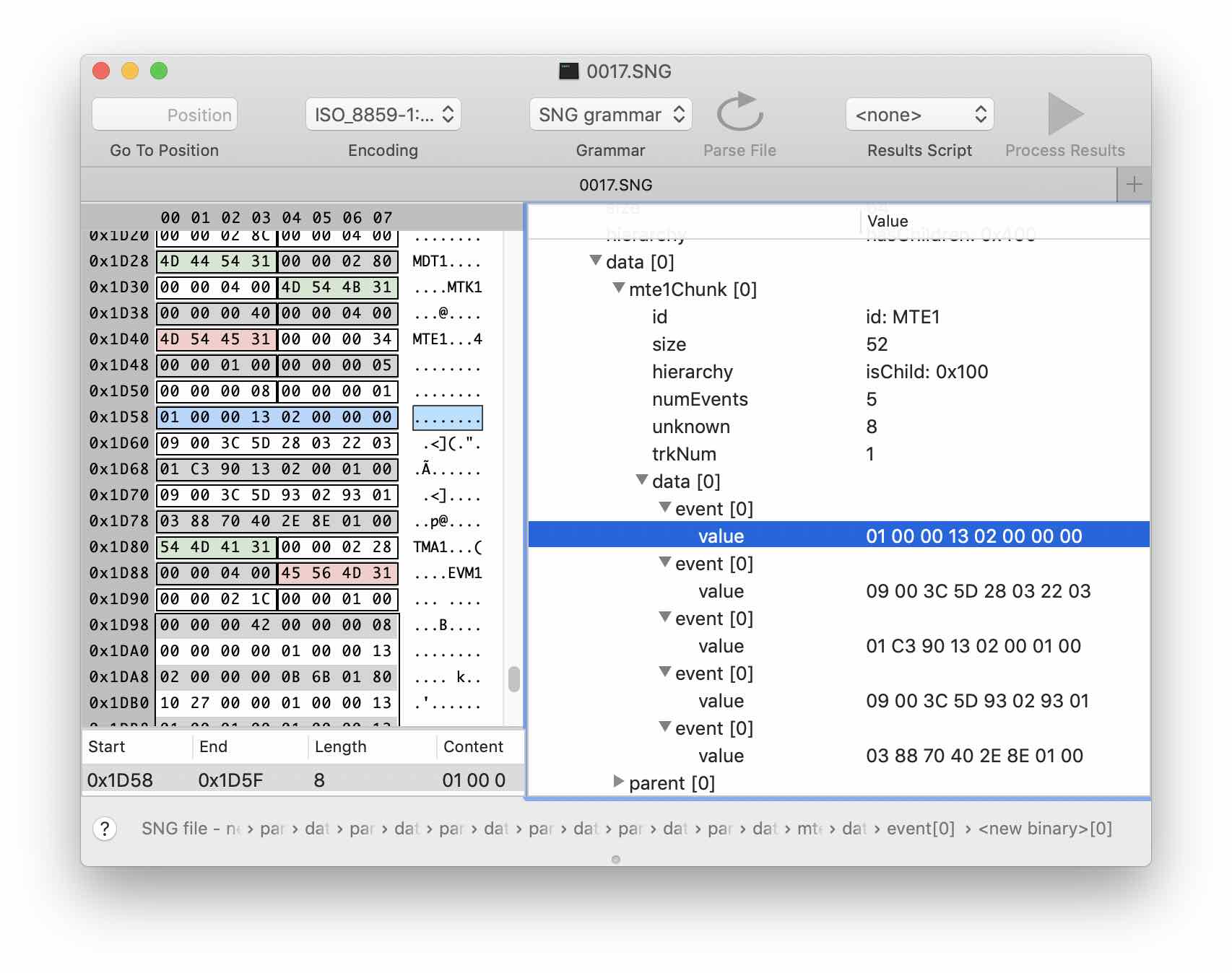

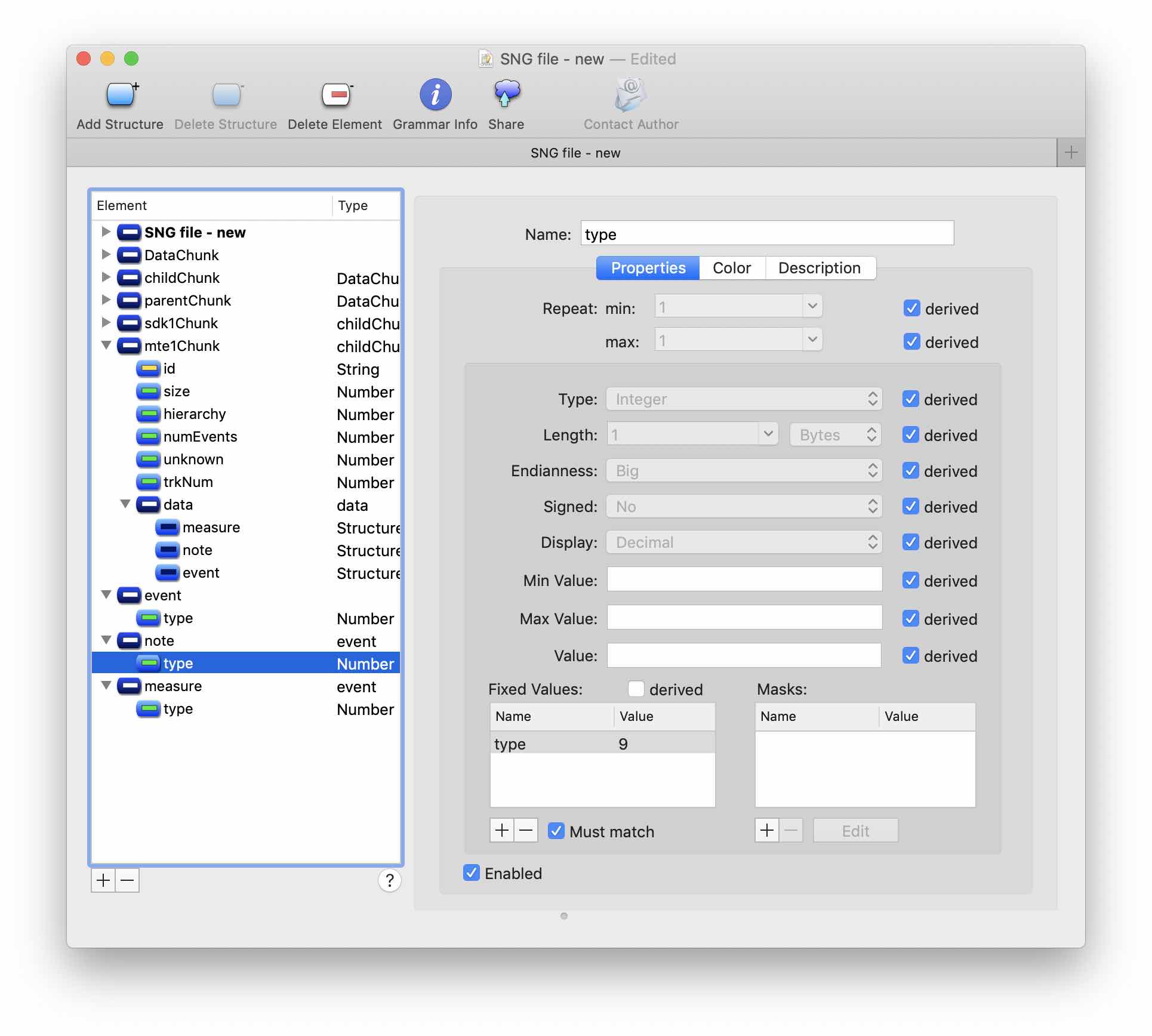

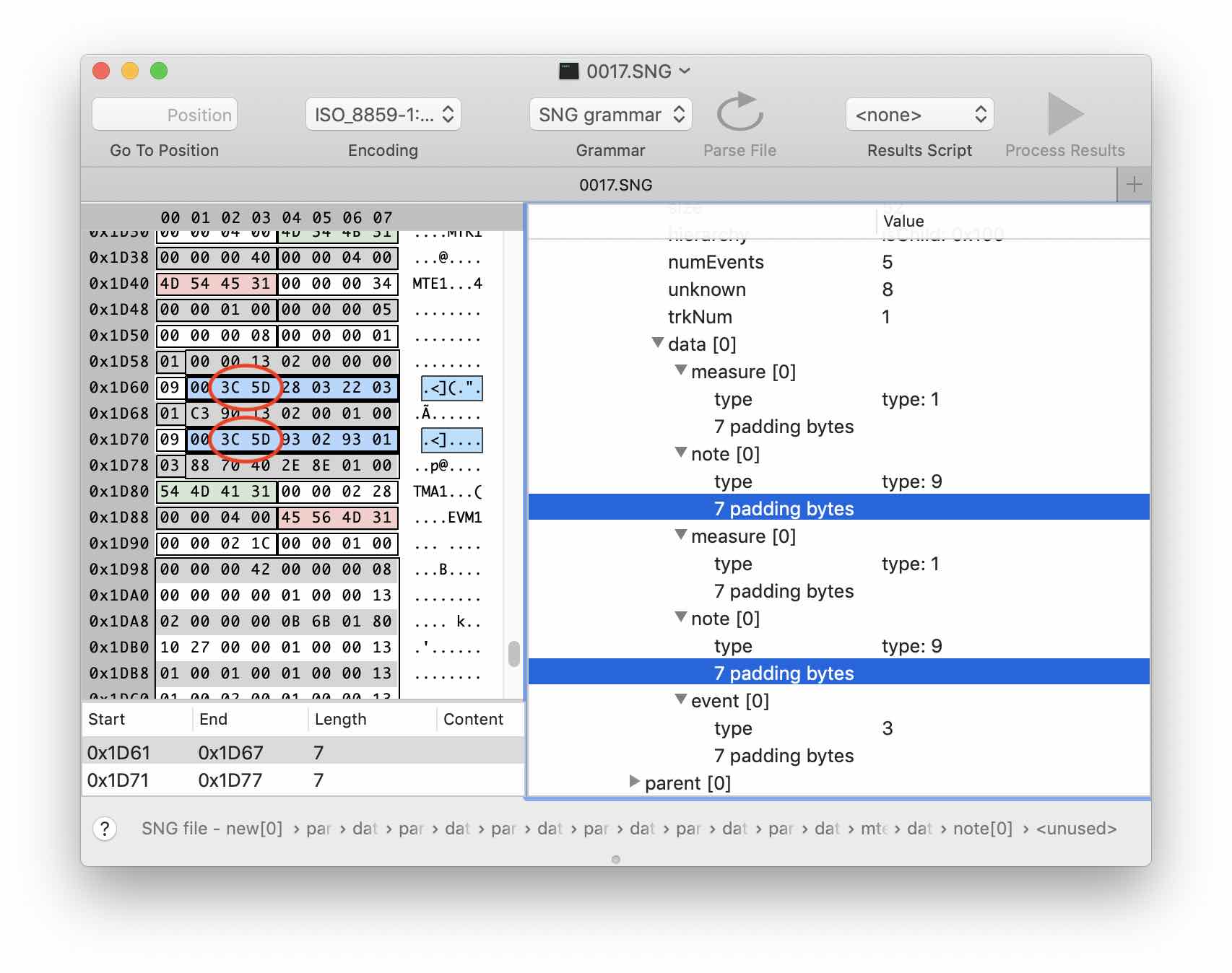

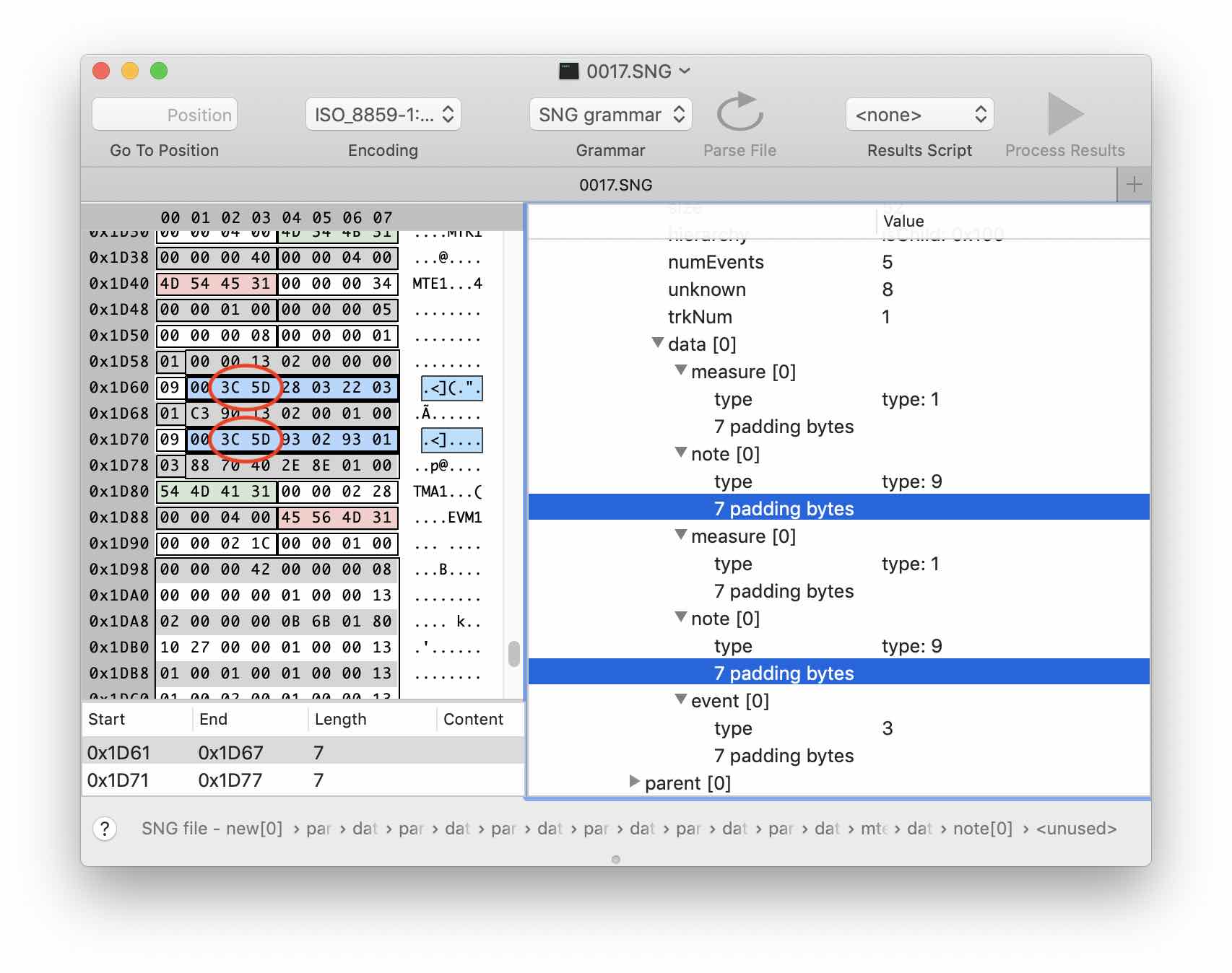

It looks like the first byte encodes the event type. Add a type field to the event structure. Let's create two more structures that inherit from event: measure and note. We set the corresponding Fixed Values for each of them. Finally, add references to these structures to the data of the mte1Chunk block.

Apply the changes:

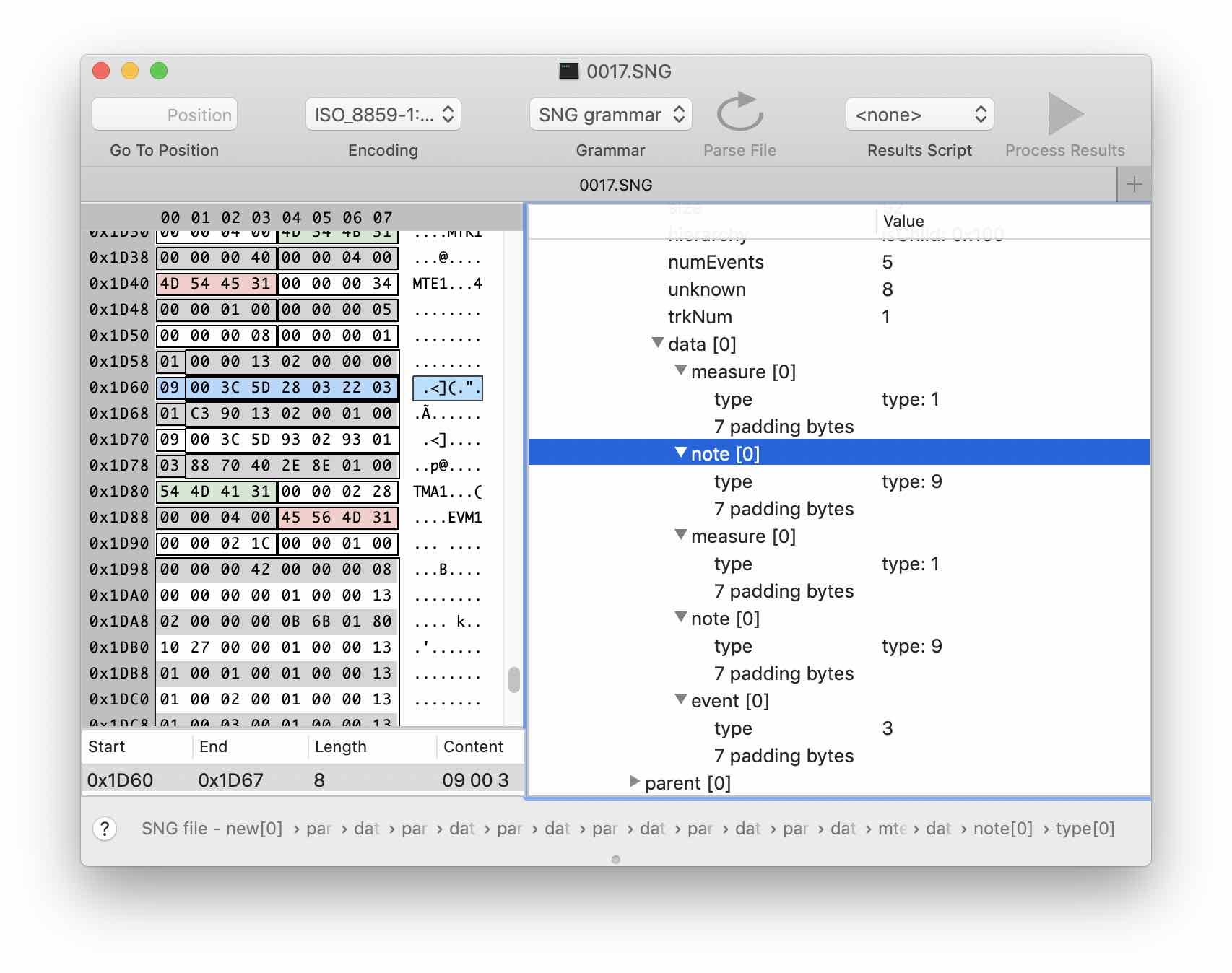

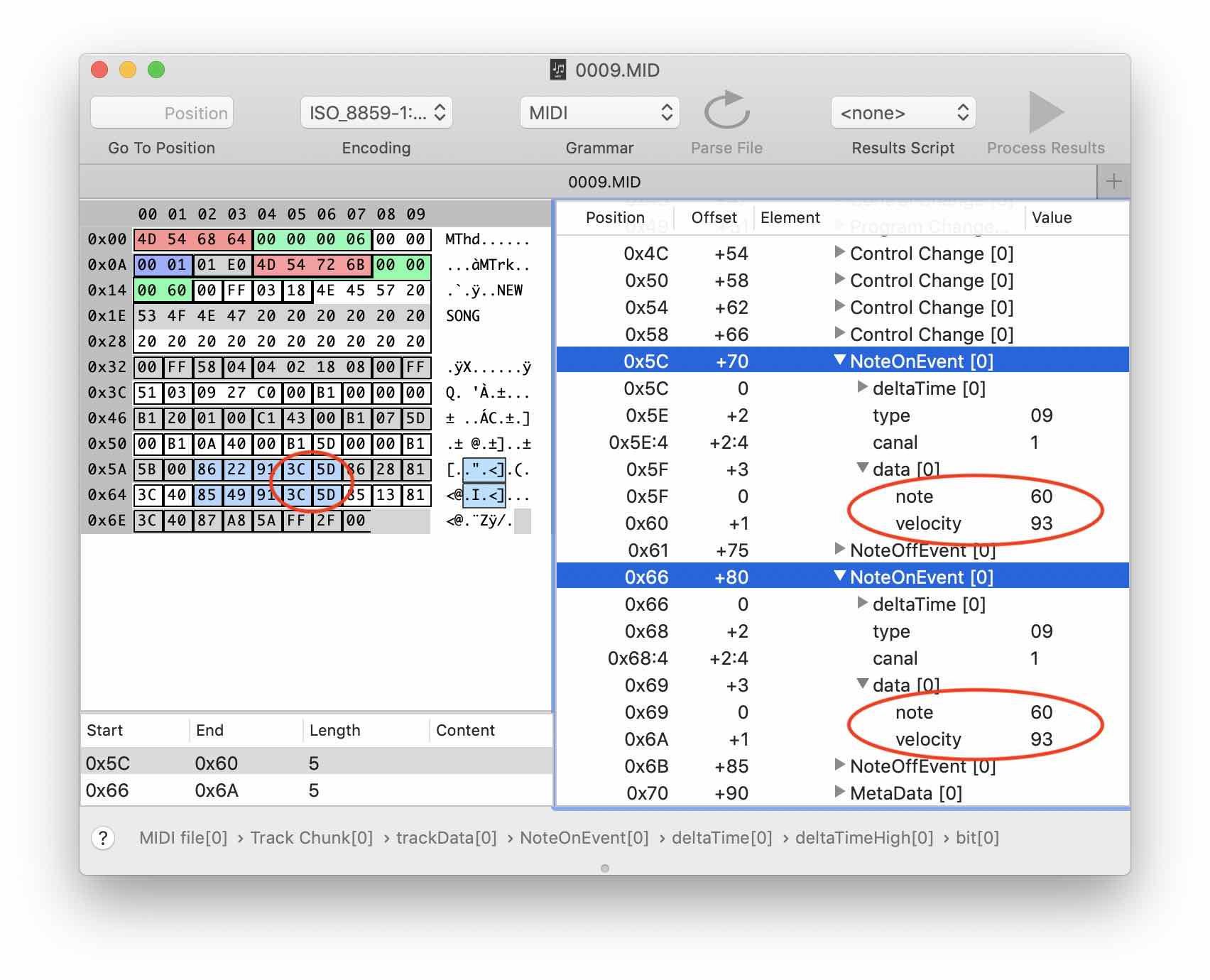
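The observation that the first byte of each eight-byte record encodes the event type can be sketched in Python roughly as follows. This is only an illustration: it assumes the MTE1 header has already been stripped (its exact size is not stated here), the names `iter_events` and `EVENT_NAMES` are hypothetical, and only the three type bytes seen in the table are recognized.

```python
from typing import Iterator, Tuple

EVENT_SIZE = 8  # every record in the event stream appears to be 8 bytes long

# Type bytes observed in the table; any other value is reported as "unknown".
EVENT_NAMES = {0x01: "measure", 0x09: "note", 0x03: "end_of_track"}


def iter_events(stream: bytes) -> Iterator[Tuple[str, bytes]]:
    """Yield (name, raw_record) for each complete 8-byte record in the stream."""
    usable = len(stream) - len(stream) % EVENT_SIZE  # ignore a trailing partial record
    for offset in range(0, usable, EVENT_SIZE):
        record = stream[offset:offset + EVENT_SIZE]
        yield EVENT_NAMES.get(record[0], "unknown"), record


# Made-up sample: only the first three bytes of each record come from the table,
# the remaining five bytes are zero padding added for illustration.
sample = bytes.fromhex(
    "0100000000000000"
    "09003c0000000000"
    "0388700000000000"
)
kinds = [name for name, _ in iter_events(sample)]
print(kinds)
```

A lookup table keyed by the type byte mirrors the Fixed Values used in the grammar: each derived structure is selected by the value of the first byte.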

Well, we have made good progress. It remains to understand how the pitch and velocity of a note are encoded, as well as the time offset of each event relative to the others. Let's once again compare our files with the result of the MIDI export done through the synthesizer menu. This time we are specifically interested in the note-on events.

The same events in the SNG file:

Excellent! It seems that the pitch and velocity of the notes are encoded exactly as in the MIDI format, with just a couple of bytes. Add the appropriate fields to the grammar.

Unfortunately, things are not so simple with the time offset.

Dealing with duration and delta

In the MIDI format, the NoteOn and NoteOff events are separate. The duration of a note is determined by the delta time between these events. In the SNG format, which has no analogue of the NoteOff event, the duration and time delta values must be stored in a single structure.

To understand how they are stored, I recorded several sequences of notes of different durations on the synthesizer.

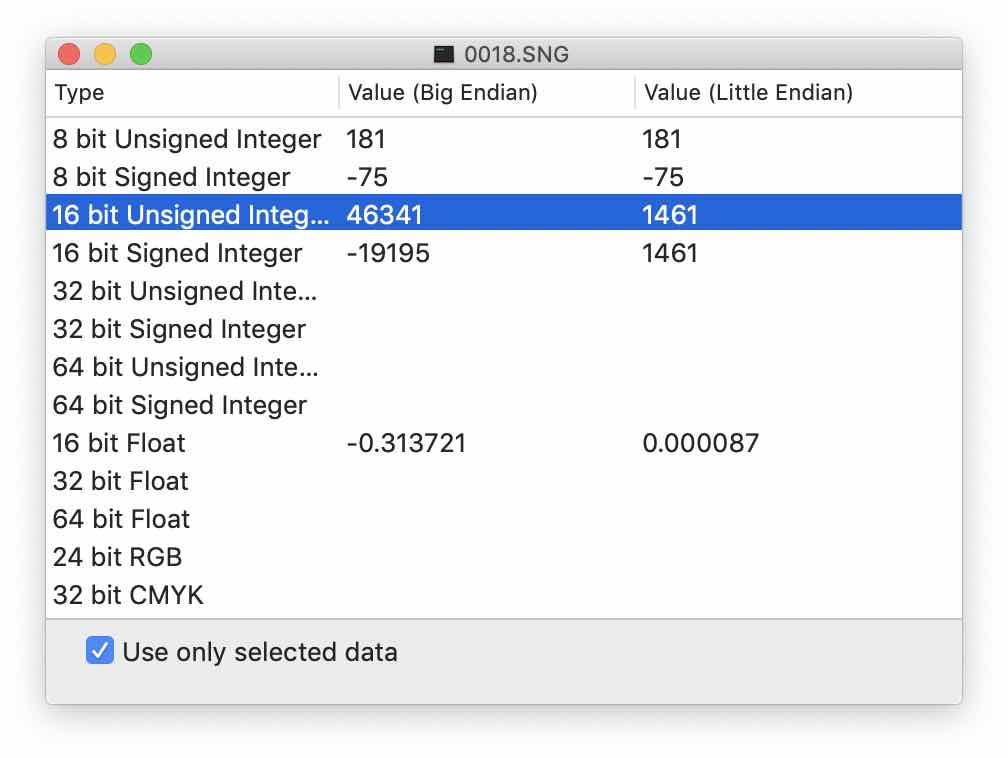

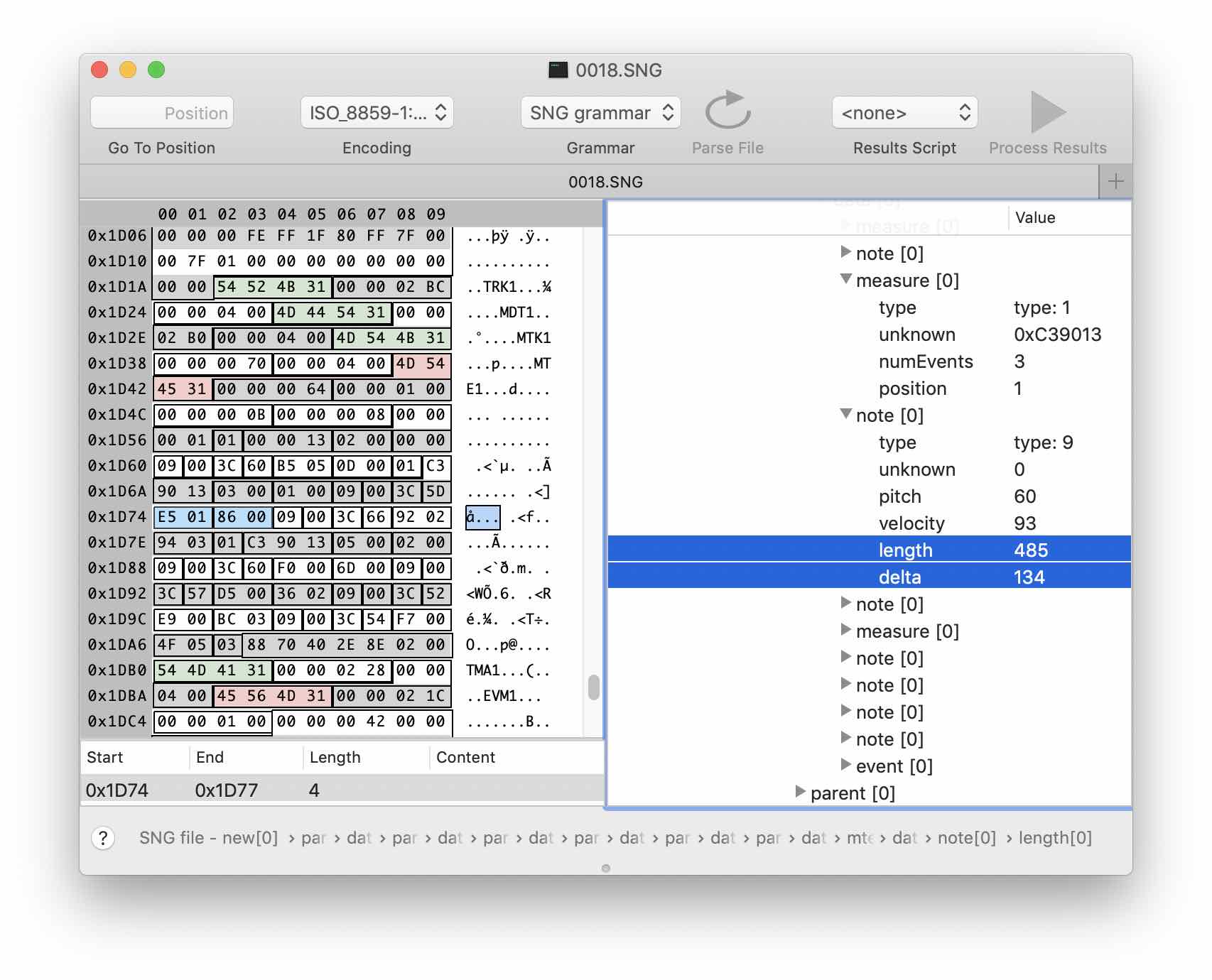

Obviously, the data we need is in the last 4 bytes of the event structure. The pattern is not visible to the naked eye, so we select the bytes of interest in the editor and use the Data Panel tool.


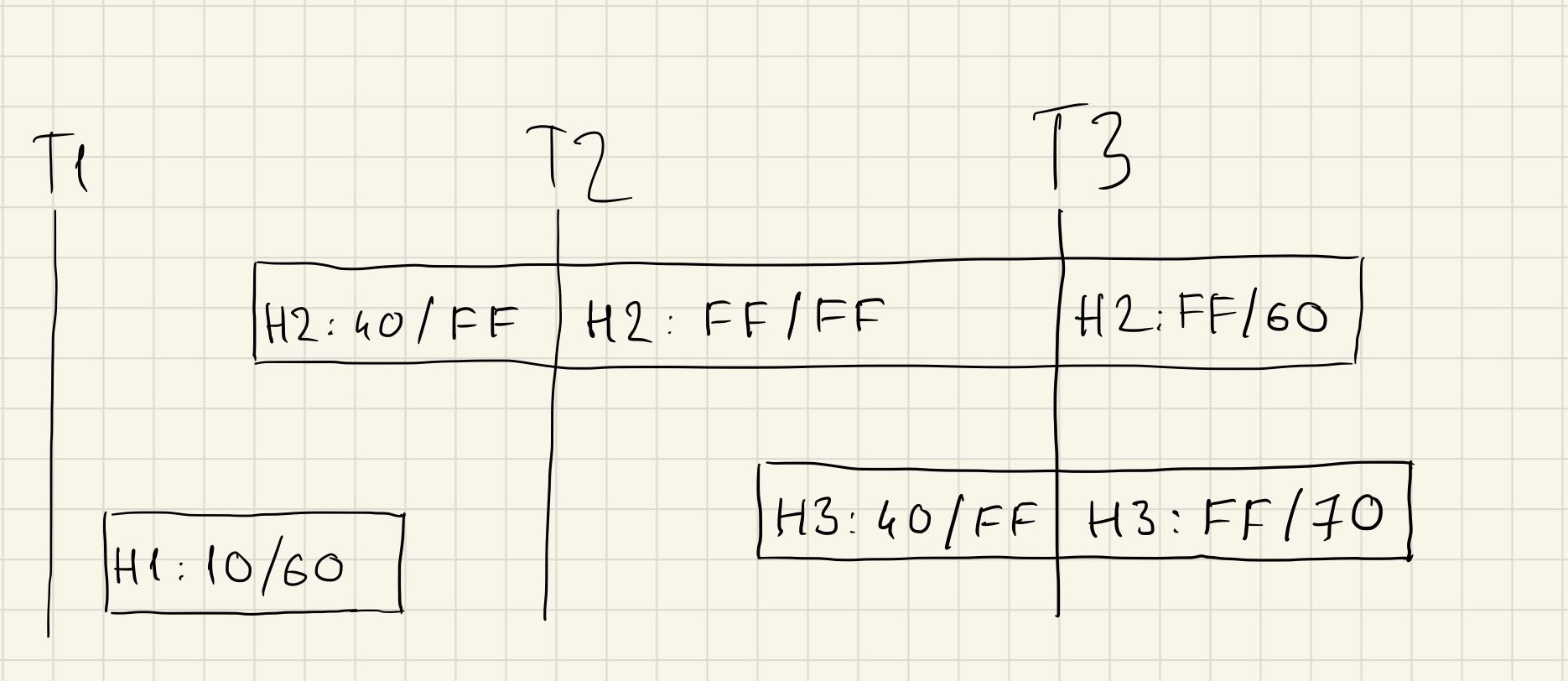

Apparently, both the duration of the note and the time offset are encoded as a pair of bytes each (UInt16). The byte order is reversed, i.e. little-endian. Having compared a sufficient amount of data, I found that the time delta here is counted not from the previous event, as in MIDI, but from the beginning of the measure. If a note ends in the next measure, its duration in the current measure is 0x7fff, and in the next measure it is repeated with a delta of 0x7fff and a duration counted from the beginning of the new measure. Correspondingly, if a note sounds for several measures, then in each intermediate measure both its duration and its delta are equal to 0x7fff.

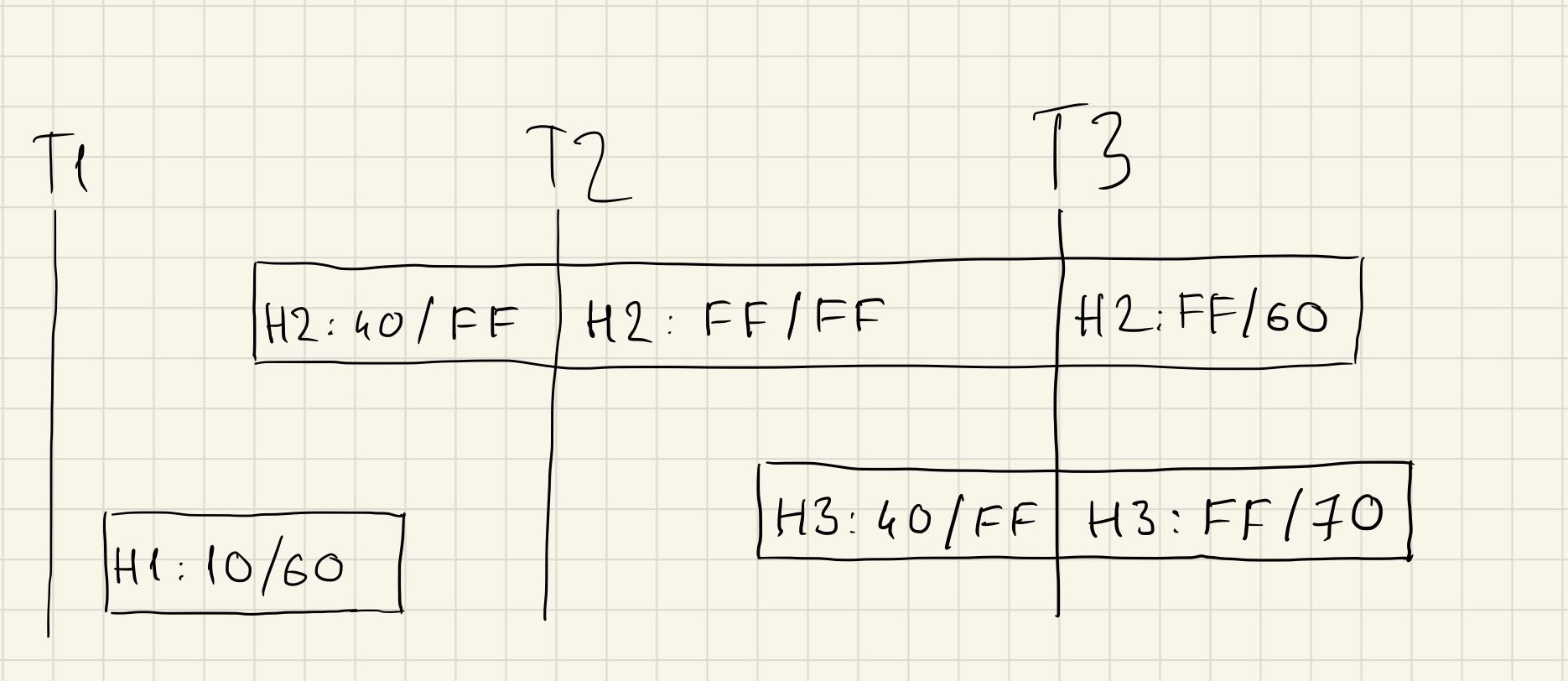

A small diagram

In the diagram, time delta and duration are measured in grid cells. Note 1 is an ordinary note, while Note 2 continues to sound through the 2nd and 3rd measures.


In my opinion, all this looks like a bit of a hack. On the other hand, in musical notation, notes that sound continuously across several measures are written in a similar way, using ties.
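The note layout worked out so far can be sketched with Python's `struct` module. The field order is an assumption made for illustration: the pitch at offset 2 matches the `09 00 3C` records in the table, while the velocity at offset 3 and the order of the two little-endian UInt16 values (duration first, then delta) are guesses, not something confirmed above. The names `Note`, `decode_note` and `TIED` are hypothetical.

```python
import struct
from typing import NamedTuple

TIED = 0x7FFF  # marker for a note that crosses a measure boundary


class Note(NamedTuple):
    pitch: int
    velocity: int
    duration: int  # ticks, or TIED if the note continues into the next measure
    delta: int     # ticks from the measure start, or TIED if continued from the previous one


def decode_note(record: bytes) -> Note:
    """Decode one 8-byte note record under the assumed field layout."""
    if len(record) != 8 or record[0] != 0x09:
        raise ValueError("not a note record")
    # Assumed layout: type, unknown byte, pitch, velocity, duration (LE), delta (LE).
    _type, _unknown, pitch, velocity, duration, delta = struct.unpack("<BBBBHH", record)
    return Note(pitch, velocity, duration, delta)


# Made-up record: middle C, velocity 100, duration 480 ticks, continued from the previous measure.
note = decode_note(bytes.fromhex("09003c64e001ff7f"))
print(note, "continued from previous measure:", note.delta == TIED)
```

If the real field order turns out to be different, only the format string and the tuple unpacking need to change.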

In what units is the duration measured? As in MIDI, ticks are used here. The documentation says that one beat lasts 480 ticks. At a tempo of 100 beats per minute in 4/4 time, a quarter note lasts 60 / 100 = 0.6 seconds. Accordingly, one tick lasts 0.6 / 480 = 0.00125 seconds. A standard 4/4 measure lasts 4 * 480 = 1920 ticks, or 2.4 seconds at 100 bpm.
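The arithmetic above is easy to wrap in two small helpers. This is a minimal sketch: the function names are hypothetical, and `measure_ticks` assumes that the 480-tick resolution refers to a quarter note, so that other time signatures scale from it.

```python
TICKS_PER_BEAT = 480  # resolution quoted from the synthesizer documentation


def tick_seconds(bpm: float) -> float:
    """Length of one tick in seconds at the given tempo (quarter-note beats per minute)."""
    return 60.0 / bpm / TICKS_PER_BEAT


def measure_ticks(beats: int, beat_unit: int = 4) -> int:
    """Number of ticks in one measure of a beats/beat_unit time signature."""
    # A quarter note is 480 ticks, so a whole note is 4 * 480 ticks.
    return beats * (4 * TICKS_PER_BEAT) // beat_unit


ticks = measure_ticks(4, 4)
print(ticks, "ticks per 4/4 measure")
print(f"{ticks * tick_seconds(100):.2f} seconds at 100 bpm")
```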

All this will come in handy later. In the meantime, add the duration and delta to our note structure. Also note that the measure structure has a field that stores the number of events in the measure. Another field contains the measure's serial number. Add them both to the measure structure.

Converter prototype

Now we have enough information to try converting the data. The Pro version of the hex editor Synalyze It! lets you write scripts in Python or Lua. When creating a script, you need to decide what it will work with: the grammar itself, individual files on disk, or the parsed data. Unfortunately, each of the templates has its limitations. The program provides a number of classes and methods, but not all of them are accessible from every template. Perhaps this is a gap in the documentation, but I did not find a way to load a grammar for a list of files, parse them and use the resulting structures to export data.

Therefore, we will create a script to work with the result of parsing the current file. This template implements three methods: init, terminate, and processResult. The latter is called automatically and recursively passes through all the structures and data received during parsing.

To write the converted data as MIDI, we use the Python MIDI toolkit (https://github.com/vishnubob/python-midi). Since this is a proof of concept, we will not convert note durations and deltas. Instead, we set fixed values. Notes with a duration or a delta of 0x7fff are simply discarded for now.

The capabilities of the built-in script editor are very limited, so all the code will have to be placed in one file.

gist.github.com/bkotov/71d7dfafebfe775616c4bd17d6ddfe7b
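The linked script runs inside the editor and relies on the python-midi toolkit. For readers who just want to see the idea in isolation, here is an independent, standard-library-only sketch of the same proof of concept: every note gets the same fixed length and tied notes are dropped. It is not the author's script. The `(pitch, velocity, duration, delta)` tuple shape, the 240-tick placeholder length and the function names are assumptions made for illustration.

```python
import struct
from typing import Iterable, Tuple

FIXED_TICKS = 240  # placeholder note length, an arbitrary choice for the prototype
TIED = 0x7FFF      # notes carrying this marker are skipped


def vlq(value: int) -> bytes:
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def fixed_length_midi(notes: Iterable[Tuple[int, int, int, int]], ppq: int = 480) -> bytes:
    """Build a format-0 MIDI file from (pitch, velocity, duration, delta) tuples."""
    track = bytearray()
    for pitch, velocity, duration, delta in notes:
        if duration == TIED or delta == TIED:
            continue  # tied notes are dropped in this prototype
        track += vlq(0) + bytes([0x90, pitch, velocity])     # note on, first channel
        track += vlq(FIXED_TICKS) + bytes([0x80, pitch, 0])  # note off after a fixed time
    track += vlq(0) + b"\xff\x2f\x00"                        # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ppq)    # format 0, one track
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)


# The second note is tied into the next measure, so it is skipped.
data = fixed_length_midi([(60, 100, 480, 0), (62, 100, TIED, 960)])
print(len(data), "bytes")
```

Writing `data` to a file with a `.mid` extension should give something a MIDI player can open, with all real timing information deliberately thrown away.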

So, let's try to convert the file and listen to what we got.

Hmm... It turned out rather interesting. The first thing that came to mind when I tried to put into words what it sounded like was “structureless music”. I'll try to give a definition: structureless music is a piece of music with a reduced structure, built on harmony alone. Note durations and the intervals between notes are removed or reduced to the same value.

A sort of harmonious noise. Let's call it pearl noise (by analogy with white, blue, red, pink and so on); it seems nobody has taken that name yet.

Perhaps it is worth training a neural network on my data; the result might be interesting.

A warm-up puzzle

This is all wonderful, but the main problem is still not solved. We need to convert the note durations to NoteOff events, and the time offset of the event relative to the start of the measure to a time delta between adjacent events. I will try to formulate the conditions of the problem more formally.

Task

There is a stream of music events of the following form:

MeasureStart1

Note1

Note2

Note3

...

NoteN

MeasureStart2

...

MeasureStartN

Note1

...

EndOfTrack

The MeasureStart event can be described by the structure

eventType: 1

measureDuration: 1920

measureNumber: Int

eventsInMeasure: Int

The Note event is described by the structure

eventType: 9

pitch: 0-127

velocity: 0-127

duration: 0-1920 or 0xFF

timeFromMeasureStart: 0-1920 or 0xFF

If a note starts in the current measure and ends in the next one, its duration in the current measure is 0xFF, and in the next measure the note is duplicated with timeFromMeasureStart = 0xFF and a duration counted from the start of the new measure. If a note sounds across more than two measures, it is duplicated in every intermediate measure. In that case, every duplicate in the intermediate measures has timeFromMeasureStart = duration = 0xFF.

Different notes can sound simultaneously.

The data must be converted into a stream of MIDI events in a single pass. These events are described by the structures:

NoteOn:

eventType: 9

pitch: 0-127

velocity: 0-127

timeSincePreviousEvent: Int

NoteOff:

eventType: 8

pitch: 0-127

velocity: 0-127

timeSincePreviousEvent: Int

The task is a little simplified. In a real SNG file, each measure can have a different time signature. In addition to Note On / Off events, other events will also occur in the stream, for example, pressing the sustain pedal or changing the pitch using pitchBend.
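For readers who want something to compare their own attempt against, here is one possible single-pass approach in Python. It is not the author's solution. It uses a min-heap of pending note-off times, and it assumes that within a measure the records are ordered by start time, with continuation records coming first. The tuple shapes and names are hypothetical, and the tie marker is taken as 0x7FFF, the value found in the file format (the task statement abbreviates it as 0xFF).

```python
import heapq
from typing import Iterable, List, Tuple

TIED = 0x7FFF  # "crosses the measure boundary" marker (0xFF in the task statement)

# Input events: ("measure", length) | ("note", pitch, velocity, duration, offset) | ("end",)
MidiEvent = Tuple[str, int, int, int]  # (kind, pitch, velocity, ticks since previous event)


def to_midi_events(stream: Iterable[tuple]) -> List[MidiEvent]:
    out: List[MidiEvent] = []
    pending: List[Tuple[int, int]] = []  # min-heap of (absolute note-off time, pitch)
    now = 0            # absolute time of the last emitted event
    measure_start = 0  # absolute time of the current measure
    next_start = 0     # absolute time at which the next measure begins

    def emit(kind: str, pitch: int, velocity: int, at: int) -> None:
        nonlocal now
        out.append((kind, pitch, velocity, at - now))
        now = at

    def flush(until: float) -> None:
        # Emit every scheduled note-off that is due at or before `until`.
        while pending and pending[0][0] <= until:
            at, pitch = heapq.heappop(pending)
            emit("off", pitch, 0, at)

    for event in stream:
        if event[0] == "measure":
            measure_start = next_start
            next_start = measure_start + event[1]
        elif event[0] == "note":
            _, pitch, velocity, duration, offset = event
            if offset != TIED:                # the note starts in this measure
                start = measure_start + offset
                flush(start)
                emit("on", pitch, velocity, start)
            else:                             # continuation of an earlier note
                start = measure_start
            if duration != TIED:              # the note ends in this measure
                heapq.heappush(pending, (start + duration, pitch))
        elif event[0] == "end":
            break
    flush(float("inf"))
    return out


demo = to_midi_events([
    ("measure", 1920), ("note", 60, 100, 480, 0), ("note", 64, 90, TIED, 960),
    ("measure", 1920), ("note", 64, 90, TIED, TIED),
    ("measure", 1920), ("note", 64, 90, 480, TIED), ("note", 67, 80, 240, 960),
    ("end",),
])
for midi_event in demo:
    print(midi_event)
```

The heap keeps note-offs sorted without buffering the whole track, and flushing before each note-on puts a note-off that falls on the same tick ahead of the new note. Other event types and per-measure time signatures are left out, as in the simplified task.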

I will give my solution to this problem in the next article (if there is one).

Current results

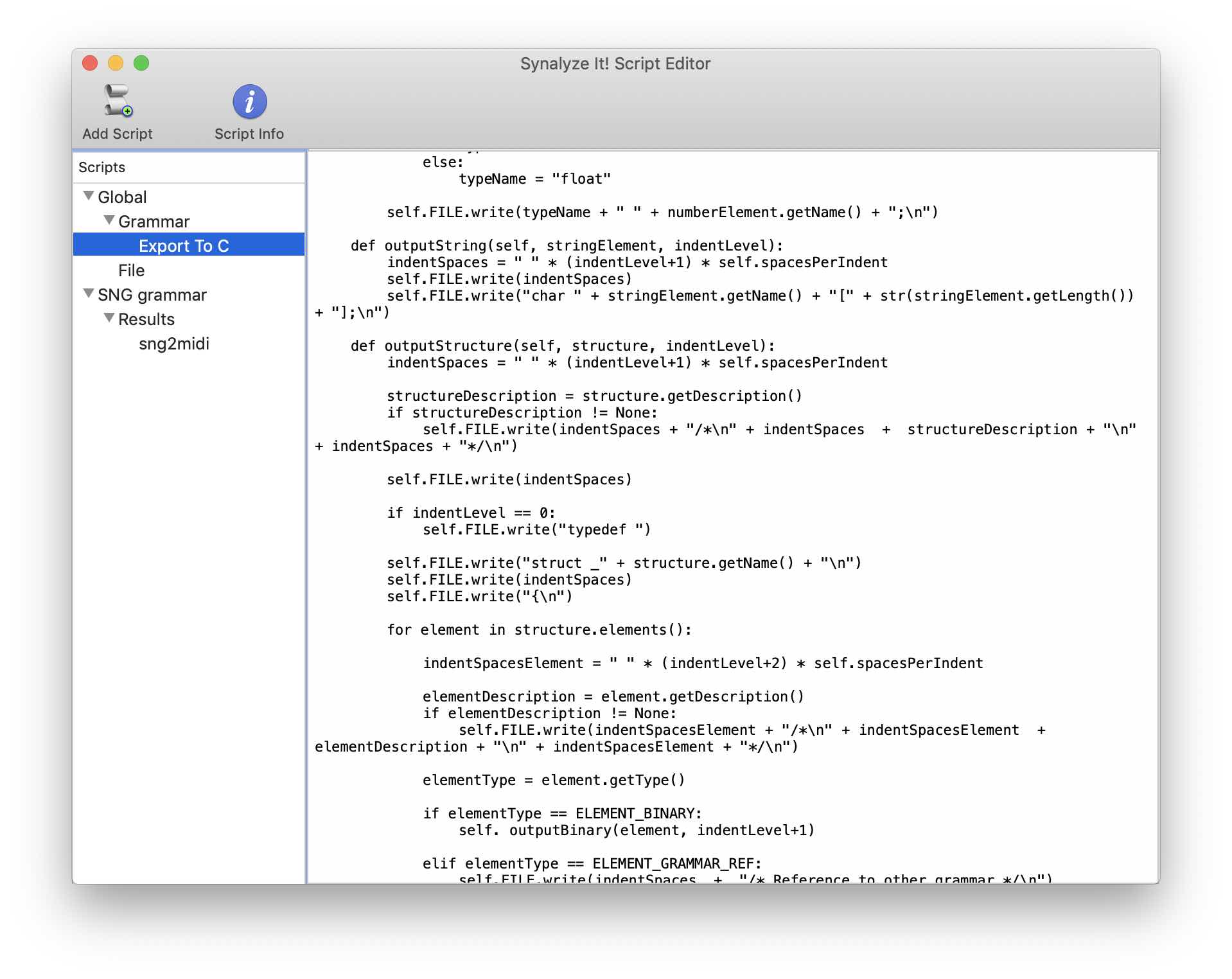

Since the script-based solution does not scale to an arbitrary number of files, I decided to write a console converter in Swift. If I were writing a two-way converter, the grammar structures I created would come in handy in the code. They can be exported as C structures, or as code in any other language, using the same scripting functionality built into Synalyze It! A file with an example of such an export is created automatically when you select the Grammar template.

At the moment, the converter is 99% complete (in a form that suits me in terms of functionality). I plan to put the code and the grammar on GitHub.

You can listen to the example that started all this here.

And this is how the finished piece sounds.