BERT, a state-of-the-art language model for 104 languages: a tutorial on running it locally and on Google Colab

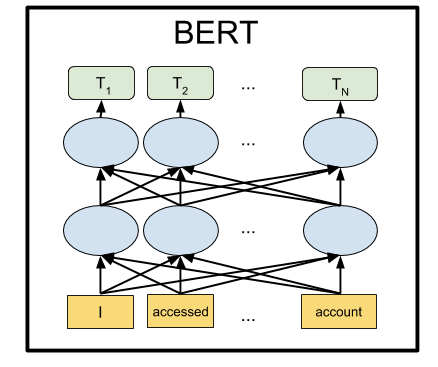

BERT is a neural network from Google that achieved state-of-the-art results, by a wide margin, on a number of tasks. With BERT you can build natural language processing programs: answer free-form questions, create chatbots and automatic translators, analyze text, and so on.

Google has published pre-trained BERT models, but, as is often the case in machine learning, they suffer from a lack of documentation. So in this tutorial we will learn how to run the BERT neural network on a local computer, as well as on a free server GPU in Google Colab.

Why you need it

To feed text into a neural network, you have to represent it as numbers somehow. The simplest way is letter by letter, giving one letter to each input of the network. Each letter is then encoded with a number from 0 to 32 (plus some margin for punctuation marks). This is the so-called character level.

Much better results are obtained if each input of the network receives a whole word (or at least a syllable) rather than a single letter. This is the word level. The simplest option is to compile a dictionary of all existing words and feed the network the word's number in that dictionary. For example, if the word "dog" is at position 1678 in the dictionary, the network input for this word is the number 1678.
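As a rough illustration of the word-level idea, here is a minimal sketch that builds a tiny dictionary and replaces each word with its number. The two-sentence corpus and the `encode` helper are made up for this example; a real dictionary would be built from a far larger body of text.

```python
# Toy corpus (an assumption for illustration only).
corpus = ["the dog chased the cat", "the cat saw the dog"]

# Give each distinct word the next free index, in order of first appearance.
vocab = {}
for sentence in corpus:
    for word in sentence.split():
        if word not in vocab:
            vocab[word] = len(vocab)

def encode(sentence):
    """Replace every word with its index in the dictionary."""
    return [vocab[word] for word in sentence.split()]

print(vocab)
print(encode("the dog saw the cat"))
```

Note that the indices here carry no meaning: neighbouring numbers say nothing about whether the words are related.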

But in natural language the word "dog" immediately brings many associations to a person's mind: "fluffy", "angry", "man's friend". Can this feature of our thinking be encoded somehow in the representation for a neural network? It turns out it can. It is enough to renumber the words so that words close in meaning sit next to each other. Say, 1678 for "dog" and 1680 for "fluffy", but 9000 for "teapot". As you can see, 1678 and 1680 are much closer to each other than either is to 9000.

In practice, each word is assigned not one number but several: a vector of, say, 32 numbers. Distances are then measured between the points these vectors indicate in a space of the corresponding dimension (for a vector of 32 numbers, a space with 32 dimensions, or 32 axes). This lets one word be close to several different words at once, depending on which axis you look along. Moreover, you can do arithmetic with the vectors. A classic example: take the vector for the word "king", subtract the vector for "man" and add the vector for "woman", and you get some result vector. Miraculously, it corresponds to the word "queen". And indeed, "king - man + woman = queen". Magic! This is not an abstract example; it really happens. Since neural networks are well suited to mathematical transformations of their inputs, this is apparently what makes the method so effective.
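The "king - man + woman" arithmetic can be tried on a toy example. The sketch below uses hand-made 2-dimensional vectors, not real trained embeddings: the axes ("royalty" and "gender") and all the numbers are assumptions chosen so the effect is easy to see.

```python
import math

# Hand-made toy vectors (NOT trained embeddings).
# Axis 0 is "royalty", axis 1 is "gender" (+1 male, -1 female).
vectors = {
    "king":   [1.0,  1.0],
    "queen":  [1.0, -1.0],
    "man":    [0.0,  1.0],
    "woman":  [0.0, -1.0],
    "teapot": [-1.0, 0.0],
}

def nearest(target, exclude=()):
    """Return the word whose vector is closest to target (Euclidean distance)."""
    candidates = (w for w in vectors if w not in exclude)
    return min(candidates, key=lambda w: math.dist(vectors[w], target))

# king - man + woman, computed component by component
result = [k - m + w for k, m, w in
          zip(vectors["king"], vectors["man"], vectors["woman"])]

print(result, nearest(result, exclude=("king", "man", "woman")))
```

With real embeddings the result vector rarely lands exactly on a word, which is why the nearest neighbour is looked up instead of testing for equality.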

This approach is called embeddings. Machine learning packages such as TensorFlow and PyTorch let you put a special Embedding layer first in the network, which does this automatically. That is, we feed the network the ordinary number of a word in the dictionary, and the Embedding layer, through training, learns to translate each word into a vector of the specified length, say 32 numbers.
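Under the hood, an embedding layer is essentially a lookup table with one row per word. Here is a framework-free sketch of that idea; the table sizes are arbitrary, and the random numbers stand in for values that a real layer would adjust during training.

```python
import random

vocab_size = 5      # number of words in the toy dictionary (assumption)
embedding_dim = 4   # vector length; the text above uses 32 as an example

random.seed(0)
# The embedding table: one row of embedding_dim numbers per word index.
# In a real network these start out random and are tuned by training.
embedding_table = [
    [random.uniform(-1.0, 1.0) for _ in range(embedding_dim)]
    for _ in range(vocab_size)
]

def embed(word_ids):
    """Look up the vector for every word index in the sequence."""
    return [embedding_table[i] for i in word_ids]

vectors = embed([0, 1, 4, 0, 3])
print(len(vectors), "vectors of length", len(vectors[0]))
```

The same word index always maps to the same row, so repeated words get identical vectors.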

But people quickly realized that it is far more effective to train such a vector representation of words in advance on some huge corpus of texts, for example the whole of Wikipedia, and then to use the ready-made word vectors in specific neural networks rather than train them anew every time.

There are several ways to represent words as vectors, and they have evolved gradually: word2vec, GloVe, ELMo.

In the summer of 2018, OpenAI noticed that if you pre-train a neural network with the Transformer architecture on large volumes of text, it unexpectedly shows excellent results, by a large margin, on a variety of natural language processing tasks. In effect, such a network produces vector representations of words, and even of whole phrases, at its output. By attaching a small block of a couple of additional layers of neurons on top of such a language model, you can further train the network for any task.

BERT from Google is an improved version of the OpenAI GPT network (bidirectional instead of unidirectional, and so on), also built on the Transformer architecture. At the moment, BERT is state of the art on almost all popular NLP benchmarks.

How they did it

The idea behind BERT is very simple: feed the neural network phrases in which 15% of the words are replaced by [MASK], and train the network to predict these masked words.

For example, if we feed the network the phrase "I came to [MASK] and bought [MASK]", it should output the words "store" and "milk". This is a simplified example from the official BERT page; on longer sentences the range of possible options narrows, and the network's answer becomes unambiguous.
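To make the masking step concrete, here is a simplified sketch of preparing one training example. The `mask_tokens` helper and its parameters are invented for this illustration, and it only does plain replacement by [MASK]; the real BERT pre-training procedure is somewhat more elaborate (for instance, some selected tokens are left unchanged or swapped for random ones).

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace about mask_rate of the tokens with [MASK].

    Returns the masked token list and a dict {position: hidden word}
    that the network would be trained to predict.
    """
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = masked[pos]
        masked[pos] = "[MASK]"
    return masked, targets

tokens = "I came to the store and bought milk for my cat today".split()
masked, targets = mask_tokens(tokens)
print(masked)
print(targets)
```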

To teach the network to understand the relationship between different sentences, we additionally train it to predict whether the second phrase is a logical continuation of the first, or just some random phrase that has nothing to do with it.

So, for the two sentences "I went to the store." and "And bought milk there.", the network should answer that the continuation is logical. And if the second phrase is "Cruc's sky Pluto", it should answer that this sentence has nothing to do with the first. Below we will play with both of these BERT modes.
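A hedged sketch of how training pairs for this second task could be assembled from consecutive sentences: about half of the pairs keep the true next sentence, and the rest get a random one. The `make_nsp_pairs` helper and the sample sentences are assumptions for illustration, not the code used to train BERT.

```python
import random

def make_nsp_pairs(sentences, seed=0):
    """Build (first, second, is_next) examples from a list of sentences.

    Needs at least three sentences so a random replacement always exists.
    """
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            # Keep the real continuation.
            pairs.append((sentences[i], sentences[i + 1], True))
        else:
            # Pick any sentence except the first one and its true continuation.
            choices = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(choices), False))
    return pairs

sentences = [
    "I went to the store.",
    "And bought milk there.",
    "Then I walked home.",
    "It started to rain.",
]
pairs = make_nsp_pairs(sentences)
for first, second, is_next in pairs:
    print(first, "->", second, "| logical:", is_next)
```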

Training the network this way on a corpus of texts from Wikipedia and the BookCorpus book collection for 4 days on 16 TPUs produced BERT.

Installation and Setup

Note: in this section we will run and play with BERT on a local computer. To run this neural network on a local GPU, you will need an NVIDIA GTX 970 with 4 GB of video memory or better. If you just want to run BERT in a browser (you don't even need a GPU in your computer for that), skip to the Google Colab section.

First, install TensorFlow if you don't have it yet, following the instructions at https://www.tensorflow.org/install . For GPU support, first install CUDA Toolkit 9.0, then cuDNN SDK 7.2, and only then TensorFlow itself with GPU support:

pip install tensorflow-gpu

In principle, this is enough to run BERT. But there are no instructions as such; you would have to work them out yourself from the source code in run_classifier.py (the usual situation in machine learning, where you dig into the source instead of reading documentation). We will take an easier route and use the Keras BERT wrapper (it may also come in handy later for fine-tuning the network, because it provides a convenient Keras interface).

To do this, install Keras itself:

pip install keras

And then Keras BERT:

pip install keras-bert

We also need the tokenization.py file from the original BERT repository on GitHub. Either click the Raw button and save it to the folder with the future script, or download the entire repository and take the file from there, or take a copy from the repository with this tutorial's code, https://github.com/blade1780/bert .

Now it is time to download the pre-trained neural network. There are several BERT variants, all listed on the official page github.com/google-research/bert . We will take the universal multilingual "BERT-Base, Multilingual Cased" model for 104 languages. Download the multi_cased_L-12_H-768_A-12.zip file (632 MB) and unzip it into the folder with the future script.

Everything is ready. Create a file BERT.py; a bit of code follows.

Import the required libraries and set the paths:

# coding: utf-8
import sys
import codecs
import numpy as np
from keras_bert import load_trained_model_from_checkpoint
import tokenization

# folder where the pre-trained BERT network was unpacked
folder = 'multi_cased_L-12_H-768_A-12'
config_path = folder+'/bert_config.json'
checkpoint_path = folder+'/bert_model.ckpt'
vocab_path = folder+'/vocab.txt'

Since we have to convert ordinary strings of text into a special token format, we create a dedicated object for this. Note do_lower_case=False: we are using the Cased BERT model, which is case sensitive.

tokenizer = tokenization.FullTokenizer(vocab_file=vocab_path, do_lower_case=False)

Load the model:

model = load_trained_model_from_checkpoint(config_path, checkpoint_path, training=True)
model.summary()

BERT can work in two modes: guessing the words missing from a phrase, or guessing whether the second phrase logically follows the first. We will try both.

For the first mode, the phrase is fed to the neural network in this format:

[CLS] Я пришел в [MASK] и купил [MASK]. [SEP]

(In English: "I came to [MASK] and bought [MASK].") The neural network should return the full sentence with words filled in at the mask positions: "I came to the store and bought milk."

For the second mode, both phrases, divided by a separator, are fed to the network:

[CLS] Я пришел в магазин. [SEP] И купил молоко. [SEP]

(In English: "I came to the store." and "And bought milk.") The neural network must answer whether the second phrase is a logical continuation of the first, or a random phrase that has nothing to do with it.

For BERT to work, you need to prepare three vectors, each 512 numbers long: token_input, seg_input and mask_input.

token_input stores our source text converted into tokens by the tokenizer. The phrase, as indices in the dictionary, sits at the beginning of this vector, and the rest is filled with zeros.

In mask_input, we put 1 at every position where a [MASK] token is located and fill the rest with zeros.

In seg_input, we mark the first phrase (including the initial [CLS] and the [SEP] separator) with 0, the second phrase (including the final [SEP]) with 1, and fill the rest of the vector with zeros.
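The layout of the three vectors can be summarized in one small helper. This is only a sketch: `build_inputs` is a made-up function, the token ids in the usage example are placeholders, and the ids assumed for [CLS] (101) and [SEP] (102) should be checked against vocab.txt. The id 103 for [MASK] is the one this tutorial uses further on.

```python
MAX_LEN = 512   # input length expected by the network
MASK_ID = 103   # index of [MASK] in vocab.txt

def build_inputs(first_ids, second_ids=None):
    """Build token_input, seg_input and mask_input from token ids.

    first_ids must already include [CLS] and the first [SEP];
    second_ids (optional) must include the final [SEP].
    """
    second_ids = second_ids or []
    ids = first_ids + second_ids
    if len(ids) > MAX_LEN:
        raise ValueError("phrase is longer than the network input")
    pad = MAX_LEN - len(ids)
    token_input = ids + [0] * pad
    seg_input = [0] * len(first_ids) + [1] * len(second_ids) + [0] * pad
    mask_input = [1 if t == MASK_ID else 0 for t in token_input]
    return token_input, seg_input, mask_input

# Placeholder ids: 101 = [CLS], 102 = [SEP] (assumed), 5..8 = ordinary tokens.
tok, seg, mask = build_inputs([101, 5, 103, 6, 103, 102])
print(tok[:8], seg[:8], mask[:8])

tok2, seg2, mask2 = build_inputs([101, 5, 6, 102], [7, 8, 102])
print(tok2[:8], seg2[:8], mask2[:8])
```

Deriving mask_input from the token ids keeps the two vectors consistent; for the second mode, with no [MASK] tokens in the text, it comes out as all zeros automatically.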

BERT's dictionary consists not of whole words but of the most common word fragments (roughly, syllables), although it contains whole words too. You can open the vocab.txt file of the downloaded network and see which tokens the network accepts at its input. There are whole words, for example "France", but most Russian words have to be split into pieces. Thus the word "пришел" ("came") is split into "при" and "##шел". To convert ordinary text strings into the format BERT requires, we will use the tokenization.py module.
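The splitting of a word into pieces can be sketched as a greedy longest-match search over the dictionary, which is the general approach of WordPiece-style tokenizers. The `wordpiece` function and the five-entry toy dictionary below are assumptions for illustration; the real vocab.txt is far larger, and tokenization.py also handles punctuation, casing and other details.

```python
def wordpiece(word, vocab, unk="[UNK]"):
    """Split one word into the longest pieces found in vocab, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a ## prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1  # try a shorter piece
        if piece is None:
            return [unk]  # nothing fits: the whole word is unknown
        pieces.append(piece)
        start = end
    return pieces

# Toy dictionary (an assumption; not the contents of the real vocab.txt).
toy_vocab = {"France", "при", "##шел", "##ш", "##ел"}
print(wordpiece("France", toy_vocab))
print(wordpiece("пришел", toy_vocab))
```

Because the longest match wins, "пришел" becomes "при" + "##шел" rather than three shorter pieces, even though "##ш" and "##ел" are also in the toy dictionary.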

Mode 1: predicting the words hidden behind [MASK] tokens

The input phrase to be fed to the neural network:

sentence = 'Я пришел в [MASK] и купил [MASK].'
print(sentence)

Convert it to tokens. The problem is that the tokenizer cannot handle service tokens like [CLS] and [MASK], although they are in the vocab.txt dictionary. So we have to split our string manually at the [MASK] markers and extract the pieces of plain text in order to convert them to BERT tokens with the tokenizer. We also add [CLS] to the beginning and [SEP] to the end of the phrase.

# remove extra spaces around the masks
sentence = sentence.replace(' [MASK] ','[MASK]')
sentence = sentence.replace('[MASK] ','[MASK]')
sentence = sentence.replace(' [MASK]','[MASK]')
sentence = sentence.split('[MASK]')  # split the string at the masks
tokens = ['[CLS]']  # a phrase must always start with [CLS]
# convert the plain strings to tokens with tokenizer.tokenize(), inserting [MASK] between them
for i in range(len(sentence)):
    if i == 0:
        tokens = tokens + tokenizer.tokenize(sentence[i])
    else:
        tokens = tokens + ['[MASK]'] + tokenizer.tokenize(sentence[i])
tokens = tokens + ['[SEP]']  # a phrase must always end with [SEP]

Now tokens contains only tokens that are guaranteed to be in the dictionary, so they can be converted to indices. Let's do that:

token_input = tokenizer.convert_tokens_to_ids(tokens)

token_input now holds a series of numbers (the numbers of the tokens in the vocab.txt dictionary) to be fed to the neural network. All that remains is to extend this vector to 512 elements. The Python construct [0] * length creates a list of the given length filled with zeros. We simply add it to our tokens, which in Python joins two lists into one.

token_input = token_input + [0] * (512 - len(token_input))

Now we create the mask vector, also 512 long, putting 1 wherever the number 103 appears in the tokens (103 is the index of the [MASK] marker in the vocab.txt dictionary) and 0 everywhere else:

mask_input = [0]*512
for i in range(len(mask_input)):
    if token_input[i] == 103:
        mask_input[i] = 1

For the first BERT mode, seg_input must be filled entirely with zeros:

seg_input = [0]*512

The last step is to convert the Python lists into numpy arrays of shape (1, 512), for which we wrap each of them in an outer list []:

token_input = np.asarray([token_input])
mask_input = np.asarray([mask_input])
seg_input = np.asarray([seg_input])

OK, done. Now we run the neural network prediction!

predicts = model.predict([token_input, seg_input, mask_input])[0]
predicts = np.argmax(predicts, axis=-1)
# keep only the first len(tokens) positions to cut off stray predictions among the padding zeros
predicts = predicts[0][:len(tokens)]

Now format the result from tokens back into a string, separated by spaces.

out = []
# add to out only the words at [MASK] positions, which are marked with 1 in mask_input
for i in range(len(mask_input[0])):
    if mask_input[0][i] == 1:  # [0][i] because the network works with batches of shape (1, 512), and our data is in the first element
        out.append(predicts[i])

out = tokenizer.convert_ids_to_tokens(out)  # indices to text tokens
out = ' '.join(out)  # join the tokens into a space-separated string
out = tokenization.printable_text(out)  # to readable text
out = out.replace(' ##','')  # merge split words: "при ##шел" -> "пришел"

And display the result:

print('Result:', out)

In our example, for the phrase "I came to [MASK] and bought [MASK]." the neural network produced "house" and "it": "I came to the house and bought it." Well, not bad for a first try. Buying a house certainly beats buying milk :)

A few more examples:

Earth is the third [MASK] from the Sun

Result: star

sandwich best [MASK] with oil

Result: found

after [MASK] lunch is supposed to sleep

Result: this

get away from [MASK]

Result: ## oh (is that some kind of curse word?)

[MASK] from the door

Result: view

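При [MASK] молотка и гвоздей можно сделать шкаф (With the [MASK] of a hammer and nails you can build a cabinet)

Result: помощи (help)

А если завтра не будет? Сегодня, например, его не [MASK]! (What if there is no tomorrow? Today, for example, there [MASK] none!)

Result: будет (will be)

Как может надоесть игнорировать [MASK]? (How can one get tired of ignoring [MASK]?)

Result: её (her)

Есть бытовая логика, есть женская логика, а о мужской [MASK] ничего не известно (There is everyday logic, there is female logic, but nothing is known about male [MASK])

Result: философии (philosophy)

У женщин к тридцати годам формируется образ принца, под который подходит любой [MASK]. (By the age of thirty, women form an image of a prince that any [MASK] fits.)

Result: человек (person)

Большинством голосов Белоснежка и семь гномов проголосовали за [MASK], при одном голосе против. (By a majority, Snow White and the seven dwarfs voted for [MASK], with one vote against.)

Result: село (village); the first letter is right

Оцените степень своего занудства по 10 бальной шкале: [MASK] баллов (Rate how tedious you are on a 10-point scale: [MASK] points)

Result: 10

Вашу [MASK], [MASK] и [MASK]! (Your [MASK], [MASK] and [MASK]!)

Result: любовь я я (love I I); no, BERT, that is not what I meant at all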

You can also enter phrases in English (or in any of the 104 supported languages; the list is in the official BERT repository):

[MASK] must go on!

Result: I

Mode 2: checking whether the second phrase logically follows the first

We define two consecutive phrases to be fed to the neural network.

sentence_1 = 'Я пришел в магазин.'
sentence_2 = 'И купил молоко.'
print(sentence_1, '->', sentence_2)

Let's form the tokens in the format [CLS] phrase_1 [SEP] phrase_2 [SEP], converting the plain text into tokens with the tokenizer:

tokens_sen_1 = tokenizer.tokenize(sentence_1)
tokens_sen_2 = tokenizer.tokenize(sentence_2)
tokens = ['[CLS]'] + tokens_sen_1 + ['[SEP]'] + tokens_sen_2 + ['[SEP]']

We convert the string tokens into numeric indices (the numbers of the tokens in the vocab.txt dictionary) and extend the vector to 512:

token_input = tokenizer.convert_tokens_to_ids(tokens)
token_input = token_input + [0] * (512 - len(token_input))

The word mask in this case is filled entirely with zeros:

mask_input = [0] * 512

The sentence mask, however, must be filled with ones under the second phrase (including the final [SEP]) and with zeros everywhere else:

seg_input = [0]*512
len_1 = len(tokens_sen_1) + 2  # length of the first phrase; +2 for the initial [CLS] and the [SEP] separator
for i in range(len(tokens_sen_2)+1):  # +1 to include the final [SEP]
    seg_input[len_1 + i] = 1  # mark the second phrase, including the final [SEP], with ones

# convert to numpy arrays of shape (1, 512)
token_input = np.asarray([token_input])
mask_input = np.asarray([mask_input])
seg_input = np.asarray([seg_input])

Pass the phrases through the neural network (this time the result is in [1], not in [0] as above):

predicts = model.predict([token_input, seg_input, mask_input])[1]

And print the probability that the second phrase is a normal continuation rather than a random set of words:

print('Sentence is okey:', int(round(predicts[0][0]*100)), '%')

For the two phrases:

I came to the store. -> And bought milk.

Neural network response:

Sentence is okey: 99%

And if the second phrase is "Cruc's sky Pluto", the answer will be:

Sentence is okey: 4%

Google Colab

Google provides a free server GPU, a Tesla K80 with 12 GB of video memory (TPUs are now available too, but configuring them is a bit more complicated). All code for Colab has to be written as a Jupyter notebook. To launch BERT in a browser, simply open this link:

http://colab.research.google.com/github/blade1780/bert/blob/master/BERT.ipynb

In the Runtime menu, select Run All so that on the first launch all cells run, the model is downloaded and the necessary libraries are loaded. Agree to reset all runtimes if prompted.

Make sure that GPU and Python 3 are selected in the Runtime -> Change runtime type menu.

If the connect button is not active, click it so that it shows Connected.

Now change the input strings sentence, sentence_1 and sentence_2, and click the Play icon on the left to run only the current cell. There is no need to run the entire notebook again.

You can run BERT in Google Colab even from a smartphone; if the page does not open, you may need to enable the Full version checkbox in your browser settings.

What's next?

To train BERT for a specific task, you add one or two layers of a simple feed-forward network on top of it and train only those layers, without touching the main BERT network. This can be done either in bare TensorFlow or through the Keras BERT wrapper. Such additional training for a specific domain is very fast and is completely analogous to fine-tuning in convolutional networks. For example, for the SQuAD task the network can be fine-tuned on a single TPU in just 30 minutes (compared with 4 days on 16 TPUs for training BERT itself).

To do this, you will have to study how the last layers of BERT are organized, and you will need a suitable dataset. The official BERT page, https://github.com/google-research/bert , has several examples for different tasks, as well as instructions for running the additional training on cloud TPUs. Everything else you will have to look up in the source code, in the run_classifier.py and extract_features.py files.
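The idea of training only a small block on top of a frozen network can be shown with a deliberately tiny, framework-free sketch. Everything here is an assumption for illustration: the "features" are made-up numbers standing in for vectors that the frozen pre-trained network would output, and the head is a single logistic layer rather than a real Keras or TensorFlow model.

```python
import math

# Pretend these are sentence vectors produced by the frozen pre-trained
# network (hypothetical values); they are never modified below.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [1, 1, 0, 0]

# The only trainable parameters: one small layer on top of the features.
weights = [0.0, 0.0]
bias = 0.0

def predict(x):
    """Probability of class 1 from the small head (logistic regression)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

learning_rate = 0.5
for _ in range(200):
    # Full-batch gradient step on the log-likelihood of the head only.
    errors = [y - predict(x) for x, y in zip(features, labels)]
    for i in range(len(weights)):
        weights[i] += learning_rate * sum(e * x[i] for e, x in zip(errors, features))
    bias += learning_rate * sum(errors)

print([round(predict(x)) for x in features])
```

Since only the few head parameters are updated, each training step is cheap, which is one reason this kind of additional training is so much faster than pre-training.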

PS

The code presented here and the Jupyter notebook for Google Colab are in the repository https://github.com/blade1780/bert .

Do not expect miracles. Do not expect BERT to talk like a person. State-of-the-art status does not mean that progress in NLP has reached an acceptable level. It only means that BERT is better than the previous models, which were even worse. Strong conversational AI is still very far away. In addition, BERT is first and foremost a language model, not a ready-made chatbot, so it shows good results only after additional training for a specific task.