What's at the heart of a driverless car?

The automotive industry is going through a revolution in autonomous driving. An unmanned vehicle can offer passengers a higher level of safety because its control is free of human error. In the future, widespread use of unmanned vehicles should reduce the number of accidents and save the time people spend behind the wheel every day.

OSCAR (Open-Source CAR) is StarLine's research project to build an open-source unmanned vehicle, bringing together some of the best engineering minds in Russia. We named the platform OSCAR because we want every line of code related to the car to be open to the community.

Platform anatomy

As a user of the vehicle, the car owner wants to get from point A to point B safely, comfortably and on time. User stories vary widely, from commuting to work or going shopping to spending time with friends or family, all without having to keep a constant eye on the road.

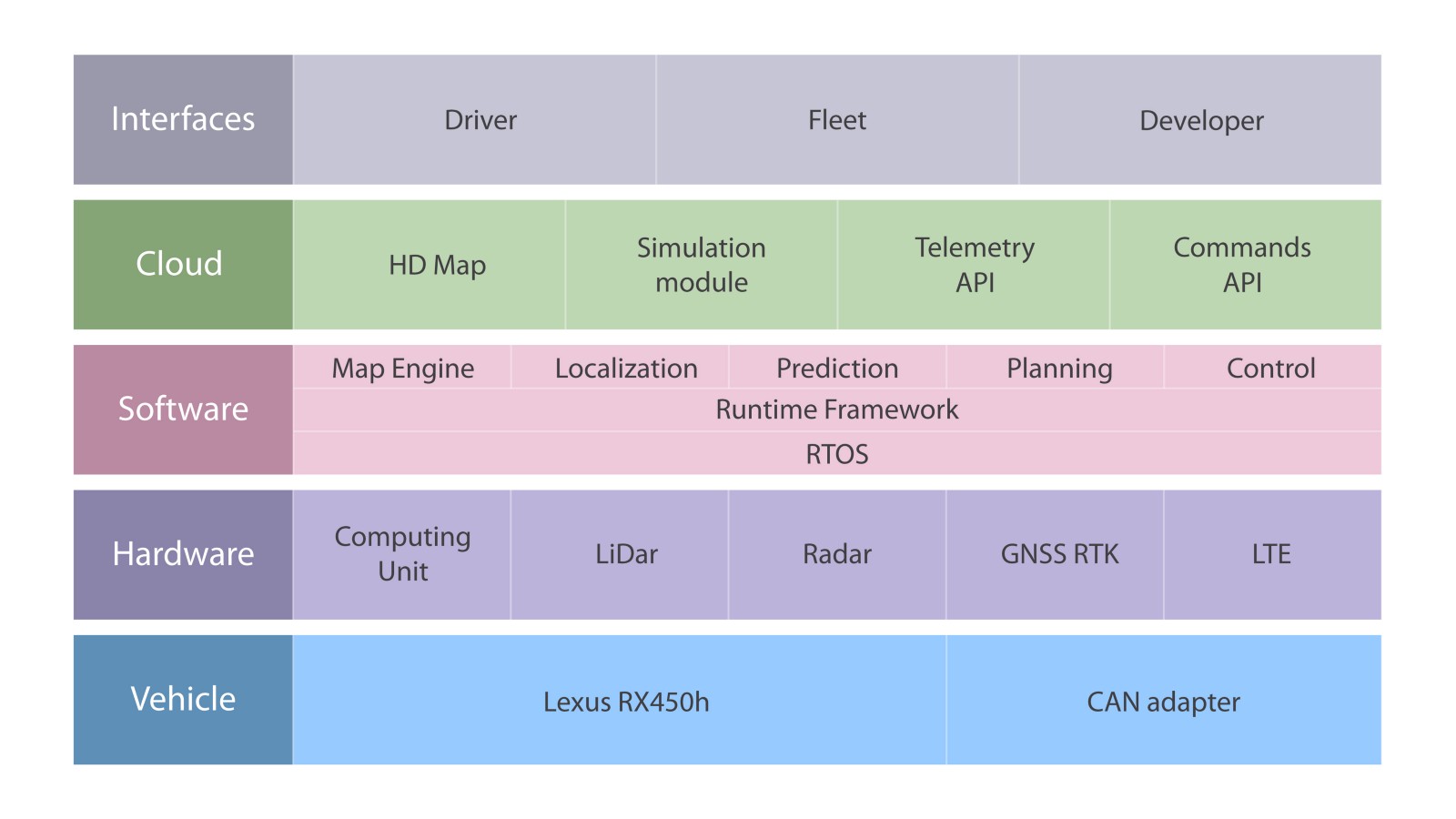

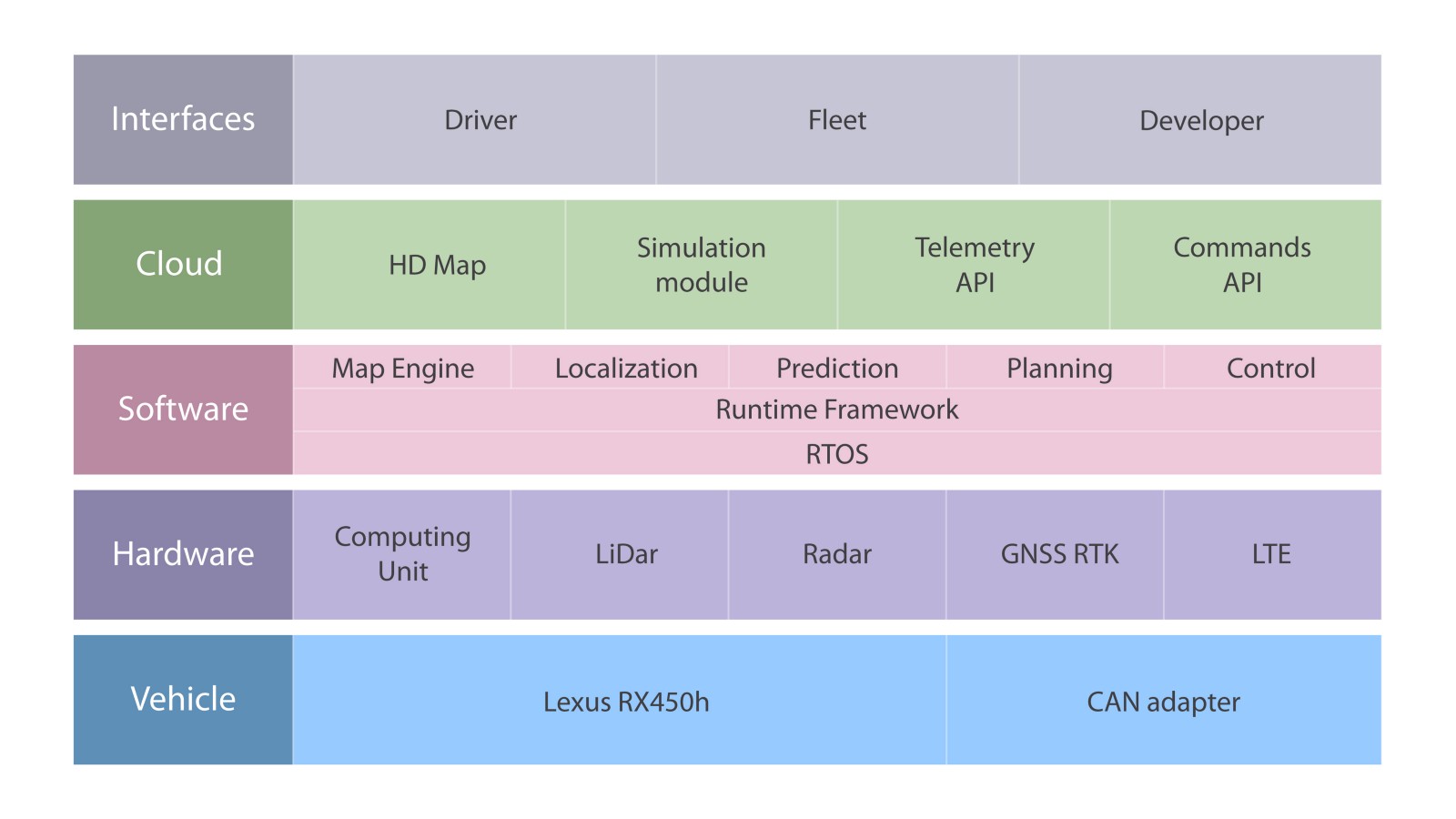

Therefore, the top level of the platform consists of user applications and environments. We expect three groups of users: individual users, commercial users and platform developers, and we are currently designing a separate interface for each of them. The second level is the server side, which includes high-definition (HD) maps, a simulation module and the APIs that serve the car. The software level covers the programs that will run on board the car. The two lowest levels involve work on the car itself: studying the vehicle's digital interface and installing equipment.

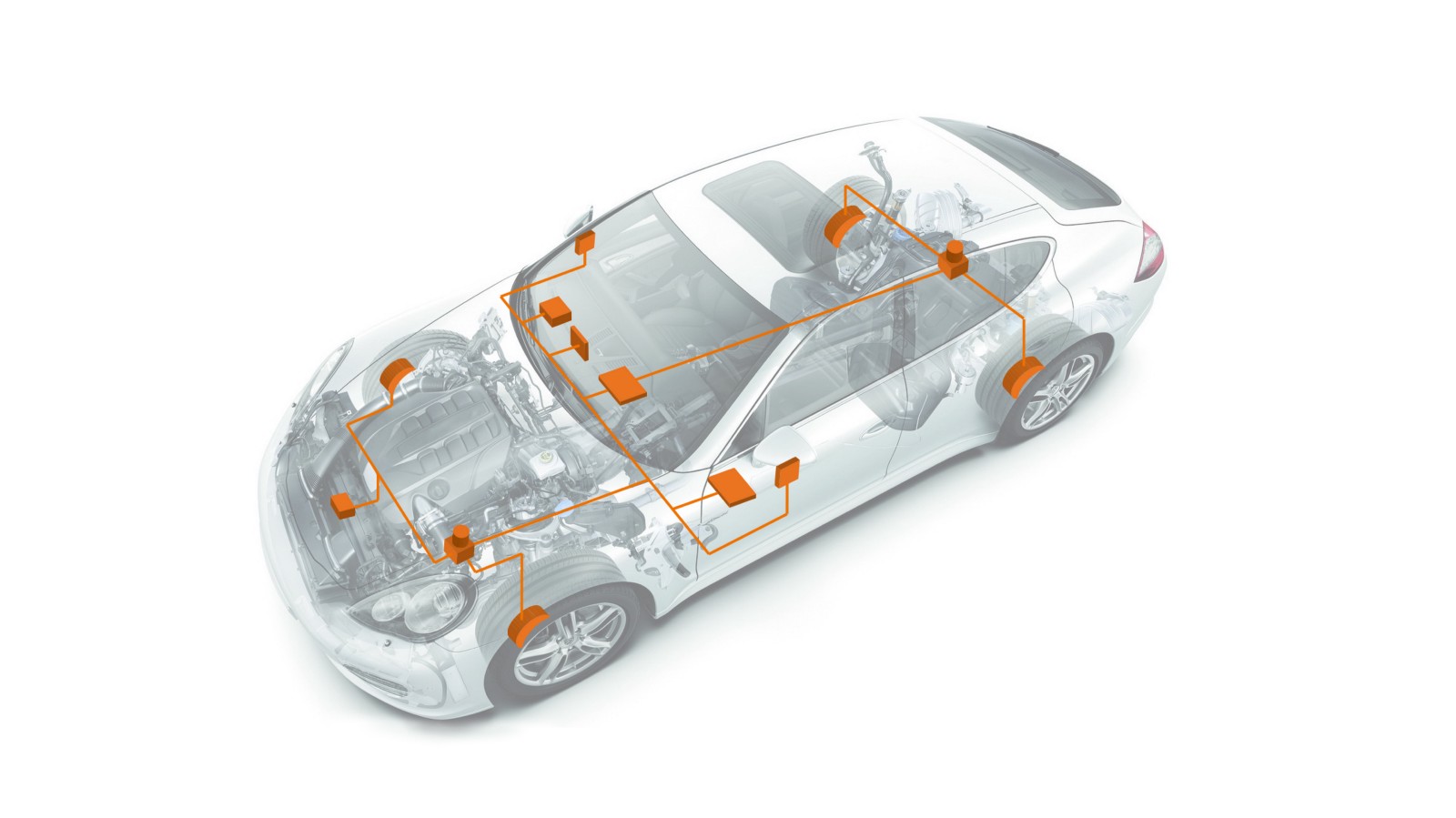

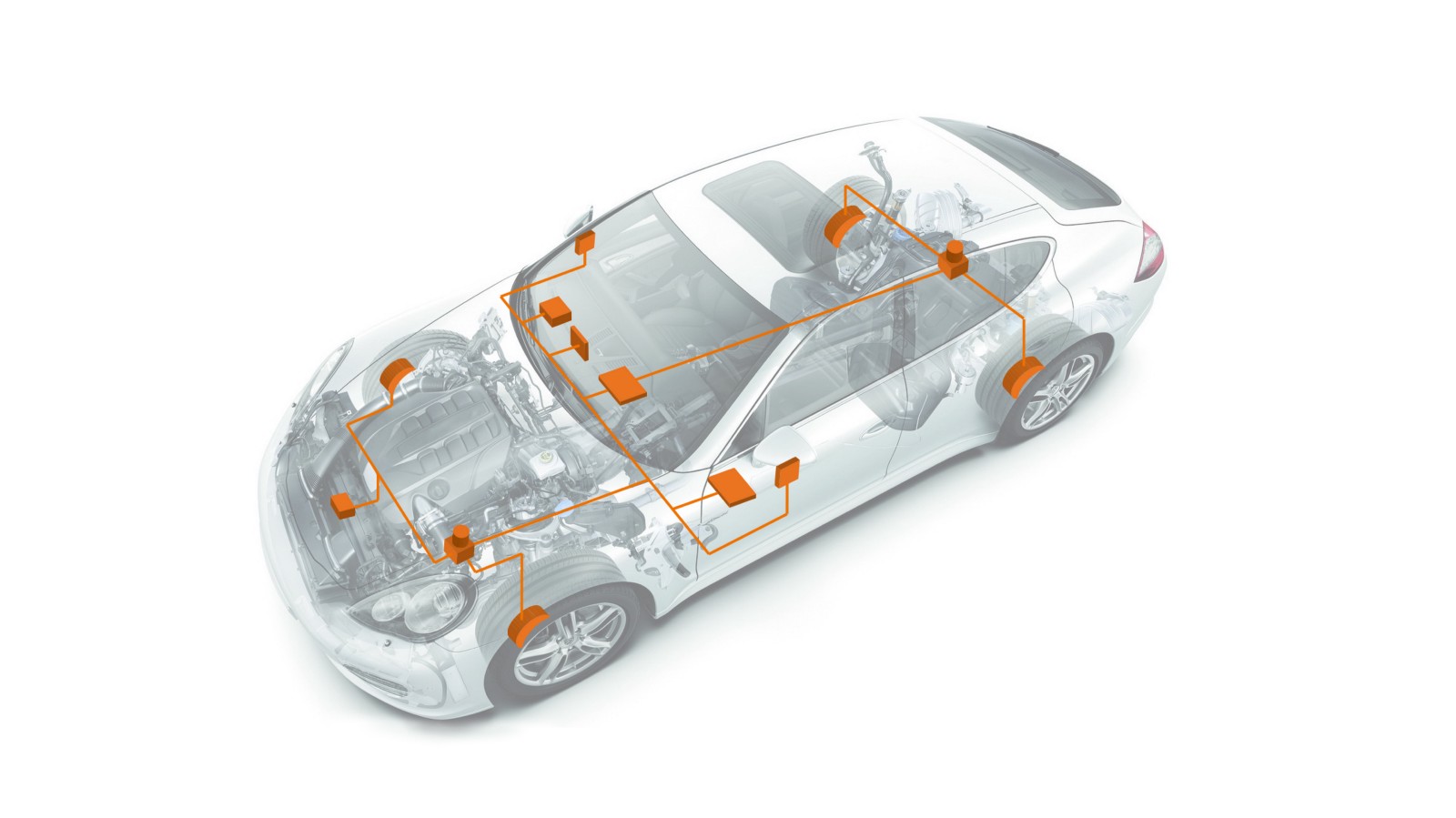

Car

A modern car can have up to 70 electronic control units (ECUs) for its various subsystems. The most powerful processor is usually in the engine control unit, while other ECUs control the transmission, airbags, anti-lock braking system, audio system, mirror adjustment and so on. Some of them form independent subsystems, while others exchange information and commands with each other.

The CAN (Controller Area Network) standard was developed to let these devices communicate with each other.

The CAN bus is an industrial network standard and a digital system for communicating with and controlling a car's electrical devices. The devices are connected to the bus in parallel, and each of them can both send and receive data. With access to this digital interface you can, for example, start the engine, open the doors or fold the mirrors by sending control commands.
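The following is a minimal Python sketch that shows how devices share the bus. It models a classic CAN 2.0A data frame (an 11-bit identifier and up to 8 data bytes) and bus arbitration, in which the frame with the lowest identifier wins. The names `CanFrame` and `arbitrate` are hypothetical and are not part of StarLine's tooling. In a real car, arbitration is performed bit by bit by the CAN controllers themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CanFrame:
    """Classic CAN 2.0A data frame: 11-bit identifier, 0-8 data bytes."""
    can_id: int
    data: bytes

    def __post_init__(self) -> None:
        if not 0 <= self.can_id <= 0x7FF:
            raise ValueError("standard CAN identifiers are 11 bits (0x000-0x7FF)")
        if len(self.data) > 8:
            raise ValueError("a classic CAN frame carries at most 8 data bytes")


def arbitrate(frames: list[CanFrame]) -> CanFrame:
    """Return the frame that wins when several nodes start transmitting at once.

    On the wire a dominant 0 bit overrides a recessive 1 bit, so the frame
    with the numerically lowest identifier wins and the others retry later.
    """
    return min(frames, key=lambda frame: frame.can_id)


if __name__ == "__main__":
    pending = [
        CanFrame(0x3A0, b"\x01"),
        CanFrame(0x0C4, b"\x10\x20"),
        CanFrame(0x7FF, b""),
    ]
    print(hex(arbitrate(pending).can_id))  # 0xc4
```

Because the lowest identifier always wins, the identifier also serves as the message priority.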

To control the car's acceleration and steering, we needed to access the CAN bus and work out which packet types correspond to these functions. Since NPO StarLine manufactures electronic devices, we developed our own CAN bus adapter.

Currently, we can steer, brake and accelerate using a laptop.
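Working out packet types means mapping raw payload bytes to physical quantities. The article does not publish the layout used on the StarLine car. The Python sketch below therefore invents one purely for illustration: the identifier `0x2E4`, the 0.1-degree scaling, the byte order and the rolling counter are all hypothetical. It only shows the general pattern of encoding a command into an 8-byte payload and decoding it back.

```python
import struct

# Hypothetical message layout, for illustration only.
STEER_CMD_ID = 0x2E4      # hypothetical CAN identifier
ANGLE_SCALE_DEG = 0.1     # hypothetical: one raw unit = 0.1 degree
LAYOUT = "<hB5x"          # int16 angle (little-endian), uint8 counter, 5 pad bytes


def encode_steering(angle_deg: float, counter: int) -> tuple[int, bytes]:
    """Pack a steering angle and rolling counter into an 8-byte payload."""
    raw = round(angle_deg / ANGLE_SCALE_DEG)
    if not -32768 <= raw <= 32767:
        raise ValueError("angle does not fit in a signed 16-bit field")
    # The counter wraps at 16 so the receiver can spot repeated or lost frames.
    return STEER_CMD_ID, struct.pack(LAYOUT, raw, counter % 16)


def decode_steering(payload: bytes) -> tuple[float, int]:
    """Inverse of encode_steering: recover the angle in degrees and the counter."""
    raw, counter = struct.unpack(LAYOUT, payload)
    return raw * ANGLE_SCALE_DEG, counter


if __name__ == "__main__":
    can_id, payload = encode_steering(-12.5, counter=3)
    print(hex(can_id), payload.hex())  # 0x2e4 83ff030000000000
    print(decode_steering(payload))
```

On a real vehicle, layouts like this have to be discovered for each car model by observing bus traffic while operating the controls.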

Equipment

An important area of work is fitting the vehicle with sensors. There are several approaches to equipping an unmanned vehicle. For example, some companies use expensive lidars, while others do without them and rely on readings from other devices.

The StarLine unmanned car is currently equipped with several mono and stereo cameras, radars and lidars, as well as satellite navigation.

A GPS receiver determines its position in space as well as the precise time. Civilian GPS receivers are not accurate enough to position an unmanned vehicle: the error can reach several meters. This is caused both by signals reflecting off building walls in urban environments and by hard-to-predict natural factors, such as changes in the propagation speed of the satellite signal in the Earth's ionosphere.

Because this error is too large for an unmanned vehicle, we use high-precision satellite navigation. We installed a GNSS RTK (Real-Time Kinematic) receiver on the car. It uses more advanced positioning techniques and achieves centimeter-level accuracy from satellite navigation alone.
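RTK combines the rover's measurements with those of a nearby base station at a precisely known position. Errors common to both receivers, such as ionospheric delay, therefore largely cancel out. True RTK works with carrier-phase measurements and has to resolve integer ambiguities. The Python sketch below shows only the simpler code-based differential principle and ignores receiver clock bias. All positions and delays in the example are made up.

```python
import math

Point = tuple[float, float, float]


def base_corrections(base_pos: Point, sats: list[Point], base_ranges: list[float]) -> list[float]:
    """Per-satellite correction: true geometric range minus the range the base measured."""
    return [math.dist(base_pos, sat) - rho for sat, rho in zip(sats, base_ranges)]


def correct_rover(rover_ranges: list[float], corrections: list[float]) -> list[float]:
    """Apply the base station's corrections to the rover's measured ranges."""
    return [rho + c for rho, c in zip(rover_ranges, corrections)]


if __name__ == "__main__":
    sats = [(2.0e7, 0.0, 0.0), (0.0, 2.0e7, 0.0), (0.0, 0.0, 2.0e7)]
    base = (0.0, 0.0, 0.0)
    rover = (1000.0, 500.0, 0.0)      # rover about a kilometre from the base
    delays = [3.2, 5.1, 4.4]          # made-up atmospheric errors, metres
    # Both receivers see (almost) the same error on each satellite's signal.
    base_meas = [math.dist(base, s) + d for s, d in zip(sats, delays)]
    rover_meas = [math.dist(rover, s) + d for s, d in zip(sats, delays)]
    fixed = correct_rover(rover_meas, base_corrections(base, sats, base_meas))
    for f, s in zip(fixed, sats):
        print(f"residual error: {f - math.dist(rover, s):.6f} m")
```

In this toy example the delays are identical for base and rover, so the residual is essentially zero. In practice, the shared errors decorrelate as the distance between rover and base grows.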

However, while driving, the car may enter a tunnel or pass under a bridge, where satellite signals are too weak or entirely absent. The accuracy of the GNSS RTK receiver then drops, or positioning becomes impossible altogether. In such cases the car refines its position using odometer and accelerometer readings. Algorithms that combine data from several disparate sources to reduce uncertainty are called sensor fusion algorithms.
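A common building block for this kind of sensor fusion is the Kalman filter. The Python sketch below is a scalar, one-dimensional version that makes several simplifying assumptions:

- Position is tracked along a single axis.
- Only odometer distance drives the prediction; the accelerometer is not used.
- The noise variances are illustrative, not values from the StarLine car.

When no GNSS fix is available, as in a tunnel, the filter keeps predicting and its uncertainty grows. The next fix pulls the estimate back.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Estimate:
    x: float  # position along the road, metres
    p: float  # variance of the position estimate, m^2


def predict(est: Estimate, odo_step_m: float, odo_var: float) -> Estimate:
    """Dead-reckoning step: move by the odometer distance and add its noise."""
    return Estimate(est.x + odo_step_m, est.p + odo_var)


def update(est: Estimate, gnss_x: float, gnss_var: float) -> Estimate:
    """Blend in a GNSS fix, weighted by the relative uncertainties."""
    gain = est.p / (est.p + gnss_var)
    return Estimate(est.x + gain * (gnss_x - est.x), (1.0 - gain) * est.p)


def fuse_step(est: Estimate, odo_step_m: float, gnss_x: Optional[float],
              odo_var: float = 0.05 ** 2, gnss_var: float = 0.02 ** 2) -> Estimate:
    """One filter cycle; pass gnss_x=None when there is no satellite fix."""
    est = predict(est, odo_step_m, odo_var)
    if gnss_x is not None:
        est = update(est, gnss_x, gnss_var)
    return est


if __name__ == "__main__":
    est = Estimate(x=0.0, p=0.01)
    gnss = [1.01, None, None, None, None, None, 7.0]  # None = inside the tunnel
    for fix in gnss:
        est = fuse_step(est, 1.0, fix)
        print(f"x={est.x:.3f} m, std={est.p ** 0.5:.3f} m")
```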

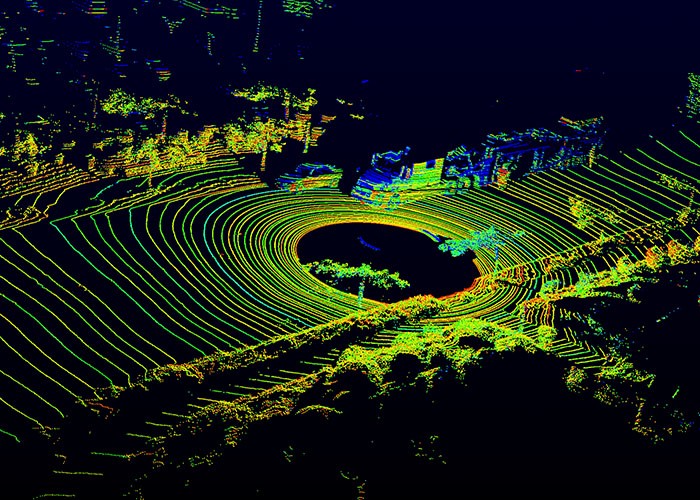

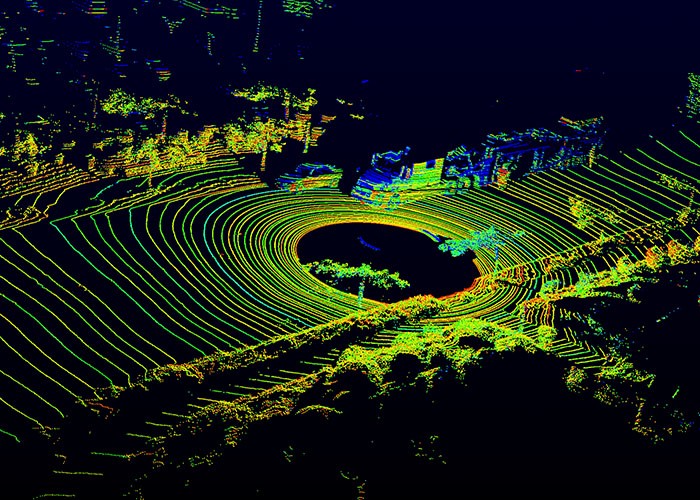

Besides localizing itself, an unmanned vehicle must be aware of dynamic obstacles that are not on the map, such as cars moving nearby or a pedestrian crossing the road. For this it uses radar and lidar data. Radar detects obstacles using radio waves and, thanks to the Doppler effect, can also measure their radial velocities. Lidar is an active optical rangefinder that continuously scans the surrounding space and builds a three-dimensional map of it, the so-called point cloud.
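Both measurements reduce to short formulas. For a radar that transmits and receives from the same antenna, the Doppler shift is f_d = 2 * v_r / λ, so the radial velocity is v_r = f_d * λ / 2. A lidar return given as range, azimuth and elevation converts to a point of the cloud with ordinary spherical-to-Cartesian formulas. The Python sketch below makes three assumptions:

- The sensor frame has x pointing forward, y left and z up.
- A positive Doppler shift means an approaching target.
- The radar operates at 77 GHz, a common automotive radar frequency, chosen purely as an example.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def radial_velocity(doppler_hz: float, carrier_hz: float) -> float:
    """Radial velocity in m/s from a monostatic radar's Doppler shift.

    f_d = 2 * v_r / wavelength  =>  v_r = f_d * wavelength / 2.
    Positive means the target is approaching (convention assumed here).
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_hz * wavelength / 2.0


def lidar_point(range_m: float, azimuth_rad: float,
                elevation_rad: float) -> tuple[float, float, float]:
    """Convert one lidar return to (x, y, z) in the sensor frame (x forward, y left, z up)."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))


if __name__ == "__main__":
    # A target closing at 10 m/s seen by a 77 GHz radar shifts the echo by about 5.1 kHz.
    shift = 2 * 10.0 * 77e9 / SPEED_OF_LIGHT
    print(f"Doppler shift {shift:.0f} Hz -> v_r = {radial_velocity(shift, 77e9):.2f} m/s")
    # A few returns from one sweep become points of the cloud.
    cloud = [lidar_point(r, math.radians(az), math.radians(-2.0))
             for r, az in [(12.0, 0.0), (8.5, 45.0)]]
    print(cloud)
```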

Every device used in the unmanned vehicle, from the cameras to the GNSS RTK unit, has to be configured before it can be installed.

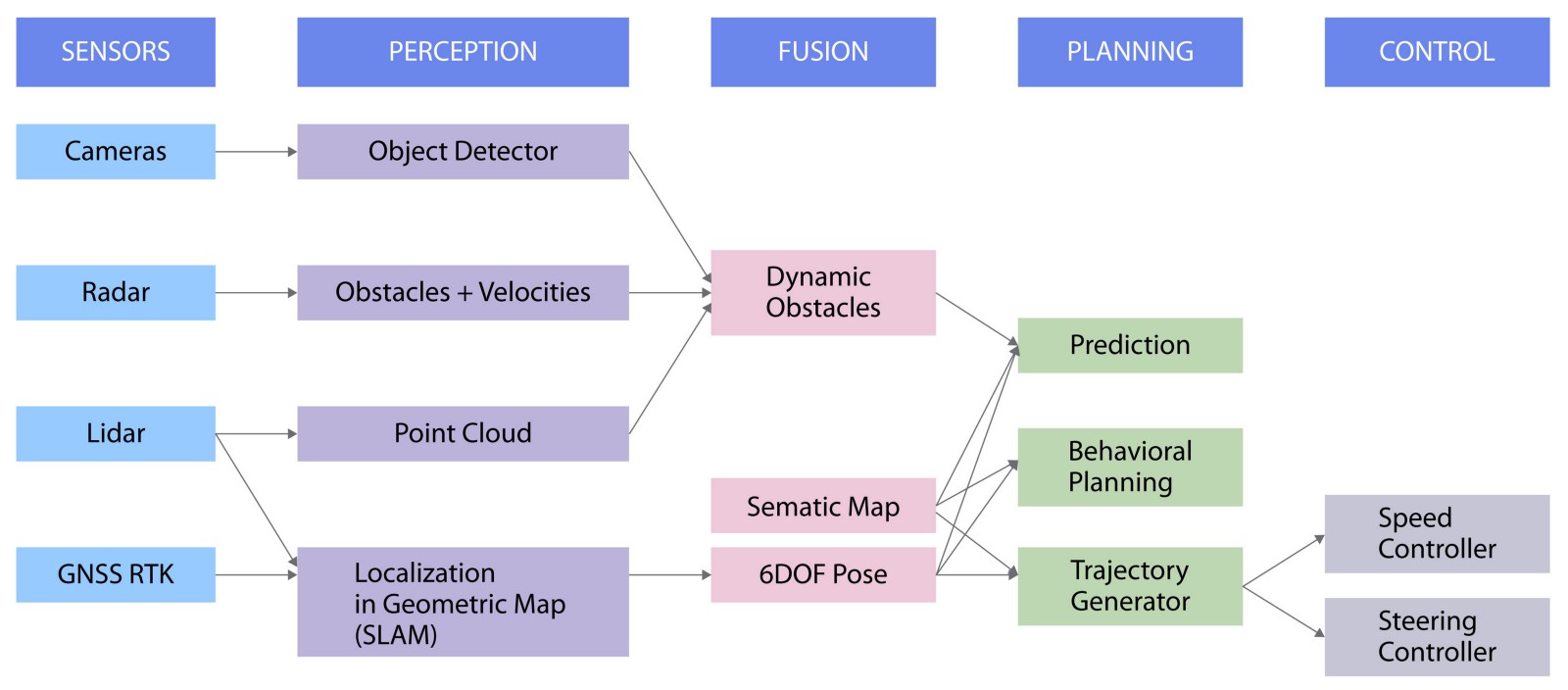

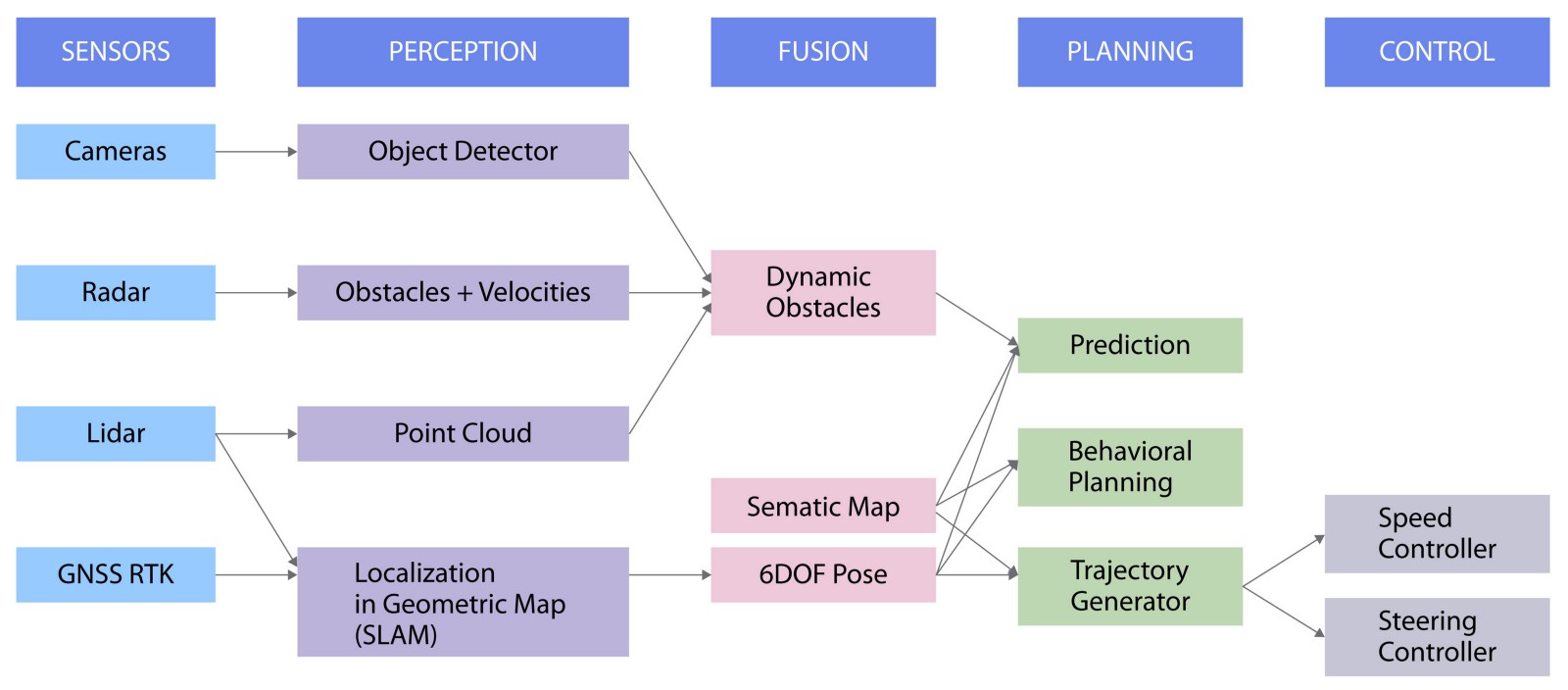

Software

At the highest level, the software of an unmanned vehicle consists of several modules, including recognition, planning, localization and control.

Camera data is used to detect and recognize objects, radars provide the coordinates and speeds of surrounding objects, lidars supply a point cloud, and the GNSS RTK module uses satellite data to localize the car.

The data from the first three sources (cameras, radars and lidars) are then combined to obtain information about obstacles around the car.
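The article does not describe how the detections are combined. One simple and widely used starting point is nearest-neighbor association with a distance gate. The Python sketch below applies it to camera and radar detections only. It assumes every detection has already been transformed into a common vehicle frame. The `Detection` fields, the 1.5 m gate and the averaging of positions are illustrative choices, not StarLine's method.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    x: float                      # metres ahead of the car (vehicle frame)
    y: float                      # metres to the left of the car
    source: str                   # "camera", "radar" or "fused"
    label: str = ""               # object class, known from the camera
    speed: float = float("nan")   # radial speed, known from the radar


def fuse(cameras: list[Detection], radars: list[Detection],
         gate_m: float = 1.5) -> list[Detection]:
    """Greedy nearest-neighbour association of camera and radar detections.

    Each camera detection is paired with the closest unused radar detection
    within gate_m; pairs are merged, and unmatched detections are kept as-is.
    """
    obstacles, used = [], set()
    for cam in cameras:
        best, best_dist = None, gate_m
        for i, rad in enumerate(radars):
            dist = math.hypot(cam.x - rad.x, cam.y - rad.y)
            if i not in used and dist <= best_dist:
                best, best_dist = i, dist
        if best is None:
            obstacles.append(cam)
            continue
        used.add(best)
        rad = radars[best]
        obstacles.append(Detection((cam.x + rad.x) / 2, (cam.y + rad.y) / 2,
                                   "fused", cam.label, rad.speed))
    obstacles.extend(r for i, r in enumerate(radars) if i not in used)
    return obstacles


if __name__ == "__main__":
    cams = [Detection(20.0, 1.0, "camera", label="car"),
            Detection(5.0, -3.0, "camera", label="pedestrian")]
    rads = [Detection(20.4, 1.2, "radar", speed=-2.0),
            Detection(50.0, 0.0, "radar", speed=3.0)]
    for obstacle in fuse(cams, rads):
        print(obstacle)
```

A greedy match depends on the order of the inputs. Production systems often solve the assignment globally and track obstacles over time.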

At the same time, satellite and lidar readings are used to solve the SLAM (simultaneous localization and mapping) problem: building a map of an unknown environment while tracking the vehicle's location within it. This information is used to compute six coordinates of the car: three spatial coordinates and the three components of its velocity.

The next step is planning the vehicle's local trajectory. The final step is the control module, which actually executes the trajectory produced by the planner.
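The article does not say which control law the StarLine control module uses. Pure pursuit is one classic way to execute a planned trajectory, and the Python sketch below implements it. The car picks a look-ahead point on the trajectory and steers along the circular arc that reaches it, using the bicycle-model formula δ = atan(2 * L * sin α / l_d). Here L is the wheelbase, α is the angle to the look-ahead point relative to the car's heading, and l_d is the distance to that point. The 2.7 m wheelbase is an assumed value.

```python
import math


def pure_pursuit_steering(pose: tuple[float, float, float],
                          target: tuple[float, float],
                          wheelbase_m: float) -> float:
    """Front-wheel steering angle (radians, positive = left) toward `target`.

    pose:   (x, y, heading) of the rear axle in the map frame, heading in radians
    target: (x, y) look-ahead point taken from the planned trajectory
    """
    x, y, heading = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)            # l_d
    alpha = math.atan2(dy, dx) - heading      # bearing of the target relative to heading
    # delta = atan(2 * L * sin(alpha) / l_d); atan2 avoids an explicit division by l_d.
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), lookahead)


if __name__ == "__main__":
    WHEELBASE = 2.7  # metres, assumed
    for target in [(10.0, 0.0), (5.0, 5.0), (5.0, -5.0)]:
        delta = pure_pursuit_steering((0.0, 0.0, 0.0), target, WHEELBASE)
        print(target, f"{math.degrees(delta):.1f} deg")
```

A longer look-ahead distance gives smoother but less precise tracking, so in practice it is often scaled with speed.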

We are currently setting up and installing the equipment and working on the car's control module.

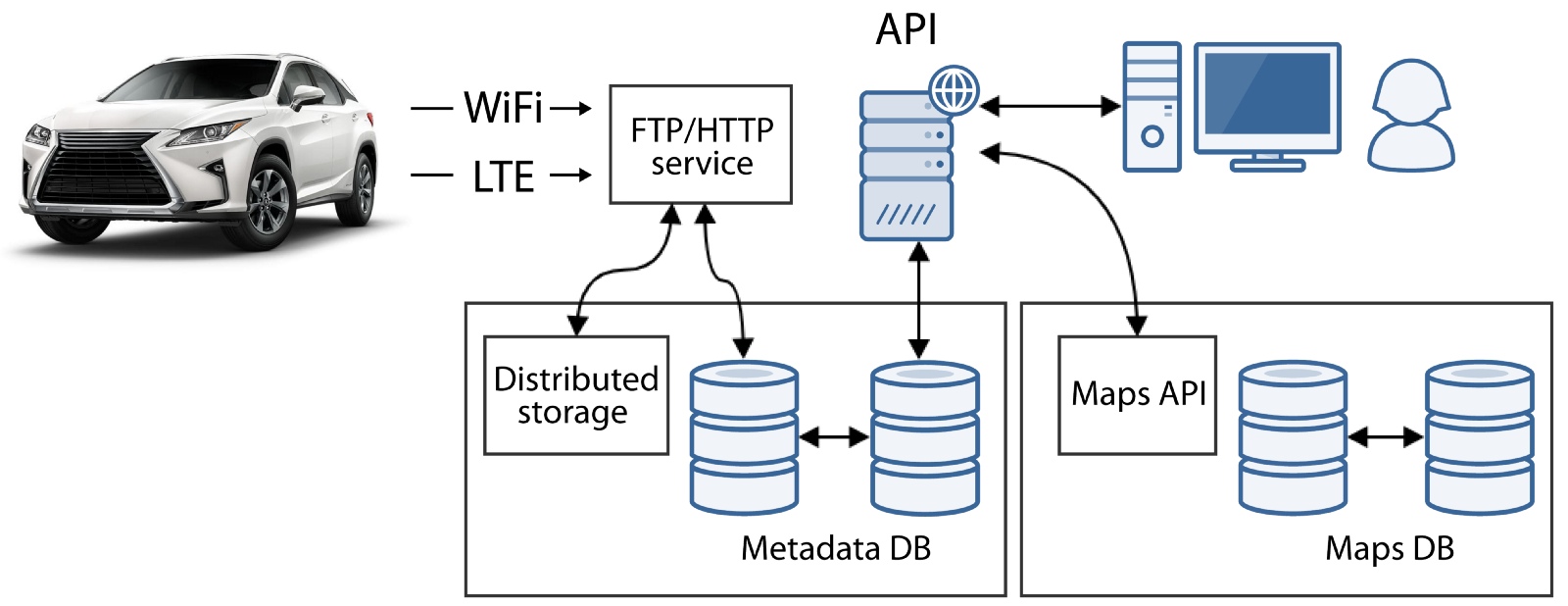

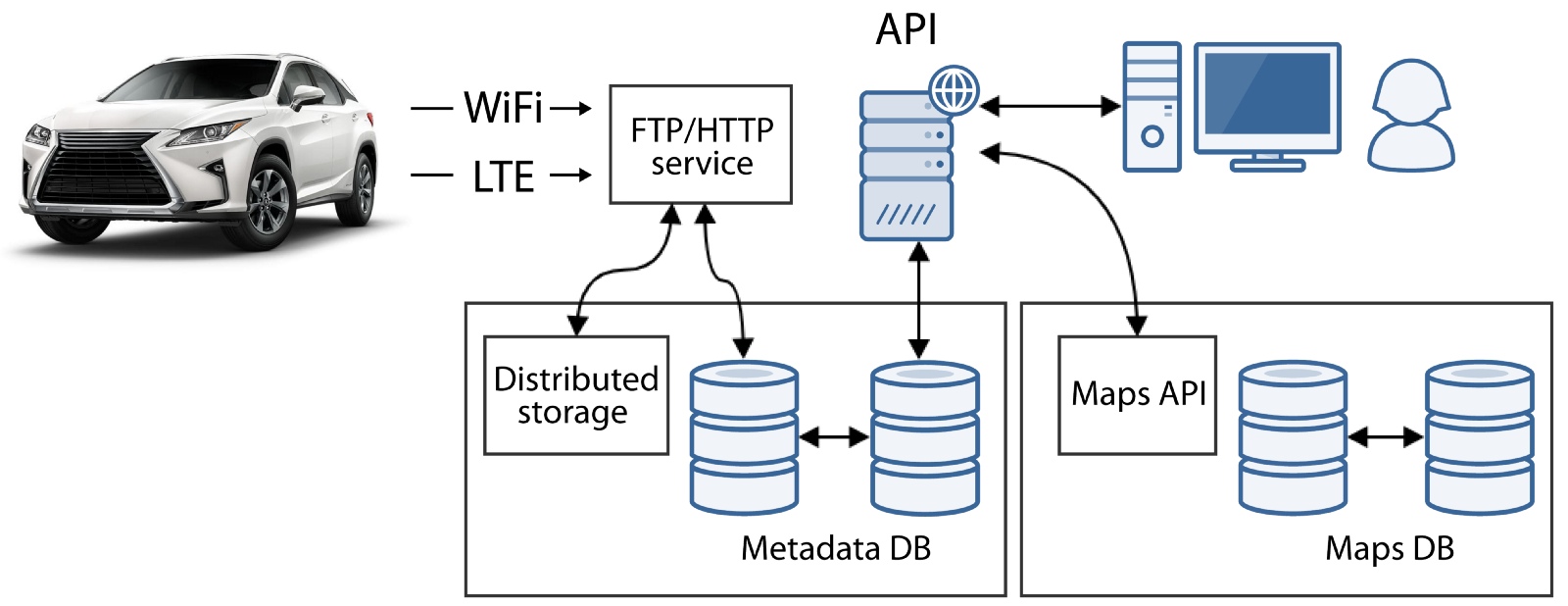

Cloud

The server part consists of four blocks:

- HD maps

- Telemetry API

- Command API

- Simulation module

First, we need map storage so that the car can be localized correctly, and a telemetry service for data analysis. The diagram shows our early server architecture, which includes these two blocks. Later we will extend it with the command API, so that control commands can be sent to the car from the server, and we will also add a simulation module.

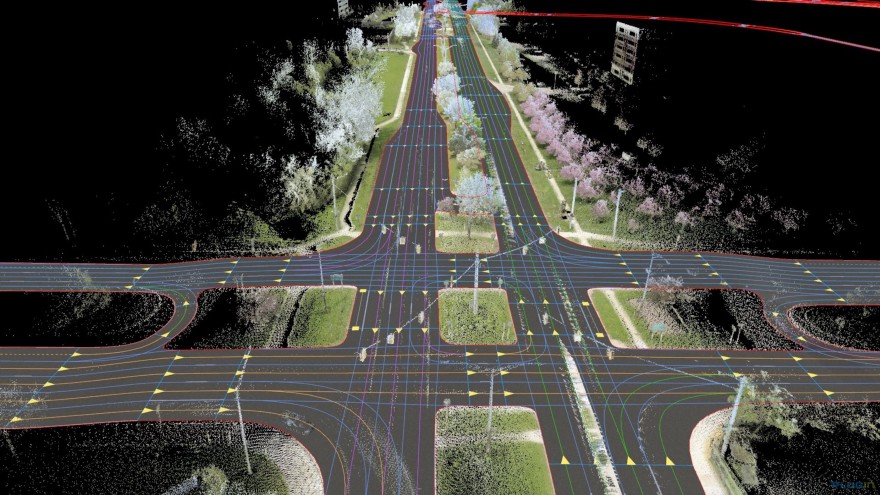

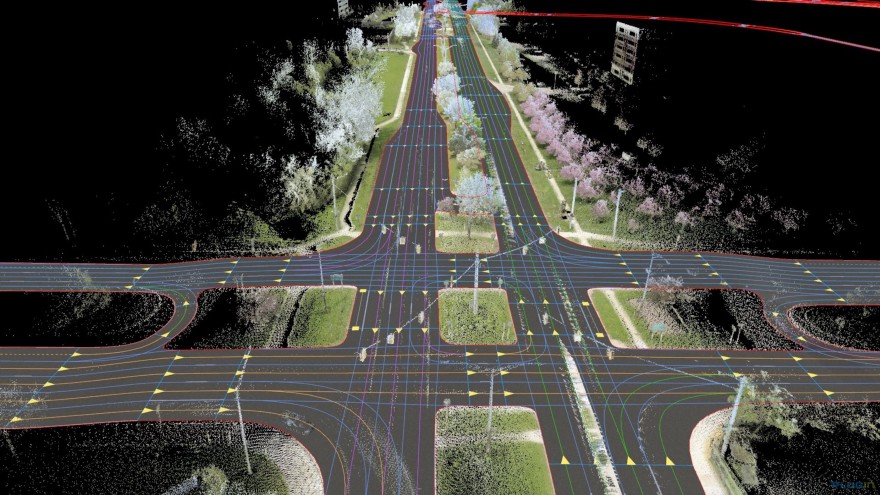
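The article does not specify the telemetry API, so the following Python sketch is entirely hypothetical. It assumes the car sends JSON records containing a vehicle ID, a timestamp and a few signals. An in-memory store stands in for a real database to show the ingest-and-query flow that data analysis needs. All field names, method names and values are invented for illustration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TelemetryRecord:          # hypothetical schema
    vehicle_id: str
    timestamp: float            # Unix time, seconds
    lat: float                  # degrees
    lon: float                  # degrees
    speed_mps: float
    steering_deg: float

    def to_json(self) -> str:
        return json.dumps(asdict(self))


class TelemetryStore:
    """In-memory stand-in for a telemetry service (hypothetical)."""

    def __init__(self) -> None:
        self._records: list[TelemetryRecord] = []

    def ingest(self, payload: str) -> None:
        """Accept one JSON record as the car would send it."""
        self._records.append(TelemetryRecord(**json.loads(payload)))

    def query(self, vehicle_id: str, t_from: float, t_to: float) -> list[TelemetryRecord]:
        """Return one vehicle's records in [t_from, t_to], oldest first."""
        hits = [r for r in self._records
                if r.vehicle_id == vehicle_id and t_from <= r.timestamp <= t_to]
        return sorted(hits, key=lambda r: r.timestamp)


if __name__ == "__main__":
    store = TelemetryStore()
    store.ingest(TelemetryRecord("oscar-1", 100.0, 59.93, 30.31, 8.2, -1.5).to_json())
    store.ingest(TelemetryRecord("oscar-1", 101.0, 59.93, 30.31, 8.6, -0.5).to_json())
    print(store.query("oscar-1", 100.5, 200.0))
```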

HD maps give a complete, centimeter-accurate picture of the real world, including everything related to the road infrastructure, such as lanes, signs and traffic lights. The map can also contain lidar data so that the vehicle can localize itself against it.
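At its simplest, an HD map can be viewed as a set of lanes, each described by a centerline polyline plus attributes. The Python sketch below is a toy model built on that assumption, with a hypothetical `Lane` type; real formats such as OpenDRIVE or Lanelet2 are far richer. It finds the lane nearest to a given position, a basic query that a planner or localizer would make against the map.

```python
import math
from dataclasses import dataclass

XY = tuple[float, float]


@dataclass
class Lane:                       # hypothetical, much-simplified lane record
    lane_id: str
    centerline: list[XY]          # at least two points, metres in the map frame
    speed_limit_kmh: float


def distance_to_segment(p: XY, a: XY, b: XY) -> float:
    """Shortest distance from point p to the segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.dist(p, a)
    # Project p onto the segment and clamp the projection to its ends.
    t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))


def distance_to_lane(p: XY, lane: Lane) -> float:
    """Distance from p to the closest segment of the lane's centerline."""
    pts = lane.centerline
    return min(distance_to_segment(p, pts[i], pts[i + 1]) for i in range(len(pts) - 1))


def nearest_lane(lanes: list[Lane], p: XY) -> Lane:
    return min(lanes, key=lambda lane: distance_to_lane(p, lane))


if __name__ == "__main__":
    lanes = [Lane("right", [(0.0, 0.0), (50.0, 0.0), (100.0, 2.0)], 60.0),
             Lane("left", [(0.0, 3.5), (50.0, 3.5), (100.0, 5.5)], 60.0)]
    print(nearest_lane(lanes, (10.0, 3.0)).lane_id)  # left
```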

Simulators generate traffic situations for debugging and testing. By modeling traffic, we can make our algorithms more robust. We can test them over any number of miles of configurable scenarios and rare conditions, at a fraction of the time and cost that testing on real roads would require.
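The simulator itself is not described in the article. The Python sketch below only illustrates the idea of testing on many parameterized scenarios instead of real miles. It samples a hypothetical cut-in scenario, in which another car merges in front at some gap and speed. Using constant-acceleration kinematics and ignoring reaction time, it computes the braking needed to avoid a collision and reports which scenarios exceed an assumed deceleration limit. A fixed random seed makes every run reproducible, which lets such sweeps serve as regression tests.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class CutIn:                     # hypothetical scenario parameters
    ego_speed: float             # m/s
    other_speed: float           # m/s, car that cuts in ahead
    gap: float                   # m, distance when the cut-in happens


def sample_scenarios(n: int, seed: int = 0) -> list[CutIn]:
    """Draw n random cut-in scenarios; the fixed seed makes runs repeatable."""
    rng = random.Random(seed)
    return [CutIn(rng.uniform(5, 30), rng.uniform(0, 30), rng.uniform(5, 60))
            for _ in range(n)]


def required_decel(s: CutIn) -> float:
    """Constant deceleration (m/s^2) needed to match the other car's speed within the gap.

    Closing speed v must reach 0 within distance d: v^2 / (2 a) <= d  =>  a >= v^2 / (2 d).
    """
    closing = s.ego_speed - s.other_speed
    return 0.0 if closing <= 0 else closing ** 2 / (2.0 * s.gap)


def failures(scenarios: list[CutIn], max_decel: float) -> list[CutIn]:
    """Scenarios that would need harder braking than the assumed limit."""
    return [s for s in scenarios if required_decel(s) > max_decel]


if __name__ == "__main__":
    suite = sample_scenarios(10_000, seed=42)
    bad = failures(suite, max_decel=6.0)   # 6 m/s^2 is an assumed braking limit
    print(f"{len(bad)} of {len(suite)} scenarios exceed the braking limit")
```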

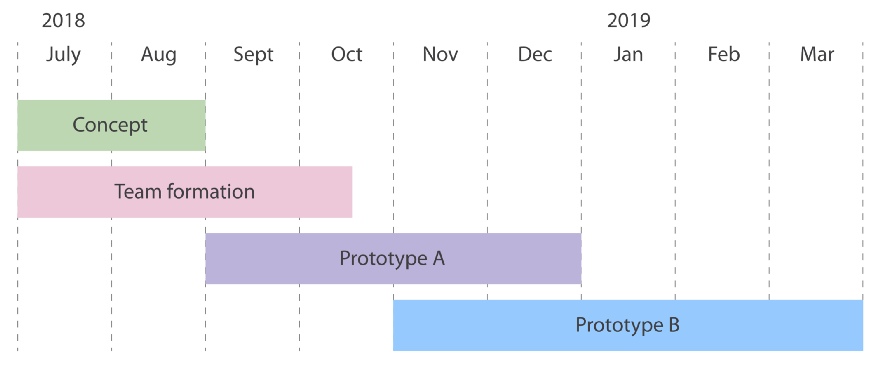

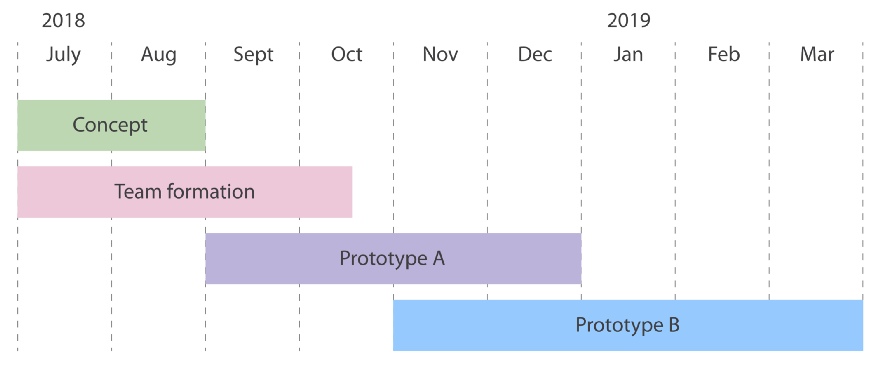

Road map

In July we developed the concept, assembled a team and began work on prototype A. Prototype A is a car that can drive along waypoints but needs an operator to intervene to avoid obstacles. Prototype B, which we plan to complete by spring 2019, will be able to drive around obstacles without a driver's control.

So what's at the heart of a driverless car?

At its heart are advanced algorithms, data streams, high-performance interfaces and sophisticated equipment. Beyond hardware and software, the car also needs server and client applications. This technological heart is open: we rely not only on the StarLine team's initiative but also on help from the open-source community.

The main goal of the StarLine unmanned vehicle is to make people's lives safer and more comfortable. Technology matters to us, but we believe that technological and scientific knowledge exists to serve people, and this is the true heart of our endeavor. We are approaching the day when road safety will be ensured by machines and people will have more time to devote to what matters to them. If you share our view, we invite you to join us in creating tomorrow's technology.

Gitlab project
