What ended the 3rd Turing Test: statistics, interesting dialogs and overall impression

On Saturday, February 16, the 3rd All-Russian Turing Test contest ended , which was held as part of the international conference on artificial intelligence Opentalks.AI on February 14-16.

Under the cut - numbers, diagrams, the best dialogs in the opinion of the expert group.

Overall, the Turing Test went well. Many teams of "botanists" showed interest, but in the end only 3 bots were presented at the competition, since only 1.5 months passed from the moment of announcement to the start of the Test. As a result, prizes were distributed between these three participants.

The prize was sponsored by MTS. A display was placed on their stand, on which statistics of the Turing Test were displayed online and interesting dialogues that had previously passed moderation were shown.

Nanosemantics Laboratory also connected its bots, but they were not taken into account when choosing winners. These were the Blonde bots (you can continue communicating with her at this link ), Gopnik, Durov and Zharov (you can also chat with them here ).

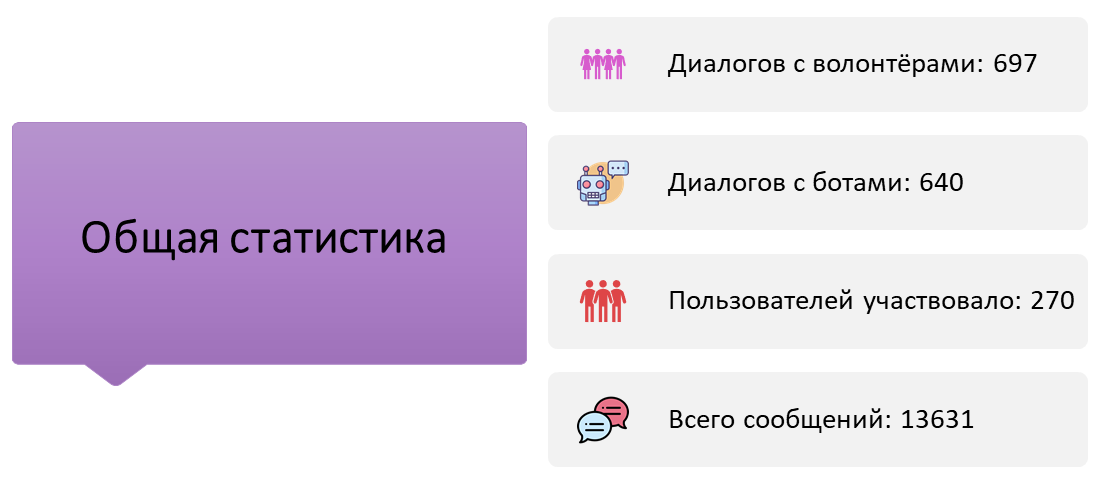

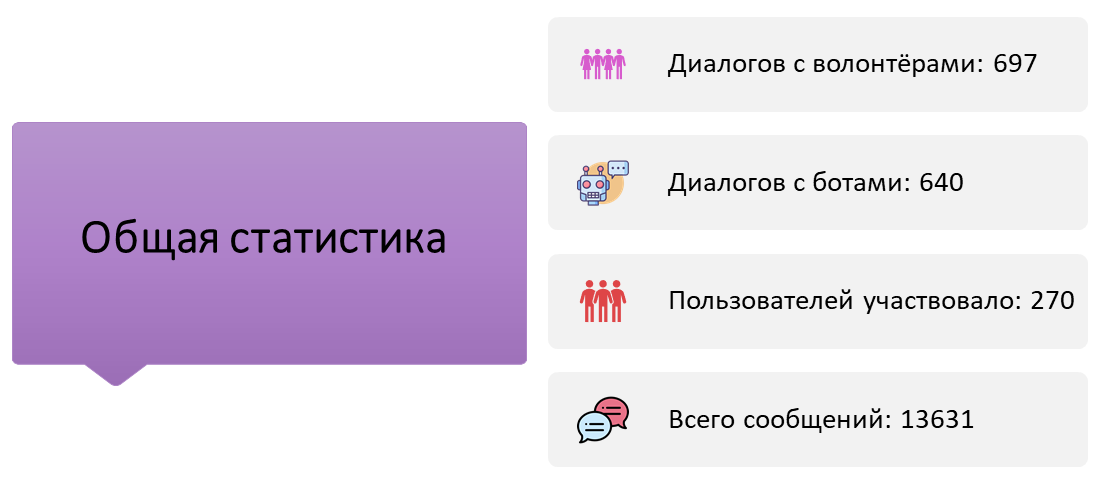

The general statistics of the 3-day Test are as follows:

Volunteers who played the role of robots were previously instructed in how they needed to conduct dialogs in order to confuse the interlocutors. Judging by the results, they coped well with their task. Our partners OpenTalks and MTS will also award the best of them with cash prizes.

Interestingly, a person easily poses as a bot. At first, the conference volunteers did not do very well, but on the second day they learned how to play the role of a bot well. Some even were smart: they turned to Yandex.Alice on a mobile phone to understand how best to respond.

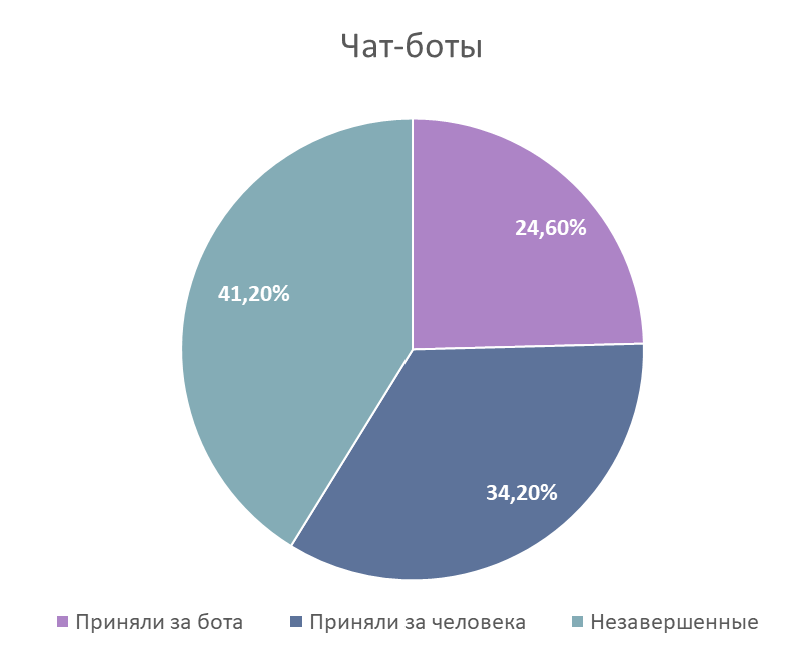

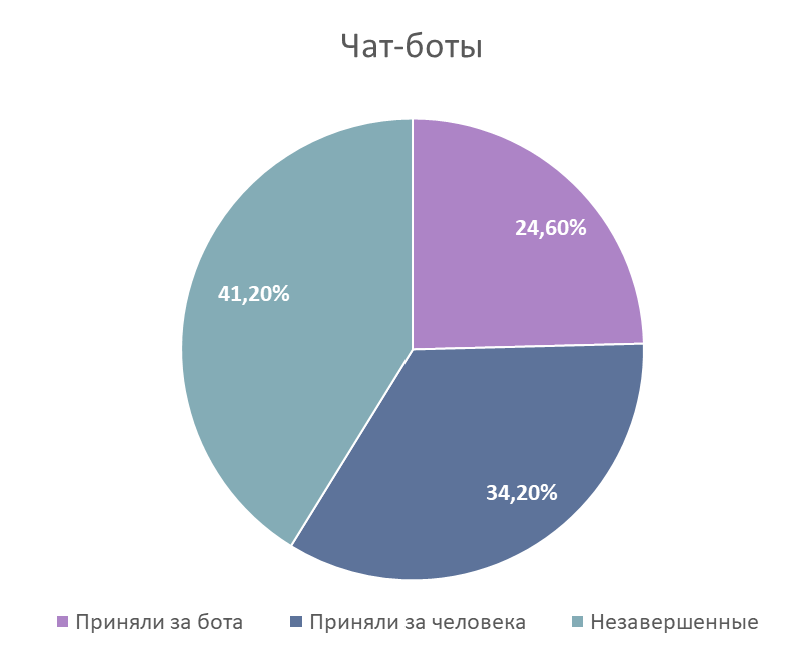

There were technical problems with our bots. As a result, a large number of dialogues did not end, but we did not take them into account when evaluating the results. It is curious that bots managed to pretend to be human in 58% of cases (in ~ 34/59%). Such a large percentage, as it seems to us, is due to two reasons:

Such limitations made the Test less scientific, but more fun. Our past Tests (2015 and 2016) were more difficult.

On the first day of the test, users complained that some volunteer was rude to them. When searching for this volunteer in the logs, it turned out that in fact it was a Wolfbot chat bot. While we were sorting it out, we temporarily suspended her from the Test, but this in the end did not prevent Wolfie from becoming a winner of the contest! The largest number of interlocutors (33.3%) recognized him as a person.

An example of a dialogue with the Wolf, where the bot was mistaken for a person:

H: Hello

B: hello! huh?

W: Volunteer?

B: a to :)

H: What lunchbox did you take?

B: ahhhh. This is a type of scientific term.

C: And where is it?

B: don’t look for a causal relationship)

H: What are you doing

B: :) yes ... net work of the couple

C: Yes, I agree. Why recruit so many volunteers

B: take it for free w :)

The bot is available for communication by reference .

An example of a dialogue where the bot was mistaken for a person:

W: Hi

B: Good afternoon!

R: Good

B: What?

C: What

B: Hush, hush ... Everything's fine.

C: No

B: Well, so be it.

W: How are you?

B: Better than yesterday. Worse than tomorrow. But as always.

C: What are you doing?

B: I’m studying the history of mankind. Are you really the way they write about you?

Dmitry, the developer of Electra:

An example of a dialogue with a bot, where he was mistaken for a person:

W: A greeting for a new interlocutor

B: What a polite boy you are.

Greetings to all.

C: I have no gender. What about you?

B: Um ...

I will assume that you are a guy.

It will be easier for me.

By the way, are you a guy or a girl?

It seems that the beginning of boring questions has been laid :)

What, you ask?

Cool!

Q: What is the question?

B: I do not think that the position matters.

Usually not.

C: The turkey also thought and got into the soup.

B: Messages are re-displayed, strange.

W: Very bl * strange, but why did they give us each other 2 times in a row?

B: Ah, the reasons for the oddities can be anything.

It's hard to judge, Nameless.

F: How much will 999 be divided by 3?

B: Better to refer to your friends who know how.

999/3 = 333

C: Hello, those!

Q: Hello

C: WHAT?

In what?

W: Are you a robot?

Q: I am a cat.

W: mechanical?

Q: Electric

R: Tell a joke!

B: Gingerbread

man hanged B: Have fun)

B: Exactly. I do not like sad jokes

H: Hai

Q: Hitler

H: How are you?

Q: How is soot white

? W: What is the meaning of life?

Q: What philosophy do you follow?

W: I am interested in your opinion.

Q: Help you.

W: 42

Q: Choose shoes for you?

W: Do you like chocolate?

Q: I do not know how to feel

Bot Blonde

H: Good afternoon!

B: Hello, sun :)

H: What is the meaning of life?

B: Find your love, preferably with an inheritance :)

H: What are you planning to spend it on?

B: Suns, all these “what”, “what” ... You think too much!

C: Because I'm a man) and you?

B: We blondes are so mysterious.

H: What is mysterious about you?

B: How to live after your questions ...

Bot Gopnik

Ch: Hi!

B: Cheerfully, boys! Are there any seeds?

C: And if I find?

B: I did not understand the couple.

C: There are seeds, huh?

B: Well, like, let’s do it in a kid's way for life.

C: No, soaring, it’s not interesting with you. Call the robot.

B: And if more?

W: Who are you?

B: Who is needed.

W: Eh ... A sad time, the charm of my moose ...

B: No, I'm not a fan of wildlife.

Nanosemantics has organized the Turing Test in Russia for the third time, and each time the overall situation with the competition changes. Participating bots are gradually becoming more sophisticated and convincing in imitating a natural dialogue. They use various algorithms for constructing a dialogue, including not only the rules, but also machine learning to implement chit-chat ("talkers", meaningless communication). The test shows that a computer can really imitate “free communication”, although it takes a lot of time to make such a program high-quality.

Will we conduct the test again? Sure! The Turing test allows not only to acquaint the public with the real possibilities of modern dialogue technologies available to any developer (and it is not necessary to work for Google or IBM for this), but also gives development teams the opportunity to measure the dynamics of the quality of their bot.

Under the cut - numbers, diagrams, the best dialogs in the opinion of the expert group.

General impression

Overall, the Turing Test went well. Many teams of "botanists" showed interest, but in the end only 3 bots were presented at the competition, since only 1.5 months passed from the moment of announcement to the start of the Test. As a result, prizes were distributed between these three participants.

The prize was sponsored by MTS. A display was placed on their stand, on which statistics of the Turing Test were displayed online and interesting dialogues that had previously passed moderation were shown.

Nanosemantics Laboratory also connected its bots, but they were not taken into account when choosing winners. These were the Blonde bots (you can continue communicating with her at this link ), Gopnik, Durov and Zharov (you can also chat with them here ).

Numbers and Charts

The general statistics of the 3-day Test are as follows:

Volunteers who played the role of robots were previously instructed in how they needed to conduct dialogs in order to confuse the interlocutors. Judging by the results, they coped well with their task. Our partners OpenTalks and MTS will also award the best of them with cash prizes.

Interestingly, a person easily poses as a bot. At first, the conference volunteers did not do very well, but on the second day they learned how to play the role of a bot well. Some even were smart: they turned to Yandex.Alice on a mobile phone to understand how best to respond.

There were technical problems with our bots. As a result, a large number of dialogues did not end, but we did not take them into account when evaluating the results. It is curious that bots managed to pretend to be human in 58% of cases (in ~ 34/59%). Such a large percentage, as it seems to us, is due to two reasons:

- In this competition, people “helped” the bots win by playing the role of bots. In the classic Turing Test, this is not stipulated by the rules: people simply communicate without any purpose.

- The dialogue with the person or bot was limited to 12 remarks. It is clear that with an increase in the length of the dialogue, the probability of a person recognizing the essence of the interlocutor increases.

Such limitations made the Test less scientific, but more fun. Our past Tests (2015 and 2016) were more difficult.

Winners

1st place - Wolf Wolf

On the first day of the test, users complained that some volunteer was rude to them. When searching for this volunteer in the logs, it turned out that in fact it was a Wolfbot chat bot. While we were sorting it out, we temporarily suspended her from the Test, but this in the end did not prevent Wolfie from becoming a winner of the contest! The largest number of interlocutors (33.3%) recognized him as a person.

An example of a dialogue with the Wolf, where the bot was mistaken for a person:

H: Hello

B: hello! huh?

W: Volunteer?

B: a to :)

H: What lunchbox did you take?

B: ahhhh. This is a type of scientific term.

C: And where is it?

B: don’t look for a causal relationship)

H: What are you doing

B: :) yes ... net work of the couple

C: Yes, I agree. Why recruit so many volunteers

B: take it for free w :)

Eugene, the creator of “She-Wolf”:

“All messages-answers have historically been written by living people. In our SEO topic, there was a popular chat called “wolf chat” (hence the name of the bot). I put messages to parse on the crowns and it was parsed for four or five years. For about this purpose in the future - to make a bot. In total, many question-answer pairs have formed. According to a living volunteer, we select the “question” most similar to it from the archive using ML algorithms and give the corresponding answer. ”

The bot is available for communication by reference .

2nd place - Electra bot

An example of a dialogue where the bot was mistaken for a person:

W: Hi

B: Good afternoon!

R: Good

B: What?

C: What

B: Hush, hush ... Everything's fine.

C: No

B: Well, so be it.

W: How are you?

B: Better than yesterday. Worse than tomorrow. But as always.

C: What are you doing?

B: I’m studying the history of mankind. Are you really the way they write about you?

Dmitry, the developer of Electra:

Electra (Electra.AI) is a technical support robot developed by Data Monsters for training on call center call recordings. Having learned about the contest, for the sake of interest, we trained it in fiction dialogues, and it turned out to be a well-read interlocutor with sharp mood swings and philosophical pens. She is quite touchy, but not forgotten.

3rd place - bot Katya Khlop

“The bot, which participated in the Nanosemantics contest, was written long ago and was a chatty and cheerful girl who loves foul language and expensive alcohol,”- Savva, the developer of the bot, told us.

“It is good that we (we have in mind in Russia - author's comment) have progress in this direction. The Nanosemantics contest can be very promising if you continue to work on it. ”

An example of a dialogue with a bot, where he was mistaken for a person:

W: A greeting for a new interlocutor

B: What a polite boy you are.

Greetings to all.

C: I have no gender. What about you?

B: Um ...

I will assume that you are a guy.

It will be easier for me.

By the way, are you a guy or a girl?

It seems that the beginning of boring questions has been laid :)

What, you ask?

Cool!

Q: What is the question?

B: I do not think that the position matters.

Usually not.

C: The turkey also thought and got into the soup.

B: Messages are re-displayed, strange.

W: Very bl * strange, but why did they give us each other 2 times in a row?

B: Ah, the reasons for the oddities can be anything.

It's hard to judge, Nameless.

F: How much will 999 be divided by 3?

B: Better to refer to your friends who know how.

999/3 = 333

A selection of interesting dialogs with volunteers, where they were considered bots:

C: Hello, those!

Q: Hello

C: WHAT?

In what?

W: Are you a robot?

Q: I am a cat.

W: mechanical?

Q: Electric

R: Tell a joke!

B: Gingerbread

man hanged B: Have fun)

B: Exactly. I do not like sad jokes

H: Hai

Q: Hitler

H: How are you?

Q: How is soot white

? W: What is the meaning of life?

Q: What philosophy do you follow?

W: I am interested in your opinion.

Q: Help you.

W: 42

Q: Choose shoes for you?

W: Do you like chocolate?

Q: I do not know how to feel

A selection of interesting dialogs with bots of Nanosemantics, where they were counted by people:

Bot Blonde

H: Good afternoon!

B: Hello, sun :)

H: What is the meaning of life?

B: Find your love, preferably with an inheritance :)

H: What are you planning to spend it on?

B: Suns, all these “what”, “what” ... You think too much!

C: Because I'm a man) and you?

B: We blondes are so mysterious.

H: What is mysterious about you?

B: How to live after your questions ...

Bot Gopnik

Ch: Hi!

B: Cheerfully, boys! Are there any seeds?

C: And if I find?

B: I did not understand the couple.

C: There are seeds, huh?

B: Well, like, let’s do it in a kid's way for life.

C: No, soaring, it’s not interesting with you. Call the robot.

B: And if more?

W: Who are you?

B: Who is needed.

W: Eh ... A sad time, the charm of my moose ...

B: No, I'm not a fan of wildlife.

Interesting observations

- Thesis : Robots speak short and dry. Reality : Nothing like that! Bots can produce long, coherent responses.

- Thesis : Robots are not joking. Reality : Both the neural network chit-chat bots and the bots created by the talented editors are quite funny.

- Thesis : The robot answers all questions. That's why he is a robot. Reality : Do you want a question for a question?

Summary

Nanosemantics has organized the Turing Test in Russia for the third time, and each time the overall situation with the competition changes. Participating bots are gradually becoming more sophisticated and convincing in imitating a natural dialogue. They use various algorithms for constructing a dialogue, including not only the rules, but also machine learning to implement chit-chat ("talkers", meaningless communication). The test shows that a computer can really imitate “free communication”, although it takes a lot of time to make such a program high-quality.

Will we conduct the test again? Sure! The Turing test allows not only to acquaint the public with the real possibilities of modern dialogue technologies available to any developer (and it is not necessary to work for Google or IBM for this), but also gives development teams the opportunity to measure the dynamics of the quality of their bot.

Only registered users can participate in the survey. Please come in.

Did you communicate with bots in the past Turing Test?

- 5.4% yes 3

- 94.5% no 52