Paul Graham: What I Learned from Hacker News


February 2009

Hacker News turned two years old last week. It was initially meant to be a side project: an application for honing Arc, and a place where current and future Y Combinator founders could exchange news. It has grown bigger and taken more of my time than I expected, but I don't regret it, because I have learned a lot from working on it.

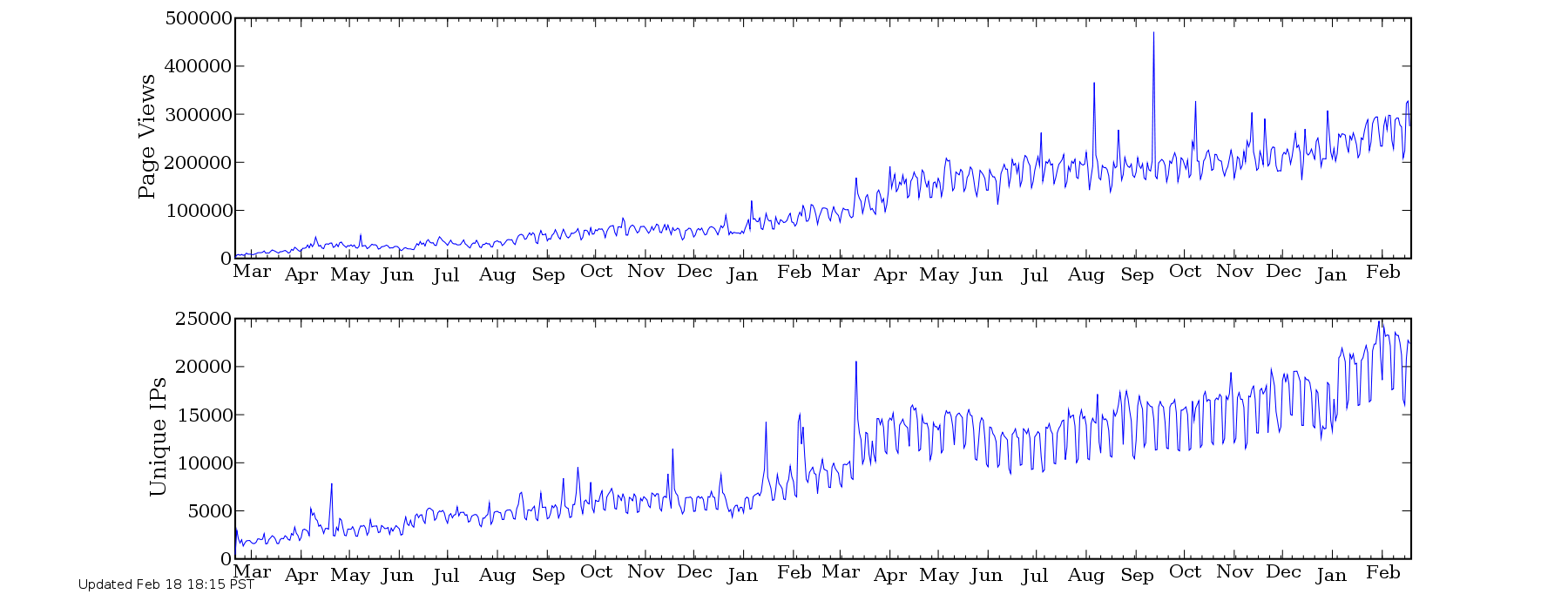

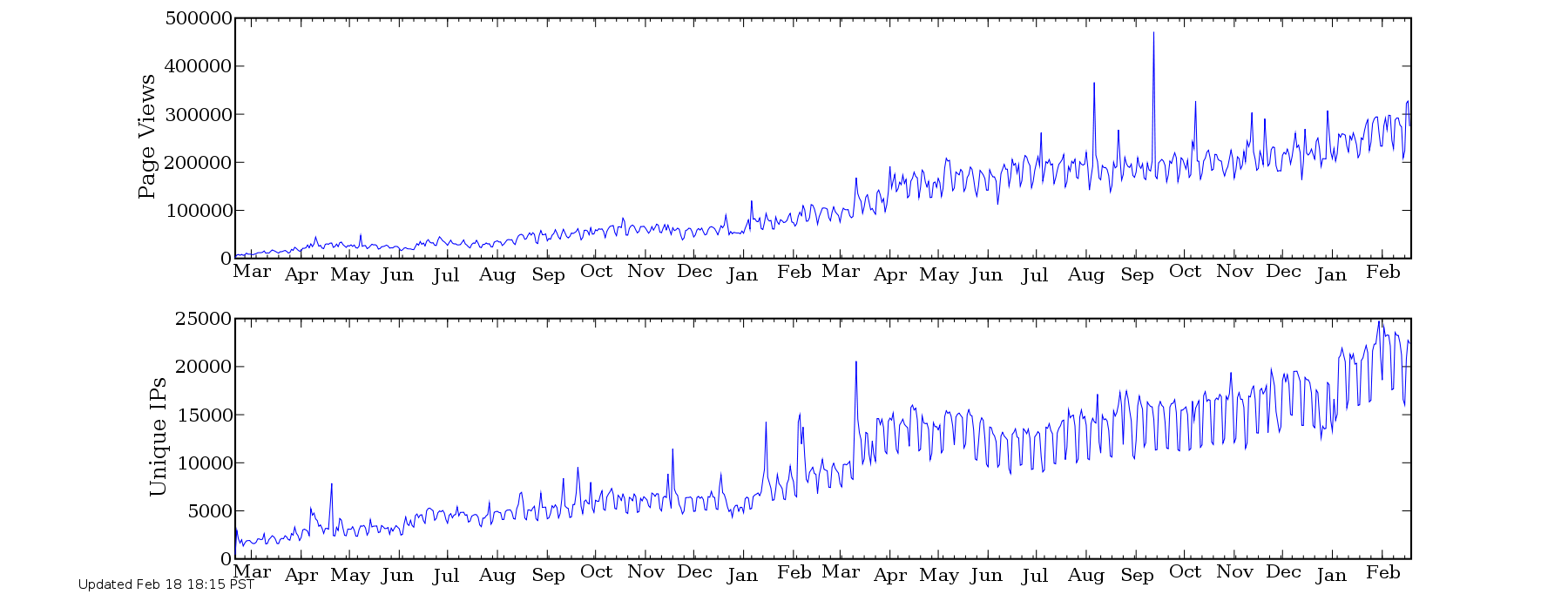


Growth

When we launched in February 2007, weekday traffic was about 1,600 unique visitors a day. It has since grown to about 22,000.

This growth rate is a little higher than I would like. I do want the site to grow, because a site that isn't growing at least slowly is probably already dead. But I wouldn't want it to get as big as Digg or Reddit, mainly because that would dilute the character of the site, but also because I don't want to spend all my time on scaling.

I already have enough problems with that. Remember that the original motivation for HN was to test a new programming language, and one focused on experimenting with language design rather than on performance. Every time the site got slow, I fortified myself by recalling McIlroy and Bentley's famous quote

The key to performance is elegance, not battalions of special cases.

and looked for the bottleneck I could remove with the least code. So far I have been able to keep up, in the sense that performance has stayed consistently mediocre despite 14-fold growth. I don't know what I'll do next, but I'll probably think of something.

That is my attitude to the site in general. Hacker News is an experiment, and an experiment in a very young field. Sites of this type are only a few years old, and Internet conversation as a whole is only a few decades old, so we have probably discovered only a fraction of what we eventually will.

That is why I am so optimistic about HN. When a technology is this young, the existing solutions are usually terrible, which means it must be possible to do much better, which in turn means that many problems that seem intractable aren't. That includes, I hope, the problem that has plagued so many communities: being ruined by growth.

Dilution

Users have worried about this since the site was a few months old. So far these alarms have been false, but they may not always be. Dilution is a hard problem, but probably a solvable one: it doesn't mean much that open conversations have “always” been destroyed by growth when “always” means 20 instances.

But it is important to remember that we are trying to solve a new problem, because that means we will have to try new things, most of which probably won't work. A couple of weeks ago, I tried displaying the names of the users with the highest average comment scores in orange. [1] It was a mistake. Suddenly a culture that had been more or less united was split into haves and have-nots. I didn't realize how united the culture had been until I saw it divided. It was painful to watch. [2]

So orange usernames won't be back. (Sorry about that.) But there will be other ideas in the future that seem just as broken, and the ones that turn out to work will probably seem as broken as the ones that don't.

Probably the most important thing I have learned about dilution is that it is measured more in behavior than in users. It is bad behavior you want to keep out, more than bad people. User behavior turns out to be surprisingly malleable: if people are expected to behave well, they tend to, and vice versa.

Of course, forbidding bad behavior does tend to keep bad people away, because they feel uncomfortably constrained in a place where they have to behave well. But this way of keeping them out is gentler, and probably more effective, than overt barriers.

It is pretty clear now that the broken windows theory applies to community sites as well. The theory is that minor forms of bad behavior encourage worse ones: a neighborhood with lots of graffiti and broken windows becomes one where robberies occur. I was living in New York when Giuliani introduced the reforms that made the theory famous, and the transformation was remarkable. And I was a Reddit user when the opposite happened there, and the transformation was just as dramatic.

I am not criticizing Steve and Alexis. What happened to Reddit wasn't the result of neglect. From the start they had a policy of censoring nothing except spam. Reddit also had different goals from Hacker News: it was a startup, not a side project, and its goal was to grow as fast as possible. Combine rapid growth with zero censorship and you get a free-for-all. But I don't think they would do much differently if they could do it again. Measured by traffic, Reddit is much more successful than Hacker News.

But what happened to Reddit won't inevitably happen to HN. There are several local maxima. There can be free-for-all places and more thoughtful places, just as in the real world, and people will behave differently depending on which kind they are in, just as they do in the real world.

I have seen this in practice. I have seen people cross-posting to Reddit and Hacker News who took the trouble to write two versions: a flame for Reddit and a more restrained version for HN.

Submissions

There are two main kinds of problems a site like Hacker News needs to avoid: bad stories and bad comments. So far the danger from bad stories seems smaller. The stories on the front page now are still roughly the ones that would have been there when HN started.

I once thought I would have to weight votes to keep junk off the front page, but so far I haven't had to. I wouldn't have predicted that the front page would hold up so well, and I'm still not sure why it has. Perhaps only the more thoughtful users care enough to submit and upvote links, so the marginal cost of one random new user approaches zero. Or perhaps the front page protects itself by advertising what kind of submission is expected.

The most dangerous thing for the front page is material that is too easy to upvote. If someone proves a new theorem, the reader has to do some work to decide whether it deserves an upvote. A funny cartoon takes less. A rant with a rallying cry for a title takes zero, because people upvote it without even reading it.

This is what I call the Fluff Principle: on a user-voted news site, the links that are easiest to judge will take over unless you take specific measures to prevent it.

Hacker News has two kinds of protection against fluff. The most common kinds of fluff links are banned as off-topic: pictures of kittens, political diatribes, and the like are explicitly banned. This keeps out most fluff, but not all of it. Some links are both fluff, in the sense of being very short, and on topic.

There is no single solution to that. If a link is just an empty rant, editors will sometimes kill it even if it is on topic in the sense of being about hacking, because it isn't on topic by the real standard, which is whether it engages one's intellectual curiosity. If the posts on a site are characteristically of this type, I sometimes ban the site, which means new material from that URL is automatically killed. If a post has a linkbait title, editors sometimes rephrase it to be more matter-of-fact. This is especially necessary for links whose titles are rallying cries, because otherwise they become implicit “vote this up if you believe such-and-such” posts, which are the most extreme form of fluff.

The techniques for dealing with links have to evolve, because the links themselves do. The existence of aggregators has already affected what they aggregate. Writers now deliberately write things to draw traffic from aggregators, sometimes even from specific ones. (No, the irony of this statement is not lost on me.) Then there are more sinister mutations, like linkjacking: posting a paraphrase of someone else's article and submitting that instead of the original. These can get a lot of upvotes, because much of what is good in the original survives; in fact, the closer the paraphrase is to plagiarism, the more survives. [3]

I think it is important that a site that kills submissions gives users a way to see what was killed if they want to. That keeps editors honest and, just as importantly, makes users confident that they would know if the editors stopped being honest. HN users can do this by turning on the showdead setting in their profile. [4]
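A minimal sketch of the two mechanisms described in this section, assuming a simple in-memory model: submissions from a banned site are marked dead on arrival, and dead items stay hidden unless the reader has turned on showdead. The `Submission` class, the `BANNED_SITES` set, and the `submit`/`visible` functions are hypothetical names for illustration, not HN's actual code.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical ban list; the domain uses the reserved .example TLD.
BANNED_SITES = {"linkjacker.example"}


@dataclass
class Submission:
    title: str
    url: str
    dead: bool = False


def submit(title: str, url: str, banned: set[str] = BANNED_SITES) -> Submission:
    """Create a submission, auto-killing it if its host is on the ban list."""
    host = (urlparse(url).hostname or "").removeprefix("www.")
    return Submission(title, url, dead=host in banned)


def visible(items: list[Submission], showdead: bool = False) -> list[Submission]:
    """Hide dead items unless the reader has opted in with showdead."""
    return [s for s in items if showdead or not s.dead]


killed = submit("Paraphrased post", "https://www.linkjacker.example/copy")
kept = submit("Original essay", "https://blog.example/essay")
print([s.title for s in visible([killed, kept])])                 # ['Original essay']
print([s.title for s in visible([killed, kept], showdead=True)])  # ['Paraphrased post', 'Original essay']
```

Matching on the exact host after stripping `www.` is a simplification; a real ban list would need a policy for other subdomains. Killed items are kept rather than deleted, which is what makes an opt-in view like showdead possible.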

Comments

Bad comments seem to be a harder problem than bad submissions. While the quality of links on the front page hasn't changed much, the quality of the median comment may have declined somewhat.

There are two main kinds of badness in comments: meanness and stupidity. The two overlap a lot (mean comments are disproportionately likely to be stupid as well), but the strategies for dealing with them are different. Meanness is easier to control. You can have rules saying users shouldn't be mean, and if you enforce them, it seems possible to keep meanness in check.

Keeping stupidity under control is harder, perhaps because stupidity is not so easy to recognize. Mean people are more likely to know they are being mean than stupid people are to know they are being stupid.

The most dangerous form of stupid comment is not the long but mistaken argument, but the dumb joke. Long but mistaken arguments are actually quite rare. There is a strong correlation between a comment's quality and its length; if you wanted to compare the quality of comments on community sites, average comment length would be a good predictor. The cause is probably human nature rather than anything specific to comment threads. Probably stupidity simply takes the form of having few ideas more often than of having wrong ones.

Whatever the cause, stupid comments tend to be short. And since it is hard to write a short comment that stands out for the amount of information it conveys, people try to stand out by being funny instead. The most tempting format for stupid comments is the supposedly witty put-down, probably because put-downs are the easiest form of humor. [5] So one advantage of forbidding meanness is that it also cuts down on these.

Bad comments are like kudzu: they take over rapidly. Comments have much more effect on new comments than submissions have on new submissions. If someone submits a lame article, the other submissions don't become lame as a result. But if someone posts a stupid comment in a thread, it sets the tone for the area around it. People reply to dumb jokes with dumb jokes.

Perhaps the solution is to add a delay before people can reply to a comment, with the length of the delay inversely proportional to some prediction of the comment's quality. Then dumb threads would grow more slowly. [6]
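The essay does not say how quality would be predicted or how long the delay should be. The sketch below assumes a predictor that returns a score in (0, 1] and uses a hypothetical base delay of 600 seconds. The delay is `base_delay / quality`, so halving the predicted quality doubles the wait.

```python
def reply_delay_seconds(predicted_quality: float,
                        base_delay: float = 600.0,
                        floor: float = 0.05) -> float:
    """Seconds to wait before replies open, inversely proportional to quality.

    predicted_quality is assumed to lie in (0, 1]; values below `floor` are
    clamped so that a zero prediction does not cause division by zero.
    """
    q = max(predicted_quality, floor)
    return base_delay / q


def can_reply(posted_at: float, now: float, predicted_quality: float) -> bool:
    """True once the quality-dependent delay has elapsed since posting."""
    return now - posted_at >= reply_delay_seconds(predicted_quality)


print(reply_delay_seconds(1.0))   # 600.0  (good comment: 10 minutes)
print(reply_delay_seconds(0.25))  # 2400.0 (predicted-dumb comment: 40 minutes)
```

The floor is a design choice. Without it, a comment predicted to be worthless could never be answered, and it would be effectively killed rather than slowed down.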

People

I notice that most of the techniques I have described are conservative: they aim to preserve the character of the site rather than to improve it. I don't think that is a bias of mine; it is due to the shape of the problem. Hacker News had the good luck to start out good, so in this case it is literally a matter of preservation. But I think this principle applies to sites with different origins as well.

The good things on a community site come from people more than from technology; technology comes into play mainly in preventing bad things. Technology can certainly improve discussion; nested comments do, for example. But I would rather use a site with primitive features and smart, nice users than a more advanced one used only by idiots and trolls.

So the most important thing a community site can do is attract the kind of people it wants. A site trying to be as big as possible wants to attract everyone. But a site aimed at a particular kind of user has to attract just those users and, just as importantly, repel everyone else. I have made a deliberate effort to do this with HN. The graphic design is as plain as possible, and the site rules discourage dramatic link titles. The goal is that the only thing to interest someone arriving at HN for the first time should be the ideas expressed there.

The downside of tuning a site to attract particular people is that, to those people, it can be too attractive. I know all too well how addictive Hacker News can be. For me, as for many users, it is a kind of virtual town square. When I want to take a break from work, I walk into the square, just as I might walk into Harvard Square or along University Avenue in the physical world. [7] But an online square is more dangerous than a physical one. If I spent half the day wandering around University Avenue, I would notice. I have to walk a mile to get there, and sitting in a cafe feels different from working. But visiting an online forum takes just one click, and superficially feels very much like working. You may be wasting your time, but you are not idle.

Hacker News is definitely useful. I have learned a lot from things I read on HN, and I have written several essays that began as comments there. So I wouldn't want the site to go away. But I would like to be sure it isn't a net drag on productivity. What a disaster it would be to lure thousands of smart people to a site that caused them to waste lots of time. I wish I could be 100% sure that is not a description of HN.

It seems to me that the addictiveness of games and social applications is still a mostly unsolved problem. The situation is like crack in the 1980s: we have invented terrible new addictive things, and we haven't yet developed ways to protect ourselves from them. We will eventually, and this is one of the problems I hope to focus on next.

Notes

[1] I tried ranking users by both average and median comment score, and the average (with the highest score thrown out) seemed to be the more accurate predictor of high quality. The median may be the more accurate predictor of low quality, though.
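To show why dropping the top score matters, the sketch below ranks users three ways: plain mean, mean with the single highest score thrown out, and median. The per-comment scores are made-up illustration data, and `trimmed_average` and `rank_users` are hypothetical helpers, not the ranking code HN used.

```python
from statistics import median


def trimmed_average(scores: list[int]) -> float:
    """Mean comment score with the single highest score thrown out."""
    if len(scores) < 2:
        raise ValueError("need at least two scores to drop the highest one")
    rest = sorted(scores)[:-1]  # drop one copy of the maximum
    return sum(rest) / len(rest)


def rank_users(scores_by_user: dict[str, list[int]], statistic) -> list[str]:
    """Usernames ordered from best to worst by the given statistic."""
    return sorted(scores_by_user,
                  key=lambda user: statistic(scores_by_user[user]),
                  reverse=True)


# Hypothetical per-comment scores: alice has one viral comment, bob is consistent.
scores = {"alice": [1, 2, 3, 50], "bob": [4, 5, 6, 6]}
print(rank_users(scores, lambda s: sum(s) / len(s)))  # ['alice', 'bob'] (plain mean)
print(rank_users(scores, trimmed_average))            # ['bob', 'alice']
print(rank_users(scores, median))                     # ['bob', 'alice']
```

A single outlier is enough to let the plain mean promote a user whose typical comment is weak. Removing the maximum, or using the median, blunts that effect.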

[2] Another thing I learned from this experiment is that if you are going to distinguish between people, you had better be sure you do it right. This is one problem where rapid prototyping doesn't work. Indeed, that is the intellectually honest argument for not distinguishing between different types of people: the reason not to do it is not that everyone is the same, but that it is bad to be wrong and hard to be right.

[3] When I catch egregious linkjacking posts, I replace the URL with that of whatever they copied. Sites that habitually linkjack get banned.

[4] Digg is notorious for its lack of transparency. The root of the problem is not that the people running Digg are especially sneaky, but that they use the wrong algorithm to generate their front page. Instead of bubbling up from the bottom as they get more votes, as on Reddit, stories start at the top and get pushed down by new arrivals.

The reason for the difference is that Digg is derived from Slashdot, while Reddit is derived from Delicious/popular. Digg is Slashdot with voting instead of editors, and Reddit is Delicious/popular with voting instead of bookmarking. (You can still see fossils of their origins in their graphic design.)

Digg's algorithm is very vulnerable to gaming, because any story that makes it onto the front page becomes the new top story. That in turn forces Digg to respond with extreme countermeasures. Many startups have some kind of secret about the tricks they had to resort to in the early days, and I suspect Digg's is the extent to which the top stories were in fact chosen by human editors.
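The toy sketch below contrasts the two front-page orderings described in this note. It is a simplification under stated assumptions. The "push-down" order sorts only by arrival time on the front page. The "bubble-up" order sorts only by votes and ignores the time decay that real rankings apply. The `Story` fields and the example stories are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Story:
    title: str
    votes: int
    promoted_at: int  # when the story reached the front page (larger = later)


def push_down_order(stories: list[Story]) -> list[Story]:
    """Digg-style as described in the note: the latest arrival goes on top and
    pushes earlier stories down, regardless of how many votes they have."""
    return sorted(stories, key=lambda s: s.promoted_at, reverse=True)


def bubble_up_order(stories: list[Story]) -> list[Story]:
    """Reddit-style, simplified: stories rise as they collect votes.
    Real rankings also decay with age; that is left out here."""
    return sorted(stories, key=lambda s: s.votes, reverse=True)


popular = Story("Widely upvoted essay", votes=120, promoted_at=1)
gamed = Story("Story pushed by a voting ring", votes=15, promoted_at=2)
print([s.title for s in push_down_order([popular, gamed])])  # gamed story first
print([s.title for s in bubble_up_order([popular, gamed])])  # popular story first
```

Under push-down ordering, clearing the promotion threshold is enough to reach the top slot. That is why gaming pays off so well there.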

[5] The dialogue on Beavis and Butt-Head consisted largely of these, and when I read comments on really bad sites, I can hear them in their voices.

[6] I suspect most techniques for discouraging stupid comments have yet to be discovered. Xkcd implemented a particularly clever one in its IRC channel: don't allow the same thing to be said twice. Once someone has said “fail,” no one can ever say it again. This would penalize short comments in particular, because they have less room to avoid collisions.
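A minimal sketch of the "never the same thing twice" rule follows. The normalization step is an assumption made here: it lowercases the text, strips punctuation, and collapses whitespace, so that "FAIL!" counts as a repeat of "fail". The note does not say how the xkcd channel compares messages. `NoRepeatFilter` is a hypothetical name.

```python
import hashlib
import re


class NoRepeatFilter:
    """Reject any message whose normalized text has been seen before."""

    def __init__(self) -> None:
        self._seen: set[str] = set()  # digests of every message allowed so far

    @staticmethod
    def _normalize(text: str) -> str:
        text = re.sub(r"[^\w\s]", "", text.lower())  # drop punctuation
        return " ".join(text.split())                 # collapse whitespace

    def allow(self, text: str) -> bool:
        digest = hashlib.sha256(self._normalize(text).encode("utf-8")).hexdigest()
        if digest in self._seen:
            return False
        self._seen.add(digest)
        return True


nrf = NoRepeatFilter()
print(nrf.allow("fail"))   # True: first time anyone has said it
print(nrf.allow("FAIL!"))  # False: same text after normalization
print(nrf.allow("The lock is held across the syscall, so the retry never fires."))  # True
```

Short messages have few possible normalized forms, so they collide quickly. That is why the rule weighs hardest on them.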

Another promising idea is a stupid filter, which is just like a probabilistic spam filter but trained on corpora of stupid and non-stupid comments instead.
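One common way to build a probabilistic spam filter is multinomial naive Bayes with add-one smoothing, and the sketch below applies that technique to comments. The labels, the whitespace tokenizer, and the tiny training set are illustrative assumptions. A usable filter would need far more data and better tokenization.

```python
import math
from collections import Counter

LABELS = ("stupid", "ok")


class StupidFilter:
    """Naive Bayes text classifier, spam-filter style, for comment quality."""

    def __init__(self) -> None:
        self.word_counts = {label: Counter() for label in LABELS}
        self.doc_counts = {label: 0 for label in LABELS}

    @staticmethod
    def _tokens(text: str) -> list[str]:
        return text.lower().split()

    def train(self, text: str, label: str) -> None:
        self.word_counts[label].update(self._tokens(text))
        self.doc_counts[label] += 1

    def prob_stupid(self, text: str) -> float:
        """Posterior probability that `text` is stupid (train both labels first)."""
        vocab = set().union(*(self.word_counts[label] for label in LABELS))
        total_docs = sum(self.doc_counts.values())
        log_scores = {}
        for label in LABELS:
            # Smoothed log prior plus add-one-smoothed log likelihood of each token.
            score = math.log((self.doc_counts[label] + 1) / (total_docs + len(LABELS)))
            n_words = sum(self.word_counts[label].values())
            for tok in self._tokens(text):
                count = self.word_counts[label][tok]
                score += math.log((count + 1) / (n_words + len(vocab)))
            log_scores[label] = score
        # Normalize in log space to avoid underflow on long comments.
        top = max(log_scores.values())
        weights = {label: math.exp(s - top) for label, s in log_scores.items()}
        return weights["stupid"] / sum(weights.values())


sf = StupidFilter()
for text in ["lol fail", "this is fail", "epic fail lol"]:
    sf.train(text, "stupid")
for text in ["the benchmark measures cache misses",
             "this argument ignores the cost of locking"]:
    sf.train(text, "ok")
print(sf.prob_stupid("fail lol") > 0.5)                               # True
print(sf.prob_stupid("cache misses in the locking benchmark") > 0.5)  # False
```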

You may not need to kill bad comments to solve the problem. Comments at the bottom of a long thread are rarely seen, so it may be enough to incorporate a prediction of quality into the comment-sorting algorithm.
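Here is a sketch of that idea under stated assumptions. Each comment's sort key is its votes (plus one, so fresh comments do not all score zero) multiplied by a predicted quality in [0, 1]. The predictor is a deliberately crude, hypothetical stand-in based on word count. It was chosen only because the essay observes that comment length correlates with quality, and any real predictor could be passed in instead.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Comment:
    text: str
    votes: int


def length_quality(text: str, saturate_at: int = 50) -> float:
    """Crude, hypothetical predictor: longer comments score higher, capped at 1."""
    return min(len(text.split()) / saturate_at, 1.0)


def sort_thread(comments: list[Comment],
                predict: Callable[[str], float] = length_quality) -> list[Comment]:
    """Order sibling comments by votes weighted by predicted quality.

    Low-predicted comments are not removed, only moved toward the bottom,
    where comments in long threads are rarely read.
    """
    return sorted(comments, key=lambda c: (c.votes + 1) * predict(c.text), reverse=True)


quip = Comment("fail", votes=3)
answer = Comment(" ".join(["word"] * 30), votes=1)  # placeholder 30-word reply
print([c.votes for c in sort_thread([quip, answer])])  # [1, 3]: the longer reply ranks first
```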

[7] What makes most suburbs so demoralizing is that there is no town center to walk to.

Thanks to Justin Kan, Jessica Livingston, Robert Morris, Alexis Ohanian, Emmett Shear, and Fred Wilson for reading drafts of this.

Translation: Diana Sheremyeva

(Part of the translation is taken from translatedby )
