Back to microservices with Istio. Part 2


Translator's note: The first part of this series introduced Istio and demonstrated it in action. Now we will cover more complex aspects of configuring and using this service mesh, in particular fine-grained routing and network traffic management.

We also remind you that the article uses configurations (Kubernetes and Istio manifests) from the istio-mastery repository.

Traffic management

Istio brings new capabilities to the cluster:

- Dynamic request routing: canary rollouts, A/B testing;

- Load balancing: simple and consistent (hash-based);

- Failure recovery: timeouts, retries, circuit breakers;

- Fault injection: delays, aborted requests, etc.

In the rest of the article, we will demonstrate these features on our sample application and introduce new concepts along the way. The first such concept is DestinationRules (i.e. rules about the destination of traffic/requests; translator's note), which we will use to enable A/B testing.

A/B Testing: DestinationRules in Practice

A/B testing is used when there are two versions of an application (usually differing visually) and we are not 100% sure which one will improve the user experience. So we run both versions simultaneously and collect metrics.

To deploy the second version of the frontend, which we need to demonstrate A/B testing, run the following command:

```bash
$ kubectl apply -f resource-manifests/kube/ab-testing/sa-frontend-green-deployment.yaml
deployment.extensions/sa-frontend-green created
```

The deployment manifest for the "green version" differs in two places:

- The image is based on a different tag, `istio-green`;

- The pods have the label `version: green`.

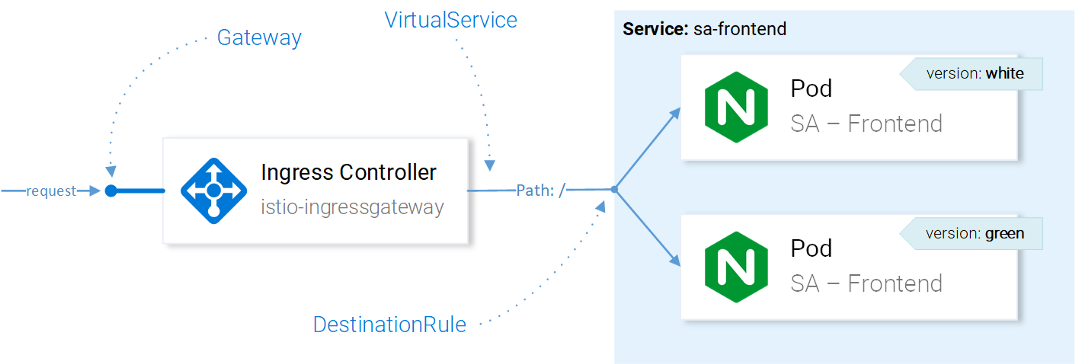

Since both deployments have the label `app: sa-frontend`, requests that the `sa-external-services` virtual service routes to the `sa-frontend` service will be forwarded to all of its instances, and the load will be distributed using the round-robin algorithm. This leads to the following situation:

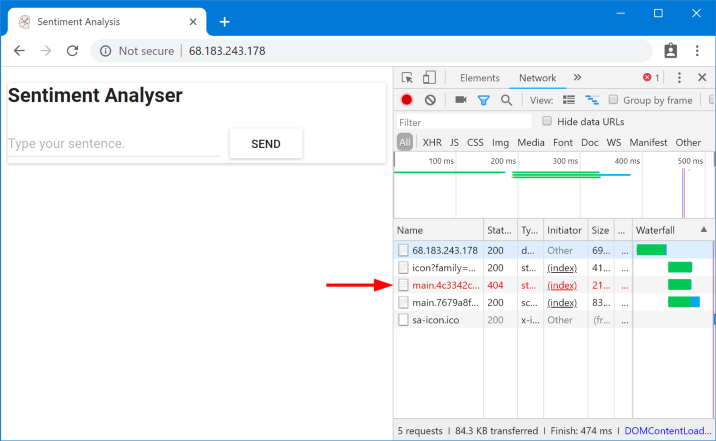

Requested files not found

These files were not found because they have different names in the two versions of the application. Let's verify this:

```bash
$ curl --silent http://$EXTERNAL_IP/ | tr '"' '\n' | grep main
/static/css/main.c7071b22.css
/static/js/main.059f8e9c.js
$ curl --silent http://$EXTERNAL_IP/ | tr '"' '\n' | grep main
/static/css/main.f87cd8c9.css
/static/js/main.f7659dbb.js
```

This means that once `index.html` has been served by one version, the load balancer can send the requests for the static files it references to pods running the other version, where, for obvious reasons, those files do not exist. Therefore, for the application to work, we need to impose a restriction: "the same version of the application that served index.html must also serve subsequent requests." We will achieve this with consistent hash-based load balancing (Consistent Hash Loadbalancing). With it, requests from the same client are sent to the same backend instance, chosen based on a predefined property, for example an HTTP header. This is implemented using DestinationRules.
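To make the idea concrete, here is a minimal Python sketch of consistent hashing over a hash ring. It assumes MD5 as the hash function, 100 virtual points per backend, and hypothetical pod names. Envoy's own ring-hash and Maglev load balancers differ in detail, so this illustrates the technique rather than Istio internals.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    # Map a string to a position on the ring (MD5 chosen only for illustration).
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Consistent hash ring: each backend owns several virtual points on the ring."""

    def __init__(self, backends, replicas=100):
        self._points = []  # sorted list of (position, backend)
        for backend in backends:
            for i in range(replicas):
                self._points.append((_hash(f"{backend}#{i}"), backend))
        self._points.sort()
        self._positions = [pos for pos, _ in self._points]

    def pick(self, header_value: str) -> str:
        # Walk clockwise to the first virtual point at or after the key's position.
        idx = bisect.bisect_left(self._positions, _hash(header_value))
        if idx == len(self._positions):
            idx = 0  # wrap around the ring
        return self._points[idx][1]


# Hypothetical pod names for the two frontend versions.
ring = HashRing(["sa-frontend-pod", "sa-frontend-green-pod"])
# Every request carrying the same "version" header value lands on the same pod.
chosen = ring.pick("yogo")
```

Unlike round-robin, the choice depends only on the header value. As long as the client keeps sending the same header, `index.html` and the static files it references come from the same version. Removing a backend only remaps the keys that pointed at that backend.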

DestinationRules

Once a VirtualService has routed a request to the desired service, DestinationRules let us define the policies applied to traffic destined for instances of that service:

Traffic management with Istio resources

Note: the effect of Istio resources on network traffic is simplified here for ease of understanding. To be precise, the decision about which instance receives the request is made by Envoy in the Ingress Gateway, configured through the CRDs.

With DestinationRules, we can configure load balancing to use consistent hashing, so that the same user is guaranteed to get responses from the same service instance. The following configuration does this (destinationrule-sa-frontend.yaml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: sa-frontend
spec:
  host: sa-frontend
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpHeaderName: version   # 1
```

- A hash will be generated based on the contents of the `version` HTTP header.

Apply the configuration with the following command:

```bash
$ kubectl apply -f resource-manifests/istio/ab-testing/destinationrule-sa-frontend.yaml
destinationrule.networking.istio.io/sa-frontend created
```

Now run the command below and make sure that, when you specify the `version` header, you consistently get matching files:

```bash
$ curl --silent -H "version: yogo" http://$EXTERNAL_IP/ | tr '"' '\n' | grep main
```

Note: to set different header values and test the results directly in the browser, you can use this extension for Chrome (or this one for Firefox; translator's note).

DestinationRules offer more load-balancing options than this one; see the official documentation for details.
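If you prefer to run the same header check from a script, here is a minimal sketch that uses only the Python standard library. It builds the request with the `version` header and filters the `main` asset paths the same way `tr '"' '\n' | grep main` does. The function names are hypothetical, and the header value "yogo" comes from the curl example.

```python
import urllib.request


def build_request(external_ip: str, version: str) -> urllib.request.Request:
    # Equivalent of: curl -H "version: <version>" http://$EXTERNAL_IP/
    return urllib.request.Request(f"http://{external_ip}/", headers={"version": version})


def main_assets(html: str) -> list:
    # Equivalent of: tr '"' '\n' | grep main
    return [line for line in html.replace('"', "\n").split("\n") if "main" in line]


def fetch_main_assets(external_ip: str, version: str) -> list:
    # Performs a real HTTP request against the ingress gateway.
    with urllib.request.urlopen(build_request(external_ip, version), timeout=10) as resp:
        return main_assets(resp.read().decode("utf-8"))
```

For example, calling `fetch_main_assets(os.environ["EXTERNAL_IP"], "yogo")` several times while the DestinationRule is applied should keep returning the same pair of file names.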

Before exploring VirtualService further, we will remove the "green version" of the application and the corresponding destination rule by running the following commands:

```bash
$ kubectl delete -f resource-manifests/kube/ab-testing/sa-frontend-green-deployment.yaml
deployment.extensions "sa-frontend-green" deleted
$ kubectl delete -f resource-manifests/istio/ab-testing/destinationrule-sa-frontend.yaml
destinationrule.networking.istio.io "sa-frontend" deleted
```

Mirroring: Virtual Services in Practice

Shadowing, or Mirroring, is used when we want to test a change in production without affecting end users. To do this, we duplicate ("mirror") requests to a second instance that contains the change and observe the consequences. Put simply, this is for when your colleague picks the most critical issue and submits a pull request that is such a huge ball of mud that nobody can actually review it.

To test this scenario in action, create a second instance of SA-Logic with bugs (

buggy) by running the following command:$ kubectl apply -f resource-manifests/kube/shadowing/sa-logic-service-buggy.yaml

deployment.extensions/sa-logic-buggy createdAnd now we execute the command to make sure that all instances of c also

app=sa-logichave labels with the corresponding versions:$ kubectl get pods -l app=sa-logic --show-labels

NAME READY LABELS

sa-logic-568498cb4d-2sjwj 2/2 app=sa-logic,version=v1

sa-logic-568498cb4d-p4f8c 2/2 app=sa-logic,version=v1

sa-logic-buggy-76dff55847-2fl66 2/2 app=sa-logic,version=v2

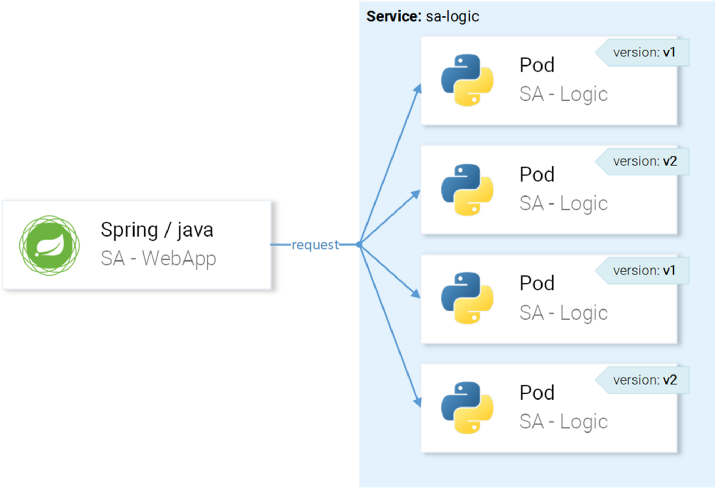

sa-logic-buggy-76dff55847-kx8zz 2/2 app=sa-logic,version=v2The service

sa-logicis aimed at pods with a label app=sa-logic, so all requests will be distributed between all instances:

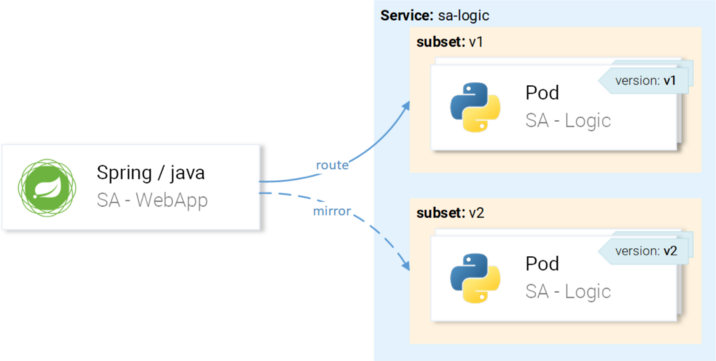

... but we want requests to be sent to instances with version v1 and mirrored to instances with version v2:

We will achieve this with a VirtualService combined with a DestinationRule. The rule defines the subsets, and the VirtualService routes to a specific subset.

Defining Subsets in Destination Rules

Subsets are defined by the following configuration (sa-logic-subsets-destinationrule.yaml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: sa-logic
spec:
  host: sa-logic    # 1
  subsets:
  - name: v1        # 2
    labels:
      version: v1   # 3
  - name: v2
    labels:
      version: v2
```

- The host (`host`) specifies that this rule applies only when traffic is routed to the `sa-logic` service;
- The subset names (`name`) are used when routing to instances of the subset;
- The labels (`labels`) define the key-value pairs that instances must match to become part of the subset.
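As a rough illustration of how a subset's labels pick out pods, here is a small Python model that uses the pod list printed by `kubectl get pods --show-labels` above. It assumes plain equality matching on every key/value pair, in the style of Kubernetes label selectors. The helper names are hypothetical and this is not Istio's code.

```python
def parse_labels(text):
    # "app=sa-logic,version=v1" -> {"app": "sa-logic", "version": "v1"}
    return dict(pair.split("=", 1) for pair in text.split(","))


def select_subset(pods, subset_labels):
    """Names of pods whose labels include every key/value pair of the subset."""
    return sorted(
        name
        for name, labels in pods.items()
        if all(labels.get(key) == value for key, value in subset_labels.items())
    )


# Pod names and labels copied from the kubectl output above.
pods = {
    "sa-logic-568498cb4d-2sjwj": parse_labels("app=sa-logic,version=v1"),
    "sa-logic-568498cb4d-p4f8c": parse_labels("app=sa-logic,version=v1"),
    "sa-logic-buggy-76dff55847-2fl66": parse_labels("app=sa-logic,version=v2"),
    "sa-logic-buggy-76dff55847-kx8zz": parse_labels("app=sa-logic,version=v2"),
}

# The two subsets declared in sa-logic-subsets-destinationrule.yaml.
subsets = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}
```

`select_subset(pods, subsets["v2"])` returns only the two `sa-logic-buggy` pods. The Service selector `app=sa-logic` on its own matches all four pods.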

Apply the configuration with the following command:

```bash
$ kubectl apply -f resource-manifests/istio/shadowing/sa-logic-subsets-destinationrule.yaml
destinationrule.networking.istio.io/sa-logic created
```

Now that the subsets are defined, we can move on and configure a VirtualService that applies rules to requests to `sa-logic` so that they are:

- routed to the `v1` subset;

- mirrored to the `v2` subset.

The following manifest achieves this (sa-logic-subsets-shadowing-vs.yaml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: sa-logic
spec:
  hosts:
  - sa-logic
  http:
  - route:
    - destination:
        host: sa-logic
        subset: v1
    mirror:
      host: sa-logic
      subset: v2
```

No explanation is needed here, so let's just see it in action:

```bash
$ kubectl apply -f resource-manifests/istio/shadowing/sa-logic-subsets-shadowing-vs.yaml
virtualservice.networking.istio.io/sa-logic created
```

Generate load by running this command:

$ while true; do curl -v http://$EXTERNAL_IP/sentiment \

-H "Content-type: application/json" \

-d '{"sentence": "I love yogobella"}'; \

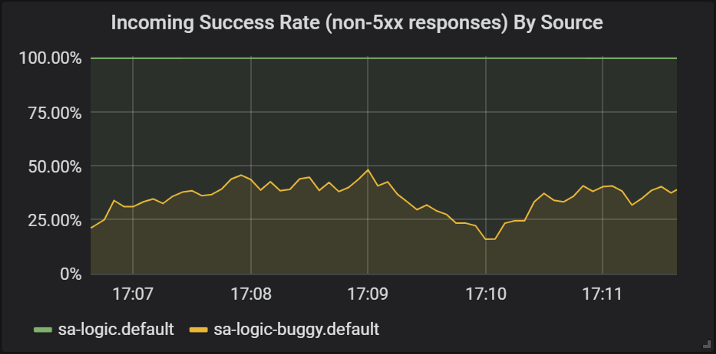

sleep .8; doneLet's look at the results in Grafana, where you can see that the version with bugs (

buggy) crashes for ~ 60% of requests, but none of these crashes affects end users, because they have a working service.

Success of responses of different versions of the sa-logic service

Here we see for the first time how a VirtualService is applied to the Envoy proxies of our services. When `sa-web-app` makes a request to `sa-logic`, the request passes through its Envoy sidecar. Through the VirtualService, that sidecar is configured to route the request to the v1 subset and mirror it to the v2 subset of the `sa-logic` service. I know: you may already be thinking that Virtual Services are simple. In the next section, we will expand on that by showing that they are also truly magnificent.
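The mirroring behavior just described can be modeled in a few lines of Python. The caller always gets the response from the primary subset, while a copy of the request goes to the mirror subset and the mirror's response or failure is discarded. The handler functions are hypothetical stand-ins for the v1 and v2 pods. Envoy sends the mirrored copy asynchronously (fire-and-forget), which this sequential sketch simplifies.

```python
def route_with_mirror(request, primary, mirror, mirror_log):
    """Return the primary subset's response; send a copy to the mirror and discard its result."""
    response = primary(request)
    try:
        # Sequential here for clarity; the mirror's outcome is only recorded, never returned.
        mirror_log.append(("ok", mirror(request)))
    except Exception as exc:
        # A failing mirror (the buggy v2) never affects what the caller receives.
        mirror_log.append(("error", repr(exc)))
    return response


# Hypothetical stand-ins for the sa-logic v1 and v2 (buggy) pods.
def sa_logic_v1(request):
    return {"status": 200, "sentence": request["sentence"]}


def sa_logic_v2_buggy(request):
    raise RuntimeError("500 Internal Server Error")
```

This is why the roughly 60% failure rate of the buggy version shows up in Grafana but never reaches the users of `sa-web-app`.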

Canary rollouts

Canary deployment is the process of rolling out a new version of an application to a small number of users. It is used to verify that the release has no problems, and only then, once we are confident in its quality, to roll it out to a wider audience.

To demonstrate canary rollouts, we will continue working with the `buggy` subset of `sa-logic`. Let's not waste time: we will immediately send 20% of users to the buggy version (it will represent our canary rollout) and the remaining 80% to the normal service. To do this, apply the following VirtualService (sa-logic-subsets-canary-vs.yaml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: sa-logic
spec:
  hosts:
  - sa-logic
  http:
  - route:
    - destination:
        host: sa-logic
        subset: v1
      weight: 80   # 1
    - destination:
        host: sa-logic
        subset: v2
      weight: 20   # 1
```

- The weight (`weight`) determines the percentage of requests that will be sent to a destination or to a subset of a destination.

Update the previous VirtualService configuration for `sa-logic` with the following command:

```bash
$ kubectl apply -f resource-manifests/istio/canary/sa-logic-subsets-canary-vs.yaml
virtualservice.networking.istio.io/sa-logic configured
```

... and immediately see that some of the requests fail:

```bash
$ while true; do \
  curl -i http://$EXTERNAL_IP/sentiment \
  -H "Content-type: application/json" \
  -d '{"sentence": "I love yogobella"}' \
  --silent -w "Time: %{time_total}s \t Status: %{http_code}\n" \
  -o /dev/null; sleep .1; done
Time: 0.153075s Status: 200
Time: 0.137581s Status: 200
Time: 0.139345s Status: 200
Time: 30.291806s Status: 500
```

VirtualServices enable canary rollouts. In this case, we limited the potential impact of problems to 20% of the user base. Excellent! Now, whenever we are not sure of our code (in other words, always...), we can use mirroring and canary rollouts.
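To see why the weights bound the impact, here is a quick Python simulation of the 80/20 split. It assumes that each request independently picks a subset with probability proportional to its weight, which simplifies Envoy's weighted-cluster selection. The seed is fixed only so the run is reproducible.

```python
import random
from collections import Counter


def pick_subset(weights, rng):
    # weights: {"v1": 80, "v2": 20}; choose one subset proportionally to its weight.
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights, requests, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    return Counter(pick_subset(weights, rng) for _ in range(requests))


counts = simulate({"v1": 80, "v2": 20}, 10_000)
share_v2 = counts["v2"] / 10_000  # close to 0.2
```

Only the requests that land on v2 can hit its bugs, so at most about a fifth of the traffic is exposed to the canary.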

Timeouts and Retries

But bugs are not always in the code. The first item in the list of "8 fallacies of distributed computing" is the mistaken belief that "the network is reliable." In reality, the network is not reliable, and that is why we need timeouts and retries.

For the demonstration, we will continue to use the buggy version of sa-logic (`buggy`), where random failures will simulate network unreliability. Our buggy service has a 1/3 chance of taking too long to respond, a 1/3 chance of ending with an Internal Server Error, and a 1/3 chance of returning the page successfully.

In order to mitigate the consequences of such problems and make life better for users, we can:

- add a timeout if the service takes more than 8 seconds to respond,

- retry if the request fails.

To implement this, we will use the following resource definition (sa-logic-retries-timeouts-vs.yaml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: sa-logic
spec:
  hosts:
  - sa-logic
  http:
  - route:
    - destination:
        host: sa-logic
        subset: v1
      weight: 50
    - destination:
        host: sa-logic
        subset: v2
      weight: 50
    timeout: 8s           # 1
    retries:
      attempts: 3         # 2
      perTryTimeout: 3s   # 3
```

- The request timeout is set to 8 seconds;

- Failed requests are retried up to 3 times;

- Each attempt is considered failed if the response takes longer than 3 seconds.

This is an optimization: the user never has to wait more than 8 seconds, and in case of failure we make up to three new attempts to get a response, which increases the chance of success.
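Here is a simplified Python model of how the three settings interact, using the values from the manifest. It makes several assumptions. `attempts` counts retries after the first try, as Envoy's retry policy does. Any 5xx or per-try timeout is treated as retryable, although in Istio the retryable conditions are actually controlled by `retryOn`. A timed-out request is reported as 504. Backoff between tries is ignored.

```python
def call_with_retries(outcomes, attempts=3, per_try_timeout=3.0, timeout=8.0):
    """Simulate one request routed with a timeout and a retry policy.

    outcomes: list of (duration_s, status) pairs, one per try, describing how the
    backend would behave if given unlimited time.
    Returns (final_status, elapsed_s, tries_used).
    """
    elapsed = 0.0
    status = 504
    tries = 0
    for duration, status in outcomes:
        tries += 1
        # Each try is cut off after per_try_timeout seconds and counts as a failure.
        if duration > per_try_timeout:
            duration, status = per_try_timeout, 504
        # The overall timeout caps the total time spent across all tries.
        if elapsed + duration > timeout:
            return 504, timeout, tries
        elapsed += duration
        if status < 500:
            return status, elapsed, tries
        # Stop once the first try plus `attempts` retries have been used.
        if tries == attempts + 1:
            break
    return status, elapsed, tries


# One slow response, one 500, then a success: the user gets 200 after about 3.25 s.
result = call_with_retries([(30.0, 200), (0.1, 500), (0.15, 200)])
```

With these values, two slow tries already take 6 seconds, and the third is cut short at the 8-second mark. In the worst case, the overall timeout, not the retry count, is what bounds the user's wait.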

Apply the updated configuration with the following command:

$ kubectl apply -f resource-manifests/istio/retries/sa-logic-retries-timeouts-vs.yaml

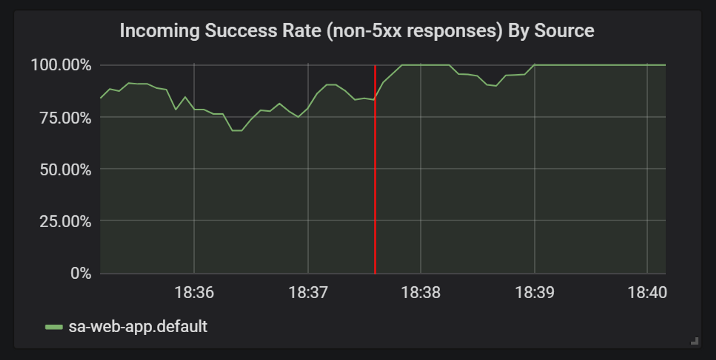

virtualservice.networking.istio.io/sa-logic configuredAnd check in the graphs of Grafana that the number of successful answers has exceeded:

Improvements in the statistics of successful answers after adding timeouts and retries

Before moving on to the next section (or rather, to the next part of the article, since there will be no more hands-on experiments in this one; translator's note), remove sa-logic-buggy and the VirtualService by running the following commands:

```bash
$ kubectl delete deployment sa-logic-buggy
deployment.extensions "sa-logic-buggy" deleted
$ kubectl delete virtualservice sa-logic
virtualservice.networking.istio.io "sa-logic" deleted
```

Circuit Breaker and Bulkhead Patterns

These are two important microservice architecture patterns that help make services self-healing.

Circuit Breaker is used to stop sending requests to a service instance that is considered unhealthy and to let it recover, while client requests are redirected to healthy instances of that service (which increases the percentage of successful responses). (Translator's note: a more detailed description of the pattern can be found, for example, here.)
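In Istio, this pattern is typically configured through the `outlierDetection` settings of a DestinationRule's traffic policy, which eject misbehaving hosts from the load-balancing pool. The Python sketch below is a simplified, hypothetical model of that idea. A host is ejected after a number of consecutive errors and rejoins the pool after a fixed ejection period. The class name and default values are illustrative and are not Istio's defaults. Envoy's real ejection period also grows with repeated ejections.

```python
class OutlierDetector:
    """Eject a host after N consecutive errors; let it back after a fixed period."""

    def __init__(self, consecutive_errors=3, ejection_seconds=30.0):
        self.consecutive_errors = consecutive_errors
        self.ejection_seconds = ejection_seconds
        self._errors = {}         # host -> current run of consecutive failures
        self._ejected_until = {}  # host -> time at which the host rejoins the pool

    def record(self, host, ok, now):
        if ok:
            self._errors[host] = 0  # any success resets the run
            return
        self._errors[host] = self._errors.get(host, 0) + 1
        if self._errors[host] >= self.consecutive_errors:
            # Trip the breaker for this host and reset its counter.
            self._ejected_until[host] = now + self.ejection_seconds
            self._errors[host] = 0

    def healthy_hosts(self, hosts, now):
        # Only hosts that are not currently ejected receive traffic.
        return [h for h in hosts if self._ejected_until.get(h, 0.0) <= now]
```

While a host is ejected, the load balancer picks only from `healthy_hosts(...)`. Requests go to the remaining instances and the failing one gets time to recover.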

Bulkhead keeps a service failure from spreading to the entire system. For example, suppose service B is broken and another service (a client of service B) keeps making requests to it. As a result, the client will use up its thread pool and will be unable to serve other requests, even those unrelated to service B. (Translator's note: a more detailed description of the pattern can be found, for example, here.)
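In Istio, bulkheads are usually expressed as connection-pool limits in a DestinationRule's traffic policy (`connectionPool`). Here is a minimal Python sketch of the pattern itself. Each downstream dependency gets its own bounded number of concurrent calls, and calls beyond that limit are rejected immediately instead of tying up the caller's threads. The class, dependency names, and limits are hypothetical.

```python
import threading


class Bulkhead:
    """Caps concurrent calls to one dependency; excess calls fail fast."""

    def __init__(self, max_concurrent):
        self._slots = threading.BoundedSemaphore(max_concurrent)

    def call(self, fn, *args):
        # Non-blocking acquire: if every slot is busy, reject right away.
        if not self._slots.acquire(blocking=False):
            raise RuntimeError("bulkhead full: rejecting call")
        try:
            return fn(*args)
        finally:
            self._slots.release()


# One bulkhead per dependency, so a stuck service B cannot starve calls to service C.
bulkheads = {"service-b": Bulkhead(10), "service-c": Bulkhead(10)}
```

When service B hangs, at most 10 of the caller's threads wait on it. Further calls to B fail fast, and calls to C go through their own, untouched pool of slots.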

I will skip the implementation details of these patterns, because they are easy to find in the official documentation, and I really want to get to authentication and authorization, which will be covered in the next part of the article.
