Apocalypse Mathematics: Game Theory and the Cuban Missile Crisis

- Translation

Theory of moves

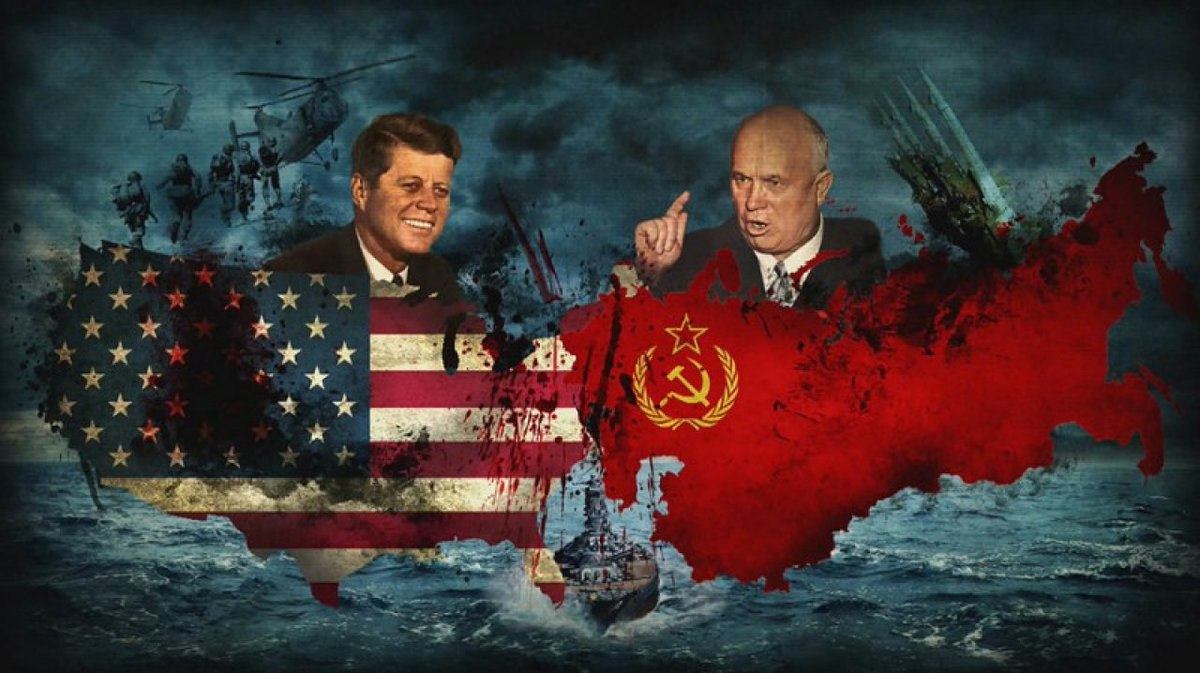

“We're eyeball to eyeball, and I think the other fellow just blinked,” said US Secretary of State Dean Rusk at the height of the Cuban missile crisis in October 1962. He was referring to signals from the Soviet Union that it wanted to defuse the most dangerous nuclear confrontation ever to arise between the two superpowers, a confrontation that many analysts have interpreted as a classic instance of nuclear "Chicken" (in Russian, the analogous game is known as "hawks and doves").

Chicken is the game usually used to model conflicts in which the players are on a collision course. The players may be drivers approaching each other on a narrow road, each of whom can either swerve to avoid a collision or keep going. In the story "Rebel Without a Cause", later made into a film starring James Dean, the drivers were two teenagers who raced not at each other but toward the edge of a cliff. The object of the game was not to be the first to hit the brakes, and so become the "chicken", while also not going over the cliff.

Although the Cuban missile crisis looks like a game of Chicken, in reality it is poorly modeled by that game. Another game describes the actions of the US and Soviet leaders more accurately, but even for that game, standard game theory does not fully capture the options available to them.

By contrast, the "theory of moves", which is founded on game theory but radically changes its standard rules of play, does reproduce, or "predict" in retrospect, the leaders' choices. More importantly, the theory sheds light on the dynamics of play, based on the assumption that players think not only about the immediate consequences of their actions but also about their repercussions for future play.

I use the Cuban missile crisis to illustrate parts of this theory, which is not just an abstract mathematical model but one that mirrors the choices made in real life, the thinking behind them, and the actions of flesh-and-blood players. Theodore Sorensen, special counsel to President John F. Kennedy, actually used the language of "moves" to describe the deliberations of the Executive Committee (ExComm) of Kennedy's key advisers during the Cuban missile crisis:

"We discussed the reaction of the Soviets to any possible moves of the United States, our reaction to these actions of the Soviets, and so on, trying to reach each of these paths to a logical conclusion."

Classical game theory and the missile crisis

Game theory is a branch of mathematics that studies decision making in social interactions. It applies to situations (games) in which two or more people (called players) each choose between two or more courses of action (called strategies). The possible outcomes of a game depend on the choices made by all players, and each player can rank them in order of preference.

In some games with two players and two strategies each, there are pairs of strategies that are "stable" in a certain sense: neither player can obtain a better outcome by deviating from his strategy on his own. Such a pair of strategies is called a Nash equilibrium, after the mathematician John Nash, who received the Nobel Prize in Economics in 1994 for his work on game theory. Nash equilibria do not necessarily lead to the best outcome for one, or even both, players. Moreover, in the games analyzed here, in which players can only rank outcomes ("ordinal games") but cannot attach numerical values to them (as they can in "cardinal games"), Nash equilibria may not exist. (As Nash showed, they always exist in cardinal games, although there they may involve "mixed strategies", which are discussed below.)
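The stability condition can be written compactly. The notation here is an assumption introduced only for illustration: $r$ and $c$ stand for the strategies of the row and column players, and $u_R$, $u_C$ for the ranks (4 = best, 1 = worst) that each player assigns to an outcome.

```latex
(r^*, c^*) \text{ is a Nash equilibrium}
\iff
u_R(r^*, c^*) \ge u_R(r, c^*) \ \text{for every } r
\quad\text{and}\quad
u_C(r^*, c^*) \ge u_C(r^*, c) \ \text{for every } c .
```

Because the condition only compares ranks, it can be checked in an ordinal game without attaching numerical values to the outcomes.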

The Cuban missile crisis was precipitated by a Soviet attempt in October 1962 to install in Cuba medium-range and intermediate-range nuclear ballistic missiles capable of hitting a large part of the United States. The goal of the United States was the immediate removal of the Soviet missiles, and to achieve it US policy makers seriously considered two strategies [see Figure 1]:

- A naval blockade (B), or "quarantine" as it was euphemistically called, to prevent the delivery of further missiles, possibly followed by stronger action to induce the Soviet Union to withdraw the missiles already installed.

- A "surgical" air strike (A) to destroy, as far as possible, the missiles already installed, perhaps followed by an invasion of the island.

The alternatives open to Soviet policy makers were:

- Withdrawal (W) of their missiles.

- Maintenance (M) of their missiles on the island.

| | Soviet Union (USSR): Withdrawal (W) | Soviet Union (USSR): Maintenance (M) |
| --- | --- | --- |
| United States (US): Blockade (B) | Compromise (3,3) | Soviet victory, US defeat (2,4) |
| United States (US): Air strike (A) | US victory, Soviet defeat (4,2) | Nuclear war (1,1) |

Figure 1: The Cuban missile crisis as a game of Chicken

Key: (x, y) = (payoff to the US, payoff to the USSR); 4 = best; 3 = next best; 2 = next worst; 1 = worst. The Nash equilibria are (4,2) and (2,4).

These strategies can be thought of as alternative courses of action that the two sides, or "players" in the parlance of game theory, can choose. They lead to four possible outcomes, which each player is assumed to rank as follows: 4 = best; 3 = next best; 2 = next worst; 1 = worst. The higher the number, the greater the payoff, but the payoffs are only ordinal: they indicate the order of outcomes from best to worst, not the degree to which a player prefers one outcome to another. The first number in each ordered pair is the payoff to the row player (the United States); the second is the payoff to the column player (the Soviet Union).

Needless to say, the strategy choices, probable outcomes, and associated payoffs shown in Figure 1 give only a skeletal picture of a crisis that unfolded over thirteen days. Both sides considered more than the two alternatives listed, as well as several variations on each. The Soviets, for example, demanded the withdrawal of American missiles from Turkey as a quid pro quo for withdrawing their own missiles from Cuba, a demand the United States publicly ignored.

Nevertheless, most observers of the crisis believe that the two superpowers were on a collision course, a phrase that is also the title of one book about this nuclear confrontation. They also agree that neither side was eager to take any irreversible step, such as that of a driver in Chicken who defiantly rips off his steering wheel in full view of the other driver, thereby ruling out the option of swerving.

Although in one sense the United States "won" by getting the Soviets to withdraw their missiles, First Secretary Nikita Khrushchev at the same time extracted from President Kennedy a promise not to invade Cuba, so the final outcome can be seen as a kind of compromise. But this is not what game theory predicts for Chicken, because the strategies associated with compromise do not form a Nash equilibrium.

To see this, suppose play is at the compromise position (3,3): the United States blockades Cuba and the Soviet Union withdraws its missiles. This pair of strategies is not stable, because each player has an incentive to defect to its more belligerent strategy. If the United States defected by switching to an air strike, play would move to (4,2), improving the US payoff; if the Soviet Union defected by switching to maintenance, play would move to (2,4), giving the Soviet Union a payoff of 4. (This classical game-theoretic setup gives no information about which outcome will be chosen, because the payoff table is symmetric for the two players. This is a frequent problem in interpreting game-theoretic analyses in which more than one equilibrium can arise.) Finally, if the players are at the mutually worst outcome (1,1), nuclear war, both would clearly want to move away from it, so the strategies associated with it, like those associated with (3,3), are unstable.
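The stability check described above can be carried out mechanically. What follows is a minimal Python sketch, not part of the original analysis: the dictionary layout and the function name `pure_nash_equilibria` are illustrative assumptions, and the payoffs are the ordinal ranks from Figure 1.

```python
# Ordinal payoffs from Figure 1, written as (US rank, USSR rank); 4 = best, 1 = worst.
# Keys are (US strategy, USSR strategy): B = blockade, A = air strike,
# W = withdrawal, M = maintenance.
CHICKEN = {
    ("B", "W"): (3, 3),
    ("B", "M"): (2, 4),
    ("A", "W"): (4, 2),
    ("A", "M"): (1, 1),
}


def pure_nash_equilibria(game):
    """Return the strategy pairs from which neither player gains by deviating alone."""
    rows = sorted({row for row, _ in game})
    cols = sorted({col for _, col in game})
    equilibria = []
    for row in rows:
        for col in cols:
            us_rank, ussr_rank = game[(row, col)]
            # The US deviates by changing the row; the USSR by changing the column.
            us_gains = any(game[(other, col)][0] > us_rank for other in rows)
            ussr_gains = any(game[(row, other)][1] > ussr_rank for other in cols)
            if not us_gains and not ussr_gains:
                equilibria.append((row, col))
    return equilibria


print(pure_nash_equilibria(CHICKEN))
# [('A', 'W'), ('B', 'M')]
```

The two pairs returned correspond to the outcomes (4,2) and (2,4); the compromise (B, W) and nuclear war (A, M) are both absent, as argued above.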

Theory of moves and the missile crisis

Using Chicken to model a situation like the Cuban missile crisis is problematic not only because the compromise outcome (3,3) is unstable but also because, in the real world, the two sides did not choose their strategies simultaneously or independently of each other, as Chicken as described above assumes. The Soviets responded specifically to the blockade after the United States imposed it. Moreover, the fact that the United States held out the possibility of escalating the conflict, at least to an air strike, indicates that the initial blockade decision was not considered final. That is, after announcing the blockade, the United States still regarded its strategy choices as open.

Consequently, this game is better modeled as one of sequential bargaining, in which neither side made an all-or-nothing choice; both considered alternatives, especially in case the other side failed to respond in a manner deemed appropriate. In what was the most serious breakdown of the nuclear deterrence relationship that had held between the superpowers since the Second World War, each side gingerly felt its way, one threatening step at a time. The Soviet Union, fearing a US invasion of Cuba before the crisis and seeking to bolster its strategic position in the world, concluded that installing missiles on the island was worth the risk. It believed that the United States, confronted with a fait accompli, would refrain from attacking Cuba and would not dare to take other severe retaliatory measures. Even if installing the missiles triggered a crisis, the Soviets did not judge the probability of war to be high (during the crisis, President Kennedy put the chance of war at between 1/3 and 1/2), which made the risk of provoking the United States a rational one for them.

There are good reasons to believe that US policy makers did not view the confrontation as a game of Chicken, at least as far as they interpreted and ranked the possible outcomes. I offer an alternative representation of the Cuban missile crisis in the form of a game that I will call "Alternative". It keeps the same strategies for both players as Chicken but assumes a different ranking and interpretation of the outcomes by the United States [see Figure 2]. These rankings and interpretations fit the historical record better than those of Chicken, as far as can be judged from the statements made at the time by President Kennedy and the US Air Force, and from the type and number of nuclear weapons the Soviet Union possessed (more on this below).

- BW: A US blockade together with Soviet withdrawal of the missiles remains a compromise for both players: (3,3).

- BM: In the face of a US blockade, Soviet maintenance of the missiles in Cuba leads to a Soviet victory (its best outcome) and US capitulation (its worst outcome): (1,4).

- AM: An air strike that destroys the missiles the Soviets were maintaining is an "honorable" US action (its best outcome) and a defeat for the Soviets (their worst outcome): (4,1).

- AW: An air strike that destroys the missiles the Soviets were withdrawing is a "dishonorable" US action (its next-worst outcome) and a defeat for the Soviets (their next-worst outcome): (2,2).

| | Soviet Union (USSR): Withdrawal (W) | | Soviet Union (USSR): Maintenance (M) |
| --- | --- | --- | --- |
| United States (US): Blockade (B) | Compromise **(3,3)** | → | Soviet victory, US capitulation (1,4) |
| | ↑ | | ↓ |
| United States (US): Air strike (A) | "Dishonorable" US action, Soviet defeat (2,2) | ← | "Honorable" US action, Soviet defeat (4,1) |

Figure 2: The Cuban missile crisis as the game "Alternative"

Key: (x, y) = (payoff to the US, payoff to the USSR); 4 = best; 3 = next best; 2 = next worst; 1 = worst. The non-myopic equilibrium is in bold. The arrows indicate the direction of cycling.

Although an air strike defeats the Soviets at both (2,2) and (4,1), I interpret (2,2) as the less damaging of the two for the Soviet Union. The reason is that the rest of the world could regard an air strike as a flagrant overreaction, and hence a "dishonorable" US action, if there were clear evidence that the Soviets were in the process of withdrawing their missiles. In the absence of such evidence, on the other hand, a US air strike, perhaps followed by an invasion, would be an action to dislodge the Soviet missiles.

Statements by US policy makers support Alternative. In responding to a letter from Khrushchev, Kennedy wrote:

"If you agree to the dismantling of these weapons systems from Cuba ... we, for our part, will agree ... (a) to urgently remove the quarantine measures currently in force and (b) guarantee non-aggression in Cuba",

which is consistent with Alternative, since the United States prefers (3,3) to (2,2) there, whereas in Chicken it would not prefer (3,3) to (4,2).

If the Soviets maintained their missiles, the United States preferred an air strike to the blockade. In the words of Robert Kennedy, a close adviser to his brother during the crisis,

“If they do not remove these bases, we will remove them,”

which is consistent with Alternative, since there the United States prefers (4,1) to (1,4); in Chicken the same choice would mean preferring (1,1) to (2,4), contrary to that game's rankings.

Finally, it is well known that several of President Kennedy's advisers were very reluctant to initiate an attack on Cuba without first exhausting less belligerent courses of action that might bring about the removal of the missiles with less risk and greater sensitivity to American ideals and values. In particular, Robert Kennedy said that an immediate attack would be seen as "a Pearl Harbor in reverse, and it would blacken the name of the United States in the pages of history". This is consistent with Alternative, in which the United States ranks AW next worst (2), as a "dishonorable" US action, rather than best (4), a US victory, as in Chicken.

Although Alternative gives a more realistic picture of the participants' perceptions than Chicken does, standard game theory is of little help in explaining how the compromise (3,3) was reached and why it proved stable. As in Chicken, the strategies associated with this outcome are not a Nash equilibrium, because the Soviets have an immediate incentive to move from (3,3) to (1,4).

Unlike Chicken, however, Alternative has no outcome at all that is a Nash equilibrium, except in "mixed strategies". These are strategies in which players randomize their choices, selecting each of their two so-called pure strategies with specified probabilities. But mixed strategies cannot be used to analyze Alternative, because such an analysis requires numerical payoffs for each outcome, not just rankings.

The instability of the outcomes in Alternative is seen most easily by following the cycle of preferences, indicated by the arrows in Figure 2, that runs clockwise through this game. The arrows show that the game is cyclic: at every state one player always has an immediate incentive to depart, the Soviets from (3,3) to (1,4), the United States from (1,4) to (4,1), the Soviets from (4,1) to (2,2), and the United States from (2,2) to (3,3). Once again we have indeterminacy, this time not because of multiple Nash equilibria, as in Chicken, but because Alternative has no equilibria in pure strategies.
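The cycle can be traced step by step. The sketch below is illustrative only and not part of the original analysis; the helper names `profitable_moves` and `trace` are assumptions. At each state it asks which player gains immediately by switching strategy, then follows that move.

```python
US, USSR = 0, 1

# Ordinal payoffs of Alternative (Figure 2), written as (US rank, USSR rank).
ALTERNATIVE = {
    ("B", "W"): (3, 3),
    ("B", "M"): (1, 4),
    ("A", "M"): (4, 1),
    ("A", "W"): (2, 2),
}

# Each player has exactly two strategies, so a move flips to the other one.
FLIP = {"B": "A", "A": "B", "W": "M", "M": "W"}


def profitable_moves(game, state):
    """List (player, new_state) for every unilateral switch that raises the mover's rank."""
    row, col = state
    moves = []
    us_target = (FLIP[row], col)      # the US changes the row
    if game[us_target][US] > game[state][US]:
        moves.append(("US", us_target))
    ussr_target = (row, FLIP[col])    # the USSR changes the column
    if game[ussr_target][USSR] > game[state][USSR]:
        moves.append(("USSR", ussr_target))
    return moves


def trace(game, start, steps=4):
    """Follow immediate incentives for a fixed number of steps, starting from `start`."""
    path = [start]
    state = start
    for _ in range(steps):
        moves = profitable_moves(game, state)
        if not moves:                 # nobody gains by deviating: a pure Nash equilibrium
            break
        _, state = moves[0]
        path.append(state)
    return path


print(trace(ALTERNATIVE, ("B", "W")))
# [('B', 'W'), ('B', 'M'), ('A', 'M'), ('A', 'W'), ('B', 'W')]
```

After four moves the path is back where it started, and at no state is `profitable_moves` empty, which is another way of saying that Alternative has no Nash equilibrium in pure strategies.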

The rules of the game in the theory of moves

How, then, can we explain the choice of (3,3) in Alternative, or in Chicken for that matter, given its non-equilibrium status according to standard game theory? It turns out that (3,3) is a "non-myopic equilibrium" in both games and, according to the theory of moves (TOM), the only one in Alternative. By postulating that players think ahead not just to the immediate consequences of making moves, but also to the consequences of countermoves to those moves, counter-countermoves, and so on, TOM extends the strategic analysis of conflict into the more distant future.

Game theory can, of course, accommodate this kind of thinking through the analysis of "game trees", which describe the players' sequential choices over time. But the game tree changed continually with each development in the crisis. What stayed more or less constant, by contrast, was the configuration of payoffs in Alternative, although where the players were in the matrix changed. In effect, by describing the payoffs in a single game while allowing players to make successive calculations of moves to different positions within it, TOM adds non-myopic thinking to the economy of description offered by classical game theory.

The founders of game theory, John von Neumann and Oskar Morgenstern, defined a game as "the totality of the rules which describe it". Although the rules of TOM apply to all games between two players, here I assume that each player has just two strategies. Four rules of play describe the possible choices of the players at each stage of the game:

Rules of the game

1. Play starts at an initial state, given by the intersection of a row and a column of the payoff matrix.

2. Either player can unilaterally switch his strategy, that is, make a move, and thereby change the initial state into a new state in the same row or column as the initial state. The player who switches is called player 1 (P1).

3. Player 2 (P2) can respond by unilaterally switching his strategy, thereby moving the game to a new state.

4. The alternating responses continue until the player (P1 or P2) whose turn it is to move next chooses not to switch his strategy. When this happens, the game terminates in a final state, which is the outcome of the game.

Termination rule

- A player will not move from an initial state if the move (i) leads to a less preferred outcome, or (ii) returns play to the initial state, making that state the outcome.

Advantage rule

- If it is rational for one player to move and for the other not to move from the initial state, the move takes precedence: it overrides staying, so the outcome is the one induced by the player who moves.

Note that the sequence of moves and countermoves is strictly alternating: first, say, the row player moves, then the column player, and so on, until one player stops, at which point the state reached is final and is therefore the outcome of the game. I assume that no payoffs accrue to the players from being in a state unless it becomes the outcome (which may be the initial state, if the players choose not to move from it).

To assume otherwise would require payoffs to be numerical rather than ordinal ranks, so that players could accumulate them as they pass through states. But in many real-life games, payoffs cannot easily be quantified and summed over the states visited. Moreover, in many games the big reward depends overwhelmingly on the final state reached, not on how it was reached. In politics, for example, the payoff for most politicians lies not in campaigning, which is arduous and costly, but in winning.

Rule 1 differs sharply from the corresponding rule of play in standard game theory, in which players simultaneously choose strategies in a matrix game, and those choices determine its outcome. Instead of starting with strategy choices, TOM assumes that at the start of play the players are already in some state and receive payoffs from that state only if they stay in it. Based on these payoffs, each must decide individually whether to change the state in order to try to do better.

Of course, some decisions are made collectively, in which case it is reasonable to say that players choose strategies from scratch, either simultaneously or by coordinating their choices. But if, say, two countries coordinate their choices, as when they agree to sign a treaty, the important strategic question is what individual calculations led them to that point. The formal act of jointly signing the treaty is the culmination of their negotiations and does not reveal the process of moves and countermoves that preceded the signing. It is precisely these negotiations, and the calculations underlying them, that TOM is designed to uncover.

To continue the example: the parties that sign the treaty were in some prior state from which both chose to move, or perhaps only one chose to move and the other could not prevent it (advantage rule). Eventually they arrive at a new state, after treaty negotiations, say, in which it is rational for both countries to sign the treaty they have negotiated.

As with the signing of a treaty, almost all outcomes of observed games have a history. TOM seeks to explain strategically the progression of (temporary) states that leads to a (more permanent) outcome. Consequently, play starts in an initial state, in which players collect payoffs only if they stay in that state so that it becomes the final state, or outcome, of the game.

If they do not stay, they still know what payoffs they would have collected had they stayed, so they can make a rational calculation of the advantages of staying versus moving. They move precisely because they calculate that they can do better by switching strategies, anticipating a better outcome when the process of moves and countermoves finally comes to rest. If the game starts in a different state, play will be different, but the configuration of payoffs stays the same.

Rules 1 to 4 (the rules of play) say nothing about what causes a game to end, only when: termination occurs when the player whose turn it is to move next does not switch his strategy (rule 4). But when is it rational not to continue moving, or not to move from the initial state at all?

The termination rule says when a player will not move from the initial state. Condition (i) needs no defense, but condition (ii) requires justification. It says that if, after P1 moves, it is rational for play to cycle back to the initial state, P1 will not move in the first place. After all, what is the point of setting in motion a whole process of moves and countermoves if play simply returns to "square one", given that the players receive no payoffs along the way to the outcome?

Backward induction

To determine where play will end when at least one player wants to move from the initial state, I assume that the players use backward induction. This is a reasoning process in which players, working backward from the last possible move in the game, anticipate each other's rational choices. To this end, I assume that each has complete information about the other's preferences, so that each can calculate the other player's rational choices, as well as its own, in deciding whether to move from the initial state or from any subsequent state.

To illustrate backward induction, consider again the game Alternative in Figure 2. After the missiles were detected and the United States imposed its blockade, the game was at state BM, which is worst for the United States (1) and best for the Soviet Union (4). Now consider the clockwise progression of moves that the United States can initiate by moving to AM, the Soviet Union to AW, and so on, on the assumption that the players look ahead to the possibility that the game makes one complete cycle and returns to the initial state (state 1):

| | State 1 | | State 2 | | State 3 | | State 4 | | State 1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| US starts | US (1,4) | → | USSR (4,1) | → | US **(2,2)** | →\| | USSR (3,3) | → | (1,4) |
| Survivor | (2,2) | | (2,2) | | (2,2) | | (1,4) | | |

This is a game tree, drawn horizontally rather than vertically. The survivor is the state selected at each stage as a result of backward induction. It is determined by working backward from the state where play could, in theory, end (state 1, at the completion of the cycle).

Suppose the players' alternating moves in Alternative have taken play clockwise from (1,4) to (4,1) to (2,2) to (3,3), at which point the Soviet Union, at state 4, must decide whether to stop at (3,3) or complete the cycle by returning to (1,4). Clearly the Soviet Union prefers (1,4) to (3,3), so (1,4) is listed as the survivor below (3,3): because the Soviet Union would move the process back to (1,4) if it reached (3,3), the players know that if the move-countermove process reaches that state, the outcome will be (1,4).

Knowing this, would the United States, at the prior state (2,2), move to (3,3)? Because the United States prefers (2,2) to the survivor at (3,3), namely (1,4), the answer is no. Hence (2,2) becomes the survivor when the United States must choose between stopping at (2,2) and moving to (3,3), which, as just shown, would become (1,4) once (3,3) is reached.

At the prior state (4,1), the Soviet Union would prefer moving to (2,2) over stopping at (4,1), so (2,2) again survives if the process reaches (4,1). Similarly, at the initial state (1,4), because the United States prefers the previous survivor (2,2) to (1,4), (2,2) survives at this state as well.

The fact that (2,2) is the survivor at the initial state (1,4) means that it is rational for the United States to move to (4,1) and for the Soviet Union then to move to (2,2), where the process stops, making (2,2) the rational choice if the United States moves first from the initial state (1,4). That is, by working backward from the Soviet choice at (3,3) of whether or not to complete the cycle, the players can reverse the process and, looking forward, determine what is rational for each to do. I indicate that it is rational for the process to stop at (2,2) by the vertical line blocking the arrow that leaves (2,2), and by highlighting (2,2) at this point.
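The survivor row of the table can be recomputed with a few lines of code. This is a minimal sketch under stated assumptions, not the author's own procedure: the function name `survivors` and the list encoding of the cycle are illustrative, and a player is assumed to move on only when the survivor further along the cycle is strictly better for that player than stopping.

```python
US, USSR = 0, 1

# Clockwise progression from the initial state, as (US rank, USSR rank).
CLOCKWISE = [(1, 4), (4, 1), (2, 2), (3, 3)]
# The player who must decide at each state: the US starts, then they alternate.
MOVERS = [US, USSR, US, USSR]


def survivors(states, movers):
    """Work backward from the end of the cycle and return the survivor at each state."""
    # If the last mover continues, play returns to the initial state and ends there.
    survivor = states[0]
    chosen = []
    for state, player in zip(reversed(states), reversed(movers)):
        # Stop here unless moving on yields a strictly better survivor for the mover.
        if survivor[player] <= state[player]:
            survivor = state
        chosen.append(survivor)
    chosen.reverse()
    return chosen


print(survivors(CLOCKWISE, MOVERS))
# [(2, 2), (2, 2), (2, 2), (1, 4)]
```

The output matches the survivor row above: (1,4) survives at state 4, and (2,2) survives at states 3, 2, and 1.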

Notice that (2,2) at state AW is worse for both players than (3,3) at state BW. Can the Soviet Union, instead of letting the United States initiate the move-countermove process at (1,4), do better by seizing the initiative and moving counterclockwise from its best state, (1,4)? The answer is yes; indeed, it is also in the interest of the United States to let the Soviet Union start the process, as the following counterclockwise progression of moves from (1,4) shows:

| | State 1 | | State 2 | | State 3 | | State 4 | | State 1 |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| USSR starts | USSR (1,4) | → | US **(3,3)** | →\| | USSR (2,2) | → | US (4,1) | → | (1,4) |
| Survivor | (3,3) | | (3,3) | | (2,2) | | (4,1) | | |

By acting "magnanimously" and moving from victory (4) at BM to compromise (3) at BW, the Soviet Union makes it rational for the United States to end play at (3,3), as shown by the blocked arrow leaving state 2. This, of course, is what happened in the crisis, with the threat of further escalation by the United States, including the forced surfacing of Soviet submarines as well as an air strike (the US Air Force estimated a 90 percent chance of eliminating all the missiles), giving the Soviets an incentive to withdraw all of their missiles.
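The comparison between the two ways of leaving (1,4) can also be sketched in code. This is an illustrative reading of the argument rather than a definitive implementation: the helper `outcome_if_moved` and the variable names are assumptions, and the final step encodes the reasoning above that, if the Soviet Union stays put, the United States will move and play will end at (2,2).

```python
US, USSR = 0, 1
INITIAL = (1, 4)  # state BM, written as (US rank, USSR rank)


def outcome_if_moved(later_states, movers, initial):
    """Outcome of backward induction once the first mover has left the initial state.

    `later_states` are states 2 to 4 of the progression and `movers` the players
    who decide at each of them; continuing past state 4 returns play to `initial`.
    """
    survivor = initial
    for state, player in zip(reversed(later_states), reversed(movers)):
        # The mover stops unless continuing is strictly better for that player.
        if survivor[player] <= state[player]:
            survivor = state
    return survivor


# Clockwise: the US moves first (to AM), then the USSR, the US, and the USSR decide.
us_first = outcome_if_moved([(4, 1), (2, 2), (3, 3)], [USSR, US, USSR], INITIAL)
# Counterclockwise: the USSR moves first (to BW), then the US, the USSR, and the US decide.
ussr_first = outcome_if_moved([(3, 3), (2, 2), (4, 1)], [US, USSR, US], INITIAL)

# If the USSR stays at (1,4), the US moves whenever that improves on its rank there.
us_would_move = us_first[US] > INITIAL[US]
result_if_ussr_waits = us_first if us_would_move else INITIAL

# The USSR seizes the initiative if that beats what waiting would bring.
ussr_should_move = ussr_first[USSR] > result_if_ussr_waits[USSR]
# The US also does better when the USSR starts the process.
us_prefers_ussr_start = ussr_first[US] > us_first[US]

print(us_first, ussr_first, ussr_should_move, us_prefers_ussr_start)
# (2, 2) (3, 3) True True
```

Under these assumptions both players rank the outcome of a Soviet first move, (3,3), above the outcome of a US first move, (2,2), which is the sense in which the compromise is rational for both.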

TOM application

Like any scientific theory, TOM's calculations may fail to take account of the empirical realities of a situation. In the second backward-induction calculation, for example, it is hard to imagine the Soviet Union moving from state 3 to state 4, which would mean maintaining (through reinstallation?) its missiles in Cuba after their withdrawal and an air strike. However, if the move to state 4, and later back to state 1, were ruled out as infeasible, the result would be the same: working backward from state 3, it would be rational for the Soviet Union to move initially to state 2 (compromise), where play would stop.

Compromise would also be rational in the first backward-induction calculation if the same move (a return to maintenance), which in that progression runs from state 4 back to state 1, were considered infeasible: working backward from state 4, it would be rational for the United States to escalate to an air strike in order to induce the moves that carry the players to compromise at state 4. Because it is less costly for both sides if the Soviet Union initiates the compromise, removing the need for an air strike, it is not surprising that this is what happened.

To sum up, the theory of moves makes game theory a more dynamic theory. By postulating that players think ahead not just to the immediate consequences of making moves, but also to the consequences of countermoves to those moves, counter-countermoves, and so on, it extends the strategic analysis of conflicts into the more distant future. TOM has also been used to show how different kinds of power (moving, order, and threat) can affect the outcome of a conflict, and how misinformation can affect players' choices. These concepts and analyses are illustrated by many different cases, ranging from conflicts in the Bible to disputes and struggles today.

Additional reading

- "Theory of Moves", Steven J. Brams. Cambridge University Press, 1994.

- "Game Theory and Emotions", Steven J. Brams, in Rationality and Society, Vol. 9, No. 1, pages 93-127, February 1997.

- "Long-term Behaviour in the Theory of Moves", Stephen J. Willson, in Theory and Decision, Vol. 45, No. 3, pages 201-240, December 1998.

- "Catch-22 and King-of-the-Mountain Games: Cycling, Frustration and Power", Steven J. Brams and Christopher B. Jones, in Rationality and Society, Vol. 11, No. 2, pages 139-167, May 1999.

- "Modeling Free Choice in Games", Steven J. Brams, in Topics in Game Theory and Mathematical Economics: Essays in Honor of Robert J. Aumann, pages 41-62. Edited by Myrna H. Wooders. American Mathematical Society, 1999.

About the author

Steven J. Brams is a professor of politics at New York University. He is the author or co-author of 13 books that apply game theory and social choice theory to voting and elections, bargaining and fairness, international relations, and the Bible and theology. His recent books, Fair Division: From Cake-Cutting to Dispute Resolution (1996) and The Win-Win Solution: Guaranteeing Fair Shares to Everybody (1999), were co-authored with Alan D. Taylor. He is a member of the American Association for the Advancement of Science and the Public Choice Society, a Guggenheim Fellow, a visiting scholar at the Russell Sage Foundation, and president of the Peace Science Society (International).
