We are doing a machine learning project in Python. Part 3


Translation of A Complete Machine Learning Walk-Through in Python: Part Three

Many people dislike that machine learning models are black boxes: we put data in and get answers, often very accurate ones, without any explanation. In this article we will try to figure out how the model we built makes its predictions and what it can tell us about the problem we are solving. We conclude with the most important part of a machine learning project: documenting what we have done and presenting the results.

In the first part, we covered data cleaning, exploratory analysis, and feature engineering and selection. In the second part, we covered imputing missing values, implementing and comparing machine learning models, hyperparameter tuning using random search with cross-validation, and, finally, evaluating the resulting model.

All project code is on GitHub, including the third Jupyter Notebook, which accompanies this article. You can use it for your own projects!

So, we are solving a problem with machine learning, or more precisely with supervised regression. Using energy data for buildings in New York, we built a model that predicts the Energy Star Score. The final model is a gradient boosted regressor that predicts the score on the test data to within 9.1 points (on a scale from 1 to 100).

Model interpretation

Gradient boosting regression sits roughly in the middle of the model interpretability scale: the model as a whole is complex, but it consists of hundreds of fairly simple decision trees. There are three ways to understand how our model works:

- Examine the feature importances.

- Visualize one of the decision trees.

- Apply LIME (Local Interpretable Model-Agnostic Explanations).

The first two methods are specific to tree ensembles, while the third, as its name suggests, can be applied to any machine learning model. LIME is a relatively new approach and a significant step forward in the effort to explain machine learning predictions.

Feature importances

Feature importances show how relevant each feature is to the prediction target. The technical details are complex (they measure the mean decrease in impurity, that is, the reduction in error from including the feature), but we can use the relative values to compare which features matter most. In Scikit-Learn, you can extract feature importances from any ensemble of tree-based learners. In the code below, model is our trained model, and model.feature_importances_ gives the importances. We then put them into a Pandas dataframe and display the 10 most important features:

import pandas as pd

# model is the trained model

importances = model.feature_importances_

# train_features is the dataframe of training features

feature_list = list(train_features.columns)

# Extract the feature importances into a dataframe

feature_results = pd.DataFrame({'feature': feature_list,'importance': importances})

# Show the top 10 most important

feature_results = feature_results.sort_values('importance',ascending = False).reset_index(drop=True)

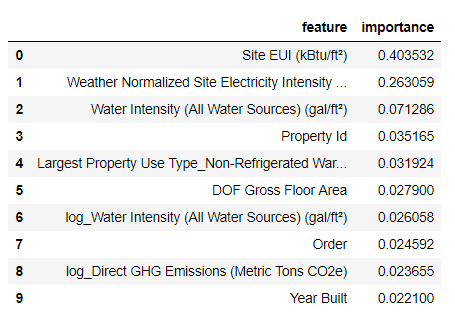

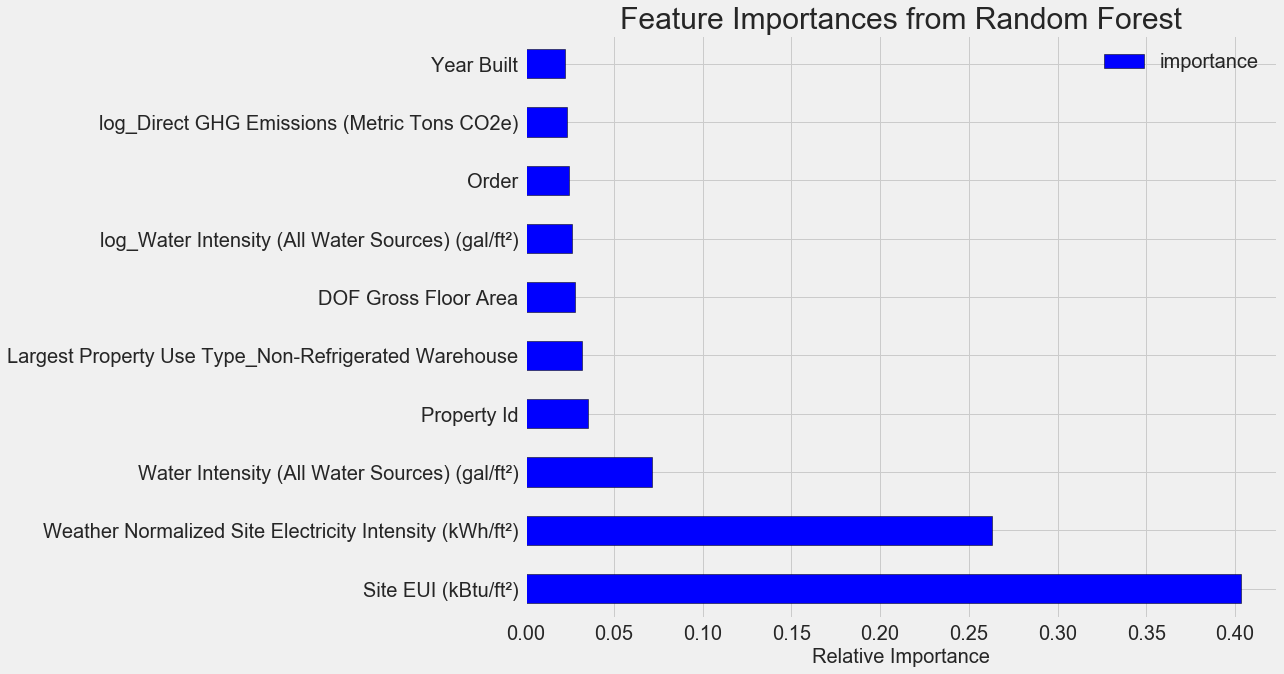

feature_results.head(10)

The most important features are Site EUI (Energy Use Intensity) and Weather Normalized Site Electricity Intensity, which together account for more than 66% of the total importance. After these two, the importance drops off sharply, which suggests that we may not need all 64 features to achieve high accuracy (the Jupyter notebook tests this idea by using only the 10 most important features, and the resulting model turned out to be somewhat less accurate).

Based on these results, one of the initial questions can finally be answered: the most important indicators of the Energy Star Score are Site EUI and Weather Normalized Site Electricity Intensity. We won't go too deep into feature importances here; it is enough to say that they are a good starting point for understanding how the model makes its predictions.
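The idea of keeping only the most important features can be sketched as follows. This is a minimal illustration on synthetic data, not the notebook's actual experiment: the dataset from make_regression, the choice of k = 5, and all variable names are assumptions made for the example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the building data: 20 features, only 5 informative
X, y = make_regression(n_samples=400, n_features=20, n_informative=5,
                       noise=5.0, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42)

# Model trained on all features
full_model = GradientBoostingRegressor(n_estimators=100, random_state=42)
full_model.fit(X_train, y_train)

# Column indices of the k most important features, most important first
k = 5
order = np.argsort(full_model.feature_importances_)[::-1]
top_k = order[:k]

# Share of the total importance covered by the first 1, 2, ... features
cumulative = np.cumsum(full_model.feature_importances_[order])

# Model trained only on the top-k features
reduced_model = GradientBoostingRegressor(n_estimators=100, random_state=42)
reduced_model.fit(X_train[:, top_k], y_train)

full_mae = mean_absolute_error(y_test, full_model.predict(X_test))
reduced_mae = mean_absolute_error(y_test, reduced_model.predict(X_test[:, top_k]))

print('Importance covered by top %d features: %0.2f' % (k, cumulative[k - 1]))
print('MAE with all features: %0.2f' % full_mae)
print('MAE with top %d features: %0.2f' % (k, reduced_mae))
```

Comparing the two errors shows how much accuracy is traded for a smaller feature set; on real data the outcome depends on how the importance is distributed.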

Visualization of a single decision tree

It is hard to comprehend the entire gradient boosting regression model at once, but the individual decision trees are much easier to grasp. You can visualize any tree with the Scikit-Learn function export_graphviz. First, extract the tree from the ensemble, and then save it as a dot file:

from sklearn import tree

# Extract a single tree (number 105)

single_tree = model.estimators_[105][0]

# Save the tree to a dot file

tree.export_graphviz(single_tree, out_file = 'images/tree.dot', feature_names = feature_list)Using the Graphviz visualizer, we convert the dot-file to png by typing at the command line:

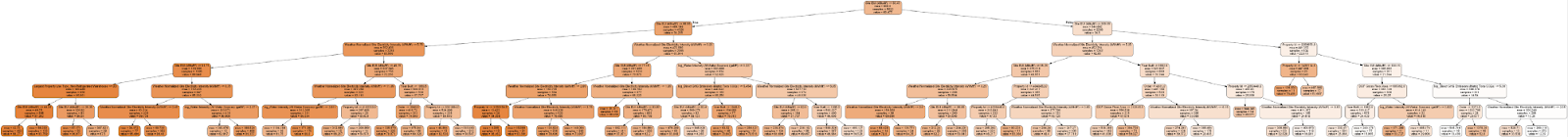

dot -Tpng images/tree.dot -o images/tree.pngGot a complete decision tree:

A bit cumbersome! Although this tree is only 6 levels deep, it is difficult to follow all the transitions. Let's change the call to export_graphviz and limit the depth of the displayed tree to two levels:
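A depth-limited export might look like the sketch below. It uses the max_depth parameter of export_graphviz; the synthetic data, the small model, the tree index 5, and the feature names are assumptions made so that the example runs on its own.

```python
from sklearn import tree
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor

# Small synthetic problem standing in for the building data
X, y = make_regression(n_samples=200, n_features=4, noise=1.0, random_state=0)
feature_names = ['f0', 'f1', 'f2', 'f3']  # hypothetical feature names

model = GradientBoostingRegressor(n_estimators=10, max_depth=5, random_state=0)
model.fit(X, y)

# Each row of estimators_ holds one regression tree for a single-output model
single_tree = model.estimators_[5][0]

# max_depth=2 truncates the drawing, not the tree itself;
# out_file=None returns the DOT source as a string instead of writing a file
dot_source = tree.export_graphviz(single_tree, out_file=None,
                                  feature_names=feature_names, max_depth=2)
print(dot_source[:300])
```

Passing a path such as 'images/tree_small.dot' as out_file would write the file to disk instead, after which it can be converted with the same dot command as before.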

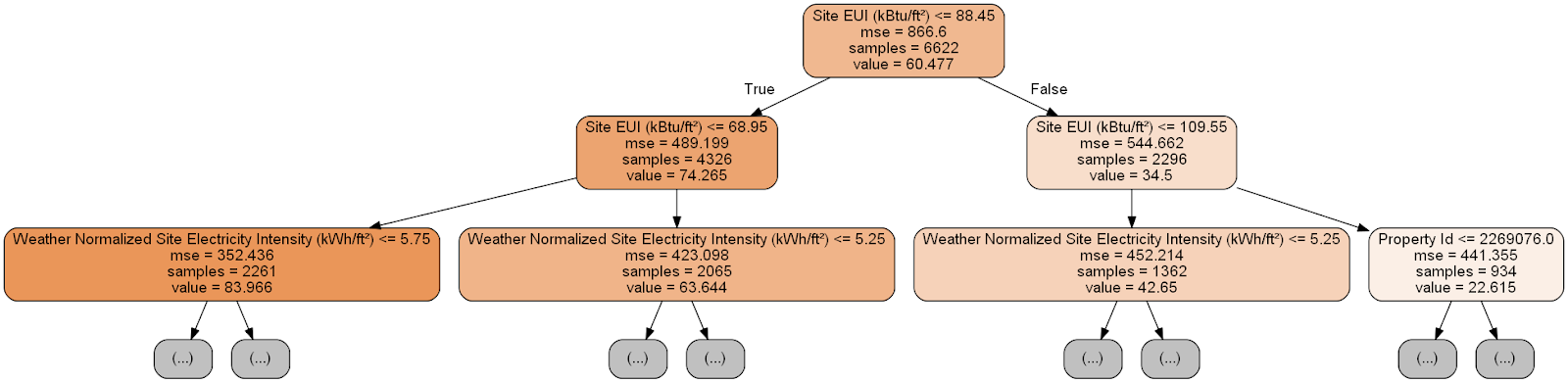

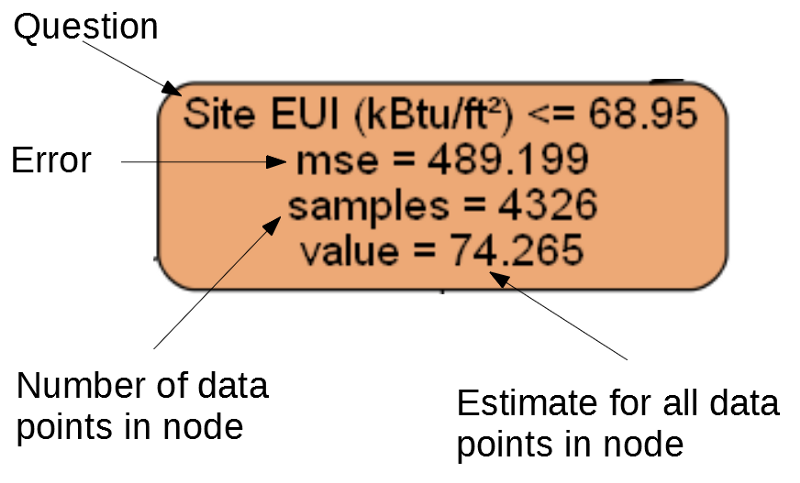

Each node (rectangle) of the tree contains four lines:

1. A question about the value of one feature of a data point; the answer determines in which direction we leave the node.

2. mse: a measure of the error in the node.

3. samples: the number of data points (observations) in the node.

4. value: the estimate of the target for all data points in the node.

Figure: an individual node of the tree.

(Leaves contain only items 2-4, because they represent the final estimate and have no child nodes.)

Prediction for a given data point starts at the top node, the root, and then descends through the tree. At each node, the question is answered "yes" or "no". For example, the root node in the previous illustration asks: "Is the Site EUI of the building less than or equal to 68.95?" If yes, the algorithm moves to the left child node (Scikit-Learn draws the "True" branch on the left); if no, it moves to the right child node.

This procedure is repeated at each level of the tree until the algorithm reaches a leaf node on the last level (these nodes are not shown in the illustration of the truncated tree). The prediction for any data point that lands in a leaf is that leaf's value. If several data points (samples) end up in the same leaf, each of them receives the same prediction.

As the depth of the tree increases, the error on the training data decreases, since there are more leaves and the samples are divided more finely. However, a tree that is too deep will overfit the training data and fail to generalize to the test data. In the second article, we tuned a number of model hyperparameters that control each tree, for example the maximum depth of the tree and the minimum number of samples required in a leaf. These two parameters strongly affect the balance between overfitting and underfitting, and visualizing a decision tree helps us understand how these settings work.
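The descent described above can be reproduced by hand. The sketch below walks a fitted Scikit-Learn regression tree through its tree_ arrays (children_left, children_right, feature, threshold, value) and checks the result against predict. The synthetic data, the hyperparameter values, and the helper name predict_by_traversal are assumptions made for the example.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.tree import DecisionTreeRegressor

# Small synthetic regression problem
X, y = make_regression(n_samples=200, n_features=3, noise=1.0, random_state=0)

# max_depth and min_samples_leaf are the two hyperparameters discussed above
reg = DecisionTreeRegressor(max_depth=3, min_samples_leaf=6, random_state=0)
reg.fit(X, y)


def predict_by_traversal(fitted_tree, row):
    """Walk from the root to a leaf and return that leaf's value (hypothetical helper)."""
    t = fitted_tree.tree_
    # Scikit-Learn compares features as float32 internally, so do the same here
    row = np.asarray(row, dtype=np.float32)
    node = 0  # the root is always node 0
    while t.children_left[node] != -1:  # -1 marks a leaf
        if row[t.feature[node]] <= t.threshold[node]:
            node = t.children_left[node]   # "yes": go to the left child
        else:
            node = t.children_right[node]  # "no": go to the right child
    return t.value[node][0][0]


manual = predict_by_traversal(reg, X[0])
print('Manual traversal: %0.4f' % manual)
print('reg.predict:      %0.4f' % reg.predict(X[:1])[0])
```

Every data point that reaches the same leaf gets the same value, which is why a tree with few leaves can only produce a handful of distinct predictions.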

Although we cannot study every tree in the model, analyzing one of them helps us understand how each individual learner makes a prediction. This flowchart-based method is very similar to how a person makes a decision. Ensembles of decision trees combine the predictions of many individual trees, which produces more accurate models with lower variance. Such ensembles tend to be very accurate and are also intuitive to explain.

Local Interpretable Model-Agnostic Explanations (LIME)

LIME is the last tool we will use to try to figure out how our model "thinks". It explains how a single prediction is produced by any machine learning model. To do this, it fits a simplified model, such as a linear regression, locally around the data point of interest (details are described in this paper: https://arxiv.org/pdf/1602.04938.pdf ).
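The core idea of a local surrogate can be sketched with NumPy alone. This is not the lime library's implementation, which additionally discretizes features, samples according to the training data, and selects a subset of features; it only illustrates "perturb around the point, weight by proximity, fit a linear model". The black_box function, the kernel width, the sampling scale, and all names are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)


def black_box(X):
    # Hypothetical nonlinear model standing in for a trained regressor
    return np.sin(X[:, 0]) + X[:, 1] ** 2


def explain_locally(predict_fn, instance, n_samples=2000, scale=0.3, kernel_width=0.75):
    """Fit a proximity-weighted linear model around one instance (simplified sketch)."""
    # 1. Sample perturbed points around the instance and query the model
    perturbed = instance + rng.normal(0.0, scale, size=(n_samples, instance.size))
    preds = predict_fn(perturbed)

    # 2. Weight each sample by its closeness to the instance (Gaussian kernel)
    distances = np.linalg.norm(perturbed - instance, axis=1)
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)

    # 3. Weighted least squares: scale rows by sqrt(weight), then solve
    design = np.column_stack([np.ones(n_samples), perturbed - instance])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], preds * sqrt_w, rcond=None)
    return coef[0], coef[1:]  # local intercept and per-feature slopes


instance = np.array([0.0, 1.0])
intercept, slopes = explain_locally(black_box, instance)
print('Local intercept:', round(float(intercept), 3))
print('Local slopes:', np.round(slopes, 3))
```

The slopes approximate how the prediction changes when each feature moves slightly away from the chosen point, and the surrogate's prediction is the intercept plus the sum of the per-feature contributions.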

We will use LIME to examine the prediction our model got most wrong and understand why it is mistaken.

First we find this incorrect prediction. To do so, we train the model, generate predictions, and select the example with the largest error:

import numpy as np

from sklearn.ensemble import GradientBoostingRegressor

# Create the model with the best hyperparameters

# (scikit-learn 1.0 renamed loss='lad' to loss='absolute_error'; 'lad' was removed in 1.2)

model = GradientBoostingRegressor(loss='lad', max_depth=5, max_features=None, min_samples_leaf=6, min_samples_split=6, n_estimators=800, random_state=42)

# Fit and test on the features

model.fit(X, y)

model_pred = model.predict(X_test)

# Find the residuals

residuals = abs(model_pred - y_test)

# Extract the most wrong prediction

wrong = X_test[np.argmax(residuals), :]

# Print the prediction (not the row index) and the actual value for that row

print('Prediction: %0.4f' % model_pred[np.argmax(residuals)])

print('Actual Value: %0.4f' % y_test[np.argmax(residuals)])

Output:

Prediction: 12.8615

Actual Value: 100.0000

Next we create an explainer object and pass it the training data, the mode (regression), the training labels, and the feature names. We can then pass the observation and the prediction function to the explainer and ask it to explain the reason for the prediction error.

import lime

# The lime_tabular submodule has to be imported explicitly

import lime.lime_tabular

# Create a lime explainer object

explainer = lime.lime_tabular.LimeTabularExplainer(training_data = X, mode = 'regression', training_labels = y, feature_names = feature_list)

# Explanation for wrong prediction

exp = explainer.explain_instance(data_row = wrong, predict_fn = model.predict)

# Plot the prediction explanation

exp.as_pyplot_figure();

Prediction explanation diagram:

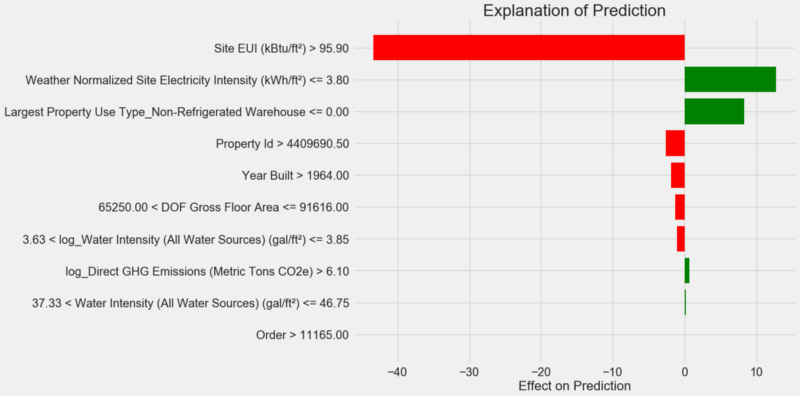

How to interpret the diagram: each record along the Y axis represents one variable value, and the red and green bars reflect the influence of this value on the forecast. For example, according to the upper record, the influence is

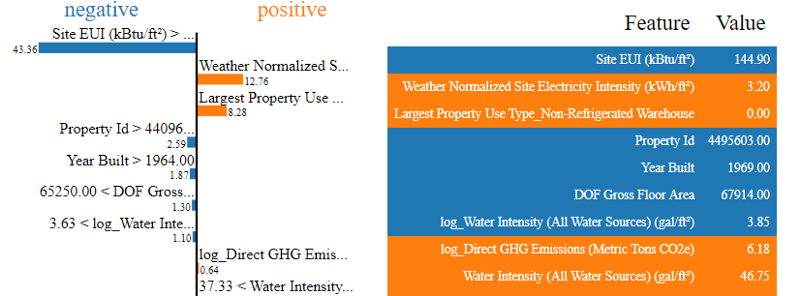

Site EUIgreater than 95.90, as a result, about 40 points are subtracted from the forecast. According to the second record, the influence is Weather Normalized Site Electricity Intensityless than 3.80, and therefore about 10 points are added to the forecast. The final forecast is the sum of the intercept and the effects of each of the listed values. Let's look at it from the other side and call the method

.show_in_notebook():# Show the explanation in the Jupyter Notebook

exp.show_in_notebook()

The process of decision-making by the model is shown on the left: the effect on the forecast of each variable is visually displayed. The table on the right shows the actual values of the variables for a given measurement.

In this case, the model predicted about 12 points, while the actual value was 100. At first you may wonder why this happened, but the explanation shows that this was not a wild guess but a reasonable estimate given the specific feature values. The Site EUI was relatively high, so one would expect a low Energy Star Score (because the score is strongly influenced by the EUI), and that is what our model predicted. In this case the logic turned out to be wrong, because the building actually received the highest Energy Star Score, 100.

Model errors can be frustrating, but explanations like this help you understand why the model was wrong. Moreover, they give you a starting point for investigating why the building got the highest score despite its high Site EUI. We may learn something new about our problem that would have escaped our attention had we not analyzed the model's errors. Such tools are not perfect, but they go a long way toward helping us understand the model, which in turn helps us make better decisions.

Documentation of work and presentation of results

Many projects pay little attention to documentation and reporting. You can do the best analysis in the world, but if you do not present the results properly, they will not matter!

When we document a data analysis project, we package all versions of the data and code so that other people can reproduce or build on the project. Remember that code is read more often than it is written, so our work should be clear both to other people and to ourselves if we return to it in a few months. Therefore, put helpful comments in the code and explain your decisions. Jupyter Notebooks are a great tool for documentation, because they let you first explain a decision and then show the code.

Jupyter Notebook is also a good platform for communicating findings to others. Using notebook extensions, you can hide the code in the final report, because, hard as it is to believe, not everyone wants to see a pile of code in a document!

You may be reluctant to condense your work and prefer to show all the details. However, it is important to understand your audience when presenting your project and to tailor the report accordingly. Here is an example of a brief summary of our project:

- Using data on the energy consumption of buildings in New York, it is possible to build a model that predicts the Energy Star Score to within 9.1 points.

- Site EUI and Weather Normalized Site Electricity Intensity are the main factors influencing the prediction.

We wrote the detailed description and conclusions in the Jupyter Notebook, but instead of exporting directly to PDF, we converted the notebook to a LaTeX .tex file, edited it in texStudio, and then rendered the result to PDF. The default export from Jupyter to PDF looks decent, but it can be improved considerably with just a few minutes of editing. In addition, LaTeX is a powerful document preparation system that is worth knowing.

Ultimately, the value of our work is determined by the decisions it helps to make, so it is very important to be able to present results in their best light. By documenting our work properly, we help other people reproduce our results and give us feedback, which lets us grow more experienced and build on these results in the future.

Conclusions

In this series of articles, we have walked through a machine learning project from start to finish. We started by cleaning the data, then built a model, and finally learned how to interpret it. Recall the general structure of a machine learning project:

- Cleaning and formatting data.

- Exploratory data analysis.

- Feature engineering and selection.

- Comparison of several machine learning models on a performance metric.

- Hyperparameter tuning of the best model.

- Evaluation of the best model on a test data set.

- Interpretation of the results of the model.

- Conclusions and a well-documented report.

The exact steps vary from project to project, and machine learning is often an iterative rather than a linear process, but this guide should still serve you well in the future. We hope you can now implement your own projects with confidence, but remember: no one works alone! If you need help, there are many very useful communities where you can get advice.

These sources can help you:

- Hands-On Machine Learning with Scikit-Learn and Tensorflow (the Jupyter Notebooks for this book are available as a free download).

- An Introduction to Statistical Learning

- Kaggle: The Home of Data Science and Machine Learning

- Datacamp: good tutorials for practicing data analysis programming.

- Coursera: free and paid courses on many topics.

- Udacity: paid courses on programming and data analysis.