The evolution of video adapters from the 1980s to the 2000s

The video card, an indispensable component of any PC, has come a long way. Over the decades, graphics adapters were repeatedly redesigned as the underlying technology advanced.

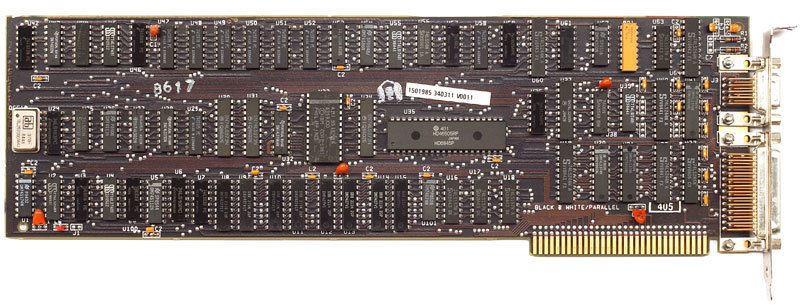

Video adapters MDA and CGA

IBM released both adapters in 1981. MDA was aimed at the business sector and designed for working with text. By using non-standard vertical and horizontal scan frequencies, it produced sharp, clear characters. CGA, by contrast, supported only standard frequencies and displayed text of lower quality. An IBM PC could even have both adapters installed at the same time.

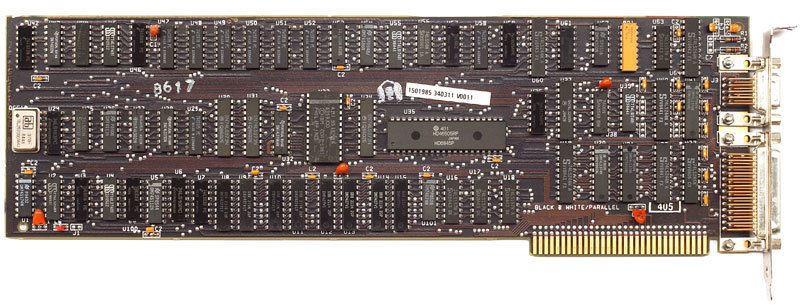

The monochrome video adapter MDA (Monochrome Display Adapter) also served as the standard for the monitors connected to it. MDA supported text mode only (80 columns by 25 rows) and had no graphics modes. It was built around a Motorola 6845 chip and carried 4 KB of video memory. Each character occupied a 9x14 pixel cell, of which the visible glyph took up 7x11 pixels; the remaining pixels formed the empty space between rows and columns. Characters could be invisible, normal, underlined, bold, inverted or blinking, and these attributes could be combined. The color of the characters depended on the monitor (white, amber or green).

The working screen resolution was 720x350 pixels (80x25 characters). Because the MDA adapter worked exclusively in text mode and could not address individual pixels, it simply placed one of 256 characters in each character cell.
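The MDA figures are consistent with one another, as a quick calculation shows. The sketch below is illustrative arithmetic only; the assumption that each cell takes two bytes (one character code plus one attribute byte) is the usual text-mode layout and is not stated in the text above.

```python
# Illustrative arithmetic for the MDA text mode.
COLS, ROWS = 80, 25          # text grid
CELL_W, CELL_H = 9, 14       # character cell size in pixels

width_px = COLS * CELL_W     # horizontal resolution
height_px = ROWS * CELL_H    # vertical resolution

# Assumption: two bytes per cell (character code + attribute byte).
BYTES_PER_CELL = 2
screen_bytes = COLS * ROWS * BYTES_PER_CELL

print(width_px, height_px)       # 720 350
print(screen_bytes, 4 * 1024)    # 4000 4096
```

Under that assumption, one full screen of text needs 4000 bytes, which fits in the 4 KB of video memory.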

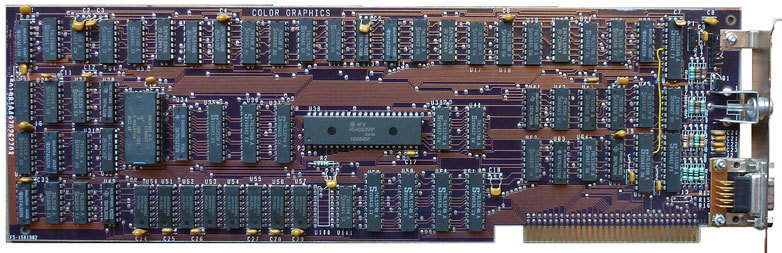

CGA (Color Graphics Adapter) was IBM's first color video card. Unlike MDA, the CGA adapter could also work in graphics modes, displaying both black-and-white and color images. It used the same Motorola MC6845 chip, but the amount of video memory was four times larger, at 16 KB.

In the 40 × 25 character text modes the effective screen resolution was 320 × 200 pixels, and in the 80 × 25 modes it was 640 × 200 pixels. In these text modes, as with MDA, individual pixels could not be addressed. The maximum color depth of the adapter was 4 bits, which gave a palette of 16 colors. 256 different characters were available, and a color from the palette could be chosen separately for each character and for its background.
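A 4-bit color value is commonly described as one intensity bit plus one bit each for red, green and blue. The sketch below illustrates that mapping under stated assumptions: the function name `rgbi_to_rgb` is hypothetical, and the output levels (0x00, 0x55, 0xAA, 0xFF, with dark yellow shown as brown) follow the way typical CGA monitors are usually described, not anything specified in the text above.

```python
def rgbi_to_rgb(index):
    """Convert a 4-bit IRGB color index (0-15) to an approximate 24-bit RGB triple."""
    if not 0 <= index <= 15:
        raise ValueError("color index must be in 0..15")
    intensity = 0x55 if index & 0b1000 else 0x00
    red = (0xAA if index & 0b0100 else 0x00) + intensity
    green = (0xAA if index & 0b0010 else 0x00) + intensity
    blue = (0xAA if index & 0b0001 else 0x00) + intensity
    if index == 6:
        # Assumption: the monitor halves green for color 6,
        # which turns dark yellow into brown.
        green = 0x55
    return red, green, blue

palette = [rgbi_to_rgb(i) for i in range(16)]
```

For example, `palette[1]` is a dark blue `(0, 0, 170)` and `palette[15]` is white `(255, 255, 255)`.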

In graphics modes, by contrast, every pixel could be addressed individually. Only four colors could be used at a time, and they were determined by one of two palettes:

1) magenta, cyan, white and the background color (black by default);

2) red, green, brown / yellow and the background color (black by default).

In the monochrome 640 × 200 mode, only two colors were available: white and black.
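These color limits follow from the 16 KB of video memory. As a rough check (the helper `frame_bytes` is a hypothetical name used only for this illustration):

```python
def frame_bytes(width, height, bits_per_pixel):
    """Bytes needed to store one full frame at the given color depth."""
    return width * height * bits_per_pixel // 8

VIDEO_MEMORY = 16 * 1024  # CGA video memory in bytes

four_color = frame_bytes(320, 200, 2)      # 2 bits per pixel -> 4 colors
two_color = frame_bytes(640, 200, 1)       # 1 bit per pixel  -> 2 colors
sixteen_color = frame_bytes(320, 200, 4)   # 16 colors would need 4 bits per pixel

print(four_color, two_color, sixteen_color)  # 16000 16000 32000
print(sixteen_color <= VIDEO_MEMORY)         # False
```

Both graphics modes need 16,000 bytes per frame, just under the available memory, while a 16-color 320 × 200 frame would need twice as much.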

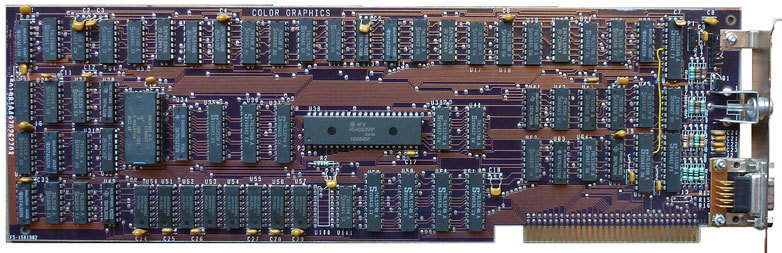

EGA video adapter

The EGA (Enhanced Graphics Adapter) replaced the two previous adapters. IBM released it in 1984 for the IBM PC/AT. It was effectively the first video adapter capable of reproducing a proper color image. EGA supported both text and graphics modes; at a resolution of 640x350 pixels it could display 16 colors at once out of a palette of 64.

The amount of video memory was 64 KB (later increased to 256 KB). The ISA bus was used for data transfer. Because the processor could fill several memory segments in parallel, frames were also drawn faster. To extend the graphics functions of the BIOS, the adapter carried an additional 16 KB of ROM.
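The "16 out of 64" figure and the later memory increase can be checked with simple arithmetic. This sketch assumes 2 bits per red, green and blue channel for the 64-color palette and 4 bits per pixel for 16 simultaneous colors; neither encoding is spelled out in the text above.

```python
# Assumption: the 64-color palette comes from 2 bits per RGB channel.
BITS_PER_CHANNEL = 2
palette_size = (2 ** BITS_PER_CHANNEL) ** 3

# Assumption: 16 simultaneous colors need 4 bits per pixel.
frame_16_colors = 640 * 350 * 4 // 8

print(palette_size)                     # 64
print(frame_16_colors)                  # 112000
print(frame_16_colors <= 64 * 1024)     # False
print(frame_16_colors <= 256 * 1024)    # True
```

Under these assumptions a 16-color 640x350 frame does not fit in 64 KB, which is consistent with the video memory growing to 256 KB over time.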

EGA was the first IBM video adapter that allowed text-mode fonts to be changed in software. The adapter supported three text modes. The first two were standard:

- with a resolution of 80x25 characters and 640x350 pixels;

- with a resolution of 40x25 characters and 320x200 pixels.

The third mode offered 80x43 characters at 640x350 pixels. To use it, one first had to set the 80 × 25 mode and then load the 8 × 8 font with a BIOS call. The frame rate was 60 Hz, while the horizontal scan frequency was 21.8 kHz for 350-line modes and 15.7 kHz for 200-line modes.
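The row counts follow from dividing the 350 scan lines by the height of the font. The 14-line height of the default font is an inference from 350 / 25 and is not stated in the text above.

```python
SCANLINES = 350

rows_default = SCANLINES // 14   # assumed 14-line font -> 25 rows
rows_8x8 = SCANLINES // 8        # 8-line font -> 43 rows
unused_lines = SCANLINES - rows_8x8 * 8

print(rows_default, rows_8x8, unused_lines)  # 25 43 6
```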

MCGA video adapter

In 1987, the MCGA (MultiColor Graphics Adapter) was introduced in the early models of the IBM PS/2 line. It was integrated into the motherboard and was never produced as a separate device.

The amount of video memory was 64 KB, as in the original EGA. The overall palette grew to 262,144 shades thanks to 64 brightness levels for each color component, and the number of colors displayed at once increased to 256.

In 256-color mode, the MCGA resolution was 320x200 pixels at a refresh rate of 70 Hz. There were no bit planes: each pixel on the screen was encoded by its own byte. The adapter supported all CGA modes and also offered a monochrome mode with a resolution of 640x480 pixels at a refresh rate of 60 Hz.
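With one byte per pixel and no bit planes, finding a pixel in memory is a single multiplication and addition. The sketch below models the frame buffer as a Python `bytearray`; the function name `pixel_offset` is hypothetical and the code is not tied to any real hardware interface.

```python
LEVELS_PER_CHANNEL = 64
total_shades = LEVELS_PER_CHANNEL ** 3   # 64 levels each for red, green, blue

WIDTH, HEIGHT = 320, 200

def pixel_offset(x, y):
    """Byte offset of pixel (x, y) in a linear one-byte-per-pixel frame buffer."""
    if not (0 <= x < WIDTH and 0 <= y < HEIGHT):
        raise ValueError("pixel out of range")
    return y * WIDTH + x

frame = bytearray(WIDTH * HEIGHT)        # 64000 bytes, fits in 64 KB
frame[pixel_offset(10, 5)] = 200         # store a palette index (0-255)

print(total_shades, len(frame))          # 262144 64000
```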

When MCGA appeared, most games still supported only the 4-color CGA mode. An analog video signal made it possible to increase the number of displayed colors while staying compatible with the old modes. For this reason, the monitor was connected through a 15-pin connector of the D-Sub family (commonly called DB-15).

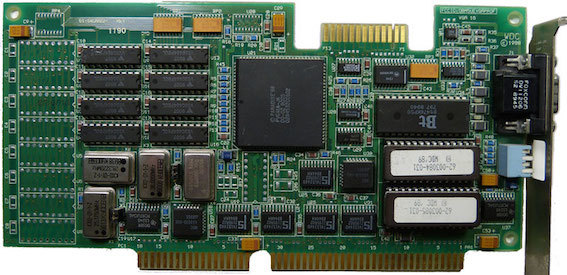

VGA video adapter

Also in 1987, IBM released the revolutionary VGA (Video Graphics Array) adapter. A distinctive feature of VGA was that its main subsystems sat on a single chip, which made the video card more compact.

The VGA architecture consisted of the following subsystems:

- a graphics controller responsible for the exchange of data between the central processor and video memory;

- video memory consisting of 256 KB of DRAM (64 KB for each of the four color layers);

- a sequencer that converts data from video memory into a bit stream transmitted to the attribute controller;

- an attribute controller that converts input to color values;

- a synchronizer that controls the time parameters of the video adapter and switches the color layers;

- a CRT controller that generates synchronization signals for the display.

Displaying more colors required new graphics modes. The standard VGA modes were:

- with a resolution of 640x480 pixels (with 2 and 16 colors);

- with a resolution of 640x350 pixels (with 16 colors and monochrome);

- with a resolution of 640 × 200 pixels (with 2 and 16 colors);

- with a resolution of 320x200 pixels (with 4, 16 and 256 colors).

Programmers worked on pushing VGA beyond its standard resolutions, which led to the non-standard, so-called "X-modes": 256-color modes with resolutions of 320 × 200, 320 × 240 and 360 × 480. These modes used a planar organization of video memory, with the pixels of the image distributed across the four planes. This made all of the card's video memory available for a 256-color image, which in turn allowed higher resolutions.
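The planar layout can be sketched as follows. This is a simplified model, not a driver: it assumes the commonly described arrangement in which consecutive pixels rotate through the four 64 KB planes, and the function name `planar_address` is hypothetical.

```python
PLANES = 4
PLANE_SIZE = 64 * 1024   # 256 KB of video memory split into four planes

def planar_address(x, y, width):
    """Return (plane, offset) for pixel (x, y) in a planar 256-color mode.

    Assumption: pixel x lives in plane x % 4, so each plane holds
    width // 4 bytes per scan line.
    """
    plane = x % PLANES
    offset = y * (width // PLANES) + x // PLANES
    return plane, offset

for width, height in [(320, 200), (320, 240), (360, 480)]:
    per_plane = width * height // PLANES
    print(width, height, per_plane, per_plane <= PLANE_SIZE)
```

Under this model a 320 × 240 frame needs 76,800 bytes in total, more than a single 64 KB plane can hold, but only 19,200 bytes in each of the four planes.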

VGA supported several fonts and text modes. The standard font measured 8x16 pixels, and various combinations of modes and fonts were used for working with text.

Video adapter IBM 8514/A

Following the VGA, the "professional" video adapter IBM 8514/A was released in 1987. It came with either 512 KB (the low-end version) or 1 MB (the high-end version) of video memory. It was not compatible with any of the previous adapters.

With 1 MB of video memory, the IBM 8514/A produced 256-color images at a maximum resolution of 1024 × 768 pixels. With 512 KB of video memory, the same resolution allowed no more than 16 colors. Both versions also supported a lower resolution of 640 × 480 pixels with 256 colors, as well as hardware graphics acceleration.
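The difference between the two memory configurations comes down to frame size. A small check, with `frame_kb` as a hypothetical helper name:

```python
def frame_kb(width, height, bits_per_pixel):
    """Frame size in KB (1 KB = 1024 bytes) at the given color depth."""
    return width * height * bits_per_pixel / 8 / 1024

print(frame_kb(1024, 768, 8))   # 768.0: 256 colors need the 1 MB version
print(frame_kb(1024, 768, 4))   # 384.0: 16 colors fit in 512 KB
print(frame_kb(640, 480, 8))    # 300.0: fits in either version
```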

The video adapter used a standardized software interface called the Adapter Interface (AI).

One of the notable features of the 8514/A was hardware-accelerated drawing: the adapter sped up the drawing of lines and rectangles and the filling of shapes, and it supported BitBLT operations.

The IBM 8514/A had quite a few clones, most of them with an ISA interface. The most popular were the ATI Mach 8 and Mach 32 adapters.

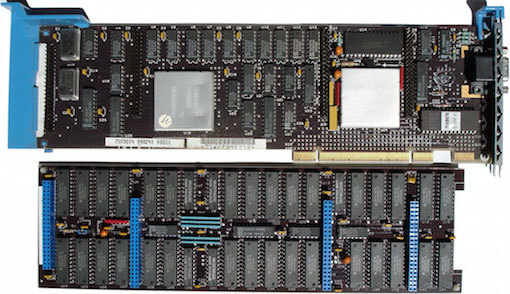

XGA video adapter

In 1990, IBM announced the 32-bit XGA (eXtended Graphics Array) Display Adapter.

XGA used 512 KB of VRAM as video memory. It supported a resolution of 640x480 pixels with 16-bit color, as well as 256-color images at 1024x768 pixels.

In 1992, the company presented an updated version of the adapter, XGA-2. The second model did not differ much from the first. The amount of video memory increased to 1 MB, and a faster type of VRAM was used. The adapter additionally supported a 1360x1024 mode with 16 colors. XGA-2 did not use interlaced scanning in high-resolution modes.

SVGA video adapter

Super VGA (Super Video Graphics Array), introduced in 1989, was a generation of video adapters that were compatible with VGA but could work at higher resolutions and with more colors. SVGA supported resolutions of 800 × 600 and above and up to 16 million colors. Because the devices had no precise common specification, there was no SVGA standard as such. Instead, almost all SVGA video adapters followed the common software interface defined by VESA (Video Electronics Standards Association). The VESA standard covered a range of resolutions, the most common video modes being 800 × 600, 1024 × 768, 1280 × 1024 and 1600 × 1200.

A characteristic feature of SVGA cards was a built-in accelerator.

Video adapter S3 ViRGE

The S3 Virtual Reality Graphics Engine (ViRGE) was one of the pioneers of the 2D/3D accelerator market. It was released in 1995 with one main goal: to accelerate three-dimensional graphics in real time.

S3 ViRGE had an integrated 64-bit 2D/3D accelerator with a TV-out and a standard set of filters, so a television screen could be used as a monitor. Memory capacity reached 4 MB, and the built-in digital-to-analog converter ran at 170 MHz. The graphics chip was clocked at 66 MHz and used the PCI interface. Direct3D, BRender, RenderWare, OpenGL and the native S3D API were supported.

Despite its intended purpose, S3 ViRGE worked better in 2D (for example, when drawing the Windows GUI). When rendering three-dimensional images, performance dropped significantly.

ATI Rage II Video Adapter

Starting in 1996, ATI Technologies released the ATI Rage series of graphics chipsets with acceleration of 2D and 3D graphics and video. The best known was the ATI Rage II. Its graphics processor was based on a redesigned Mach64 GUI core, complemented by 3D support and MPEG-2 video acceleration. The amount of video memory was 2 MB, 4 MB or 8 MB. The SGRAM memory ran at up to 83 MHz, and the graphics core at 60 MHz.

The chip also had drivers for Microsoft Direct3D and Reality Lab, QuickDraw 3D Rave, Criterion RenderWare, and Argonaut BRender. Rage II was used in some Macintosh computers and in the iMac G3 prototype (Rage II+).

The Rage II range consisted of the IIC, II+ and II+ DVD models, which differed in processor frequency and memory size. In the Rage II+ DVD, the core and memory frequencies were 60 MHz (up to 83 MHz with SGRAM), and memory bandwidth reached 480 MB/s.

Video adapter RIVA 128

RIVA 128 (Real-time Interactive Video and Animation accelerator) was launched by Nvidia in 1997. It was the company's first graphics chip to gain wide recognition, and it combined the functions of a 2D and a 3D accelerator.

The RIVA 128 was designed to be compatible with Direct3D 5 and the OpenGL API. The chip was made on a 350-nanometer process and contained 3.5 million transistors. The core ran at up to 100 MHz. The video card used 4 MB of SGRAM on a 128-bit memory bus with a bandwidth of 1.6 GB/s. RIVA 128 worked over the PCI interface as well as through an AGP 1x port.
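The 1.6 GB/s figure matches a simple peak-bandwidth estimate. The sketch assumes the memory runs at the same 100 MHz as the core and moves one bus-width of data per clock; the text above gives only the bus width and the resulting bandwidth, and the function name is hypothetical.

```python
def peak_bandwidth_gb_s(bus_width_bits, clock_mhz, transfers_per_clock=1):
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * clock_mhz * 1e6 * transfers_per_clock / 1e9

# Assumption: 100 MHz memory clock, one transfer per clock.
riva128 = peak_bandwidth_gb_s(128, 100)
print(round(riva128, 2))  # 1.6
```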

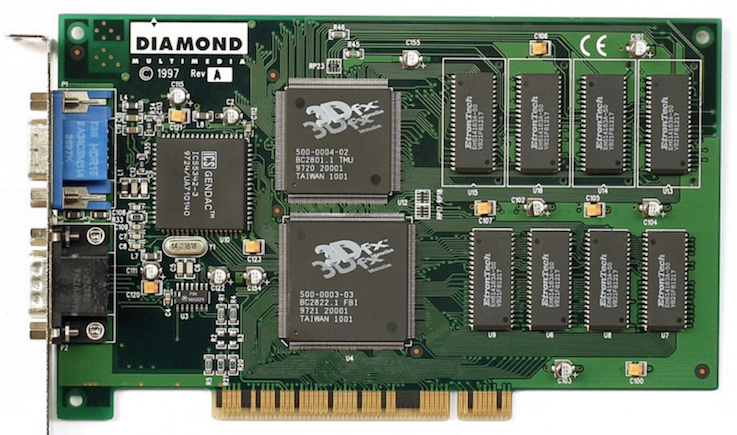

Voodoo Video Adapters

3Dfx released a whole generation of video adapters. The young team's first product was Voodoo Graphics, released in 1996. The hardware was first used in arcade machines; the first such game was ICE Home Run Derby. Later, the company positioned its product as high-performance, high-quality 3D graphics technology for computer games.

The Voodoo Graphics processor and memory ran at 50 MHz; the board supported DirectX 3 and used the PCI interface. It carried 4 MB of EDO memory on a 64-bit memory interface. The board accelerated only three-dimensional graphics, so a separate 2D video card was needed for ordinary two-dimensional software. That card was connected by a VGA pass-through cable to the input of the Voodoo board, and the monitor was plugged into the Voodoo's second (output) connector.

In 1997, a new product was released: Voodoo Rush, a combination of the Voodoo Graphics chipset and a two-dimensional graphics chip. Most cards used the Alliance Semiconductor AT25 / AT3D as the 2D component, although some used Macronix 2D chips. Voodoo Rush had the same specifications as its predecessor, but in practice it was significantly slower. The reason was that the Voodoo Rush chipset and the CRTC of the 2D chip shared the same memory. In addition, the Voodoo Rush chipset was not connected directly to the PCI bus.

In 1998, the company released the Voodoo2 chipset, which kept the Voodoo Graphics architecture and added a second texture processor. This made it possible to apply two textures in one pass, which greatly increased performance. The chip worked only with three-dimensional images. Its frequency was 90-100 MHz, and it used 8 MB or 12 MB of EDO DRAM. In 16-bit color, the resolution reached 1024x768 pixels with 12 MB of memory and 800x600 with 8 MB. A notable innovation was SLI (Scan-Line Interleave) technology, which allowed two Voodoo2 boards to work together. The boards were connected with a special cable, and each processed half of the lines on the screen.
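The idea behind scan-line interleaving can be sketched in a few lines. This is a conceptual model only: the function name `split_scanlines` is hypothetical, and which board takes the even lines is an arbitrary assumption.

```python
def split_scanlines(height, boards=2):
    """Assign each scan line to a board in round-robin (interleaved) order."""
    return [list(range(board, height, boards)) for board in range(boards)]

board_a, board_b = split_scanlines(768)

print(board_a[:3], board_b[:3])     # [0, 2, 4] [1, 3, 5]
print(len(board_a), len(board_b))   # 384 384
```

Each board renders every other line, so the per-frame work is split evenly between the two.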

In 1999, the company released its third generation of graphics cards, Voodoo3, which combined 2D and 3D accelerators on one board. The core and memory frequencies were 143 MHz, and the card carried up to 16 MB of SGRAM. It supported 16-bit color, and the maximum resolution in 3D was 1600x1200 pixels. The card used the PCI or AGP 2x interface.

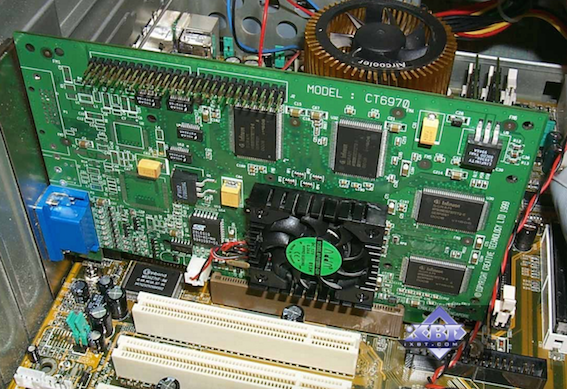

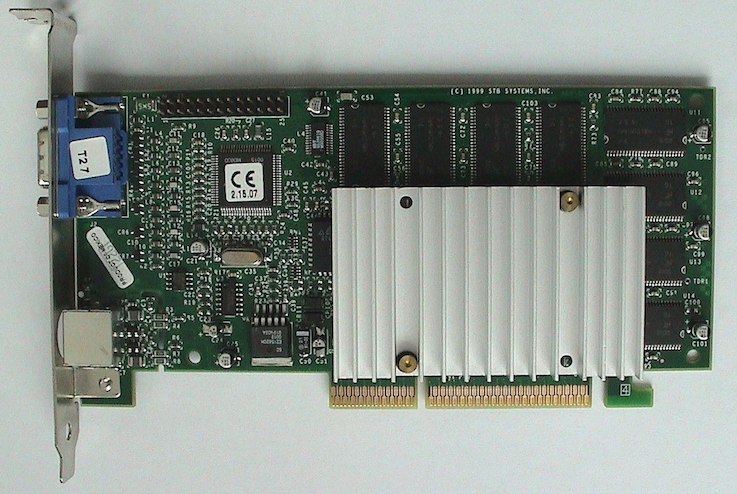

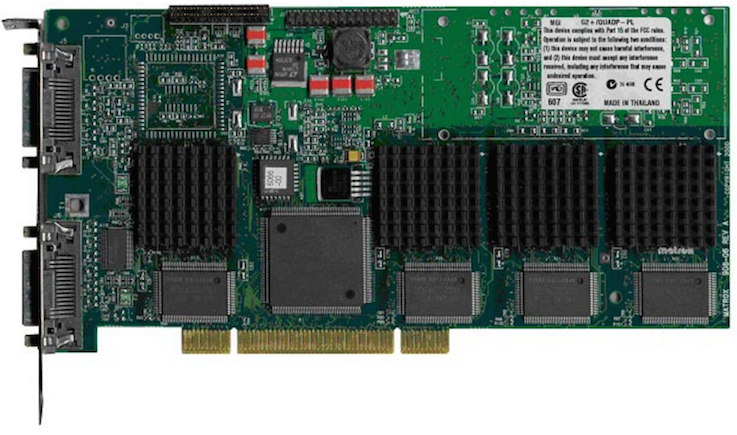

Video adapter Matrox G200

In 1998, Matrox introduced its 3D accelerator, the G200. Its architecture included several interesting technologies. One was SRA (Symmetric Rendering Architecture), which allowed graphics data to be read from and written to system memory and so increased the speed of the card. The G200 also supported VCQ (Vibrant Color Quality) technology, which used 32-bit color for rendering regardless of the color depth of the final image. All operations were carried out in 32-bit mode, and only then, if the final picture was 16-bit, were the colors reduced. This gave the best image quality available at the time.
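The benefit of computing at full precision and reducing only at the end can be shown with a small sketch. It is not Matrox's implementation: the 5-6-5 bit layout for 16-bit color and the plain truncation of the low bits are assumptions, and both function names are hypothetical.

```python
def rgb888_to_rgb565(r, g, b):
    """Pack an 8-bit-per-channel color into 16 bits (5-6-5) by dropping low bits."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def blend(c1, c2):
    """Average two colors at full 8-bit-per-channel precision."""
    return tuple((a + b) // 2 for a, b in zip(c1, c2))

# Blend at full precision first, reduce to 16 bits only for the final pixel.
mixed = blend((255, 128, 0), (0, 128, 255))
pixel16 = rgb888_to_rgb565(*mixed)

print(mixed, hex(pixel16))  # (127, 128, 127) 0x7c0f
```

Because the reduction happens once, at the very end, rounding errors do not accumulate across intermediate operations.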

The G200 supported 8 MB or 16 MB of SGRAM or SDRAM and had a built-in RAMDAC. DIME (Direct Memory Execute) was used to speed up the transfer of textures from system RAM.

The G200 chip had a 128-bit core. To increase 2D performance, it used the DualBus memory architecture, with two 64-bit buses and a pair of command pipelines. Very high resolutions were supported: in 3D, up to 1280x1024 pixels at 32-bit color depth.

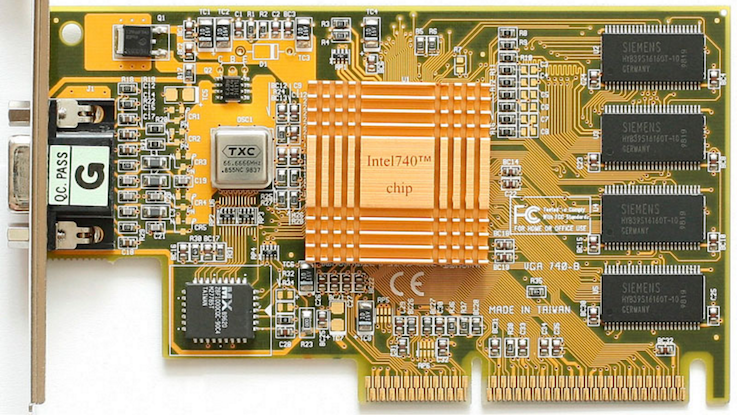

Video adapter Intel i740

In 1998, Intel introduced its i740 graphics adapter. It was intended primarily for systems based on Pentium II processors.

The adapter was made on a 350-nanometer process. The core and video memory frequencies were 66 MHz, and the memory bus was 64 bits wide. It carried up to 16 MB of SDRAM or SGRAM and used the AGP or PCI bus. The video card supported bilinear and trilinear texture filtering. The maximum resolution was 1280 × 1024 pixels in 16-bit color and 1600 × 1200 in 8-bit color.

Video adapters RIVA TNT and TNT2

RIVA TNT (Real-time Interactive Video and Animation accelerator TwiN Texel, code-named NV4) is an NVIDIA graphics chip released in 1998. The new chip contained 7 million transistors and ran at 90 MHz. It used 16 MB of SDRAM on a 128-bit memory bus. The card supported a color depth of up to 32 bits and textures of 1024x1024 pixels.

The RIVA TNT supported Twin-Texel technology (the ability of the chip to work on two texels simultaneously), which made it possible to apply two textures to a pixel per clock cycle in multitexturing mode. This greatly increased the fill rate.

In 1999, the company released the TNT2 (code-named NV5). The model largely matched its predecessor but added support for AGP 4x and up to 32 MB of video memory. The manufacturing process shrank from 0.35 microns to 0.25 microns, which made it possible to raise the processor frequency to 150 MHz. The rendering unit was refined, and the RAMDAC frequency was raised to 300 MHz, which allowed the card to work at very high resolutions. Support for 32-bit color in 3D was added, along with support for textures of up to 2048 × 2048 pixels. In total, four TNT2 variants were brought to market.

Video adapter ATI Rage 128

In 1999, the ATI Rage 128 graphics card was released, manufactured on a 350-nanometer process. The core and memory frequencies were 103 MHz, and the RAMDAC ran at 250 MHz. The card carried up to 32 MB of memory on a 128-bit bus and supported 32-bit color.

The video card supported single-pass trilinear filtering and hardware-accelerated DVD video. In addition, Rage 128 used the Twin Cache Architecture, which combined pixel and texture caches to increase bandwidth. The chip also featured superscalar rendering (SSR, Super Scalar Rendering), processing two pixels simultaneously in two pipelines.

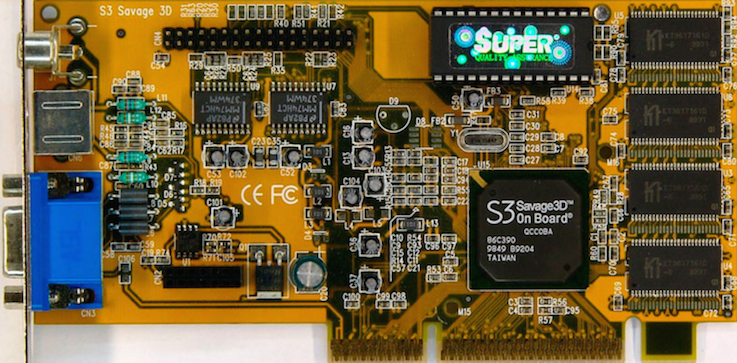

Video adapters S3 Savage 3D and Savage4

S3 Graphics entered the market for high-performance 3D accelerators in 1998, when it announced the Savage 3D video card. Its highlighted features were single-pass trilinear filtering, support for the S3TC texture compression algorithm and the MPEG-2 video standard, and a TV-out. Savage 3D supported the AGP 2x interface. It had 8 MB of video memory on a 64-bit bus, and the core ran at 125 MHz. In 2D mode, it reached a resolution of 1600x1200 pixels at a screen refresh rate of 85 Hz.

In 1999, the Savage4 3D accelerator was released, produced on a 250-nanometer process. The operating frequency remained 125 MHz, the amount of memory increased to 32 MB, and the memory bus stayed at 64 bits.

Savage4 introduced support for single-pass multitexturing and the AGP 4x interface. The video card also supported single-pass trilinear filtering. Thanks to the good quality of this filtering and to S3TC texture compression, Savage4 produced a high-quality image. The video card could also act as a DVD decoder.

Video adapter GeForce 256

Also in 1999, NVIDIA released the GeForce 256 adapter (code-named NV10), which pulled ahead of its competitors thanks to its feature set. It was a very powerful 3D accelerator and one of the first to include a built-in geometry coprocessor. It had four rendering pipelines running at 120 MHz and 32 MB of SDRAM. The video memory bus was 128 bits wide and ran at 166 MHz. Resolutions of up to 2048x1536 at 75 Hz were supported.
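These numbers give a rough idea of the theoretical peak throughput. The estimate assumes each pipeline outputs one pixel per clock and the memory bus moves one bus-width of data per clock; neither assumption is stated in the text above.

```python
PIPELINES = 4
CORE_MHZ = 120
# Assumption: one pixel per pipeline per clock.
fill_rate_mpixels = PIPELINES * CORE_MHZ

BUS_BITS = 128
MEMORY_MHZ = 166
# Assumption: one transfer per memory clock.
bandwidth_gb_s = BUS_BITS / 8 * MEMORY_MHZ * 1e6 / 1e9

print(fill_rate_mpixels)          # 480
print(round(bandwidth_gb_s, 2))   # 2.66
```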

The GeForce 256 included an integrated processor for geometry transformation and lighting (T&L), cube texturing with environment maps, projective textures, and texture compression.

Video adapters MDA and CGA

Both models were released by IBM in 1981. MDA was originally focused on the business sector and was created to work with text. Working with non-standard vertical and horizontal frequencies, this adapter provides clarity of character images. At the same time, CGA supported only standard frequencies and was inferior in quality of the displayed text. By the way, in IBM PC it was possible to use both adapters at the same time.

Monochrome video adapter MDA (Monochrome Display Adapter) was presented as a standard for monitors connected to it. MDA supported exclusively text mode (80 columns per 25 rows), without graphical modes. A Motorola Motorola 6845 chip was used as a core; the amount of video memory reached 4 Kb. Symbols were represented using a 9x14 pixel matrix, where the visible part of the symbol was composed as 7x11, and the remaining pixels formed an empty space between rows and columns. Symbols could be invisible, ordinary, underlined, bold, inverted and blinking. Attributes could be combined. Depending on the monitor, the color of the characters changed (white, amber, emerald).

The working screen resolution was 720x350 pixels (80x25 characters). Since the MDA adapter worked exclusively in text mode and could not address individual pixels, it simply placed one of 256 characters in each familiarity.

CGA (Color Graphics Adapter) - the first "color" video card. Unlike MDA, the CGA video adapter functioned in graphics mode, supporting both black and white and color images. The Motorola MC6845 chip was also used as the core, but the amount of video memory increased four times and reached 16 Kb.

In text modes of 40 × 25 characters, the effective screen resolution was 320 × 200 pixels, and in modes 80 × 25 - 640 × 200 pixels. At the same time, like the first model, CGA did not have the ability to access each pixel separately. The maximum color depth of the adapter was 4 bits, which allowed the use of a palette of 16 colors. 256 different characters were available. From the palette, it was possible to choose a color for each character and for the background.

CGA palette:

But in graphics modes, it was possible to access any single pixel. Only four colors were used at a time, which were determined by two palettes:

1) purple, blue-green, white and background color (black by default);

2) red, green, brown / yellow and background color (black by default).

Of course, in monochrome 640 × 200 pixels, only two colors were available - white and black.

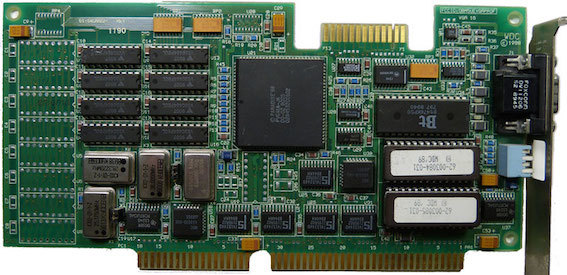

EGA video adapter

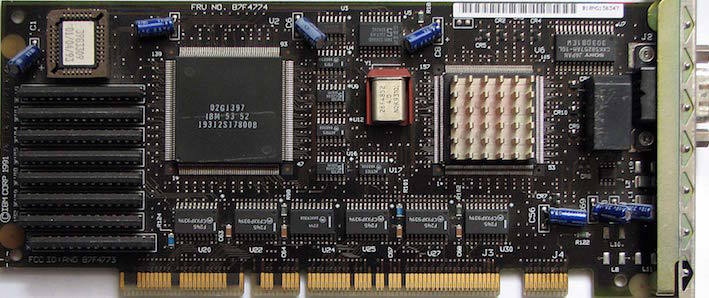

The EGA video adapter replaced the two previous ones. It was released by IBM in 1984 for the IBM PC / AT. In fact, this is the first video adapter that was able to reproduce a normal color image. EGA supported both text and graphic modes. In this case, it was possible to use 16 colors out of 64 possible with a resolution of 640x350 pixels.

The amount of video memory was 64 Kb (but over time it increased to 256 Kb). For data transfer, the ISA bus was used. Due to the processor’s ability to fill segments in parallel, the frame filling speed has also increased. To expand the graphic functions of the BIOS, the video adapter was equipped with an additional 16 KB of ROM.

EGA is the first IBM video adapter to programmatically change text mode fonts. The adapter supported three text modes. The first two were standard:

- with a resolution of 80x25 characters and 640x350 pixels;

- with a resolution of 40x25 characters and 320x200 pixels.

But the resolution of the third mode was 80x43 characters and 640x350 pixels. To use it, it was necessary to preset the 80 × 25 mode and load the 8 × 8 font using the BIOS command. The frame rate is 60 Hz, but 21.8 KHz for 350 lines and 15.7 KHz for 200 lines could be used.

MCGA video adapter

In 1987, the MCGA (MultiColor Graphics Adapter), a multi-color graphics adapter, was introduced in early models of computers from IBM PS / 2. It was integrated into the motherboard and was not produced as a separate device.

The amount of video memory was 64 KB, as in EGA. The general palette has expanded - up to 262,144 shades due to the introduction of 64 brightness levels for each color. The number of colors displayed increased to 256.

In 256-color mode, the MCGA resolution was 320x200 pixels, with a refresh rate of 70 Hz. There were no bit planes, each pixel on the screen was encoded with a corresponding byte. The adapter supported all CGA modes, worked in monochrome mode with a resolution of 640x480 pixels and a refresh rate of 60 Hz.

During the rise of MCGA, most games were only supported in 4-color CGA mode. And with the help of an analog signal, it was possible to adjust to the increase in the displayed colors, while maintaining compatibility with old modes. Therefore, the connection to the monitor was carried out by the DB-15 connector of the D-Sub family.

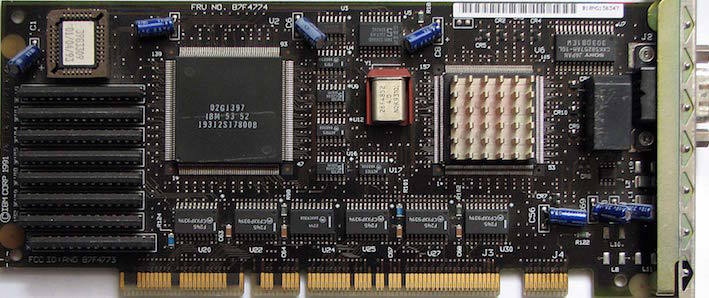

VGA video adapter

In the same year, IBM released the revolutionary VGA (Video Graphics Array) adapter. A feature of VGA was the location of the main subsystems on a single chip, which made the video card more compact.

The VGA architecture consisted of subsystems:

- a graphics controller responsible for the exchange of data between the central processor and video memory;

- video memory with a capacity of 256 KB DRAM (64 KB for each color layer);

- a sequencer that converts data from video memory into a bit stream transmitted to the attribute controller;

- an attribute controller that converts input to color values;

- a synchronizer that controls the time parameters of the video adapter and switches the color layers;

- a CRT controller that generates synchronization signals for the display.

There were more colors displayed and new graphics modes were required. VGA had standard modes:

- with a resolution of 640x480 pixels (with 2 and 16 colors);

- with a resolution of 640x350 pixels (with 16 colors and monochrome);

- with a resolution of 640 × 200 pixels (with 2 and 16 colors);

- with a resolution of 320x200 pixels (with 4, 16 and 256 colors).

Programmers worked on increasing the resolution of VGA, as a result of which there were non-standard, the so-called “X-modes” of 256 colors with a resolution of 320 × 200, 320 × 240 and 360 × 480. Non-standard modes used the planar organization of video memory (color formation of 2 bits from each plane). Such organization of video memory helped to use the entire video memory of the card to form a 256-color image. This allowed the use of higher resolutions.

VGA supported several types of fonts and modes. The standard font has a resolution of 8x16 pixels. To work with text, various combinations of several modes and types of fonts were used.

Video adapter IBM 8514 / A

Following the VGA in 1987, the "professional" video adapter IBM 8514 / A was released, which was released with 512 KB (low version) and 1 MB (high version) of video memory. It did not combine with any of the previous adapters.

With 1 MB of IBM 8514 / A video memory, 256 color images were created with a maximum resolution of 1024 × 768 pixels. In the case of 512 KB of video memory, the resolution also gave no more than 16 colors. The versions also supported a lower resolution of 640 × 480 pixels with 256 colors and hardware graphics acceleration.

The video adapter used the standardized software interface “Adapter Interface” or AI.

One of the notable features of the 8514 / A was support for hardware-accelerated drawing, with which the video adapter accelerated the creation of lines and rectangles, fill shapes, and supported BitBLT technology.

The IBM 8514 / A video adapter had quite a few clones. Most of them had ISA interface support. The most popular of the copies were ATI adapters - Mach 8 and Mach 32.

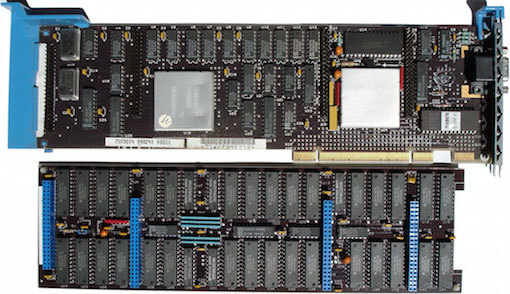

XGA video adapter

In 1990, IBM announced the release of the 32-bit XGA (eXtended Graphics Array) Display Adapter.

XGA used a 512KB VRAM type of video memory. It supported a resolution of 640x480 pixels with 16-bit color, as well as a 256-color image with a resolution of 1024x768 pixels.

In 1992, the company presented an updated version of the video adapter - XGA-2. The second model was not much different from the first. The amount of video memory increased by 1 MB, an accelerated version of VRAM was used. The video adapter additionally supported 1360x1024 -16 colors. XGA-2 did not use interlaced scanning in high-resolution modes.

SVGA video adapter

In 1989, Super VGA (Super Video Graphics Array) introduced a generation of video adapters that are compatible with VGA, but capable of functioning in higher resolution and with more colors. SVGA supported resolutions from 800 × 600 and the number of colors to 16 million. Since there were no clear specifications for the devices, as such, the SVGA standard did not exist. Therefore, almost all SVGA video adapters followed the single program interface of the VESA (Video Electronic Standards Association). The VESA standard required all permissions. The most common were video modes: 800 × 600, 1024 × 768, 1280 × 1024, 1600 × 1200.

A characteristic feature of SVGA was the built-in accelerator.

Video adapter S3 ViRGE

The S3 Virtual Reality Graphics Engine (ViRGE) is one of the pioneers in the 2D / 3D accelerator market. It was released in 1995 with the main goal - to accelerate three-dimensional graphics in real time.

S3 ViRGE had a 64-bit integrated 2D / 3D accelerator with a TV-out and a standard set of filters. That is, a television screen could be used as a monitor. The memory capacity reached 4 MB, there was a built-in digital-to-analog converter at 170 MHz. The frequency of the GPU was 66 MHz. The interface used was PCI. Support was provided for Direct3D, BRender, RenderWare, OpenGL, and the native S3D API.

Despite its intended purpose, S3 ViRGE worked better in 2D mode (for example, with Windows GUI processing). When processing three-dimensional images, performance dropped significantly.

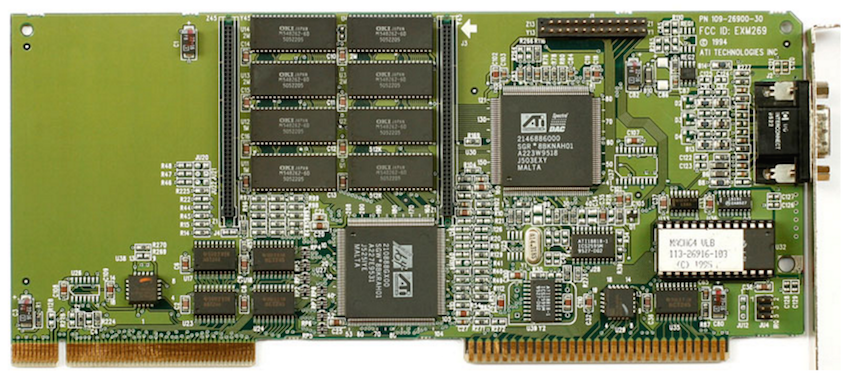

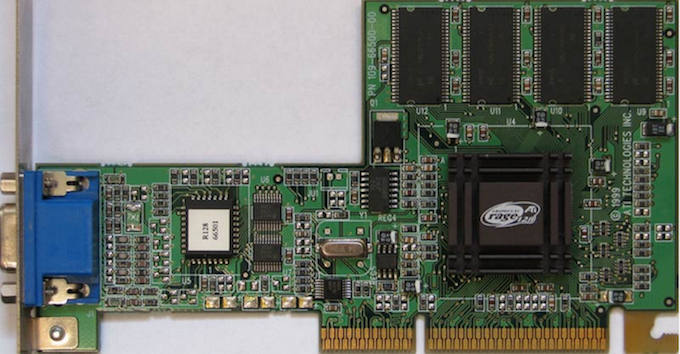

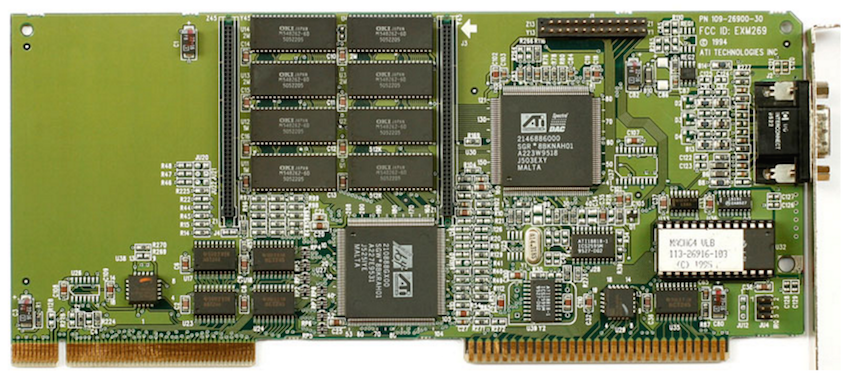

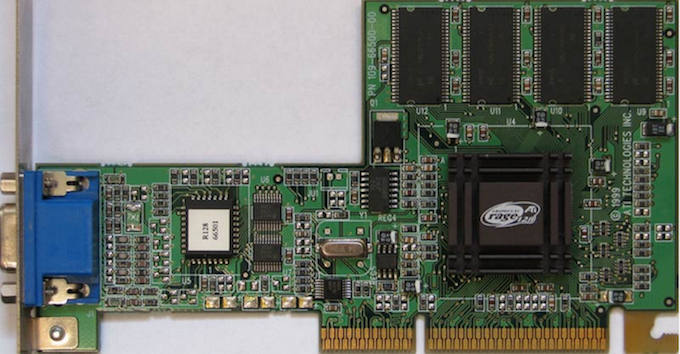

ATI Rage II Video Adapter

Since 1996, ATI Technologies has launched the ATI Rage series of graphics chipsets with acceleration of 2D, 3D graphics and video. The most famous was the ATI Rage II graphics card. The graphics processor was based on a redesigned Mach64 GUI core, complemented by 3D support and an MPEG-2 video acceleration function. The amount of video memory was 2 MB, 4 MB or 8 MB. The memory frequency of the SGRAM type reached 83 MHz, and the graphics core operated at a frequency of 60 MHz.

The chip also had drivers for Microsoft Direct3D and Reality Lab, QuickDraw 3D Rave, Criterion RenderWare, and Argonaut BRender. Rage II was used on some Macintosh computers and on the iMac G3 prototype (Rage II +).

The range of Rage II video cards was presented by IIC, II + and II + DVD models, which differed in processor frequency and memory size. In Rage II + DVD, the core and memory frequencies were 60 MHz, there was up to 83 MHz SGRAM, and the memory bandwidth reached 480 Mb / s.

Video adapter RIVA 128

RIVA 128 (Real-time Interactive Video and Animation accelerator) was launched in 1997 by Nvidia. This was the company's first GPU to become famous. This video card combines the functions of both a 2D and a 3D accelerator.

The RIVA 128 was designed with compatibility with Direct3D 5 and the OpenGL API. On the chip of this graphics processor, made by 350-nanometer process technology, housed 3.5 million transistors. The core operating frequency reached 100 MHz. The video card used 4 MB SGRAM memory. The memory bus was 128 bits wide with a bandwidth of 1.6 GB / s. RIVA 128 worked through the PCI interface, as well as through the AGP 1x port.

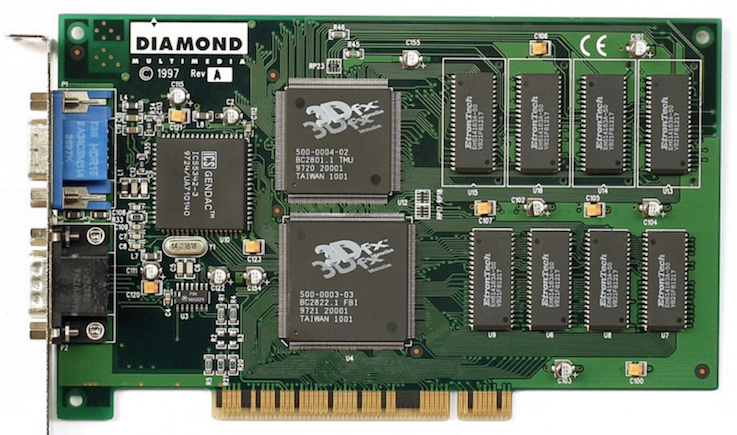

Voodoo Video Adapters

3Dfx released a whole generation of video adapters. The first development of the young team was Voodoo Graphics, released in 1996. A set of hardware was used in arcade games. The first such game was ICE Home Run Derby. Subsequently, the company positioned its product as high-performance and high-quality three-dimensional graphics technology for computer games.

The Voodoo Graphics processor and memory ran at 50 MHz. The card supported DirectX 3 and used the PCI bus. It carried 4 MB of EDO memory on a 64-bit memory interface. The board accelerated only 3D graphics, so a separate 2D video card was required for ordinary 2D software. The 2D card's output was connected by a VGA pass-through cable to the input of the Voodoo board, and the monitor was plugged into the Voodoo's second (output) connector.

In 1997, a new product was released: Voodoo Rush, which combined the Voodoo Graphics chipset with a 2D graphics chipset on one board. Most cards used the Alliance Semiconductor AT25 / AT3D as the 2D component, although some used Macronix 2D chips. On paper, Voodoo Rush had the same specifications as its predecessor, but in practice it was significantly slower. The reason was that the Voodoo chipset and the CRTC of the 2D chipset shared the same memory, which reduced performance. In addition, the Voodoo chipset was not connected directly to the PCI bus.

In 1998, the company released the Voodoo2 chipset, which kept the Voodoo Graphics architecture and added a second texture processor. This made it possible to apply two textures in one pass, which significantly increased performance. The chip handled only 3D graphics. It ran at 90-100 MHz and used 8 MB or 12 MB of EDO DRAM. In 16-bit color, resolution reached 1024x768 pixels with 12 MB of memory and 800x600 with 8 MB. The key innovation was SLI (Scan-Line Interleave) technology, which allowed two Voodoo2 boards to work together. The boards were connected by a special cable, and each rendered half of the lines on the screen.
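The division of work in SLI can be sketched in a few lines. This is only an illustration of the idea, assuming the usual reading of "interleave" in which the boards take alternating scanlines; the function name is hypothetical and nothing here models the actual hardware.

```python
def assign_scanlines(height, boards=2):
    """Map each board index to the scanlines it renders, in interleaved order."""
    return {board: list(range(board, height, boards)) for board in range(boards)}

lines = assign_scanlines(768)
print(len(lines[0]), len(lines[1]))   # 384 384
print(lines[0][:3], lines[1][:3])     # [0, 2, 4] [1, 3, 5]
```

Because neighboring lines usually carry a similar amount of work, alternating them tends to balance the load between the two boards better than splitting the screen into a top and a bottom half.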

In 1999, the company released the third generation of its graphics cards, Voodoo3, which combined 2D and 3D accelerators on one board. The core and memory ran at 143 MHz, and the card carried up to 16 MB of SGRAM. The video card supported 16-bit color. The maximum resolution in 3D was 1600x1200 pixels. The card used a PCI or AGP 2x interface.

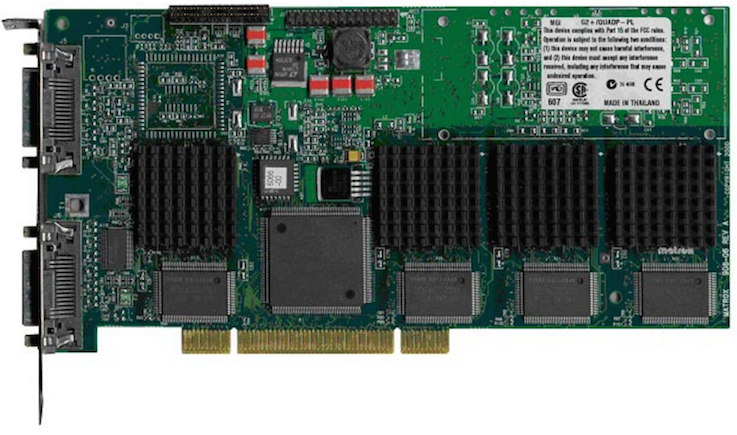

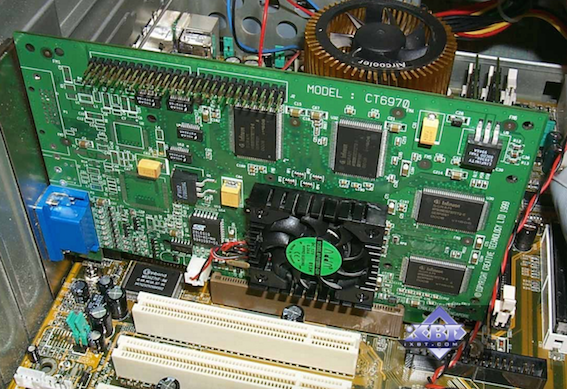

Video adapter Matrox G200

In 1998, Matrox introduced its 3D accelerator, the G200. Its architecture included several interesting technologies. One was SRA (Symmetric Rendering Architecture), which allowed graphics data to be both read from and written to system memory, increasing the speed of the card. The G200 also supported VCQ (Vibrant Color Quality), which rendered in 32-bit color regardless of the color depth of the final image. That is, all operations were performed in 32-bit mode, and the result was reduced to 16 bits only at the end, if the output image was 16-bit. This gave the best image quality available at the time.
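The final reduction step in VCQ can be illustrated as follows. This is a minimal sketch, assuming the 16-bit output uses the common RGB565 layout and plain truncation of the low bits; the text does not say which 16-bit format or rounding the G200 used, and real hardware may have dithered instead.

```python
def rgb888_to_rgb565(r, g, b):
    """Pack 8-bit channels into 16 bits: 5 bits red, 6 bits green, 5 bits blue."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

# Intermediate math stays at 8 bits per channel; precision is dropped only once.
pixel = (200, 100, 50)
print(hex(rgb888_to_rgb565(*pixel)))  # 0xcb26
```

Keeping intermediate results at full precision means rounding error is introduced once at the end rather than after every blending step.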

The G200 supported 8 MB or 16 MB of SGRAM or SDRAM and had a built-in RAMDAC. To speed up the transfer of textures from system RAM, it used DIME (Direct Memory Execute).

The G200 chip had a 128-bit core. To increase 2D performance, it used the DualBus memory architecture, with two 64-bit buses and a pair of command pipelines. Very high resolutions were supported: in 3D, up to 1280x1024 pixels at 32-bit color depth.

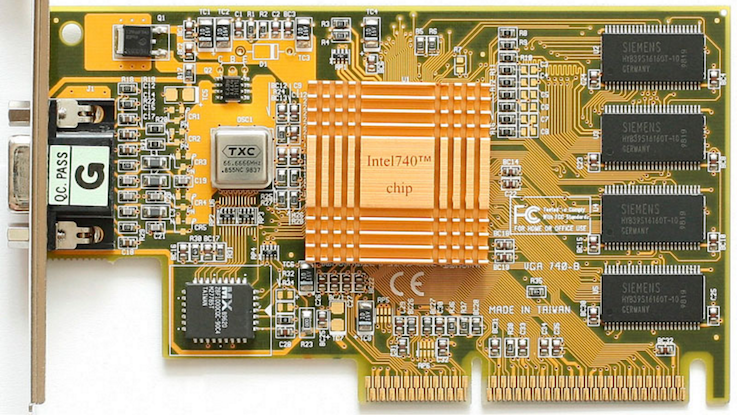

Intel i740 video adapter

In 1998, Intel introduced its Intel i740 graphics adapter. This model was primarily intended for systems based on Pentium II processors.

The adapter was manufactured on a 350-nanometer process. The core and video memory ran at 66 MHz, and the memory bus was 64 bits wide. It supported up to 16 MB of SDRAM or SGRAM and used an AGP or PCI interface. The video card supported bilinear and trilinear texture filtering. The maximum resolution was 1280 × 1024 pixels in 16-bit color and 1600 × 1200 in 8-bit color.
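The trade-off between resolution and color depth can be put in numbers by estimating how much memory one frame occupies. The sketch below counts a single displayed frame only; double buffering, a depth buffer, and textures would add to it, and the function name is illustrative.

```python
def framebuffer_bytes(width, height, bits_per_pixel):
    """Memory needed for one frame at the given resolution and color depth."""
    return width * height * bits_per_pixel // 8

for width, height, bpp in [(1280, 1024, 16), (1600, 1200, 8)]:
    size = framebuffer_bytes(width, height, bpp)
    print(f"{width}x{height} @ {bpp}-bit: {size / 2**20:.2f} MiB")
# 1280x1024 @ 16-bit: 2.50 MiB
# 1600x1200 @ 8-bit: 1.83 MiB
```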

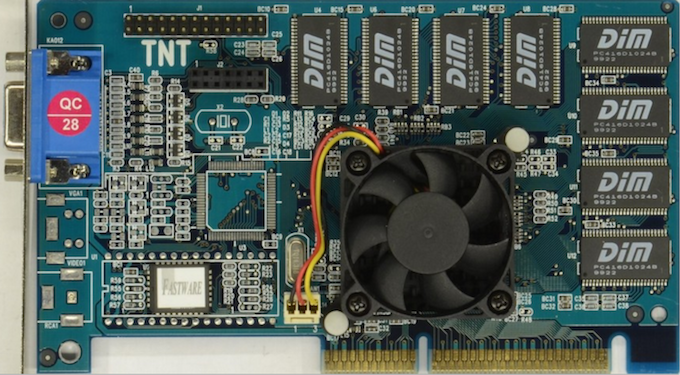

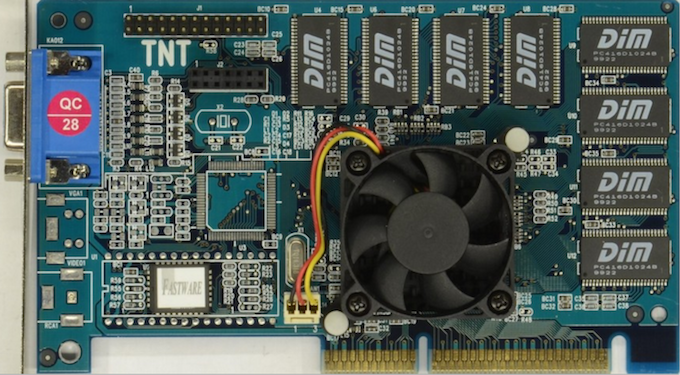

RIVA TNT and TNT2 video adapters

RIVA TNT (Real-time Interactive Video and Animation accelerator, TwiN Texel; code-named NV4) is an NVIDIA graphics chip released in 1998. The chip contained 7 million transistors and ran at 90 MHz. Cards used 16 MB of SDRAM on a 128-bit memory bus. The chip supported 32-bit color and textures of up to 1024x1024 pixels.

The RIVA TNT supported Twin-Texel technology (the ability of the chip to process two texels simultaneously), which made it possible to apply two textures to a pixel per clock cycle in multitexturing mode. This greatly increased the fill rate.
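What applying two textures to one pixel means can be shown with a small software sketch. It assumes a "modulate" blend (channel-wise multiplication) of a base texture with a light map, which was a typical use of multitexturing but is not specified in the text; the function names are illustrative.

```python
def modulate(texel_a, texel_b):
    """Multiply two RGB texels channel by channel (each channel is 0-255)."""
    return tuple(a * b // 255 for a, b in zip(texel_a, texel_b))

def shade_single_pass(base_texture, light_map):
    """Combine both textures for every pixel in a single traversal."""
    return [modulate(base, light) for base, light in zip(base_texture, light_map)]

base = [(255, 128, 0), (10, 20, 30)]
light = [(255, 255, 255), (128, 128, 128)]
print(shade_single_pass(base, light))  # [(255, 128, 0), (5, 10, 15)]
```

Without this capability, the same result requires drawing the geometry twice and blending the second pass over the first, which roughly doubles the work per pixel.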

In 1999, the company released the TNT2 (code-named NV5). The chip largely matched its predecessor but added support for the AGP 4x interface and up to 32 MB of video memory. The manufacturing process shrank from 0.35 microns to 0.25 microns, which made it possible to raise the core frequency to 150 MHz. The rendering unit was refined, and the RAMDAC frequency was raised to 300 MHz, which allowed the card to drive very high resolutions. The TNT2 also offered 32-bit color in 3D and support for textures of up to 2048 × 2048 pixels. In total, four TNT2 variants were brought to market.

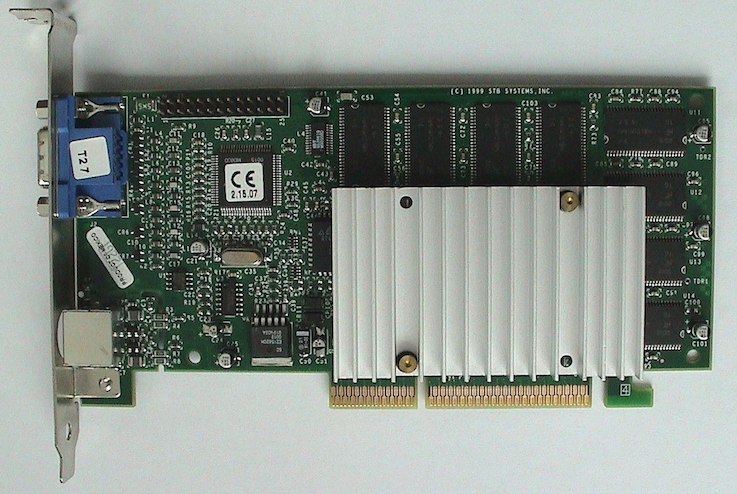

ATI Rage 128 Video Adapter

In 1999, the Rage 128 graphics card was released, manufactured on a 350-nanometer process. The core and memory ran at 103 MHz, and the RAMDAC at 250 MHz. The card carried up to 32 MB of memory on a 128-bit bus and supported 32-bit color.

The video card supported single-pass trilinear filtering and hardware-accelerated DVD video. In addition, the Rage 128 used Twin Cache Architecture, which combined pixel and texture caches to increase bandwidth. The chip also featured Super Scalar Rendering (SSR), which processed two pixels simultaneously in two pipelines.

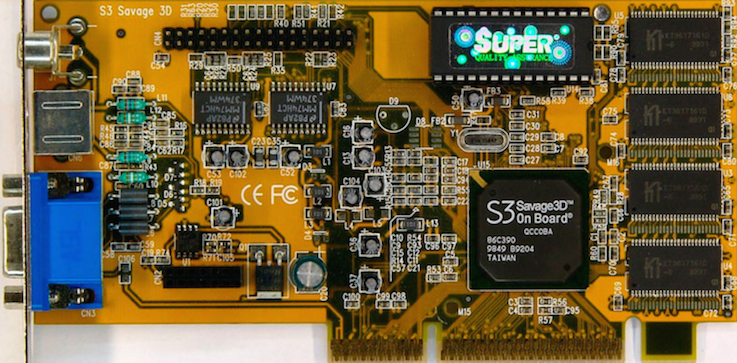

S3 Savage Graphics

S3 Graphics entered the market for high-performance 3D accelerators in 1998 by announcing the Savage 3D video card. Its highlighted features were single-pass trilinear filtering, support for the S3TC texture compression algorithm and the MPEG-2 video standard, and a TV-out. The Savage 3D supported the AGP 2x interface. It carried 8 MB of video memory on a 64-bit bus, and the core ran at 125 MHz. In 2D mode, it reached a resolution of 1600x1200 pixels at a refresh rate of 85 Hz.

In 1999, the Savage4 3D accelerator was released, manufactured on a 250-nanometer process. The operating frequency remained 125 MHz. The amount of memory increased to 32 MB, while the memory bus remained 64-bit.

The Savage4 introduced support for single-pass multitexturing and the AGP 4x interface. It also retained single-pass trilinear filtering. Thanks to the quality of this filtering and to S3TC texture compression, the Savage4 produced a high-quality image. The video card could also act as a DVD decoder.
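The text names S3TC without describing it. As a rough illustration, the sketch below decodes one block in the layout commonly documented for the basic S3TC variant (later known as DXT1 or BC1): a 4x4 pixel block is stored in 8 bytes as two RGB565 endpoint colors plus sixteen 2-bit indices. The function names are illustrative, and the integer rounding here may differ slightly from any particular hardware decoder.

```python
import struct

def rgb565_to_rgb888(c):
    """Expand a packed RGB565 color to three 8-bit channels."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return (r * 255 // 31, g * 255 // 63, b * 255 // 31)

def decode_dxt1_block(block):
    """Decode one 8-byte block into 4 rows of 4 RGB tuples."""
    c0, c1, indices = struct.unpack("<HHI", block)
    p0, p1 = rgb565_to_rgb888(c0), rgb565_to_rgb888(c1)
    if c0 > c1:
        # Four-color mode: two extra colors interpolated between the endpoints.
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-color mode: one midpoint; index 3 stands for transparent black.
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    palette = (p0, p1, p2, p3)
    # Indices are 2 bits each, starting from the least significant bits.
    return [[palette[(indices >> (2 * (4 * y + x))) & 3] for x in range(4)]
            for y in range(4)]

block = struct.pack("<HHI", 0xFFFF, 0x0000, 0b10)  # first pixel uses index 2
print(decode_dxt1_block(block)[0][:2])  # [(170, 170, 170), (255, 255, 255)]
```

Sixteen 24-bit pixels occupy 384 bits uncompressed, so storing them in 64 bits gives a fixed 6:1 ratio, which is why the scheme saves both memory and texture bandwidth.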

GeForce 256 video adapter

Also in 1999, NVIDIA released the GeForce 256 (code-named NV10), which pulled ahead of the competition thanks to its feature set. It was a very powerful 3D accelerator and one of the first to integrate a geometry coprocessor on the chip. It had four rendering pipelines, a core frequency of 120 MHz in 3D mode, and 32 MB of SDRAM. The video memory bus was 128 bits wide and ran at 166 MHz. Resolutions of up to 2048x1536 at 75 Hz were supported.
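The pipeline count and clock translate into a theoretical pixel fill rate. The sketch below assumes each pipeline outputs one pixel per clock, which the text does not state; the function name is illustrative.

```python
def pixel_fill_rate(pipelines, core_clock_hz, pixels_per_pipeline_per_clock=1):
    """Theoretical peak number of pixels written per second."""
    return pipelines * core_clock_hz * pixels_per_pipeline_per_clock

# Assumption: four pipelines, one pixel each per clock, at 120 MHz.
rate = pixel_fill_rate(4, 120_000_000)
print(f"{rate / 1e6:.0f} Mpixels/s")  # 480 Mpixels/s
```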

The GeForce 256 included an integrated transform and lighting (T&L) processor for geometry, cube environment mapping, projective textures, and texture compression.
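The two halves of T&L can be sketched in software: transforming a vertex by a 4x4 matrix and computing a simple diffuse lighting term. This is a generic textbook illustration, not a model of the GeForce 256 hardware; the function names, the row-major matrix layout, and the Lambertian lighting model are assumptions made for the example.

```python
def transform(matrix, vertex):
    """Multiply a 4x4 row-major matrix by a homogeneous (x, y, z, w) vertex."""
    return tuple(sum(m * v for m, v in zip(row, vertex)) for row in matrix)

def diffuse(normal, light_dir, color):
    """Lambertian lighting: scale the color by max(0, N . L) for unit vectors."""
    n_dot_l = max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
    return tuple(c * n_dot_l for c in color)

# Translation by (2, 0, -5) applied to the point (1, 1, 1).
translate = [
    [1, 0, 0, 2],
    [0, 1, 0, 0],
    [0, 0, 1, -5],
    [0, 0, 0, 1],
]
print(transform(translate, (1, 1, 1, 1)))               # (3, 1, -4, 1)
print(diffuse((0, 0, 1), (0, 0, 1), (1.0, 0.5, 0.25)))  # (1.0, 0.5, 0.25)
```

Before hardware T&L, this per-vertex arithmetic ran on the CPU for every vertex in every frame; moving it onto the graphics chip freed the CPU for other work.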