A brief history of USB: its predecessors and the rivals that lost

A vivid demonstration of the "USB superposition principle"

USB can hardly be called perfect, but it has become an excellent replacement for many ports that we are unlikely ever to deal with again.

Like every technology, USB has evolved gradually. Despite its "universal" name, over more than 18 years on the market it has repeatedly appeared in new variants with different connection speeds and an endless assortment of cables. The USB Implementers Forum, the group of companies that develops and maintains the standard, is aware of this trend and intends to solve the problem with a new connector known as Type-C. According to early information, this connector will replace USB Type-A and Type-B ports of all sizes in phones, tablets, computers, and other peripherals. Type-C will support the new, faster USB 3.1 Gen 2 at 10 Gb/s, with room for further increases in throughput.

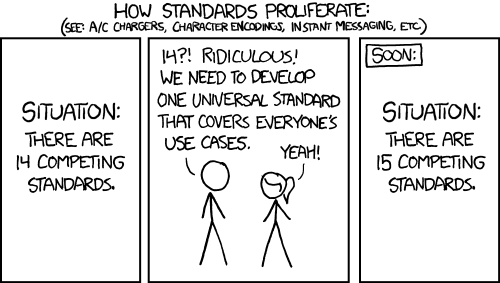

In a few years USB Type-C will likely become a common standard, letting us say goodbye to the tangles of cables on our desks. Meanwhile, it is hard not to recall XKCD's pointed comic about how new standards usually come about.

XKCD Comic

Since it is still unclear whether Type-C will save us from the cable invasion or only make it worse, let's look at how USB has changed over its history, which standards have tried to compete with it, and which technologies may challenge it for leadership in the near future.

Left behind

If you first sat down at a computer within the last 10 years or so, you probably take USB for granted. And even with its constantly changing specifications and connectors, what we have now is much better than the interfaces that came before it.

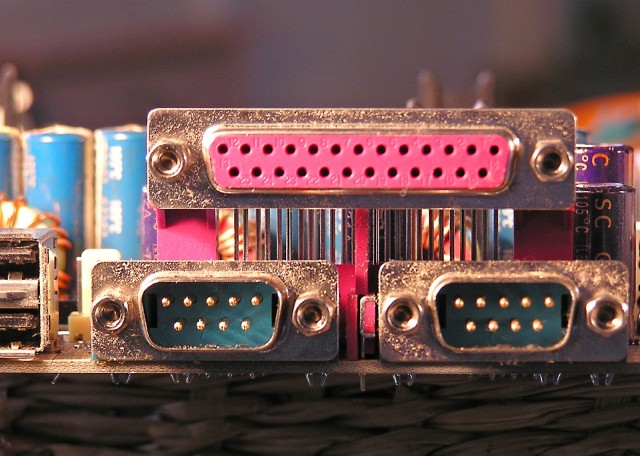

If you used computers before USB arrived, in the Pentium and Pentium II era, you will remember having to plug things into all sorts of different ports. Need to connect a mouse? You might need a PS/2 or serial port. A keyboard? Again PS/2, Apple Desktop Bus, or a DIN port. Printers and scanners usually used bulky parallel ports, which also served external drives if you didn't care for SCSI. To connect a gamepad or joystick, you needed a game port, like those commonly found on dedicated sound cards in the 90s (this was before audio chips were integrated into desktop and laptop motherboards).

In my opinion, the problem is obvious. Some of these ports required their own expansion cards, which took up a lot of space, and configuring them was usually inconvenient and often required a restart. By the end of the 90s, computers began shipping with several USB ports, usually on the back of the case and most often USB 1.1, capable of up to 12 Mbit/s (or 1.5 Mbit/s for low-speed peripherals such as keyboards and mice). Peripheral manufacturers did not switch to USB right away, but USB ports and connectors gradually began to appear on keyboards, mice, printers, and other devices, first as an extra option and later as the main one.

When USB 2.0 became widespread in the early to mid-2000s, it became an excellent replacement for many more of the familiar older connectors. USB flash drives effectively killed off the floppy disk (along with relatives such as the Zip drive) and contributed to the gradual decline of CDs and DVDs. Really, why use discs to store data or install an operating system when you can use a compact, more versatile USB drive that does the job faster? USB 2.0 also made it possible to connect external devices such as Wi-Fi adapters, optical drives, and Ethernet adapters, which until then had to be installed inside the computer. Raising the transfer rate to 480 Mbit/s made many of these ideas practical. The number of USB ports grew accordingly, and they eventually replaced the legacy ports on desktops and (especially) laptops entirely. A typical desktop had four or more USB ports on the rear panel, plus one or two on the front for convenience.

USB came of age during the USB 2.0 era, and the jump to 5 Gb/s in USB 3.0 made it even more useful, particularly for the tasks mentioned above: making system backups and moving large video files became easier, and there was now enough bandwidth for 802.11ac or Gigabit Ethernet adapters. Booting an OS from a USB 3.0 hard drive or flash drive is also quite convenient, especially when you need to troubleshoot or recover data. USB ports are increasingly becoming the only kind of port on a computer, because with the spread of Wi-Fi, dedicated Ethernet ports have become less necessary.

In other words, USB managed, though not without problems, to succeed and keep broad industry support, and the classic full-size USB Type-A connector has survived on most computers for almost 20 years. Given the long list of interfaces USB replaced, that is a more than solid achievement.

The ones that didn't make it

Once USB had firmly secured its leading position, several kinds of ports appeared to challenge its dominance. As a rule, they achieved only limited success; some even offered capabilities that USB lacked, but in the end USB's ubiquity proved decisive.

One such port was FireWire (also known as IEEE 1394), a standard backed mainly by Apple from the late 90s to the early 2010s. At the time, FireWire had several advantages over USB. FireWire devices could be daisy-chained, so a single port was enough to connect a dozen devices; FireWire transfers did not require much involvement from the host CPU; and the standard could transmit data in both directions at once ("full duplex"), while USB 1.1 and 2.0 could only transfer in one direction at a time ("half duplex"). In addition, during this period FireWire was usually faster than USB. FireWire 400 supported up to 400 Mbit/s versus 12 Mbit/s for USB 1.1, and FireWire 800 reached 800 Mbit/s, which stood out against the 480 Mbit/s of USB 2.0.

FireWire's main problem was that it was much more expensive to implement, because dedicated controller chips were needed in both computers and external devices. Initially, FireWire adopters even had to pay a licensing fee to Apple, whose fortunes were just beginning to rise in the late 90s and early 2000s, although it was still far from the powerhouse it is today. The naming was confusing too, with several names for what was really one standard: Sony's i.LINK and the unwieldy "IEEE 1394". The move from FireWire 400 to FireWire 800 also required new cables, while USB 1.0, 1.1, 2.0, and 3.0 used physically compatible connectors across all generations (with some caveats for the mini and micro versions).

As a result, FireWire external drives and video equipment, which need a lot of bandwidth, ended up noticeably more expensive; USB remained cheaper and was therefore used more often. New versions of FireWire with maximum speeds of 1.6, 3.2, and 6.4 Gb/s have been at various stages of development, but since Apple no longer supports the standard in most of its products, investment in the interface has dropped significantly.

Thanks to its faster interface, Thunderbolt has now taken FireWire's place on Apple's Macs. Thunderbolt is mainly associated with Macs because the standard debuted on one of them, and Macs remain the platform where it is most common. In fact, Thunderbolt was developed by Intel. Initially the standard, then called Light Peak, transferred data in both directions at up to 10 Gb/s, twice the speed of USB 3.0. That certainly helped it gain a foothold in the market a year or two before USB 3.0 became the common standard on most computers.

Second-generation Thunderbolt controllers raised the speed to 20 Gb/s by changing how data is carried. First-generation controllers used two separate 10 Gb/s channels, one for DisplayPort video and one for PCI Express data; Thunderbolt 2 combined them into a single channel, doubling the bandwidth available in one direction. Neither version became widely adopted, and Thunderbolt remained largely confined to Macs, professional workstation motherboards, and expansion cards.

Up to that point the changes were incremental, but then came Thunderbolt 3, a new 40 Gb/s version of the technology that, lo and behold, uses the USB Type-C connector. Thunderbolt 3 ports still require a separate controller, but they are fully compatible with USB Type-C and support 10 Gb/s USB 3.1 Gen 2. Recently these ports have appeared on several high-end laptops, including the Dell XPS line, and on the HP Elite x2 tablet. That is nothing like USB's reach, but Thunderbolt previously could not even dream of such serious support from major PC manufacturers.

Nevertheless, Thunderbolt's creators repeated FireWire's costly mistakes: Thunderbolt requires a separate controller in the PC and its own cables, and peripheral makers have to deal with the additional chips the interface needs. In theory, Intel could use its clout to push Thunderbolt by integrating the controller into every chip it makes, but that is not without difficulties either. This approach increases the amount of silicon in every chip, which again means higher costs, and Intel is unlikely to want to absorb them. Moreover, such chips would consume more power, and Intel is already bending over backwards to reduce power consumption.

For now, these factors explain why Thunderbolt appears on only a limited number of systems. And while it is an excellent choice for owners of 4K displays or people who constantly move huge amounts of data, for most ordinary users USB remains fast enough and by far the most common way to get things done.
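To put the speeds quoted throughout this article side by side, here is a minimal Python sketch that computes the best-case time to move a file over each interface. It assumes the headline signaling rates given in the text and deliberately ignores line-coding and protocol overhead (USB 3.0's 8b/10b encoding alone cuts its usable rate to about 4 Gb/s), so real transfers will be slower. The 4 GB file size and the names `NOMINAL_RATES_BPS` and `ideal_transfer_seconds` are hypothetical, chosen only for illustration.

```python
"""Best-case transfer times at the nominal rates cited in this article (illustrative only)."""

# Headline signaling rates in bits per second (decimal units: 1 Gb/s = 1e9 b/s).
# Assumption: real-world throughput is lower because overhead is ignored here.
NOMINAL_RATES_BPS = {
    "USB 1.1": 12e6,
    "FireWire 400": 400e6,
    "USB 2.0": 480e6,
    "FireWire 800": 800e6,
    "USB 3.0": 5e9,
    "Thunderbolt": 10e9,
    "USB 3.1 Gen 2": 10e9,
    "Thunderbolt 2": 20e9,
    "Thunderbolt 3": 40e9,
}


def ideal_transfer_seconds(size_bytes: float, rate_bps: float) -> float:
    """Seconds to move size_bytes at rate_bps, ignoring all encoding and protocol overhead."""
    return size_bytes * 8 / rate_bps  # convert bytes to bits, then divide by bits per second


if __name__ == "__main__":
    size_bytes = 4e9  # hypothetical 4 GB video file
    for name, rate in NOMINAL_RATES_BPS.items():
        print(f"{name:>14}: {ideal_transfer_seconds(size_bytes, rate):8.1f} s")
```

At these idealized rates, a 4 GB file takes roughly 44 minutes over USB 1.1, about 67 seconds over USB 2.0, and under a second over Thunderbolt 3, which shows why each generational jump mattered for backups and video work.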

New competitors

The biggest threat to USB's future may come from technologies that do similar jobs without wires.

More and more often, we turn to wireless technologies for tasks that were once USB's domain. Cloud sync services keep mail, contacts, calendars, files, and shopping lists up to date on all our devices without any cables. Bluetooth, NFC, Wi-Fi Direct, and AirDrop are good substitutes for USB when transferring individual files, while Miracast and AirPlay let devices connect wirelessly to a TV (although TVs without built-in support still need a receiver such as an Apple TV or a Chromecast). Wi-Fi-enabled printers, cameras, and memory cards are also increasingly common.

As a rule, the main drawback of these options is speed. If you need to transfer lots of photos or edit 1080p video shot on a smartphone, you probably won't bother with wireless bells and whistles, because USB 2.0 is much faster and more reliable. Advanced users will still connect their mobile devices to computers over USB, too, because flashing a favorite Android ROM over Wi-Fi or Bluetooth is no easy task.

Even if you never connect anything to a computer, every device is still tied to a wire somehow: nothing works without power. There have been attempts to replace USB with one of several wireless charging standards, but these still have many shortcomings. The sheer number of competing products greatly complicates standardizing on a single charger (although some companies are working to resolve this annoying mess). Manufacturers have to either build wireless charging into their existing phone designs or sell it as a separate accessory. Wireless chargers are still not very common, and they are, of course, less efficient than a direct wired connection. Most interesting of all, most of these chargers themselves still plug in over USB.

In any case, nothing is likely to happen to USB in the near future, even with wireless competitors under active development. Just as Wi-Fi did not kill wired Ethernet, wireless technology is unlikely to replace USB, at least not yet. Even as wireless gets faster and better controllers appear, the speed, convenience, and compatibility that USB offers should keep the standard going for years to come.

Source: Ars Technica