How should a self-driving car behave when an accident with casualties cannot be avoided?

French scientists from the Toulouse School of Economics, led by Jean-Francois Bonnefon, published a paper entitled “Autonomous Vehicles Need Experimental Ethics: Are We Ready for Utilitarian Cars?”. In it, they tried to answer a difficult ethical question: how should a self-driving car behave when an accident cannot be avoided and casualties are inevitable? The authors surveyed several hundred participants recruited through Amazon Mechanical Turk. This crowdsourcing platform brings together people willing to perform tasks that computers cannot yet handle well. MIT Technology Review brought the paper to wider attention.

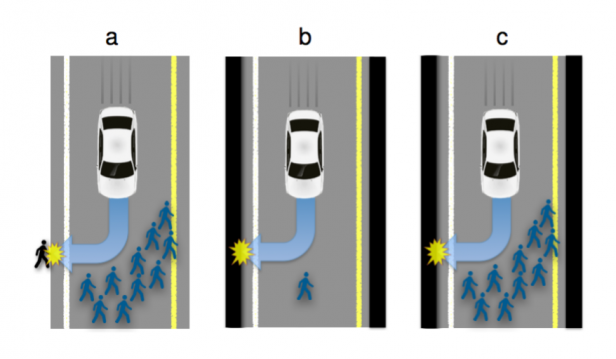

The authors approached the study cautiously and did not ask respondents for simple yes-or-no answers. By a self-driving car they meant a truly autonomous vehicle, fully controlled by a computer, with no way for a human to intervene. They formulated several questions describing situations in which the behavior of the algorithm controlling the car becomes an ethical problem. For example, suppose the traffic situation leaves only two options. To avoid a group of 10 pedestrians, the car must swerve into a roadside barrier and endanger its own occupant. Otherwise, it drives into the crowd, and the number of victims becomes truly unpredictable. What should the algorithm do then? An even harder case: what if the road holds only one pedestrian and one self-driving car? Which is ethically more acceptable: to kill the pedestrian or to kill the car's owner?

The study found that people consider it “relatively acceptable” for a self-driving car to be programmed to minimize casualties in an accident. In other words, if an accident would otherwise involve 10 pedestrians, the algorithm should in effect sacrifice the car's owner. However, this fairly clear consensus held only until participants were asked to imagine themselves inside such a car: they were unwilling to put themselves in the position of its occupant. This raises another controversial issue. Self-driving cars are in fact far less likely than human drivers to get into dangerous situations. The fewer people who buy them, therefore, the more people will die in accidents involving ordinary cars. In other words,