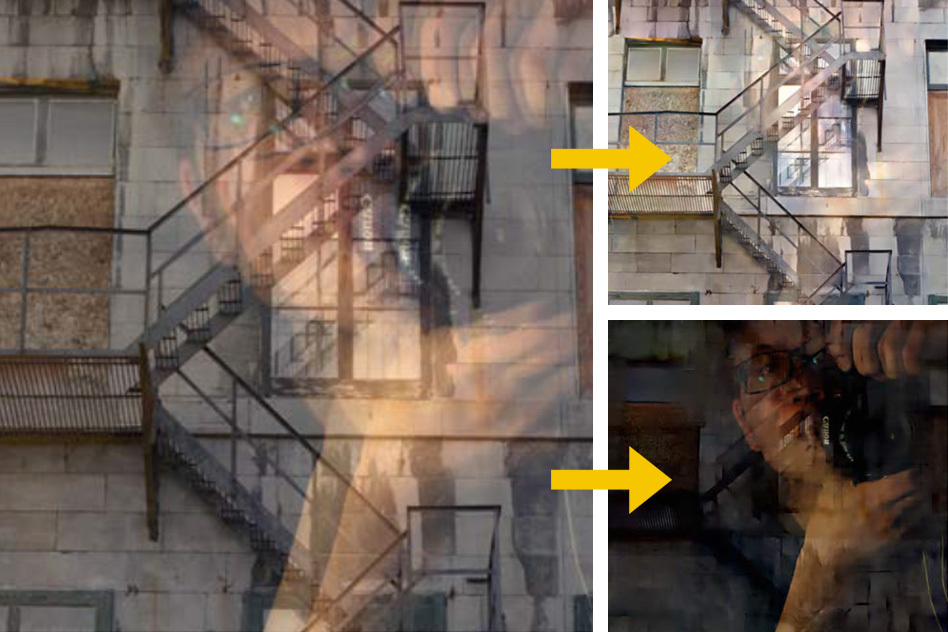

MIT has developed an algorithm that removes window reflections from photos

If you walk up to a window and try to take a photo through the glass, it is hard to keep your own reflection out of the frame. The darker it is outside, the more noticeable the unwanted reflection becomes. Professional photographers deal with this by holding the lens close to the glass and by using other techniques, such as polarizing filters. But often all you have is a smartphone camera, or the shooting conditions are constrained, and you have to live with the reflection. Researchers at the Massachusetts Institute of Technology have found an algorithmic solution to this problem.

The algorithm will be presented in June at the Computer Vision and Pattern Recognition conference, but some details are already available. It relies on the fact that, because of how windows are constructed, a reflection usually appears more than once. Based on this principle, digital photographs can be cleaned up automatically in a wide variety of cases.

According to the first author of the work, YiChang Shih, double-glazed windows are common in Boston because they retain heat in the cold season. As a result, a photo usually contains two reflections, one from each pane. This does not mean the algorithm works only with double glazing: a single thick pane also produces two reflections, one from its inner surface and one from its outer surface. Without the additional information the second reflection provides, removing the unwanted elements is practically unsolvable, because the photo is the sum of the scene outside the window and the reflection in the glass. If A + B = C, then A and B cannot be recovered from C alone.
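The A + B = C argument can be illustrated with a small NumPy sketch. It is a toy model, not the researchers' implementation: the function names `ghosted_reflection` and `observe`, the shift of three pixels, and the attenuation factor of 0.5 are all assumptions chosen for illustration, and the second reflection is simply modeled as a shifted, dimmer copy of the first.

```python
import numpy as np

def ghosted_reflection(reflection, shift, attenuation):
    """Return the reflection plus a shifted, dimmer copy of itself."""
    # np.roll wraps around at the borders, which is acceptable for a toy example.
    ghost = np.roll(reflection, shift=shift, axis=(0, 1))
    return reflection + attenuation * ghost

def observe(scene, reflection, shift=(0, 3), attenuation=0.5):
    """Toy photo through a window: the scene plus a doubled reflection."""
    return scene + ghosted_reflection(reflection, shift, attenuation)

rng = np.random.default_rng(0)
scene = rng.random((16, 16))        # A: what is outside the window
reflection = rng.random((16, 16))   # B: what the glass reflects
delta = 0.1 * rng.random((16, 16))  # an arbitrary amount moved from B to A

# With a single reflection, moving delta from B to A leaves the sum C unchanged,
# so C alone cannot tell the two decompositions apart.
single_is_ambiguous = np.allclose(
    scene + reflection, (scene + delta) + (reflection - delta)
)

# With the doubled reflection, the same trade no longer reproduces the photo.
photo = observe(scene, reflection)
traded = observe(scene + delta, reflection - delta)
double_is_ambiguous = np.allclose(photo, traded)

print(single_is_ambiguous, double_is_ambiguous)
```

In this sketch the doubled reflection rules out the naive trade between the two layers, but it does not make the answer unique; many decompositions remain consistent with the photo.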

The second reflection provides the information needed to correct the image. The pixel values of the two copies of the reflection should roughly match, which narrows the search for the right solution, although the number of candidate solutions remains large. Shih and his co-authors, computer science and engineering professors Frédo Durand and Bill Freeman (his thesis advisors) and Dilip Krishnan (a former postdoc who now works at Google Research), therefore added to the algorithm the assumption that both the reflection and the view through the window have the statistical regularities of so-called natural images.

The assumption is that in natural, unprocessed images, abrupt color changes at the level of small pixel groups are rare, and when they do occur they fall along clear boundaries. If a group of pixels straddles the border between a blue object and a red one, the image is expected to be bluish on one side and reddish on the other. In computer vision this is usually captured with gradients, which describe each block of pixels by the dominant direction of color change and by how strong that change is. But Shih and his colleagues found that this approach does not work well enough here.
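To make the gradient idea concrete, here is a minimal sketch that computes the strength and direction of change at each pixel. It works on a grayscale array rather than color, which is a simplification, and the helper name `gradient_magnitude_and_direction` is hypothetical.

```python
import numpy as np

def gradient_magnitude_and_direction(gray):
    """Return per-pixel gradient strength and direction (in radians)."""
    # np.gradient returns the derivative along rows (y) first, then columns (x).
    gy, gx = np.gradient(gray.astype(float))
    magnitude = np.hypot(gx, gy)    # how strong the change is
    direction = np.arctan2(gy, gx)  # which way the brightness increases
    return magnitude, direction

# A toy image with one sharp vertical boundary: dark on the left, bright on the right.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

magnitude, direction = gradient_magnitude_and_direction(image)
print(magnitude[0])  # nonzero only in the columns next to the boundary
```

The flat regions have zero gradient, and the only response sits along the boundary, which is the "rare but sharp" behavior that gradient-based priors assume.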

Instead, they used an algorithm created by Daniel Zoran of Freeman's group and Yair Weiss of the Hebrew University of Jerusalem, which divides an image into blocks of 8 × 8 pixels. The blocks were analyzed across 50,000 training images, and the resulting statistics gave a reliable way to tell reflections apart from the scene outside the window. To test the work, Shih and his colleagues searched Google and the photo-hosting site Flickr with queries such as “problems with reflection in the window on the photo”. After discarding results that were not photos taken through a window, they were left with 197 images. In 96 of them, the offset between the two copies of the reflection was large enough for the algorithm to work.
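The block-based analysis can be sketched roughly as follows. The article does not say which statistics are gathered for each block, so the covariance between pixel positions used here is an assumption made for illustration, as are the helper names `extract_blocks` and `block_covariance`. A random array stands in for a real training image.

```python
import numpy as np

def extract_blocks(gray, size=8):
    """Cut a grayscale image into non-overlapping size x size blocks, one per row."""
    h, w = gray.shape
    h_crop, w_crop = h - h % size, w - w % size  # drop any partial blocks at the edges
    cropped = gray[:h_crop, :w_crop]
    blocks = cropped.reshape(h_crop // size, size, w_crop // size, size)
    blocks = blocks.swapaxes(1, 2)               # (block_row, block_col, size, size)
    return blocks.reshape(-1, size * size)

def block_covariance(blocks):
    """Covariance between pixel positions, aggregated over all blocks."""
    # Remove each block's mean brightness so only its internal structure remains.
    centered = blocks - blocks.mean(axis=1, keepdims=True)
    return centered.T @ centered / len(centered)

rng = np.random.default_rng(0)
image = rng.random((35, 50))  # stand-in for one training image

blocks = extract_blocks(image)
cov = block_covariance(blocks)
print(blocks.shape, cov.shape)
```

In a real setting the blocks from many training images would be pooled before any statistics are computed; a single random image is used here only to keep the example self-contained.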

Yoav Schechner, professor of electrical engineering at the Technion in Israel, said that attempts to remove such unwanted elements from photographs have been made before, but some of those methods used only a single reflection. That made the task much harder, and success was only partial: there was no automated way to separate the reflection from the recovered image. According to him, Shih's work is a breakthrough on several fronts at once. Schechner believes that if the algorithm is refined over time, it could find its way into popular photo-editing packages and help computer vision algorithms in robots. The researchers do not mention the possibility of recovering an image of the photographer from the reflection, but such an application is probably feasible. It may be of interest to law enforcement and voyeurs.

The algorithm will be presented in June in Boston at the Computer Vision and Pattern Recognition conference.

Images: Massachusetts Institute of Technology.