Can artificial intelligence be taught new tricks?

Nearly every AI achievement you have heard of stems from a breakthrough made thirty years ago

I am standing in a room that will soon become the center of the world, or simply in a very large room on the seventh floor of a gleaming tower in downtown Toronto. Showing me around is Jordan Jacobs, a co-founder of this place: the nascent Vector Institute, which opens its doors in the fall of 2017 and aims to become the global epicenter of artificial intelligence.

We are in Toronto because Geoffrey Hinton is in Toronto, and Geoffrey Hinton is the father of deep learning, the technology behind the current enthusiasm for AI. “In 30 years we will look back and say that Geoff was the Einstein of AI, of deep learning, of the thing we call AI,” says Jacobs. Among researchers at the forefront of deep learning, Hinton has more citations than the next three combined. His undergraduate and graduate students have launched AI labs at Apple, Facebook, and OpenAI; Hinton himself is a lead scientist on the Google Brain AI team. Almost every AI achievement of the last decade, in translation, speech recognition, image recognition, and game playing, is in some way built on Hinton's work.

The Vector Institute, a monument to the rise of Hinton's ideas, is a research center where companies from the USA and Canada, such as Google, Uber and Nvidia, will fund efforts to commercialize AI technologies. Money has poured in faster than Jacobs could ask for it; two of the co-founders surveyed companies in the Toronto area, and it turned out that the demand for AI specialists was ten times higher than the number Canada graduates. The Vector Institute is the starting point of a worldwide attempt to mobilize around deep learning: to make money on the technology, teach it to people, refine it and apply it. Data centers are being built, skyscrapers are filling with start-ups, and an entire generation of students is pouring into the field.

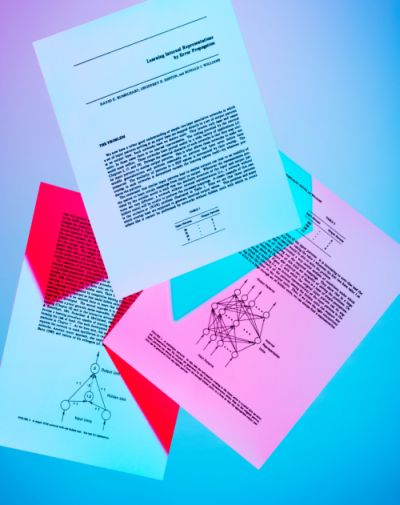

Standing in the Vector office, empty and echoing, waiting to be filled, you get the impression of being at the beginning of something. But the strange thing about deep learning is how old its key ideas are. Hinton's breakthrough paper, written with David Rumelhart and Ronald Williams, was published in 1986. The paper elaborated on a technique called backpropagation of errors, or backprop for short. Backprop, in the words of John Cohen, a computational psychologist at Princeton, “is what all deep learning is based on - literally everything.”

In fact, AI today is deep learning, and deep learning is backprop, which is surprising given that backprop is more than 30 years old. It is worth understanding how this happened: how a technique could lie dormant for so long and then set off such an explosion. Once you understand the history of backprop, you begin to understand the current state of AI, and in particular the fact that we may not be at the beginning of a revolution. We may be at its end.

Vindication

The walk from the Vector Institute to Hinton's Google office, where he spends most of his time (he is now an emeritus professor at the University of Toronto), is something of a living advertisement for the city, at least in the summer. You can understand why Hinton, who was born in Britain, moved here in the 1980s after working at Carnegie Mellon University in Pittsburgh.

When you step outside, even in the business district near the financial center, you feel as if you were in nature. I think it is the smell: the air smells of damp humus. Toronto was built on wooded ravines, and it is called a “city within a park”; as the city urbanized, local authorities imposed strict rules to protect the tree cover. As you approach the city, its outskirts look blanketed in cartoonishly lush vegetation.

Toronto is the fourth largest city in North America (after Mexico City, New York and Los Angeles), and the most diverse: more than half of its inhabitants were born outside Canada. You can see this walking around the city. The crowd in the tech quarter does not look like San Francisco's, young white guys in hoodies, but far more international. There is free health care, the public schools are good, people are friendly, and the political climate is stable and leans left; all this attracts people like Hinton, who says he left the US because of the Iran-Contra affair. It is one of the first things we talk about when we meet, shortly before lunch.

“Most people at Carnegie Mellon University thought it was perfectly reasonable for the US to invade Nicaragua,” he says. “They thought the country belonged to them.” He tells me that he recently had a big breakthrough in his work: “a very good young engineer is working with me,” a woman named Sara Sabour. She is from Iran, and she was denied a visa to work in the United States. Google's Toronto office snapped her up.

Hinton, who is 69 years old, has the kind, lean, English-looking face of the Big Friendly Giant, with thin lips, big ears and a prominent nose. He was born in Wimbledon, England, and in conversation he sounds like an actor narrating a children's book about science: you can hear curiosity, charm and a desire to explain everything. He is funny, and a bit of a showman. He stands the whole time, because sitting turned out to be too painful for him. “In June 2005 I sat down, and it was a mistake,” he tells me, explaining after a pause that he has a problem with a disc in his back. Because of it he cannot fly, and earlier that day he had to haul along something resembling a surfboard so that he could lie on it at the dentist's office while a cracked tooth root was worked on.

In the 1980s, Hinton was an expert on neural networks, a simplified model of the network of neurons and synapses in the brain. But at that time, the field had decided that neural networks were a dead end in AI research. Although the very first neural network, the Perceptron, whose development began in the 1950s, had been hailed as a first step towards human-level machine intelligence, a 1969 book called Perceptrons, by Marvin Minsky and Seymour Papert of MIT, proved mathematically that such networks could perform only the simplest functions. Those networks had just two layers of neurons, an input layer and an output layer. Networks with many layers between input and output could in theory solve a huge range of problems, but no one knew how to train them, so in practice they were useless. Apart from a handful of holdouts like Hinton, Perceptrons led most people to give up on neural networks.

Hinton's breakthrough in 1986 was to show that backprop can train a deep neural network, that is, a network with many layers. But another 26 years passed before increased computing power made it possible to take advantage of the discovery. In a 2012 paper, Hinton and two students from Toronto showed that deep neural networks trained with backprop beat the best existing image recognition systems. Deep learning began to spread. To the outside world, AI seemed to wake up overnight. For Hinton, it was a long-overdue payoff.

Reality distortion field

A neural network is often drawn as a club sandwich, with layers stacked on top of each other. The layers contain artificial neurons, dumb little computational units that can get excited, the way a real neuron does, and pass that excitation on to the other neurons they are connected to. A neuron's excitation is represented by a number, such as 0.13 or 32.39, that says how excited it is. On each connection between neurons there is another crucial number, which determines how much excitation can pass through it. This number models the strength of the synapses between neurons in the brain. The larger the number, the stronger the connection, and the more excitation flows on to the next neuron.
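As a rough illustration of the weighted connections described above, the sketch below computes the excitation that one neuron receives from two upstream neurons. The function name `incoming_excitation` and the weight values are assumptions made for this example; the two input values are simply the ones used as examples in the text.

```python
def incoming_excitation(activations, weights):
    """Sum each upstream neuron's excitation, scaled by its connection weight."""
    return sum(a * w for a, w in zip(activations, weights))

# Two upstream neurons with the example excitation values from the text.
upstream = [0.13, 32.39]
# Hypothetical connection strengths: the first connection is stronger than the second.
weights = [2.0, 0.5]

print(incoming_excitation(upstream, weights))
```

A larger weight lets more of the upstream excitation through, which is all that "stronger connection" means here.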

One of the most successful applications of deep neural networks is image recognition, as in the scene from the series Silicon Valley in which the team builds a program that can tell whether there is a hot dog in a picture. Such programs exist, and ten years ago they would have been impossible. To make one work, you first need a picture. Say it is a small black-and-white image of 100x100 pixels. You feed this image to your neural network by setting the excitation of each neuron in the input layer equal to the brightness of the corresponding pixel. This is the bottom layer of the club sandwich: 10,000 neurons (100x100) representing the brightness of every pixel in the picture.

Then you connect this large layer of neurons to another large layer of neurons above it, containing, say, several thousand, and those in turn to another layer of several thousand neurons, and so on for several layers. Finally, in the top layer of the sandwich, the output layer, there are only two neurons: one means “there is a hot dog” and the other means “no hot dog.” The idea is to teach the neural network to excite only the first of these neurons if there is a hot dog in the image, and only the second if there is not. Backprop, the technique on which Hinton built his career, is the method for accomplishing this.
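The layered "sandwich" described above can be sketched as a forward pass in plain Python. This is a minimal sketch under stated assumptions, not the architecture of any real system: the hidden layers have 16 and 8 neurons rather than several thousand so that it runs quickly, the sigmoid squashing function is an assumption (the text does not name one), and the image and weights are random placeholders.

```python
import math
import random

def sigmoid(z):
    """Squash a weighted sum into the range (0, 1). Assumed activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(n_in, n_out, rng):
    """One weight per connection: n_out neurons, each with n_in incoming weights."""
    return [[rng.uniform(-0.01, 0.01) for _ in range(n_in)] for _ in range(n_out)]

def forward(pixels, layers):
    """Propagate excitation from the input layer up to the output layer."""
    activations = pixels
    for weights in layers:
        activations = [
            sigmoid(sum(a * w for a, w in zip(activations, row)))
            for row in weights
        ]
    return activations

rng = random.Random(0)
# A placeholder 100x100 grayscale image: 10,000 brightness values in [0, 1).
image = [rng.random() for _ in range(100 * 100)]
# Input layer -> 16 neurons -> 8 neurons -> 2 output neurons (hypothetical sizes).
layers = [make_layer(10_000, 16, rng), make_layer(16, 8, rng), make_layer(8, 2, rng)]

hot_dog, no_hot_dog = forward(image, layers)
print(hot_dog, no_hot_dog)
```

With random weights the two output values are meaningless; training is what makes them track the presence of a hot dog.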

Backprop is remarkably simple, although it works best with very large data sets. That is why big data matters so much for AI: it is why Facebook and Google are so hungry for it, and why the Vector Institute decided to set up its office on the same street as four of Canada's largest hospitals and to work out data partnerships with them.

In our case, the data takes the form of millions of images, some of which contain hot dogs and some of which do not. The trick is that the images are labeled according to whether they contain a hot dog. When the neural network is first created, the connections between the neurons have random weights, random numbers that determine how much excitation is passed along each connection. It is as if the brain's synapses have not been tuned yet. The goal of backprop is to change these weights so that the network works: so that when you feed an image of a hot dog to the bottom layer, the “there is a hot dog” neuron in the top layer becomes excited.

Say you take the first training picture, which shows a piano. You convert the pixel intensities of the 100x100 image into 10,000 numbers, one for each neuron in the bottom layer of the network. The excitation spreads up the network according to the strength of the connections between neurons in adjacent layers, and eventually reaches the last layer, the one with only two neurons. Since this image is a piano, ideally the “there is a hot dog” neuron should be at zero and the “no hot dog” neuron should get a large number. But say that does not happen. Say the network is wrong. Backprop is a procedure for adjusting the strength of every connection in the network so as to correct the error for this training example.

You start with the last two neurons and work out how wrong they were: how large the difference is between what their excitation values should have been and what they actually were. Then you look at each of the connections leading into those neurons, the ones from the layer below, and calculate their contribution to the error. You keep doing this until you reach the very first set of connections, at the very bottom of the network. At that point you know how much each individual connection contributed to the error, and in the final step you change every weight in the direction that best reduces the overall error. The technique is called “backpropagation of errors” because you propagate the error backward (or downward) through the network, starting from the output layer.
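The procedure just described, measuring the error at the output, passing it back layer by layer, and then nudging every weight, can be sketched on a toy network. This is a minimal sketch under stated assumptions rather than the 1986 formulation: it uses a 4-pixel "image", three hidden neurons, sigmoid neurons, a squared-error measure and a learning rate of 0.5, all chosen only to keep the example small.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w_hidden, w_out):
    """Return the excitations of the hidden layer and the output layer."""
    hidden = [sigmoid(sum(xi * w for xi, w in zip(x, row))) for row in w_hidden]
    output = [sigmoid(sum(h * w for h, w in zip(hidden, row))) for row in w_out]
    return hidden, output

def backprop_step(x, target, w_hidden, w_out, lr=0.5):
    """One backprop update on one training example; returns the error before the update."""
    hidden, output = forward(x, w_hidden, w_out)
    # 1. How wrong was each output neuron (scaled by the sigmoid's slope)?
    delta_out = [(o - t) * o * (1 - o) for o, t in zip(output, target)]
    # 2. Pass the error back: each hidden neuron's share of the blame.
    delta_hidden = [
        h * (1 - h) * sum(delta_out[k] * w_out[k][j] for k in range(len(w_out)))
        for j, h in enumerate(hidden)
    ]
    # 3. Move every weight in the direction that reduces the error.
    for k, row in enumerate(w_out):
        for j in range(len(row)):
            row[j] -= lr * delta_out[k] * hidden[j]
    for j, row in enumerate(w_hidden):
        for i in range(len(row)):
            row[i] -= lr * delta_hidden[j] * x[i]
    return 0.5 * sum((o - t) ** 2 for o, t in zip(output, target))

rng = random.Random(0)
w_hidden = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(3)]
w_out = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(2)]

piano = [0.2, 0.9, 0.4, 0.7]   # hypothetical pixel brightnesses
target = [0.0, 1.0]            # "there is a hot dog" = 0, "no hot dog" = 1

losses = [backprop_step(piano, target, w_hidden, w_out) for _ in range(200)]
print(losses[0], losses[-1])
```

Repeating the step on the same example shrinks the error for that example; a real training run cycles through many labeled images instead.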

The amazing thing is that when you do this with millions or billions of images, the network becomes very good at telling whether there is a hot dog in a picture. Even more remarkably, the individual layers of these image recognition networks begin to “see” images in much the same way our own visual system does. That is, the first layer may learn to detect edges, in the sense that its neurons are excited when there are edges and not excited when there are none; the layer above may learn to detect sets of edges, such as corners; the layer above that sees shapes; and the layer above that sees things like an open or a closed bun, in the sense that it has neurons that respond to each case. The network organizes itself into hierarchical layers, even though no one ever programmed it to do so.

This is what captivated everyone. The point is not just that neural networks can classify images of hot dogs or anything else: they are able to build representations of ideas. With text, this is even easier to see. You can feed the text of Wikipedia, billions of words, into a simple neural network, and train it so that for each word it outputs a long list of numbers corresponding to the excitation of each neuron in a layer. If you think of these numbers as coordinates in a complex space, then you are essentially finding a point (in this context, a vector) for each word in that space. Now train your network so that words appearing close to each other on Wikipedia pages get similar coordinates, and voila, something strange happens: words with similar meanings end up close to each other in this space. That is, “insane” and “crazy” will have nearby coordinates, as will “three” and “seven,” and so on. Moreover, so-called vector arithmetic lets you subtract the vector for “France” from the vector for “Paris,” add the vector for “Italy,” and end up somewhere near “Rome.” And this works without anyone ever explaining to the network that Rome is to Italy as Paris is to France.
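The vector arithmetic described above can be illustrated with a toy example. The three-dimensional vectors below are hand-made for this sketch, not learned from Wikipedia or any other text, and the helper names `cosine` and `nearest` are assumptions; real word vectors have hundreds of dimensions and only approximately satisfy such relations.

```python
import math

# Hand-made toy vectors (NOT learned): dimensions loosely mean
# "French-ness", "capital-ness" and "Italian-ness".
toy_vectors = {
    "Paris":  [1.0, 1.0, 0.0],
    "France": [1.0, 0.0, 0.0],
    "Rome":   [0.0, 1.0, 1.0],
    "Italy":  [0.0, 0.0, 1.0],
    "seven":  [-1.0, 0.2, -1.0],   # an unrelated distractor word
}

def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def nearest(query, vectors, exclude=()):
    """Return the word whose vector is most similar to the query vector."""
    candidates = (word for word in vectors if word not in exclude)
    return max(candidates, key=lambda word: cosine(query, vectors[word]))

# "Paris" - "France" + "Italy"
query = [
    p - f + i
    for p, f, i in zip(toy_vectors["Paris"], toy_vectors["France"], toy_vectors["Italy"])
]
print(nearest(query, toy_vectors, exclude={"Paris", "France", "Italy"}))  # prints "Rome"
```

The words used in the query are excluded from the search, since otherwise one of them would often be its own nearest neighbor.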

“This is amazing,” says Hinton. “This is shocking.” You could say that neural networks take certain entities, such as images, words, recordings of speech, or medical data, and place them in a high-dimensional vector space, where the distance between entities reflects some important feature of the real world. Hinton believes the brain does the same. “If you want to know what a thought is,” he says, “I can express it for you in a string of words. I can say, ‘John thought, “Whoops.”’ But if you ask, ‘What is the thought? What does it mean for John to have that thought?’ it is not that an opening quotation mark appeared in his head, then a ‘Whoops,’ then a closing quotation mark, or even some cleaned-up version of those symbols. A big pattern of neural activity appeared in his head.” A mathematician can represent big patterns of neural activity in a vector space, where the activity of each neuron corresponds to a number, and each number is one coordinate of a very large vector. In Hinton's view, that is what thought is: a dance of vectors.

It is no coincidence that Toronto's flagship AI institute is named after the vector. Hinton himself came up with the name.

Hinton creates a kind of reality-distortion field, an atmosphere of confidence and enthusiasm in which you feel there is nothing that vectors cannot do. After all, look at what they have already achieved: cars that drive themselves, computers that detect cancer, machines that instantly translate spoken words into another language. And look at this charming British scientist talking about gradient descent in high-dimensional spaces!

Only when you leave the room do you remember: these deep-learning systems are still rather dumb, for all their seeming cleverness. A computer that looks at a photo of a pile of doughnuts on a table and automatically captions it “a pile of doughnuts sits on a table” seems to understand the world around it. But when the same program looks at a photo of a girl brushing her teeth and captions it “the boy is holding a baseball bat,” you realize how shaky that understanding is, if it exists at all.

Neural networks are thoughtless recognizers of fuzzy patterns, and they are as useful as fuzzy-pattern recognizers can be, hence the rush to build them into every possible piece of software. They represent a limited kind of intelligence, one that is easy to fool. A deep neural network that recognizes images can be thrown off completely if you change a single pixel or add visual noise that a person would not even notice. Indeed, every time we find a new way to use deep learning, we also find its limits. Self-driving cars cannot cope with conditions they have not encountered before. Machines struggle to parse sentences that demand common sense and an understanding of how the world works.

Deep learning in some sense imitates processes that take place in the human brain, but not very deeply, which may explain why AI sometimes turns out to be so shallow. Backprop was not discovered by probing the brain and decoding its messages; it grew out of models of how animals learn by trial and error, developed in old conditioning experiments. And most of the breakthroughs that followed it involved no new neurobiological insights; they were technical improvements won through years of work by mathematicians and programmers. What we know about how intelligence works is nothing compared with what we do not yet know.

David Duvenaud, an assistant professor who works in the same department of the University of Toronto as Hinton, says deep learning resembles engineering before the advent of physics. “Someone writes a paper saying, ‘I built a bridge and it doesn't fall down!’ Someone else writes, ‘I built a bridge and it fell down, and then I added columns and it stayed up.’ After that, columns become a hot topic. Someone comes up with arches, and everyone says, ‘Arches are cool!’” Once physics arrives, he says, “you can understand what works and why.” He argues that we have only recently begun to move into a phase of real understanding of AI.

Hinton himself says: “Most conferences consist of making small changes, instead of thinking hard and asking, ‘What are we missing in what we are doing now? Where is the real difficulty? Let's concentrate on that.’”

From the outside this is hard to see, when all you get is one glowing report of a breakthrough after another. But the latest progress in AI owes more to engineering than to science, and looks a lot like tinkering. And although we now have a better idea of which changes will improve a deep-learning system, we are still largely in the dark about how these systems work, or whether they could ever come close to something as powerful as the human mind.

It is worth asking whether we have already squeezed everything we can out of backprop. If so, the curve of AI progress may have reached a plateau.

Patience

If you want to know where the next breakthrough will come from, the one that could form the basis for machines with more flexible intelligence, you should look at research that resembles what backprop was in the 1980s: very smart people playing with ideas that do not work yet.

A few months ago, I went to the Center for Mind, Brain, and Machines, a joint project of several institutions based at MIT, to watch my friend Eyal Dechter defend his thesis in cognitive science. Just before the talk, his wife Amy, their dog Ruby and their daughter Suzanne milled around him and wished him good luck. On the screen was a photograph of Ruby, and next to it a photo of Suzanne as a baby. When her dad asked Suzanne to point to herself on the screen, she happily whacked her baby picture with a collapsible pointer. On her way out of the room, she wheeled her toy stroller along and called “Good luck, daddy!” over her shoulder. Then she added: “Vámanos!” She is two years old.

Eyal began his presentation with a question: how is it that Suzanne, with two years of experience, has learned to talk, to play, to follow the plot of a story? How does the human brain learn so well? Will a computer ever learn as quickly and fluidly?

We make sense of new phenomena in terms of what we already understand. We break the whole into parts and study the parts. Eyal is a mathematician and programmer, and he thinks of tasks, such as making a souffle, as very complex computer programs. But you do not learn to make a souffle by studying trillions of micro-instructions like “turn your elbow 30 degrees, look down at the countertop, extend your index finger.” If you had to do that for every new task, learning would be too hard, and you would be stuck with what you already know. Instead, we write the program in high-level steps like “beat the egg whites,” which are themselves made up of subroutines like “crack the eggs” and “separate the yolks from the whites.”

Computers do not do this, and that is one of the main reasons they are so dumb. To get a deep-learning system to recognize hot dogs, you need to feed it 40 million pictures of hot dogs. To teach Suzanne to recognize a hot dog, you show her a hot dog. And before long she will understand language at a deeper level than merely noticing that certain words often appear together. Unlike a computer, she has in her head a model of how the whole world works. “I am surprised to hear people worry that computers will take their jobs,” Eyal says. “Computers cannot replace lawyers, because lawyers do something very difficult. Lawyers read and talk with people. We are nowhere close to that.”

Real intelligence does not break when you slightly change the requirements of the problem it is trying to solve. A key part of Eyal's work is a demonstration of how, in principle, a computer could be made to work this way: to apply what it already knows to new tasks with ease, and to go quickly from knowing almost nothing about a domain to being an expert.

In essence, this is a procedure he calls the “exploration-compression” algorithm. The computer works somewhat like a programmer, building up a library of reusable, modular components from which it can assemble more and more complex programs. Without being told anything about a new domain, the computer tries to structure its knowledge of it simply by playing with the domain, consolidating what it has learned, and playing again, just as a child does.

His adviser, Joshua Tenenbaum, is one of the most frequently cited AI researchers. His name came up in half of the conversations I had with other scientists. Some of the key people at DeepMind, the team behind AlphaGo, which shocked computer scientists by defeating a world champion at the game of Go in 2016, had him as a research supervisor. He is involved with a startup that is trying to give self-driving cars an intuition for basic physics and for the intentions of other drivers, so that the AI can better anticipate what will happen in situations it has never seen before, such as a truck jackknifing in front of it or someone aggressively trying to merge.

Eyal's thesis does not yet translate into practical applications, let alone programs that beat a human and make the headlines. It is just that the problems Eyal is working on are “very, very hard,” Tenenbaum says. “They will take many, many generations.”

Tenenbaum has long, curly, graying hair, and when we sat down for coffee he was wearing slacks and a shirt buttoned all the way up. He told me that he looks to the history of backprop for inspiration. For decades, backprop was just cool math that was not good for anything. When computers got faster and the programming got more sophisticated, it suddenly came in handy. He hopes something similar will happen with his own work and that of his students, “but it might take another couple of decades.”

Hinton himself is convinced that overcoming the limitations of AI means “building a bridge between computer science and biology.” From his point of view, backprop was a triumph of biologically inspired computing; the idea originally came not from programming, but from physiology. So now Hinton is trying to pull off a similar trick.

Today's neural networks consist of large flat layers, but in the cerebral cortex real neurons are organized not only in horizontal layers but also in vertical columns. Hinton believes he knows what the columns are for: in vision, for example, they are critical to our ability to recognize objects even as the viewpoint changes. So he is building an artificial version of them, which he calls “capsules,” to test his theory. So far it has not worked: the capsules have not significantly improved the network's performance. But backprop was in exactly the same situation for almost 30 years.

“This idea just has to be right,” he says of the capsule theory, laughing at his own confidence. “And the fact that it does not work is just a temporary annoyance.”

James Somers - New York Journalist and Programmer