Unreal Engine 4 Tutorial: Paint Filter

Games look better every year. In an era of stunning graphics, it's hard to make your own game stand out from the rest. One way to give a game a more distinctive look is to use non-photorealistic rendering.

Non-photorealistic rendering covers many techniques, including cel shading, toon outlines and hatching. You can even make a game look like a painting! One way to achieve this effect is Kuwahara filtering.

To implement Kuwahara filtering, we will learn how to:

- Calculate the mean and variance of multiple kernels

- Output the mean of the kernel with the lowest variance

- Use the Sobel operator to find the local orientation of a pixel

- Rotate the sampling kernels based on the pixel's local orientation

Note: this tutorial assumes that you are already familiar with the basics of Unreal Engine. If you are new to Unreal Engine, check out our ten-part Unreal Engine tutorial series for beginners.

Since this tutorial uses HLSL, you should be familiar with it or with a similar language such as C#.

Note: this tutorial is the fourth part of a series of tutorials on shaders:

- Part 1: Cel Shading

- Part 2: Toon Outlines

- Part 3: Custom HLSL shaders

- Part 4: Paint Filter

Getting started

Start by downloading the tutorial materials. Unzip them, go to PaintFilterStarter and open PaintFilter.uproject. You will see the following scene:

To save time, the scene already contains a Post Process Volume with PP_Kuwahara. This is the material (along with its shader files) that we will modify.

First, let's look at what a Kuwahara filter is and how it works.

Kuwahara filter

When taking photos, you may notice a grainy texture in the image. This is noise, and we do not want it.

Noise is usually removed with a low-pass filter such as a blur. Below is a noisy image after applying a box blur with a radius of 5.

Most of the noise is gone, but all of the edges have lost their sharpness. If only there were a filter that could smooth the image and preserve the edges of objects!

As you might have guessed, the Kuwahara filter meets both requirements. Let's see how it works.

How Kuwahara Filtering Works

Like convolution, Kuwahara filtering uses kernels, but it uses four of them instead of one. The kernels are arranged so that they overlap at a single pixel (the current pixel). Below is an example of the kernels for a Kuwahara filter.

First, we calculate the mean (average color) of each kernel. This effectively blurs the kernel, which smooths out noise.

For each kernel, we also calculate the variance. This is a measure of how much the colors in the kernel vary. For example, a kernel with similar colors will have low variance. If the colors differ, the kernel will have high variance.

Note: if you are not familiar with variance or don’t know how to calculate it, read the Standard Deviation and Variance article on Math is Fun.

Finally, we find the kernel with the lowest variance and output its mean. Selecting by variance is what lets the Kuwahara filter preserve edges. Let's look at a few examples.
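The steps above can be sketched on the CPU. The following is a minimal Python illustration for a grayscale image stored as a list of rows, not the shader built later in this tutorial. The function names, the clamping at the image border and the test image are all assumptions made for the example.

```python
def kernel_mean_and_variance(image, px, py, x_range, y_range):
    """Return (mean, variance) of the pixels in one kernel around (px, py)."""
    total = 0.0
    total_sq = 0.0
    samples = 0
    height, width = len(image), len(image[0])
    for dx in range(x_range[0], x_range[1] + 1):
        for dy in range(y_range[0], y_range[1] + 1):
            # Clamp to the image so kernels near the border stay valid (an assumption).
            x = min(max(px + dx, 0), width - 1)
            y = min(max(py + dy, 0), height - 1)
            value = image[y][x]
            total += value
            total_sq += value * value
            samples += 1
    mean = total / samples
    return mean, total_sq / samples - mean * mean


def kuwahara_pixel(image, px, py, radius):
    """Return the mean of the lowest-variance kernel among the four kernels."""
    kernels = [
        ((-radius, 0), (-radius, 0)),  # upper left
        ((0, radius), (-radius, 0)),   # upper right
        ((-radius, 0), (0, radius)),   # lower left
        ((0, radius), (0, radius)),    # lower right
    ]
    results = [kernel_mean_and_variance(image, px, py, xr, yr) for xr, yr in kernels]
    best_mean, _ = min(results, key=lambda result: result[1])
    return best_mean


# A 5x5 image with a vertical edge: dark (0.0) on the left, bright (1.0) on the right.
image = [[0.0, 0.0, 0.0, 1.0, 1.0] for _ in range(5)]
left_of_edge = kuwahara_pixel(image, 2, 2, 2)   # dark pixel right next to the edge
right_of_edge = kuwahara_pixel(image, 3, 2, 2)  # bright pixel right next to the edge
```

In this sketch both pixels keep the color of their own side of the edge, because the kernels that straddle the edge have a higher variance and are never selected.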

Kuwahara Filtering Examples

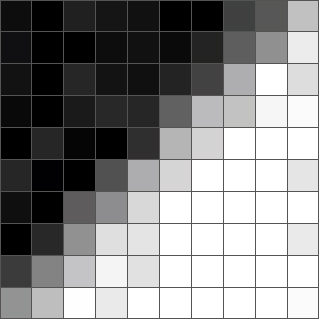

Shown below is a 10×10 grayscale image. You can see an edge running from the lower left to the upper right corner. You may also notice noise in some areas of the image.

First we select a pixel and determine which kernel has the lowest variance. Here is a pixel next to the edge and its associated kernels:

As you can see, the kernels that lie on the edge vary greatly in color. This means they have high variance, so the filter will not select them. By avoiding kernels that lie on an edge, the filter avoids the problem of blurred edges.

For this pixel, the filter will select the green kernel, because it is the most uniform. The output value will then be the mean of the green kernel, which is a color close to black.

Here is another pixel on the edge and its kernels:

This time, the yellow kernel has the lowest variance, because it is the only one that does not lie on the edge. Therefore, the output value will be the mean of the yellow kernel, which is a color close to white.

Below is a comparison between box blur and Kuwahara filtering with a radius of 5.

As you can see, Kuwahara filtering does a great job of smoothing while preserving edges. In our case, the filter even made the edge sharper!
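The same comparison can be reproduced in miniature. The sketch below is a simplified one-dimensional analogue written for illustration only: it uses two kernels (left and right of the pixel) instead of four, and the noisy row of values is made up.

```python
def window(row, start, end):
    """Return row[start..end] inclusive, clamping indices to the row."""
    last = len(row) - 1
    return [row[min(max(i, 0), last)] for i in range(start, end + 1)]


def mean(values):
    return sum(values) / len(values)


def variance(values):
    m = mean(values)
    return sum(v * v for v in values) / len(values) - m * m


def box_blur(row, radius):
    """Average every pixel with all neighbors within the radius."""
    return [mean(window(row, i - radius, i + radius)) for i in range(len(row))]


def kuwahara_1d(row, radius):
    """Output the mean of whichever side kernel has the lower variance."""
    out = []
    for i in range(len(row)):
        left = window(row, i - radius, i)
        right = window(row, i, i + radius)
        out.append(mean(min(left, right, key=variance)))
    return out


# A noisy step edge: dark values on the left, bright values on the right.
row = [0.1, 0.0, 0.1, 0.0, 0.9, 1.0, 0.9, 1.0]
blurred = box_blur(row, 2)
filtered = kuwahara_1d(row, 2)
```

With this data, the box blur pulls the two pixels at the edge (indices 3 and 4) toward the middle of the value range, while the Kuwahara-style filter keeps them close to the dark and bright sides respectively.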

Coincidentally, this edge-preserving smoothing can make an image look like a painting. Since brush strokes usually produce hard edges and little noise, the Kuwahara filter is a convenient choice for converting realistic images to a painterly style.

Here is the result of running Kuwahara filters of various sizes on a photo:

Looks pretty good, right? Let's start creating the Kuwahara filter.

Creating a Kuwahara Filter

In this tutorial, the filter is split into two shader files: Global.usf and Kuwahara.usf. The first file will contain the function that calculates the mean and variance of a kernel. The second file is the filter's entry point, which will call that function for each kernel.

First, we will create the function that calculates the mean and variance. Open the project folder in your OS and go to the Shaders folder. Then open Global.usf. Inside you will find the function GetKernelMeanAndVariance().

Before we start writing the function, we need an additional parameter. Change the function signature as follows:

    float4 GetKernelMeanAndVariance(float2 UV, float4 Range)

To sample in a grid, we need two for loops: one for horizontal offsets and one for vertical offsets. The first two channels of Range hold the bounds of the horizontal loop. The last two hold the bounds of the vertical loop. For example, if we sample the upper-left kernel and the filter has a radius of 2, then Range will have these values:

    Range = float4(-2, 0, -2, 0);

Now it's time to start sampling.

Pixel Sampling

First we need to create the two for loops. Add the following code to GetKernelMeanAndVariance() (below the variables):

    for (int x = Range.x; x <= Range.y; x++)
    {
        for (int y = Range.z; y <= Range.w; y++)
        {

        }
    }

This gives us all of the kernel's offsets. For example, if we sample the upper-left kernel and the filter has a radius of 2, the offsets will range from (0, 0) to (-2, -2).
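To make the loop bounds concrete, here is a small Python sketch that lists the offsets such a pair of loops visits. The helper names are hypothetical. The four sets of bounds follow the layout used in this tutorial: upper left, upper right, lower left and lower right, with negative Y pointing up.

```python
def kernel_ranges(radius):
    """Loop bounds (x_min, x_max, y_min, y_max) for the four kernels."""
    return {
        "upper_left": (-radius, 0, -radius, 0),
        "upper_right": (0, radius, -radius, 0),
        "lower_left": (-radius, 0, 0, radius),
        "lower_right": (0, radius, 0, radius),
    }


def kernel_offsets(bounds):
    """All (x, y) offsets visited by the nested loops, in loop order."""
    x_min, x_max, y_min, y_max = bounds
    return [(x, y) for x in range(x_min, x_max + 1) for y in range(y_min, y_max + 1)]


ranges = kernel_ranges(2)
offsets = kernel_offsets(ranges["upper_left"])
```

For a radius of 2, each kernel covers a 3×3 block of offsets, and every kernel includes (0, 0), which is the pixel where the four kernels overlap.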

Now we need to get the color of the sample pixel. Add the following code inside the inner for loop:

    float2 Offset = float2(x, y) * TexelSize;
    float3 PixelColor = SceneTextureLookup(UV + Offset, 14, false).rgb;

The first line takes the offset of the sample pixel and converts it to UV space. The second line uses the offset to get the color of the sample pixel.

Next, we need to calculate the mean and variance.

Calculating the mean and variance

Calculating the mean is fairly simple: we sum all the colors and divide by the number of sampled pixels. For the variance, we use the formula below, where x is the color of the sample pixel:
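Written out, and assuming the formula matches the code in this section, the variance is the mean of the squared colors minus the square of the mean. Here n is the number of samples and \bar{x} is the mean; both symbols are introduced only for this illustration.

```latex
\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i ,
\qquad
\sigma^2 = \frac{1}{n} \sum_{i=1}^{n} x_i^2 - \bar{x}^2
```

This form is convenient in a shader because both sums can be accumulated in a single pass over the kernel.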

The first thing we need to do is calculate the sums. For the mean, we just add the color to the Mean variable. For the variance, we square the color and then add it to Variance. Add the following code below the previous code:

    Mean += PixelColor;
    Variance += PixelColor * PixelColor;
    Samples++;

Next, add the following after the for loops:

    Mean /= Samples;
    Variance = Variance / Samples - Mean * Mean;
    float TotalVariance = Variance.r + Variance.g + Variance.b;
    return float4(Mean.r, Mean.g, Mean.b, TotalVariance);

The first two lines calculate the mean and variance. However, there is a problem: the variance is spread across the RGB channels. To solve this, the third line sums the channels to get the total variance.

Finally, the function returns the mean and variance as a float4. The mean is in the RGB channels and the variance is in the A channel.
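The same running-sum approach can be checked outside the shader. This Python sketch mirrors the logic for a list of RGB tuples. The function name and the sample colors are made up for the example.

```python
def mean_and_total_variance(pixels):
    """pixels: list of (r, g, b) tuples. Returns (mean_rgb, total_variance)."""
    sums = [0.0, 0.0, 0.0]
    sums_sq = [0.0, 0.0, 0.0]
    for pixel in pixels:
        for c in range(3):
            sums[c] += pixel[c]                # accumulates the mean
            sums_sq[c] += pixel[c] * pixel[c]  # accumulates the squared colors
    n = len(pixels)
    mean = [s / n for s in sums]
    # Per-channel variance: mean of squares minus square of mean.
    variance = [sq / n - m * m for sq, m in zip(sums_sq, mean)]
    # Sum the channels to get a single value that can be compared between kernels.
    return tuple(mean), sum(variance)


flat = [(0.5, 0.5, 0.5)] * 4                         # uniform kernel
mixed = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)] * 2       # half black, half white
flat_mean, flat_variance = mean_and_total_variance(flat)
mixed_mean, mixed_variance = mean_and_total_variance(mixed)
```

Both kernels have the same mean color, but only the uniform one has zero variance, which is why the filter compares variance rather than the mean itself.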

Now that we have a function to calculate the mean and variance, we need to call it for each kernel. Go back to the Shaders folder and open Kuwahara.usf. First we need to create some variables. Replace the code inside with the following:

    float2 UV = GetDefaultSceneTextureUV(Parameters, 14);
    float4 MeanAndVariance[4];
    float4 Range;

Here is what each variable is for:

- UV: the UV coordinates of the current pixel

- MeanAndVariance: an array that stores the mean and variance of each kernel

- Range: stores the for loop bounds of the current kernel

Now we need to call GetKernelMeanAndVariance() for each kernel. To do this, add the following:

    Range = float4(-XRadius, 0, -YRadius, 0);
    MeanAndVariance[0] = GetKernelMeanAndVariance(UV, Range);

    Range = float4(0, XRadius, -YRadius, 0);
    MeanAndVariance[1] = GetKernelMeanAndVariance(UV, Range);

    Range = float4(-XRadius, 0, 0, YRadius);
    MeanAndVariance[2] = GetKernelMeanAndVariance(UV, Range);

    Range = float4(0, XRadius, 0, YRadius);
    MeanAndVariance[3] = GetKernelMeanAndVariance(UV, Range);

This gives us the mean and variance of each kernel in the following order: upper left, upper right, lower left and lower right.

Next, we need to select the kernel with the lowest variance and output its mean.

Selecting the kernel with the lowest variance

To select the kernel with the lowest variance, add the following code:

    // 1
    float3 FinalColor = MeanAndVariance[0].rgb;
    float MinimumVariance = MeanAndVariance[0].a;

    // 2
    for (int i = 1; i < 4; i++)
    {
        if (MeanAndVariance[i].a < MinimumVariance)
        {
            FinalColor = MeanAndVariance[i].rgb;
            MinimumVariance = MeanAndVariance[i].a;
        }
    }

    return FinalColor;

Here is what each part does:

- 1: Creates two variables to hold the final color and the lowest variance. Both are initialized with the mean and variance of the first kernel.

- 2: Loops through the remaining three kernels. If the current kernel's variance is lower than MinimumVariance, its mean and variance become the new FinalColor and MinimumVariance.

After the loop finishes, the code outputs FinalColor, which is the mean of the kernel with the lowest variance.

Return to Unreal and go to Materials\PostProcess. Open PP_Kuwahara, make a dummy change (one that has no effect) and click Apply. Return to the main editor to see the results!

It looks pretty good, but if you look closely, you can see that the image has strange blocky areas. I have highlighted some of them:

This is a side effect of using axis-aligned kernels. We can reduce this effect with an improved version of the filter, which I call the directional Kuwahara filter.

Directional Kuwahara Filter

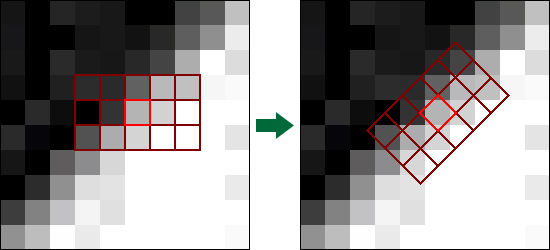

This filter is similar to the original, but the kernels are now aligned with the pixel's local orientation. Here is an example of a kernel in the directional Kuwahara filter:

Note: since we can represent a kernel as a matrix, we write its dimensions as height × width instead of the conventional width × height. We will talk more about matrices below.

Here, the filter determines that the pixel's orientation runs along the edge. It can then rotate the entire kernel accordingly.

To calculate the local orientation, the filter performs a convolution pass using the Sobel operator. If “Sobel operator” sounds familiar, that is because it is a popular edge detection technique. But if it is an edge detection technique, how can we use it to get the local orientation? To answer that, we need to look at how the Sobel operator works.

How the Sobel operator works

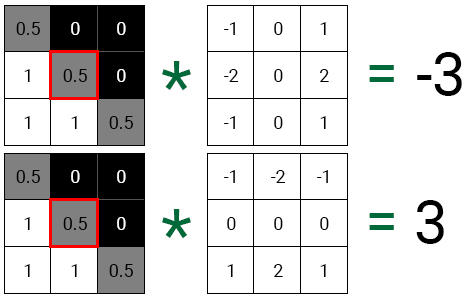

Instead of one kernel, the Sobel operator uses two.

Gx gives us the gradient in the horizontal direction. Gy gives us the gradient in the vertical direction. As an example, let's use the following 3 × 3 grayscale image:

First, we convolve the middle pixel with each kernel.

If we plot each value on a 2D plane, we will see that the resulting vector points in the same direction as the edge.

To find the angle between the vector and the X axis, we plug the gradient values into the arctangent function (atan). We can then use the resulting angle to rotate the kernel.
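Here is a small Python sketch of that calculation. The 3 × 3 image is a made-up example rather than the one from the figure, and the kernels use one common sign convention for the Sobel operator. The sketch also swaps in atan2(Gy, Gx) for atan(Gy / Gx): it gives the same angle whenever Gx is positive and avoids a division by zero when Gx is 0.

```python
import math

# One common sign convention for the two Sobel kernels (rows are Y, columns are X).
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def apply_kernel(image, kernel):
    """Multiply a 3x3 image by a 3x3 kernel element by element and sum the
    products (no kernel flip)."""
    return sum(image[r][c] * kernel[r][c] for r in range(3) for c in range(3))


# Hypothetical image: dark in the upper left, bright in the lower right.
image = [[0.0, 0.0, 1.0],
         [0.0, 1.0, 1.0],
         [1.0, 1.0, 1.0]]

gradient_x = apply_kernel(image, SOBEL_X)
gradient_y = apply_kernel(image, SOBEL_Y)
angle = math.atan2(gradient_y, gradient_x)  # angle between (Gx, Gy) and the X axis
```

For this image both gradients come out as 3, so the angle between the vector (Gx, Gy) and the X axis is 45 degrees.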

That is how we can use the Sobel operator to get a pixel's local orientation. Let's try it out.

Finding the local orientation

Open Global.usf and add the following code inside GetPixelAngle():

    float GradientX = 0;
    float GradientY = 0;
    float SobelX[9] = {-1, -2, -1, 0, 0, 0, 1, 2, 1};
    float SobelY[9] = {-1, 0, 1, -2, 0, 2, -1, 0, 1};
    int i = 0;

Note: notice that the closing brace of GetPixelAngle() is missing. This is intentional! To learn why, read our tutorial on HLSL shaders.

Here is what each variable is for:

- GradientX: stores the gradient for the horizontal direction

- GradientY: stores the gradient for the vertical direction

- SobelX: the horizontal Sobel kernel as an array

- SobelY: the vertical Sobel kernel as an array

- i: used to access each element of SobelX and SobelY

Next, we need to perform the convolution using the SobelX and SobelY kernels. Add the following code:

    for (int x = -1; x <= 1; x++)
    {
        for (int y = -1; y <= 1; y++)
        {
            // 1
            float2 Offset = float2(x, y) * TexelSize;
            float3 PixelColor = SceneTextureLookup(UV + Offset, 14, false).rgb;
            float PixelValue = dot(PixelColor, float3(0.3,0.59,0.11));

            // 2
            GradientX += PixelValue * SobelX[i];
            GradientY += PixelValue * SobelY[i];
            i++;
        }
    }

Here is what happens in each part:

- 1: The first two lines get the color of the sample pixel. The third line desaturates the color, converting it to a single grayscale value. This makes it easier to calculate the gradients of the image as a whole instead of getting gradients for each color channel.

- 2: For both kernels, multiplies the pixel's grayscale value by the corresponding kernel element and adds the result to the appropriate gradient variable. Then i is incremented so that it holds the index of the next kernel element.

To get the angle, we use atan() and plug in our gradient values. Below the for loops, add the following code:

    return atan(GradientY / GradientX);

Now that we have a function to get a pixel's angle, we need to use it to rotate the kernel. We can do this with a matrix.

What is a matrix?

A matrix is a two-dimensional array of numbers. For example, here is a 2 × 3 matrix (with two rows and three columns):

On its own, a matrix does not look particularly interesting. The true power of matrices shows when we multiply one by a vector. This lets us perform operations such as rotation and scaling (depending on the type of matrix). But how do we create a rotation matrix?

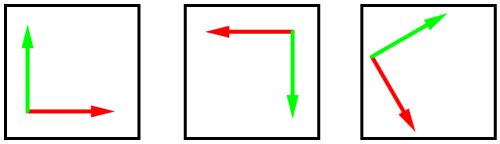

In a coordinate system, we have a vector for each dimension. These are the basis vectors, which define the positive directions of the axes.

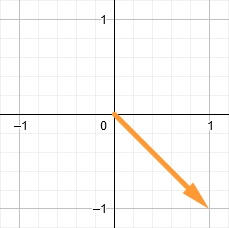

Below are a few examples of different basis vectors for a two-dimensional coordinate system. The red arrow indicates the positive direction in X. The green arrow indicates the positive direction in Y.

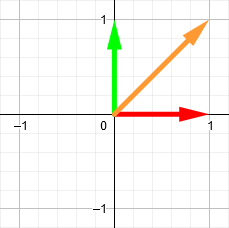

To rotate a vector, we can use these basis vectors to construct a rotation matrix. It is simply a matrix containing the positions of the basis vectors after rotation. For example, imagine that we have a vector (orange arrow) at coordinates (1, 1).

Suppose we want to rotate it 90 degrees clockwise. First, we rotate the basis vectors by the same amount.

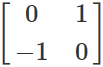

Then we construct a 2 × 2 matrix using the new positions of the basis vectors. The first column is the position of the red arrow, and the second is the position of the green arrow. This is our rotation matrix.

Finally, we perform matrix multiplication using the orange vector and the rotation matrix. The result will be the new position of the orange vector.
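As a worked version of this example, assume a Y axis that points up and a vector written as a column (both are assumptions, since the figures define the actual convention). Rotating 90 degrees clockwise moves the X basis vector from (1, 0) to (0, -1) and the Y basis vector from (0, 1) to (1, 0). Placing these in the first and second columns and multiplying gives:

```latex
R = \begin{pmatrix} 0 & 1 \\ -1 & 0 \end{pmatrix},
\qquad
R \begin{pmatrix} 1 \\ 1 \end{pmatrix}
= \begin{pmatrix} 0 \cdot 1 + 1 \cdot 1 \\ -1 \cdot 1 + 0 \cdot 1 \end{pmatrix}
= \begin{pmatrix} 1 \\ -1 \end{pmatrix}
```

Under these assumptions, the orange vector at (1, 1) ends up at (1, -1).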

Note: you do not need to know how matrix multiplication is performed, because in HLSL there is a built-in function for this. But if you want to find out, check out the How to Multiply Matrices article on Math is Fun.

Isn't that great? Even better, we can use the matrix above to rotate any 2D vector 90 degrees clockwise. For our filter, this means that we only need to create the rotation matrix once per pixel and can then use it for the entire kernel.

Now it's time to rotate the kernel using a rotation matrix.

Rotating the kernel

First we need to change GetKernelMeanAndVariance() so that it accepts a 2 × 2 matrix. This is necessary because we will create the rotation matrix in Kuwahara.usf and pass it in. Change the signature of GetKernelMeanAndVariance() as follows:

    float4 GetKernelMeanAndVariance(float2 UV, float4 Range, float2x2 RotationMatrix)

Next, replace the first line of the inner for loop with this code:

    float2 Offset = mul(float2(x, y) * TexelSize, RotationMatrix);

mul() performs matrix multiplication using the offset and RotationMatrix. This rotates the offset around the current pixel.

Next, we need to create the rotation matrix.

Create a rotation matrix

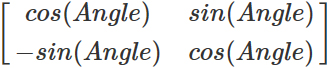

To create the rotation matrix, we apply the sine and cosine functions as follows:
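Written as a formula, and assuming it matches the float2x2 call used in this section (whose arguments fill the matrix row by row), the rotation matrix for an angle θ is:

```latex
R(\theta) = \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}
```

For example, θ = -90° gives cos θ = 0 and sin θ = -1, so the matrix has the rows (0, 1) and (-1, 0).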

Close Global.usf and open Kuwahara.usf. Then add the following below the list of variables:

    float Angle = GetPixelAngle(UV);
    float2x2 RotationMatrix = float2x2(cos(Angle), -sin(Angle), sin(Angle), cos(Angle));

The first line calculates the angle of the current pixel. The second line creates a rotation matrix from that angle.
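To see what the matrix does to a kernel's offsets, here is a Python sketch of the same two steps. It builds the matrix in the same element order as the float2x2() call above and multiplies each offset as a row vector, which is how HLSL's mul() treats a vector passed as its first argument. The helper names and the 90-degree test angle are chosen just for the example.

```python
import math


def rotation_matrix(angle):
    """Rows of the 2x2 matrix, in the same order as the float2x2() arguments."""
    return [[math.cos(angle), -math.sin(angle)],
            [math.sin(angle), math.cos(angle)]]


def mul_row_vector(v, m):
    """Row vector times matrix, mirroring mul(vector, matrix) in HLSL."""
    return (v[0] * m[0][0] + v[1] * m[1][0],
            v[0] * m[0][1] + v[1] * m[1][1])


matrix = rotation_matrix(math.pi / 2)

# Offsets of the upper-left kernel for a radius of 2.
offsets = [(x, y) for x in range(-2, 1) for y in range(-2, 1)]
rotated = [mul_row_vector(offset, matrix) for offset in offsets]
```

Every rotated offset stays at the same distance from the current pixel, and the (0, 0) offset does not move, so the kernel turns around the pixel without changing size.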

Finally, we need to pass RotationMatrix to each kernel. Change each call to GetKernelMeanAndVariance() as follows:

    GetKernelMeanAndVariance(UV, Range, RotationMatrix)

That's it for the directional Kuwahara filter! Close Kuwahara.usf and return to PP_Kuwahara. Make a dummy change, click Apply and close it.

The image below compares the regular and directional Kuwahara filters. Notice that the directional filter does not produce the blockiness.

Note: you can use PPI_Kuwahara to change the filter's dimensions. I recommend changing them so that the X radius is larger than the Y radius. This increases the kernel's size along the edge and helps with directionality.

Where to go next?

You can download the finished project here.

If you want to learn more about the Kuwahara filter, you can read the article on anisotropic Kuwahara filtering. The directional Kuwahara filter is in fact a simplified version of the filter presented in that article.

I recommend experimenting with matrices so that you can create new effects with them. For example, you can combine rotation matrices with blurring to create a radial or circular blur. If you want to learn more about matrices and how they work, check out the Essence of Linear Algebra video series by 3Blue1Brown.