How I wrote a graphical bot and what it turned into: Pengueebot

In this article we look at the experience of writing a tool that automates a wide range of routine tasks with a minimum of effort and time.

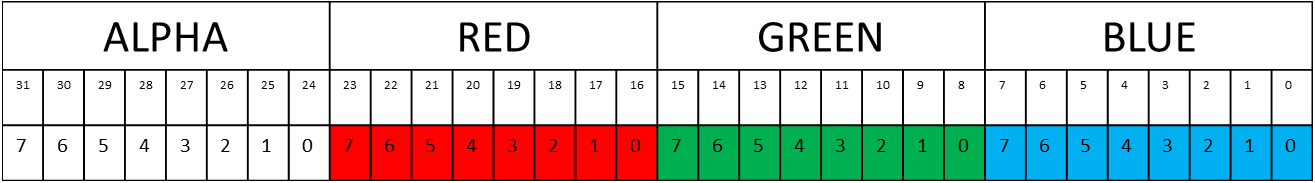

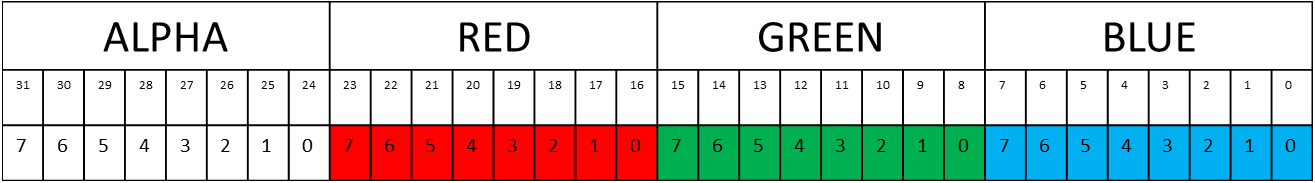


Foreword

I needed a bot for several tasks that demand both logic and fast reactions. I did not want to dig into an API or pick apart program binaries, so I decided on visual automation. I found several bots, but none met my requirements: they were too slow, had a heavily cut-down scripting part, or lacked functionality for working with the visual component. Since I had already used a visual bot successfully in the past (albeit a slow one with very limited scripting), I decided to write my own implementation.

Functionality required at the beginning

The following features were required:

- Mouse simulation, cursor movement, button presses.

- Simulation of keyboard keystrokes.

- The ability to search the screen for a pre-prepared piece of a picture, for example an icon or a letter, and to act on the result if it is found.

- A script interpreter, so that the algorithm of actions can simply be described without recompiling time after time.

Existing analogues

There are a number of analogues, each with its own advantages and disadvantages. Consider the most capable ones:

Sikuli - has a huge set of useful functionality, convenient Python scripting and syntax, and is cross-platform with some reservations. However, my task required reaction speed above all, which Sikuli could not provide, for the most part because of Tesseract.

The second problem is that it is tied to Java 1.6, so moving it to 1.7 or 1.8, for example, takes a good deal of fiddling.

The older Jython version is not ideal either, although 2.5.2 is not that outdated.

Otherwise Sikuli is a great tool, and I recommend it to everyone!

AutoIt - uses a Basic-like language for scripting.

Enthusiasts on the forums have added the ability to search for pictures on the screen; unfortunately, this function did not meet my requirements because of excessive simplifications and a number of restrictions.

It works only under Windows and requires installation.

Scripts must be compiled for use on other machines, so there is no way to quickly fix a script where AutoIt is not installed.

AutoHotKey - uses its own scripting syntax, which takes a long time to learn; "horrible" would be the more accurate word for it. Without practice it is hard to write anything with truly branching logic. Image search on the screen is too limited and did not fit my needs.

It has several ports for Linux / Unix systems and requires installation.

Clickermann - uses its own scripting language, which also has to be learned. Because of its simplifications some functionality is missing, HTTP requests for example.

There is no search for pictures on the screen, although there is a primitive pixel search.

UOPilot - attaches to processes, which did not suit me, and shares Clickermann's shortcomings. It is not cross-platform, and large scripts are inconvenient to write.

There were also many macro recorders, most of which work on the "repeat after me" principle: they record and replay user actions, which rules out anything with a branching algorithm built from a bunch of if and while statements.

The rest had no function for finding an icon on the screen.

Analogs on Habr

I read an article whose author made a bot to get discounts on laser vision correction. The article describes the many problems encountered along the way. Most of them arose from understandable simplifications, and each workaround was patched with yet another workaround. I recommend reading it.

Choosing technologies for reinventing the wheel

From the very beginning I decided to write the core in Java SE, which saved time because Java is the language I use most often. I was also familiar with the Robot class, which makes it convenient to simulate mouse and keyboard input.

For the script interpreter I chose Python as a fairly simple and popular language. Java has the Jython implementation, which runs on the JVM and requires no installation. It also lets a script work with Java classes and objects directly, which greatly expands what scripts can do beyond what is built into the core of the bot.

Later I added picture search on the screen through GPGPU using OpenCL; for Java there is the JOCL binding, but more on that later.

The graphical interface is built on Swing, a simple yet functional toolkit available in any JRE out of the box.

First steps

Java has a Robot class that simulates keystrokes, mouse movements and clicks; it caused no particular problems. I simply extended it with methods like mouseClick(x, y), built from mouseMove + mousePress + mouseRelease with a Thread.sleep(ms) between these actions, and later added several overloads with different arguments. Drag & Drop became a single method in the same way.

```java
public void mouseClick(int x, int y) throws AWTException {
    mouseClick(x, y, InputEvent.BUTTON1_MASK, mouseDelay);
}

public void mouseClick(int x, int y, int button_mask) throws AWTException {
    mouseClick(x, y, button_mask, mouseDelay);
}

public void mouseClick(int x, int y, int button_mask, int sleepTime) throws AWTException {
    bot.mouseMove(x, y);
    bot.mousePress(button_mask);
    sleep(sleepTime);
    bot.mouseRelease(button_mask);
}

public void mouseClick(MatrixPosition mp) throws AWTException {
    mouseClick(mp.x, mp.y);
}

public void mouseClick(MatrixPosition mp, int button_mask) throws AWTException {
    mouseClick(mp.x, mp.y, button_mask);
}

public void mouseClick(MatrixPosition mp, int button_mask, int sleepTime) throws AWTException {
    mouseClick(mp.x, mp.y, button_mask, sleepTime);
}

public MatrixPosition mousePos() {
    return new MatrixPosition(MouseInfo.getPointerInfo().getLocation());
}
```

The keyClick() methods were added in the same way.

```java
public void keyPress(int key_mask) {
    bot.keyPress(key_mask);
}

public void keyPress(int... keys) {
    for (int key : keys)
        bot.keyPress(key);
}

public void keyRelease(int key_mask) {
    bot.keyRelease(key_mask);
}

public void keyRelease(int... keys) {
    for (int key : keys)
        bot.keyRelease(key);
}

public void keyClick(int key_mask) {
    bot.keyPress(key_mask);
    sleep(keyboardDelay);
    bot.keyRelease(key_mask);
}

public void keyClick(int... keys) {
    keyPress(keys);
    sleep(keyboardDelay);
    keyRelease(keys);
}
```

All this was needed to make writing actions in a script easier and to raise scripting to a more abstract "need it, do it" level.

Phew, that was a lot to read. Had a rest? Then let's move on!

Eyes of the core

The next step was the hardest and took the most time: adding the ability to take a screenshot and find a pre-prepared icon on it.

First, how do we take a screenshot and how do we store it?

Second, how do we search for a pattern (icon)?

Third, where do we get this pattern (icon)?

Taking a screenshot is not that difficult:

```java
public void grab() throws Exception {
    image = robot.createScreenCapture(screenRect);
}
```

The search, however, raised some problems. Where do we get the pattern to search for? Create it, but how?

To create the first pattern I used good old Paint: I pasted a PrintScreen capture into the editor, cut out a small piece and saved it as a separate .bmp file.

Now the pattern exists, and the code loads it into a BufferedImage. The screenshot is also a BufferedImage, so what remains is the search algorithm. Online I came across the brute-force option: take the first pixel of the small picture and the first pixel of the large picture and compare them; if they have the same color code, check the remaining pixels relative to that point. If all pixels match, the desired picture has been found. If not, take the next pixel of the large picture and repeat.

It does not sound great, but it works.

```java
// "<=" so that the last row and column of possible offsets are also checked
for (int y = 0; y <= screenshot.getHeight() - fragment.getHeight(); y++) {
    __columnscan: for (int x = 0; x <= screenshot.getWidth() - fragment.getWidth(); x++) {
        // Skip offsets whose first pixel already differs
        if (screenshot.getRGB(x, y) != fragment.getRGB(0, 0))
            continue;
        for (int yy = 0; yy < fragment.getHeight(); yy++) {
            for (int xx = 0; xx < fragment.getWidth(); xx++) {
                if (screenshot.getRGB(x + xx, y + yy) != fragment.getRGB(xx, yy))
                    continue __columnscan;
            }
        }
        System.out.println("found!");
    }
}
```

We run it and... it works! One match found! But it is rather slow, which is completely unacceptable for us. Time was wasted on calling getRGB() for every pixel, and the processor cache was used very inefficiently, while what we are doing is essentially a pure matrix search. A pixel matrix! So I converted the BufferedImage holding the screenshot into an int[][] matrix, did the same with the fragment, and rewrote the loops to work with matrices. We run it and... nothing is found.

After a lot of searching online it became clear that the cause was the pixel format (ARGB / RGBA / RGB) in which BufferedImage stores its data. The screenshot was in ARGB, while the fragment loaded from file was in BGR.

Everything had to be brought to one format, namely the screenshot's ARGB: it is faster to convert each fragment to the screenshot format once than to convert every screenshot to the fragment format. So the smaller image is converted to the format of the larger one. This took quite a lot of time to get right, but in the end it worked: patterns were found on the screenshot again, and much faster, almost twice as fast!
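As an aside, the format mismatch can also be avoided in a format-independent way. The sketch below is not the bot's code: the class name `PixelMatrix` and method `toArgbMatrix` are hypothetical. It relies on the bulk `BufferedImage.getRGB` call, which returns packed ARGB values regardless of how the image is stored, and copies them into an `int[height][width]` matrix. It is likely slower than reading the raw byte buffer directly, so it fits fragments that are loaded once rather than screenshots taken in a loop.

```java
import java.awt.image.BufferedImage;

final class PixelMatrix {
    /** Copies any BufferedImage into an int[height][width] matrix of packed ARGB values. */
    static int[][] toArgbMatrix(BufferedImage image) {
        final int width = image.getWidth();
        final int height = image.getHeight();
        // The bulk getRGB call converts from the image's storage type to packed ARGB.
        final int[] flat = image.getRGB(0, 0, width, height, null, 0, width);
        final int[][] matrix = new int[height][width];
        for (int row = 0; row < height; row++) {
            // Each matrix row is one scan line of the flat array.
            System.arraycopy(flat, row * width, matrix[row], 0, width);
        }
        return matrix;
    }
}
```

With this, a BGR fragment and an ARGB screenshot that show the same opaque color produce the same int value, so the matrices can be compared directly.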

```java
// USED FOR BMP/PNG BUFFERED_IMAGE
// Assumes 3 bytes per pixel stored in B, G, R order
private int[][] loadFromFile(BufferedImage image) {
    final byte[] pixels = ((DataBufferByte) image.getData().getDataBuffer()).getData();
    final int width = image.getWidth();
    if (rgbData == null)
        rgbData = new int[image.getHeight()][width];
    for (int pixel = 0, row = 0; pixel < pixels.length; row++)
        for (int col = 0; col < width; col++, pixel += 3)
            rgbData[row][col] = 0xFF000000                        // alpha = 255
                    + ((int) pixels[pixel] & 0xFF)                // blue
                    + (((int) pixels[pixel + 1] & 0xFF) << 8)     // green
                    + (((int) pixels[pixel + 2] & 0xFF) << 16);   // red
    return rgbData;
}
```

Next came small optimizations, such as caching the matrix row and tuning the if conditions, which also sped up the search.

```java
public MatrixPosition findIn(Frag b, int x_start, int y_start, int x_stop,
        int y_stop) {
    // precalculate all frequently used data
    final int[][] small = this.rgbData;
    final int[][] big = b.rgbData;
    final int small_height = small.length;
    final int small_width = small[0].length;
    final int small_height_minus_1 = small_height - 1;
    final int small_width_minus_1 = small_width - 1;
    final int first_pixel = small[0][0];
    final int last_pixel = small[small_height_minus_1][small_width_minus_1];

    int[] row_cache_big = null;
    int[] row_cache_big2 = null;
    int[] row_cache_small = null;

    for (int y = y_start; y < y_stop; y++) {
        row_cache_big = big[y];
        __columnscan: for (int x = x_start; x < x_stop; x++) {
            // Cheap rejection: both the first and the last pixel must match
            if (row_cache_big[x] != first_pixel
                    || big[y + small_height_minus_1][x + small_width_minus_1] != last_pixel)
                continue __columnscan; // No first match

            // There is a match for the first element in small
            // Check if all the elements in small match those in big
            for (int yy = 0; yy < small_height; yy++) {
                row_cache_big2 = big[y + yy];
                row_cache_small = small[yy];
                for (int xx = 0; xx < small_width; xx++) {
                    // If there is at least one difference, there is no match
                    if (row_cache_big2[x + xx] != row_cache_small[xx]) {
                        continue __columnscan;
                    }
                }
            }
            // If arrived here, then the small matches a region of big
            return new MatrixPosition(x, y);
        }
    }
    return null;
}
```

I also experimented with the element type of the matrices, long versus int; the int[][] matrix still gave the best result on both 64-bit and 32-bit JVM configurations on the i7 4790.

Bot Brains

That is, the scripting part. It should be convenient, with syntax that is clear without further explanation. Ideally this means any popular language that can be embedded into the bot core and has rich documentation. Using the core API should be simple and easy to remember.

The choice fell on Python: popular, easy to learn, well documented, with many ready-made libraries, and most importantly a script is easy to edit in any text editor! Besides, I had long wanted to learn it.

For Java there is an embeddable Python implementation called Jython. It runs on the JVM, needs nothing extra to get started, and lets you use practically any Java class or library, including .jar packages! This only strengthened my confidence in the choice.

We add Jython to the project, create an interpreter object and run our script file.

```java
import org.python.util.PythonInterpreter;

class JythonVM {
    private final Object jythonLoad = new Object();
    private boolean isJythonVMLoaded = false; // guarded by jythonLoad
    private PythonInterpreter pi = null;

    void load() {
        System.out.println("CORE: Loading JythonVM...");
        PythonInterpreter interpreter = new PythonInterpreter();
        // Publish the interpreter and the flag under the lock so that
        // a waiting run() cannot miss the notification
        synchronized (jythonLoad) {
            pi = interpreter;
            isJythonVMLoaded = true;
            jythonLoad.notifyAll();
        }
        System.out.println("CORE: JythonVM loaded.");
    }

    void run(String script) throws Exception {
        System.out.println("CORE: Waiting for JythonVM to load");
        synchronized (jythonLoad) {
            // Loop guards against spurious wakeups
            while (!isJythonVMLoaded)
                jythonLoad.wait();
        }
        System.out.println("CORE: Running " + script + "...\n\n");
        pi.execfile(script);
        System.out.println("CORE: Script execution finished.");
    }
}
```

Now let's look at the script:

```python
# -*- coding: utf-8 -*-
print("hello")
```

From the script we import the core classes we need and simply create objects of them. We can then call their methods, which means the script can use the core API to perform the actions we need!

At first these classes were Action and MatrixPosition; later, exception classes such as FragmentNotLoadedException and ScreenNotGrabbedException were added.

Action - the main class for accessing the core functionality. It contains helper methods designed to simplify script writing and cut the number of lines needed to solve a task: the same mouseClick and keyClick, find for locating fragments in a screenshot, grab for taking the screenshots themselves, and so on.

In addition, you can create many objects of this class and use them independently in several threads at once!

Let's add a couple of lines to our script to use the core API:

```python
# -*- coding: utf-8 -*-
from bot.penguee import Action

a = Action()  # create an object for working with the core API
print("hello")  # line from the previous script, left untouched for clarity
a.mouseMove(1000, 500)  # should move the mouse cursor to x 1000, y 500
```

MatrixPosition - a wrapper for screen coordinates. This is the format in which the bot API returns coordinates. The ready-made Point class comes to mind, of course, since it already has the necessary functionality. But it is not that simple: in my scripts its coordinates had to be read through pos.getX and pos.getY, which is quite inconvenient when scripting. Accessing them as pos.x and pos.y is much more convenient. Practice also showed that positions need names of their own, which turned out to be necessary for tasks such as sorting positions among themselves (for example, processing numbers read from the screen in alphabetical order).

The add and sub methods extend this further: they create a new position relative to the coordinates of the current object.
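To make the idea concrete, here is a minimal sketch of such a wrapper. It is not the real MatrixPosition: the class name `PositionSketch`, the constructor, the exact signatures of `add` and `sub`, and the `sortedByName` helper are all assumptions made for illustration. It shows the three properties described above: public `x` and `y` fields, a `name`, and relative offsets that return a new object.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

final class PositionSketch {
    // Public fields so that a script can read pos.x and pos.y directly.
    public final int x;
    public final int y;
    public final String name;

    PositionSketch(int x, int y, String name) {
        this.x = x;
        this.y = y;
        this.name = name;
    }

    /** Returns a new position shifted by (dx, dy); the current object is unchanged. */
    PositionSketch add(int dx, int dy) {
        return new PositionSketch(x + dx, y + dy, name);
    }

    /** Returns a new position shifted by (-dx, -dy); the current object is unchanged. */
    PositionSketch sub(int dx, int dy) {
        return new PositionSketch(x - dx, y - dy, name);
    }

    /** Returns a copy of the list sorted alphabetically by position name. */
    static List<PositionSketch> sortedByName(List<PositionSketch> positions) {
        List<PositionSketch> copy = new ArrayList<>(positions);
        copy.sort(Comparator.comparing((PositionSketch p) -> p.name));
        return copy;
    }
}
```

Keeping the class immutable means a position can be shared between threads without copying, which fits the goal of using several Action objects in parallel.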

```python
# -*- coding: utf-8 -*-
from bot.penguee import MatrixPosition, Action

a = Action()
print("hello")
mp = MatrixPosition(1000, 500)
print(mp.x, mp.y)
a.mouseMove(mp)
```

Subsequent Improvements

Pattern coordinates cache

Search statistics showed that most of the images being searched for were static and did not change their position on the screen. A coordinate cache was added for this case. If a picture is in the cache, the cached coordinates are checked first; only if the picture is not there is the rest of the screen searched. This small detail significantly increased script execution speed.
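The cache logic described above can be sketched as follows. This is an illustration under assumptions, not the bot's implementation: the class `CachedSearch`, its methods and the `fullSearches` counter are hypothetical names, and the full scan is a plain brute-force search over int[][] matrices.

```java
import java.util.HashMap;
import java.util.Map;

final class CachedSearch {
    // Last known {x, y} of each named fragment.
    private final Map<String, int[]> cache = new HashMap<>();
    // Counts full scans, only to make the effect of the cache visible.
    int fullSearches = 0;

    /** True if the fragment matches the screenshot exactly at offset (x, y). */
    static boolean matchesAt(int[][] big, int[][] small, int x, int y) {
        if (x < 0 || y < 0 || y + small.length > big.length
                || x + small[0].length > big[0].length) {
            return false;
        }
        for (int yy = 0; yy < small.length; yy++) {
            for (int xx = 0; xx < small[0].length; xx++) {
                if (big[y + yy][x + xx] != small[yy][xx]) {
                    return false;
                }
            }
        }
        return true;
    }

    /** Brute-force scan of the whole screenshot; returns {x, y} or null. */
    private int[] fullSearch(int[][] big, int[][] small) {
        fullSearches++;
        for (int y = 0; y <= big.length - small.length; y++) {
            for (int x = 0; x <= big[0].length - small[0].length; x++) {
                if (matchesAt(big, small, x, y)) {
                    return new int[] {x, y};
                }
            }
        }
        return null;
    }

    /** Checks the cached coordinates first and falls back to a full scan. */
    int[] find(String name, int[][] big, int[][] small) {
        int[] cached = cache.get(name);
        if (cached != null && matchesAt(big, small, cached[0], cached[1])) {
            return cached; // cache hit: a single comparison at a known offset
        }
        int[] found = fullSearch(big, small);
        if (found != null) {
            cache.put(name, found);
        } else {
            cache.remove(name);
        }
        return found;
    }
}
```

For a static icon every call after the first costs one comparison at a known offset instead of a scan of the whole screen, which is where the speedup comes from.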

GPGPU in service

I always wanted pattern search on a large screen to be fast, but optimizing the algorithm only goes so far. Until then the whole search ran on the CPU; splitting it across threads would not have given a real speed gain, but would have increased load problems by an order of magnitude. Having some experience writing kernel code for GPGPU, I brought in the OpenCL library and ported the same search algorithm that ran on the CPU (not the best solution for video cards), with some adjustments for the specifics of kernel programs.

For comparison, on an Intel i7 4790 at a screen resolution of 1920 * 1080 the CPU search took 0-12 ms, the worst case being a match in the farthest corner of the screen, while on Intel HD 4600 it took a stable 0-2 ms. The price is a longer screenshot step, because the screenshot matrix has to be uploaded to video memory, which takes time. This is offset by the fact that many different pictures can be searched for in the same screenshot, which in the end gives a performance gain over the CPU search.

Thread safety

It was especially important that threads can search for any fragments independently of each other, so buffers and objects were made local to keep threading bugs from surfacing when writing scripts.

Cross-platform

Scripts work the same on any platform. The only exception is text printed to the console, because encodings differ between operating systems, but that is a separate problem. The JVM lets you run the bot on any platform without installation: "grab it and run".

Final result

The core now exposes more than 70 methods that can be called from a script, and the number keeps growing.

The tool is suitable for testing software and graphical interfaces. It helps when something needs to be automated but there is no desire or not enough knowledge to dig into binaries (good for general-purpose IT support staff).

Real use cases

For obvious reasons I give neither names nor source code.

- A trading bot for auctions in online MMO games: in real time it analyzes item prices and the possible resale profit, then uses overlay layers to write the profit figures directly on the user's screen.

- A passive macro for a single-player game: it upgrades buildings automatically by passively watching for upgrade buttons, taking over control for a moment and clicking the required buttons in a very short time.

- When a new Skype message arrives, it opens the program and the window of the right conversation.

- Office tallying of completed work by employee name: the data is read visually from the interface of an outdated program that has no API or easy database access.

- Office tallying of goods from 1C: the IT support person could not work with the API or the database directly, so this bot was used.

GUI bot review

Writing a simple script to parse

YouTube playlist

Script from the video

```python
# -*- coding: utf-8 -*-
from bot.penguee import MatrixPosition, Action
from java.awt.event import InputEvent, KeyEvent

a = Action()
p1 = MatrixPosition(630, 230)
p2 = MatrixPosition(1230, 780)

while True:
    a.grab(p1, p2)
    a.searchRect(630, 230, 1230, 780)  # whole window
    if a.find("verstak.gui"):
        a.searchRect(760, 320, 960, 500)  # workbench
        emptyCells = a.findAllPos("cell_empty")
        a.searchRect(700, 520, 1220, 770)  # inventory
        if a.findClick("coal.item"):
            coalRecentPos = a.recentPos()
            print(coalRecentPos.name)
            for i in range(len(emptyCells)):
                a.mouseClick(emptyCells[i], InputEvent.BUTTON3_MASK)
                a.sleep(50)
            a.mouseClick(coalRecentPos)
            a.searchRect(630, 230, 1230, 780)  # whole window
            result = a.findPos("verstak.arrow").relative(70, 0)
            a.keyPress(KeyEvent.VK_SHIFT)
            a.sleep(100)
            a.mouseClick(result)
            a.sleep(100)
            a.keyRelease(KeyEvent.VK_SHIFT)
    elif a.find("pech.gui"):
        if a.find("pech.off"):
            a.searchRect(700, 520, 1220, 770)  # inventory
            if a.findClick("coal.block"):
                coalBlockRecentPos = a.recentPos()
                a.searchRect(630, 230, 1230, 780)  # whole window
                a.mouseClick(a.findPos("pech.off"), InputEvent.BUTTON3_MASK)
                a.mouseClick(coalBlockRecentPos)
                result = a.findPos("verstak.arrow").relative(70, 0)
                a.keyPress(KeyEvent.VK_SHIFT)
                a.sleep(100)
                a.mouseClick(result)
                a.sleep(100)
                a.keyRelease(KeyEvent.VK_SHIFT)
        if a.find("pech.empty"):
            a.searchRect(700, 520, 1220, 770)  # inventory
            if a.findClick("gold.ore"):
                a.searchRect(630, 230, 1230, 780)  # whole window
                a.mouseClick(a.findPos("pech.empty"))
    a.sleep(6000)
```

Link to Github

Link to API

UPD: 02/28/2019 Playlist added, links updated

In subsequent versions:

Fragments moved from the .bmp format to .png, with backward compatibility.

Added search for a similar (not exact) match, for example 85%, 90%, 95%...

Added search for fragments with transparency, so fragments can be of any shape.

Added extension scripts in Python that speed up getting started.
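How the later versions implement similar and transparent matching is not described here, so the following is only one possible sketch under stated assumptions. The class `FuzzyMatch` and its methods are hypothetical; similarity is assumed to be the fraction of fragment pixels that are exactly equal to the screenshot pixels, and fragment pixels with an alpha of 0 are assumed to be ignored, which is what lets a fragment have any shape.

```java
final class FuzzyMatch {
    /**
     * Fraction of non-transparent fragment pixels that equal the screenshot
     * pixels at offset (x, y). The caller must keep the fragment inside the
     * screenshot. Pixels are packed ARGB ints.
     */
    static double similarityAt(int[][] big, int[][] small, int x, int y) {
        int compared = 0;
        int equal = 0;
        for (int yy = 0; yy < small.length; yy++) {
            for (int xx = 0; xx < small[0].length; xx++) {
                int pixel = small[yy][xx];
                if ((pixel >>> 24) == 0) {
                    continue; // fully transparent: not part of the shape
                }
                compared++;
                if (big[y + yy][x + xx] == pixel) {
                    equal++;
                }
            }
        }
        // A fragment with no opaque pixels trivially matches everywhere.
        return compared == 0 ? 1.0 : (double) equal / compared;
    }

    /** First offset {x, y} whose similarity reaches the threshold, or null. */
    static int[] find(int[][] big, int[][] small, double threshold) {
        for (int y = 0; y <= big.length - small.length; y++) {
            for (int x = 0; x <= big[0].length - small[0].length; x++) {
                if (similarityAt(big, small, x, y) >= threshold) {
                    return new int[] {x, y};
                }
            }
        }
        return null;
    }
}
```

A call such as `FuzzyMatch.find(screen, icon, 0.90)` would then accept a region where at least 90% of the opaque pixels match. Note that this variant has to compare every pixel at every offset, so it loses the early exit that makes the exact search fast.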