What will Web 3.0 be like: blockchain marketplace for machine learning

- Transfer

How to create the most powerful artificial intelligence? One way is to use machine learning models with data that is distributed through blockchain-based marketplaces. Why is blockchain here? It is with its help that in the future we can expect the appearance of open electronic exchanges where everyone can sell their data without violating confidentiality. And developers - choose and acquire the most useful information for their algorithms. In this post we will talk about the development and prospects of such sites.

Today, the basic elements of such systems are only being formed. Simple initial versions of such solutions inspire hope for success. These trading platforms will provide a transition from the current era of exclusive ownership of Web 2.0 data to Web 3.0 - open competition for data and algorithms with the possibility of direct monetization.

The idea for such a platform came to me in 2015 after a conversation with Richard from the Numerai hedge fund. They held a competition to develop a stock market model and sent encrypted market data to any specialist who wanted to participate in it. As a result, Numerai combines the best models into a “metamodel”, sells it and pays a reward to those professionals whose models work efficiently.

Competition between data processing and analysis specialists seemed like a promising idea. Then I thought: is it possible to create a fully decentralized version of such a system that would be general in nature and could be used to solve any problems? I believe that this question can be answered in the affirmative.

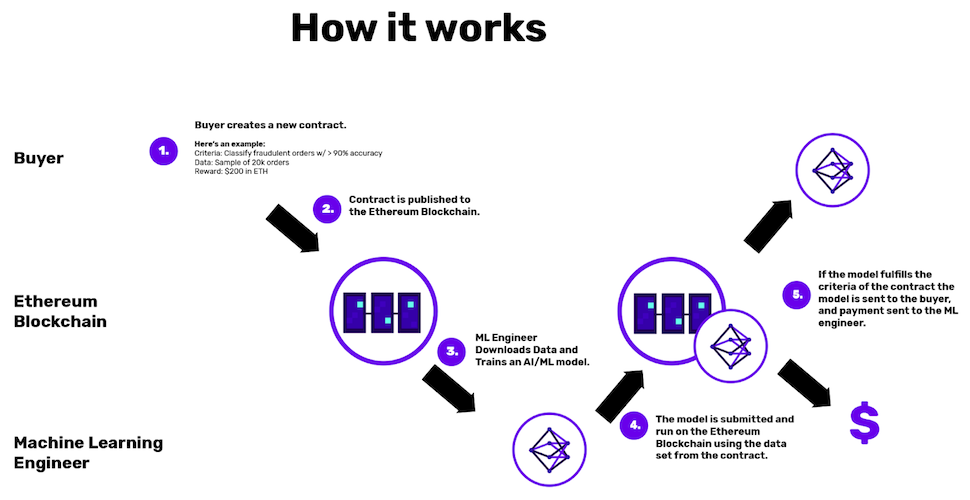

As an example, let's try to create a fully decentralized system for trading cryptocurrencies on decentralized exchanges. Here is one of the possible schemes:

We explain the different levels of its work:

Data . Data providers market their data and share it with model developers.

Models . Model developers select which data to use and create models. Training is carried out using a safe method of calculation, which allows you to train models without revealing the data used. Models are listed on the exchange in the same way as data.

Metamodels . The metamodel is created on the basis of an algorithm that takes into account the exchange price of each model. Creating a metamodel is optional - some models are used without combining it into a metamodel. A smart contract uses a metamodel in electronic trading through decentralized exchange mechanisms (on-chain transactions).

Profit / loss distribution . After some time, the trades give profit or loss, which are divided between the developers of the metamodel, depending on their contribution to its improvement. Models that have had a negative impact on the metamodel lose all of the raised funds in whole or in part. And the data providers for this model also suffer some losses.

Verification of computing . The calculations at each stage are performed in two ways. Or centrally, but with the ability to verify and protest through mechanisms such as Truebit . Or decentralized using a confidential computing protocol.

Hosting . Data and models are hosted either on IPFS, or on nodes in a secure confidential computing system with a large number of participants. On-chain storage in this case will be too expensive.

We list the main advantages of such a system:

In addition to the above, the most important property is confidentiality. The guarantee of confidentiality allows ordinary users to calmly provide any personal data. And also prevent the loss of economic value of both data and models. If you leave the data and models unencrypted in the public domain, they will be copied for free and used by others who do not make any contribution to the common cause (“ free rider effect ”).

A partial solution to the free rider problem is selling data privately. Even if buyers want to resell or disclose the data, it’s not so scary, because the cost of the data will depreciate over time anyway. However, with this approach, data is used exclusively in the short term, and problems with ensuring their confidentiality are not resolved. So the use of secure computing seems to be a more complex, but also more effective approach.

Safe calculation methods allow you to train models without revealing the data itself. Three main types of secure computing are currently used and investigated: homomorphic encryption (HE), confidential computing protocol (MPC), and zero disclosure proof (ZKP). For machine learning using personal data, MPC is most often used today, since HE is usually too slow, and how to apply ZKP for machine learning is still unclear. Methods of safe computing - this is the most relevant topic of modern computer research. Such algorithms, as a rule, require much more time than usual calculations, and become the bottleneck of the system. But in recent years they have been noticeably improved.

To illustrate the potential of machine learning on private data, imagine an application called The Ideal Recommender System. It monitors everything that you do on your devices: it analyzes all visited sites, all actions in applications, viewed pictures on your phone, location data, expense history, information from wearable sensors, text messages, data from cameras in your home and on your future augmented reality glasses. This information will allow the application to give you recommendations: which next website to visit, which article to read, which song to listen to or which product to buy.

This recommendation system will be extremely powerful, more powerful than any of the existing "silos" with data from Google, Facebook or anyone else. All thanks to the most in-depth analysis and the ability to learn using the most sensitive personal data that you would not share with anyone else . As in the previous example with the cryptocurrency trading system, the key to the functioning of the recommendation system is the creation of a market for models focused on different areas (for example, recommendations of websites or music). These models would compete for access to your encrypted data, the ability to recommend - and probably even pay you for using your data or for your attention to recommendations.

A system of distributed learningGoogle and Apple’s differential privacy system can be considered steps in the direction of machine learning using personal data. But these decisions still imply the establishment of trusting relationships , do not allow users to independently monitor their security and store data separately.

Talking about complete systems of this kind is too early. At the moment, few people already have something working, and most go to such systems gradually.

Company Algorithmia Research has developed a fairly simple solution that rewards for a model with an accuracy above a certain threshold determined retrospectively:

Numerai hedge fund gone three steps forward. His system:

Data analysts should use Numeraire as a shell, thereby confirming their own interest and stimulating future performance. And yet, Numerai currently distributes data centrally, so the most important feature of the system is still not implemented.

At the moment, a successful blockchain-based data marketplace has not yet been created. The first attempt to develop such a system, at least in general terms, was The Ocean . Others start by building secure computing networks. As part of the Openmined project, work is underway to create a multi-user computer network for training Unity- based machine learning models, which can work on any device, including game consoles (similar to Folding at Home ). Subsequently, it is planned to expand this system to a protocol of confidential computing. Enigma takes a similar approach .

As a result of these works, it would be great to get metamodels that would provide co-owners - data providers and model developers - with property rights in an amount proportional to their contribution to improving the metamodel. Models would be tokenized and could generate income over time, and those who trained them could even manage them. It would be a kind of swarm intelligence, jointly owned. Of all that I have seen so far, the Openmined project came closest to such a system, according to a video about it.

I will not say that I know which project is better, but I have some thoughts on this.

In relation to the blockchain, I evaluate the system as follows. If you expand it in the continuous spectrum of “physical-digital-blockchain”, then the more from the blockchain, the better. The less blockchain is in it, the more you have to attract trusted parties. So the system becomes more complex, and it is becoming less and less convenient to use as an integral part of other systems.

This means that the system will be more likely to work if its value is quantifiable. Ideally, in monetary terms, and even better in the form of tokens. This will create a fully enclosed system. To evaluate the effectiveness, compare the system above, for example, with the X-ray tumor recognition system. In the latter case, you need to convince the insurance company that the x-rays are of some value, agree on how valuable they are, and then entrust a small group of people with confirmation of the success or failure of the x-ray.

Such a system can be used in a host of other useful scenarios. They can be tied to the curation market - they can work in a closed cycle according to the blockchain model, and tokens of this market can act as a bonus. Now the picture is still not clear, but I assume that over time the number of areas requiring the use of the blockchain will only grow.

Decentralized markets for data and machine learning models could break the data monopoly that modern corporations have. Over the past 20 years, they have been standardizing and trading the main source of value on the Internet: proprietary data networks and the impact they have. But now the creation of value is no longer associated with data, but with algorithms.

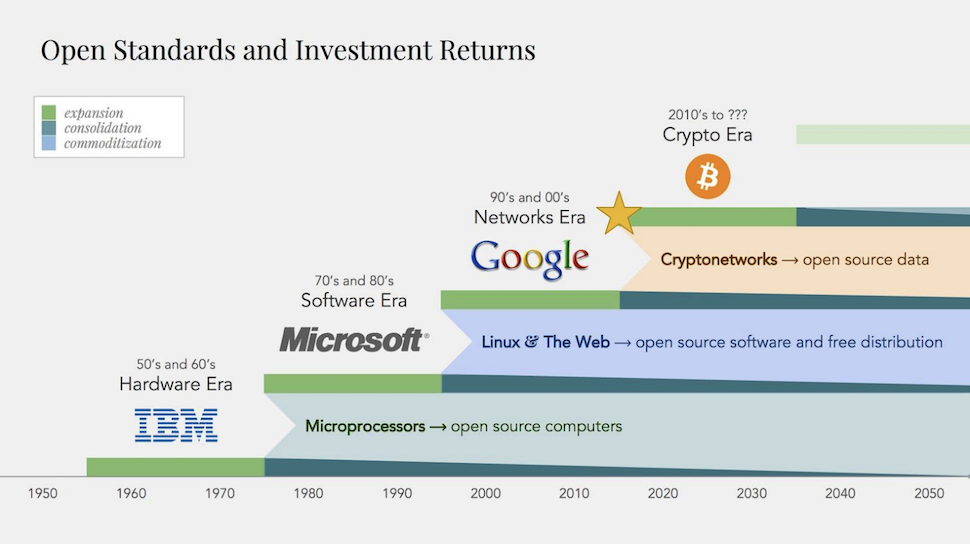

Technology standardization and commercialization cycles. We are nearing the end of the era of networks that monopolize data.

In other words, they create a business model of artificial intelligence based on direct interaction , provide both the provision of data and training models.

The advent of decentralized markets for data and models for machine learning can lead to the creation of the most powerful AI in the world. Due to direct economic incentives, they could receive the most valuable data and models. Their strength is enhanced by multilateral network effects. Web 2.0 monopolies of network data are turning into everyday goods and becoming good material for a new conglomeration. We probably have a few more years to go to this, but we are going in the right direction.

As an example of a recommender system shows, the search process is globally inverted. Now people are looking for goods - and in the future, goods will search for people and compete for them. Each consumer will have personal curation markets, in which recommendation systems will compete for the placement of the most relevant content on their channels. And relevance will be determined by the consumer.

New models will allow us to get the same benefits from the powerful machine learning services that we are used to using the services of Google and Facebook. But without providing personal data.

Finally, machine learning will evolve faster, since any development engineer will be able to access the open data marketplace, not just a small group of engineers in large Web 2.0 companies.

First of all, safe computing methods are currently running slowly, and machine learning already requires a lot of computing power. On the other hand, interest in safe computing methods is beginning to appear, and their productivity is growing. Over the past six months, I have seen several new approaches that significantly improve the performance of HE, MPC, and ZKP.

It is difficult to determine the value of a particular dataset or model for a metamodel.

Cleaning and formatting crowdsourcing data is also not easy. Most likely, a number of tools will be used in combination with each other, and standardization processes will begin in the segment with the active participation of small companies.

Finally, paradoxically, the business model for creating such a generalized system is less obvious than in the case of the private system. The same situation with many new crypto primitives, including curation markets.

The combination of machine learning based on private data with blockchain rewards can lead to the creation of the most productive artificial intelligence systems for various purposes. Now there are big technical problems that over time seem quite solvable. This segment has great potential in the long term, and its formation can weaken the dominant position of large Internet companies in the field of access to data. These systems even raise some concerns: they load themselves, develop independently, consume sensitive data and become almost indestructible, making me wonder if their creation would lead to the appearance of the most powerful Molochin history. In any case, these systems are another example of how cryptocurrencies can, at first, slowly, and then rapidly break into all spheres of economic activity.

Today, the basic elements of such systems are only being formed. Simple initial versions of such solutions inspire hope for success. These trading platforms will provide a transition from the current era of exclusive ownership of Web 2.0 data to Web 3.0 - open competition for data and algorithms with the possibility of direct monetization.

The emergence of ideas

The idea for such a platform came to me in 2015 after a conversation with Richard from the Numerai hedge fund. They held a competition to develop a stock market model and sent encrypted market data to any specialist who wanted to participate in it. As a result, Numerai combines the best models into a “metamodel”, sells it and pays a reward to those professionals whose models work efficiently.

Competition between data processing and analysis specialists seemed like a promising idea. Then I thought: is it possible to create a fully decentralized version of such a system that would be general in nature and could be used to solve any problems? I believe that this question can be answered in the affirmative.

Design

As an example, let's try to create a fully decentralized system for trading cryptocurrencies on decentralized exchanges. Here is one of the possible schemes:

We explain the different levels of its work:

Data . Data providers market their data and share it with model developers.

Models . Model developers select which data to use and create models. Training is carried out using a safe method of calculation, which allows you to train models without revealing the data used. Models are listed on the exchange in the same way as data.

Metamodels . The metamodel is created on the basis of an algorithm that takes into account the exchange price of each model. Creating a metamodel is optional - some models are used without combining it into a metamodel. A smart contract uses a metamodel in electronic trading through decentralized exchange mechanisms (on-chain transactions).

Profit / loss distribution . After some time, the trades give profit or loss, which are divided between the developers of the metamodel, depending on their contribution to its improvement. Models that have had a negative impact on the metamodel lose all of the raised funds in whole or in part. And the data providers for this model also suffer some losses.

Verification of computing . The calculations at each stage are performed in two ways. Or centrally, but with the ability to verify and protest through mechanisms such as Truebit . Or decentralized using a confidential computing protocol.

Hosting . Data and models are hosted either on IPFS, or on nodes in a secure confidential computing system with a large number of participants. On-chain storage in this case will be too expensive.

Why will it be efficient and productive?

We list the main advantages of such a system:

- An incentive to attract the most requested data. Typically, for most machine learning projects, the main limiting factor is the lack of quality data. A properly designed reward structure will allow you to access all the most valuable data in the same way that the emergence of bitcoin with a reward system for participants led to the emergence of the world's most powerful computing network. In addition, it is almost impossible to shut down a system in which data comes from thousands or millions of sources.

- Competition between algorithms. Models and algorithms directly compete with each other in areas where this has not happened before. Imagine a decentralized Facebook network with thousands of competing news feed algorithms.

- Transparency of rewards . Data and model providers see that they are getting a fair price for their products, as all calculations can be verified. This will attract even more data providers.

- Automation. Transactions are conducted in the blockchain environment and the cost is generated directly in tokens. Thus, all interaction becomes automated and closed, not requiring the establishment of trusting relationships.

- Network effect. The participation of users, data providers, and data processing and analysis specialists provides a multifaceted network effect and makes the system self-developing. The better it works, the more capital it attracts. More capital means more potential payouts. This, in turn, attracts more data providers and specialists in their processing, which make the system more perfect and rational. As a result, more investments are attracted, and further in a circle.

System privacy

In addition to the above, the most important property is confidentiality. The guarantee of confidentiality allows ordinary users to calmly provide any personal data. And also prevent the loss of economic value of both data and models. If you leave the data and models unencrypted in the public domain, they will be copied for free and used by others who do not make any contribution to the common cause (“ free rider effect ”).

A partial solution to the free rider problem is selling data privately. Even if buyers want to resell or disclose the data, it’s not so scary, because the cost of the data will depreciate over time anyway. However, with this approach, data is used exclusively in the short term, and problems with ensuring their confidentiality are not resolved. So the use of secure computing seems to be a more complex, but also more effective approach.

Secure Computing

Safe calculation methods allow you to train models without revealing the data itself. Three main types of secure computing are currently used and investigated: homomorphic encryption (HE), confidential computing protocol (MPC), and zero disclosure proof (ZKP). For machine learning using personal data, MPC is most often used today, since HE is usually too slow, and how to apply ZKP for machine learning is still unclear. Methods of safe computing - this is the most relevant topic of modern computer research. Such algorithms, as a rule, require much more time than usual calculations, and become the bottleneck of the system. But in recent years they have been noticeably improved.

“The ideal recommendation system”

To illustrate the potential of machine learning on private data, imagine an application called The Ideal Recommender System. It monitors everything that you do on your devices: it analyzes all visited sites, all actions in applications, viewed pictures on your phone, location data, expense history, information from wearable sensors, text messages, data from cameras in your home and on your future augmented reality glasses. This information will allow the application to give you recommendations: which next website to visit, which article to read, which song to listen to or which product to buy.

This recommendation system will be extremely powerful, more powerful than any of the existing "silos" with data from Google, Facebook or anyone else. All thanks to the most in-depth analysis and the ability to learn using the most sensitive personal data that you would not share with anyone else . As in the previous example with the cryptocurrency trading system, the key to the functioning of the recommendation system is the creation of a market for models focused on different areas (for example, recommendations of websites or music). These models would compete for access to your encrypted data, the ability to recommend - and probably even pay you for using your data or for your attention to recommendations.

A system of distributed learningGoogle and Apple’s differential privacy system can be considered steps in the direction of machine learning using personal data. But these decisions still imply the establishment of trusting relationships , do not allow users to independently monitor their security and store data separately.

Implemented Approaches

Talking about complete systems of this kind is too early. At the moment, few people already have something working, and most go to such systems gradually.

Company Algorithmia Research has developed a fairly simple solution that rewards for a model with an accuracy above a certain threshold determined retrospectively:

Numerai hedge fund gone three steps forward. His system:

- uses encrypted data (although this type of encryption cannot be considered completely homomorphic),

- integrates crowdsourcing models into a metamodel,

- rewards models through Numeraire’s own Ethereum token based on future performance (exchange trading weeks) rather than retrospective testing.

Data analysts should use Numeraire as a shell, thereby confirming their own interest and stimulating future performance. And yet, Numerai currently distributes data centrally, so the most important feature of the system is still not implemented.

At the moment, a successful blockchain-based data marketplace has not yet been created. The first attempt to develop such a system, at least in general terms, was The Ocean . Others start by building secure computing networks. As part of the Openmined project, work is underway to create a multi-user computer network for training Unity- based machine learning models, which can work on any device, including game consoles (similar to Folding at Home ). Subsequently, it is planned to expand this system to a protocol of confidential computing. Enigma takes a similar approach .

As a result of these works, it would be great to get metamodels that would provide co-owners - data providers and model developers - with property rights in an amount proportional to their contribution to improving the metamodel. Models would be tokenized and could generate income over time, and those who trained them could even manage them. It would be a kind of swarm intelligence, jointly owned. Of all that I have seen so far, the Openmined project came closest to such a system, according to a video about it.

What could work faster?

I will not say that I know which project is better, but I have some thoughts on this.

In relation to the blockchain, I evaluate the system as follows. If you expand it in the continuous spectrum of “physical-digital-blockchain”, then the more from the blockchain, the better. The less blockchain is in it, the more you have to attract trusted parties. So the system becomes more complex, and it is becoming less and less convenient to use as an integral part of other systems.

This means that the system will be more likely to work if its value is quantifiable. Ideally, in monetary terms, and even better in the form of tokens. This will create a fully enclosed system. To evaluate the effectiveness, compare the system above, for example, with the X-ray tumor recognition system. In the latter case, you need to convince the insurance company that the x-rays are of some value, agree on how valuable they are, and then entrust a small group of people with confirmation of the success or failure of the x-ray.

Such a system can be used in a host of other useful scenarios. They can be tied to the curation market - they can work in a closed cycle according to the blockchain model, and tokens of this market can act as a bonus. Now the picture is still not clear, but I assume that over time the number of areas requiring the use of the blockchain will only grow.

Market implications

Decentralized markets for data and machine learning models could break the data monopoly that modern corporations have. Over the past 20 years, they have been standardizing and trading the main source of value on the Internet: proprietary data networks and the impact they have. But now the creation of value is no longer associated with data, but with algorithms.

Technology standardization and commercialization cycles. We are nearing the end of the era of networks that monopolize data.

In other words, they create a business model of artificial intelligence based on direct interaction , provide both the provision of data and training models.

The advent of decentralized markets for data and models for machine learning can lead to the creation of the most powerful AI in the world. Due to direct economic incentives, they could receive the most valuable data and models. Their strength is enhanced by multilateral network effects. Web 2.0 monopolies of network data are turning into everyday goods and becoming good material for a new conglomeration. We probably have a few more years to go to this, but we are going in the right direction.

As an example of a recommender system shows, the search process is globally inverted. Now people are looking for goods - and in the future, goods will search for people and compete for them. Each consumer will have personal curation markets, in which recommendation systems will compete for the placement of the most relevant content on their channels. And relevance will be determined by the consumer.

New models will allow us to get the same benefits from the powerful machine learning services that we are used to using the services of Google and Facebook. But without providing personal data.

Finally, machine learning will evolve faster, since any development engineer will be able to access the open data marketplace, not just a small group of engineers in large Web 2.0 companies.

Problems

First of all, safe computing methods are currently running slowly, and machine learning already requires a lot of computing power. On the other hand, interest in safe computing methods is beginning to appear, and their productivity is growing. Over the past six months, I have seen several new approaches that significantly improve the performance of HE, MPC, and ZKP.

It is difficult to determine the value of a particular dataset or model for a metamodel.

Cleaning and formatting crowdsourcing data is also not easy. Most likely, a number of tools will be used in combination with each other, and standardization processes will begin in the segment with the active participation of small companies.

Finally, paradoxically, the business model for creating such a generalized system is less obvious than in the case of the private system. The same situation with many new crypto primitives, including curation markets.

Conclusion

The combination of machine learning based on private data with blockchain rewards can lead to the creation of the most productive artificial intelligence systems for various purposes. Now there are big technical problems that over time seem quite solvable. This segment has great potential in the long term, and its formation can weaken the dominant position of large Internet companies in the field of access to data. These systems even raise some concerns: they load themselves, develop independently, consume sensitive data and become almost indestructible, making me wonder if their creation would lead to the appearance of the most powerful Molochin history. In any case, these systems are another example of how cryptocurrencies can, at first, slowly, and then rapidly break into all spheres of economic activity.