Defeating the Android Camera2 API with RxJava2 (Part 2)

This is the second part of the article in which I show how RxJava2 helps build logic on top of an asynchronous API. As the example API I chose the Android Camera2 API (and did not regret it!). This API is not only asynchronous but also has non-obvious implementation quirks that are not clearly documented anywhere, so the article should be doubly useful to the reader.

Who is this post for? I expect the reader to be an experienced but still inquisitive Android developer. A basic understanding of reactive programming (a good introduction here) and of marble diagrams is highly desirable. The post will be useful to those who want to get into the reactive approach, as well as to those who plan to use the Camera2 API in their projects.

The project sources can be found on GitHub.

Reading the first part is a must!

Problem statement

At the end of the first part, I promised to return to the question of waiting for autofocus/autoexposure to complete.

Let me remind you that the chain of operators looked like this:

```java
Observable.combineLatest(previewObservable, mOnShutterClick, (captureSessionData, o) -> captureSessionData)
        .firstElement().toObservable()
        .flatMap(this::waitForAf)
        .flatMap(this::waitForAe)
        .flatMap(captureSessionData -> captureStillPicture(captureSessionData.session))
        .subscribe(__ -> {}, this::onError);
```

So what do we want from the waitForAf and waitForAe methods? We want them to start the autofocus/autoexposure processes and to notify us when those processes have finished, so that we know the camera is ready for the shot. To achieve this, both methods must return an Observable that emits an event when the camera reports that the convergence process has finished (to avoid repeating “autofocus” and “autoexposure” over and over, from here on I will use the word “convergence”). But how do we start and monitor this process?

The least obvious features of the Camera2 API pipeline

At first I thought it was enough to call capture with the necessary flags and wait for onCaptureCompleted to be called on the CaptureCallback passed in. It seems logical: you submit the request, wait for it to execute, and the request is done. Such code even made it into production.

But then we noticed that on some devices, in very dark conditions, photos came out blurry and dark even when the flash fired. The system camera handled the same conditions perfectly, although it took noticeably longer to prepare for the shot. I began to suspect that in my case autofocus had not finished focusing by the time onCaptureCompleted was called. To test this hypothesis, I added a one-second delay, and the pictures came out fine!

Obviously I could not settle for such a solution, so I started looking for a proper way to tell that autofocus has finished and it is safe to continue. I could not find any documentation on this, so I had to turn to the sources of the system camera, which are available as part of the Android Open Source Project. The code turned out to be extremely hard to read and convoluted; I had to add logging and analyze the camera logs while shooting in the dark. I found that after the capture with the necessary flags, the system camera calls setRepeatingRequest to continue the preview and waits until onCaptureCompleted receives a TotalCaptureResult with a certain set of flags. The right answer may only arrive after several onCaptureCompleted calls!

Once I understood this, the behavior of the Camera2 API began to seem logical. But how much effort it took to find this out! Well, now we can move on to the solution.

So, our plan of action:

- call capture with the flags that start the convergence process;
- call setRepeatingRequest to continue the preview;
- receive the onCaptureCompleted notifications from both calls;
- wait until a result delivered to onCaptureCompleted shows that the convergence process has finished.

Let's go!

Flags

Create a ConvergeWaiter class with the following fields:

```java
private final CaptureRequest.Key<Integer> mRequestTriggerKey;
private final int mRequestTriggerStartValue;
```

These are the key and the value of the flag that starts the required convergence process when passed to capture. For autofocus they are CaptureRequest.CONTROL_AF_TRIGGER and CameraMetadata.CONTROL_AF_TRIGGER_START respectively; for autoexposure, CaptureRequest.CONTROL_AE_PRECAPTURE_TRIGGER and CameraMetadata.CONTROL_AE_PRECAPTURE_TRIGGER_START.

```java
private final CaptureResult.Key<Integer> mResultStateKey;
private final List<Integer> mResultReadyStates;
```

And these are the key and the set of expected values of the flag in the result passed to onCaptureCompleted. When we see one of the expected values, we can consider the convergence process finished. For autofocus, the key is CaptureResult.CONTROL_AF_STATE and the list of values is:

- CaptureResult.CONTROL_AF_STATE_INACTIVE,
- CaptureResult.CONTROL_AF_STATE_PASSIVE_FOCUSED,
- CaptureResult.CONTROL_AF_STATE_FOCUSED_LOCKED,
- CaptureResult.CONTROL_AF_STATE_NOT_FOCUSED_LOCKED;

for autoexposure, the key is CaptureResult.CONTROL_AE_STATE and the list of values is:

- CaptureResult.CONTROL_AE_STATE_INACTIVE,
- CaptureResult.CONTROL_AE_STATE_FLASH_REQUIRED,
- CaptureResult.CONTROL_AE_STATE_CONVERGED,
- CaptureResult.CONTROL_AE_STATE_LOCKED.
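To see which states the two lists leave out, here is a plain-Java sketch that runs off-device. It mirrors the Camera2 AF and AE state names in two enums and keeps the article's ready sets in EnumSets. The enums and the ReadyStates class are hypothetical stand-ins for the int constants in CaptureResult, not Camera2 API. The states missing from the sets, such as ACTIVE_SCAN for AF or PRECAPTURE for AE, are the ones that keep us waiting.

```java
import java.util.Collections;
import java.util.EnumSet;
import java.util.Set;

// Hypothetical stand-ins for the CaptureResult.CONTROL_AF_STATE_* constants
enum AfState { INACTIVE, PASSIVE_SCAN, PASSIVE_FOCUSED, ACTIVE_SCAN, FOCUSED_LOCKED, NOT_FOCUSED_LOCKED, PASSIVE_UNFOCUSED }

// Hypothetical stand-ins for the CaptureResult.CONTROL_AE_STATE_* constants
enum AeState { INACTIVE, SEARCHING, CONVERGED, LOCKED, FLASH_REQUIRED, PRECAPTURE }

final class ReadyStates {
    // AF states the article treats as "convergence finished"
    static final Set<AfState> AF_READY = Collections.unmodifiableSet(EnumSet.of(
            AfState.INACTIVE, AfState.PASSIVE_FOCUSED,
            AfState.FOCUSED_LOCKED, AfState.NOT_FOCUSED_LOCKED));

    // AE states the article treats as "convergence finished"
    static final Set<AeState> AE_READY = Collections.unmodifiableSet(EnumSet.of(
            AeState.INACTIVE, AeState.FLASH_REQUIRED,
            AeState.CONVERGED, AeState.LOCKED));

    private ReadyStates() {}

    static boolean afReady(AfState state) { return AF_READY.contains(state); }

    static boolean aeReady(AeState state) { return AE_READY.contains(state); }
}
```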

Do not ask me how I found this out! Now we can create ConvergeWaiter instances for autofocus and autoexposure. To do this, we write a factory:

```java
static class Factory {
    private static final List<Integer> afReadyStates = Collections.unmodifiableList(
            Arrays.asList(
                    CaptureResult.CONTROL_AF_STATE_INACTIVE,
                    CaptureResult.CONTROL_AF_STATE_PASSIVE_FOCUSED,
                    CaptureResult.CONTROL_AF_STATE_FOCUSED_LOCKED,
                    CaptureResult.CONTROL_AF_STATE_NOT_FOCUSED_LOCKED
            )
    );

    private static final List<Integer> aeReadyStates = Collections.unmodifiableList(
            Arrays.asList(
                    CaptureResult.CONTROL_AE_STATE_INACTIVE,
                    CaptureResult.CONTROL_AE_STATE_FLASH_REQUIRED,
                    CaptureResult.CONTROL_AE_STATE_CONVERGED,
                    CaptureResult.CONTROL_AE_STATE_LOCKED
            )
    );

    static ConvergeWaiter createAutoFocusConvergeWaiter() {
        return new ConvergeWaiter(
                CaptureRequest.CONTROL_AF_TRIGGER,
                CameraMetadata.CONTROL_AF_TRIGGER_START,
                CaptureResult.CONTROL_AF_STATE,
                afReadyStates
        );
    }

    static ConvergeWaiter createAutoExposureConvergeWaiter() {
        return new ConvergeWaiter(
                CaptureRequest.CONTROL_AE_PRECAPTURE_TRIGGER,
                CameraMetadata.CONTROL_AE_PRECAPTURE_TRIGGER_START,
                CaptureResult.CONTROL_AE_STATE,
                aeReadyStates
        );
    }
}
```

capture/setRepeatingRequest

To call capture/setRepeatingRequest we need:

- the previously opened CameraCaptureSession, which is available in CaptureSessionData;
- a CaptureRequest, which we will create using CaptureRequest.Builder.

Create a method:

```java
Single<CaptureSessionData> waitForConverge(@NonNull CaptureSessionData captureResultParams, @NonNull CaptureRequest.Builder builder)
```

As the second parameter we pass a builder configured for the preview, so we can create the preview CaptureRequest right away:

```java
CaptureRequest previewRequest = builder.build();
```

To create the CaptureRequest that starts the convergence procedure, we add to the builder the flag that starts the required convergence process:

```java
builder.set(mRequestTriggerKey, mRequestTriggerStartValue);
CaptureRequest triggerRequest = builder.build();
```
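Building previewRequest before calling set is what keeps the trigger flag out of it. CaptureRequest is documented as immutable, so each build() takes a snapshot of the builder's current settings. The sketch below models that snapshot behavior on a plain JVM. RequestBuilder and BuilderOrderDemo are hypothetical classes, and the string keys and values are illustrative, not Camera2 API.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of CaptureRequest.Builder: build() returns an immutable snapshot
final class RequestBuilder {
    private final Map<String, Integer> settings = new HashMap<>();

    RequestBuilder set(String key, int value) {
        settings.put(key, value);
        return this;
    }

    Map<String, Integer> build() {
        // Copy before wrapping, so later set() calls cannot change this "request"
        return Collections.unmodifiableMap(new HashMap<>(settings));
    }
}

final class BuilderOrderDemo {
    private BuilderOrderDemo() {}

    // Mirrors the order used in waitForConverge: preview request first, then the trigger
    static List<Map<String, Integer>> previewThenTrigger() {
        RequestBuilder builder = new RequestBuilder().set("PREVIEW_SETTING", 1); // illustrative key
        Map<String, Integer> previewRequest = builder.build();
        builder.set("CONTROL_AF_TRIGGER", 1); // illustrative key/value for the trigger flag
        Map<String, Integer> triggerRequest = builder.build();
        return Arrays.asList(previewRequest, triggerRequest);
    }
}
```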

And we will use our methods to get

Observablefrom capture/ methods setRepeatingRequest:Observable triggerObservable = CameraRxWrapper.fromCapture(captureResultParams.session, triggerRequest);

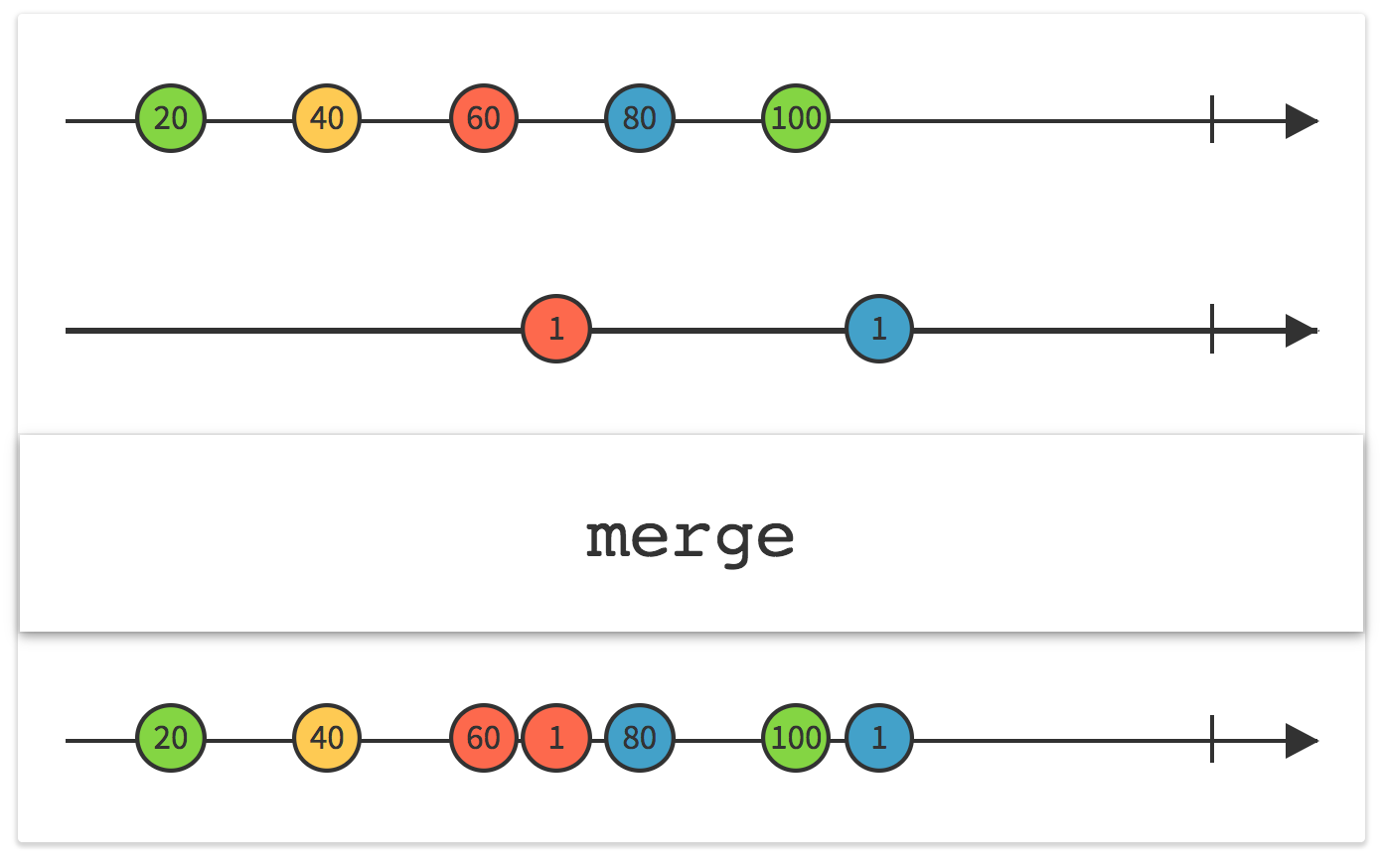

Observable previewObservable = CameraRxWrapper.fromSetRepeatingRequest(captureResultParams.session, previewRequest); Chaining operators

Now, using the merge operator, we can build a reactive stream that contains the events from both Observables:

```java
Observable<CaptureSessionData> convergeObservable = Observable
        .merge(previewObservable, triggerObservable);
```

The resulting convergeObservable emits events carrying the results of the onCaptureCompleted calls. We need to wait until the CaptureResult passed to that callback contains one of the expected flag values. To do this, we write a function that takes a CaptureResult and returns true if it holds an expected value:

```java
private boolean isStateReady(@NonNull CaptureResult result) {
    // The same check serves AF and AE: mResultStateKey decides which state is read
    Integer state = result.get(mResultStateKey);
    return state == null || mResultReadyStates.contains(state);
}
```

The null check is needed for buggy Camera2 API implementations that do not report this state at all, so that we do not end up waiting forever. Now we can use the filter operator to wait for an event for which isStateReady returns true:

```java
.filter(resultParams -> isStateReady(resultParams.result))
```
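The null-tolerant check can be exercised without a device. In this sketch, a Map<String, Integer> stands in for CaptureResult, and a missing key plays the role of result.get returning null. The StateCheck class is hypothetical, and the integer state codes used with it are illustrative only.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical model of ConvergeWaiter's state check; a Map stands in for CaptureResult
final class StateCheck {
    private final String resultStateKey;
    private final Set<Integer> readyStates;

    StateCheck(String resultStateKey, Integer... readyStates) {
        this.resultStateKey = resultStateKey;
        this.readyStates = new HashSet<>(Arrays.asList(readyStates));
    }

    boolean isStateReady(Map<String, Integer> result) {
        Integer state = result.get(resultStateKey);
        // No state reported at all: treat it as ready instead of waiting forever
        return state == null || readyStates.contains(state);
    }
}
```

This approach has a trade-off. On a device that never reports the state, the filter passes the very first result, so the shot goes ahead without waiting for convergence.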

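We are only interested in the first such event, so we add the operator below. Here captureResultParams is the default value that first emits if the stream completes without any matching event:

```java
.first(captureResultParams);
```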

The complete reactive stream looks like this:

```java
Single<CaptureSessionData> convergeSingle = Observable
        .merge(previewObservable, triggerObservable)
        .filter(resultParams -> isStateReady(resultParams.result))
        .first(captureResultParams);
```
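Why merge both sources rather than watch only the capture callback? The following plain-Java simulation is a sketch, not RxJava itself. It orders the deliveries of both requests by a hypothetical arrival time, the way merge interleaves events as they happen. It then keeps only the ready deliveries (filter) and returns the earliest one or a default (first(default)). The Delivery and MergeFilterFirst classes, the timestamps, and the state codes are all illustrative.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.Predicate;

// One onCaptureCompleted delivery: which request it came from, when, and the reported state
final class Delivery {
    final String source;
    final long arrivalMillis;
    final Integer state; // null models a device that does not report the state

    Delivery(String source, long arrivalMillis, Integer state) {
        this.source = source;
        this.arrivalMillis = arrivalMillis;
        this.state = state;
    }
}

final class MergeFilterFirst {
    private MergeFilterFirst() {}

    static Delivery firstReady(List<Delivery> trigger, List<Delivery> preview,
                               Predicate<Integer> isReady, Delivery defaultItem) {
        // merge: a single sequence ordered by arrival time
        List<Delivery> merged = new ArrayList<>(trigger);
        merged.addAll(preview);
        merged.sort(Comparator.comparingLong((Delivery d) -> d.arrivalMillis));
        // filter + first(default): the earliest ready delivery, otherwise the default
        for (Delivery d : merged) {
            if (isReady.test(d.state)) {
                return d;
            }
        }
        return defaultItem;
    }
}
```

In the test scenario, the capture's own result is not yet ready, and the answer only arrives through a later preview result. This matches the behavior observed in the system camera.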

In case the convergence process drags on for too long or something goes wrong, we add a timeout:

```java
private static final int TIMEOUT_SECONDS = 3;

Single<CaptureSessionData> timeOutSingle = Single
        .just(captureResultParams)
        .delay(TIMEOUT_SECONDS, TimeUnit.SECONDS, AndroidSchedulers.mainThread());
```

The delay operator emits the item after the specified delay. By default it does so on the computation scheduler, so we use the last parameter to move it to the main thread. Now we combine convergeSingle and timeOutSingle, and whichever emits first wins. Single.merge returns a Flowable, so firstElement takes its first item and cancels the subscription to both sources, and toSingle turns the result back into a Single:

```java
return Single
        .merge(convergeSingle, timeOutSingle)
        .firstElement()
        .toSingle();
```
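The same "first one wins" race can be expressed outside RxJava. This sketch uses CompletableFuture.completeOnTimeout (Java 9+) as a stand-in for the delayed Single.just. Whichever completes the future first, the convergence signal or the timeout, decides the result, and later completion attempts are ignored. The TimeoutRace class and its race helper are hypothetical. Unlike the article's code, the fallback here is not delivered on the Android main thread.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

final class TimeoutRace {
    private TimeoutRace() {}

    // Completes with fallback after the timeout unless the future was completed earlier;
    // once completed, any later complete() call is a no-op, so only the first one counts
    static <T> CompletableFuture<T> withFallback(CompletableFuture<T> converge, T fallback,
                                                 long timeout, TimeUnit unit) {
        return converge.completeOnTimeout(fallback, timeout, unit);
    }

    // Hypothetical demo: "convergence" is signalled after convergeAfterMs milliseconds
    static String race(long convergeAfterMs, long timeoutMs) {
        CompletableFuture<String> converge = new CompletableFuture<>();
        CompletableFuture.delayedExecutor(convergeAfterMs, TimeUnit.MILLISECONDS)
                .execute(() -> converge.complete("converged"));
        return withFallback(converge, "timeout", timeoutMs, TimeUnit.MILLISECONDS).join();
    }
}
```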

The full code of the method:

```java
@NonNull
Single<CaptureSessionData> waitForConverge(@NonNull CaptureSessionData captureResultParams, @NonNull CaptureRequest.Builder builder) {
    // The builder arrives configured for the preview: build that request first
    CaptureRequest previewRequest = builder.build();
    // Then add the trigger flag and build the request that starts convergence
    builder.set(mRequestTriggerKey, mRequestTriggerStartValue);
    CaptureRequest triggerRequest = builder.build();

    Observable<CaptureSessionData> triggerObservable = CameraRxWrapper.fromCapture(captureResultParams.session, triggerRequest);
    Observable<CaptureSessionData> previewObservable = CameraRxWrapper.fromSetRepeatingRequest(captureResultParams.session, previewRequest);

    // First result, from either request, whose state says convergence is done
    Single<CaptureSessionData> convergeSingle = Observable
            .merge(previewObservable, triggerObservable)
            .filter(resultParams -> isStateReady(resultParams.result))
            .first(captureResultParams);

    // Fallback that fires after TIMEOUT_SECONDS on the main thread
    Single<CaptureSessionData> timeOutSingle = Single
            .just(captureResultParams)
            .delay(TIMEOUT_SECONDS, TimeUnit.SECONDS, AndroidSchedulers.mainThread());

    // Whichever emits first wins
    return Single
            .merge(convergeSingle, timeOutSingle)
            .firstElement()
            .toSingle();
}
```

waitForAf/waitForAe

The bulk of the work is done; all that remains is to create the instances:

```java
private final ConvergeWaiter mAutoFocusConvergeWaiter = ConvergeWaiter.Factory.createAutoFocusConvergeWaiter();
private final ConvergeWaiter mAutoExposureConvergeWaiter = ConvergeWaiter.Factory.createAutoExposureConvergeWaiter();
```

and use them:

```java
@NonNull
private Observable<CaptureSessionData> waitForAf(@NonNull CaptureSessionData captureResultParams) {
    return Observable
            // The preview builder is created only when someone subscribes
            .fromCallable(() -> createPreviewBuilder(captureResultParams.session, mSurface))
            .flatMap(
                    previewBuilder -> mAutoFocusConvergeWaiter
                            .waitForConverge(captureResultParams, previewBuilder)
                            .toObservable()
            );
}

@NonNull
private Observable<CaptureSessionData> waitForAe(@NonNull CaptureSessionData captureResultParams) {
    return Observable
            .fromCallable(() -> createPreviewBuilder(captureResultParams.session, mSurface))
            .flatMap(
                    previewBuilder -> mAutoExposureConvergeWaiter
                            .waitForConverge(captureResultParams, previewBuilder)
                            .toObservable()
            );
}
```
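The difference between fromCallable and just comes down to when the argument is evaluated. Here is a plain-Java analogy using Supplier. LazyVsEager is a hypothetical class whose createPreviewBuilder stand-in only counts its calls. The eager variant runs the factory while the pipeline is being assembled. The lazy variant runs it only when a value is requested, which is what a subscription does for fromCallable.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class LazyVsEager {
    // Counts how many times the stand-in factory ran
    static final AtomicInteger BUILDS = new AtomicInteger();

    private LazyVsEager() {}

    // Hypothetical stand-in for createPreviewBuilder(...): a side effect we want to postpone
    static String createPreviewBuilder() {
        BUILDS.incrementAndGet();
        return "builder";
    }

    // Like just(createPreviewBuilder()): the factory runs right now, at assembly time
    static Supplier<String> eager() {
        String value = createPreviewBuilder();
        return () -> value;
    }

    // Like fromCallable(() -> createPreviewBuilder()): nothing runs until a value is requested
    static Supplier<String> lazy() {
        return LazyVsEager::createPreviewBuilder;
    }
}
```

Just as fromCallable invokes its callable again for every subscription, the lazy supplier here runs the factory on every get().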

The key point here is the use of the fromCallable operator. You may be tempted to use just instead, for example like this: just(createPreviewBuilder(captureResultParams.session, mSurface)). But in that case createPreviewBuilder would be called immediately when waitForAf is called, whereas we want it to be called only when something subscribes to our Observable.

Conclusion

As you know, the most valuable part of any article on Habr is the comments! So please share your thoughts, remarks, knowledge, and links to better implementations in the comments.

The project sources can be found on GitHub. Pull requests are welcome!