DEFCON 21. Passwords alone are not enough, or why disk encryption “breaks down” and how it can be fixed. Part 1

- Translation

Thank you all for coming. Today we will talk about full disk encryption (FDE), which is not as secure as you think. Raise your hands if you encrypt your computer's hard drive this way. Amazing! Well, welcome to DefCon!

It looks like about 90% of you use open-source software to encrypt your disks, so that it can be audited. Now raise your hands if you always shut your computer down completely when you leave it unattended. I'd say about 20% of you. And who leaves their computer unattended for a few hours at a time, whether it is on or off? Consider these questions just a way of making sure you are not zombies and haven't fallen asleep. I think almost everyone has had to leave their computer for at least a few minutes.

So why do we encrypt our computers? Hardly anyone asks this question, which is exactly why I think it is important to spell out the motivation behind specific security measures. If we don't, we won't understand how to approach the work.

There is plenty of documentation for disk encryption software describing what it does, which algorithms and password schemes it uses, and so on, but it almost never says why.

We encrypt our computers because we want to control our data: we want to guarantee its confidentiality and make sure no one can steal or modify it without our knowledge. We want to decide for ourselves what happens to our data.

Sometimes you are simply required to keep data secret, for example if you are a lawyer or a doctor holding confidential client information. The same applies to financial and accounting records. Companies are obliged to notify customers when such information leaks, for example if someone left an unprotected laptop in a car that was stolen and the confidential data may now be freely available on the Internet.

You also need to control physical access to the computer and protect it against physical tampering, because FDE will not help if someone gains physical control of your machine.

If we want to secure the network, we need to control access to the end user's computer. We will not be able to build a secure Internet without securing every end user.

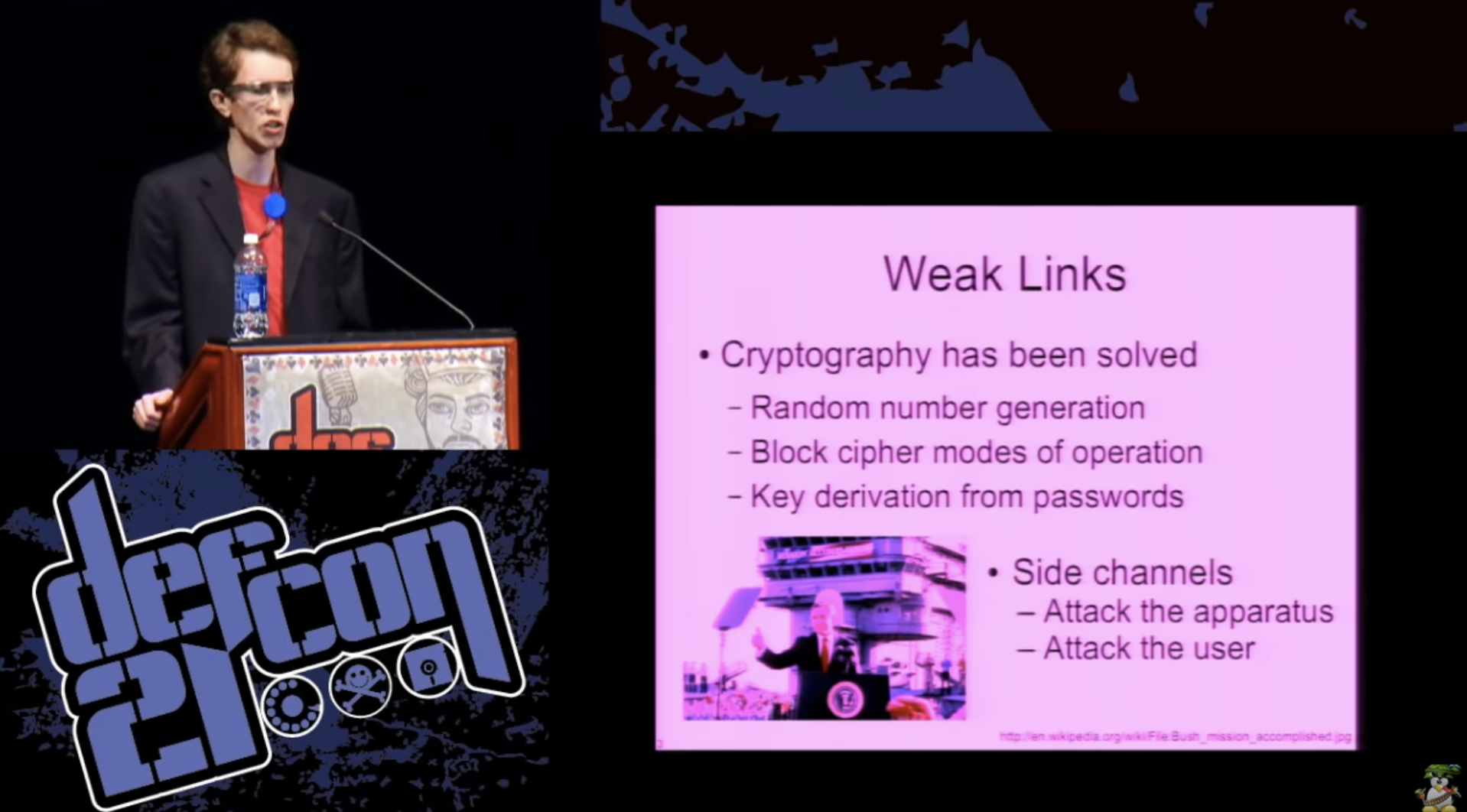

So we have the theory of disk encryption worked out. We know how to generate random numbers for secure keys, which block cipher modes to use for full disk encryption, and how to securely derive a key from a password, so we might declare “mission accomplished”, as President Bush did aboard an aircraft carrier. But you know that is not the case, and we still have a lot of work to do.
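Deriving a key from a password, one of the pieces the talk treats as solved, can be sketched with PBKDF2 from Python's standard library. This is a minimal illustration, not the scheme any particular FDE product uses: the function name `derive_volume_key`, the 16-byte salt and the 600,000-iteration count are assumptions chosen for the example.

```python
import hashlib
import os

def derive_volume_key(password: str, salt: bytes,
                      iterations: int = 600_000, key_len: int = 32) -> bytes:
    """Stretch a passphrase into a fixed-length key with PBKDF2-HMAC-SHA256.

    The high iteration count makes each password guess expensive for an
    attacker who has a copy of the encrypted disk.
    """
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                               salt, iterations, dklen=key_len)

# The salt comes from the OS random number generator and would be stored,
# unencrypted, next to the encrypted volume (hypothetical layout).
salt = os.urandom(16)
key = derive_volume_key("correct horse battery staple", salt)
print(len(key))  # 32
```

The same password and salt always yield the same key, so it can be re-derived at every boot, while a fresh random salt per volume makes precomputed guessing tables useless. As the talk goes on to argue, none of this helps once the attacker targets the machine instead of the math.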

Even if your cryptography is flawless and practically impossible to break, it still has to run on a real computer, which is nothing like an idealized, trustworthy black box. An attacker trying to break full disk encryption does not need to attack the cryptography. He only needs to attack the computer itself or trick the user into revealing the password, for example with a keylogger.

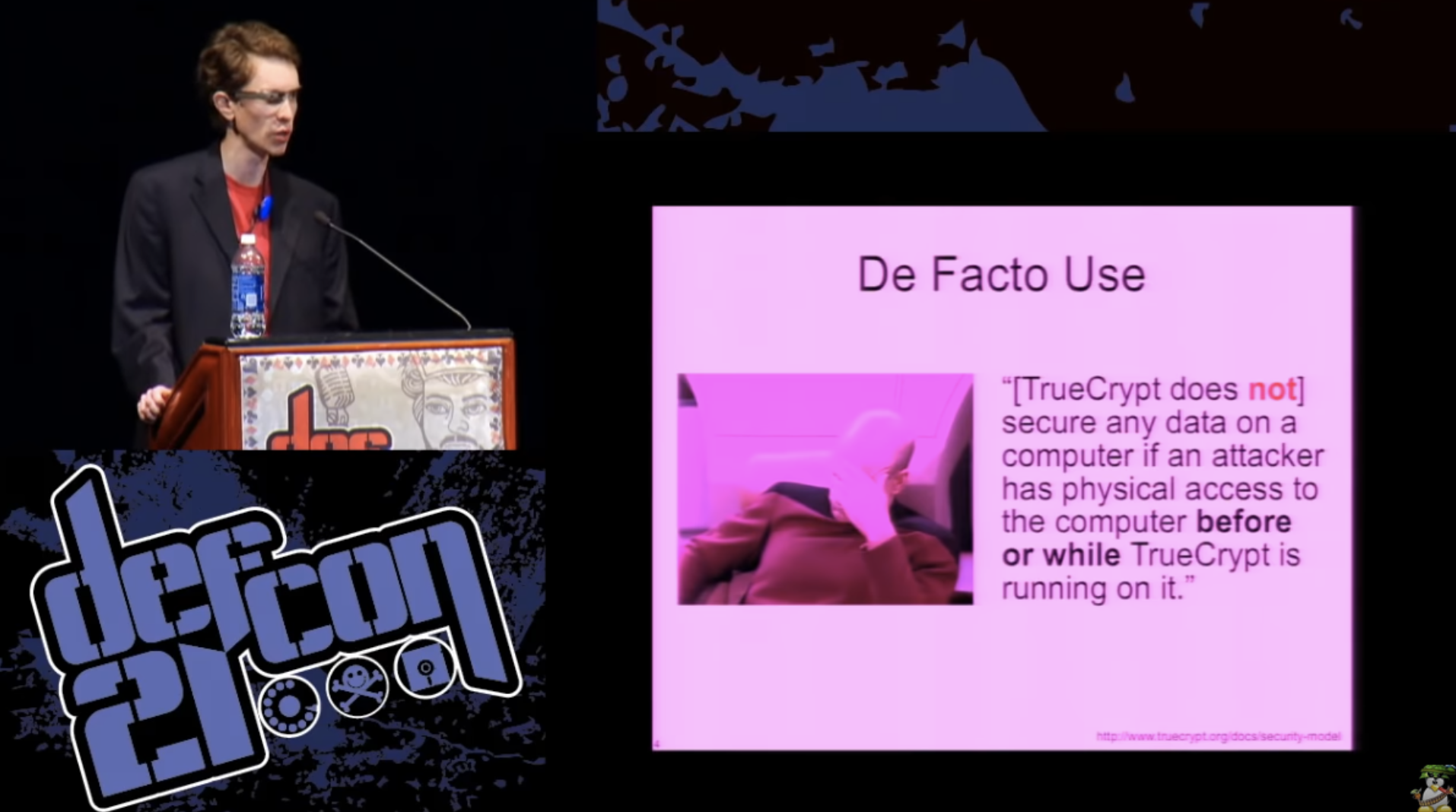

The way encryption is actually used does not match the FDE security model. Looking at full disk encryption software, it is clear that its developers paid a lot of attention to the theoretical side of encryption. Here is an excerpt from the technical documentation on the TrueCrypt website: “Our program will not protect any data on the computer if the attacker has physical access to the computer before it starts or while TrueCrypt is running.”

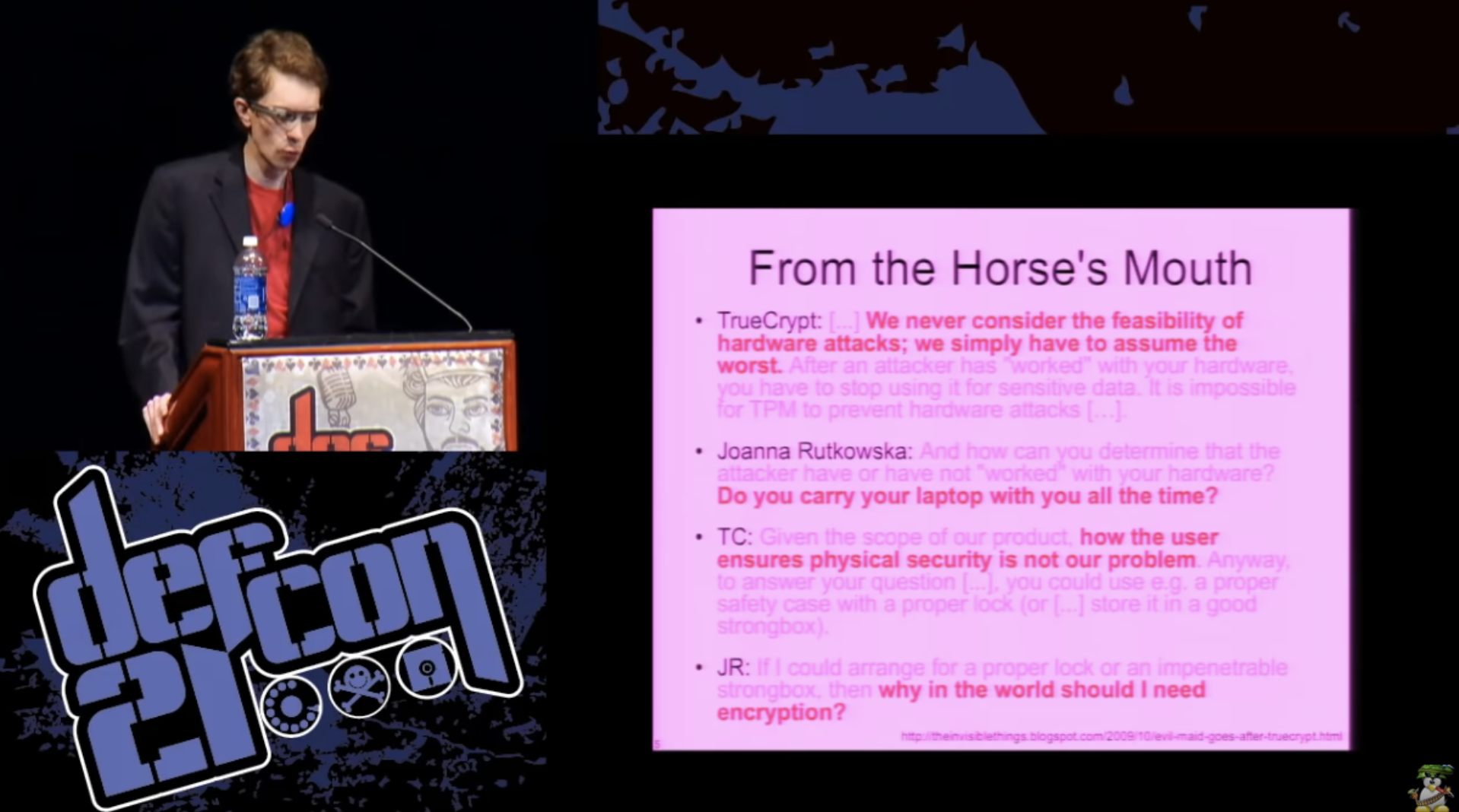

In essence, their entire security model looks like this: “if our program correctly encrypts the disk and correctly decrypts it, we have done our job.” I apologize for the wall of text on the next slide; if it is hard to read, I will read it out for you. These are excerpts from the correspondence between the TrueCrypt developers and security researcher Joanna Rutkowska about evil maid attacks.

TrueCrypt: “We never consider the feasibility of hardware attacks; we simply assume the worst. After an attacker has “worked” on your computer, you just have to stop using it to store confidential information. A TPM crypto processor cannot prevent hardware attacks, for example those using hardware keyloggers.”

Joanna Rutkowska asked them: “How can you tell whether an attacker has “worked” on your computer or not, since you don't carry your laptop with you all the time?” The developers replied: “We don't care how the user keeps their computer safe and secure. For example, the user could use a lock or keep the laptop in a lockable cabinet or safe while away.” Joanna answered them quite rightly: “If I am going to use a lock or a safe, why do I need your encryption at all?”

Ignoring this kind of attack is a cop-out, and we cannot accept it! We live in the real world, where these systems exist and where we interact with them and use them. A 10-minute, software-only attack, for example from a flash drive, simply cannot be lumped together with attacks that require manipulating the hardware itself.

So whatever the vendors say, FDE is fundamentally about physical security and resistance to physical attacks. Whatever you choose to leave out of your security model, if you don't want to take responsibility for it, you should at least be clear and honest about how easily the protection you offer can be broken.

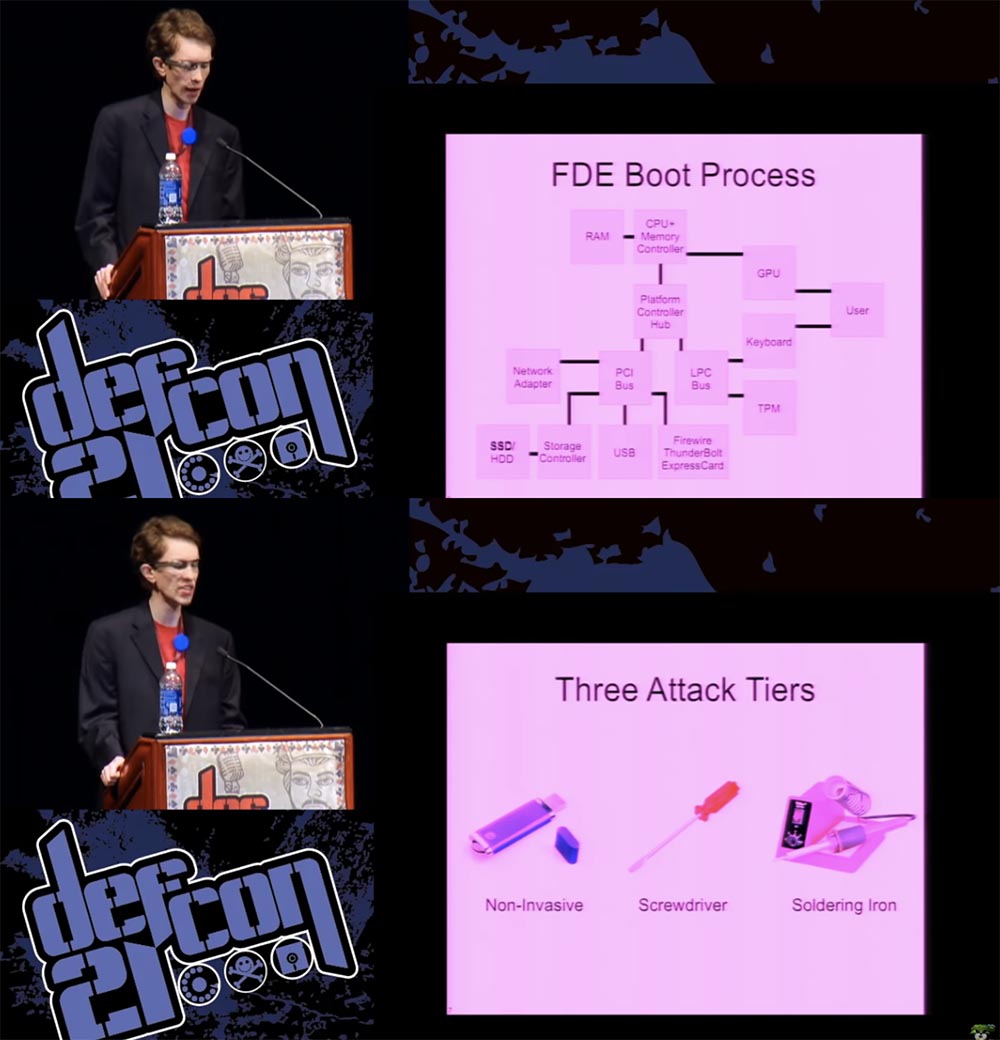

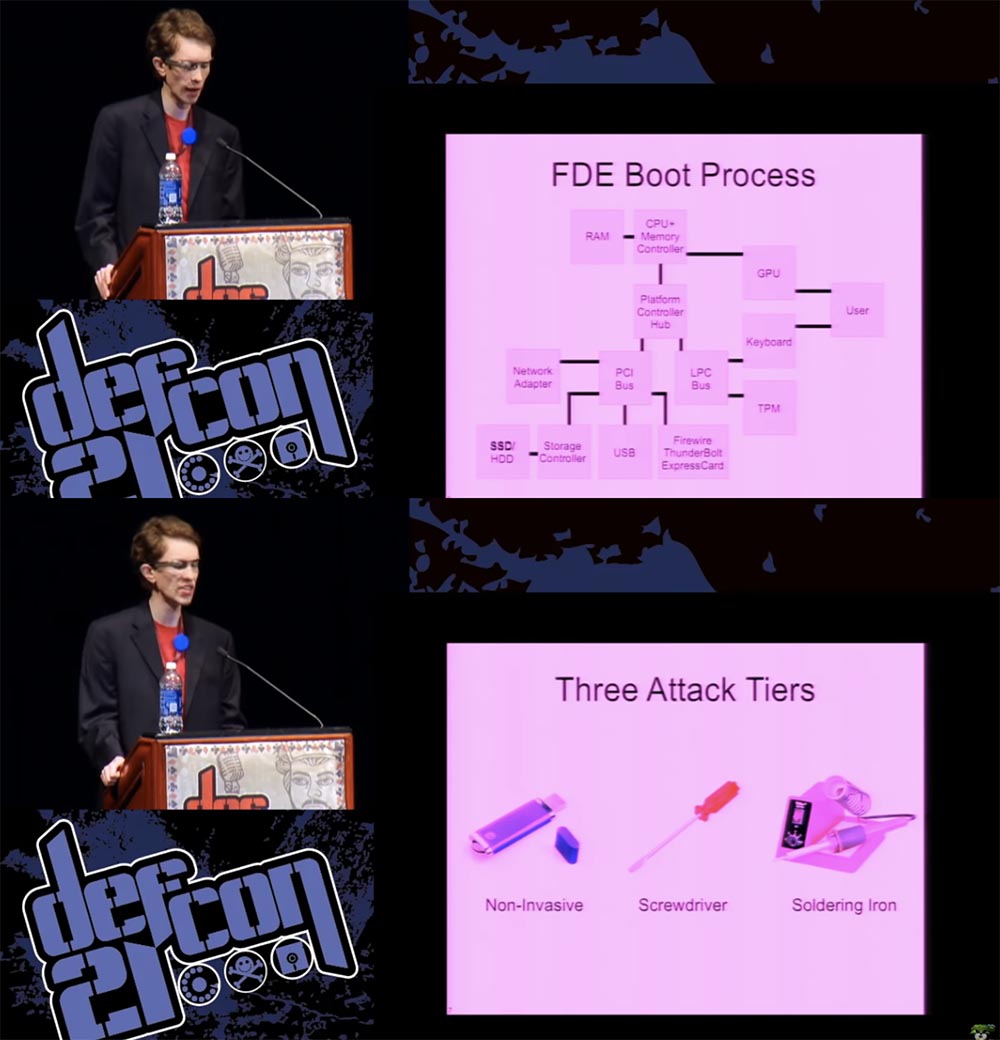

The next slide shows the boot process of an abstract FDE system, as used on most modern computers.

As we know, the BIOS loads the bootloader from the SSD/HDD and copies it into main memory along the data path storage controller → PCI bus → Platform Controller Hub. The bootloader then prompts the user for credentials, such as a password or a smart card key. The password travels from the keyboard to the processor, after which the bootloader hands control to the OS, and both the OS and the key stay in memory so that disk encryption and decryption remain transparent. This is an idealized picture that assumes no one interferes with the process. I think you know perfectly well how to break it, so let's list what can go wrong if someone attacks you. I divide attacks into three levels.

The first level is non-invasive: it does not require taking your computer apart and can be carried out, for example, with a flash drive containing malware. You also don't need to open up the system if you can simply plug in a hardware component such as a PCI card, an ExpressCard or a Thunderbolt device; Thunderbolt, the newest interface on Apple computers, gives open access to the PCI bus.

A second-level attack requires a screwdriver, because you may need to temporarily remove a component from the system and work on it in your own controlled environment. The third level, the “soldering iron attack”, is the most difficult: here you physically add or modify system components, such as chips, to try to break them.

One type of first-level attack is a compromised bootloader, also known as the evil maid attack. Since some unencrypted code has to run as part of the boot process, an attacker can replace it with his own code that captures the user's credentials and so gains access to the rest of the encrypted data on the hard drive. There are several ways to do this. You can physically modify the bootloader on the storage device. You can compromise the BIOS, or flash a malicious one, that hooks the keyboard handler or the disk-read routines so that the attack survives even if the hard disk is removed. Either way, you can modify the system so that when the user enters his password, it ends up in the attacker's hands.

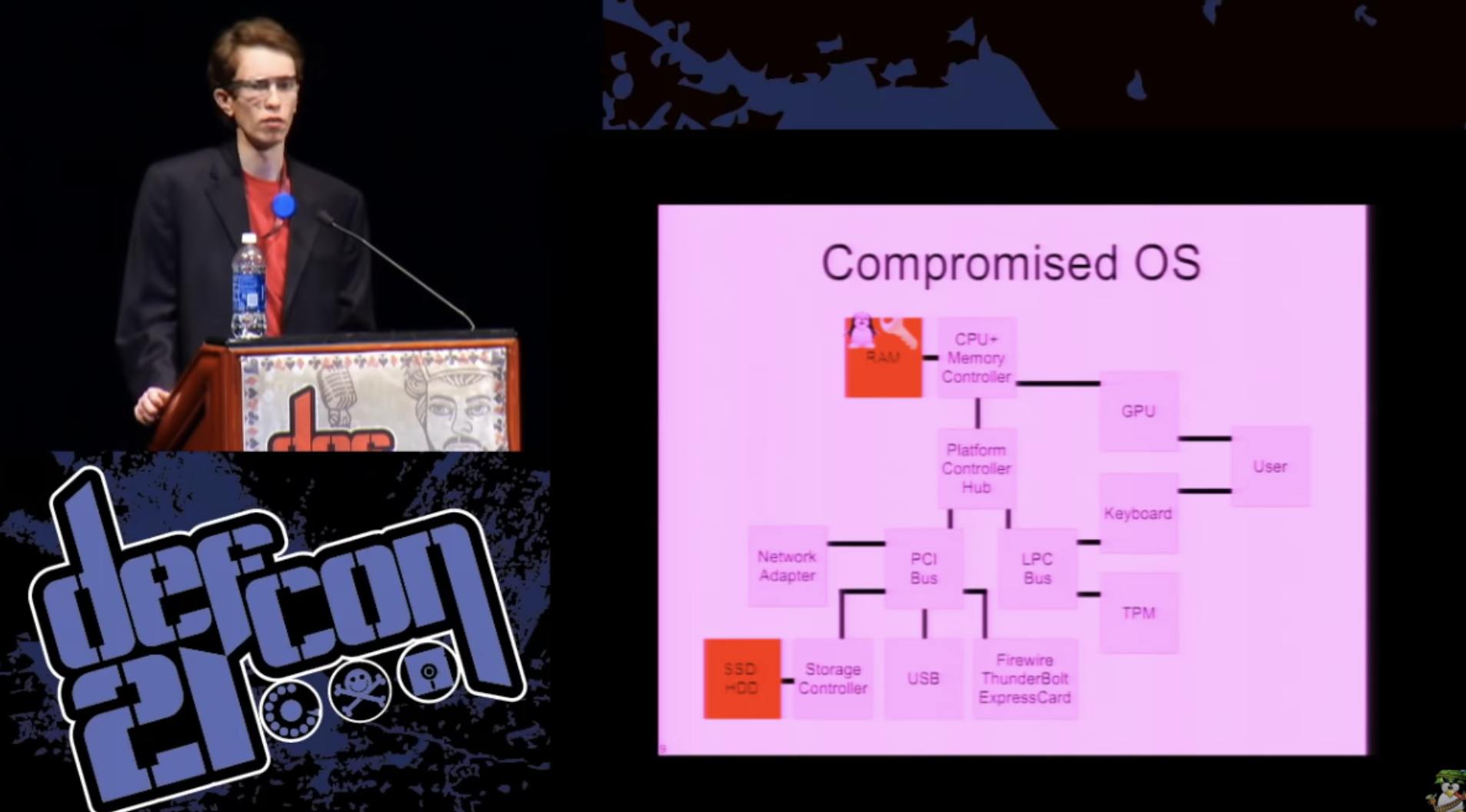

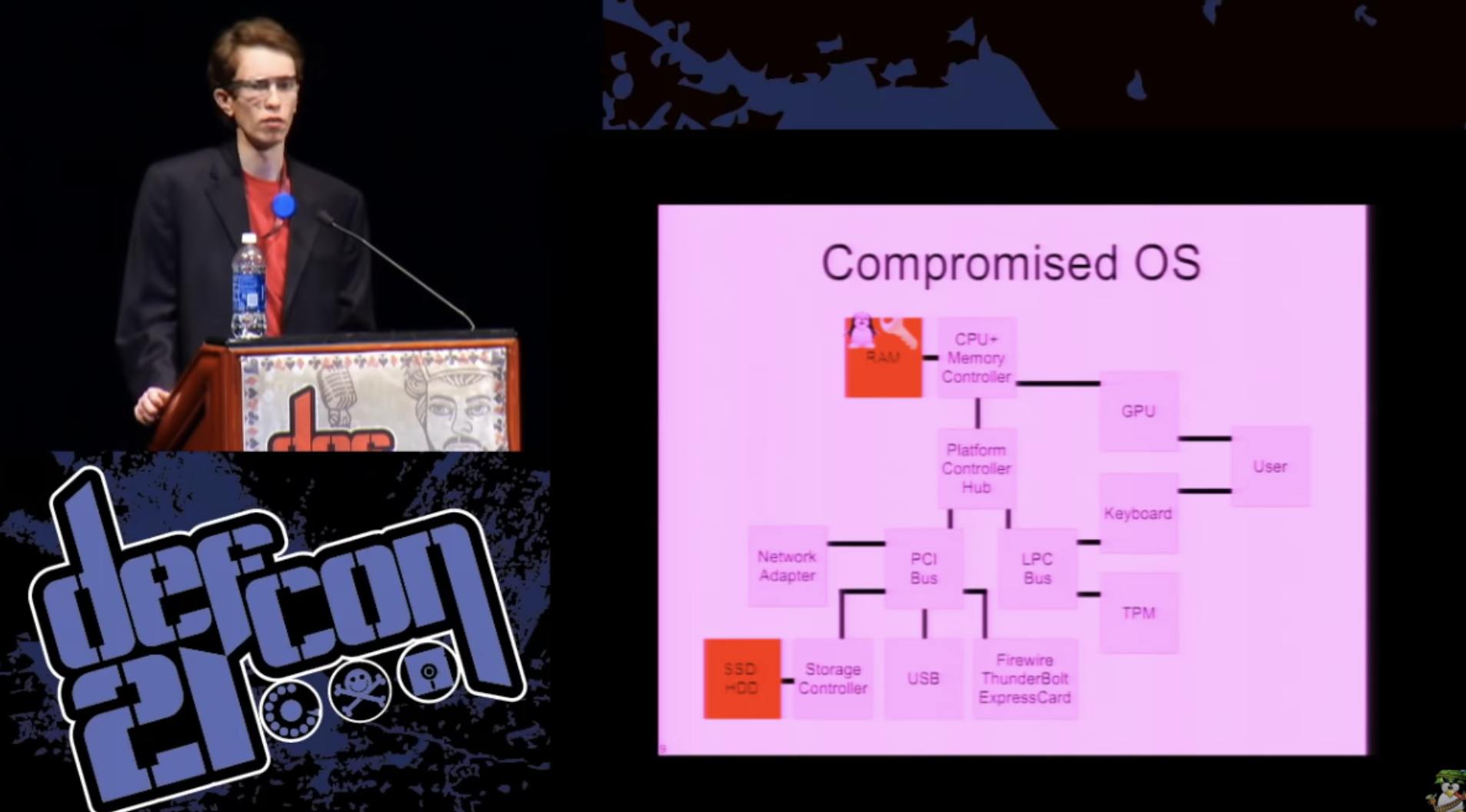

You can do something similar at the operating system level. This is especially relevant if you use container encryption rather than full disk encryption.

It can also happen when the system is attacked with an exploit that gives the attacker root privileges and lets him read the key from main memory; this is a very common attack. The key can then be saved in plaintext on the hard disk for later retrieval or sent over the network to a command-and-control (C&C) server.

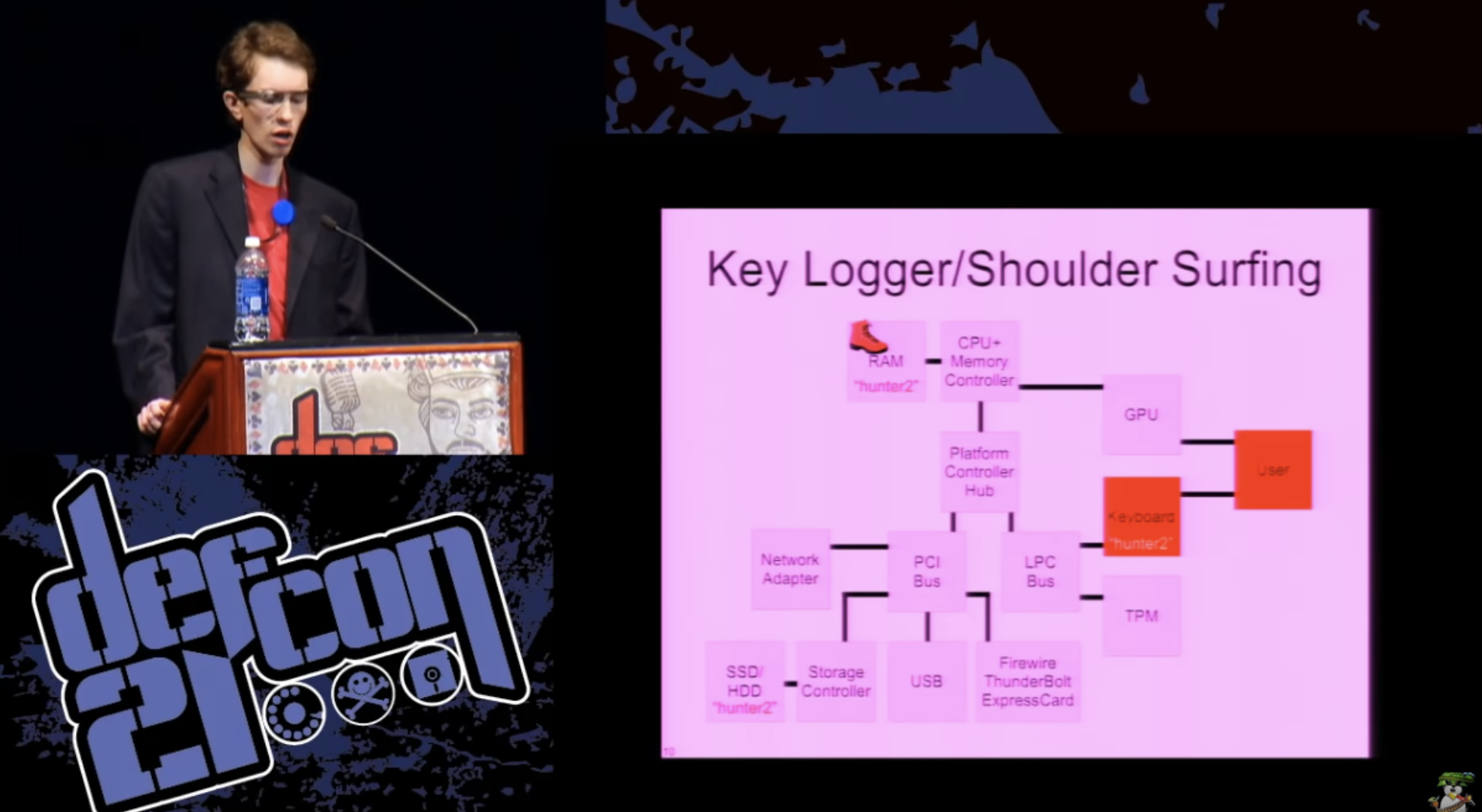

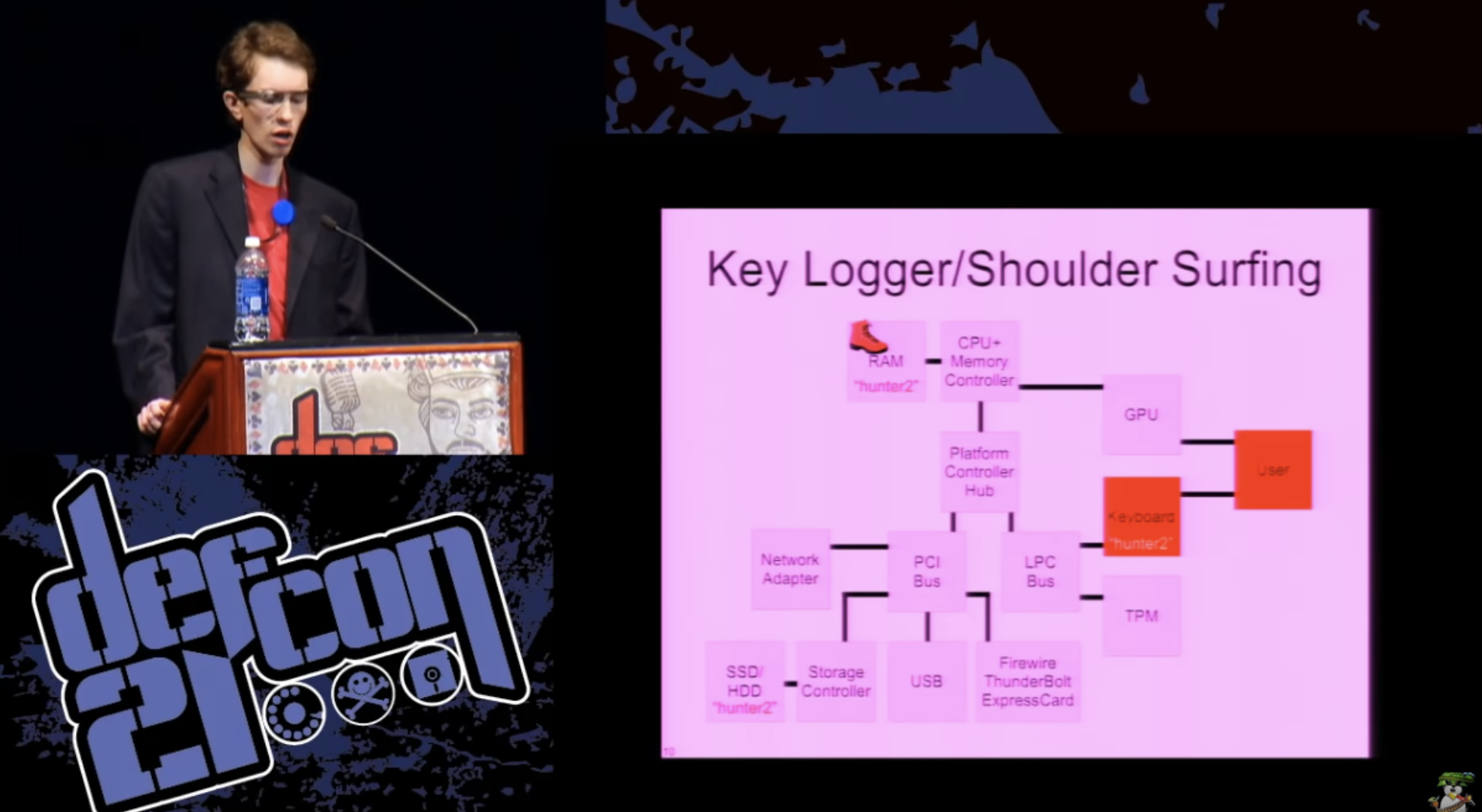

Another possibility is intercepting keystrokes with a keylogger, whether software, hardware or something exotic such as a pinhole camera or a microphone that records the sound of keystrokes so the attacker can work out which keys were pressed. Such attacks are hard to prevent because they may involve components outside the system.

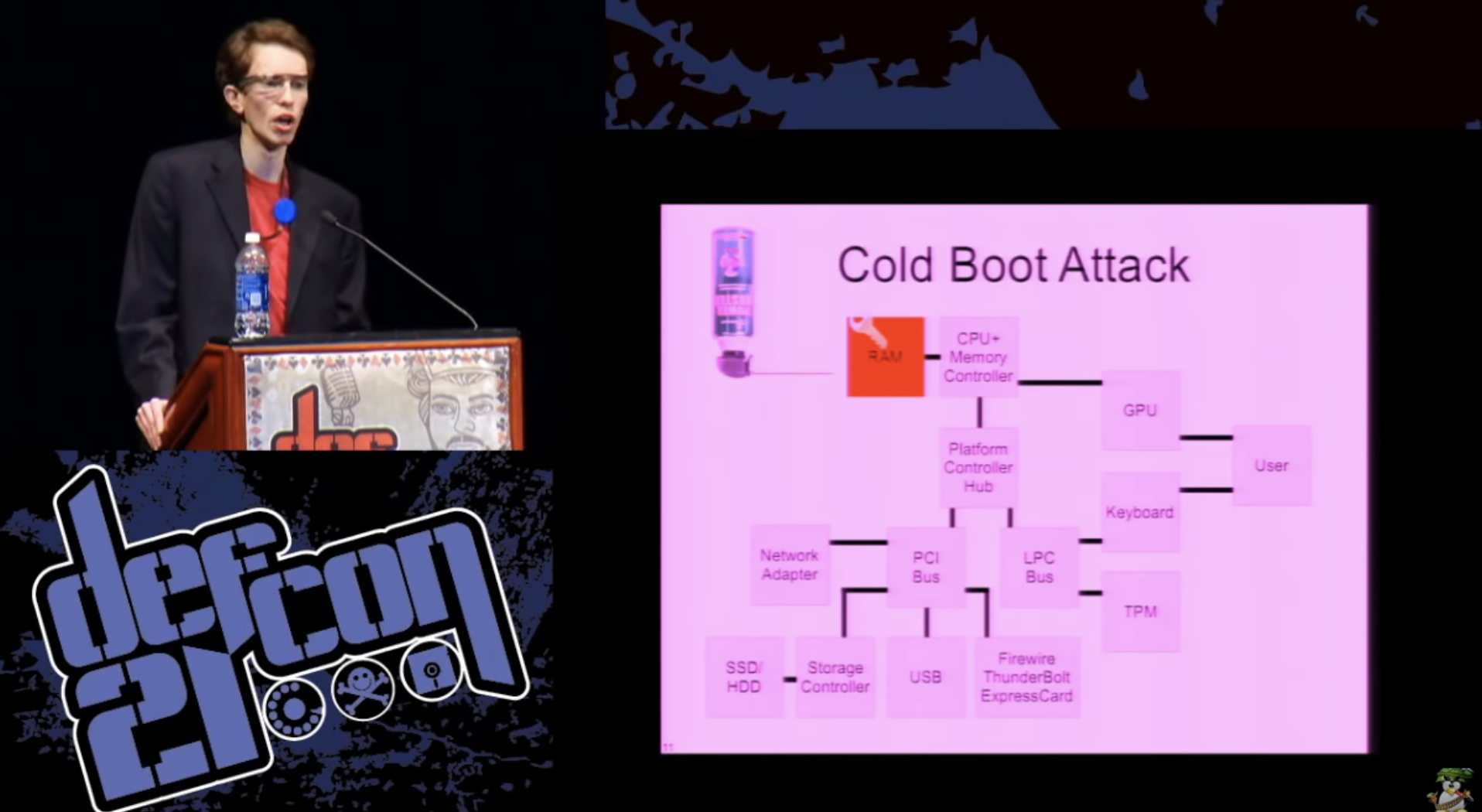

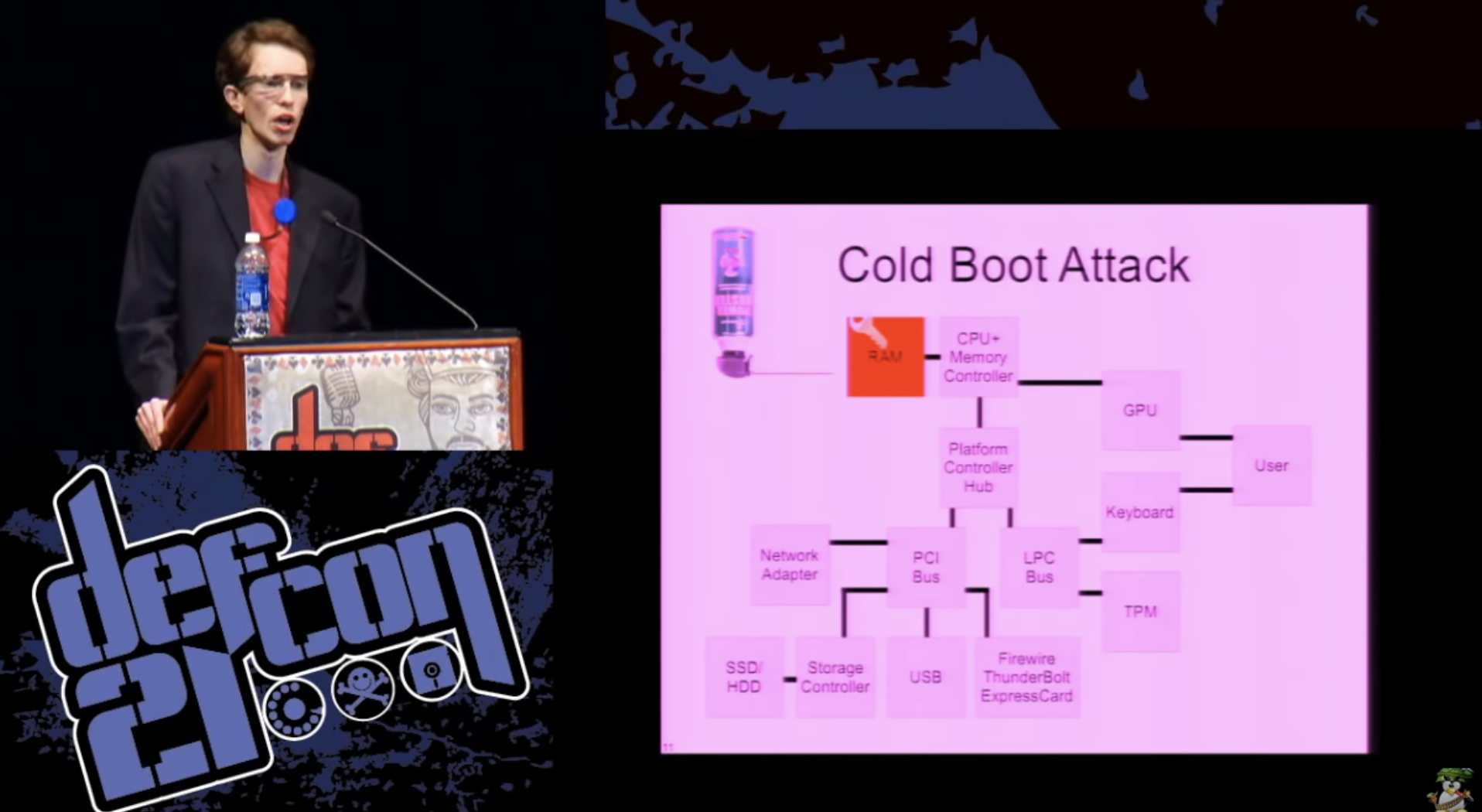

I would also like to mention data remanence attacks, better known as cold boot attacks. If you had asked even very computer-savvy people five years ago about the security properties of main memory, they would have said that data disappears very quickly once the power is cut.

But in 2008, researchers at Princeton published an excellent study showing that, even at room temperature, RAM loses very little data over the first few seconds. And if you cool the module to cryogenic temperatures, you get several minutes with only slight degradation of the data in main memory.

So if your key is in main memory and someone removes the modules from your computer, they can recover the key by locating it in the memory image, where it sits in the clear. There are hardware countermeasures, for example forcing memory to be cleared at power-off or reboot, but they do not help if someone simply pulls out the module and puts it into another computer or a dedicated device to read its contents.
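How would someone find the key in a memory image? The 2008 cold boot work relied on the fact that software AES usually keeps the expanded key schedule in memory, and that schedule is highly redundant: the first 16 bytes determine the next 160. The sketch below scans an image for 16-byte windows whose AES-128 expansion matches the bytes that follow, tolerating a few flipped bits. It is a simplified illustration under stated assumptions (AES-128 only, schedule stored contiguously in FIPS-197 order, key bytes themselves undecayed); the function names are hypothetical, and the researchers' published tools are considerably more sophisticated.

```python
import os

def _gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo the AES polynomial x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result

def _build_sbox() -> list[int]:
    """AES S-box: multiplicative inverse in GF(2^8) followed by the affine transform."""
    sbox = []
    for x in range(256):
        inv = 0 if x == 0 else next(y for y in range(1, 256) if _gf_mul(x, y) == 1)
        s = inv
        for shift in range(1, 5):
            s ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
        sbox.append(s ^ 0x63)
    return sbox

SBOX = _build_sbox()
RCON = [0x01, 0x02, 0x04, 0x08, 0x10, 0x20, 0x40, 0x80, 0x1B, 0x36]

def expand_key_128(key: bytes) -> bytes:
    """FIPS-197 key expansion: 16-byte key -> 176-byte schedule (11 round keys)."""
    words = [list(key[i:i + 4]) for i in range(0, 16, 4)]
    for i in range(4, 44):
        temp = list(words[i - 1])
        if i % 4 == 0:
            temp = temp[1:] + temp[:1]          # RotWord
            temp = [SBOX[b] for b in temp]      # SubWord
            temp[0] ^= RCON[i // 4 - 1]         # round constant
        words.append([a ^ b for a, b in zip(words[i - 4], temp)])
    return bytes(b for word in words for b in word)

def find_aes128_keys(image: bytes, max_bit_errors: int = 0) -> list[tuple[int, bytes]]:
    """Return (offset, key) for each window whose schedule matches the next 160 bytes."""
    hits = []
    for off in range(len(image) - 176 + 1):
        candidate = image[off:off + 16]
        expected = expand_key_128(candidate)[16:]
        observed = image[off + 16:off + 176]
        errors = sum(bin(a ^ b).count("1") for a, b in zip(expected, observed))
        if errors <= max_bit_errors:
            hits.append((off, candidate))
    return hits

# Demo: plant a schedule in random "RAM", flip one bit to mimic decay, then search.
key = bytes.fromhex("2b7e151628aed2a6abf7158809cf4f3c")   # FIPS-197 example key
image = bytearray(os.urandom(1024))
image[300:476] = expand_key_128(key)
image[350] ^= 0x01
print(find_aes128_keys(bytes(image), max_bit_errors=4))
```

The redundancy that makes AES fast to use is exactly what makes its schedule easy to recognize, and also what lets a search tolerate decayed bits.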

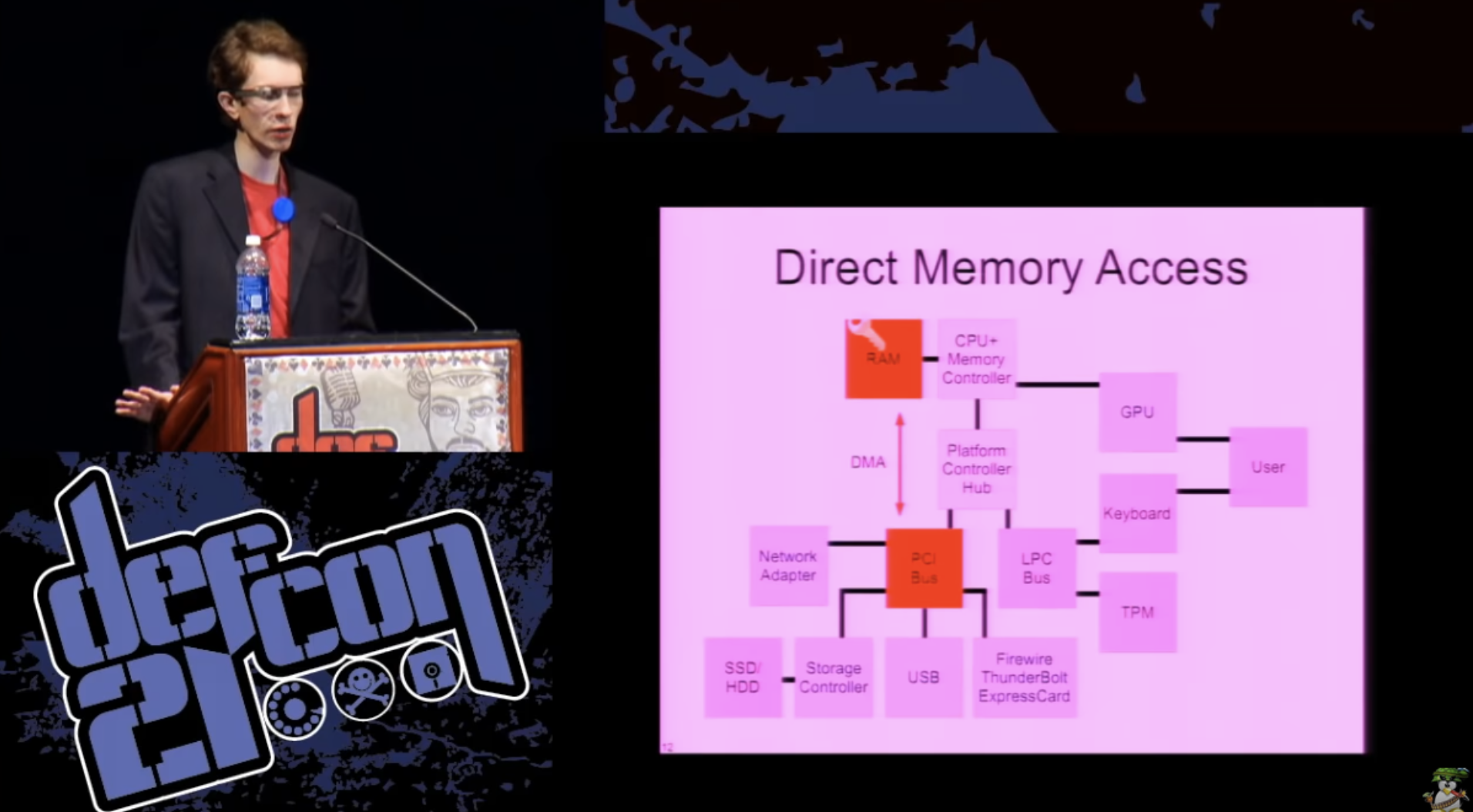

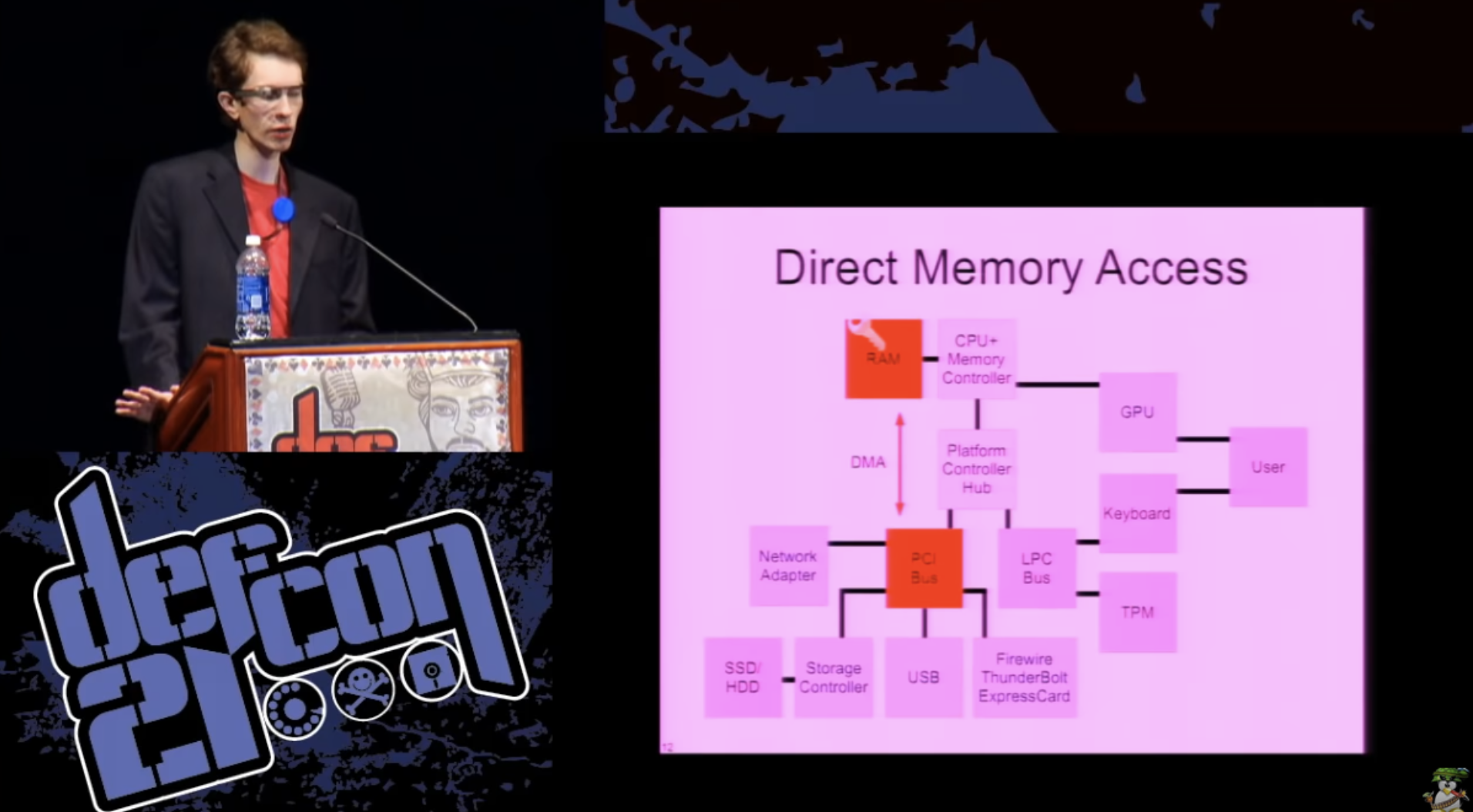

Finally, there is direct memory access (DMA). Any PCI device in your computer can normally read and write any location in main memory. They can do anything.

This was designed back when computers were much slower and we didn't want the CPU to babysit every data transfer between a device and main memory. So devices got direct memory access: the processor issues commands, the devices carry them out on their own, and the data ends up in memory when you need it.

This is a problem because PCI devices can be reprogrammed. Many of them have writable firmware that you can simply reflash with something hostile. That can compromise the security of the entire operating system, since it enables any form of attack, including modifying the OS itself or extracting the key directly. In computer forensics there is equipment built for exactly this purpose during criminal investigations: investigators connect something to your computer and pull out the contents of memory. You can do this via FireWire, ExpressCard or Thunderbolt; all of these are external ports that give access to the internal system bus.

So it would be nice not to store the key in RAM at all, since we have shown that RAM is not very trustworthy from a security standpoint. Is there dedicated key storage or special cryptographic hardware? Yes. There are cryptographic accelerators that let a web server handle more SSL transactions per second, and they are tamper-resistant. Certificate authorities use devices that keep their keys completely secret, but these are not designed for high-throughput workloads like disk encryption. So are there other options?

Can we use the processor as a kind of pseudo-hardware crypto module? Can we compute a symmetric block cipher such as AES on the CPU, using only CPU registers instead of RAM?

Intel and AMD have added great new instructions (AES-NI) that do the work of AES, so you can now perform the basic block cipher operations with a single assembly instruction each. The question is whether we can avoid keeping our key in memory, that is, perform the whole process without relying on main memory. Modern x86 processors have a fairly large set of registers; if you add up all their bits, you get about 4 kilobytes. So we can actually use part of the CPU to store keys and provide working space for encryption operations.

One option is the hardware debug registers. A typical Intel processor has four breakpoint address registers, and on an x64 system each holds a 64-bit pointer. That is 256 bits of potential key storage that most people will never use. The advantage of debug registers is that they are privileged: only the operating system can access them. There are other nice properties too: when the processor loses power, at shutdown or on entering sleep mode, the register contents really are lost, so you don't have to fear a cold boot attack.
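The arithmetic is easy to check: four 64-bit registers give 4 × 64 = 256 bits, exactly one AES-256 key or two AES-128 keys. The sketch below only models how a key would be split across DR0 to DR3 and reassembled; actually writing those registers requires privileged `mov` instructions in kernel code, which Python cannot express, so `split_into_registers` and `join_registers` are hypothetical helpers, not TRESOR's code.

```python
import secrets

NUM_DEBUG_REGS = 4   # DR0-DR3, the breakpoint-address registers on x86
REG_BITS = 64        # register width in 64-bit mode
CAPACITY_BYTES = NUM_DEBUG_REGS * REG_BITS // 8   # 32 bytes = 256 bits

def split_into_registers(key: bytes) -> list[int]:
    """Model: pack a key into one 64-bit integer per debug register (little-endian)."""
    if len(key) > CAPACITY_BYTES:
        raise ValueError(f"{len(key) * 8}-bit key does not fit in {CAPACITY_BYTES * 8} bits")
    padded = key.ljust(CAPACITY_BYTES, b"\x00")
    return [int.from_bytes(padded[i:i + 8], "little") for i in range(0, CAPACITY_BYTES, 8)]

def join_registers(regs: list[int], key_len: int) -> bytes:
    """Model: read the registers back and strip the zero padding."""
    raw = b"".join(r.to_bytes(8, "little") for r in regs)
    return raw[:key_len]

key = secrets.token_bytes(32)          # an AES-256 key fills all four registers
regs = split_into_registers(key)
assert join_registers(regs, len(key)) == key
print(len(regs), "registers,", CAPACITY_BYTES * 8, "bits")   # 4 registers, 256 bits
```

The price of this trick is that, while the registers hold the key, they cannot be used for their intended purpose of setting hardware breakpoints.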

A German researcher, Tilo Muller, implemented exactly this for Linux in 2011 under the name TRESOR. He measured its performance and concluded that it runs no slower than ordinary software AES.

How about storing two 128-bit keys instead of one? That gives us more room in our crypto module. We can keep one master key that never leaves the processor once it is loaded, and then load and unload whichever other keys we need for additional operations and tasks.
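One way to picture the “master key plus swappable keys” idea is a two-slot store: 128 bits hold a master key for the whole session, and the other 128 bits hold whichever working key is needed at the moment. The talk does not say how working keys are produced; this sketch assumes they are derived from the master key with HKDF (RFC 5869, HMAC-SHA256), which is a swapped-in technique, and wrapping independently generated keys under the master key would be another option. `RegisterKeyStore` and the purpose labels are hypothetical, and in Python everything still lives in RAM; only the slot discipline is modeled.

```python
import hashlib
import hmac
import secrets

def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """HKDF-Extract (RFC 5869): an empty salt means HashLen zero bytes."""
    return hmac.new(salt or bytes(32), ikm, hashlib.sha256).digest()

def hkdf_expand(prk: bytes, info: bytes, length: int = 16) -> bytes:
    """HKDF-Expand (RFC 5869) with HMAC-SHA256."""
    out, block, counter = b"", b"", 1
    while len(out) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        out += block
        counter += 1
    return out[:length]

class RegisterKeyStore:
    """Toy model of 256 bits of register space: a 128-bit master slot plus one working slot."""

    def __init__(self, master_key: bytes):
        if len(master_key) != 16:
            raise ValueError("master key must be 128 bits")
        self._master = master_key   # loaded once at boot, never written out
        self.working = None         # the single swappable 128-bit slot

    def load(self, purpose: bytes) -> bytes:
        """Replace the working slot with the key for `purpose`, derived from the master key."""
        prk = hkdf_extract(b"", self._master)   # recomputed each time, not kept in a slot
        self.working = hkdf_expand(prk, purpose, 16)
        return self.working

    def unload(self) -> None:
        self.working = None

store = RegisterKeyStore(secrets.token_bytes(16))
disk_key = store.load(b"disk")
swap_key = store.load(b"swap")            # evicts the disk key from the working slot
assert disk_key != swap_key
assert store.load(b"disk") == disk_key    # same purpose, same key: nothing else to store
store.unload()
```

Deriving rather than storing working keys means the only long-lived secret is the master key, which is the property the talk wants from the register-resident slot.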

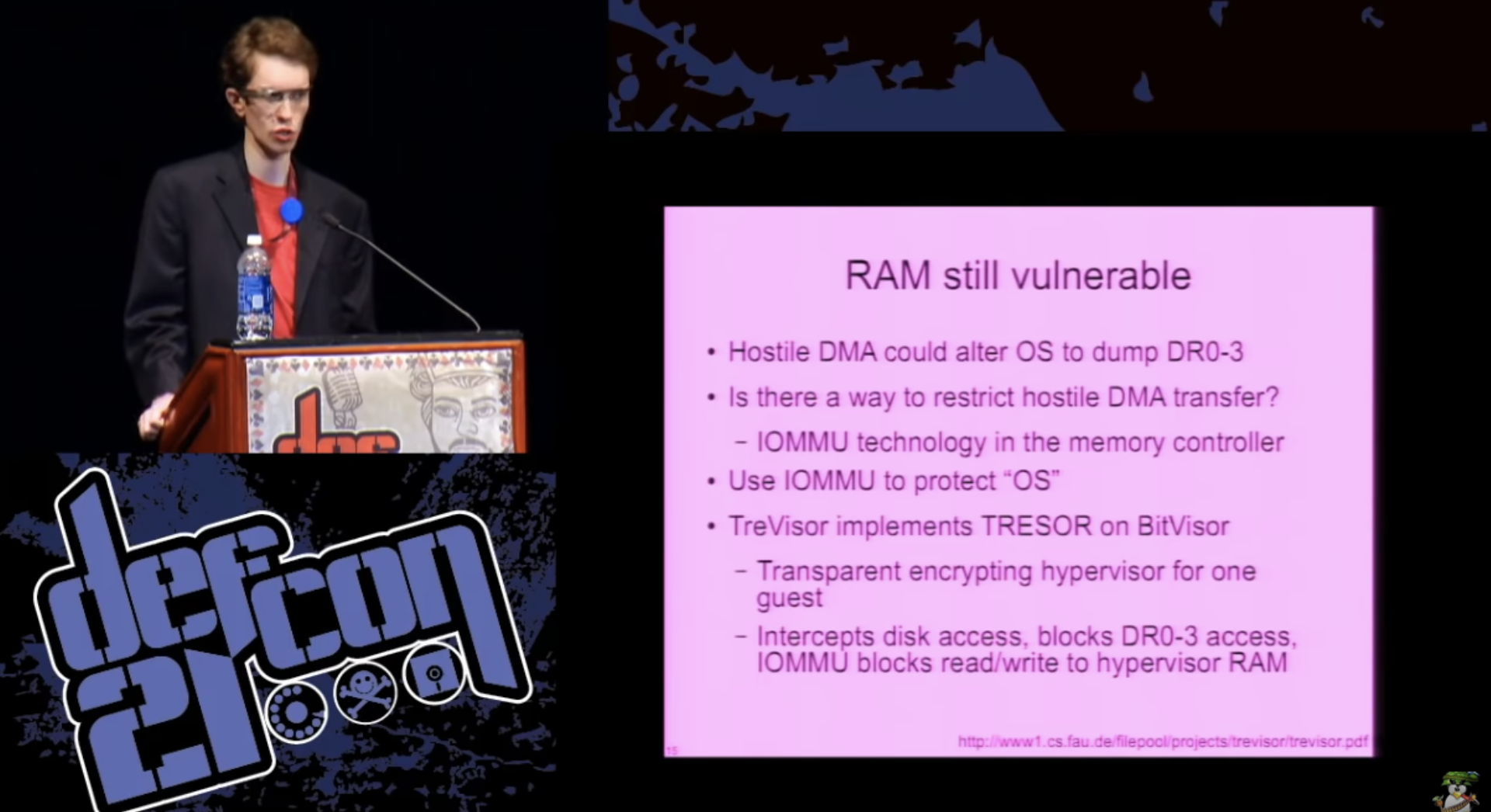

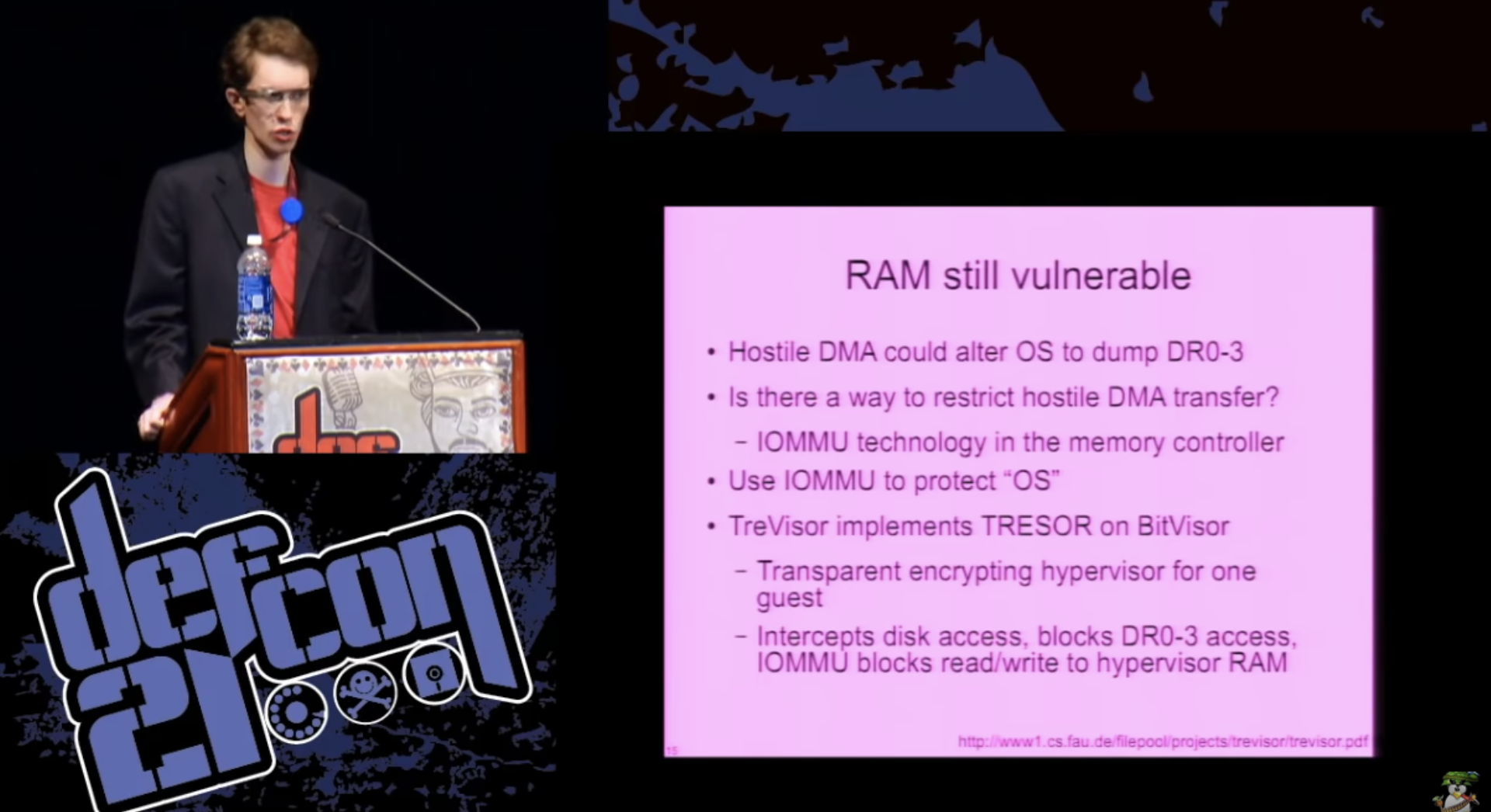

The problem is that even if we keep our code or our keys outside main memory, the CPU still executes whatever is in memory. So DMA (direct memory access, which bypasses the CPU) or other manipulation lets an attacker modify the operating system so that it copies the key out of the registers, or, with more exotic methods, out of the debug registers, back into main memory.

Can we do anything about the DMA attack vector? As it turns out, yes, we can. Recently, as part of new server virtualization technology, people want to be able, for performance reasons, to assign a device such as a network adapter directly to a virtual machine, and that has to be done through the hypervisor.

IOMMU technology was designed so that you can confine a PCI device to its own small region of memory, from which it cannot read or write arbitrary locations in the system. That is perfect for us: we can configure IOMMU permissions to protect our operating system, or whatever code handles the keys, from arbitrary access.
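The IOMMU's job can be reduced to a permission check on every DMA transfer. The toy model below gives each device a set of page frames it may touch and rejects any transfer that strays outside them. It is a deliberate simplification: real IOMMUs also translate device-visible addresses and are programmed through hardware page tables (Intel VT-d, AMD-Vi), none of which is modeled, and the class name, device names and memory layout are hypothetical.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 4096

@dataclass
class ToyIommu:
    """Per-device allow-lists of physical page frames; everything else is off-limits to DMA."""
    allowed: dict[str, set[int]] = field(default_factory=dict)

    def map_pages(self, device: str, first_page: int, count: int) -> None:
        self.allowed.setdefault(device, set()).update(range(first_page, first_page + count))

    def _check(self, device: str, addr: int, length: int) -> None:
        if length <= 0:
            raise ValueError("transfer length must be positive")
        # Every page the transfer touches must be mapped for this device.
        pages = range(addr // PAGE_SIZE, (addr + length - 1) // PAGE_SIZE + 1)
        permitted = self.allowed.get(device, set())
        if not all(p in permitted for p in pages):
            raise PermissionError(f"{device}: DMA to 0x{addr:x} blocked")

    def dma_read(self, device: str, memory: bytearray, addr: int, length: int) -> bytes:
        self._check(device, addr, length)
        return bytes(memory[addr:addr + length])

    def dma_write(self, device: str, memory: bytearray, addr: int, data: bytes) -> None:
        self._check(device, addr, len(data))
        memory[addr:addr + len(data)] = data

# Toy layout: page 0 holds the hypervisor's key-handling code, pages 8-11 are NIC buffers.
memory = bytearray(16 * PAGE_SIZE)
iommu = ToyIommu()
iommu.map_pages("nic", first_page=8, count=4)
iommu.dma_write("nic", memory, 8 * PAGE_SIZE, b"packet")    # allowed
try:
    iommu.dma_read("nic", memory, 0, 64)                     # a reflashed NIC probing page 0
except PermissionError as err:
    print(err)   # nic: DMA to 0x0 blocked
```

The check is on pages rather than bytes because that is the granularity at which an IOMMU can grant or deny access.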

Again, our German friend Tilo Muller implemented a version of TRESOR on a thin hypervisor called BitVisor that does exactly this. It lets you run an ordinary operating system on top with disk encryption handled transparently, so the OS doesn't need to know or care about it. Disk access is completely transparent to the OS, the operating system cannot access the debug registers, and the IOMMU is configured so that the hypervisor is fully protected from tampering.

But it turns out that, besides the disk encryption keys, there are other things in memory we should care about. This brings us back to a problem I mentioned earlier: we used to rely on container encryption, and now we mostly use full disk encryption.

We use full disk encryption because, with container encryption, it is very hard to make sure you don't accidentally write confidential data to temporary files or the system cache. Now that we regard RAM as an insecure, untrustworthy place to store data, we need to treat it the same way. We need to encrypt everything we don't want to leak, everything that really matters: SSH keys, private keys or PGP keys, even the password manager and any top-secret documents you work with.

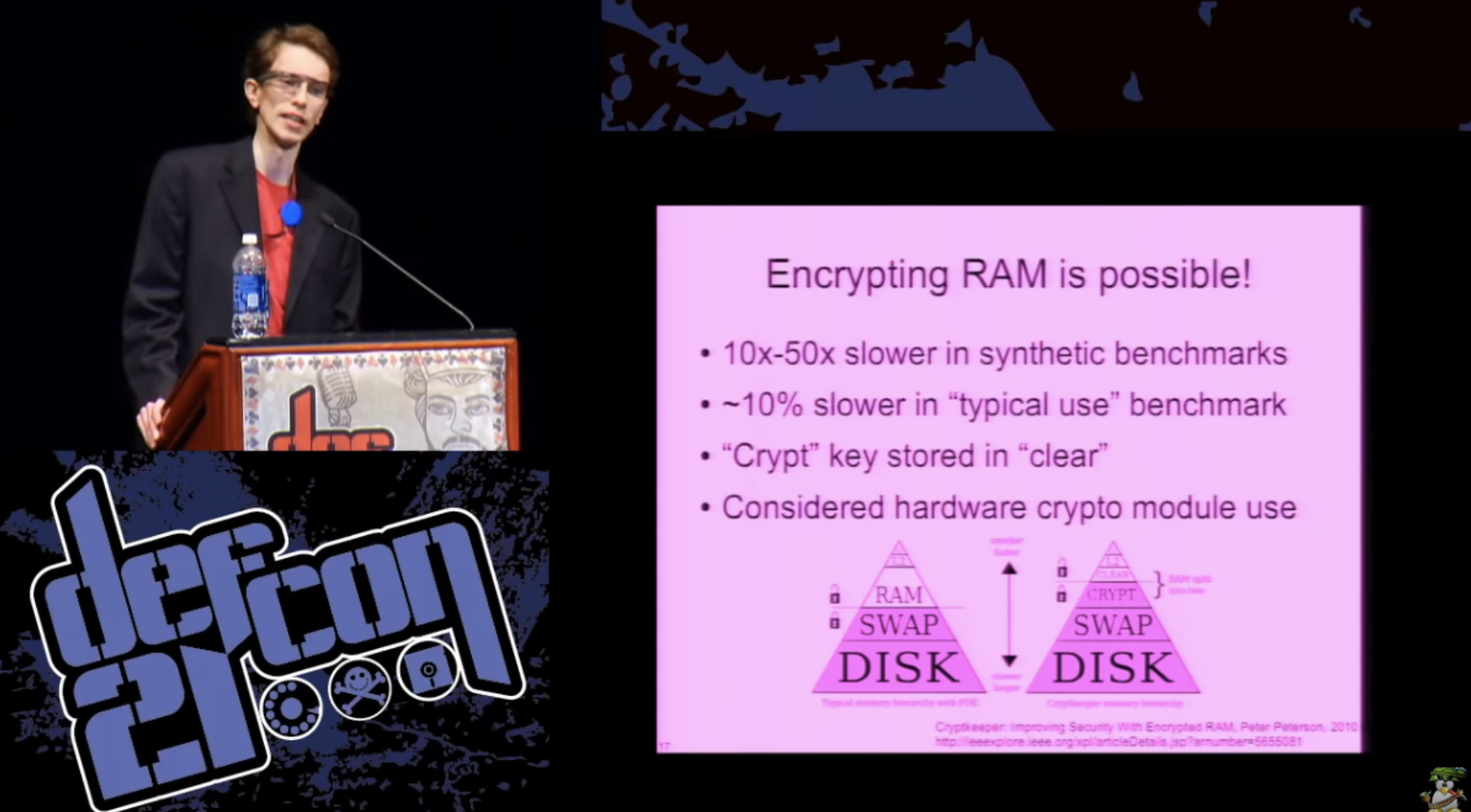

I had a really crazy idea: can we encrypt RAM? Or at least most of the main memory where we keep secrets, to minimize how much can leak.

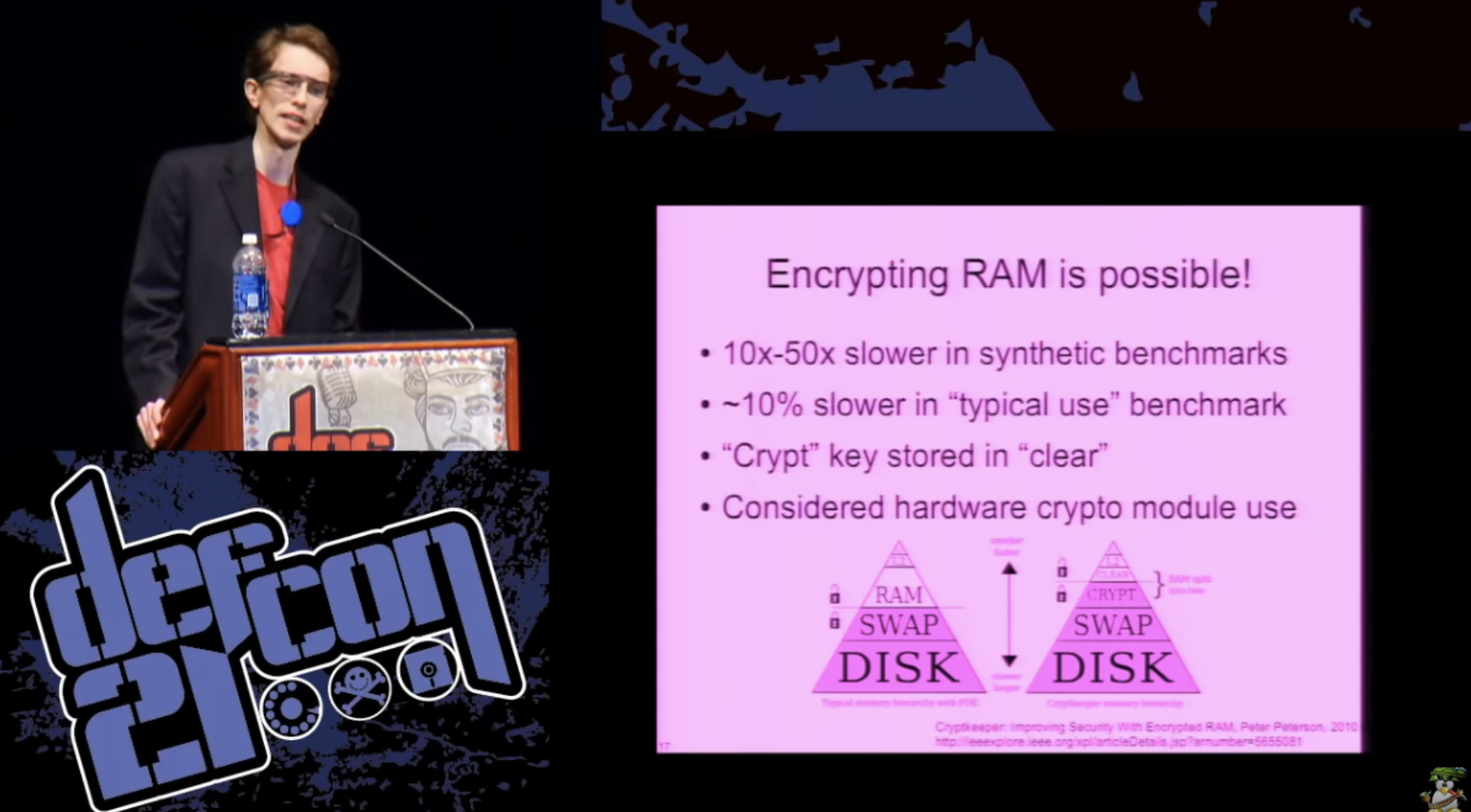

And, surprisingly or not, the answer is yes, we can! As a proof of concept, in 2010 Peter Peterson implemented a RAM encryption solution. Not all of RAM is actually encrypted: main memory is split into two parts, a small fixed-size unencrypted region called “clear” and a larger paging pseudo-device where all data is encrypted before being stored in main memory. Synthetic benchmarks showed a 10 to 50 times slowdown. In real-world use, however, for example in a web browser benchmark, it works quite well, only about 10% slower, which I think is acceptable. The problem with this proof of concept was that it stored the decryption key in main memory, because where else could it go? The author considered using things like a TPM for bulk encryption, but these were even slower than dedicated cryptographic hardware and therefore completely unsuitable.
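The clear/encrypted split can be modeled as a small least-recently-used working set of plaintext pages backed by an encrypted store: pages evicted from the clear region are encrypted, and touching an evicted page decrypts it back in. This is a toy under explicit assumptions, not Peterson's implementation: the class name is hypothetical, the SHA-256 counter-mode keystream is a stand-in for a real cipher such as AES (and provides no integrity protection), and the key sits in an ordinary Python attribute, that is, in RAM, which is precisely the weakness described above.

```python
import hashlib
import secrets
from collections import OrderedDict

def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream (stand-in for AES-CTR): SHA-256(key || nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "little")).digest()
        counter += 1
    return bytes(out[:length])

class ClearPlusEncryptedRam:
    """Small plaintext working set ("clear") backed by an encrypted page store."""

    def __init__(self, clear_pages: int):
        self.key = secrets.token_bytes(32)   # lives in RAM here: the weakness noted in the talk
        self.capacity = clear_pages
        self.clear = OrderedDict()           # page -> plaintext, least recently used first
        self.sealed = {}                     # page -> (nonce, ciphertext)

    def _xor(self, data: bytes, nonce: bytes) -> bytes:
        return bytes(a ^ b for a, b in zip(data, keystream(self.key, nonce, len(data))))

    def _evict(self) -> None:
        while len(self.clear) > self.capacity:
            page, data = self.clear.popitem(last=False)    # least recently used page
            nonce = secrets.token_bytes(16)                # fresh nonce on every eviction
            self.sealed[page] = (nonce, self._xor(data, nonce))

    def write(self, page: int, data: bytes) -> None:
        self.sealed.pop(page, None)          # discard any stale encrypted copy
        self.clear[page] = data
        self.clear.move_to_end(page)
        self._evict()

    def read(self, page: int) -> bytes:
        if page not in self.clear:           # "page fault": decrypt back into the clear region
            nonce, ciphertext = self.sealed.pop(page)
            self.clear[page] = self._xor(ciphertext, nonce)
        self.clear.move_to_end(page)
        data = self.clear[page]
        self._evict()
        return data

ram = ClearPlusEncryptedRam(clear_pages=2)
for page in range(5):
    ram.write(page, f"secret page {page}".encode())
print(sorted(ram.clear), sorted(ram.sealed))   # [3, 4] [0, 1, 2]
print(ram.read(0))                              # b'secret page 0'
```

Only the pages in active use are ever in plaintext, which is why a realistic workload such as browsing pays far less than a synthetic benchmark that constantly touches evicted pages.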

But if we can use the processor as a kind of pseudo-hardware crypto module, it sits right at the center of the action and should be very fast at this kind of work. So maybe we can do something like this on a high-performance CPU.

Suppose we have such a system. Our keys are not in main memory, and our key-handling code is protected from reads and writes by malicious hardware. Main memory is encrypted, so most of our secrets won't leak even if someone performs a cold boot attack. But how do we bring the system up into this state? After all, we start with the machine powered off, then authenticate and boot. How can this be done as reliably as possible? Someone could still modify the system software to trick us into believing we are booting this shiny new system when nothing of the sort is actually happening.

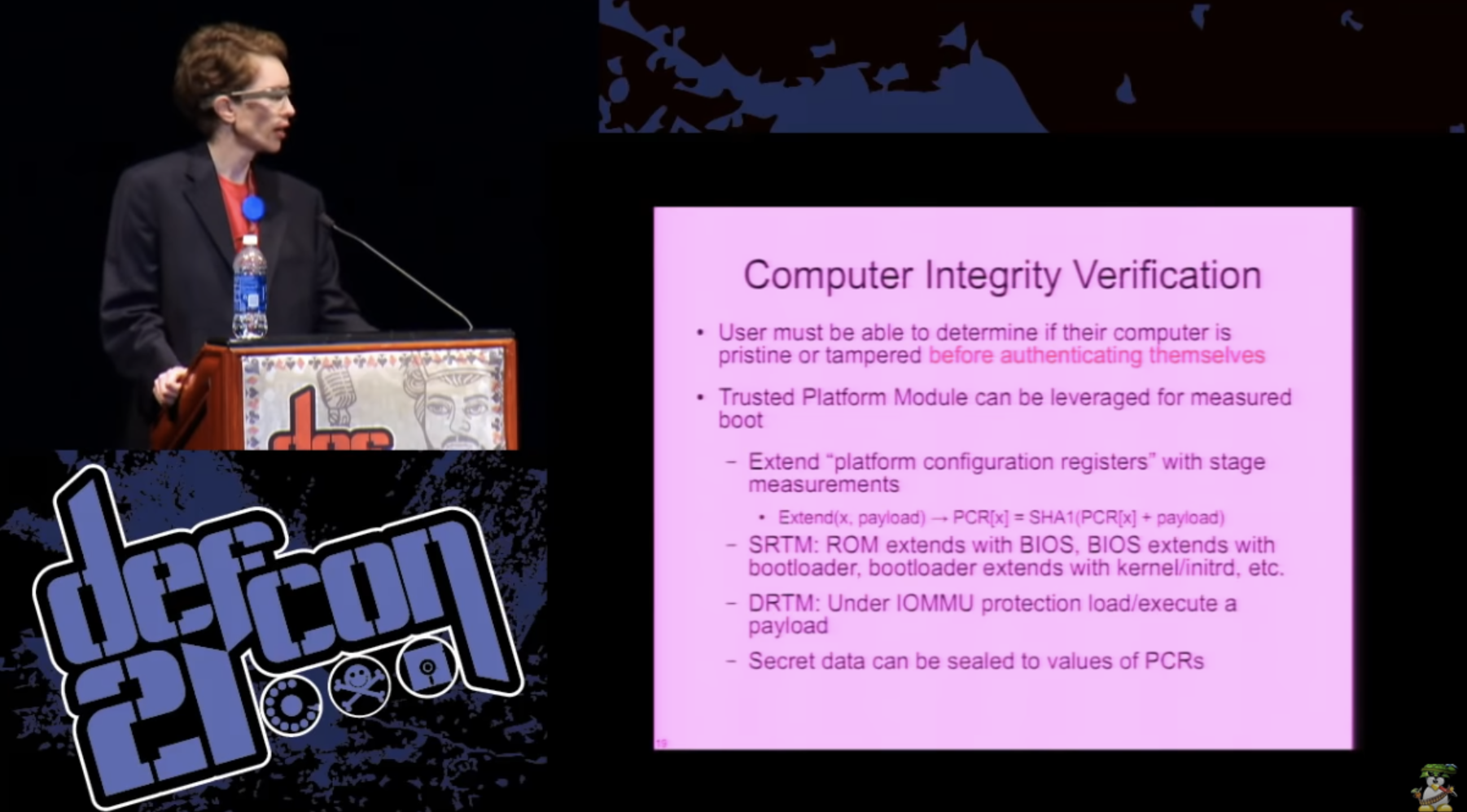

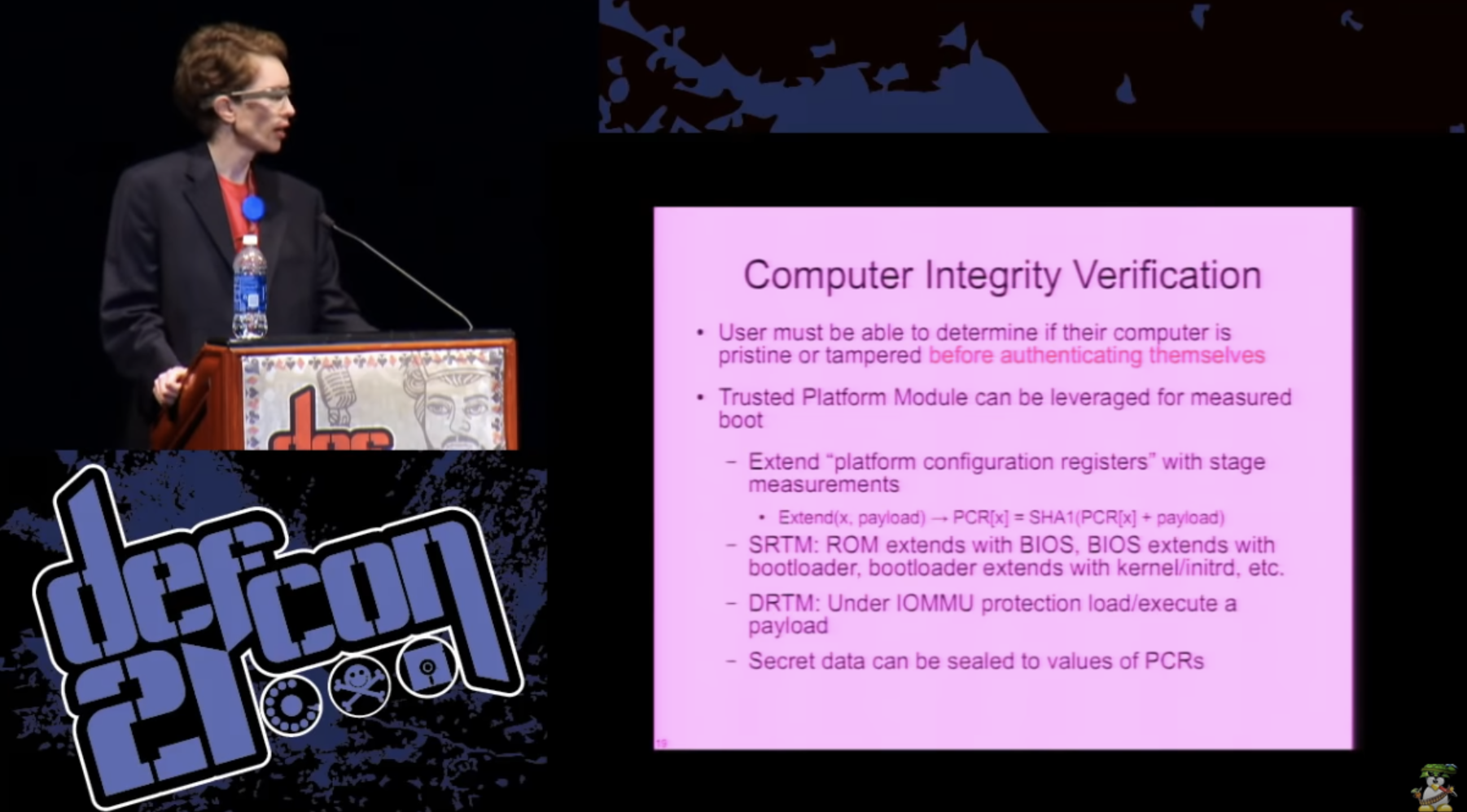

So one of the most important topics is the ability to verify the integrity of our computers. The user must be able to check that the computer has not been tampered with before authenticating. For this we can use the Trusted Platform Module (TPM), an implementation of a specification describing a crypto processor. It has something of a bad reputation, which we will come back to later, but it can measure the boot sequence in several different ways, so you can control which TPM-held data is released in which specific system configuration states. In other words, you can “seal” data to a particular configuration of the software running on the system. There are several approaches to this, and some intricate cryptography makes it hard to bypass. So maybe this really can be done.
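The measurement mechanism itself is small. In TPM 1.2, a platform configuration register (PCR) can only be extended, never set directly: new value = SHA-1(old value || SHA-1(component)), so the final value commits to every measured component and to their order. The sketch models that, plus a toy version of sealing in which a secret is released only when the current PCR value matches the one recorded at seal time. `ToySealedStorage` and the component strings are hypothetical; a real TPM encrypts sealed blobs under its storage root key and checks many PCRs, none of which is modeled here.

```python
import hashlib
import hmac

PCR_SIZE = 20   # SHA-1 digest length, as in TPM 1.2

def extend(pcr: bytes, component: bytes) -> bytes:
    """PCR extend: new = SHA1(old || SHA1(component))."""
    measurement = hashlib.sha1(component).digest()
    return hashlib.sha1(pcr + measurement).digest()

def measure_boot(chain: list[bytes]) -> bytes:
    """Each boot stage measures the next one before handing over control."""
    pcr = bytes(PCR_SIZE)   # static PCRs start as all zeros after a platform reset
    for component in chain:
        pcr = extend(pcr, component)
    return pcr

class ToySealedStorage:
    """Releases a secret only if the platform is in the state it was sealed to."""

    def __init__(self) -> None:
        self._blobs = {}

    def seal(self, name: str, secret: bytes, expected_pcr: bytes) -> None:
        self._blobs[name] = (expected_pcr, secret)

    def unseal(self, name: str, current_pcr: bytes) -> bytes:
        expected_pcr, secret = self._blobs[name]
        if not hmac.compare_digest(expected_pcr, current_pcr):
            raise PermissionError("platform state differs from the sealed configuration")
        return secret

good = [b"BIOS v1.0", b"bootloader v2", b"kernel"]
tampered = [b"BIOS v1.0", b"bootloader v2 + keylogger", b"kernel"]
store = ToySealedStorage()
store.seal("greeting", b"my secret phrase", measure_boot(good))
print(store.unseal("greeting", measure_boot(good)))   # b'my secret phrase'
try:
    store.unseal("greeting", measure_boot(tampered))
except PermissionError as err:
    print(err)
```

One way to meet the requirement stated above is to seal a phrase only the user knows and have the machine display it before the password prompt: a tampered boot chain produces a different PCR value, the phrase is not released, and the user knows not to type the password.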

What is a TPM? Originally it was part of a grandiose digital rights management scheme for media companies: they could remotely verify that your system was running an “approved” configuration before allowing you to run their software and unlocking the key to their video files. This turned out to be a very unfortunate idea, and nobody even tries to use it for that purpose in practice.

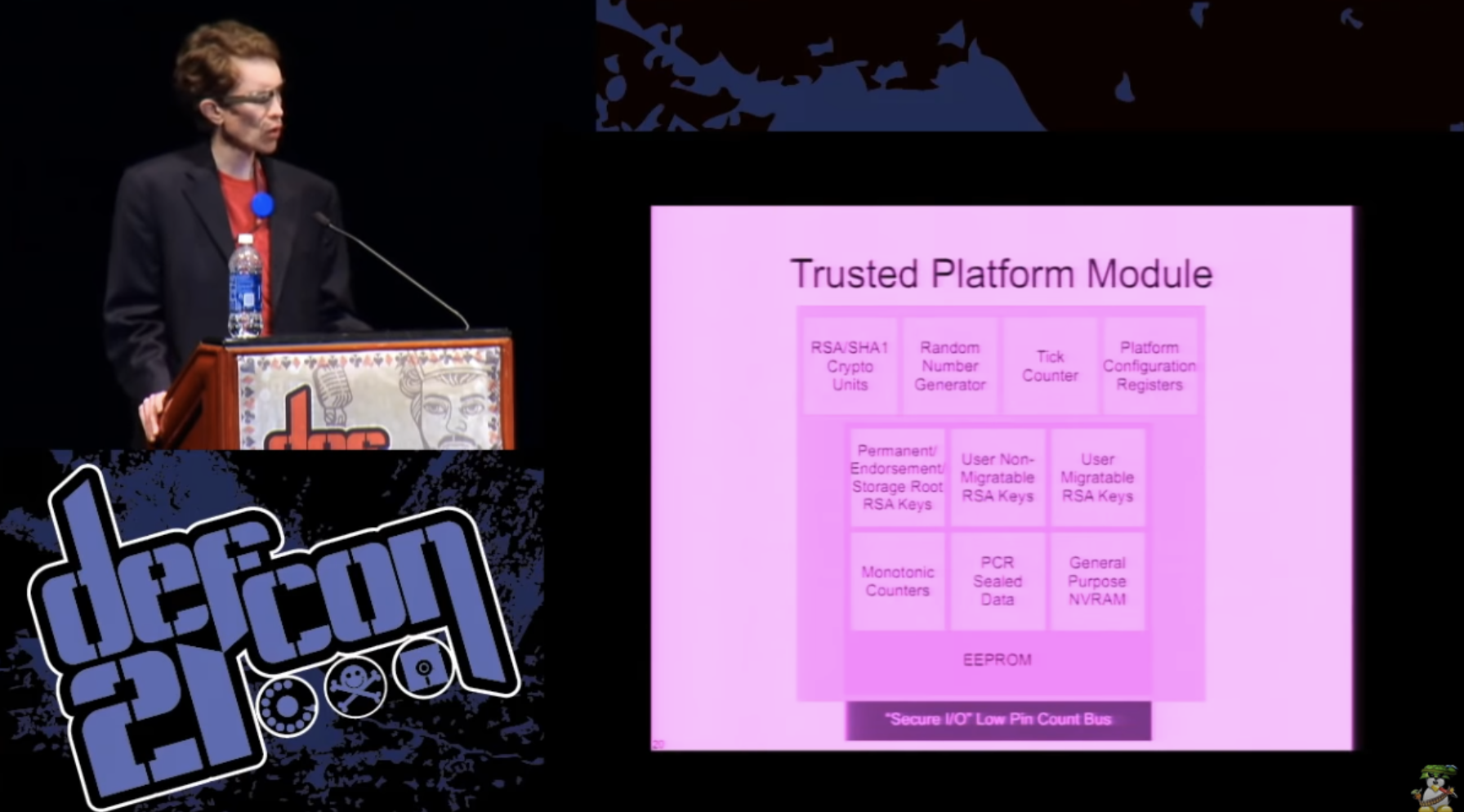

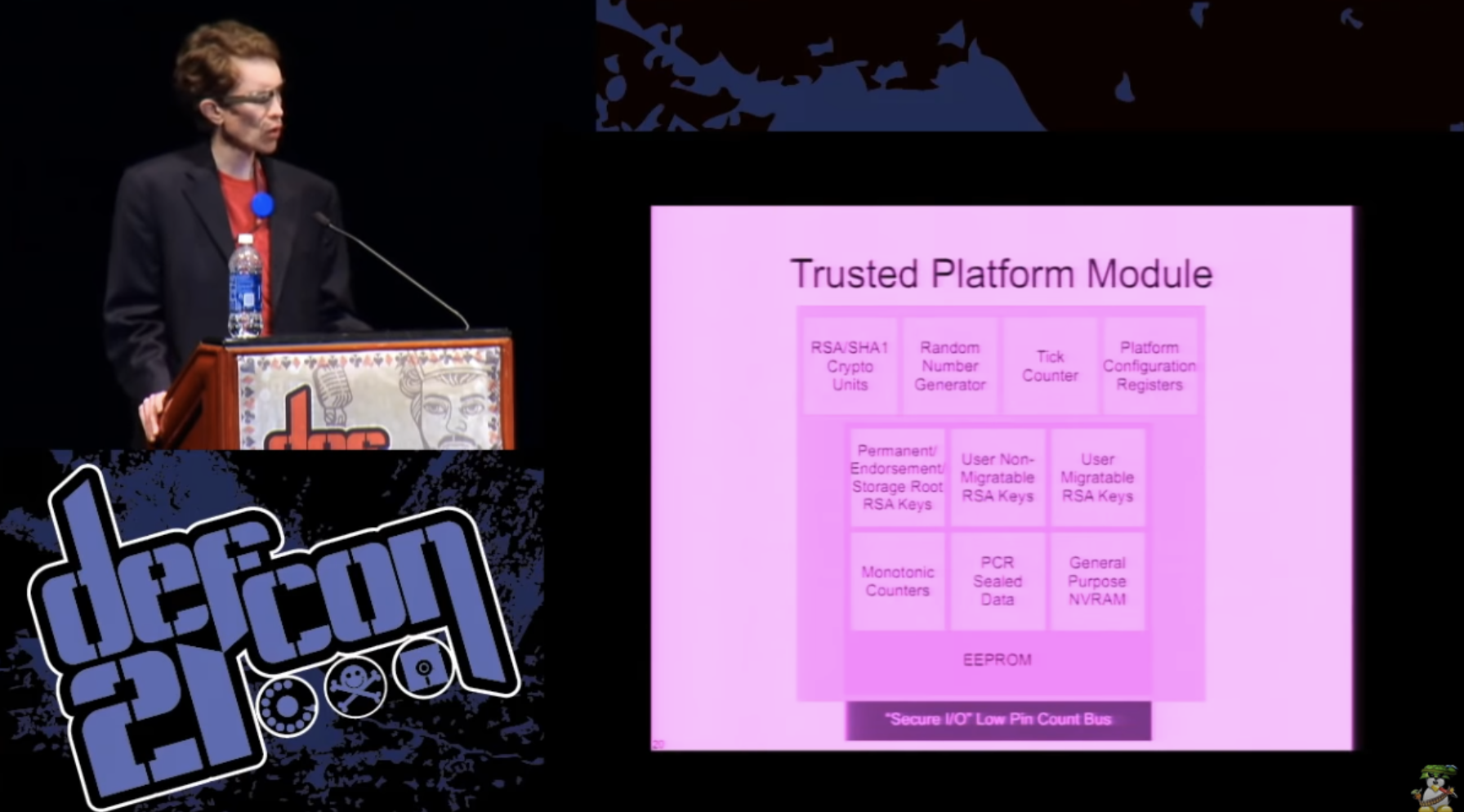

I think the best way to think of a TPM is as a smart card attached to your motherboard. It can perform some cryptographic operations (RSA, SHA-1), has a random number generator, and has countermeasures against physical attacks that try to get at the data stored on it. The only real differences between a TPM and a smart card are that the TPM can record measurements of the boot state in its platform configuration registers (PCRs) and that it is usually a separate chip on the motherboard. So it can have a positive impact on security.

It appears that 90% of you use open source software to encrypt a disk to be able to audit them. Now let those who completely turn off the computer raise their hands if they leave it unattended. I think about 20% of those present. Tell me, and who generally leaves your computer unattended for a few hours, doesn’t it turn on or off? Consider that I ask these questions, just to make sure that you are not a zombie and do not sleep. I think that almost everyone had to leave their computer for at least a few minutes.

So why do we encrypt our computers? It is difficult to find someone who would ask this question, so I think it is really important to formulate the motivation of specific actions in the field of security. If we do not do this, we will not be able to understand how to organize this work.

There is a lot of documentation on software that encrypts the disk, which describes what the software does, what algorithms it uses, which passwords, and so on, but almost never says why.

So, we encrypt your computer because we want to control our data, we want to guarantee their confidentiality and that no one can steal or change it without our knowledge. We want to decide for ourselves how to deal with our data and control what happens to it.

There are situations where you simply must ensure the secrecy of data, for example, if you are a lawyer or a doctor who has confidential client information. The same applies to financial and accounting documentation. Companies are obliged to inform customers about the leakage of such information, for example, if someone left an unprotected laptop in a car that was hijacked, and now this confidential information may be freely available on the Internet.

In addition, you need to control the physical access to the computer and ensure that it is protected from physical impact, because FDE will not help if someone physically takes over your computer.

If we want to secure the network, we need to control access to the end user's computer. We will not be able to build a secure Internet without securing every end user.

So, we have dealt with the theoretical aspects of the need for disk encryption. We know how to generate random numbers to secure keys, how to control block encryption modes used for full disk encryption, how to securely inherit a key for passwords, so that we can assume that “the mission is complete”, as President Bush said, speaking on board aircraft carrier. But you know that this is not the case, and we still have a lot to do to complete it.

Even if you have flawless cryptography and you know that it is almost impossible to crack it, in any case it should be implemented on a real computer, where you have no analogs of reliable black boxes. An attacker does not need to attack cryptography if he tries to crack the full disk encryption. To do this, he just needs to attack the computer itself or somehow deceive the user, convincing him to provide a password, or use a keylogger, etc.

The actual use of encryption does not match the FDE security model. If we consider software designed for full disk encryption, it is clear that its creators paid a lot of attention to the theoretical aspects of encryption. I will quote an excerpt from the technical documentation from the TrueCrypt website: “Our program will not protect any data on the computer if the attacker has physical access to the computer before starting or in the process of running TrueCrypt.”

In principle, their entire security model looks like this: “if our program correctly encrypts a disk and correctly decrypts it, we did our job.” I apologize for the text shown on the next slide, if you find it difficult to read it, I will do it myself. These are excerpts from the correspondence between the developers of TrueCrypt and security researcher Joanna Rutkowska about the attacks of the janitor.

TrueCrypt: “We never consider the possibility of hardware attacks, we just assume the worst. After the attacker has “worked” on your computer, you just have to stop using it to store confidential information. The TPM crypto processor is not able to prevent hardware attacks, for example, using keyloggers.

Joanna Rutkovskaya asked them: “How can you determine whether the attacker“ worked ”on your computer or not, because you don’t carry a laptop with you all the time?”, To which the developers replied: “We don’t care how the user ensures safety and security your computer. For example, a user could use a lock or place a laptop while in his absence in a lockable cabinet or safe. ” Joanna answered them very correctly: “If I am going to use a lock or safe, why do I need your encryption at all”?

Thus, ignoring the possibility of such an attack is a hoax, we cannot do that! We live in the real world, where these systems exist, with which we interact and which we use. There is no way to compare 10 minutes of attack, performed only with the help of software, for example, from a “flash drive”, with something that you can perform by manipulating the system solely with the help of hardware.

Thus, regardless of what they say, physical security and resistance to physical attacks depends on FDE. It doesn’t matter what you give up on your security model, and at least if they don’t want to take responsibility, they should be very clear and honest about how you can easily break the protection they offer.

The next slide shows the scheme for downloading abstract FDE, which is used in most modern computers.

As we know, the bootloader is loaded from the SSD / HDD using the BIOS and copied to main memory on the data transfer path. Storage Controller - PCI Bus - Platform Controller Hub. The bootloader then prompts the user for authentication information, such as a password or smart card key. Next, the password goes from the keyboard to the processor, after which the loader takes control, while both components — OS and key — remain in memory in order to ensure the transparency of the encryption and disk decryption process. This is an idealized view of the process, suggesting that no one will try to interfere in it in any way. I think you know perfectly well how to hack it, so let's list things that can go wrong if someone tries to attack you. I divide the attacks into 3 levels.

The first one is non-invasive, which does not require the capture of your computer, as it is done with the help of a flash drive with malware. You do not need to “disassemble” the system if you can easily connect to it any hardware component such as a PCI card, Express Card or Thunderbolt — Apple's newest adapter, which provides open access to the PCI bus.

A second-level attack will require a screwdriver, because you may need to temporarily remove some component of the system in order to deal with it in your own small environment. The third level, or “soldering iron attack,” is the most difficult, here you physically either add or modify system components, such as chips, to try to crack them.

One type of first-level attack is a compromised bootloader, also known as the evil maid attack, where you need to run some unencrypted code as part of the system boot process, something you can download yourself using the user's personal information in order to get access to the rest of the data encrypted on your hard drive. There are several different ways to do this. You can physically change the boot loader in the storage system. You can compromise the BIOS or download a malicious BIOS that will take control of the keyboard adapter or disk reading procedures and modify them so that they are resistant to removing the hard disk. But in any case, you can modify the system so that when the user enters his password,

You can do something similar at the operating system level. This is especially true if you are not using full disk encryption, but container encryption.

This can also occur when an exploit is attacked by a system, thanks to which an attacker gets root rights and can read the key from the main memory, this is a very common attack method. This key can be saved as a plaintext on the hard disk for later use by an attacker or sent over the network to Command & Control.

Another possibility is keyboard interception using a keylogger, be it software, hardware, or something exotic, such as a needle-eye camera or, for example, a microphone that records sounds that a user presses with a key and tries to figure out what keys. It is difficult to prevent such an attack, because it potentially includes components that are outside the system.

I would also like to mention data recovery attacks, better known as Cold Boot Attacks, “cold boot attacks”. If 5 years ago you asked even very computer-savvy people what the main memory security properties were, they would say that when the power is turned off, the data disappears very quickly.

But in 2008, excellent studies were published by Princeton, who found that in fact, even at room temperature, there was very little data loss in RAM memory for a few seconds. And if you cool the module to cryogenic temperatures, you can get a few minutes, during which there is only a slight degradation of data in the main memory.

Thus, if your key is in the main memory and someone has extracted the modules from your computer, they can attack your key by finding out where it is in the main memory in the clear. There are certain methods to counter this at the hardware level, for example, forcing the memory contents to be cleared when the power is turned off or when the computer is restarted, but this does not help if someone simply pulls out the module and places it in another computer or in a dedicated piece of equipment to retrieve its contents.

Finally, there is the possibility of direct memory access. Any PCI device in your computer has the ability to normally read and write the contents of any sector in the main memory. They can do anything.

This was developed even when the computers were much slower and we didn’t want the CPU to “nurse” with each data transfer to the device from the main memory. Thus, devices get direct memory access, the processor can issue commands to them that they can simply complete, but the data will remain in memory whenever you need it.

This is a problem because PCI devices can be reprogrammed. Many of these things have a writeable firmware, which you can just reflash to something hostile. And this may jeopardize the security of the entire operating system, as it will allow to carry out any form of attack, even modify the OS itself, or remove the key directly. In computer forensics, there is equipment designed for such things in the process of investigating crimes: they connect something to your computer and pull out the contents of the memory. You can do this with FireWire, ExpressCard or Thunderbolt ... In fact, all these are external ports that provide access to the internal system bus.

So it would be nice if we didn't have to store the key in RAM, since we've more or less shown that RAM is not very trustworthy from a security point of view. Is there a dedicated key store or special cryptographic hardware? Yes, there is. Web servers use cryptographic accelerators to process more SSL transactions per second, and these devices are tamper-resistant. Certificate authorities have devices that keep their keys completely secret, but these are not really designed for high-throughput operations like disk encryption. So are there any other options?

Can we use the processor itself as a kind of pseudo-hardware crypto module? Can we compute something like the AES symmetric block cipher on the CPU, using only CPU registers instead of RAM?

Intel and AMD have added great new instructions to their processors that take over the work of AES, so now a full AES round is a single assembly instruction. The question is: can we keep our key out of memory, that is, can we perform this process without relying on main memory at all? Modern x86 processors have a fairly large register set, and if you actually add up all the bits in those registers, it comes to about 4 kilobytes. So we can actually use some of the CPU registers to store keys and as scratch space for encryption operations.

One possibility is to use the hardware breakpoint (debug) registers. A typical Intel processor has four of them, and on an x64 system each holds a 64-bit pointer. That is 256 bits of potential storage that most people will never use. The big advantage of the debug registers is that they are privileged: only the operating system can access them. There are other nice properties too; for example, when the processor loses power, on shutdown or when entering sleep mode, the register contents really are lost, so you don't have to worry about a cold boot attack.
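The capacity arithmetic is easy to check: four debug registers (DR0 to DR3) of 64 bits each give 256 bits, enough for one AES-256 key or an AES-128 key with room to spare. Below is a minimal sketch, using the hypothetical helpers `pack_key` and `unpack_key`, of how a key would be split across the four registers. In a real implementation this happens in kernel mode with `mov` instructions to the debug registers, which Python cannot do, so the registers are modeled as plain integers.

```python
NUM_DEBUG_REGS = 4    # DR0..DR3 on x86
DEBUG_REG_BITS = 64   # register width on x86-64
DEBUG_REG_CAPACITY_BYTES = NUM_DEBUG_REGS * DEBUG_REG_BITS // 8  # 256 bits = 32 bytes


def pack_key(key: bytes) -> list[int]:
    """Split a key into four 64-bit words, one per debug register (zero-padded)."""
    if len(key) > DEBUG_REG_CAPACITY_BYTES:
        raise ValueError(
            f"key of {len(key)} bytes does not fit in {DEBUG_REG_CAPACITY_BYTES} bytes"
        )
    padded = key.ljust(DEBUG_REG_CAPACITY_BYTES, b"\x00")
    return [
        int.from_bytes(padded[i * 8:(i + 1) * 8], "little")
        for i in range(NUM_DEBUG_REGS)
    ]


def unpack_key(regs: list[int], key_len: int) -> bytes:
    """Reassemble the key from the four modeled register values."""
    raw = b"".join(r.to_bytes(8, "little") for r in regs)
    return raw[:key_len]
```

The trade-off of this design is that registers holding key material can no longer serve as hardware breakpoints.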

A guy from Germany, Tilo Muller, implemented exactly this for Linux in 2011, in a system called TRESOR. He benchmarked it and concluded that it runs no slower than a regular software AES implementation.

What about storing two 128-bit keys instead of one? That gives our crypto module more room to work with. We can keep one master key that never leaves the processor once it is loaded, and then load and unload whichever additional keys we need for other operations and tasks.
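A minimal sketch of that key hierarchy, assuming a stdlib-only stand-in for the wrapping cipher: HMAC-SHA256 in counter mode plus an HMAC tag (encrypt-then-MAC), in place of the AES key wrap (RFC 3394) that a real system would more likely compute with the AES instructions. The class and method names are hypothetical. Only the master key needs protected storage; every other key can sit in main memory in wrapped form.

```python
import hashlib
import hmac
import os

NONCE_LEN = 16
TAG_LEN = 32


class KeyHierarchy:
    """Toy model: one master key stays 'in the CPU'; other keys live in RAM only wrapped."""

    def __init__(self, master_key: bytes):
        # In a TRESOR-style design this key would sit in CPU registers, not a Python object.
        self._master = master_key

    def _keystream(self, nonce: bytes, n: int) -> bytes:
        out = bytearray()
        counter = 0
        while len(out) < n:
            block = b"enc" + nonce + counter.to_bytes(4, "big")
            out += hmac.new(self._master, block, hashlib.sha256).digest()
            counter += 1
        return bytes(out[:n])

    def _tag(self, nonce: bytes, ciphertext: bytes) -> bytes:
        return hmac.new(self._master, b"mac" + nonce + ciphertext, hashlib.sha256).digest()

    def wrap(self, key: bytes) -> bytes:
        """Encrypt-then-MAC a working key so it can be parked in main memory."""
        nonce = os.urandom(NONCE_LEN)
        ciphertext = bytes(a ^ b for a, b in zip(key, self._keystream(nonce, len(key))))
        return nonce + ciphertext + self._tag(nonce, ciphertext)

    def unwrap(self, blob: bytes) -> bytes:
        """Verify and decrypt a wrapped key; raises ValueError if it was modified."""
        if len(blob) < NONCE_LEN + TAG_LEN:
            raise ValueError("wrapped key is too short")
        nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
        if not hmac.compare_digest(tag, self._tag(nonce, ciphertext)):
            raise ValueError("wrapped key was modified")
        stream = self._keystream(nonce, len(ciphertext))
        return bytes(a ^ b for a, b in zip(ciphertext, stream))
```

The tag matters here: without it, an attacker with DMA access could flip bits in a wrapped key in RAM and the change would go unnoticed.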

The problem is that even if we keep our code or our keys outside main memory, the CPU still processes the contents of memory. So DMA, which bypasses the central processor, or other manipulation lets an attacker modify the operating system so that it dumps the key out of the CPU registers into main memory, or, with more exotic methods, out of the debug registers.

Can we do something about the DMA attack vector? As it turns out, yes, we can. Recently, as part of new server virtualization technologies, people want, for performance reasons, to assign a device such as a network adapter directly to a virtual server, passing it through the hypervisor.

IOMMU technology was designed so that you can confine a PCI device to its own small region of memory, from which it cannot read or write arbitrarily anywhere else in the system. This is perfect: we can configure the IOMMU permissions to protect our operating system, or whatever code we use to handle keys, from arbitrary access.
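The IOMMU idea can be modeled as a default-deny permission table: each device is granted specific address windows, and any DMA request outside them is refused. This is a hypothetical toy (`ToyIOMMU` and `Window` are made-up names); real IOMMUs such as Intel VT-d and AMD-Vi translate device addresses through their own I/O page tables rather than checking a list of ranges, but the access decision is the same in spirit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Window:
    """An address range a device is allowed to touch via DMA."""
    start: int
    length: int
    writable: bool

    def covers(self, addr: int, size: int) -> bool:
        return self.start <= addr and addr + size <= self.start + self.length


class ToyIOMMU:
    """Hypothetical model of IOMMU permissions: default deny, explicit grants."""

    def __init__(self) -> None:
        self._windows: dict[str, list[Window]] = {}

    def grant(self, device: str, window: Window) -> None:
        self._windows.setdefault(device, []).append(window)

    def check(self, device: str, addr: int, size: int, write: bool) -> bool:
        """Allow a DMA access only if one granted window fully covers it."""
        for w in self._windows.get(device, []):
            if w.covers(addr, size) and (w.writable or not write):
                return True
        return False  # unknown devices and out-of-window accesses are refused
```

With the hypervisor's own memory never granted to any device, a reflashed network card or a forensic FireWire probe simply gets its requests rejected.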

Again, our friend from Germany, Tilo Muller, implemented a version of TRESOR on a thin hypervisor called BitVisor, which does exactly this. It lets you run an ordinary operating system on top, with disk encryption handled transparently, and the great thing is that the OS doesn't need to take care of it or know anything about it. Disk access is completely transparent to the OS, the operating system cannot access the debug registers, and the IOMMU is configured so that the hypervisor is fully protected from any manipulation.

But as it turns out, besides the disk encryption keys there are other things in memory that we should take care of. Recall a problem I already mentioned: we used to use container encryption, and now we mostly use full disk encryption.

We moved to full disk encryption because, with container encryption, it is very hard to be sure you never accidentally write confidential data to temporary files or the system cache. Now that we regard RAM as an insecure, untrustworthy place to store data, we need to treat it the same way. We need to encrypt all the data we don't want leaked, everything that really matters: SSH keys, private keys, PGP keys, even the password manager and any "top secret" documents you work with.

I had a very stupid idea: can we encrypt RAM? Or at least most of the main memory where we keep secrets, to minimize how much can leak.

And again, surprisingly or not, the answer is yes, we can! As a proof of concept, in 2010 a guy named Peter Peterson implemented a RAM encryption solution. Not all of the RAM is actually encrypted: it divides main memory into two parts, a small fixed-size unencrypted region called "clear", and a larger paging pseudo-device in which all data is encrypted before being stored in main memory. Synthetic benchmarks showed system performance dropping by a factor of 10 to 50. In real-world use, however, for example in a web browser benchmark, it actually works quite well: only 10% slower. I think that is something we can live with. The problem with this proof of concept was that he stored the decryption key in main memory, because where else could it go? The author considered using things like the TPM for bulk encryption, but those turned out to be even slower than dedicated cryptographic hardware, so they were completely unsuitable.
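The split between a small clear region and an encrypted remainder can be modeled as a page cache: a few recently used pages stay in plaintext, and a page evicted from that set is encrypted before it is kept. This is a hypothetical toy (`ToyEncryptedRAM` is a made-up name), not the proof of concept's code; a real implementation works inside the kernel's paging machinery. The cipher is a stdlib stand-in (HMAC-SHA256 in counter mode) rather than AES, and, like the proof of concept, the toy keeps its key in ordinary memory.

```python
import hashlib
import hmac
import os
from collections import OrderedDict

PAGE_SIZE = 4096


def _keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Stand-in cipher: HMAC-SHA256 in counter mode. A real system would use AES."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyEncryptedRAM:
    """Hypothetical model: a few pages in the clear, everything else encrypted."""

    def __init__(self, key: bytes, clear_pages: int):
        if clear_pages < 1:
            raise ValueError("need at least one clear page")
        self._key = key              # same weakness as the PoC: the key sits in RAM
        self._capacity = clear_pages
        self._clear = OrderedDict()  # page number -> plaintext, in LRU order
        self._sealed = {}            # page number -> (nonce, ciphertext)

    def write(self, page_no: int, data: bytes) -> None:
        if len(data) != PAGE_SIZE:
            raise ValueError("writes are whole pages in this model")
        self._sealed.pop(page_no, None)
        self._clear[page_no] = data
        self._clear.move_to_end(page_no)
        self._evict()

    def read(self, page_no: int) -> bytes:
        if page_no in self._clear:
            self._clear.move_to_end(page_no)
            return self._clear[page_no]
        nonce, ciphertext = self._sealed.pop(page_no)  # KeyError if never written
        data = _keystream_xor(self._key, nonce, ciphertext)
        self._clear[page_no] = data                    # decrypt into the clear set
        self._evict()
        return data

    def clear_pages(self) -> list[int]:
        return list(self._clear)

    def sealed_pages(self) -> list[int]:
        return list(self._sealed)

    def _evict(self) -> None:
        # Encrypt least recently used pages until the clear set fits again.
        while len(self._clear) > self._capacity:
            victim, data = self._clear.popitem(last=False)
            nonce = os.urandom(16)  # fresh random nonce for every encryption
            self._sealed[victim] = (nonce, _keystream_xor(self._key, nonce, data))
```

The performance numbers above follow from this structure: workloads that keep touching the same few pages rarely pay for encryption, while synthetic tests that sweep through memory pay on almost every access.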

But if we can use the processor as a kind of pseudo-hardware crypto module, it sits right at the center of the action and should be very fast at this kind of work. So maybe we can do something like this with a high-performance CPU.

Suppose we have such a system. Our keys are not in main memory, and the code that manages the keys is protected from arbitrary reads and writes by malicious hardware. Main memory is encrypted, so most of our secrets will not leak even if someone attempts a cold boot attack. But how do we boot the system into this state? After all, we have to start with the system powered off, authenticate, and boot. How can this be done as reliably as possible? After all, someone could still modify the system software to trick us into believing we are booting this shiny new system when it is actually doing nothing of the kind.

So one of the most important topics is the ability to verify the integrity of our computers. The user must be able to verify that the computer has not been tampered with before authenticating to it. For this we can use the Trusted Platform Module (TPM), an implementation of a specification describing a cryptographic coprocessor. It has something of a bad rap, and we'll talk more about that later, but it can measure the boot sequence in several different ways, which lets you control in which system configuration states the TPM will release particular data. In other words, you can "seal" data to a specific configuration of the software you run on the system. There are several different approaches to doing this, and some intricate cryptography makes it hard to bypass. So maybe this is actually doable.
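Measurement and sealing can be sketched in a few lines. In TPM 1.2, a PCR cannot be written directly, only extended: the new value is SHA-1 of the old value concatenated with the SHA-1 digest of the measured component, starting from all zeros at reset. The final value therefore depends on every component and on their order. The `ToySealedBlob` class is a hypothetical stand-in: a real TPM keeps sealed data encrypted under a key that never leaves the chip and performs the PCR comparison internally, rather than holding the secret in a software object.

```python
import hashlib
import hmac

PCR_SIZE = 20  # SHA-1 digest size used by TPM 1.2 PCRs


def extend(pcr: bytes, component: bytes) -> bytes:
    """TPM 1.2-style extend: PCR_new = SHA-1(PCR_old || SHA-1(component))."""
    measurement = hashlib.sha1(component).digest()
    return hashlib.sha1(pcr + measurement).digest()


def measure_boot(components: list[bytes]) -> bytes:
    """Fold each boot stage into a PCR that starts from all zeros."""
    pcr = bytes(PCR_SIZE)
    for component in components:
        pcr = extend(pcr, component)
    return pcr


class ToySealedBlob:
    """Hypothetical model of sealing: release a secret only in the expected state."""

    def __init__(self, secret: bytes, expected_pcr: bytes):
        self._secret = secret
        self._expected = expected_pcr

    def unseal(self, current_pcr: bytes) -> bytes:
        if not hmac.compare_digest(current_pcr, self._expected):
            raise PermissionError("platform state does not match the sealed configuration")
        return self._secret
```

Because extend is one-way, malicious code that runs later in the boot cannot "rewind" the PCR to the value the legitimate bootloader would have produced; a modified bootloader yields a different PCR, and the disk key stays sealed.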

What is a TPM? Originally it was something of a grandiose digital rights management scheme for media companies: they could remotely verify that your system is running in some "approved" configuration before letting you run the software and unlocking the key to the video files. In practice this turned out to be a very unfortunate idea, so nobody really tries to use it for that purpose.

I think the best way to think of a TPM is as a smart card attached to your motherboard. It can perform some cryptographic operations (RSA, SHA-1), it has a random number generator, and it has countermeasures against physical attacks by someone trying to extract the data stored on it. The only real differences between a TPM and a smart card are that the TPM can measure the system's boot state into its platform configuration registers (PCRs), and that it is usually a separate chip on the motherboard. So it can have a positive impact on security.
