How professional interest stole my weekend

Good day to all! After reading this article (an online flower shop, or how we screwed up on Valentine's Day ), I decided to share the experience of optimizing one of the sites on Bitrix. For an unknown reason, it was this article that gave a decisive kick to share their experience. I would like to believe that my story will save someone valuable time (because of my “bring it to the end” trait, I spent 2 days off to achieve the goal. I didn’t want to leave the client without a working site on the weekends), and I hope that more experienced colleagues will point out my mistakes.

On Friday, I got a Bitrix website with a catalog of auto parts and a database of 3.2 GB in size. Problem: the site either didn’t give the page at all, or during the waiting time it was possible to forget why I visited this site. What attempts I made and what I managed to achieve in the end I will tell under the cut.

So, in more detail, the parameters of the old hosting:

Usually I take the following steps to optimize the site for Bitrix (VDS), but this time it did not give tangible results:

When I decided to deploy the local version I was very surprised by the 3.2 GB site database , especially the b_sale_fuser table (2.4 GB), which is responsible for the visitors' baskets. As it turned out, it contained data from 2014. When I looked inside this table, I noticed several features:

At this stage, I made the assumption that the problem lies in the use of the CsaleBasket :: GetBasketUserID (bSkipFUserInit) method without an additional parameter. The nuance is that the bSkipFUserInit parameter is responsible for creating a record in the table, even if the client has not put anything in the basket yet. My guess was confirmed when in one of the files result_modifier.phpFound a call to the ill-fated method without the required parameter. Correcting this point and clearing the table of irrelevant data (around 3 hours, because the muscle constantly fell off, and the data needed to be removed from related tables. All this was done using standard bitrix methods, which I regretted later. In more detail After cleaning, the number of records decreased from 19+ million to 400+ thousand, which had a beneficial effect on the local version, but it still didn’t work. The page began to appear in 20-30 seconds, and earlier in a few minutes.

Then it was decided to search for long-term queries Since we use bitrixenv, the order of the commands for editing the muscle config looks like this:

After a lapse, two requests were found that ran for 300+ seconds (see below). One of them showed 4 random goods from the entire catalog. At that time, I decided to comment on the call of this component until better times. But the second one cannot be ruled out just like that, since he is responsible for the formation of the main menu (see below).

At first, I was not embarrassed that on the combat server, this request was completed in 300+ seconds, and on the local machine in 20+, and I thought that the reason for this was insufficient load on the site. Those. on the combat site per minute, the visit was 20 people per minute, and on the local copy I was the only one to make requests. I decided to use the Jmeter utility (see below).

After running this test in 20 queries, I decided to open the site in a browser and immediately received the following error: Incorrect key file for table / tmp / *. As it turned out, for each sql request, the muscle created temporary tables on disk in a temporary folder, and there was not enough space. Because not strong in principle of work MySql went with the question to the all-knowing Google (and you had at least one day without resorting to search ?!), which explained the following:

if the sample contains fields likeTEXT / BLOB , the database will create temporary tables on the disk

And the great assistant as always turned out to be right! In the b_iblock_section table, there were a couple of such fields (see on the right), namely DESCRIPTION and SEARCHABLE_CONTENT .

Having removed these fields from the request and rewriting it (see below), we managed to win several times in speed! As a result, the query instead of 20+ seconds on the local machine began to return the result after 1.5 seconds. However, it was too early to rejoice. since this request in the database was generated in the system bitrix file /bitrix/modules/iblock/classes/mysql/iblocksection.php. Unfortunately, I couldn’t find anything better than to fix it, although I am aware that in the very first update of the Bitrix kernel my editing may be lost. But at that time I was already struggling with this site for 3 days in a row and time passed by Sunday evening. And left this farm ...

However, it was too early to rejoice. When I uploaded the edits to the combat site, the result became better, but far from what I wanted (300 sec -> 100+ sec). After spending some time in bewilderment and swearing to myself, I decided to try to work out the assumption about the difference in mysql versions on the battle server and on the local machine. You might think that the issue is in the settings of the database itself, however, I cut off this item at the beginning of the way, when I set the same settings as on the combat vehicle. It only remained to upgrade from version 5.5. * To 5.6.35on the server (the latest available version of mysql on the machine). He had high hopes for this step, since ideas and assumptions about what could have been the case were exhausted. Yes, and it was a pity the weekend I spent on finding and solving the problem. Along with the weekend, my nerves also ended ... But how glad I was when after the update everything worked as it should, the numbers in the request logs were identical to those on the local machine, and the site just started to fly. There was no limit of joy, it was enough for two: me and my girlfriend, who realized that I would spend the rest of the weekend with her, and not behind the monitor screen.

What methods did for myself:

I thank everyone who took their time. It will be great if you leave constructive criticism or advice.

PS At the end of the second day of torment, I recalled the story “The Old Man and the Sea”, and thought that my attempts would not be rewarded either, but nothing happened.

PPS In order to increase the rate of data deletion from the b_sale_fuser table and other related tables , it was possible to remove indexes from them, and after updating to add them again.

On Friday, I got a Bitrix website with a catalog of auto parts and a database of 3.2 GB in size. Problem: the site either didn’t give the page at all, or during the waiting time it was possible to forget why I visited this site. What attempts I made and what I managed to achieve in the end I will tell under the cut.

So, in more detail, the parameters of the old hosting:

- VDS

- 8 GB of RAM (on a new hosting 4GB);

- 40GB SSD;

- bitrix environment 5. * (on the new hosting, clean version 7.0);

- PHP 5.6 (on new hosting PHP 7.0);

- MySql 5.5. *;

- Bitrix file caching

- agents run on hits.

Usually I take the following steps to optimize the site for Bitrix (VDS), but this time it did not give tangible results:

- transfer agent execution from hits to crowns ( more );

- memcached setup ( more );

- This time added a transfer to a new hosting with updated components (php, mysql, etc.)

When I decided to deploy the local version I was very surprised by the 3.2 GB site database , especially the b_sale_fuser table (2.4 GB), which is responsible for the visitors' baskets. As it turned out, it contained data from 2014. When I looked inside this table, I noticed several features:

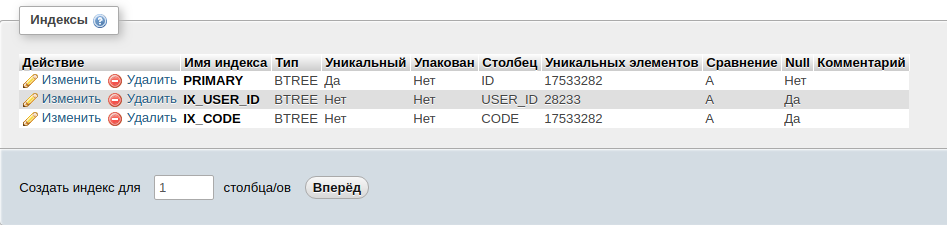

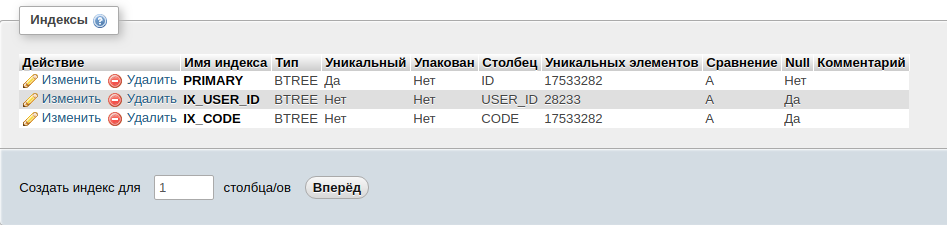

- 80% of the data were only for the last month (total 17+ million records);

- Records were created with a frequency of several seconds. The standard method for cleaning abandoned baskets simply could not cope;

- there are three indexes in the table, which means that when the data in it changes, the indexes will be updated, which entails additional costs for resources;

At this stage, I made the assumption that the problem lies in the use of the CsaleBasket :: GetBasketUserID (bSkipFUserInit) method without an additional parameter. The nuance is that the bSkipFUserInit parameter is responsible for creating a record in the table, even if the client has not put anything in the basket yet. My guess was confirmed when in one of the files result_modifier.phpFound a call to the ill-fated method without the required parameter. Correcting this point and clearing the table of irrelevant data (around 3 hours, because the muscle constantly fell off, and the data needed to be removed from related tables. All this was done using standard bitrix methods, which I regretted later. In more detail After cleaning, the number of records decreased from 19+ million to 400+ thousand, which had a beneficial effect on the local version, but it still didn’t work. The page began to appear in 20-30 seconds, and earlier in a few minutes.

Then it was decided to search for long-term queries Since we use bitrixenv, the order of the commands for editing the muscle config looks like this:

nano /etc/mysql/bx/bvxat.cnf

# добавляем строки в файл

log_slow_queries = /var/log/mysql/mysql-slow.log

long_query_time = 1

service mysqld restart

After a lapse, two requests were found that ran for 300+ seconds (see below). One of them showed 4 random goods from the entire catalog. At that time, I decided to comment on the call of this component until better times. But the second one cannot be ruled out just like that, since he is responsible for the formation of the main menu (see below).

Sql query

Tcp port: 3306 Unix socket: /var/lib/mysqld/mysqld.sock

Time Id Command Argument

# Time: 180318 18:30:07

# User@Host: bitrix[bitrix] @ localhost []

# Thread_id: 96 Schema: testdb QC_hit: No

# Query_time: 301.414008 Lock_time: 0.000324 Rows_sent: 13 Rows_examined: 260456

use testdb;

SET timestamp=1521387007;

SELECT DISTINCT

BS.*,

B.LIST_PAGE_URL,

B.SECTION_PAGE_URL,

B.IBLOCK_TYPE_ID,

B.CODE as IBLOCK_CODE,

B.XML_ID as IBLOCK_EXTERNAL_ID,

BS.XML_ID as EXTERNAL_ID,

DATE_FORMAT(BS.TIMESTAMP_X, '%d.%m.%Y %H:%i:%s') as TIMESTAMP_X,

DATE_FORMAT(BS.DATE_CREATE, '%d.%m.%Y %H:%i:%s') as DATE_CREATE

,COUNT(DISTINCT BE.ID) as ELEMENT_CNT

FROM b_iblock_section BS

INNER JOIN b_iblock B ON BS.IBLOCK_ID = B.ID

INNER JOIN b_iblock_section BSTEMP ON BSTEMP.IBLOCK_ID = BS.IBLOCK_ID

LEFT JOIN b_iblock_section_element BSE ON BSE.IBLOCK_SECTION_ID=BSTEMP.ID

LEFT JOIN b_iblock_element BE ON (BSE.IBLOCK_ELEMENT_ID=BE.ID

AND ((BE.WF_STATUS_ID=1 AND BE.WF_PARENT_ELEMENT_ID IS NULL )

AND BE.IBLOCK_ID = BS.IBLOCK_ID

)

AND BE.ACTIVE='Y'

AND (BE.ACTIVE_TO >= now() OR BE.ACTIVE_TO IS NULL)

AND (BE.ACTIVE_FROM <= now() OR BE.ACTIVE_FROM IS NULL))

WHERE 1=1

AND BSTEMP.IBLOCK_ID = BS.IBLOCK_ID

AND BSTEMP.LEFT_MARGIN >= BS.LEFT_MARGIN

AND BSTEMP.RIGHT_MARGIN <= BS.RIGHT_MARGIN

AND BSTEMP.GLOBAL_ACTIVE = 'Y'

AND ((((BS.ACTIVE='Y'))))

AND ((((BS.GLOBAL_ACTIVE='Y'))))

AND ((((BS.IBLOCK_ID = '9'))))

AND ((((BS.DEPTH_LEVEL <= '1'))))

AND ((((B.ID = '9'))))

AND ((

B.ID IN (

SELECT IBLOCK_ID

FROM b_iblock_group IBG

WHERE IBG.GROUP_ID IN (2)

AND IBG.PERMISSION >= 'R'

AND (IBG.PERMISSION='X' OR B.ACTIVE='Y')

)

OR (B.RIGHTS_MODE = 'E' AND EXISTS (

SELECT SR.SECTION_ID

FROM b_iblock_section_right SR

INNER JOIN b_iblock_right IBR ON IBR.ID = SR.RIGHT_ID

INNER JOIN b_user_access UA ON UA.ACCESS_CODE = IBR.GROUP_CODE AND UA.USER_ID = 0

WHERE SR.SECTION_ID = BS.ID

AND IBR.OP_SREAD = 'Y'

))

))

GROUP BY BS.ID, B.ID

ORDER BY BS.LEFT_MARGIN asc;

At first, I was not embarrassed that on the combat server, this request was completed in 300+ seconds, and on the local machine in 20+, and I thought that the reason for this was insufficient load on the site. Those. on the combat site per minute, the visit was 20 people per minute, and on the local copy I was the only one to make requests. I decided to use the Jmeter utility (see below).

After running this test in 20 queries, I decided to open the site in a browser and immediately received the following error: Incorrect key file for table / tmp / *. As it turned out, for each sql request, the muscle created temporary tables on disk in a temporary folder, and there was not enough space. Because not strong in principle of work MySql went with the question to the all-knowing Google (and you had at least one day without resorting to search ?!), which explained the following:

if the sample contains fields likeTEXT / BLOB , the database will create temporary tables on the disk

And the great assistant as always turned out to be right! In the b_iblock_section table, there were a couple of such fields (see on the right), namely DESCRIPTION and SEARCHABLE_CONTENT .

Having removed these fields from the request and rewriting it (see below), we managed to win several times in speed! As a result, the query instead of 20+ seconds on the local machine began to return the result after 1.5 seconds. However, it was too early to rejoice. since this request in the database was generated in the system bitrix file /bitrix/modules/iblock/classes/mysql/iblocksection.php. Unfortunately, I couldn’t find anything better than to fix it, although I am aware that in the very first update of the Bitrix kernel my editing may be lost. But at that time I was already struggling with this site for 3 days in a row and time passed by Sunday evening. And left this farm ...

It was

BS.*,

...

Has become

BS.ID,

BS.TIMESTAMP_X,

BS.MODIFIED_BY,

BS.DATE_CREATE,

BS.CREATED_BY,

BS.IBLOCK_ID,

BS.IBLOCK_SECTION_ID,

BS.ACTIVE,

BS.GLOBAL_ACTIVE,

BS.SORT,

BS.NAME,

BS.PICTURE,

BS.LEFT_MARGIN,

BS.RIGHT_MARGIN,

BS.DEPTH_LEVEL,

BS.CODE,

BS.XML_ID,

BS.TMP_ID,

BS.DETAIL_PICTURE,

BS.SOCNET_GROUP_ID,

...

However, it was too early to rejoice. When I uploaded the edits to the combat site, the result became better, but far from what I wanted (300 sec -> 100+ sec). After spending some time in bewilderment and swearing to myself, I decided to try to work out the assumption about the difference in mysql versions on the battle server and on the local machine. You might think that the issue is in the settings of the database itself, however, I cut off this item at the beginning of the way, when I set the same settings as on the combat vehicle. It only remained to upgrade from version 5.5. * To 5.6.35on the server (the latest available version of mysql on the machine). He had high hopes for this step, since ideas and assumptions about what could have been the case were exhausted. Yes, and it was a pity the weekend I spent on finding and solving the problem. Along with the weekend, my nerves also ended ... But how glad I was when after the update everything worked as it should, the numbers in the request logs were identical to those on the local machine, and the site just started to fly. There was no limit of joy, it was enough for two: me and my girlfriend, who realized that I would spend the rest of the weekend with her, and not behind the monitor screen.

What methods did for myself:

- testing and identifying problems on the local computer is logical to carry out in conditions close to combat. Unfortunately, I thought of this several hours later, updating the page of the site with single requests;

- sometimes it’s easier to update the components used. For example, it helped in my multi-day quest, though it's a pity that I only thought of it at the end of the epic.

- After some time, I think that it would be better to analyze the CMS system files to create several sql queries in the database that would clear the ill-fated table b_sale_fuser and the data associated with it. And then I sat and waited until system records deleted pieces per pass ...

- It’s better to spend time learning the tools you work with. In my case, I’m going to read a book on MySql so that new problems are not an inexplicable focus for me.

I thank everyone who took their time. It will be great if you leave constructive criticism or advice.

PS At the end of the second day of torment, I recalled the story “The Old Man and the Sea”, and thought that my attempts would not be rewarded either, but nothing happened.

PPS In order to increase the rate of data deletion from the b_sale_fuser table and other related tables , it was possible to remove indexes from them, and after updating to add them again.