What really happened with Vista: insider retrospective


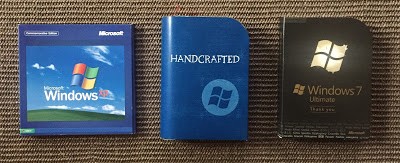

By tradition, the Windows development team signs a poster (in this case, an image of the DVD) to mark the release of a new version of Windows. By the time the release party ends, it carries hundreds or thousands of signatures.

“Experience is what you get only after you need it.” - Steven Wright

I enjoyed Terry Crowley’s informative blog post (“What really happened with Vista”). Terry worked in the Office group and did a fantastic job of describing the intricate intrigues surrounding Windows Vista and the related but abandoned Longhorn project - from the perspective of an outside observer.

He correctly noted many of the problems that plagued the project, and I do not want to repeat them here. I just thought it would be fair to give an insider's view of the same events. I do not expect my account to be as eloquent or exhaustive as Terry’s, but I hope to shed some light on what went wrong. Ten years have passed since the first release of Windows Vista, but these lessons seem more relevant now than ever.

Windows is a monster. It takes thousands of developers, testers, program managers, security professionals, UI designers, architects, and so on. And that is not counting the HR staff, recruiters, marketing people, salespeople, lawyers, and, of course, the many managers, directors, and vice presidents in each of these areas. This entire group is surrounded by many thousands of employees at our partners (both inside and outside Microsoft), who supplied everything from hardware and device drivers to applications running on the platform.

Aerial photograph of the Windows development team on a soccer field at Microsoft

At that time, Windows was organizationally divided into three groups: Core, Server, and Client. The Core group supplied the foundation: all the key components of the operating system common to every version of Windows (the kernel itself, storage, security, the network subsystem, device drivers, the installation and update model, Win32, etc.). The Server group, in turn, focused on technologies for the server market (terminal services, clustering and high availability, enterprise management tools, etc.), and the Client group was responsible for technologies related to the desktop and consumer versions (web browser, media player, graphics, shell, etc.).

Of course, there were many reorganizations, but this basic structure always remained, even as the popularity of Windows grew and the groups themselves increased in size. It is also fair to say that, from a cultural and organizational point of view, the Core group was closer to the Server group than to the Client group - at least that was the case before Vista.

By the time I joined Microsoft in early 1998, Windows meant Windows NT - architecturally, organizationally, and as a product. The Windows 95 code base had largely been abandoned, and Windows NT was being used for every kind of Windows system - from laptops to clustered servers. Two years later, the Windows 95/98 code base would be resurrected for one last release - the much-discussed Windows ME - but that project was run by a small group, while the vast majority worked on the NT code base. I was lucky to spend more than ten years in the belly of the beast. I started in the middle of Windows 2000 development and stayed until the completion of Windows 7.

I spent the first seven years in the groups responsible for storage, file systems, uptime and clustering, file-level network protocols, distributed file systems, and related technologies. I later spent a year or two on the Microsoft security management team. Its scope covered everything from Windows security technologies to antivirus products, security marketing, and emergency response, such as the release of security updates. This was toward the end of the Vista life cycle, when viruses and worms were bringing Windows to its knees and Microsoft's reputation as a developer of secure software was taking a massive public beating.

For my last three or four years, during the run-up to the release of Windows 7, I managed all development in the Windows Core group. That meant owning almost all the technologies that work “under the hood” and are used by both the Server and Client groups. After the release of Vista, the entire Windows team was organized by discipline into triads (Dev, Test, PM) at every level of the organization, so I had two partners in crime. I led the development teams, while they led the test and program management teams, respectively.

In the past, the Windows team had often taken on ambitious, massive projects that were abandoned or redesigned a few years later. The previous example was the ambitious Cairo project, which was eventually gutted: only some of its parts were salvaged and shipped in Windows 2000.

In my humble opinion, the biggest problem with Windows releases was how long each one took. On average, a release took three years from the start of development to completion, but only 6–9 months of that time went into developing “new” code. The rest was spent on integration, testing, and alpha and beta stages - each lasting several months.

Some projects needed more than six months of core development, so they were built in parallel and merged into the main code base upon completion. This meant that the main branch was always in limbo as large pieces of functionality were added to it or changed. During the development of Windows 7, much tighter controls were put in place to guarantee a continuously healthy and functioning code base, but earlier releases spent months at a time in an unhealthy, unstable state.

The chaotic nature of development often led teams to play dangerous games with the schedule. They convinced themselves and others that their code was in better shape than other projects and that they could “polish” the remaining pieces in time, so that they would be allowed to check in their component in a half-finished state.

The three-year release cycle meant that we rarely knew what the competitive landscape and external ecosystem would look like at the time of release. If a feature missed the release, it was either dropped completely (since it hardly made sense six years after development began) or, worse, it was “sent to Siberia”: work on the component continued, largely ignored by the rest of the organization and doomed to failure or irrelevance, because the group or its management simply could not bring themselves to cancel it. I was personally responsible for several such projects. Hindsight is 20/20.

Because each team was busy pushing its own plan and feature set into the release, it often skimped on integration with other components, the user interface, end-to-end testing, and unpleasant, tedious work such as upgrades, leaving these hard things for later. In turn, this meant that some groups quickly became a bottleneck for the whole effort, and at the last minute everyone rushed to help them finish the UI work or test the upgrade mechanism.

At any given time, there were several major releases in development, as well as numerous side projects. Different groups were responsible for code bases in different states of readiness, which eventually produced a situation where “the rich get richer and the poor get poorer”. Groups that fell behind, for one reason or another, usually stayed behind.

As a project neared completion, program managers began drawing up requirements for the next release, and the “healthy” (rich) groups began writing new code, while most of the organization (the poor) was still buried in the current release. In particular, test teams were rarely freed up before the release shipped, so at the start of a project new code was not thoroughly tested. “Unhealthy” groups always lagged, putting the finishing touches on the current release and falling further and further behind. These were often the groups with the lowest morale and the most exhausted developers. This meant that the new hires in these groups [who replaced the burned-out people who had left. Translator's note] inherited fragile code that they had not written and therefore did not understand.

For almost the entire Vista / Longhorn development period, I was responsible for storage and file systems. This meant that I was involved in the WinFS project, although it was led mainly by people from the SQL database group, a sister organization to the Windows team.

Bill Gates was personally involved in the project at a very detailed level; he was even jokingly called “the WinFS PM”. Hundreds, if not thousands, of person-years were spent developing an idea whose time had simply passed: what if we combined the query capabilities of a database with the file system's ability to stream and store unstructured data, and exposed the result as a programming paradigm for creating unique, rich new applications?

In hindsight, it is now obvious that Google skillfully solved this problem by providing transparent, fast indexing of unstructured data. And they did it for the entire Internet, not just for your local drive. And you did not even need to rewrite your applications to take advantage of it. Even if the WinFS project had succeeded, it would have taken years to rewrite applications so that they could benefit from it.

By the time Longhorn was canceled and Vista was hastily assembled from its embers, WinFS had already been thrown out of the OS release. The SQL group continued to work on it as a separate project for several years. By then, Windows had a built-in indexing engine and integrated search - implemented entirely on the side, with no changes required in applications. So the need for WinFS became even less clear, but the project carried on as before.

The massive architectural changes related to security in Longhorn continued as part of the Windows Vista project after the Longhorn “reboot”. We learned a lot about security in the rapidly expanding universe of the Internet and wanted to apply this knowledge at the architectural level of the OS to improve the overall security of all users.

We had no choice. Windows XP had shown that we were victims of our own success. Designed for convenience, the system clearly fell short of security requirements once it met the reality of the Internet era. Solving these security problems required a parallel project. Windows XP Service Pack 2 (despite its name) was a huge undertaking that pulled thousands of people away from Longhorn.

In our next major OS release, we definitely could not take a step backward on security. So Vista became much more secure than any OS Microsoft had ever released, but in the process it broke application and device driver compatibility on a scale the ecosystem had never seen. Users hated it because their applications did not work, and our partners hated it because they felt they had not been given enough time to update and certify their drivers and applications, since Vista was being rushed out to compete with a resurgent Apple.

In many cases, these security changes required deep architectural changes in third-party applications. And most ecosystem vendors were not ready to invest that much in changing their legacy programs. Some of them had taken an unconventional approach, modifying data structures and even instructions in the kernel to implement their functionality, bypassing the standard APIs and multiprocessor locking, which often caused chaos in the system. At one point, about 70% of all Windows “blue screens” were caused by these third-party drivers and their vendors' reluctance to use the standard APIs. Antivirus developers used this approach especially often.

As the head of security at Microsoft, I personally spent several years explaining to antivirus vendors why we would no longer allow them to “patch” kernel instructions and data structures in memory, why this posed a security risk, why they had to use the standard APIs from then on, and why we would no longer support their legacy programs with deep hooks into the Windows kernel - the very methods that hackers use to attack users' systems. Our “friends”, the antivirus vendors, turned around and sued us, accusing us of depriving them of their livelihood and abusing our monopoly position! With friends like that, who needs enemies? They just wanted their old solutions to continue to work.

Over those years, the computer industry went through many dramatic changes: the arrival of the Internet, the spread of mobile phones, the emergence of cloud computing, the creation of new business models based on advertising, the viral growth of social media, the inexorable march of Moore’s law, and the popularity of free software. These are just some of the factors that attacked Windows from all sides.

The response was quite logical for a wildly successful platform: stubbornly stay the course and gradually improve the existing system - the innovator's dilemma in a nutshell. The more code we added, the more complex the system became, the more the staff grew, the larger the ecosystem became, and the harder it was to close the growing gap with competitors.

As if competitive pressure were not enough, at one point entire armies of our engineers and program managers spent countless hours, days, weeks, and months with Department of Justice representatives and corporate lawyers, documenting existing APIs from previous releases in order to comply with government antitrust rulings.

The harsh reality is that, at that point in the life cycle, it took about three years to ship a major release of Windows, and that was too long for a fast-changing market. WinFS, security, and managed code are just some of the massive projects that were on the Longhorn agenda. And there were hundreds of smaller bets.

When you have an organization of many thousands of employees and literally billions of users, absolutely everything has to be accounted for. The same OS release that was supposed to run on tablets and smartphones also had to work on your laptop, on servers in the data center, and in embedded devices such as network-attached storage and “Powered by Windows” boxes - not to mention running on top of a hypervisor (Hyper-V) in the cloud. These requirements pulled the team in opposite directions as we tried to make progress in all market segments at the same time.

It is not possible to view Longhorn and Vista in isolation. They make sense only alongside the versions immediately before and after them - Windows 2000 and XP on the one hand, Windows Server 2008 and Windows 7 on the other - and with a full understanding, in retrospect, of the broader industry context.

Windows was a victim of its own success. It had conquered too many markets, and the business in each of these segments now exerted its own influence on the design of the operating system, pulling it in different and often incompatible directions.

An architecture that had been exceptionally successful in the 90s simply bogged down a decade later, because the world around it was changing too quickly for the organization to keep up. To be clear, we saw all these trends and tried hard to respond to them, but, if you will allow me to mix metaphors, it is difficult to turn an airliner around when you are in the second year of pregnancy with your three-year release.

In short, what we knew three or four years earlier, when planning an OS release, had become ridiculously outdated, and sometimes plainly wrong, by the time the product finally shipped. The best thing we could have done was to switch to gradual, painless delivery of new cloud services to an ever-simpler device. Instead, we kept adding features to a monolithic client system that required many months of testing before each release, slowing us down just when we needed to speed up. And, of course, we did not remove the old functionality that was kept for compatibility with applications from older versions of Windows.

Now imagine supporting the same OS for ten years or more for billions of users, millions of companies, thousands of partners, hundreds of use cases, and dozens of form factors - and you will begin to get a sense of the support and update nightmare.

Looking back, Linux has proven more successful in this regard. The open source community and its approach to development were undoubtedly part of the solution. The modular, pluggable Unix / Linux architecture is also a significant architectural advantage here.

Sooner or later, every organization starts shipping its org chart as a product, and Windows was no exception. Open source does not have this problem.

The Windows “war room”, later renamed the “bridge” (ship room)

Add to this, if you will, the internal organizational dynamics and personalities. Each of us had our own favorite features, and partners from our own ecosystems pushed us to support new standards, to help them get certified on the platform, and to add APIs for their specific scenarios. Everyone had ambitions and a desire to prove that our technology, our idea, would win ... if only we could get it into the next release of Windows and instantly deliver it to millions of users. We believed in it strongly enough to wage battles in daily meetings in our war rooms. Everyone also had a manager who longed to grow and expand his sphere of influence - or his headcount, as an intermediate step along the way.

Development and test teams often clashed. The former pushed to get their code checked in, while the latter were rewarded for finding increasingly complex and esoteric test cases that bore no real resemblance to customer environments. The internal dynamics were complex, to say the least. As if that were not enough, at least once a year the company went through a large-scale reorganization - and then had to deal with new organizational dynamics.

By the way, none of this should be taken as an apology or an excuse. That is not the point.

Did we make mistakes? Yes, plenty.

Did we make deliberately bad decisions? No, I cannot recall a single one.

Was it an incredibly complex product with an incredibly large ecosystem (the largest in the world at that time)? Yes, it was.

Could we have done better? Yes, absolutely.

Would we make different decisions today? Yes. Hindsight is 20/20. We did not know then what we know now.

Should we look back with disappointment or regret? No, I prefer to take away the lessons learned. I am sure that none of us made the same mistakes in later projects. We learned from that experience - which means that next time we will make completely different mistakes. To err is human.