Creating a REST API with Falcon

Translation of "Create a scalable REST API with Falcon and RHSCL" by Shane Boulden.

In this article, we will create a REST API based on the Python Falcon framework, test the performance and try to scale it to cope with the loads.

To implement and test our API, we need the following components:

Falcon is a minimalistic web framework for building a web API, according to the Falcon site it is up to 10 times faster than Flask. Falcon is fast!

I assume that you already have PostgreSQL installed (where would we be without it). We need to create an orgdb database and an orguser user .

This user needs to register password access to the newly created database in the PostgreSQL settings in the pg_hba.conf file and give all rights.

Database configuration completed. Let's move on to creating our Falcon application.

For our application we will use Python3.5.

Create virtualenv and install the necessary libraries:

Create the file 'app.py':

Now we describe the models in the file 'models.py':

We created two helper methods for setting up the application 'init_tables' and 'generate_users'. Run them to initialize the application:

If you go to the orgdb database , then in the orguser table you will see the created users.

Now you can test the API:

Let's evaluate the performance of our API using Taurus . If possible, deploy Taurus on a separate machine.

Install Taurus in our virtual environment:

Now we can create a script for our test. Create the bzt-config.yml file with the following contents (do not forget to specify the correct IP address):

This test will simulate web traffic from 100 users, with an increase in their number within a minute, and hold the load for 2 minutes 30 seconds.

Run the API with one worker:

Now we can run Taurus. At the first start, it downloads the necessary dependencies:

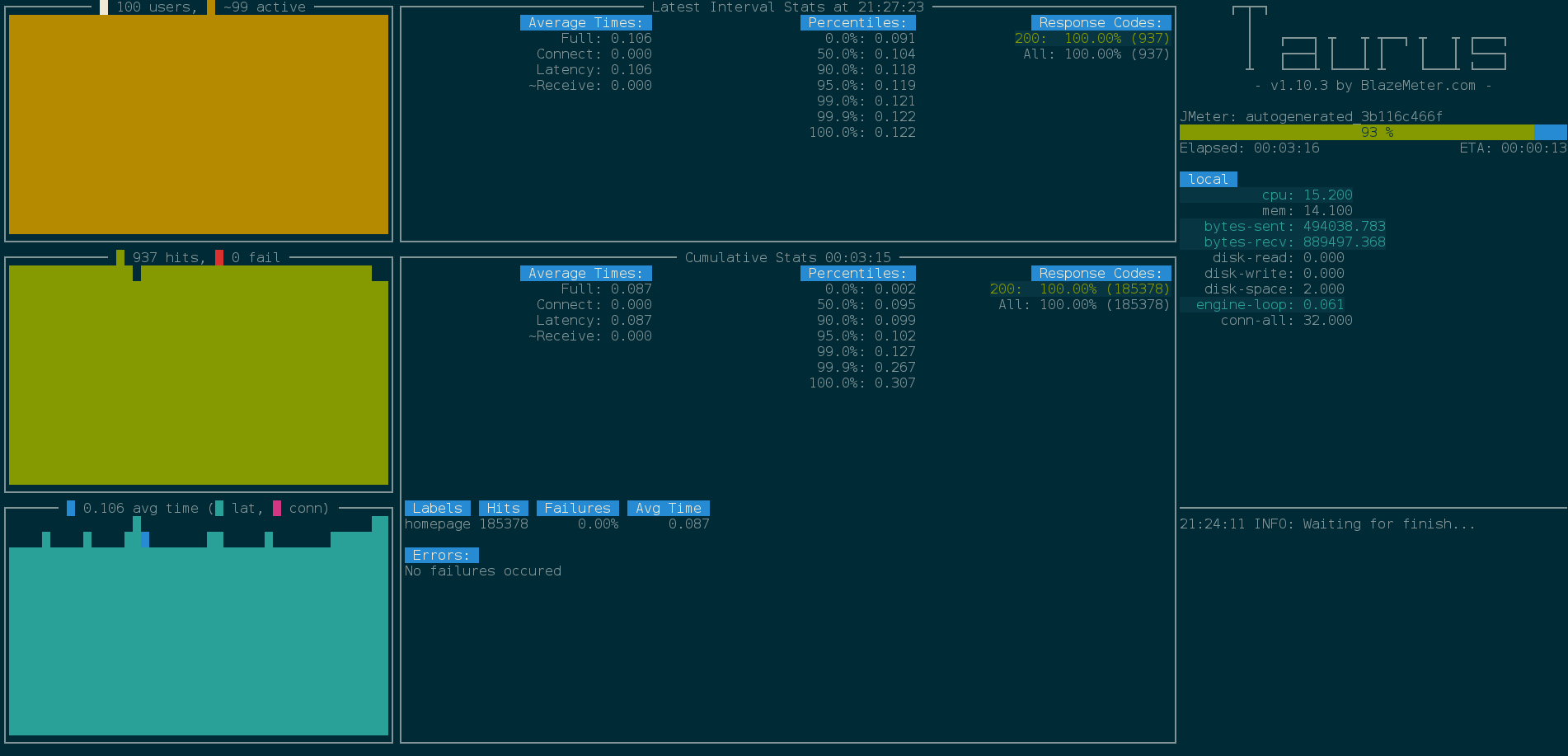

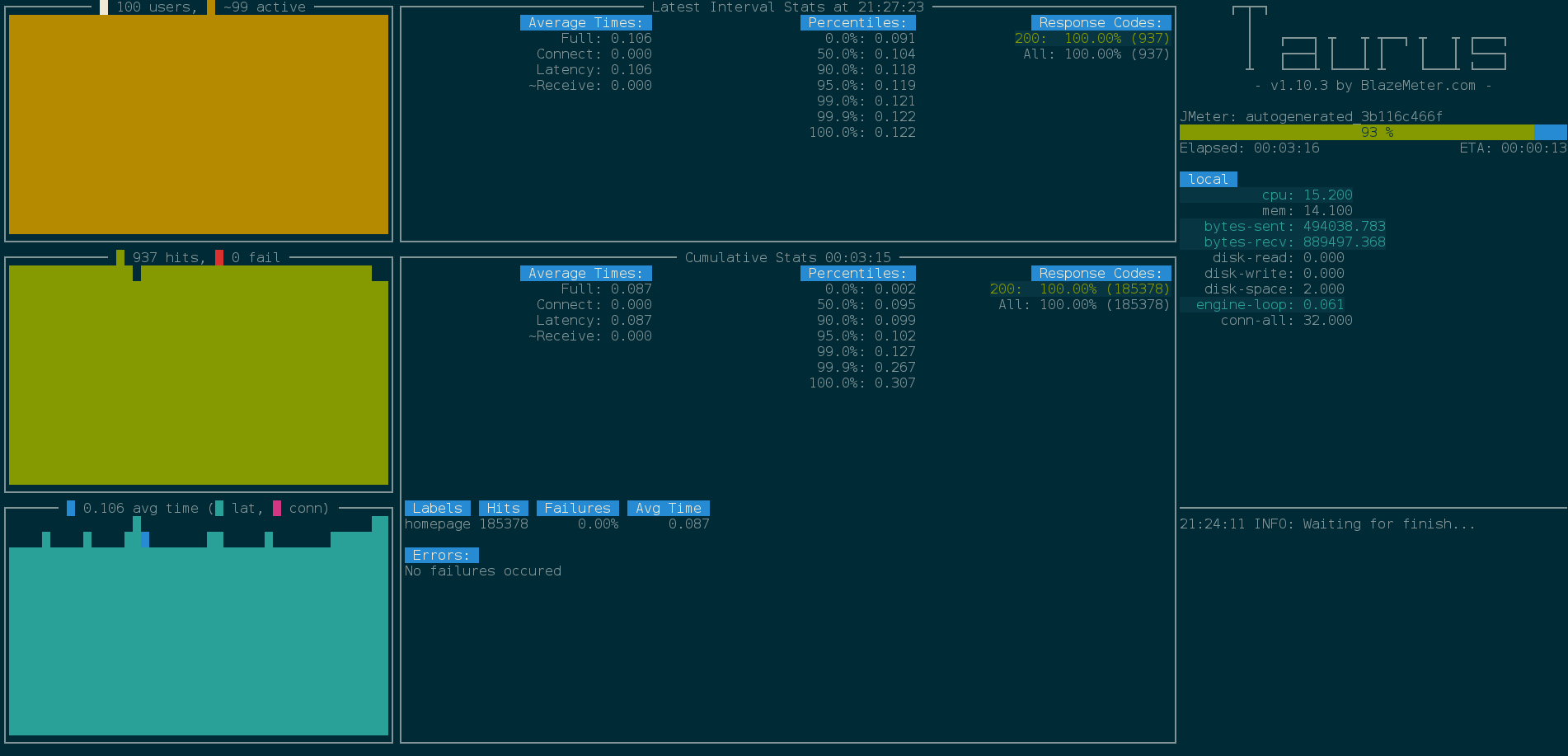

After you install the dependencies displayed our console with the progress of the test execution:

option -report we use to download the results in BlazeMeter and generate web report.

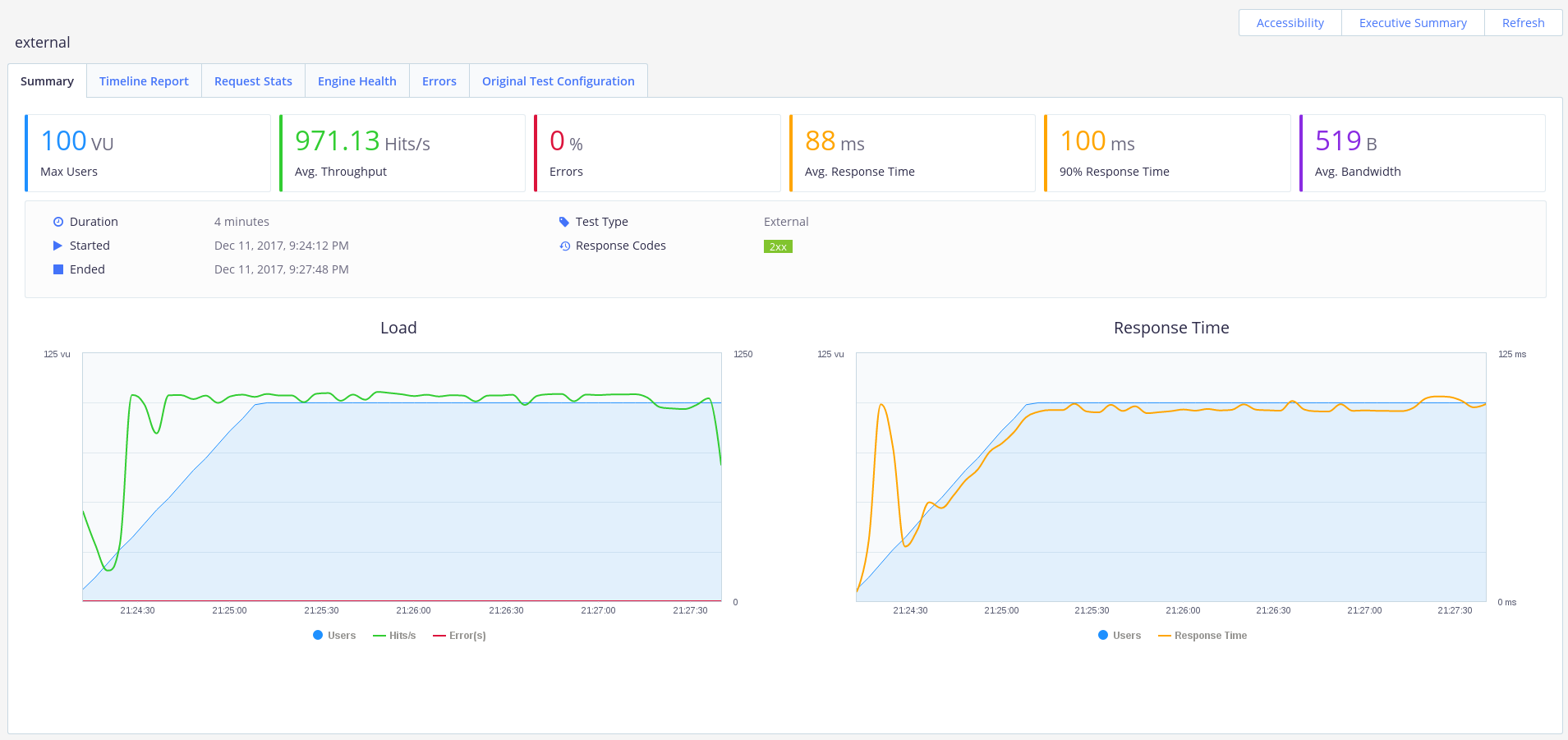

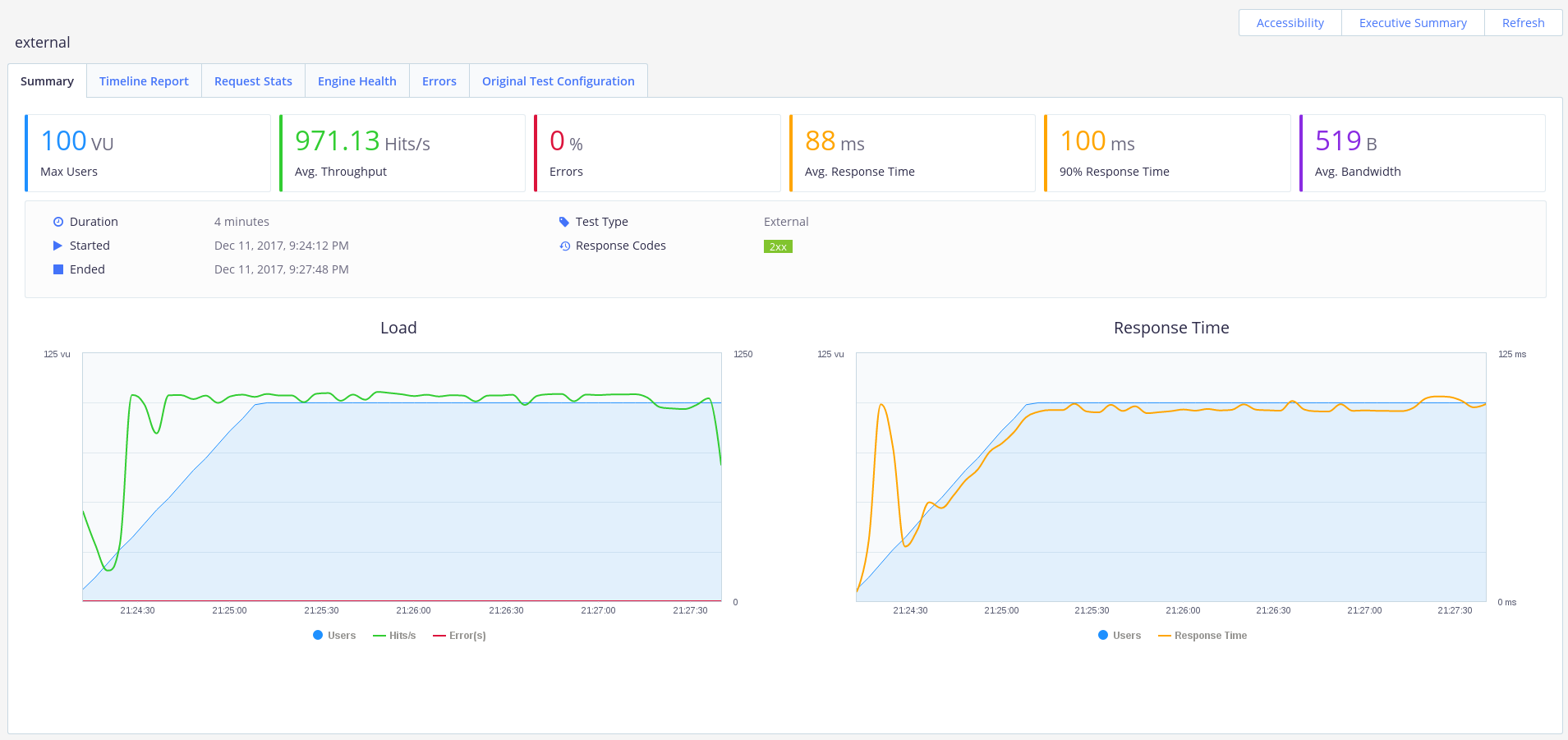

Our API does a great job with 100 users. We reached a throughput of ~ 1000 requests / second, with no errors and with an average response time of 0.1s.

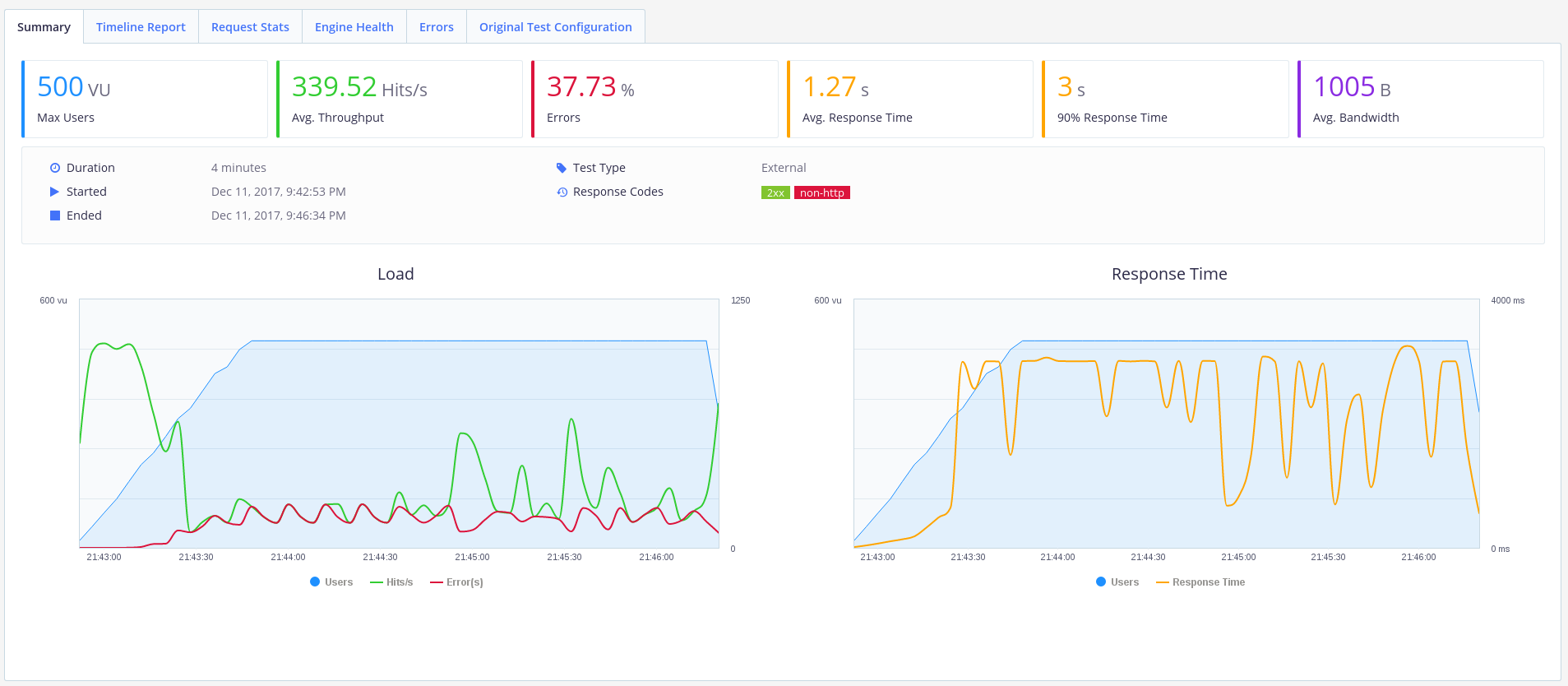

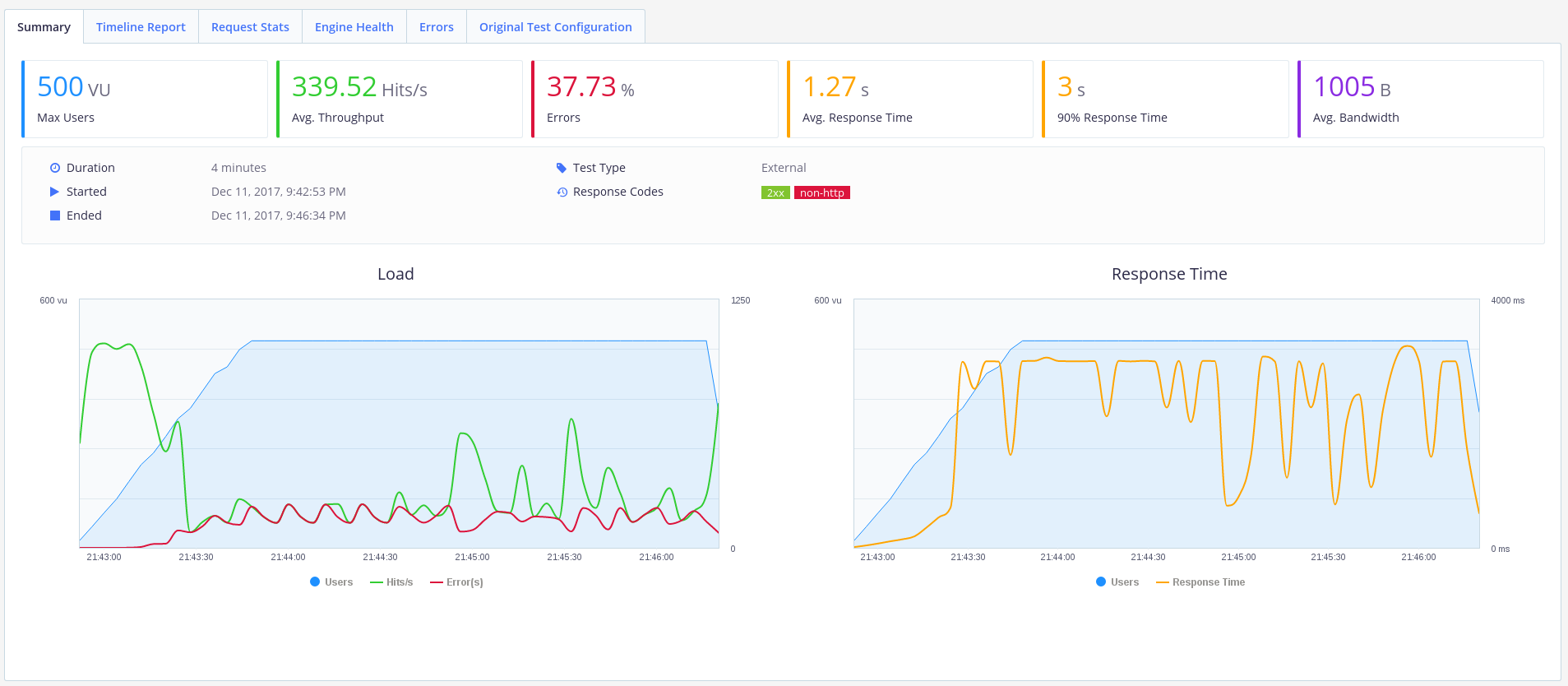

Well, what if there are 500 users? Change the concurrency parameter to 500 in our bzt-config.yml file and run Taurus again.

Hm. It seems that our lonely worker did not cope with the load. 40% of errors are not the case.

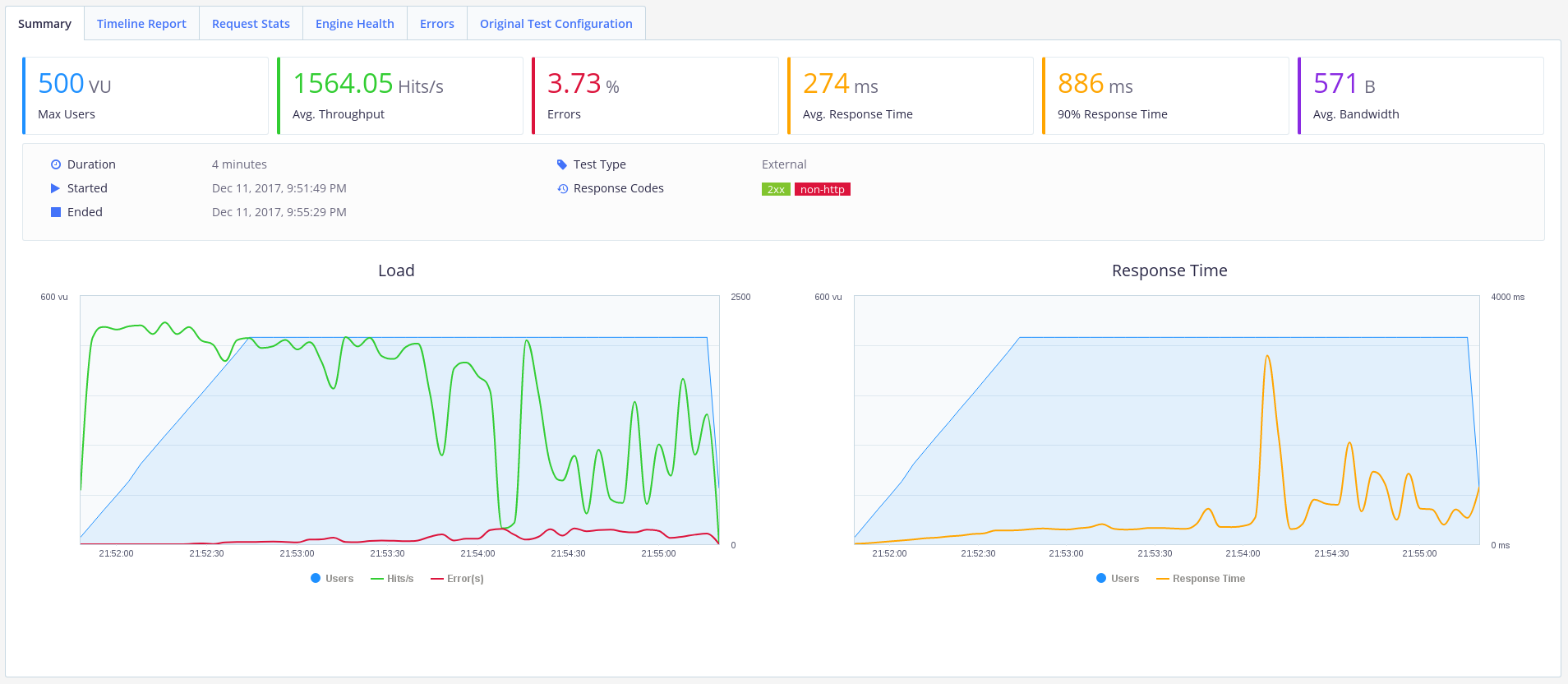

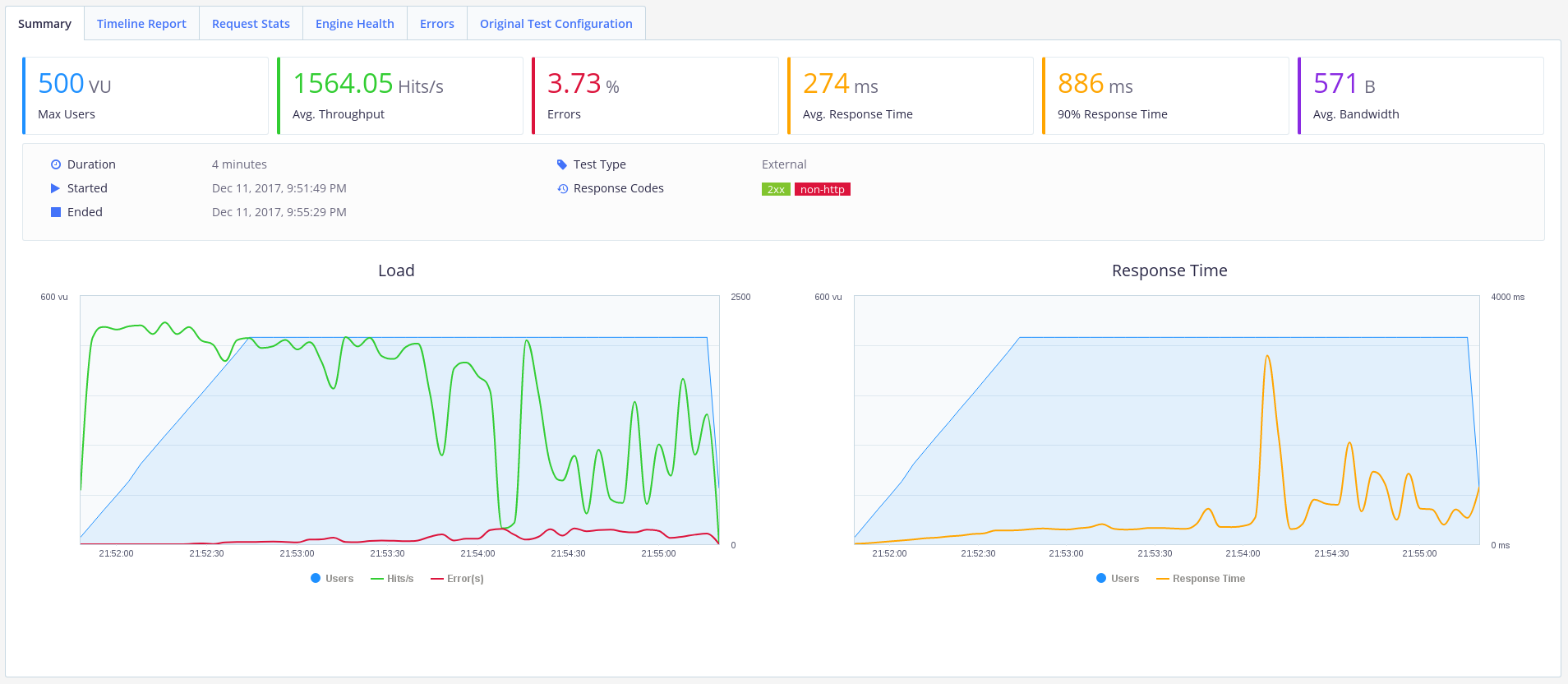

Let's try to increase the number of workers.

It looks better. There are still errors, but the throughput increased to ~ 1500 requests / second, and the average response time decreased to ~ 270 ms. Such an API can already be used.

You can configure PostgreSQL for hardware using PgTune .

That's all for today. Thanks for reading!

In this article, we will create a REST API with the Python Falcon framework, test its performance, and try to scale it to cope with the load.

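To implement and test our API, we need the following components: the Falcon framework, the peewee ORM, the gunicorn WSGI server, a PostgreSQL database, and Taurus for load testing.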

Why Falcon?

Falcon is a minimalistic web framework for building web APIs. According to the Falcon site, it is up to 10 times faster than Flask. Falcon is fast!

Start

I assume that you already have PostgreSQL installed (where would we be without it). We need to create a database named orgdb and a user named orguser.

Give this user password access to the new database in the pg_hba.conf file of the PostgreSQL settings, and grant it all privileges on that database.
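The exact commands are not shown here. As a rough sketch, the Python helper below only builds the SQL statements and the pg_hba.conf entry that this step typically involves. The helper name `postgres_setup` is hypothetical, the password and host are taken from the connection settings in models.py, and the `md5` method and `/32` mask are assumptions that may differ on your installation.

```python
def postgres_setup(db="orgdb", user="orguser", password="123456",
                   host="127.0.0.1"):
    """Return (sql_statements, pg_hba_line) for a password-authenticated user.

    Hypothetical helper: it only builds strings, it does not touch PostgreSQL.
    """
    sql = [
        "CREATE DATABASE {};".format(db),
        "CREATE USER {} WITH PASSWORD '{}';".format(user, password),
        "GRANT ALL PRIVILEGES ON DATABASE {} TO {};".format(db, user),
    ]
    # pg_hba.conf columns: TYPE  DATABASE  USER  ADDRESS  METHOD
    # The md5 method and the /32 mask are assumptions.
    hba = "host    {}    {}    {}/32    md5".format(db, user, host)
    return sql, hba


if __name__ == "__main__":
    statements, hba_line = postgres_setup()
    print("\n".join(statements))  # run these in psql as a superuser
    print(hba_line)               # add to pg_hba.conf, then reload PostgreSQL
```

The statements are meant to be run in psql by a superuser; PostgreSQL has to reload its configuration before a change to pg_hba.conf takes effect.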

The database configuration is complete. Let's move on to creating our Falcon application.

API Creation

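For our application we will use Python 3.5.

Create a virtualenv and install the necessary libraries. peewee connects to PostgreSQL through a driver such as psycopg2, so install that too if it is not already present:

    $ virtualenv ~/falconenv
    $ source ~/falconenv/bin/activate
    $ pip install peewee falcon gunicorn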

Create the file 'app.py':

    import json

    import falcon
    from playhouse.shortcuts import model_to_dict

    from models import *  # OrgUser plus the init_tables/generate_users helpers


    class UserIdResource:
        def on_get(self, req, resp, user_id):
            """Return one user as JSON, or 404 if the id is unknown."""
            try:
                user = OrgUser.get(OrgUser.id == user_id)
                resp.body = json.dumps(model_to_dict(user))
            except OrgUser.DoesNotExist:
                resp.status = falcon.HTTP_404


    class UserResource:
        def on_get(self, req, resp):
            """Return all users as a JSON list, ordered by id."""
            users = OrgUser.select().order_by(OrgUser.id)
            resp.body = json.dumps([model_to_dict(u) for u in users])


    # Falcon 3.0 deprecates falcon.API and resp.body in favor of
    # falcon.App and resp.text.
    api = falcon.API()

    users = UserResource()
    users_id = UserIdResource()

    api.add_route('/users/', users)
    api.add_route('/users/{user_id}', users_id)
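The `api` object is a WSGI application, which is what lets a WSGI server such as gunicorn serve it. To illustrate that interface without Falcon or a database, here is a minimal standard-library sketch that mimics the two routes. The names `simple_app`, `call` and the in-memory `USERS` list are hypothetical stand-ins, not part of the application.

```python
import json
from wsgiref.util import setup_testing_defaults

# Stand-in data; the real application reads users from PostgreSQL.
USERS = [{"id": 1, "username": "e60202a4"}]


def simple_app(environ, start_response):
    """Bare WSGI callable that mimics GET /users/ and GET /users/{user_id}."""
    parts = [p for p in environ.get("PATH_INFO", "").split("/") if p]
    if parts == ["users"]:
        status, payload = "200 OK", USERS
    elif len(parts) == 2 and parts[0] == "users":
        match = [u for u in USERS if str(u["id"]) == parts[1]]
        status, payload = ("200 OK", match[0]) if match else ("404 Not Found", None)
    else:
        status, payload = "404 Not Found", None
    # As in app.py, a 404 carries no body.
    body = json.dumps(payload).encode("utf-8") if payload is not None else b""
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(app, path):
    """Invoke a WSGI app in-process and return (status, body_text)."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], body.decode("utf-8")


if __name__ == "__main__":
    print(call(simple_app, "/users/"))
    print(call(simple_app, "/users/99"))
```

Falcon does the routing and the request/response wrapping for us, so the resources in app.py only have to fill in `resp`.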

Now we describe the models in the file 'models.py':

    import uuid

    from peewee import *

    # Connection settings for the database created earlier
    psql_db = PostgresqlDatabase(
        'orgdb',
        user='orguser',
        password='123456',
        host='127.0.0.1')


    def init_tables():
        # safe=True skips tables that already exist
        psql_db.create_tables([OrgUser], safe=True)


    def generate_users(num_users):
        # Usernames are the first 8 characters of a random UUID
        for i in range(num_users):
            user_name = str(uuid.uuid4())[0:8]
            OrgUser(username=user_name).save()


    class BaseModel(Model):
        class Meta:
            database = psql_db


    class OrgUser(BaseModel):
        username = CharField(unique=True)
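To see what kind of usernames `generate_users` produces, the slicing step can be tried on its own with the standard library. This is only an illustration; the function name `short_username` is hypothetical.

```python
import string
import uuid


def short_username():
    """First 8 characters of a random UUID4, as generate_users does."""
    return str(uuid.uuid4())[0:8]


if __name__ == "__main__":
    name = short_username()
    # Eight hexadecimal characters, different on every call
    print(name, all(c in string.hexdigits for c in name))
```

Eight hexadecimal characters give about 4.3 billion possible values, so a collision among 20 users is very unlikely. If one did occur, the `unique=True` constraint would make the insert fail with an integrity error.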

We created two helper functions for setting up the application, 'init_tables' and 'generate_users'. Run them to initialize the application:

    $ python
    Python 3.5.1 (default, Sep 15 2016, 08:30:32)
    Type "help", "copyright", "credits" or "license" for more information.
    >>> from app import *
    >>> init_tables()
    >>> generate_users(20)

If you connect to the orgdb database, you will see the created users in the orguser table.

Now you can test the API:

    $ gunicorn app:api -b 0.0.0.0:8000
    [2017-12-11 23:19:40 +1100] [23493] [INFO] Starting gunicorn 19.7.1
    [2017-12-11 23:19:40 +1100] [23493] [INFO] Listening at: http://0.0.0.0:8000 (23493)
    [2017-12-11 23:19:40 +1100] [23493] [INFO] Using worker: sync
    [2017-12-11 23:19:40 +1100] [23496] [INFO] Booting worker with pid: 23496

    $ curl http://localhost:8000/users
    [{"username": "e60202a4", "id": 1}, {"username": "e780bdd4", "id": 2}, {"username": "cb29132d", "id": 3}, {"username": "4016c71b", "id": 4}, {"username": "e0d5deba", "id": 5}, {"username": "e835ae28", "id": 6}, {"username": "952ba94f", "id": 7}, {"username": "8b03499e", "id": 8}, {"username": "b72a0e55", "id": 9}, {"username": "ad782bb8", "id": 10}, {"username": "ec832c5f", "id": 11}, {"username": "f59f2dec", "id": 12}, {"username": "82d7149d", "id": 13}, {"username": "870f486d", "id": 14}, {"username": "6cdb6651", "id": 15}, {"username": "45a09079", "id": 16}, {"username": "612397f6", "id": 17}, {"username": "901c2ab6", "id": 18}, {"username": "59d86f87", "id": 19}, {"username": "1bbbae00", "id": 20}]
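The same check can be made from Python instead of curl. The sketch below uses only the standard library; the helper names `users_url` and `fetch_json` are hypothetical, and the base URL assumes the server started above is listening on localhost:8000.

```python
import json
from urllib.request import urlopen


def users_url(base="http://localhost:8000", user_id=None):
    """Build the URL of the user list, or of a single user if user_id is given."""
    if user_id is None:
        return "{}/users".format(base.rstrip("/"))
    return "{}/users/{}".format(base.rstrip("/"), user_id)


def fetch_json(url, timeout=3):
    """GET a URL and decode the JSON body; urlopen raises HTTPError on a 404."""
    with urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Requires the gunicorn server from the previous step to be running.
    for user in fetch_json(users_url()):
        print(user["id"], user["username"])
```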

Testing API

Let's evaluate the performance of our API using Taurus. If possible, deploy Taurus on a separate machine.

Install Taurus in our virtual environment:

    $ pip install bzt

Now we can create a script for our test. Create the bzt-config.yml file with the following contents (do not forget to specify the correct IP address):

    execution:
      concurrency: 100
      hold-for: 2m30s
      ramp-up: 1m
      scenario:
        requests:
          - url: http://ip-addr:8000/users/
            method: GET
            label: api
        timeout: 3s

This test simulates web traffic from 100 users: their number ramps up over one minute, and the load is then held for 2 minutes 30 seconds.
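The load profile described by this configuration can be sketched in a few lines of Python. This is only an illustration of the arithmetic, assuming the number of users grows linearly during the ramp-up; `parse_duration` and `users_at` are hypothetical helpers, not part of Taurus.

```python
import re

UNIT_SECONDS = {"h": 3600, "m": 60, "s": 1}


def parse_duration(text):
    """Convert strings such as '2m30s' or '1m' to seconds."""
    return sum(int(n) * UNIT_SECONDS[u] for n, u in re.findall(r"(\d+)([hms])", text))


def users_at(t, concurrency=100, ramp_up=60, hold_for=150):
    """Simulated users at second t, assuming a linear ramp-up."""
    if t < 0 or t > ramp_up + hold_for:
        return 0
    if t < ramp_up:
        return int(concurrency * t / ramp_up)
    return concurrency


if __name__ == "__main__":
    ramp = parse_duration("1m")
    hold = parse_duration("2m30s")
    print("total test time:", ramp + hold, "seconds")
    for second in (0, 30, 60, 120, 210):
        print(second, users_at(second, 100, ramp, hold))
```

With these settings the whole run takes 210 seconds, and the full 100 users are active from the 60-second mark onward.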

Run the API with one worker:

$ gunicorn --workers 1 app:api -b 0.0.0.0:8000Now we can run Taurus. At the first start, it downloads the necessary dependencies:

$ bzt bzt-config.yml -reportAfter you install the dependencies displayed our console with the progress of the test execution:

option -report we use to download the results in BlazeMeter and generate web report.

Our API does a great job with 100 users. We reached a throughput of ~ 1000 requests / second, with no errors and with an average response time of 0.1s.
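As a rough cross-check of these figures, Little's law from queueing theory says that the number of requests in flight equals throughput multiplied by average response time. The sketch below applies it to the rounded numbers reported for this run; it assumes each simulated user sends its next request as soon as the previous one returns, and the function name is hypothetical.

```python
def requests_in_flight(throughput_rps, avg_response_s):
    """Little's law: L = lambda * W (requests in flight)."""
    return throughput_rps * avg_response_s


if __name__ == "__main__":
    # Rounded figures from the 100-user run
    print(round(requests_in_flight(1000, 0.1)))
```

About 100 requests in flight matches the 100 simulated users, so the reported throughput and response time are consistent with each other.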

Well, what if there are 500 users? Change the concurrency parameter to 500 in our bzt-config.yml file and run Taurus again.

Hm. It seems that our lonely worker did not cope with the load. 40% of errors are not the case.

Let's try to increase the number of workers.

    $ gunicorn --workers 20 app:api -b 0.0.0.0:8000

That looks better. There are still errors, but the throughput increased to ~1500 requests/second, and the average response time dropped to ~270 ms. An API like this is already usable.
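The value 20 used here is simply the number chosen for this test. As a starting point for your own hardware, the gunicorn documentation suggests (2 x number of cores) + 1 workers. A small sketch of that rule of thumb, with a hypothetical helper name:

```python
import multiprocessing


def suggested_workers(cores=None):
    """Starting point from the gunicorn docs: (2 x cores) + 1."""
    if cores is None:
        cores = multiprocessing.cpu_count()
    return 2 * cores + 1


if __name__ == "__main__":
    print("gunicorn --workers {} app:api -b 0.0.0.0:8000".format(suggested_workers()))
```

Treat the result as a first guess and adjust it based on load test results such as the ones above.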

Further performance optimization

You can also tune the PostgreSQL configuration to your hardware using PgTune.

That's all for today. Thanks for reading!