Netflix selects the best movie covers for each viewer

Translated from the Netflix Technology Blog

For many years, the main goal of Netflix's personalized recommendation system has been to get the right films in front of each user at the right time. With thousands of films in the catalog and a broad range of tastes across more than a hundred million accounts, recommending exactly the right films to each of them is critical. But the job of the recommendation system does not end there. What can we tell you about a new and unfamiliar film that will spark your interest? How do we convince you that it is worth watching? Answering these questions is critical to helping people discover new content, especially unfamiliar films.

One way to approach this is to consider the pictures, or covers, shown for each film. If the picture is compelling, it acts as a gateway and a kind of visual "evidence" that the film is worth watching. It might show an actor you recognize, an exciting moment such as a car chase, or a dramatic scene that conveys the essence of a film or series. If we show the perfect cover on your homepage (as they say, a picture is worth a thousand words), then maybe, just maybe, you will give the film a try. This is yet another way in which Netflix differs from traditional media: we do not have one product but more than 100 million different products, and each user receives personalized recommendations and personalized covers.

A Netflix homepage without covers. This is how our recommendation algorithms have historically seen a page.

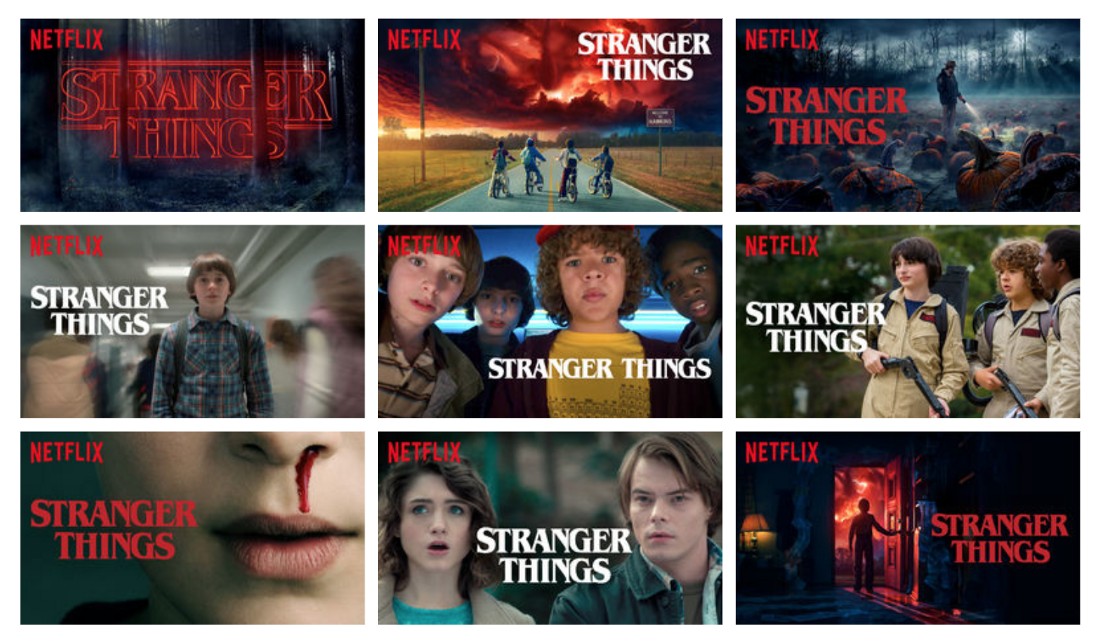

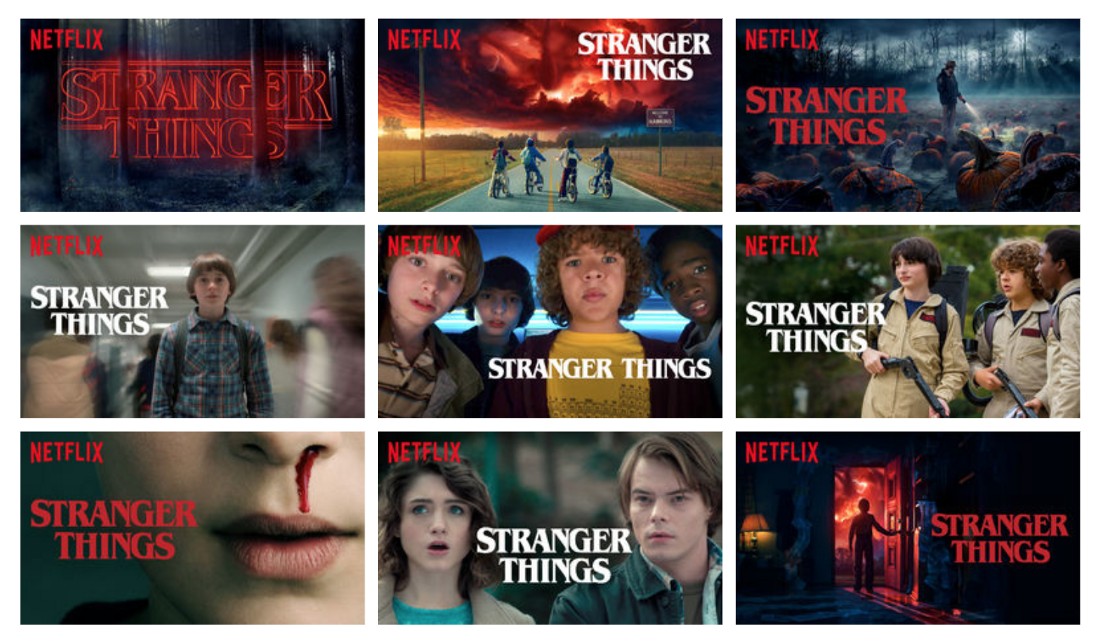

In previous work, we discussed how to find the single best cover for each title across all users. Using multi-armed bandit algorithms, we searched for the best cover for a particular film or series, for example, Stranger Things: the image that led the largest number of our users to watch. But given the enormous diversity of tastes and preferences, wouldn't it be better to choose the optimal image for each viewer, highlighting the aspects of the film that matter specifically to them?

Covers for the sci-fi series Stranger Things, each of which our personalization algorithm selected for more than 5% of impressions. The different images show the breadth of themes in the series and go beyond what any single cover could convey.

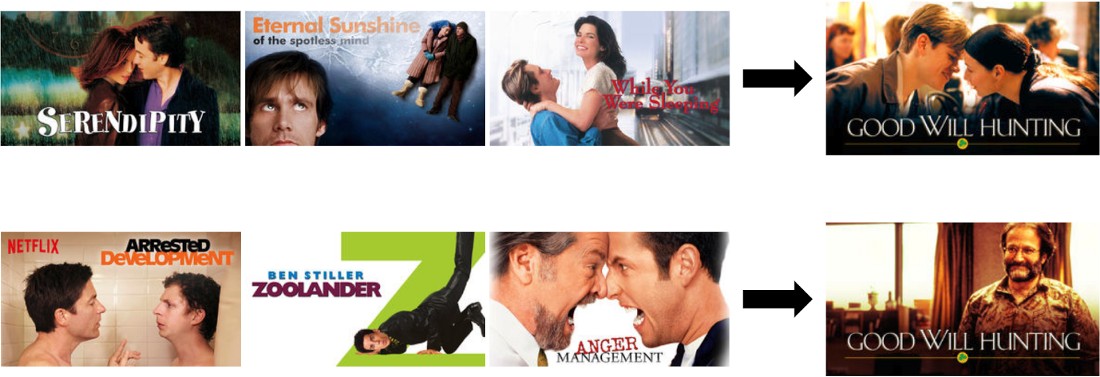

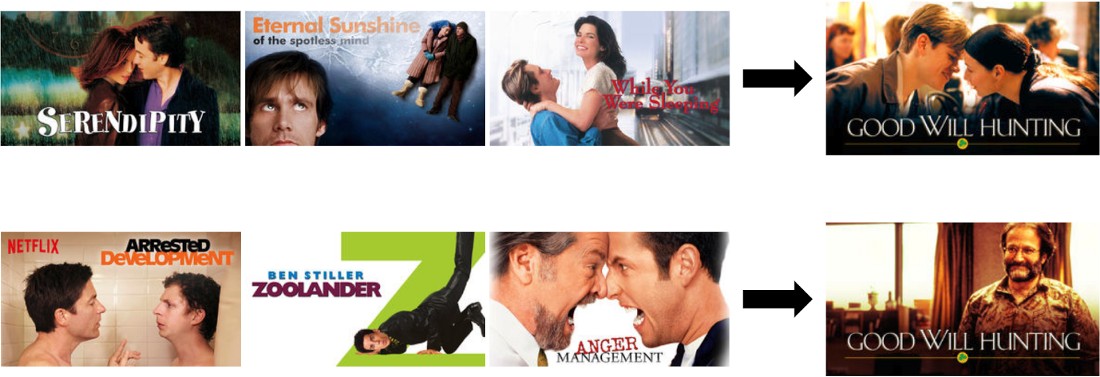

To build intuition, let's look at some scenarios where personalizing covers makes sense. Consider the following examples, in which different users have different viewing histories. On the left are three films a person watched in the past. To the right of the arrow is the cover we would recommend to them.

Let's try to choose a personalized image for Good Will Hunting. Here we can base the decision on how strongly the user prefers different genres and themes. Someone who has watched many romantic films may be drawn to Good Will Hunting by an image of Matt Damon and Minnie Driver, while someone who has watched many comedies may become interested after seeing an image of Robin Williams, a famous comedian.

In another case, imagine how different preferences for cast members might affect the personalized cover for Pulp Fiction. A user who has watched many films featuring Uma Thurman is more likely to respond positively to a Pulp Fiction cover showing Uma, while a John Travolta fan may be more interested in the film if they see John on the cover.

Of course, not every cover personalization scenario is this clear-cut. So rather than relying on hand-written rules, we rely on signals in the data. Overall, by personalizing covers we help each title put its best foot forward for each user, improving the quality of the service.

Problems

At Netflix, we algorithmically personalize many aspects of the service for each user, including the rows on the homepage, the titles shown in those rows, the galleries to display, our messages, and so on. Each new aspect we personalize brings new challenges, and cover personalization is no exception: it has its own unique problems.

One problem is that we can show only a single image to represent a film each time we present it to the user. In contrast, typical recommendation settings let us present several options to a user and gradually learn their preferences from the choices they make. This makes choosing an image a chicken-and-egg problem in a closed loop: a person can only pick films based on the images we decided to show them. What we are trying to learn is when showing a particular cover prompted a person to watch a film (or not), and when the person would have watched the film (or not) regardless of the cover. Cover personalization therefore sits on top of the traditional recommendation problem, and the algorithms have to work together. Of course, to personalize covers properly, we need to collect a lot of data to find signals showing which specific covers are significantly better than the others for a particular user.

Another problem is understanding the effect of changing the cover we show a user across sessions. Does changing the cover make the film harder to recognize and find visually, for example, if the user was interested in it earlier but has not watched it yet? Or does changing the cover help the user reconsider, thanks to a better image choice? Clearly, if we have found the best cover for this person, we should use it; but constant changes can confuse the user. Changing images also creates an attribution problem, because it becomes unclear which cover sparked the user's interest in the film.

Next, there is the problem of understanding how covers interact on the same page or within the same session. Perhaps a bold close-up of the protagonist works for a film because it stands out from the other covers on the page. But if every cover has similar characteristics, the page as a whole loses its appeal. Considering each cover in isolation may not be enough; we may need to choose a diverse set of covers across a page or a session. Beyond neighboring covers, the effectiveness of a particular cover may also depend on what other evidence and assets (for example, synopses and trailers) we show for that film. This leads to combinations in which each asset highlights complementary aspects of the film, increasing the chances of positively influencing the viewer.

Effective personalization requires a good pool of covers for each film. This means having several assets, each of which is engaging, informative, and representative of the film, so as to avoid clickbait. The set of images for a film also needs to be diverse enough to appeal to a wide potential audience interested in different aspects of the content. Ultimately, how engaging and informative a particular cover is depends on the person who sees it. We therefore need artwork that highlights not only different themes in the film but also different aesthetics. Our artists and designers strive to create images that are diverse in many dimensions, and during the creative process they take into account the personalization algorithms that will select those images.

Finally, there is the engineering challenge of personalizing covers at scale. The service is highly visual and therefore contains a great deal of imagery, so personalizing every asset means handling a peak of more than 20 million requests per second with low latency. Such a system must be robust: failing to render a cover correctly in our UI would noticeably degrade the experience. Our personalization engine also has to respond quickly to new films, which means learning to personalize quickly in a cold-start situation. After launch, the algorithm must keep adapting, because the effectiveness of a cover can change over time depending on the film's life cycle and on the audience's changing tastes.

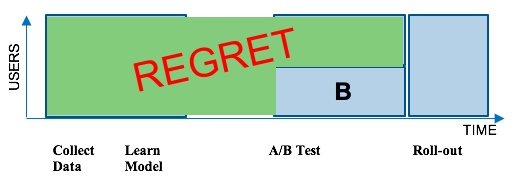

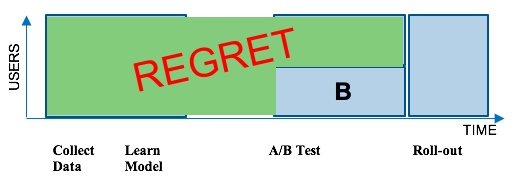

Contextual bandit approach

Much of Netflix's recommendation engine is driven by machine learning algorithms. Traditionally, we collect a batch of data about how users use the service. Then we train a new machine learning algorithm on this batch. Next, we test it in production through an A/B test on a random sample of users to check whether the new algorithm is better than the current production system. Users in group A get the current production system, and users in group B get the new algorithm. If group B shows higher engagement with the Netflix service, we roll the new algorithm out to the entire audience. Unfortunately, this batch approach incurs regret: many users go a long time without benefiting from the better experience, as the illustration shows.
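The text does not say how the A/B test decides that group B is better. Purely as a hypothetical illustration, a one-sided two-proportion z-test on take rate (plays divided by users shown the title) is one common way to make that call. The function name and the group counts below are made up for the example.

```python
import math
from typing import Tuple


def two_proportion_z_test(plays_a: int, n_a: int, plays_b: int, n_b: int) -> Tuple[float, float]:
    """One-sided test of H1: take rate of group B > take rate of group A.

    Returns (z statistic, one-sided p-value).
    """
    rate_a = plays_a / n_a
    rate_b = plays_b / n_b
    # Pooled take rate under the null hypothesis that A and B perform the same.
    pooled = (plays_a + plays_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("take rate is 0 or 1 in both groups; the z-test is undefined")
    z = (rate_b - rate_a) / std_err
    # Upper-tail probability of the standard normal distribution.
    p_value = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 100,000 users per group.
z, p = two_proportion_z_test(plays_a=4_800, n_a=100_000, plays_b=5_100, n_b=100_000)
print(f"z = {z:.2f}, one-sided p = {p:.4f}")
```

The regret described above comes from the time it takes to collect enough users in each group for such a test to become conclusive.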

To reduce this regret, we moved away from batch machine learning toward online learning. To personalize covers, we use a particular online learning framework: contextual bandits. Rather than waiting for a full batch of data to be collected, waiting for a model to be trained, and then waiting for an A/B test to finish, contextual bandits rapidly figure out the optimal cover for each user and context. In short, contextual bandits are a class of online learning algorithms that trade off the cost of gathering the training data needed to keep learning an unbiased model against the benefit of applying the learned model to each user's context. In our previous work on choosing covers without personalization, we used non-contextual bandits, which find the best cover regardless of context. For personalization, each user's context is taken into account, because each user responds to each image differently.

A key property of contextual bandits is that they are designed to minimize regret. At a high level, training data for a contextual bandit is obtained by injecting controlled randomization into the learned model's predictions. Randomization schemes can range in complexity from simple epsilon-greedy formulations with uniform randomness to closed-loop schemes that adaptively vary the degree of randomization as a function of the uncertainty in the model parameters. We broadly call this process data exploration. The choice of exploration strategy depends on the number of candidate covers available and the number of users the system will be deployed to. With such exploration, we need to log information about the randomization behind each cover selection. This log lets us correct for skewed selection propensities and therefore evaluate models offline in an unbiased way, as described below.
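A minimal sketch of the simplest scheme named above, epsilon-greedy exploration, showing what logging the randomization can look like: every impression is recorded together with the probability (propensity) with which the shown image was chosen. The `LoggedImpression` record, the image names, and the model scores are hypothetical; the article does not describe Netflix's actual log schema.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class LoggedImpression:
    member_id: str
    title_id: str
    image_id: str
    propensity: float  # probability with which this image was chosen


def epsilon_greedy_select(
    scores: Dict[str, float], epsilon: float, rng: random.Random
) -> Tuple[str, float]:
    """Pick an image and return (image_id, propensity of that pick)."""
    images = sorted(scores)  # fixed order so ties break deterministically
    greedy = max(images, key=lambda img: scores[img])
    if rng.random() < epsilon:
        chosen = rng.choice(images)  # explore: uniform over all candidates
    else:
        chosen = greedy  # exploit: the model's top-scoring image
    # The greedy image can be reached both by exploiting and by exploring.
    propensity = epsilon / len(images) + ((1 - epsilon) if chosen == greedy else 0.0)
    return chosen, propensity


rng = random.Random(42)
model_scores = {"img_action": 0.07, "img_cast": 0.05, "img_scene": 0.04}  # hypothetical
log: List[LoggedImpression] = []
for member in ["m1", "m2", "m3"]:
    image, prop = epsilon_greedy_select(model_scores, epsilon=0.1, rng=rng)
    log.append(LoggedImpression(member, "title_42", image, prop))
```

Storing the propensity with each impression is what later allows offline estimators to reweight or filter the log without bias.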

Exploration with contextual bandits usually comes at a price, because the cover chosen in a given user session may, with some probability, differ from the cover predicted to be optimal for that session. How does this randomization affect the user experience (and therefore our metrics)? With more than 100 million users, the regret incurred by exploration is typically very small and is amortized across our large user base, since each user implicitly helps provide feedback on covers for only a small part of the catalog. This makes the per-user cost of exploration negligible, which is an important consideration when choosing contextual bandits to drive a key aspect of the user experience.
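To make this cost concrete for the epsilon-greedy case (an illustrative assumption; the article does not state Netflix's exploration scheme or rate), suppose a film has $K$ candidate covers with true take rates $p_1$ through $p_K$, the model's top choice has take rate $p^*$, and a fraction $\varepsilon$ of impressions is explored uniformly. Relative to always showing the model's top choice, the expected take rate and per-impression regret are:

$$
\mathbb{E}[\text{take}] = (1-\varepsilon)\,p^* + \varepsilon\,\bar{p},
\qquad
\bar{p} = \frac{1}{K}\sum_{k=1}^{K} p_k,
\qquad
\text{regret} = p^* - \mathbb{E}[\text{take}] = \varepsilon\,\bigl(p^* - \bar{p}\bigr).
$$

With hypothetical values $K = 4$, take rates $0.10, 0.08, 0.06, 0.04$ (so $\bar{p} = 0.07$) and $\varepsilon = 0.05$, the regret is $0.05 \times 0.03 = 0.0015$ per impression, or 1.5% of the exploit-only take rate of $0.10$. If exploration is further confined to a small fraction of the films each user sees, the per-user cost shrinks proportionally.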

Our online exploration scheme produces a training dataset in which each (user, film, cover) tuple is labeled with whether that selection led the user to watch the film. We can also control exploration so that the chosen cover does not change too often, which gives cleaner attribution of a particular cover's appeal to the user. We also take care to avoid clickbait, that is, learning a model that recommends covers which get the user to start a film but ultimately leave them unsatisfied.
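One possible way to keep explored covers from changing too often (an assumption; the article does not say how this is controlled) is to derive the random choice from a hash of (user, film, time period). A user then keeps seeing the same randomly assigned cover for that film until the period rolls over, while across users the assignment stays approximately uniform. The `TrainingExample` tuple mirrors the (user, film, cover, played) records described above; all identifiers are hypothetical.

```python
import hashlib
from typing import List, NamedTuple


class TrainingExample(NamedTuple):
    member_id: str
    title_id: str
    image_id: str
    played: bool  # did this impression lead to a play?


def sticky_explore_choice(
    member_id: str, title_id: str, images: List[str], day: int, period_days: int = 7
) -> str:
    """Approximately uniform 'random' image that stays fixed for a user/film within a period."""
    epoch = day // period_days  # every day in the same period maps to the same epoch
    key = f"{member_id}|{title_id}|{epoch}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return images[bucket % len(images)]  # modulo bias is negligible for a 64-bit bucket


candidates = ["img_action", "img_cast", "img_scene"]  # hypothetical image ids
shown = sticky_explore_choice("m1", "title_42", candidates, day=15)
example = TrainingExample("m1", "title_42", shown, played=True)
```

Because the assignment is a deterministic function of its inputs, the propensity of each explored image is still roughly 1/K, so the log remains usable for unbiased evaluation.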

Model training

In this online learning setting, we train a contextual bandit model to select the best cover for each user based on their context. We usually have dozens of candidate covers per film. To learn the selection model, we can simplify the problem by ranking the covers for each film independently. Even with this simplification, we still learn about users' image preferences, because each candidate cover appeals to some users and not to others. These preferences can be modeled to predict, for each (user, film, cover) triple, the probability of a quality engagement. The models can be supervised learning models or contextual bandit counterparts using Thompson Sampling, LinUCB, or Bayesian methods that balance making the best prediction against data exploration.
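Thompson Sampling is one of the options named above. The sketch below is a deliberately simplified, tabular version: the context is collapsed into a single hypothetical label (for example, a profile's dominant genre, echoing the comedy and romance examples), and each (context, image) pair keeps a Beta posterior over its take rate. A production model would condition on a full feature vector instead, and the simulated take rates are made up.

```python
import random
from collections import defaultdict
from typing import DefaultDict, List, Tuple


class BetaThompsonSampler:
    """Thompson Sampling with an independent Beta(1, 1) prior per (context, image)."""

    def __init__(self, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        # (context, image) -> [alpha, beta]; alpha counts plays, beta counts non-plays.
        self.params: DefaultDict[Tuple[str, str], List[float]] = defaultdict(lambda: [1.0, 1.0])

    def select(self, context: str, images: List[str]) -> str:
        # Sample a plausible take rate for every image; show the image with the highest draw.
        draws = {img: self.rng.betavariate(*self.params[(context, img)]) for img in images}
        return max(draws, key=draws.get)

    def update(self, context: str, image: str, played: bool) -> None:
        alpha_beta = self.params[(context, image)]
        alpha_beta[0 if played else 1] += 1.0


# Hypothetical simulation: comedy fans respond to the comedian image, romance fans to the couple.
true_rates = {
    ("comedy", "img_comedian"): 0.12, ("comedy", "img_couple"): 0.04,
    ("romance", "img_comedian"): 0.03, ("romance", "img_couple"): 0.10,
}
sampler = BetaThompsonSampler(seed=1)
env = random.Random(2)
for _ in range(5000):
    ctx = env.choice(["comedy", "romance"])
    img = sampler.select(ctx, ["img_comedian", "img_couple"])
    sampler.update(ctx, img, env.random() < true_rates[(ctx, img)])
```

Sampling from the posterior rather than taking its mean is what makes exploration adaptive: uncertain images still get shown occasionally, while images that are clearly worse stop being shown.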

Potential signals

In contextual bandits, the context is usually represented as a feature vector that serves as the model's input. Many signals can serve as features. In particular, these include many user attributes: films watched, their genres, the user's interactions with the specific film, their country, language, device, time of day, and day of week. Because the algorithm selects covers in conjunction with the film recommendation engine, we can also use signals about what our various recommendation algorithms think of the title, independent of its cover.
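A minimal sketch of turning such signals into a feature vector. The genre, device, and country vocabularies, the field names, and the encodings (genre shares, one-hot categories, cyclical hour of day) are all illustrative assumptions. Language would be one-hot encoded the same way as country.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical vocabularies; a real system would use far larger ones.
GENRES = ["comedy", "romance", "drama", "thriller"]
DEVICES = ["tv", "mobile", "web"]
COUNTRIES = ["US", "BR", "IN"]


@dataclass
class MemberContext:
    genre_watch_counts: Dict[str, int]  # films watched per genre
    country: str
    device: str
    hour_of_day: int  # 0-23
    day_of_week: int  # 0 = Monday ... 6 = Sunday
    interacted_with_title: bool  # e.g. has browsed or watched this film before
    recommender_score: float  # what the title recommender thinks of the film


def one_hot(value: str, vocab: List[str]) -> List[float]:
    return [1.0 if value == v else 0.0 for v in vocab]


def featurize(ctx: MemberContext) -> List[float]:
    total = sum(ctx.genre_watch_counts.values()) or 1  # avoid division by zero
    genre_shares = [ctx.genre_watch_counts.get(g, 0) / total for g in GENRES]
    # Cyclical encoding so that 23:00 and 00:00 end up close together.
    angle = 2 * math.pi * ctx.hour_of_day / 24
    hour = [math.sin(angle), math.cos(angle)]
    day = [1.0 if ctx.day_of_week == d else 0.0 for d in range(7)]
    return (
        genre_shares
        + one_hot(ctx.country, COUNTRIES)
        + one_hot(ctx.device, DEVICES)
        + hour
        + day
        + [float(ctx.interacted_with_title), ctx.recommender_score]
    )


ctx = MemberContext({"comedy": 30, "drama": 10}, "BR", "tv", 21, 4, False, 0.83)
x = featurize(ctx)  # 4 + 3 + 3 + 2 + 7 + 2 = 21 features
```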

An important consideration is that some images are naturally better than others in the candidate pool. We track the overall take rates for all images in the exploration data; a take rate is simply the number of quality plays divided by the number of impressions. In our previous work on choosing covers without personalization, the image was selected by its overall take rate across the entire audience. In the new contextual personalization model, the overall take rate is still important and is taken into account when selecting a cover for a specific user.
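The take-rate calculation is simple enough to show directly. This sketch assumes a hypothetical exploration log of (image, quality play) pairs for one film. It also shows the exploit choice a non-contextual bandit would make from those rates.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def take_rates(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Take rate per image = quality plays / impressions, from (image_id, quality_play) events."""
    impressions: Counter = Counter()
    plays: Counter = Counter()
    for image_id, quality_play in events:
        impressions[image_id] += 1
        plays[image_id] += int(quality_play)
    return {img: plays[img] / impressions[img] for img in impressions}


# Hypothetical exploration log for one film.
exploration_log = [
    ("img_action", True), ("img_action", False), ("img_action", True),
    ("img_cast", False), ("img_cast", True), ("img_cast", False),
]
rates = take_rates(exploration_log)
best_overall = max(rates, key=rates.get)  # exploit choice of a non-contextual bandit
```

In a contextual model, one plausible way to account for the overall rate is to append it to each image's features, so the model only needs to learn how a given context shifts preferences away from it. This is a design assumption, not something the article specifies.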

Image selection

Once the model has been trained as described above, we use it to rank images in each context. The model predicts the probability of playback for a given cover in a given user context. We sort the candidate covers by these probabilities and pick the one with the highest probability, which is the cover we show to that user.
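The article does not say which model family produces these probabilities. As a hypothetical illustration, the sketch uses a logistic model over interaction features between the user's context and each candidate image. The features, weights, and image names are all made up.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def rank_images(
    weights: List[float], bias: float, image_features: Dict[str, List[float]]
) -> List[Tuple[str, float]]:
    """Score each candidate with a logistic model and sort by predicted play probability."""
    scored = [
        (img, sigmoid(bias + sum(w * x for w, x in zip(weights, feats))))
        for img, feats in image_features.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Hypothetical features for one user-film context, one vector per candidate image:
# [user_comedy_share * image_has_comedian, user_romance_share * image_has_couple]
weights, bias = [2.0, 2.0], -3.0
candidates = {"img_comedian": [0.75, 0.0], "img_couple": [0.0, 0.25]}
ranking = rank_images(weights, bias, candidates)
chosen_image = ranking[0][0]  # the image shown to this user
```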

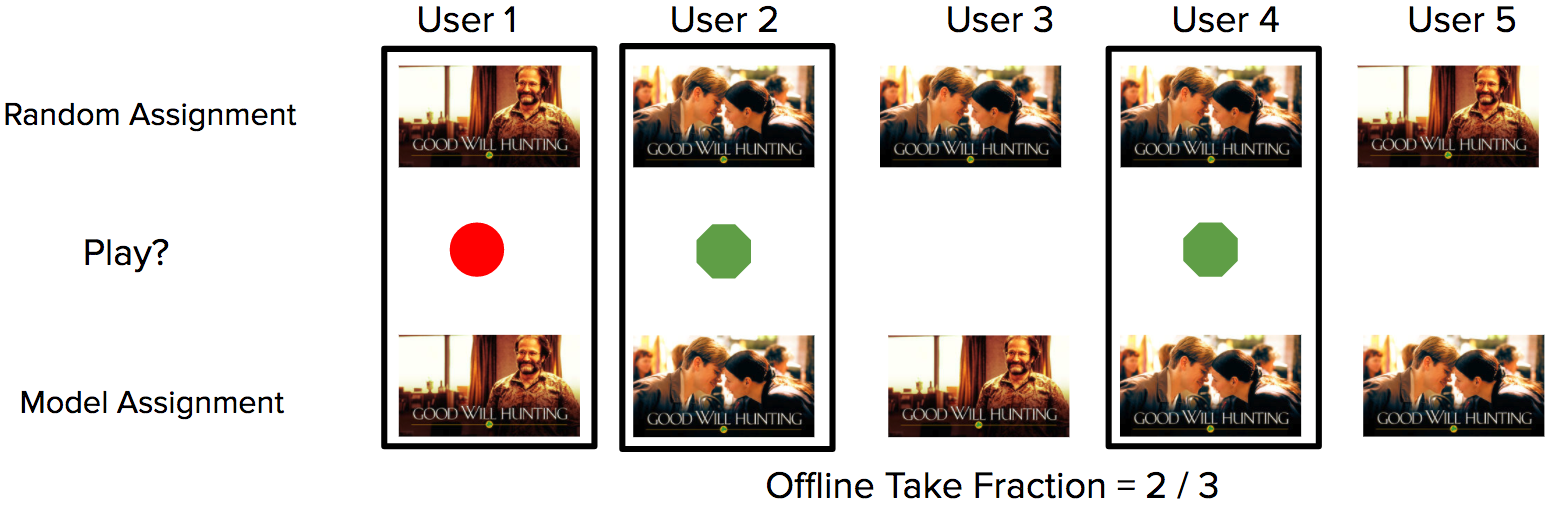

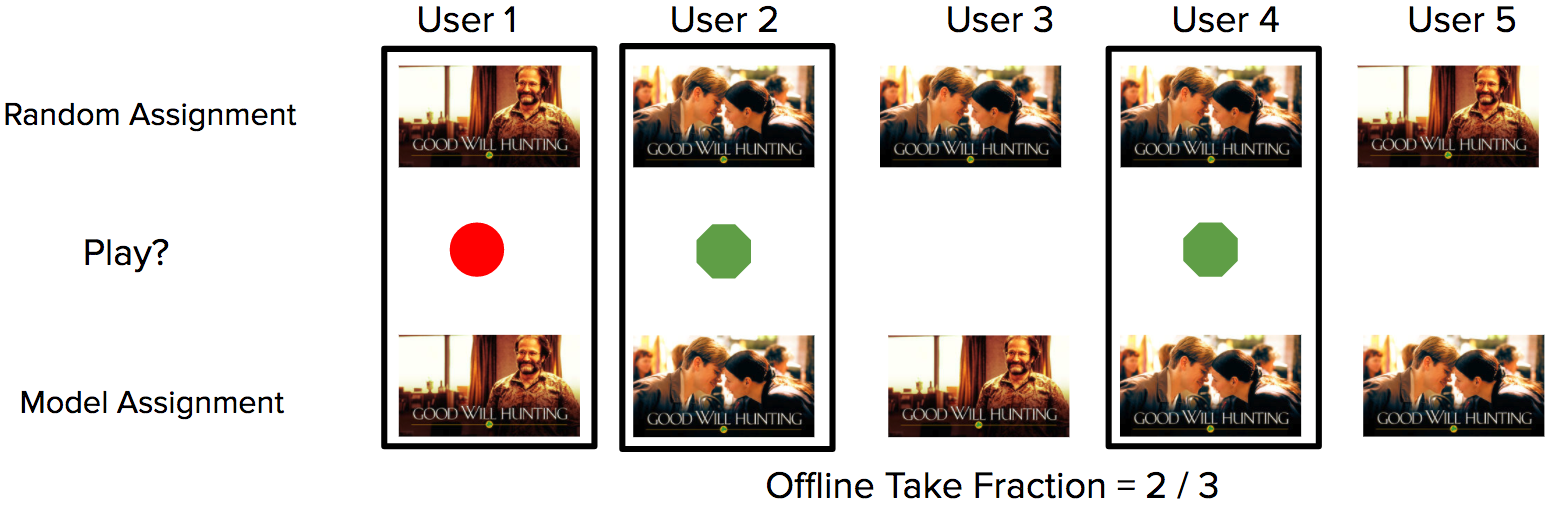

Performance evaluation

Offline

Before deploying our contextual bandit algorithms online, we first evaluate them offline using a technique known as replay [1]. This method lets us answer counterfactual questions based on the logged exploration data (Fig. 1). In other words, we can compare offline, in an unbiased way, what would have happened in historical sessions under different scenarios if different algorithms had been used.

Fig. 1. A simple example of computing the replay metric from logged data. Each user is assigned a random image (top row). The system logs which cover was shown and whether the user started playing the film (green circle) or not (red circle). The metric for a new model is computed by selecting the profiles where the random assignment and the model's assignment match (black square) and calculating the fraction of successful plays within that subset (take fraction).

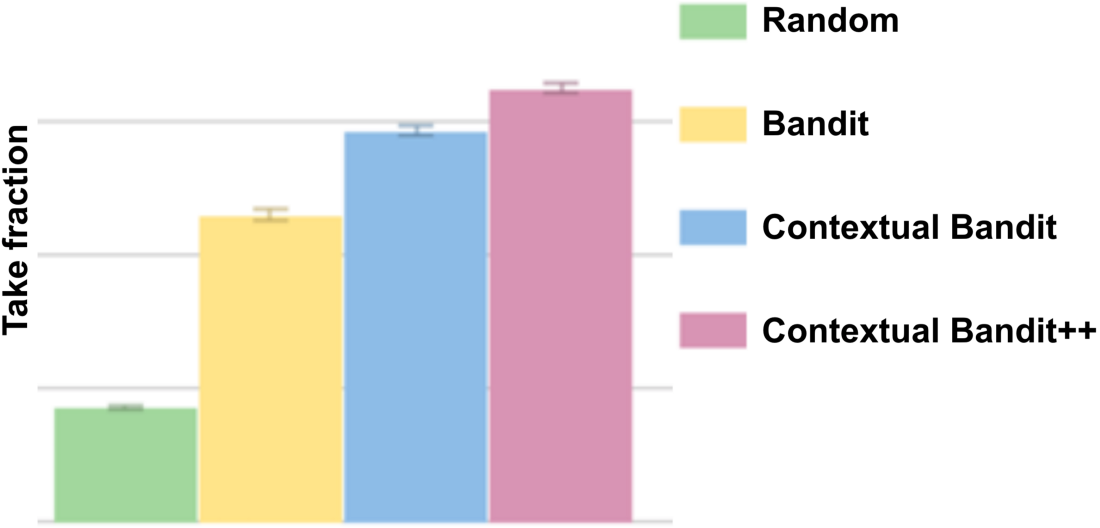

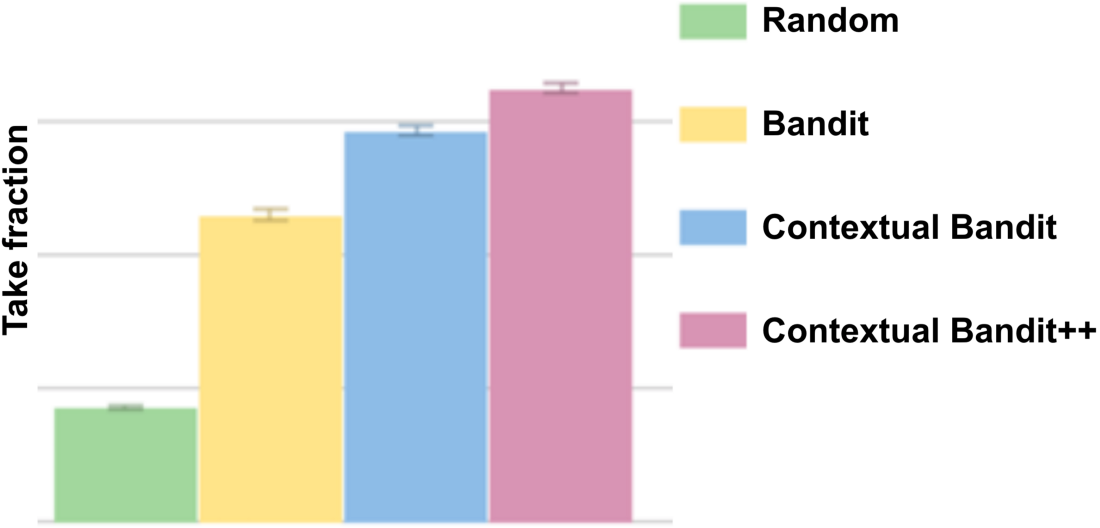

The replay metric shows how the fraction of users who start playing the film would change under the new algorithm compared with the algorithm currently used in production. For covers, we are interested in several metrics, including the take fraction described above. Figure 2 shows how a contextual bandit increases the average take fraction across the catalog compared with random selection or a non-contextual bandit.
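A minimal sketch of the replay estimator from Fig. 1. It assumes, as in the figure and in [1], that the logged images were assigned uniformly at random. With non-uniform logging, the stored propensities would have to be used for reweighting instead. The log entries and the two policies are toy examples, loosely mirroring the comedy and romance profiles of Fig. 3.

```python
from typing import Callable, Iterable, NamedTuple, Optional


class LogEntry(NamedTuple):
    profile_type: str  # hypothetical context label
    shown_image: str   # image assigned uniformly at random when logged
    played: bool       # did the user start playback?


def replay_take_fraction(
    log: Iterable[LogEntry], policy: Callable[[str], str]
) -> Optional[float]:
    """Replay estimate: take fraction over entries where the policy agrees with the log."""
    matched = plays = 0
    for entry in log:
        if policy(entry.profile_type) == entry.shown_image:
            matched += 1
            plays += int(entry.played)
    return plays / matched if matched else None  # None: no overlapping entries


def contextual(profile_type: str) -> str:
    return "img_comedian" if profile_type == "comedy" else "img_couple"


def non_contextual(profile_type: str) -> str:
    return "img_couple"  # one image for everyone


replay_log = [
    LogEntry("comedy", "img_comedian", True),
    LogEntry("comedy", "img_couple", False),
    LogEntry("romance", "img_couple", True),
    LogEntry("romance", "img_comedian", False),
    LogEntry("comedy", "img_comedian", False),
    LogEntry("romance", "img_couple", True),
]
print(replay_take_fraction(replay_log, contextual))      # 3 of 4 matched entries played
print(replay_take_fraction(replay_log, non_contextual))  # 2 of 3 matched entries played
```

Only the matching entries count, so the estimate becomes noisier as the number of candidate images grows. This is one reason large exploration logs are needed.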

Fig. 2: Average take fraction (higher is better) for different algorithms, based on the replay metric computed from the image exploration log. The Random policy (green) picks one image at random. The simple Bandit algorithm (yellow) selects the image with the highest take fraction. The contextual bandit algorithms (blue and pink) use context to select different images for different users.

Fig. 3: An example of contextual image selection by profile type. "Comedy" refers to a profile that mostly watches comedies; likewise, a "Romance" profile mostly watches romantic films. The contextual bandit selects the image of Robin Williams, a famous comedian, for comedy-leaning profiles, while choosing an image of a kissing couple for profiles more inclined toward romance.

After offline experiments with many different models, we identified those that showed a substantial lift in the replay metric and then ran an A/B test comparing the most promising personalized contextual bandits against non-personalized bandits. As expected, personalization worked and produced a significant lift in our core metrics. We also saw a reasonable correlation between the offline replay metrics and the online metrics. The online results also yielded some interesting insights. For example, personalization had a larger effect when the user had not interacted with the film before. This makes sense: the cover is likely to matter more to people who are less familiar with the film.

With this approach, we have taken the first steps toward personalizing the selection of covers for our recommendations and across the service. The result was a meaningful improvement in how users discover new content... so we rolled it out to everyone! This project is the first example of personalizing not only what we recommend to our users but also how we recommend it. There are still many opportunities to extend and improve on this initial approach. They include developing cold-start algorithms that personalize new covers and new films as quickly as possible, for example, by using computer vision techniques. Another opportunity is extending the personalization approach to other types of covers we use and to other evidence in the film description, such as synopses, metadata, and trailers. There is a wider problem:

[1] L. Li, W. Chu, J. Langford, and X. Wang, "Unbiased Offline Evaluation of Contextual-bandit-based News Article Recommendation Algorithms," in Proceedings of the Fourth ACM International Conference on Web Search and Data Mining (WSDM '11), New York, NY, USA, 2011, pp. 297-306.

For many years, the main goal of Netflix’s personal recommendation system was to choose the right movies - and offer them to users on time. With thousands of films in the catalog and versatile customer preferences on hundreds of millions of accounts, it is critical to recommend the exact films to each of them. But the work of the recommendation system does not end there. What can you say about a new and unfamiliar film that will arouse your interest? How to convince you that he is worth watching? It is important to answer these questions to help people discover new content, especially unfamiliar films.

One way to solve the problem is to take into account pictures or covers for films. If the picture looks convincing, then it serves as an impetus and a kind of visual "proof" that the film is worth watching. It can depict an actor you know, an exciting moment like a car chase or a dramatic scene that conveys the essence of a film or series. If we show the perfect film cover on your home page (as they say, a picture is worth a thousand words), then maybe, just maybe, you decide to choose this movie. This is just another thing in which Netflix differs from traditional media: we have not one product, but more than 100 million different products, and each user receives personalized recommendations and personalized covers .

Netflix front page without covers. So, historically, our recommendation algorithms saw a page.

In a previous work, we discussed how to find one perfect cover for each movie for all users. With the help of multi-armed bandit algorithms, we searched for the best cover for a particular movie or series, for example, Stranger Things. This picture provided the maximum number of views from the maximum number of our users. But given the incredible variety of tastes and preferences - wouldn’t it be better to choose the optimal picture for each of the viewers to emphasize aspects of the film that are important specifically for him ?

The covers of the sci-fi series “Very Strange Things”, each of which our personalization algorithm has chosen for more than 5% of impressions. Different pictures show the breadth of topics in the series and go beyond what a single cover can show.

For inspiration, let's go through options where personalizing covers makes sense. Consider the following examples, where different users have different histories of watched movies. On the left are three films that a person watched in the past. To the right of the arrow is the cover that we will recommend to him.

Let's try to choose a personal picture for the film "Good Will Hunting." Here we can derive a solution based on the extent to which the user prefers different genres and themes. If a person has watched a lot of romantic films, then “Good Will Hunting” can attract his attention with a picture of Matt Damon and Minnie Driver. And if a person watched a lot of comedies, he might be interested in this film after seeing the image of Robbie Williams, a famous comedian.

In another case, imagine how different preferences about the cast can affect the personalization of the cover for Pulp Fiction. If the user has watched many films with Uma Thurman, then he is more likely to respond positively to the cover of "Pulp Fiction" with Uma. At the same time, a John Travolta fan may be more interested in watching a movie if he sees John on the cover.

Of course, not all cover personalization tasks are so simple. So we will not rely on manually prescribed rules, but rely on signals from the data. In general, with the help of personalization of covers, we help each individual image to pave the best path to each user, thereby improving the quality of the service.

Problems

We at Netflix algorithmically adapt many aspects of the site personally for each user, including the series on the main page , films for these series , galleries for display, our messages and so on. Each new aspect that we personalize presents new challenges. Personalization of covers is no exception; there are unique problems here.

One of the problems is that we can only use a single instance of an image to display to the user. In contrast, typical recommendation settings allow the user to demonstrate different optionsso that we can gradually study his preferences based on the choices made. This means that choosing a picture is a chicken and egg problem in a closed loop: a person chooses films only with pictures that we decided to choose for him. What we are trying to understand is when showing a particular cover prompted a person to watch a movie (or not), and when a person would watch a film (or not) regardless of the cover. Therefore, personalization of covers combines different algorithms together. Of course, to properly personalize covers, you need to collect a lot of data in order to find signals about which specific copies of covers have a significant advantage over the others for a particular user.

Another problem is to understand the effect of a cover change that we show the user in different sessions. Does changing the cover reduce the recognition of the film and complicate its visual search, for example, if the user was previously interested in the film but has not yet watched it? Or does changing the cover contribute to a change in decision thanks to improved image selection? Obviously, if we found the best cover for this person - you should use it; but constant changes can confuse the user. Changing images cause an attribution problem, as it becomes unclear which cover has generated interest in the film from the user.

Further, there is a problem of understanding how covers interact on a single page or in one session. Maybe the bold close-up of the protagonist is effective for the film because it stands out from the other covers on the page. But if all covers have similar characteristics, then the page as a whole will lose its appeal. It may not be enough to consider each cover individually and you need to consider choosing a diverse set of covers on the page or during the session. In addition to neighboring covers, the effectiveness of each particular cover may also depend on what other facts and resources (for example, synopsis, trailers, etc.) we show for this film. Various combinations appear in which each object emphasizes additional aspects of the film, increasing the chances of positively influencing the viewer.

Effective personalization requires a good set of covers for each movie. This means that various resources are required, each of which is attractive, informative and indicative of the film, in order to avoid clickbait. The set of images for the film should also be diverse enough to cover a wide potential audience interested in different aspects of the content. In the end, how attractive and informative a particular cover is depends on the individual person who sees it. Therefore, we need artwork that emphasizes not only different themes in the film, but also different aesthetics. Our groups of artists and designers are trying as they can to create pictures that are diverse in all respects. They take into account personalization algorithms that select pictures during the creative process of their creation.

Finally, the challenge for engineers is to personalize covers on a large scale. The site actively uses images - and therefore there are a lot of pictures on it. So the personalization of each resource means processing at the peak of more than 20 million requests per second with a small delay. Such a system must be reliable: improper rendering of the cover in our UI will significantly worsen the impression of the site. Our personalization engine should also quickly respond to new films, that is, quickly master personalization in a cold start situation. Then, after the launch, the algorithm should continuously adapt, since the effectiveness of covers can change over time - this depends on the life cycle of the film and on the changing tastes of the audience.

Contextual Bandit Approach

Netflix's engine of recommendations works largely on machine learning algorithms. Traditionally, we collect a lot of data about the use of the service by users. Then we launch a new machine learning algorithm on this data packet. Then we test it in production through A / B tests on a random sample of users. Such tests make sure that the new algorithm is better than the current system in production. Users in group A are given the current system in production, and users in group B are the result of the new algorithm. If group B demonstrates a greater involvement in the work of the Netflix service, then we are rolling out a new algorithm to the entire audience. Unfortunately, with this approach, many users for a long time cannot use the new system, as shown in the illustration below.

To reduce the period of unavailability of the new service, we abandoned the batch method of machine learning in favor of the online method. To personalize covers, we use a specific machine learning framework - contextual bandits . Instead of waiting for a complete data packet to be collected, waiting for the model to learn, and then waiting for the A / B tests to complete, contextual bandits quickly determine the optimal choice of film cover for each user and context. In short, contextual bandits are a class of online machine learning algorithms that offset the cost of collecting the training data needed to continuously train an unbiased model with the benefits of using a trained model in the context of each user. In ourprevious work on choosing optimal covers without personalization used non-contextual bandits who found the best cover regardless of context. In the case of personalization, the context of a specific user is taken into account, since each of them perceives each image differently.

A key property of contextual bandits is that they reduce the period of inaccessibility of a service. At a high level, data for contextual bandit training comes through the introduction of controlled randomization in the predictions of the trained model. Randomization schemes can be of varying complexity: from simple epsilon-greedy algorithms with a uniform distribution of randomness to closed-loop schemes that adaptively change the degree of randomization, which is a function of the uncertainty of the model parameters. We broadly call this process data exploration.. The choice of strategy to study depends on the number of candidate covers available and the number of users for whom the system is being deployed. With this study of the data, it is required to record in the journal information about the randomization of each cover selection. This journal allows you to correct distorted deviations from the selection and, thus, impartially perform an autonomous evaluation of the model, as described below.

Studying data with contextual bandits usually comes at a price, since choosing a cover during a user session with some probability may not coincide with the most optimal predicted cover for that session. How does such randomization affect the impression of working with the site (and therefore also our metrics)? Based on over 100 million users, data loss is usually very small and depreciated on a large user base, since each user implicitly helps provide feedback for covers in a small part of the catalog. This makes losses insignificant due to the study of data per user, which is important to take into account when choosing contextual bandits for a key aspect of user interaction with the site.

In our online study scheme, we get a training data set, where for each element of a set of related data (user, film, cover) it is indicated whether this choice led to the decision to watch a movie or not. Moreover, we can control the study process so that the choice of covers does not change too often. This gives a clearer attribution of the attractiveness of a particular cover to the user. We also carefully monitor the performance and avoid clickbait when teaching the model to recommend certain covers, as a result of which the user begins to watch the movie, but in the end remains unsatisfied.

Model training

In this setting of online learning, we train the contextual gangster model to choose the best cover for each user, depending on their context. Usually we have dozens of candidate covers for each film. To train the model, we can simplify the problem by ranking covers. Even with this simplification, we still get information about the user's preferences, since each candidate cover is liked by one user and not like the other. These preferences are used to predict the effectiveness of each triad (user, film, cover). The best predictions with learning data are given by a good balance of teaching models with a teacher or contextual bandits with Thompson Sampling, LinUCB or Bayesian methods of assessment.

Potential signals

In contextual models, context is usually represented as a feature vector, which is used as input to the model. Many signals are suitable for the role of signs. In particular, these are many user attributes: movies watched, movie genres, user interaction with a specific movie, his country, language, device used, time of day and day of the week. Since the algorithm selects the covers together with the movie recommendation engine, we can also use signals that different recommendation algorithms think of the name, regardless of the cover.

An important consideration is that some images in the candidate pool are simply better than others, regardless of context. We observe the overall take rates for all images in the exploration data. These rates are simply the number of quality plays divided by the number of impressions. In our previous work on non-personalized cover selection, the image was chosen by its overall take rate across the entire audience. In the new contextual personalization model, the overall take rate still matters and is taken into account when selecting a cover for a specific user.
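A small sketch of the take-rate computation just described, assuming logged records of the hypothetical form `(title_id, image_id, quality_play)`. It also shows the non-personalized choice from the earlier work: the image with the highest overall take rate for a title.

```python
from collections import defaultdict


def take_rates(log):
    """Quality plays divided by impressions, per (title, image).

    `log` is an iterable of (title_id, image_id, quality_play) tuples,
    where quality_play is a bool.
    """
    impressions = defaultdict(int)
    plays = defaultdict(int)
    for title_id, image_id, quality_play in log:
        impressions[(title_id, image_id)] += 1
        plays[(title_id, image_id)] += int(quality_play)
    return {key: plays[key] / impressions[key] for key in impressions}


def best_overall_image(rates, title_id):
    """Non-personalized choice: the image with the highest take rate across everyone."""
    per_title = {img: r for (t, img), r in rates.items() if t == title_id}
    return max(per_title, key=per_title.get)
```

In a contextual model, these overall rates could be supplied as one more input feature, so that a generally strong cover keeps an advantage unless the user's context says otherwise.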

Image selection

Once the model is trained as described above, we use it to rank the images in each context. The model predicts the probability of a play for each cover in a given user context. We sort the candidate covers by these probabilities and choose the one with the highest probability. That is the cover we show to that user.
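A minimal sketch of this selection step, assuming a logistic model with one hypothetical weight vector per cover; any model that outputs a play probability for a (context, cover) pair could be plugged in instead. The cover IDs and weights in the usage note are made up for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rank_images(context, image_weights):
    """Return (image, probability) pairs sorted from most to least likely to lead to a play.

    image_weights maps image_id -> list of weights, one per context feature
    (a hypothetical per-image logistic-regression model).
    """
    scored = []
    for image_id, w in image_weights.items():
        z = sum(wi * xi for wi, xi in zip(w, context))
        scored.append((image_id, sigmoid(z)))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


def choose_image(context, image_weights):
    """The cover with the highest predicted play probability."""
    return rank_images(context, image_weights)[0][0]
```

For example, with a context of `[comedy_share, romance_share, bias]` and weights `{"comedian": [2.0, -1.0, -1.0], "couple": [-1.0, 2.0, -1.0]}`, a profile with context `[0.9, 0.1, 1.0]` gets the `"comedian"` cover and a profile with `[0.1, 0.9, 1.0]` gets the `"couple"` cover.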

Performance evaluation

Offline

Before deploying our contextual bandit algorithms online, we first evaluate them offline using a technique known as replay [1]. This method lets us answer counterfactual questions using the logged exploration data (Fig. 1). In other words, we can compare offline, in an unbiased way, what would have happened in historical sessions if different algorithms had been used.

Fig. 1. A simple example of computing the replay metric from logged data. Each user is assigned a random image (top row). The system logs which image was shown and whether the user played the title (green circle) or not (red circle). The metric for a new model is computed by selecting the profiles where the random assignment and the model's assignment agree (black square) and calculating the take fraction over that subset.
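A minimal sketch of the replay computation shown in Fig. 1, assuming the logged image was drawn uniformly at random from a fixed number of candidates (the setting in which replay is unbiased) and that a policy is any function from context to image. It also includes a helper for the relative change of one policy's replay take fraction versus another's. The function names are illustrative.

```python
def replay_take_fraction(logged, policy):
    """Replay estimate of a policy's take fraction from uniformly randomized logs.

    logged: iterable of (context, shown_image, played), where shown_image was drawn
            uniformly at random from a fixed number of candidates.
    policy: function context -> image the new model would have shown.
    Only events where the policy agrees with the logged random image are kept;
    because the logged choice was random, these events form an unbiased sample
    of what the policy would have experienced.
    """
    matches = 0
    plays = 0
    for context, shown_image, played in logged:
        if policy(context) == shown_image:
            matches += 1
            plays += int(played)
    if matches == 0:
        return float("nan"), 0
    return plays / matches, matches


def replay_lift(logged, new_policy, production_policy):
    """Relative change in replay take fraction of a new policy versus production."""
    new_rate, _ = replay_take_fraction(logged, new_policy)
    prod_rate, _ = replay_take_fraction(logged, production_policy)
    return (new_rate - prod_rate) / prod_rate
```

Because only matching events are kept, the effective sample size is roughly the log size divided by the number of candidate images, which is one reason a large exploration log is needed.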

The replay metric shows how much the percentage of users who play the title would change under the new algorithm compared with the algorithm currently in production. For covers, we are interested in several metrics, including the take fraction described above. Fig. 2 shows how a contextual bandit increases the average take fraction across the catalog compared with random selection or a non-contextual bandit.

Fig. 2: The average take fraction (higher is better) for different algorithms, computed with the replay metric on logged image exploration data. The Random policy (green) selects one image at random. The simple Bandit algorithm (yellow) selects the image with the highest take fraction. Contextual bandit algorithms (blue and pink) use context to select different images for different users.

| Profile type | Image A rating | Image B rating |
|---|---|---|
| Comedic | 5.7 | 6.3 |
| Romantic | 7.2 | 6.5 |

Fig. 3: An example of contextual image selection depending on the type of profile. "Comedic" corresponds to a profile that mostly watches comedies. Similarly, the "romantic" profile mostly watches romantic films. The contextual bandit selects an image of Robin Williams, a famous comedian, for comedy-leaning profiles, while choosing an image of a kissing couple for profiles more inclined toward romance.

Online

After offline experiments with many different models, we identified those that showed a significant improvement in the replay metric, and then ran A/B tests comparing the most promising personalized contextual bandits against non-personalized bandits. As expected, personalization worked and led to a significant increase in our key metrics. We also saw a reasonable correlation between the offline replay metrics and the online metrics. The online results also yielded some interesting insights. For example, the improvement from personalization was larger when the user had not previously interacted with the title. This makes sense: it is reasonable to expect the cover to matter more to people who are less familiar with the title.

Conclusion

Using this approach, we took the first steps toward personalizing the selection of covers for our recommendations across the site. The result was a significant improvement in how users discover new content, so we rolled the system out to everyone! This is our first example of personalizing not only what we recommend to our users, but also how we recommend it. There are still many opportunities to extend and improve this initial approach. These include developing cold-start algorithms that personalize new covers and new titles as quickly as possible, for example by using computer vision techniques. Another possibility is extending the personalization approach to other types of artwork we use and to other pieces of information in a title's description: synopses, metadata, and trailers. There is a wider problem:

References

[1] L. Li, W. Chu, J. Langford, and X. Wang, "Unbiased Offline Evaluation of Contextual-bandit-based News Article Recommendation Algorithms," in Proceedings of the Fourth ACM International Conference on Web Search and Data Mining, New York, NY, USA, 2011, pp. 297-306.